Investigations of Object Detection in Images/Videos Using Various Deep Learning Techniques and Embedded Platforms—A Comprehensive Review

Abstract

1. Introduction

- To achieve high detection accuracy, related challenges are:

  - Intra-class variations: variations in real-world objects include color, size, shape, material, and pose variations.

  - Image conditions and unconstrained environments: factors such as lighting, weather conditions, occlusion, object physical location, viewpoint, clutter, shadow, blur, and motion.

  - Imaging noise: factors such as low-resolution images, compression noise, filter distortions.

  - Thousands of structured and unstructured real-world object categories to be distinguished by the detector.

- To achieve high efficiency, related challenges are:

  - Low-end mobile devices have limited memory, limited speed, and low computational capabilities.

  - Thousands of open-world object classes should be distinguished.

  - Large scale image or video data.

  - Inability to handle previously unseen objects.

Features of the Proposed Review

- It also covers some specific problems in computer vision (CV) application areas, such as pedestrian detection, the military, crowd detection, intelligent transportation systems, medical imaging analysis, face detection, object detection in sports videos, and other domains.

- It provides an outlook on the available deep learning frameworks, application program interface (API) services, and specific datasets used for object detection applications.

- It also puts forth the idea of deploying deep learning models into various embedded platforms for real-time object detection. In the case of a pre-trained model being adopted, replacing the feature extractor with an efficient backbone network would improve the real-time performance of the CNN.

- It describes how a GPU-based CNN object detection framework would improve real-time detection performance on edge devices.

2. Object Detection

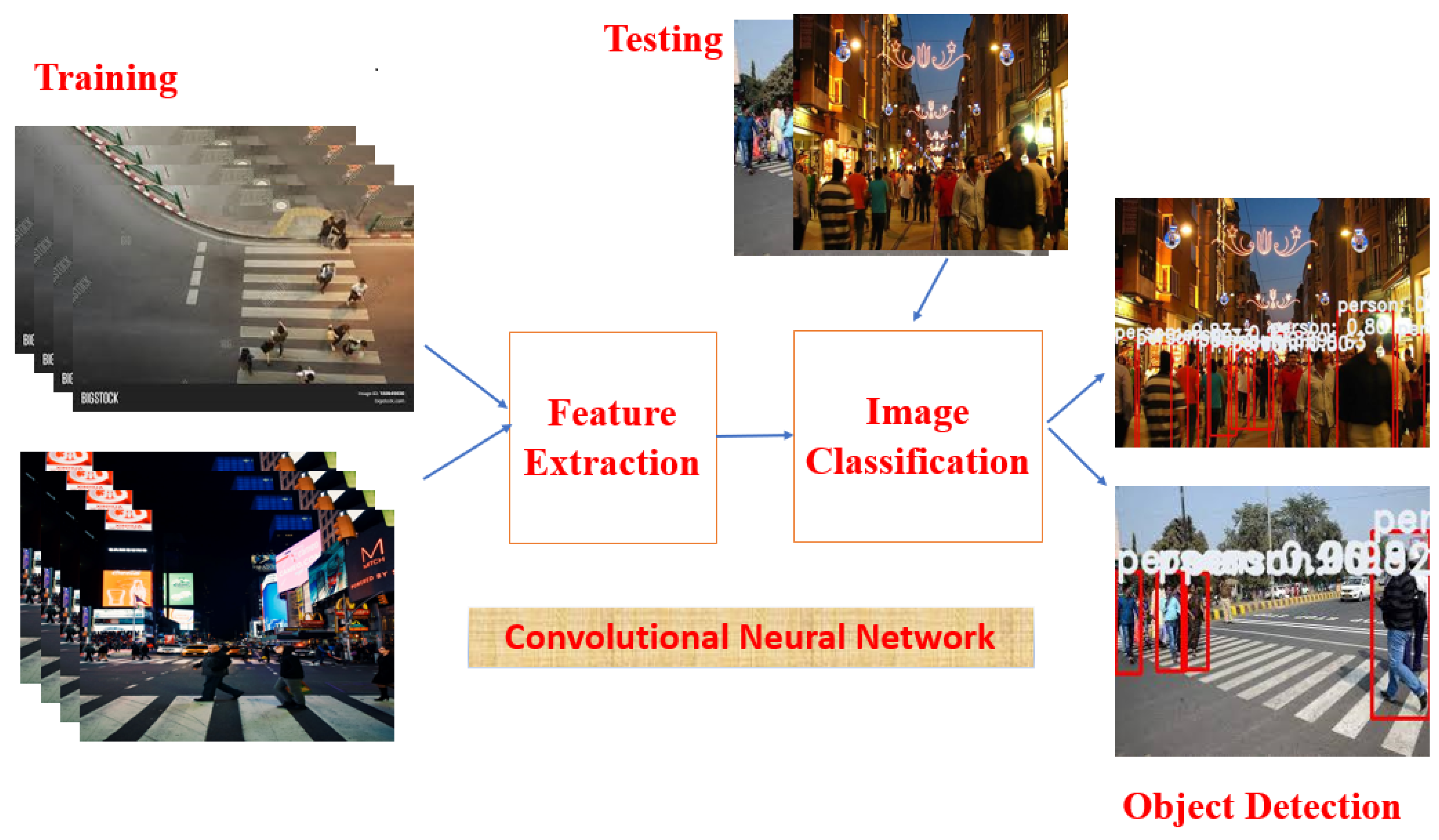

2.1. Basic Block Diagram of Object Detection

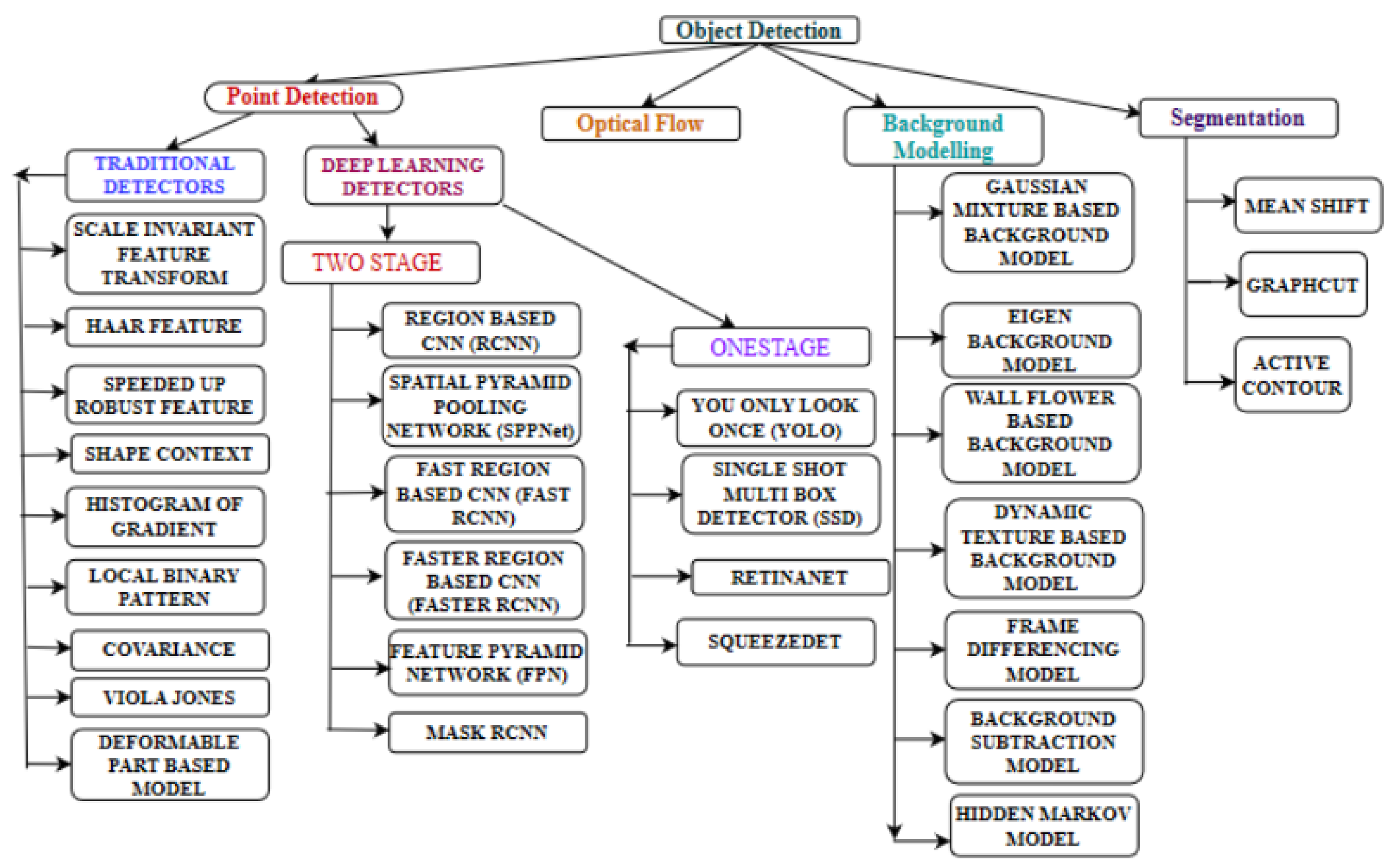

2.2. Various Deep Learning Approaches for Object Detection

2.2.1. Viola–Jones Detector

2.2.2. HOG Detector

2.2.3. Deformable Part-Based Model (DPM)

2.3. Classification-Based Object Detectors (Two-Stage Detectors)

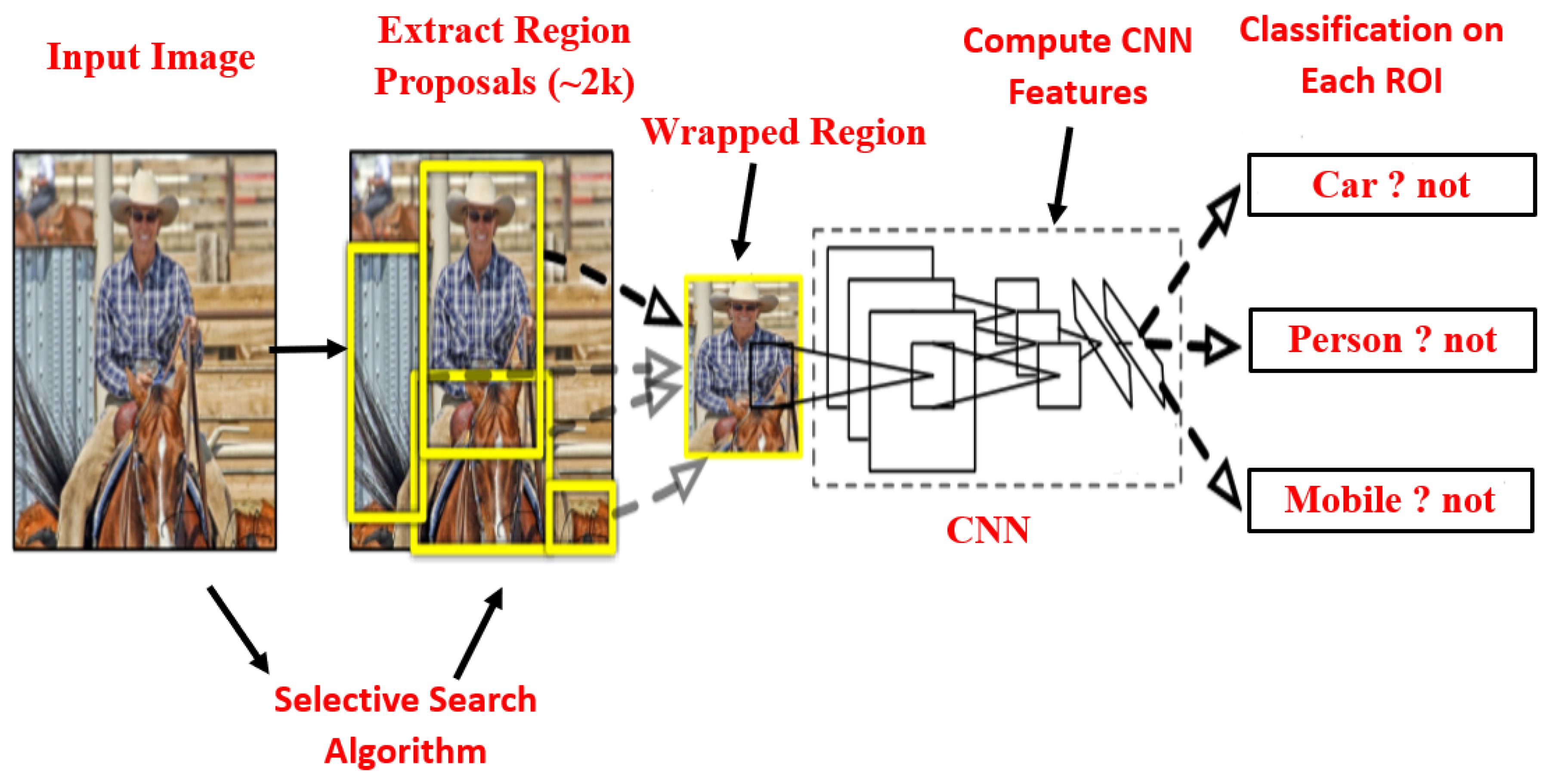

2.3.1. Region-Based Convolutional Neural Network (RCNN)

2.3.2. Spatial Pyramid Pooling Network (SPPNet)

2.3.3. Fast Region Convolutional Neural Network (Fast RCNN)

2.3.4. Faster Region Convolutional Neural Network (Faster RCNN)

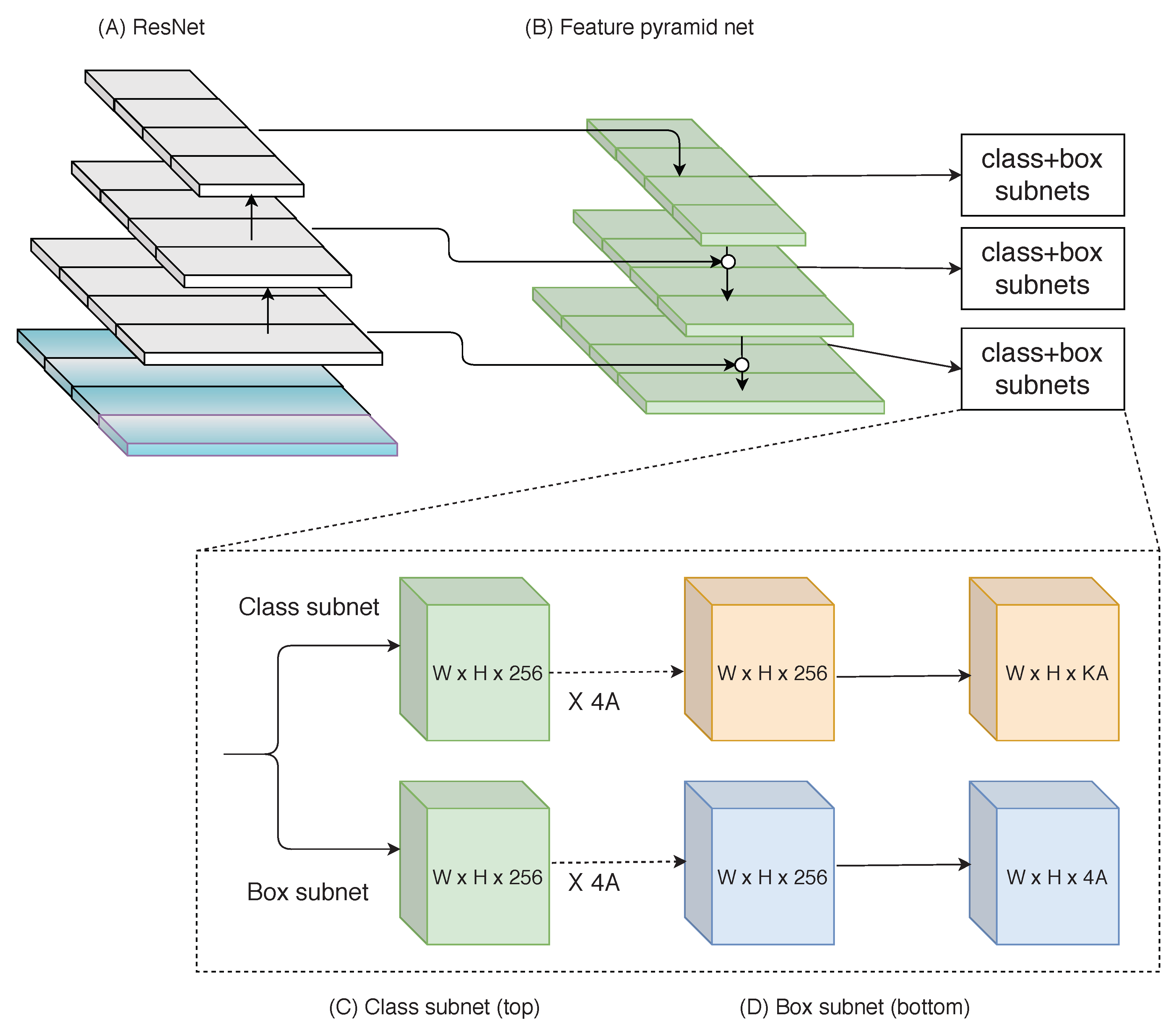

2.3.5. Feature Pyramid Networks (FPN)

2.3.6. Mask R-CNN

2.4. Regression-Based Object Detectors (One-Stage Detectors)

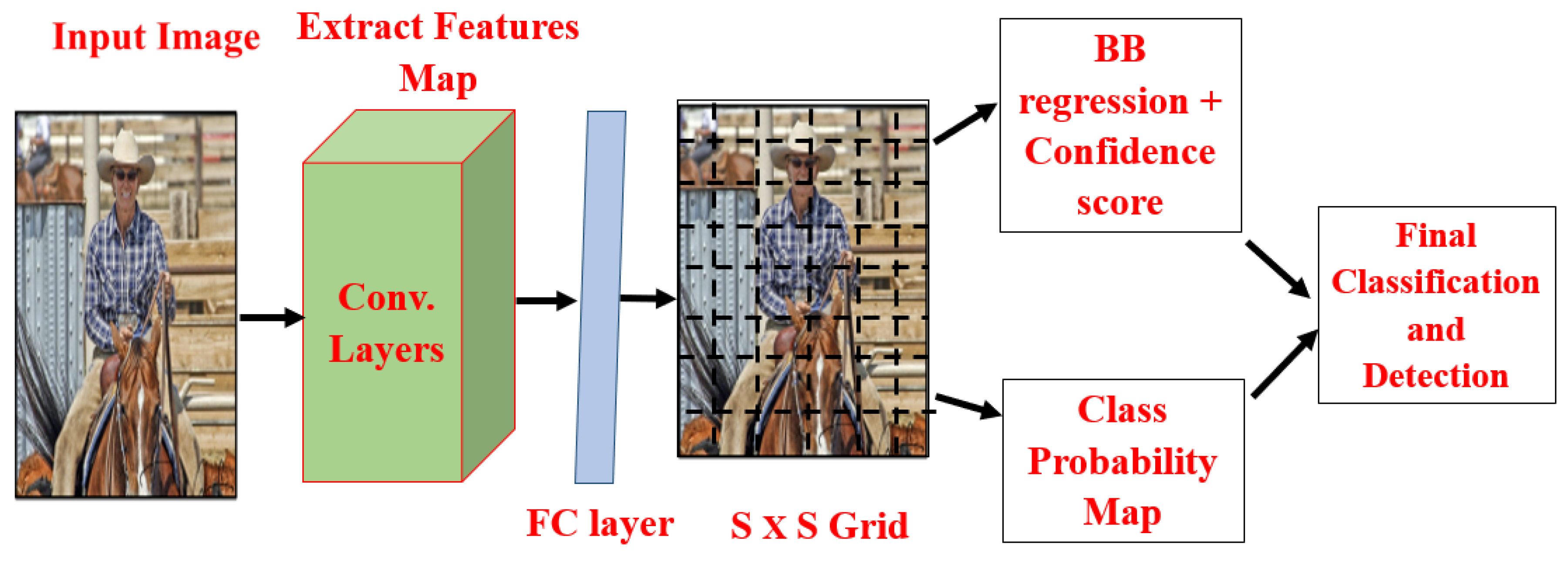

2.4.1. You Only Look Once (YOLO)

2.4.2. Single Shot Multi-Box Detector (SSD)

2.4.3. Retina-Net

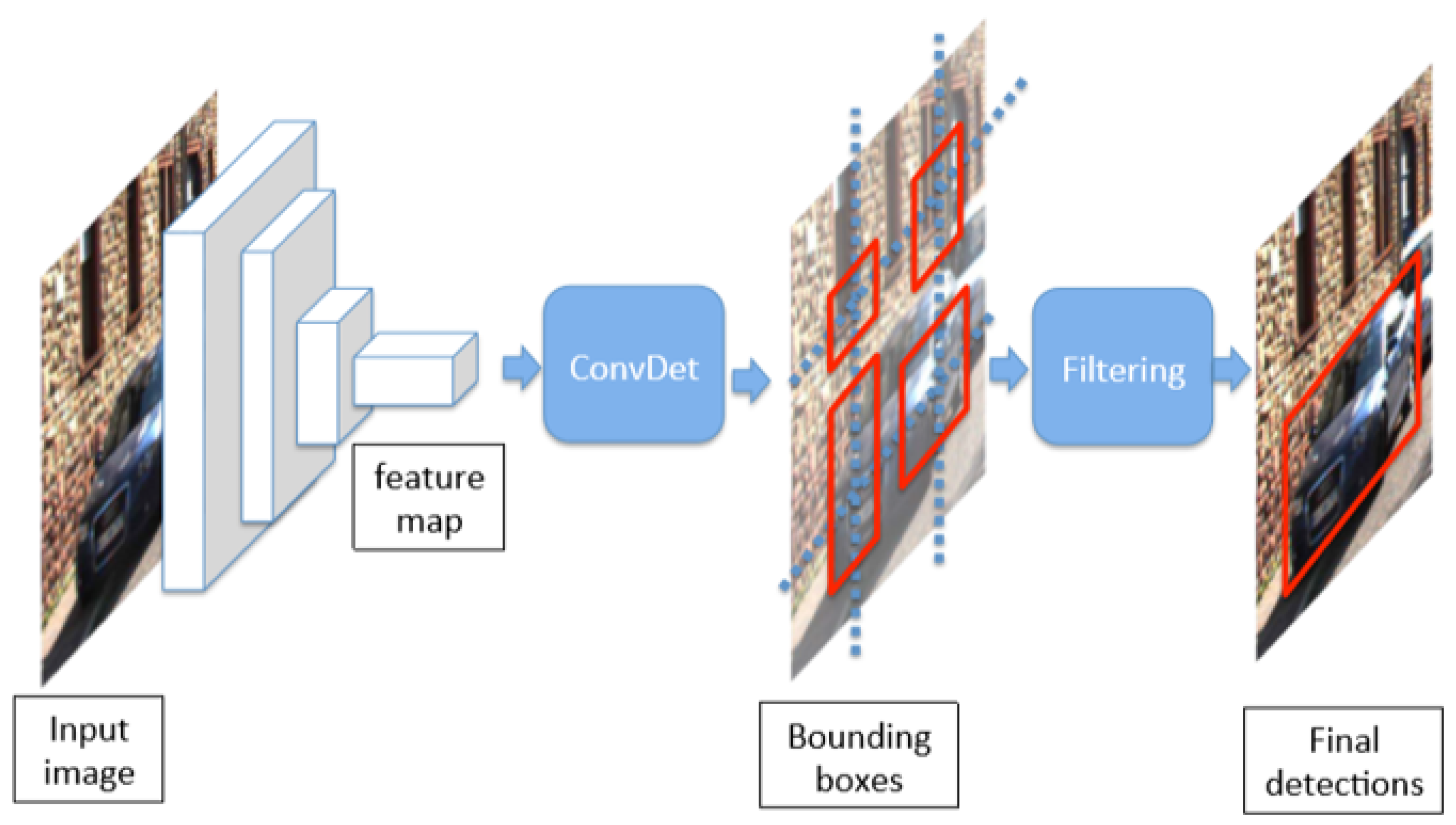

2.4.4. SqueezeDet

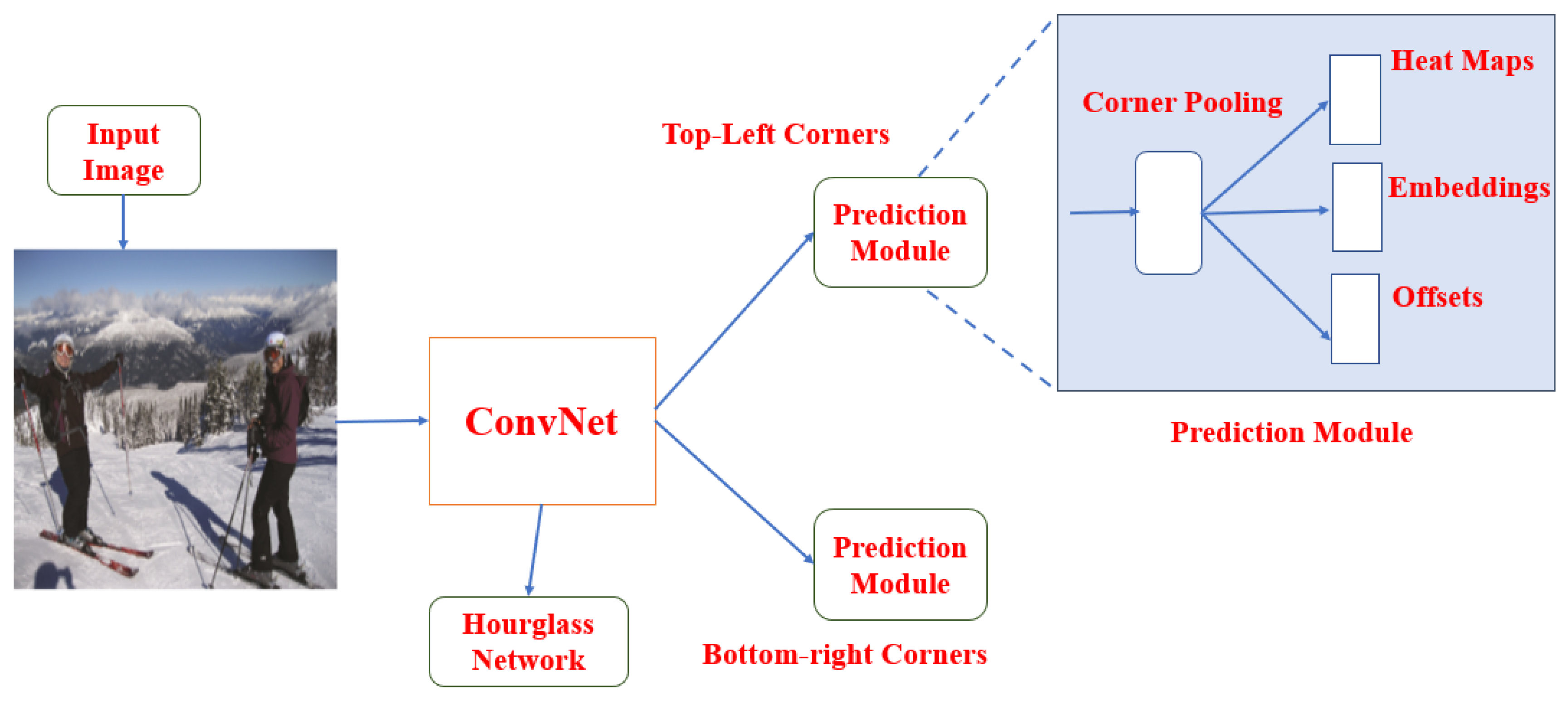

2.4.5. CornerNet

3. Available Deep Learning Frameworks and API Services

4. Object Detection Datasets and Metrics

- ImageNet [70]: It is organized according to the WordNet hierarchy, in which each meaningful concept is called a synonym set (synset). ImageNet aims to provide, on average, 1000 images to illustrate each synset, and it offers millions of images across the WordNet hierarchy. It has a total of 14,197,122 images, of which 1,034,908 have bounding box annotations, and an image resolution of pixels. To describe local features, SIFT (scale-invariant feature transform) features are provided for 1.2 million images.

- WIDERFACE [71]: This dataset contains a total of 32,203 images, which include around 400,000 faces across a wide range of scales. The dataset is split into three parts: 40% training data, 10% validation data, and 50% testing data.

- FDDB [72]: “Face Detection Dataset and Benchmark” contains 2845 images with a total of 5171 faces. Because it is a small dataset, it is generally used for testing only, while the WIDERFACE dataset is used for training the detector.

- CityPersons [73]: It is a challenging pedestrian dataset built on top of the Cityscapes [74] dataset. It is especially useful for difficult cases, such as small-scale and heavily occluded pedestrians. It contains 5000 images captured in various cities in Germany.

- INRIA [13]: INRIA is a popular person dataset used in pedestrian detection. It contains 614 person images for training and 288 person images for testing.

- KITTI [47]: This dataset contains 7481 high-resolution labeled images and 7518 testing images. The “person” class in this dataset is divided into two subclasses, pedestrian and cyclist, and results are reported at three difficulty levels: easy (E), moderate (M), and hard (H).

- ETH [75]: This dataset contains three video clips with a total of 1804 frames, and it is commonly used as a testing dataset.

- 80M Tiny image dataset [76]: This dataset contains around 80 million colored images.

- Microsoft COCO (MS-COCO) [77]: The “Microsoft Common Objects in Context” dataset has 330,000 images, more than 200,000 of which are labeled, with 1.5 million object instances, 80 object categories, 91 stuff categories, and 5 captions per image. It supports tasks such as recognition in context, detection of multiple objects per image, and object segmentation.

- CIFAR-100 dataset [78]: This dataset provides 100 object classes, each containing 600 images, of which 500 are training images and 100 are testing images. The 100 object classes are grouped into 20 superclasses, and each image comes with a “fine” label (the class to which it belongs) and a “coarse” label (the superclass to which it belongs).

- Caltech-256 [81]: It contains 256 object classes and a total of 30,607 images, with at least 80 images per class. It is not suitable for object localization.

- ILSVRC [82]: Since 2010, the “ImageNet Large Scale Visual Recognition Challenge (ILSVRC)” has been conducted every year for object detection and classification. The ILSVRC detection dataset contains 200 object classes, 10 times more than the 20 object classes of the PASCAL VOC dataset.

- PASCAL VOC [83]: The “Pattern Analysis, Statistical Modelling and Computational Learning (PASCAL) Visual Object Classes (VOC)” challenge provides standardized image datasets for object class recognition and a common set of tools for accessing them, and it enabled the evaluation and comparison of various object detection methods from 2008 to 2012. For object detection, most researchers use the MS-COCO and PASCAL VOC datasets.
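The split figures quoted in this list can be checked with a few lines of arithmetic. The sketch below is only illustrative: the helpers `split_counts` and `cifar100_summary` and the round total of 1000 images are assumptions made for this example and do not belong to any dataset toolkit. The 40/10/50 percentages and the CIFAR-100 per-class counts are the ones stated in the list.

```python
def split_counts(total, percents):
    """Split `total` items by integer percentages; the last part absorbs rounding."""
    if sum(percents) != 100:
        raise ValueError("percentages must sum to 100")
    counts = [total * p // 100 for p in percents[:-1]]
    counts.append(total - sum(counts))
    return counts


def cifar100_summary(classes=100, images_per_class=600, train_per_class=500,
                     superclasses=20):
    """Derive the CIFAR-100 totals from the per-class figures quoted in the list."""
    test_per_class = images_per_class - train_per_class
    return {
        "total_images": classes * images_per_class,
        "train_images": classes * train_per_class,
        "test_images": classes * test_per_class,
        "classes_per_superclass": classes // superclasses,
    }


if __name__ == "__main__":
    # Hypothetical round total, used only to make the 40/10/50 split easy to read.
    print(split_counts(1000, (40, 10, 50)))
    print(cifar100_summary())
```

Under these assumptions, a 40/10/50 split of 1000 images gives 400 training, 100 validation, and 500 testing images, and the CIFAR-100 figures imply 60,000 images in total with 5 classes per superclass.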

5. Object Detection Application Domains

5.1. Pedestrian Detection

5.2. Face Detection

5.3. Military Applications

5.4. Medical Image Analysis

5.5. Intelligent Transportation Systems

5.6. Crowd Detection

5.7. Object Detection in Sports Videos

5.8. Other Domains

6. Approaches of Deep Learning for Object Detection

7. GPU-Based Embedded Platforms for Real Time Object Detection

7.1. Raspberry Pi 4

7.2. Zynq Board

7.3. NVIDIA Jetson TX2 Board

7.4. GPU-Based CNN Object Detection

8. Research Directions

- More efficient detection frameworks: The success of object detection is largely due to the development of superior detection frameworks, both two-stage and one-stage (RCNN, Fast/Faster/Mask RCNN, YOLO, and SSD). Two-stage detectors achieve high accuracy, whereas one-stage detectors are simpler and faster. Object detectors depend heavily on the underlying backbone models, most of which are optimized for image classification, which may cause a learning bias; it could therefore be helpful to develop new object detectors that learn from scratch.

- Compact and efficient CNN features: CNNs have grown in depth from several layers (AlexNet) to hundreds of layers (ResNet, ResNeXt, CenterNet, DenseNet). These networks have millions to hundreds of millions of parameters, so they require a lot of data and high-end GPUs for training. Researchers should therefore focus on designing lightweight and compact networks that further reduce network redundancy.

- Weakly supervised detection: At present, state-of-the-art detectors are fully supervised models trained only on labeled data with either object segmentation masks or bounding boxes. Fully supervised learning does not scale when labeled training data are absent, so it is essential to design models that can be trained when only partially labeled data are available.

- Efficient backbone architecture for object detection: Most detectors adopt the weights of pre-trained classification models, since these have been trained on large-scale datasets. However, adopting a pre-trained classification model might not result in an optimal solution due to the conflicts between image classification and object detection tasks. Currently, most object detectors are based on classification backbones, and only a few use different backbone models (like SqueezeDet, based on SqueezeNet). There is therefore a need to develop a detection-aware, lightweight backbone model for real-time object detection.

- Object detection in other modalities: Currently, most object detectors work only with 2D images, but detection in other modalities (3D, LIDAR, etc.) would be highly relevant in application areas such as self-driving cars [274], drones, and robots. However, 3D object detection may raise new challenges in the use of video, depth, and point clouds.

- Network optimization: Selecting an optimal detection network means balancing speed, memory, and accuracy for a specific application and embedded hardware. Compact models with few parameters are preferable on such hardware even though their detection accuracy is lower; this loss might be reduced by introducing hint learning, knowledge distillation, and better pre-training schemes.

- Scale adaption [19]: Objects usually exist at different scales, which is most obvious in face detection and crowd detection. To make detectors more robust when learning spatial transformations, it is necessary to train them in scale-invariant, multi-scale, or scale-adaptive ways.

  - (a) For scale-adaptive detectors, an attention mechanism, a cascaded network, and scale distribution estimation help to detect objects adaptively.

  - (b) For multi-scale detectors, both the GAN (generative adversarial network) and FPN (feature pyramid network) generate a multi-scale feature map.

  - (c) For scale-invariant detectors, reverse connection, hard negative mining, and backbone models such as AlexNet (rotation invariance) and ResNet are all beneficial.

- Cross-dataset training [275]: Cross-dataset training for object detection aims to detect the union of all the classes across different existing datasets with a single model and without additional labeling, which saves the heavy burden of labeling new classes on all the existing datasets. With cross-dataset training, one only needs to label the new classes on the new dataset. It is widely used in industrial applications, which usually face a growing number of classes.
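The network optimization item names knowledge distillation without describing it. As a minimal sketch, assuming the commonly used temperature-softened formulation (the review does not say which variant it has in mind), a compact student model is trained to match the softened output distribution of a larger teacher. The function names, the temperature value, and the example logits below are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumed variant: the T^2 factor is a common convention that keeps the
    gradient magnitude comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]      # hypothetical class scores of a large model
    good_student = [3.8, 1.1, 0.4]
    poor_student = [0.5, 1.0, 4.0]
    print(distillation_loss(good_student, teacher))
    print(distillation_loss(poor_student, teacher))
```

In this sketch the loss is zero when the student reproduces the teacher's logits and grows as the two distributions diverge; in practice it would usually be combined with the ordinary detection loss on labeled data.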

9. Conclusions and Future Scope

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| 2D | 2-dimensional |

| ALPR | Automatic License Plate Recognition |

| AP | Average Precision |

| API | Application Program Interface |

| ARD | Acute Respiratory Disease |

| ARM | Advanced RISC Machine |

| AV | Autonomous Vehicle |

| BB | Bounding Box |

| BCNN | Binarized deep Convolutional Neural Network |

| BLE | Bluetooth Low Energy |

| BSP | Board Support Package |

| CAD | Computer Aided Design |

| CAN | Controller Area Network |

| CNN | Convolutional Neural Network |

| COCO | Common Objects in Context |

| COPD | Chronic Obstructive Pulmonary Disease |

| CPU | Central Processing Unit |

| CUDA | Compute Unified Device Architecture |

| CV | Computer Vision |

| DBN | Deep Belief Network |

| DCNN | Deep Convolutional Neural Network |

| DDR | Double Data Rate |

| DNN | Deep Neural Network |

| DPM | Deformable Part-based Model |

| FC | Fully-Convolutional |

| FCN | Fully-Convolutional Network |

| FDDB | Face Detection Dataset and Benchmark |

| FPGA | Field Programmable Gate Array |

| FPN | Feature Pyramid Networks |

| FPS | Frames Per Second |

| GAN | Generative Adversarial Network |

| GPIO | General Purpose Input/Output |

| GPU | Graphics Processing Unit |

| HCI | Human-Computer Interaction |

| HDMI | High-Definition Multimedia Interface |

| HLS | HTTP Live Streaming |

| HOG | Histogram of Oriented Gradients |

| HTTP | Hypertext Transfer Protocol |

| I2C | Inter-IC |

| I2S | Inter-IC Sound |

| ICF | Integral Channel Features |

| IEEE | Institute of Electrical and Electronics Engineers |

| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |

| IoT | Internet-of-things |

| IOU | Intersection over Union |

| ISE | Integrated Synthesis Environment |

| ITS | Intelligent Transportation Systems |

| LAN | Local Area Network |

| mAP | mean Average Precision |

| MRI | Magnetic Resonance Imaging |

| MS-COCO | Microsoft Common Objects in Context |

| PASCAL | Pattern Analysis, Statistical Modelling and Computational Learning |

| PCI | Peripheral Component Interconnect |

| PL | Programmable Logic |

| PoE | Power over Ethernet |

| PS | Processing System |

| PVANET | a lightweight feature extraction network architecture for object detection |

| RAM | Random Access Memory |

| RBM | Restricted Boltzmann Machine |

| RCNN | Regions with Convolutional Neural Networks |

| REST | Representational State Transfer |

| RFCN | Region-based Fully-Convolutional Networks |

| RGB | Red Green Blue |

| ROI | Region of Interest |

| RPN | Region Proposal Network |

| SD | Secure Digital |

| SDK | Software Development Kit |

| SIFT | Scale-Invariant Feature Transform |

| SoC | System-on-Chip |

| SPI | Serial Peripheral Interface |

| SPP | Spatial Pyramid Pooling |

| SPPNet | Spatial Pyramid Pooling Network |

| SSD | Single Shot Multi-Box Detector |

| SUN | Scene UNderstanding dataset |

| SVM | Support Vector Machine |

| TPPL | Task-driven Progressive Part Localization |

| TTL | Transistor-Transistor Logic |

| UAV | Unmanned Aerial Vehicles |

| USB | Universal Serial Bus |

| VHR | Very High Resolution |

| VJ | Viola–Jones |

| VOC | Visual Object Classes Challenge |

| YOLO | You Only Look Once |

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: San Diego, CA, USA, 2012; pp. 1097–1105.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: San Diego, CA, USA, 2015; pp. 91–99.

- Nguyen, H.T.; Lee, E.H.; Lee, S. Study on the Classification Performance of Underwater Sonar Image Classification Based on Convolutional Neural Networks for Detecting a Submerged Human Body. Sensors 2020, 20, 94.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Fourie, J.; Mills, S.; Green, R. Harmony filter: A robust visual tracking system using the improved harmony search algorithm. Image Vis. Comput. 2010, 28, 1702–1716.

- Cuevas, E.; Ortega-Sánchez, N.; Zaldivar, D.; Pérez-Cisneros, M. Circle detection by harmony search optimization. J. Intell. Robot. Syst. 2012, 66, 359–376.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- McIvor, A.M. Background subtraction techniques. Proc. Image Vis. Comput. 2000, 4, 3099–3104.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D. Cascade object detection with deformable part models. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2241–2248.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. arXiv 2019, arXiv:1905.05055.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Pathak, A.R.; Pandey, M.; Rautaray, S. Application of deep learning for object detection. Procedia Comput. Sci. 2018, 132, 1706–1717.

- Sultana, F.; Sufian, A.; Dutta, P. A review of object detection models based on convolutional neural network. arXiv 2019, arXiv:1905.01614.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Mittal, U.; Srivastava, S.; Chawla, P. Review of different techniques for object detection using deep learning. In Proceedings of the Third International Conference on Advanced Informatics for Computing Research, Shimla, India, 15–16 June 2019; pp. 1–8.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the International Conference on Computer Vision, Kerkyra, Corfu, Greece, 20–25 September 1999; Volume 2, pp. 1150–1157.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Belongie, S.; Malik, J.; Puzicha, J. Shape matching and object recognition using shape contexts. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 509–522.

- Girshick, R.B.; Felzenszwalb, P.F.; McAllester, D.A. Object detection with grammar models. In Advances in Neural Information Processing Systems; Curran Associates Inc.: San Francisco, CA, USA, 2011; pp. 442–450.

- Girshick, R.B. From Rigid Templates to Grammars: Object Detection with Structured Models. Ph.D. Thesis, The University of Chicago, Chicago, IL, USA, 2012.

- Li, Y.F.; Kwok, J.T.; Tsang, I.W.; Zhou, Z.H. A convex method for locating regions of interest with multi-instance learning. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Antwerp, Belgium, 14–18 September 2009; Springer: Berlin, Germany, 2009; pp. 15–30.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Girshick, R.B.; Felzenszwalb, P.F.; McAllester, D. Discriminatively Trained Deformable Part Models, Release 5. 2012. Available online: http://people.cs.uchicago.edu/~rbg/latent-release5/ (accessed on 7 May 2020).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Nancy, France, 14–18 September 2014; Springer: Berlin, Germany, 2014; pp. 818–833.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: San Francisco, CA, USA, 2016; pp. 379–387.

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Deng, Y.; Sun, J. Light-head R-CNN: In defense of two-stage object detector. arXiv 2017, arXiv:1711.07264.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin, Germany, 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Wu, B.; Iandola, F.; Jin, P.H.; Keutzer, K. SqueezeDet: Unified, small, low power fully convolutional neural networks for real-time object detection for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 129–137.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Object detection with keypoint triplets. arXiv 2019, arXiv:1904.08189.

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Mathematica. Available online: https://www.wolfram.com/mathematica/ (accessed on 31 December 2019).

- Dlib. Available online: Dlib.net (accessed on 31 December 2019).

- Theano. Available online: http://deeplearning.net/software/theano/ (accessed on 31 December 2019).

- Caffe. Available online: http://caffe.berkeleyvision.org/ (accessed on 31 December 2019).

- Deeplearning4j. Available online: https://deeplearning4j.org (accessed on 31 December 2019).

- Chainer. Available online: https://chainer.org (accessed on 31 December 2019).

- Keras. Available online: https://keras.io/ (accessed on 31 December 2019).

- Mathworks—Deep Learning. Available online: https://in.mathworks.com/solutions/deep-learning.html (accessed on 31 December 2019).

- Apache. Available online: http://singa.apache.org (accessed on 31 December 2019).

- TensorFlow. Available online: https://www.tensorflow.org/ (accessed on 31 December 2019).

- Pytorch. Available online: https://pytorch.org (accessed on 31 December 2019).

- BigDL. Available online: https://github.com/intel-analytics/BigDL (accessed on 31 December 2019).

- Apache. Available online: http://www.apache.org (accessed on 31 December 2019).

- MXnet. Available online: http://mxnet.io/ (accessed on 31 December 2019).

- Microsoft Cognitive Service. Available online: https://www.microsoft.com/cognitive-services/en-us/computer-vision-api (accessed on 31 December 2019).

- Amazon Rekognition. Available online: https://aws.amazon.com/rekognition/ (accessed on 31 December 2019).

- IBM Watson Visual Recognition service. Available online: http://www.ibm.com/watson/developercloud/visual-recognition.html (accessed on 31 December 2019).

- Google Cloud Vision API. Available online: https://cloud.google.com/vision/ (accessed on 31 December 2019).

- Cloud Sight. Available online: https://cloudsight.readme.io/v1.0/docs (accessed on 31 December 2019).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. Wider face: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5525–5533.

- Jain, V.; Learned-Miller, E. Fddb: A Benchmark for Face Detection in Unconstrained Settings; Technical Report, UMass Amherst Technical Report; UMass Amherst Libraries: Amherst, MA, USA, 2010.

- Zhang, S.; Benenson, R.; Schiele, B. Citypersons: A diverse dataset for pedestrian detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3213–3221.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3213–3223.

- Ess, A.; Leibe, B.; Van Gool, L. Depth and appearance for mobile scene analysis. In Proceedings of the 2007 IEEE International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Torralba, A.; Fergus, R.; Freeman, W.T. 80 million tiny images: A large data set for nonparametric object and scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1958–1970.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 5–12 September 2014; Springer: Berlin, Germany, 2014; pp. 740–755.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, University of Toronto, Toronto, ON, Canada, 2009.

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S.; Goering, C.; Berg, T.; Belhumeur, P. Caltech-UCSD Birds-200-2011; California Institute of Technology: Pasadena, CA, USA, 2011.

- Welinder, P.; Branson, S.; Mita, T.; Wah, C.; Schroff, F.; Belongie, S.; Perona, P. Caltech-UCSD birds 200; California Institute of Technology: Pasadena, CA, USA, 2010.

- Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset; California Institute of Technology: Pasadena, CA, USA, 2007.

- ILSVRC Detection Challenge Results. Available online: http://www.image-net.org/challenges/LSVRC/ (accessed on 31 December 2019).

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. Sun database: Large-scale scene recognition from abbey to zoo. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3485–3492.

- Open Images. Available online: https://www.kaggle.com/bigquery/open-images (accessed on 31 December 2019).

- Kragh, M.F.; Christiansen, P.; Laursen, M.S.; Larsen, M.; Steen, K.A.; Green, O.; Karstoft, H.; Jørgensen, R.N. FieldSAFE: Dataset for obstacle detection in agriculture. Sensors 2017, 17, 2579.

- Grady, N.W.; Underwood, M.; Roy, A.; Chang, W.L. Big data: Challenges, practices and technologies: NIST big data public working group workshop at IEEE big data 2014. In Proceedings of the International Conference on Big Data, Washington, DC, USA, 27–30 October 2014; pp. 11–15.

- Dollár, P.; Tu, Z.; Perona, P.; Belongie, S. Integral Channel Features; BMVC Press: London, UK, 2009.

- Maji, S.; Berg, A.C.; Malik, J. Classification using intersection kernel support vector machines is efficient. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Zhu, Q.; Yeh, M.C.; Cheng, K.T.; Avidan, S. Fast human detection using a cascade of histograms of oriented gradients. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1491–1498.

- Mohan, A.; Papageorgiou, C.; Poggio, T. Example-based object detection in images by components. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 349–361.

- Wang, X.; Han, T.X.; Yan, S. An HOG-LBP human detector with partial occlusion handling. In Proceedings of the International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

- Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by bayesian combination of edgelet part detectors. In Proceedings of the International Conference on Computer Vision, Beijing, China, 17–21 October 2005; Volume 1, pp. 90–97.

- Andreopoulos, A.; Tsotsos, J.K. 50 years of object recognition: Directions forward. Comput. Vis. Image Underst. 2013, 117, 827–891.

- Sadeghi, M.A.; Forsyth, D. 30hz object detection with dpm v5. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin, Germany, 2014; pp. 65–79.

- Hosang, J.; Omran, M.; Benenson, R.; Schiele, B. Taking a deeper look at pedestrians. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4073–4082.

- Yi, Z.; Yongliang, S.; Jun, Z. An improved tiny-yolov3 pedestrian detection algorithm. Optik 2019, 183, 17–23.

- Zhang, L.; Lin, L.; Liang, X.; He, K. Is faster R-CNN doing well for pedestrian detection? In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin, Germany, 2016; pp. 443–457.

- Song, T.; Sun, L.; Xie, D.; Sun, H.; Pu, S. Small-scale pedestrian detection based on somatic topology localization and temporal feature aggregation. arXiv 2018, arXiv:1807.01438.

- Cao, J.; Pang, Y.; Li, X. Learning multilayer channel features for pedestrian detection. IEEE Trans. Image Process. 2017, 26, 3210–3220.

- Mao, J.; Xiao, T.; Jiang, Y.; Cao, Z. What can help pedestrian detection? In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3127–3136.

- Krishna, H.; Jawahar, C. Improving small object detection. In Proceedings of the 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 340–345.

- Hu, Q.; Wang, P.; Shen, C.; van den Hengel, A.; Porikli, F. Pushing the limits of deep cnns for pedestrian detection. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1358–1368. [Google Scholar] [CrossRef]

- Lee, Y.; Bui, T.D.; Shin, J. Pedestrian detection based on deep fusion network using feature correlation. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 694–699. [Google Scholar]

- Cai, Z.; Saberian, M.; Vasconcelos, N. Learning complexity-aware cascades for deep pedestrian detection. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 3361–3369. [Google Scholar]

- Bosquet, B.; Mucientes, M.; Brea, V.M. STDnet: Exploiting high resolution feature maps for small object detection. Eng. Appl. Artif. Intell. 2020, 91, 103615. [Google Scholar] [CrossRef]

- Tian, Y.; Luo, P.; Wang, X.; Tang, X. Deep learning strong parts for pedestrian detection. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1904–1912. [Google Scholar]

- Ouyang, W.; Zhou, H.; Li, H.; Li, Q.; Yan, J.; Wang, X. Jointly learning deep features, deformable parts, occlusion and classification for pedestrian detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1874–1887. [Google Scholar] [CrossRef]

- Zhang, S.; Yang, J.; Schiele, B. Occluded pedestrian detection through guided attention in CNNs. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6995–7003. [Google Scholar]

- Gao, M.; Yu, R.; Li, A.; Morariu, V.I.; Davis, L.S. Dynamic zoom-in network for fast object detection in large images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6926–6935. [Google Scholar]

- Lu, Y.; Javidi, T.; Lazebnik, S. Adaptive object detection using adjacency and zoom prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2351–2359. [Google Scholar]

- Wang, X.; Xiao, T.; Jiang, Y.; Shao, S.; Sun, J.; Shen, C. Repulsion loss: Detecting pedestrians in a crowd. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7774–7783. [Google Scholar]

- Tian, Y.; Luo, P.; Wang, X.; Tang, X. Pedestrian detection aided by deep learning semantic tasks. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5079–5087. [Google Scholar]

- Shrivastava, A.; Gupta, A.; Girshick, R. Training region-based object detectors with online hard example mining. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 761–769. [Google Scholar]

- Tang, T.; Zhou, S.; Deng, Z.; Zou, H.; Lei, L. Vehicle detection in aerial images based on region convolutional neural networks and hard negative example mining. Sensors 2017, 17, 336. [Google Scholar] [CrossRef]

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4203–4212. [Google Scholar]

- Jin, J.; Fu, K.; Zhang, C. Traffic sign recognition with hinge loss trained convolutional neural networks. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1991–2000. [Google Scholar] [CrossRef]

- Zhou, M.; Jing, M.; Liu, D.; Xia, Z.; Zou, Z.; Shi, Z. Multi-resolution networks for ship detection in infrared remote sensing images. Infrared Phys. Technol. 2018, 92, 183–189. [Google Scholar] [CrossRef]

- Xu, D.; Ouyang, W.; Ricci, E.; Wang, X.; Sebe, N. Learning cross-modal deep representations for robust pedestrian detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5363–5371. [Google Scholar]

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Occlusion-aware R-CNN: Detecting pedestrians in a crowd. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 637–653. [Google Scholar]

- Zhou, C.; Yuan, J. Bi-box regression for pedestrian detection and occlusion estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 135–151. [Google Scholar]

- Hsu, W.Y. Automatic pedestrian detection in partially occluded single image. Integr. Comput.-Aided Eng. 2018, 25, 369–379. [Google Scholar] [CrossRef]

- Ren, Y.; Zhu, C.; Xiao, S. Deformable faster r-cnn with aggregating multi-layer features for partially occluded object detection in optical remote sensing images. Remote Sens. 2018, 10, 1470. [Google Scholar] [CrossRef]

- Li, W.; Ni, H.; Wang, Y.; Fu, B.; Liu, P.; Wang, S. Detection of partially occluded pedestrians by an enhanced cascade detector. IET Intell. Transp. Syst. 2014, 8, 621–630. [Google Scholar] [CrossRef]

- Yang, G.; Huang, T.S. Human face detection in a complex background. Pattern Recognit. 1994, 27, 53–63. [Google Scholar] [CrossRef]

- Craw, I.; Tock, D.; Bennett, A. Finding face features. In Proceedings of the European Conference on Computer Vision, Santa Margherita Ligure, Italy, 9–22 May 1992; Springer: Berlin, Germany, 1992; pp. 92–96. [Google Scholar]

- Turk, M.; Pentland, A. Eigenfaces for recognition. J. Cogn. Neurosci. 1991, 3, 71–86. [Google Scholar] [CrossRef] [PubMed]

- Vaillant, R.; Monrocq, C.; Le Cun, Y. Original approach for the localisation of objects in images. IEE Proc. Vision Image Signal Process. 1994, 141, 245–250. [Google Scholar] [CrossRef]

- Pentland; Moghaddam; Starner. View-based and modular eigenspaces for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 84–91. [Google Scholar]

- Rowley, H.A.; Baluja, S.; Kanade, T. Human face detection in visual scenes. In Advances in Neural Information Processing Systems; Curran Associates Inc.: San Francisco, CA, USA, 1996; pp. 875–881. [Google Scholar]

- Rowley, H.A.; Baluja, S.; Kanade, T. Neural network-based face detection. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 23–38. [Google Scholar] [CrossRef]

- Osuna, E.; Freund, R.; Girosit, F. Training support vector machines: An application to face detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 130–136. [Google Scholar]

- Byun, H.; Lee, S.W. Applications of support vector machines for pattern recognition: A survey. In Proceedings of the International Workshop on Support Vector Machine, Niagara Falls, ON, Canada, 10 August 2002; Springer: Berlin, Germany, 2002; pp. 213–236. [Google Scholar]

- Xiao, R.; Zhu, L.; Zhang, H.J. Boosting chain learning for object detection. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 14–17 October 2003; pp. 709–715. [Google Scholar]

- Zhang, Y.; Zhao, D.; Sun, J.; Zou, G.; Li, W. Adaptive convolutional neural network and its application in face recognition. Neural Process. Lett. 2016, 43, 389–399. [Google Scholar] [CrossRef]

- Wu, S.; Kan, M.; Shan, S.; Chen, X. Hierarchical Attention for Part-Aware Face Detection. Int. J. Comput. Vis. 2019, 127, 560–578. [Google Scholar] [CrossRef]

- Li, H.; Lin, Z.; Shen, X.; Brandt, J.; Hua, G. A convolutional neural network cascade for face detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, USA, 7–12 June 2015; pp. 5325–5334. [Google Scholar]

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503. [Google Scholar] [CrossRef]

- Hao, Z.; Liu, Y.; Qin, H.; Yan, J.; Li, X.; Hu, X. Scale-aware face detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6186–6195. [Google Scholar]

- Najibi, M.; Samangouei, P.; Chellappa, R.; Davis, L.S. SSH: Single stage headless face detector. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 21–26 July 2017; pp. 4875–4884. [Google Scholar]

- Shi, X.; Shan, S.; Kan, M.; Wu, S.; Chen, X. Real-time rotation-invariant face detection with progressive calibration networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 July 2018; pp. 2295–2303. [Google Scholar]

- Chen, D.; Hua, G.; Wen, F.; Sun, J. Supervised transformer network for efficient face detection. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin, Germany, 2016; pp. 122–138. [Google Scholar]

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. Faceness-net: Face detection through deep facial part responses. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1845–1859. [Google Scholar] [CrossRef]

- Ghodrati, A.; Diba, A.; Pedersoli, M.; Tuytelaars, T.; Van Gool, L. Deepproposal: Hunting objects by cascading deep convolutional layers. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 2578–2586. [Google Scholar]

- Wang, J.; Yuan, Y.; Yu, G. Face attention network: An effective face detector for the occluded faces. arXiv 2017, arXiv:1711.07246. [Google Scholar]

- Wang, X.; Shrivastava, A.; Gupta, A. A-fast-RCNN: Hard positive generation via adversary for object detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2606–2615. [Google Scholar]

- Zhou, Y.; Liu, D.; Huang, T. Survey of face detection on low-quality images. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition, Xi’an, China, 15–19 May 2018; pp. 769–773. [Google Scholar]

- Yang, S.; Xiong, Y.; Loy, C.C.; Tang, X. Face detection through scale-friendly deep convolutional networks. arXiv 2017, arXiv:1706.02863. [Google Scholar]

- Zhang, S.; Zhu, X.; Lei, Z.; Shi, H.; Wang, X.; Li, S.Z. S3fd: Single shot scale-invariant face detector. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 192–201. [Google Scholar]

- Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A unified multi-scale deep convolutional neural network for fast object detection. In Proceedings of the European conference on computer vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin, Germany, 2016; pp. 354–370. [Google Scholar]

- Zhang, C.; Xu, X.; Tu, D. Face detection using improved faster rcnn. arXiv 2018, arXiv:1802.02142. [Google Scholar]

- Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE International Conference on Computer Vision, South Korea, 27 October–2 November 2019; pp. 6054–6063. [Google Scholar]

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Deng, Y.; Sun, J. Detnet: A backbone network for object detection. arXiv 2018, arXiv:1804.06215. [Google Scholar]

- Liu, S.; Huang, D.; Wang, Y. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400. [Google Scholar]

- Zhang, F.; Du, B.; Zhang, L.; Xu, M. Weakly supervised learning based on coupled convolutional neural networks for aircraft detection. IEEE Trans. Geosci. Remote. Sens. 2016, 54, 5553–5563. [Google Scholar] [CrossRef]

- Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object detection in optical remote sensing images based on weakly supervised learning and high-level feature learning. IEEE Trans. Geosci. Remote. Sens. 2014, 53, 3325–3337. [Google Scholar] [CrossRef]

- Li, Q.; Wang, Y.; Liu, Q.; Wang, W. Hough transform guided deep feature extraction for dense building detection in remote sensing images. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 1872–1876. [Google Scholar]

- Mou, L.; Zhu, X.X. Vehicle instance segmentation from aerial image and video using a multitask learning residual fully convolutional network. IEEE Trans. Geosci. Remote. Sens. 2018, 56, 6699–6711. [Google Scholar] [CrossRef]

- Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle detection in satellite images by hybrid deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801. [Google Scholar] [CrossRef]

- Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep learning approach for car detection in UAV imagery. Remote Sens. 2017, 9, 312. [Google Scholar] [CrossRef]

- Ma, W.; Guo, Q.; Wu, Y.; Zhao, W.; Zhang, X.; Jiao, L. A novel multi-model decision fusion network for object detection in remote sensing images. Remote Sens. 2019, 11, 737. [Google Scholar] [CrossRef]

- Zhang, X.; Zhu, K.; Chen, G.; Tan, X.; Zhang, L.; Dai, F.; Liao, P.; Gong, Y. Geospatial object detection on high resolution remote sensing imagery based on double multi-scale feature pyramid network. Remote Sens. 2019, 11, 755. [Google Scholar] [CrossRef]

- Wang, J.; Ding, J.; Guo, H.; Cheng, W.; Pan, T.; Yang, W. Mask OBB: A Semantic Attention-Based Mask Oriented Bounding Box Representation for Multi-Category Object Detection in Aerial Images. Remote Sens. 2019, 11, 2930. [Google Scholar] [CrossRef]

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote. Sens. 2016, 54, 7405–7415. [Google Scholar] [CrossRef]

- Li, Q.; Mou, L.; Xu, Q.; Zhang, Y.; Zhu, X.X. R3-net: A deep network for multi-oriented vehicle detection in aerial images and videos. arXiv 2018, arXiv:1808.05560. [Google Scholar]

- Pang, J.; Li, C.; Shi, J.; Xu, Z.; Feng, H. R2-CNN: Fast Tiny Object Detection in Large-Scale Remote Sensing Images. IEEE Trans. Geosci. Remote. Sens. 2019, 57, 5512–5524. [Google Scholar] [CrossRef]

- Qian, X.; Lin, S.; Cheng, G.; Yao, X.; Ren, H.; Wang, W. Object Detection in Remote Sensing Images Based on Improved Bounding Box Regression and Multi-Level Features Fusion. Remote Sens. 2020, 12, 143. [Google Scholar] [CrossRef]

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote. Sens. 2014, 98, 119–132. [Google Scholar] [CrossRef]

- Liu, K.; Mattyus, G. Fast multiclass vehicle detection on aerial images. IEEE Geosci. Remote. Sens. Lett. 2015, 12, 1938–1942. [Google Scholar]

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203. [Google Scholar] [CrossRef]

- Zhang, Y.; Yuan, Y.; Feng, Y.; Lu, X. Hierarchical and robust convolutional neural network for very high-resolution remote sensing object detection. IEEE Trans. Geosci. Remote. Sens. 2019, 57, 5535–5548. [Google Scholar] [CrossRef]

- Islam, J.; Zhang, Y. Early Diagnosis of Alzheimer’s Disease: A Neuroimaging Study with Deep Learning Architectures. In Proceedings of the IEEE Conference on Computer vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 19–21 June 2018; pp. 1881–1883. [Google Scholar]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-first AAAI conference on artificial intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Marcus, D.S.; Fotenos, A.F.; Csernansky, J.G.; Morris, J.C.; Buckner, R.L. Open access series of imaging studies: Longitudinal MRI data in nondemented and demented older adults. J. Cogn. Neurosci. 2010, 22, 2677–2684. [Google Scholar] [CrossRef] [PubMed]

- Alaverdyan, Z.; Jung, J.; Bouet, R.; Lartizien, C. Regularized siamese neural network for unsupervised outlier detection on brain multiparametric magnetic resonance imaging: Application to epilepsy lesion screening. Med Image Anal. 2020, 60, 101618. [Google Scholar] [CrossRef] [PubMed]

- Laukamp, K.R.; Thiele, F.; Shakirin, G.; Zopfs, D.; Faymonville, A.; Timmer, M.; Maintz, D.; Perkuhn, M.; Borggrefe, J. Fully automated detection and segmentation of meningiomas using deep learning on routine multiparametric MRI. Eur. Radiol. 2019, 29, 124–132. [Google Scholar] [CrossRef] [PubMed]

- Katzmann, A.; Muehlberg, A.; Suehling, M.; Noerenberg, D.; Holch, J.W.; Heinemann, V.; Gross, H.M. Predicting Lesion Growth and Patient Survival in Colorectal Cancer Patients Using Deep Neural Networks. In Proceedings of the Conference track: Medical Imaging with Deep Learning, Amsterdam, The Netherlands, 4–6 July 2018. [Google Scholar]

- Bejnordi, B.E.; Veta, M.; Van Diest, P.J.; Van Ginneken, B.; Karssemeijer, N.; Litjens, G.; Van Der Laak, J.A.; Hermsen, M.; Manson, Q.F.; Balkenhol, M.; et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 2017, 318, 2199–2210. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Cain, E.H.; Saha, A.; Zhu, Z.; Mazurowski, M.A. Breast mass detection in mammography and tomosynthesis via fully convolutional network-based heatmap regression. In Medical Imaging 2018: Computer-Aided Diagnosis. International Society for Optics and Photonics; SPIE: Bellingham WA, USA, 2018; Volume 10575, p. 1057525. [Google Scholar]

- Dalmış, M.U.; Vreemann, S.; Kooi, T.; Mann, R.M.; Karssemeijer, N.; Gubern-Mérida, A. Fully automated detection of breast cancer in screening MRI using convolutional neural networks. J. Med Imaging 2018, 5, 014502. [Google Scholar] [CrossRef] [PubMed]

- Abràmoff, M.D.; Lou, Y.; Erginay, A.; Clarida, W.; Amelon, R.; Folk, J.C.; Niemeijer, M. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investig. Ophthalmol. Vis. Sci. 2016, 57, 5200–5206. [Google Scholar] [CrossRef]

- Winkels, M.; Cohen, T.S. 3D G-CNNs for pulmonary nodule detection. arXiv 2018, arXiv:1804.04656. [Google Scholar]

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 2018, 172, 1122–1131. [Google Scholar] [CrossRef]

- Food, U. Drug Administration. FDA Permits Marketing of Artificial Intelligence-Based Device to Detect Certain Diabetes-Related Eye Problems; SciPol: Durham, NC, USA, 2018. [Google Scholar]

- Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC). arXiv 2016, arXiv:1605.01397. [Google Scholar]

- González, G.; Ash, S.Y.; Vegas-Sánchez-Ferrero, G.; Onieva Onieva, J.; Rahaghi, F.N.; Ross, J.C.; Díaz, A.; San José Estépar, R.; Washko, G.R. Disease staging and prognosis in smokers using deep learning in chest computed tomography. Am. J. Respir. Crit. Care Med. 2018, 197, 193–203. [Google Scholar] [CrossRef]

- Depeursinge, A.; Vargas, A.; Platon, A.; Geissbuhler, A.; Poletti, P.A.; Müller, H. Building a reference multimedia database for interstitial lung diseases. Comput. Med Imaging Graph. 2012, 36, 227–238. [Google Scholar] [CrossRef] [PubMed]

- Armato III, S.G.; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A.; et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med Phys. 2011, 38, 915–931. [Google Scholar] [CrossRef] [PubMed]

- Petersen, R.C.; Aisen, P.; Beckett, L.A.; Donohue, M.; Gamst, A.; Harvey, D.J.; Jack, C.; Jagust, W.; Shaw, L.; Toga, A.; et al. Alzheimer’s disease neuroimaging initiative (ADNI): Clinical characterization. Neurology 2010, 74, 201–209. [Google Scholar] [CrossRef] [PubMed]

- Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med Imaging 2014, 34, 1993–2024. [Google Scholar] [CrossRef] [PubMed]

- Marcus, D.S.; Wang, T.H.; Parker, J.; Csernansky, J.G.; Morris, J.C.; Buckner, R.L. Open Access Series of Imaging Studies (OASIS): Cross-sectional MRI data in young, middle aged, nondemented, and demented older adults. J. Cogn. Neurosci. 2007, 19, 1498–1507. [Google Scholar] [CrossRef]

- Bowyer, K.; Kopans, D.; Kegelmeyer, W.; Moore, R.; Sallam, M.; Chang, K.; Woods, K. The digital database for screening mammography. In Proceedings of the Third International Workshop on Digital Mammography, Chicago, IL, USA, 9–12 June 1996; Volume 58, p. 27. [Google Scholar]

- Suckling, J.; Parker, J.; Dance, D.; Astley, S.; Hutt, I.; Boggis, C.; Ricketts, I.; Stamatakis, E.; Cerneaz, N.; Kok, S.; et al. Mammographic Image Analysis Society (MIAS) Database v1. 21; University of Cambridge: Cambridge, UK, 2015. [Google Scholar]

- Bandi, P.; Geessink, O.; Manson, Q.; Van Dijk, M.; Balkenhol, M.; Hermsen, M.; Bejnordi, B.E.; Lee, B.; Paeng, K.; Zhong, A.; et al. From detection of individual metastases to classification of lymph node status at the patient level: The camelyon17 challenge. IEEE Trans. Med Imaging 2018, 38, 550–560. [Google Scholar] [CrossRef]

- Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J.S. Inbreast: Toward a full-field digital mammographic database. Acad. Radiol. 2012, 19, 236–248. [Google Scholar] [CrossRef]

- Staal, J.; Abràmoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med Imaging 2004, 23, 501–509. [Google Scholar] [CrossRef]

- Hoover, A.; Kouznetsova, V.; Goldbaum, M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med Imaging 2000, 19, 203–210. [Google Scholar] [CrossRef]

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234. [Google Scholar] [CrossRef]

- Hu, W.; Zhuo, Q.; Zhang, C.; Li, J. Fast branch convolutional neural network for traffic sign recognition. IEEE Intell. Transp. Syst. Mag. 2017, 9, 114–126. [Google Scholar] [CrossRef]

- Shao, F.; Wang, X.; Meng, F.; Rui, T.; Wang, D.; Tang, J. Real-time traffic sign detection and recognition method based on simplified Gabor wavelets and CNNs. Sensors 2018, 18, 3192. [Google Scholar] [CrossRef] [PubMed]

- Shao, F.; Wang, X.; Meng, F.; Zhu, J.; Wang, D.; Dai, J. Improved faster R-CNN traffic sign detection based on a second region of interest and highly possible regions proposal network. Sensors 2019, 19, 2288. [Google Scholar] [CrossRef] [PubMed]

- Cao, J.; Song, C.; Peng, S.; Xiao, F.; Song, S. Improved traffic sign detection and recognition algorithm for intelligent vehicles. Sensors 2019, 19, 4021. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Huang, M.; Jin, X.; Li, X. A real-time chinese traffic sign detection algorithm based on modified YOLOv2. Algorithms 2017, 10, 127. [Google Scholar] [CrossRef]

- Luo, H.; Yang, Y.; Tong, B.; Wu, F.; Fan, B. Traffic sign recognition using a multi-task convolutional neural network. IEEE Trans. Intell. Transp. Syst. 2017, 19, 1100–1111. [Google Scholar] [CrossRef]

- Li, J.; Wang, Z. Real-time traffic sign recognition based on efficient CNNs in the wild. IEEE Trans. Intell. Transp. Syst. 2018, 20, 975–984. [Google Scholar] [CrossRef]

- Masood, S.Z.; Shu, G.; Dehghan, A.; Ortiz, E.G. License plate detection and recognition using deeply learned convolutional neural networks. arXiv 2017, arXiv:1703.07330. [Google Scholar]

- Laroca, R.; Zanlorensi, L.A.; Gonçalves, G.R.; Todt, E.; Schwartz, W.R.; Menotti, D. An efficient and layout-independent automatic license plate recognition system based on the YOLO detector. arXiv 2019, arXiv:1909.01754. [Google Scholar]

- Hendry; Chen, R.-C. Automatic License Plate Recognition via sliding-window darknet-YOLO deep learning. Image Vis. Comput. 2019, 87, 47–56. [Google Scholar]

- Raza, M.A.; Qi, C.; Asif, M.R.; Khan, M.A. An Adaptive Approach for Multi-National Vehicle License Plate Recognition Using Multi-Level Deep Features and Foreground Polarity Detection Model. Appl. Sci. 2020, 10, 2165. [Google Scholar] [CrossRef]

- Gonçalves, G.R.; Diniz, M.A.; Laroca, R.; Menotti, D.; Schwartz, W.R. Real-time automatic license plate recognition through deep multi-task networks. In Proceedings of the 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Paraná, Brazil, 29 October–1 November 2018; pp. 110–117. [Google Scholar]

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A survey on 3d object detection methods for autonomous driving applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795. [Google Scholar] [CrossRef]

- Pham, C.C.; Jeon, J.W. Robust object proposals re-ranking for object detection in autonomous driving using convolutional neural networks. Signal Process. Image Commun. 2017, 53, 110–122. [Google Scholar] [CrossRef]

- Li, B.; Zhang, T.; Xia, T. Vehicle detection from 3d lidar using fully convolutional network. arXiv 2016, arXiv:1608.07916. [Google Scholar]

- Helbing, D.; Brockmann, D.; Chadefaux, T.; Donnay, K.; Blanke, U.; Woolley-Meza, O.; Moussaid, M.; Johansson, A.; Krause, J.; Schutte, S.; et al. Saving human lives: What complexity science and information systems can contribute. J. Stat. Phys. 2015, 158, 735–781. [Google Scholar] [CrossRef]

- Saleh, S.A.M.; Suandi, S.A.; Ibrahim, H. Recent survey on crowd density estimation and counting for visual surveillance. Eng. Appl. Artif. Intell. 2015, 41, 103–114. [Google Scholar] [CrossRef]

- Jones, M.J.; Snow, D. Pedestrian detection using boosted features over many frames. In Proceedings of the International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar]

- Viola, P.; Jones, M.J.; Snow, D. Detecting pedestrians using patterns of motion and appearance. Int. J. Comput. Vis. 2005, 63, 153–161. [Google Scholar] [CrossRef]

- Leibe, B.; Seemann, E.; Schiele, B. Pedestrian detection in crowded scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 878–885. [Google Scholar]

- Lin, S.F.; Chen, J.Y.; Chao, H.X. Estimation of number of people in crowded scenes using perspective transformation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2001, 31, 645–654. [Google Scholar]

- Junior, J.C.S.J.; Musse, S.R.; Jung, C.R. Crowd analysis using computer vision techniques. IEEE Signal Process. Mag. 2010, 27, 66–77. [Google Scholar]

- Kok, V.J.; Lim, M.K.; Chan, C.S. Crowd behavior analysis: A review where physics meets biology. Neurocomputing 2016, 177, 342–362. [Google Scholar] [CrossRef]

- Sun, M.; Zhang, D.; Qian, L.; Shen, Y. Crowd Abnormal Behavior Detection Based on Label Distribution Learning. In Proceedings of the International Conference on Intelligent Computation Technology and Automation, Nanchang, China, 14–15 June 2015; pp. 345–348. [Google Scholar]

- Zhao, L.; Li, S. Object Detection Algorithm Based on Improved YOLOv3. Electronics 2020, 9, 537. [Google Scholar] [CrossRef]

- Reno, V.; Mosca, N.; Marani, R.; Nitti, M.; D’Orazio, T.; Stella, E. Convolutional neural networks based ball detection in tennis games. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 19–21 June 2018; pp. 1758–1764. [Google Scholar]

- Kang, K.; Ouyang, W.; Li, H.; Wang, X. Object detection from video tubelets with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 817–825. [Google Scholar]

- Pobar, M.; Ivasic-Kos, M. Active Player Detection in Handball Scenes Based on Activity Measures. Sensors 2020, 20, 1475. [Google Scholar] [CrossRef] [PubMed]

- Pobar, M.; Ivašić-Kos, M. Detection of the leading player in handball scenes using Mask R-CNN and STIPS. In Proceedings of the Eleventh International Conference on Machine Vision (ICMV 2018), International Society for Optics and Photonics, Munich, Germany, 1–3 November 2019; Volume 11041, p. 110411V. [Google Scholar]

- Pobar, M.; Ivasic-Kos, M. Mask R-CNN and Optical flow based method for detection and marking of handball actions. In Proceedings of the 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Beijing, China, 13–15 October 2018; pp. 1–6. [Google Scholar]

- Burić, M.; Pobar, M.; Ivašić-Kos, M. Object detection in sports videos. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 1034–1039. [Google Scholar]

- Acuna, D. Towards real-time detection and tracking of basketball players using deep neural networks. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Afif, M.; Ayachi, R.; Said, Y.; Atri, M. Deep Learning Based Application for Indoor Scene Recognition. Neural Process. Lett. 2020, 1–11.

- Tapu, R.; Mocanu, B.; Zaharia, T. DEEP-SEE: Joint object detection, tracking and recognition with application to visually impaired navigational assistance. Sensors 2017, 17, 2473.

- Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

- Hu, X.; Zhu, L.; Fu, C.W.; Qin, J.; Heng, P.A. Direction-aware spatial context features for shadow detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7454–7462.

- Yang, Z.; Li, Q.; Wenyin, L.; Lv, J. Shared multi-view data representation for multi-domain event detection. IEEE Trans. Pattern Anal. Mach. Intell. 2019.

- Hashmi, M.F.; Gupta, V.; Vijay, D.; Rathwa, V. Computer Vision-Based Assistive Technology for Helping Visually Impaired and Blind People Using Deep Learning Framework. In Handbook of Research on Emerging Trends and Applications of Machine Learning; IGI Global: Hershey, PA, USA, 2020; pp. 577–598.

- Buzzelli, M.; Albé, A.; Ciocca, G. A vision-based system for monitoring elderly people at home. Appl. Sci. 2020, 10, 374.

- Szegedy, C.; Toshev, A.; Erhan, D. Deep neural networks for object detection. In Advances in Neural Information Processing Systems; Curran Associates Inc.: San Francisco, CA, USA, 2013; pp. 2553–2561.

- Du Terrail, J.O.; Jurie, F. On the use of deep neural networks for the detection of small vehicles in ortho-images. In Proceedings of the 2017 IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 4212–4216.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Erhan, D.; Szegedy, C.; Toshev, A.; Anguelov, D. Scalable object detection using deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2147–2154.

- Ohn-Bar, E.; Trivedi, M.M. Multi-scale volumes for deep object detection and localization. Pattern Recognit. 2017, 61, 557–572.

- Huang, C.; He, Z.; Cao, G.; Cao, W. Task-driven progressive part localization for fine-grained object recognition. IEEE Trans. Multimed. 2016, 18, 2372–2383.

- Liu, N.; Han, J. DHSNet: Deep hierarchical saliency network for salient object detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 678–686.

- Li, X.; Zhao, L.; Wei, L.; Yang, M.H.; Wu, F.; Zhuang, Y.; Ling, H.; Wang, J. DeepSaliency: Multi-task deep neural network model for salient object detection. IEEE Trans. Image Process. 2016, 25, 3919–3930.

- Wang, L.; Lu, H.; Ruan, X.; Yang, M.H. Deep networks for saliency detection via local estimation and global search. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3183–3192.

- Li, G.; Yu, Y. Deep contrast learning for salient object detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 478–487.

- Gao, M.L.; He, X.; Luo, D.; Yu, Y.M. Object tracking based on harmony search: Comparative study. J. Electron. Imaging 2012, 21, 043001.

- Hao, Z. Improved Faster R-CNN for Detecting Small Objects and Occluded Objects in Electron Microscope Imaging. Acta Microsc. 2020, 29.

- Leung, H.K.; Chen, X.Z.; Yu, C.W.; Liang, H.Y.; Wu, J.Y.; Chen, Y.L. A Deep-Learning-Based Vehicle Detection Approach for Insufficient and Nighttime Illumination Conditions. Appl. Sci. 2019, 9, 4769.

- Park, J.; Chen, J.; Cho, Y.K.; Kang, D.Y.; Son, B.J. CNN-based person detection using infrared images for night-time intrusion warning systems. Sensors 2020, 20, 34.

- Kim, K.H.; Hong, S.; Roh, B.; Cheon, Y.; Park, M. PVANET: Deep but lightweight neural networks for real-time object detection. arXiv 2016, arXiv:1608.08021.

- Shih, Y.F.; Yeh, Y.M.; Lin, Y.Y.; Weng, M.F.; Lu, Y.C.; Chuang, Y.Y. Deep co-occurrence feature learning for visual object recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4123–4132.

- Denton, E.L.; Chintala, S.; Szlam, A.; Fergus, R. Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montréal, ON, Canada, 7–12 December 2015; Volume 1, pp. 1486–1494.

- Takác, M.; Bijral, A.S.; Richtárik, P.; Srebro, N. Mini-Batch Primal and Dual Methods for SVMs. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1022–1030.

- Goring, C.; Rodner, E.; Freytag, A.; Denzler, J. Nonparametric part transfer for fine-grained recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 2489–2496.

- Lin, D.; Shen, X.; Lu, C.; Jia, J. Deep LAC: Deep localization, alignment and classification for fine-grained recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1666–1674.

- Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-based R-CNNs for fine-grained category detection. In Proceedings of the European Conference on Computer Vision, Zürich, Switzerland, 6–12 September 2014; Springer: Berlin, Germany, 2014; pp. 834–849.

- Raspberry Pi. Available online: https://www.raspberrypi.org/ (accessed on 31 December 2019).

- Nakahara, H.; Yonekawa, H.; Sato, S. An object detector based on multiscale sliding window search using a fully pipelined binarized CNN on an FPGA. In Proceedings of the International Conference on Field Programmable Technology, Melbourne, Australia, 11–13 December 2017; pp. 168–175.

- Soma, P.; Jatoth, R.K. Hardware Implementation Issues on Image Processing Algorithms. In Proceedings of the International Conference on Computing Communication and Automation, Greater Noida, India, 14–15 December 2018; pp. 1–6.

- JetsonTX2. Available online: https://elinux.org/JetsonTX2 (accessed on 31 December 2019).

- Garland, M.; Le Grand, S.; Nickolls, J.; Anderson, J.; Hardwick, J.; Morton, S.; Phillips, E.; Zhang, Y.; Volkov, V. Parallel computing experiences with CUDA. IEEE Micro 2008, 28, 13–27.

- Stone, J.E.; Gohara, D.; Shi, G. OpenCL: A parallel programming standard for heterogeneous computing systems. Comput. Sci. Eng. 2010, 12, 66–73.

- NVIDIA Collective Communications Library (NCCL). Available online: https://developer.nvidia.com/nccl (accessed on 31 December 2019).

- Hwang, S.; Lee, Y. FPGA-based real-time lane detection for advanced driver assistance systems. In Proceedings of the IEEE Asia Pacific Conference on Circuits and Systems, Jeju, South Korea, 25–28 October 2016; pp. 218–219.

- Sajjanar, S.; Mankani, S.K.; Dongrekar, P.R.; Kumar, N.S.; Mohana; Aradhya, H.V.R. Implementation of real time moving object detection and tracking on FPGA for video surveillance applications. In Proceedings of the IEEE Distributed Computing, VLSI, Electrical Circuits and Robotics (DISCOVER), Mangalore, India, 13–14 August 2016; pp. 289–295.

- Tijtgat, N.; Van Ranst, W.; Goedeme, T.; Volckaert, B.; De Turck, F. Embedded real-time object detection for a UAV warning system. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2110–2118.

- Hossain, S.; Lee, D.J. Deep Learning-Based Real-Time Multiple-Object Detection and Tracking from Aerial Imagery via a Flying Robot with GPU-Based Embedded Devices. Sensors 2019, 19, 3371.

- Stepanenko, S.; Yakimov, P. Using high-performance deep learning platform to accelerate object detection. In Proceedings of the International Conference on Information Technology and Nanotechnology, Samara, Russia, 26–29 May 2019; pp. 1–7.

- Körez, A.; Barışçı, N. Object Detection with Low Capacity GPU Systems Using Improved Faster R-CNN. Appl. Sci. 2020, 10, 83.

- Çambay, V.Y.; Uçar, A.; Arserim, M.A. Object Detection on FPGAs and GPUs by Using Accelerated Deep Learning. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 28–30 September 2019; pp. 1–5.

- Moon, Y.Y.; Geem, Z.W.; Han, G.T. Vanishing point detection for self-driving car using harmony search algorithm. Swarm Evol. Comput. 2018, 41, 111–119.

- Yao, Y.; Wang, Y.; Guo, Y.; Lin, J.; Qin, H.; Yan, J. Cross-dataset Training for Class Increasing Object Detection. arXiv 2020, arXiv:2001.04621.

- Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316.

| S.No | Architecture | mAP (MS-COCO) | mAP (PASCAL VOC 2007) | FPS |
|---|---|---|---|---|
| 1 | RCNN [17] | – | 66% | 0.1 |
| 2 | SPPNet [32] | – | 63.10% | 1 |
| 3 | Fast RCNN [33] | 35.90% | 70.00% | 0.5 |
| 4 | Faster RCNN [2] | 36.20% | 73.20% | 6 |
| 5 | Mask RCNN [44] | – | 78.20% | 5 |
| 6 | YOLO [40] | – | 63.40% | 45 |
| 7 | SSD [41] | 31.20% | 76.80% | 8 |
| 8 | YOLOv2 [42] | 21.60% | 78.60% | 67 |
| 9 | YOLOv3 [43] | 33.00% | – | 35 |
| 10 | SqueezeDet [45] | – | – | 57.2 |
| 11 | SqueezeDet+ [45] | – | – | 32.1 |
| 12 | CornerNet [48] | 69.2 | – | 4 |

| Architecture | Backbone Model | AP | AP(E) | AP(M) | AP(H) |
|---|---|---|---|---|---|
| Two-stage Detectors | | | | | |
| RCNN [17] | VGG16 | – | – | – | – |
| SppNet [32] | VGG16 | – | – | – | – |
| Fast RCNN [33] | VGG16 | 19.7 | – | – | – |
| Faster RCNN with FPN [37] | VGG16 | 36.2 | 18.2 | 39 | 48.2 |
| Mask RCNN [44] | ResNeXt-101 | 39.8 | 22.1 | 43.2 | 51.2 |
| One-stage Detectors | | | | | |
| YOLOv2 [42] | DarkNet19 | 21.6 | 5 | 22.4 | 35.5 |
| YOLOv3 [43] | DarkNet53 | 33 | 18.3 | 35.4 | 41.9 |
| SSD300 [41] | VGG16 | 25.1 | 6.6 | 24.4 | 36.5 |
| SSD512 [41] | VGG16 | 28.8 | 10.9 | 31.8 | 43.5 |
| SSD513 [50] | ResNet-101 | 31.2 | 10.2 | 34.5 | 49.8 |
| RetinaNet500 [40] | ResNet-101 | 34.4 | 14.7 | 38.5 | 49.1 |
| RetinaNet800 [40] | ResNet101-FPN | 39.1 | 21.8 | 42.7 | 50.2 |
| SqueezeDet* [45] | SqueezeNet | 76.7 | 77.1 | 68.3 | 65.8 |
| SqueezeDet+* [45] | SqueezeNet | 80.4 | 81.4 | 71.3 | 68.5 |
| CornerNet511 (single-scale) [46] | Hourglass-104 | 40.6 | 19.1 | 42.8 | 54.3 |
| CornerNet511 (multi-scale) [46] | Hourglass-104 | 42.2 | 20.7 | 44.8 | 56.6 |

| Name | Designer | Software License | Supported Platforms | Features | Languages Supported | RNN | CNN | DBN/RBM | Parallel Execution |
|---|---|---|---|---|---|---|---|---|---|
| Wolfram Mathematica [51] | Wolfram Research | Proprietary | Windows, macOS, Linux, Cloud computing | Machine learning, data science, image processing, neural networks, geometry, visualization | C++, Wolfram Language, CUDA | Yes | Yes | Yes | Yes |
| Dlib [52] | Davis King | Boost software license | Cross Platform | Used for creating robust and complex software in C++ to solve real-world problems. Useful for both industry and academia | C++ | No | Yes | Yes | Yes |
| Theano [53] | Université de Montréal | BSD | Cross Platform | Uses GPUs and symbolic differentiation to define, optimize, and evaluate expressions involving multi-dimensional arrays efficiently | Python | Yes | Yes | Yes | Yes |
| Caffe [54] | Berkeley Vision and Learning Center | BSD | Linux, macOS, Windows | Expressive architecture and speed. A single flag switches between CPU and GPU, so a model can be trained on a GPU machine and then deployed on handheld devices. | Python, MATLAB, C++ | Yes | Yes | No | X |
| Deeplearning4j [55] | Deeplearning4j community; originally A. Gibson | Apache 2.0 | Windows, macOS, Linux, Android | Combines variational autoencoders, sequence-to-sequence autoencoders, convolutional nets, or recurrent nets as needed in a distributed deep learning framework | Java, Scala, Clojure, Python (Keras) | Yes | Yes | Yes | Yes |