1. Introduction

The proportion of the elderly in the population in most advanced countries now exceeds 15% [1], and the problems associated with aging, including dementia and chronic illnesses, are increasing year by year. The care of the elderly is important but is especially difficult for those living alone, and the situation is made worse by the current serious shortage of healthcare personnel. The concept of the Activities of Daily Living (ADL) first emerged in the 1950s in the field of healthcare as a tool to assess the daily self-care activities of disabled and physically or mentally handicapped patients, such as those with dementia or chronic mental health problems. ADL usually include ordinary activities such as eating, shifting position, mobility, bathing, and dressing. These are typically rated on a scale such as the Barthel Index, Katz Index or Karnofsky Index, with the Barthel Index being most commonly used as a basis for evaluation of home care [2,3,4,5]. Motion recognition is now a common technology with applications in games, interactive human-computer interfaces, telepresence technology, and medical care. In the medical field it is currently used in remote rehabilitation systems, which enable a virtual rehabilitation teacher to evaluate the effectiveness of at-home rehabilitation and alleviate the lack of medical resources in remote areas [6,7]. Motion recognition methods that focus on the description of image features have inspired researchers to improve the efficiency of the technology. ADL recognition has been discussed for years and some work has been carried out to improve sensor ability [8]. Recent studies have mostly concentrated on the development of methodologies, including machine learning, probabilistic finite-state automata, entropy-based, and knowledge-driven approaches [9,10,11]. The processing of data for ADL is also important for improving recognition accuracy [12,13,14]. It is desirable to use a comprehensive methodology that provides solutions for the recognition of human behaviors, image processing, and movement calculation to achieve the goal of smart care.

This paper describes a Deep Neural Network (DNN)-based recognition system that combines ADL recognition, image/video processing, movement calculation, and DNN for the purpose of achieving smart care. It utilizes skeletal data obtained from color images from webcams or surveillance records, using the DNN for ADL recognition. The system takes advantage of low-cost and easy-to-install color cameras for monitoring indoor environments and incorporates a feature extraction method for motion recognition.

2. Related Applications for Neural Networks

Typically, neural networks (NN) trained with back-propagation (BP) perform well when dealing with simple problems, where choosing the appropriate features is important [15,16,17]. However, this methodology may not be sufficient to deal with complex architectures and high-dimensional data. Complex architectures can be avoided by applying deep learning, which uses unsupervised learning methods to extract features from the data and then moves on to supervised learning for labelling data [18,19]. In 2012, the Google Brain team applied deep learning to process YouTube videos [13]. They also developed the Tensor Processing Unit (TPU) [20,21,22,23], a custom application-specific integrated circuit (ASIC). There are more and more examples of system architectures for the application of deep learning, including DNN, Deep Belief Networks (DBN) and Convolutional Neural Networks (CNN) [24,25]. A CNN combines feature extraction with a classifier: the data are processed in the convolutional layers, reduced in the pooling layers, and passed on to fully connected layers for classification. The Convolutional Architecture for Fast Feature Embedding (Caffe) is a typical deep learning framework [26]. It has the advantages of ease of use, fast training, and modularity [26,27]. In recent years, Caffe version 2 has spread to mobile devices running Android and iOS [28,29,30]. The above studies demonstrate that DNN-oriented approaches and applications have become more efficient.

3. Data Collection and Processing

Easy acquisition and availability to the general population is the key to simulating real ADL. The camera used to record ADL, of a kind available to most users, must meet the following basic prerequisites: a resolution of 640 by 480, a recorded video length of 10 to 20 s, and a frame rate of 30 Frames Per Second (FPS). These conditions are essential for practical purposes. Therefore, the following criteria are set for the evaluations: (1) the angle and distance from the camera to the subject can vary by 30 degrees from left to right and front to back; (2) the subjects can range in height from 160 to 180 cm with a mean height of around 173 cm, a range that includes 80% of the population in Taiwan; (3) the 10 most common ADL are selected, including standing, bending, squatting, sitting, eating, raising one hand, raising two hands, sitting plus drinking, standing plus drinking, and falling [31,32,33]. Each common ADL is performed for 10 to 20 s and repeated 4 times, giving 40 videos in total for training. Snapshots of each common ADL are illustrated in Figure 1. In accordance with the policy of the Ministry of Science and Technology (MOST), Taiwan, every individual participating in the research signed an agreement and gave permission.

For indoor ADL determination one usually needs to consider that the position of the human body, and hence the skeletal information, changes continually even for the same individual. It is necessary to normalize the skeletal information in pre-processing to generate features that facilitate neural network identification. The normalization process is divided into two parts, data translation and data scaling. Data translation takes the neck as the new origin: the coordinates of the neck $P_{neck}(t)$ are subtracted from each joint point $P_x(t)$ to give the translated joint point $P'_x(t)$. It can be expressed as follows:

$$P'_x(t) = P_x(t) - P_{neck}(t) \quad (1)$$

where $P'_x(t)$ is the new coordinate of the joint point x at time t; $P_x(t)$ is the coordinate of the joint point at time t; and $P_{neck}(t)$ is the coordinate point of the neck at time t.

During data scaling the skeleton is captured on a 2D image. The original length of the limbs is not known, but the coordinates of the skeleton on the 2D image are. Consider the distance h(t) from the center of the hip joint, $P_{hip}(t)$, to the neck at time t as the unit length. It is formulated in Equation (2), from which it can be seen that the proportion of h(t) in the whole body when bending over is smaller than that in the whole body when standing:

$$h(t) = \lVert P_{hip}(t) - P_{neck}(t) \rVert \quad (2)$$

Now, s(t) is solved for in Equation (3) using the two limb lengths as the basis for normalization. The maximum value of s(t) and h(t) is expressed as the unit length U(t) in Equation (4). By dividing the skeletal coordinate points by U(t), the new scaled joint coordinate point $P''_x(t)$ can be obtained in Equation (5):

$$U(t) = \max\big(s(t),\, h(t)\big) \quad (4)$$

$$P''_x(t) = \frac{P'_x(t)}{U(t)} \quad (5)$$
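The translation and scaling steps can be illustrated with a minimal Python sketch. It assumes one frame is given as a dictionary of 2D joint coordinates; the joint names, the function name `normalize_skeleton`, and the optional `limb_length` argument standing in for s(t) are hypothetical and not taken from the original system.

```python
import math

def normalize_skeleton(joints, neck="neck", hip="hip_center", limb_length=None):
    """Translate joints so the neck is the origin, then scale by a unit length.

    joints: dict mapping joint name -> (x, y) pixel coordinates for one frame.
    limb_length: optional s(t); its definition is not reproduced here, so the
    caller supplies it (hypothetical parameter).
    """
    nx, ny = joints[neck]
    # Data translation: subtract the neck coordinates from every joint.
    translated = {name: (x - nx, y - ny) for name, (x, y) in joints.items()}
    # h(t): distance from the hip center to the neck (the neck is now the origin).
    hx, hy = translated[hip]
    h = math.hypot(hx, hy)
    # U(t): the larger of s(t) and h(t); falls back to h(t) if s(t) is not given.
    unit = max(h, limb_length) if limb_length is not None else h
    if unit == 0:
        raise ValueError("degenerate skeleton: unit length is zero")
    # Data scaling: divide every translated coordinate by the unit length.
    return {name: (x / unit, y / unit) for name, (x, y) in translated.items()}

frame = {"neck": (320.0, 100.0), "hip_center": (320.0, 200.0), "left_wrist": (270.0, 150.0)}
normalized = normalize_skeleton(frame)
print(normalized["hip_center"])  # (0.0, 1.0)
print(normalized["left_wrist"])  # (-0.5, 0.5)
```

After normalization the same posture yields the same coordinates regardless of where the subject stands in the image or how far they are from the camera, which is what makes the later angle and length features comparable across videos.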

The features extracted for recognition are the limb joint angles, the angles between the limb ends and the horizontal, and the change in length from wrist to joint. Their computation is described below.

3.1. Limb Joint Angle

The upper limbs are described by the joint angles at the left elbow, left shoulder, neck, right shoulder and right elbow. Similarly, the lower limbs have joint angles at the left knee, left hip joint, right hip joint, and right knee. A joint angle can be calculated using three selected points $P_1$, $P_2$ and $P_3$, where $P_2$ is the center point for calculation of the vectors $\vec{v}_1$ and $\vec{v}_2$, as shown in Equations (6) and (7):

$$\vec{v}_1 = P_1 - P_2 \quad (6)$$

$$\vec{v}_2 = P_3 - P_2 \quad (7)$$

The angle of a vector relative to the horizontal axis is calculated with the inverse tangent function, which returns values from $-\pi$ to $\pi$, so the value must be converted to the range 0 to $2\pi$ as in Equation (8):

$$\theta(\vec{v}) = \begin{cases} \operatorname{atan2}(v_y, v_x), & \operatorname{atan2}(v_y, v_x) \ge 0 \\ \operatorname{atan2}(v_y, v_x) + 2\pi, & \text{otherwise} \end{cases} \quad (8)$$

where $\vec{v} = (v_x, v_y)$ is the input vector. Substituting $\vec{v}_1$ and $\vec{v}_2$ from Equations (6) and (7) into Equation (8) yields the angles i and j. The angle a(i, j) relative to $P_2$ can then be found by Equation (9). The angles calculated above are shown in Figure 2, which marks the upper limb joint angles and the lower limb joint angles.
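As an illustration of the joint angle computation, the following Python sketch forms the two vectors from the center point, converts each to a direction angle in the range 0 to 2π, and combines them. The function names are hypothetical, and combining the two direction angles as their difference modulo 2π is an assumption; the original Equation (9) may use a different convention.

```python
import math

TWO_PI = 2.0 * math.pi

def direction_angle(v):
    """Angle of vector v = (vx, vy) from the horizontal axis, mapped to 0..2*pi."""
    angle = math.atan2(v[1], v[0])      # atan2 returns a value in [-pi, pi]
    return angle + TWO_PI if angle < 0 else angle

def joint_angle(p1, p2, p3):
    """Angle at the center point p2 between the rays p2->p1 and p2->p3.

    Assumption: the two direction angles are combined as their difference
    modulo 2*pi; the source's own formula may differ.
    """
    v1 = (p1[0] - p2[0], p1[1] - p2[1])
    v2 = (p3[0] - p2[0], p3[1] - p2[1])
    i = direction_angle(v1)
    j = direction_angle(v2)
    return (j - i) % TWO_PI

# Elbow at the origin, shoulder along +x, wrist along +y: a right angle.
elbow = joint_angle((1.0, 0.0), (0.0, 0.0), (0.0, 1.0))
print(math.degrees(elbow))
```

The same `direction_angle` helper also gives the angle between a single limb segment and the horizontal axis when it is applied to the vector from a joint to the end of the limb.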

3.2. Angle between the Limb End and the Horizontal

The human skeleton is closely related to the nearby space while the individual is moving. The angles between the limb ends and the horizontal axis are computed in the same way as the joint angles, by plugging the limb vectors into Equation (8). There are a total of four angles, shown in Figure 3.

3.3. Length Change for Wrist to Joint

The position of the wrists relative to the joints can be clearly recognized in postures such as raising the hands, sitting, and squatting. The shoulder and knee joints are taken as the reference points with which to calculate the change in position, expressed as the height difference along the y-axis. The calculation is expressed in Equation (10), whose terms are the y-axis position of the joint point and the length from the hip joint to the neck. The computation to determine the relative distance between the wrist and check point c is shown in Equation (11), whose terms are the y-axis position of the wrist and the y-axis position of the check point in a given space.
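A minimal sketch of a wrist-to-check-point height feature is given below. Dividing the y-axis offset by the hip-to-neck length is an assumption made here so that the value does not depend on image scale; the function name and arguments are hypothetical and the original formula may differ.

```python
def relative_height(y_wrist, y_check, hip_neck_length):
    """Signed y-axis offset of the wrist from a check point, in hip-to-neck units.

    Assumption: the offset is divided by the hip-to-neck length to make it
    scale-independent. Image coordinates are assumed, so y grows downward and
    a negative result means the wrist is above the check point.
    """
    if hip_neck_length <= 0:
        raise ValueError("hip-to-neck length must be positive")
    return (y_wrist - y_check) / hip_neck_length

# Wrist 50 px above the shoulder with a 100 px torso, e.g. a raised hand.
print(relative_height(y_wrist=50.0, y_check=100.0, hip_neck_length=100.0))  # -0.5
```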

Next, we adopt the sliding window concept to filter out noise. All input images are classified with a label of 0 or 1 and sorted in a sequence. Suppose a predetermined window of, e.g., 3 slots is set at time = t and contains two images labeled “0” and one labeled “1”. The movement in the image is then labeled “0” by a majority vote over the counts of “0” and “1”. At time = t + 1, voting again determines the label of the movement in the image, which is now “1”, as shown in Figure 4.
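The voting step can be sketched in a few lines of Python. The sketch assumes a trailing window (the current frame plus the frames just before it), lets the first frames vote over whatever labels are available, and breaks ties in favor of the label seen first in the window; these details and the function name are assumptions rather than the original implementation.

```python
from collections import Counter, deque

def smooth_labels(labels, window=3):
    """Replace each frame label by the majority label of the last `window` frames."""
    recent = deque(maxlen=window)   # holds at most `window` most recent labels
    smoothed = []
    for label in labels:
        recent.append(label)
        # most_common(1) returns the label with the highest count in the window.
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed

raw = [0, 0, 1, 0, 1, 1, 1, 0, 1]
print(smooth_labels(raw, window=3))  # [0, 0, 0, 0, 1, 1, 1, 1, 1]
```

In this example the isolated “1” at the third frame and the isolated “0” at the eighth frame are both voted away, so the smoothed sequence switches label only once.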

4. DNN-Based Systems for ADL Recognition

The neural networks most commonly used to solve practical problems are the single layer BPNN, multi-layer BPNN, CNN, and DNN. This study develops a recognition system for ADL using DNN and compares it with the single layer BPNN, multi-layer BPNN, and CNN methods to evaluate the feasibility of the proposed system. We start with consideration of the parameters for these neural networks, using the following suggested parameter settings [34,35,36]: (1) activation function = sigmoid; (2) learning rate = 0.8 for BP neural networks and 0.01 for DNN; (3) optimization method = gradient correction for BP neural networks and Adam optimization for DNN; (4) five hidden layers with 5–50 neurons in each layer for DNN, e.g., DNN with a [20, 20, 20, 20, 20] classifier; (5) two fully connected classifier layers for CNN to deal with 2048 dimensions, with 384 neurons in the first hidden layer and 192 neurons in the second hidden layer, given a convolution layer kernel size of 5 by 5 with a stride of 1 by 1 and a pooling layer of 3 by 3 with a stride of 2 by 2.
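To make the DNN setting concrete, the sketch below builds a fully connected network with five hidden layers and sigmoid activations using only the Python standard library, then runs a forward pass. It is an untrained illustration of the layer structure only: the input feature count of 15 is hypothetical (the text does not state it), the sigmoid on the output layer is an assumption, and training with Adam at a learning rate of 0.01 is not implemented here.

```python
import math
import random

def init_mlp(n_inputs, hidden, n_outputs, seed=0):
    """Create (weights, biases) pairs for a fully connected network."""
    rng = random.Random(seed)
    sizes = [n_inputs] + list(hidden) + [n_outputs]
    layers = []
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(fan_in)] for _ in range(fan_out)]
        biases = [0.0] * fan_out
        layers.append((weights, biases))
    return layers

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(layers, features):
    """Forward pass applying a sigmoid activation after every layer."""
    activations = list(features)
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations

def count_parameters(layers):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

N_FEATURES = 15   # hypothetical feature count, not stated in the text
N_CLASSES = 10    # the ten ADL classes
net = init_mlp(N_FEATURES, [30, 30, 30, 30, 30], N_CLASSES)
scores = forward(net, [0.5] * N_FEATURES)
print(len(scores), count_parameters(net))
```

Even with five hidden layers of 30 neurons the network has only a few thousand parameters, because its input is a short vector of skeletal features rather than raw image pixels.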

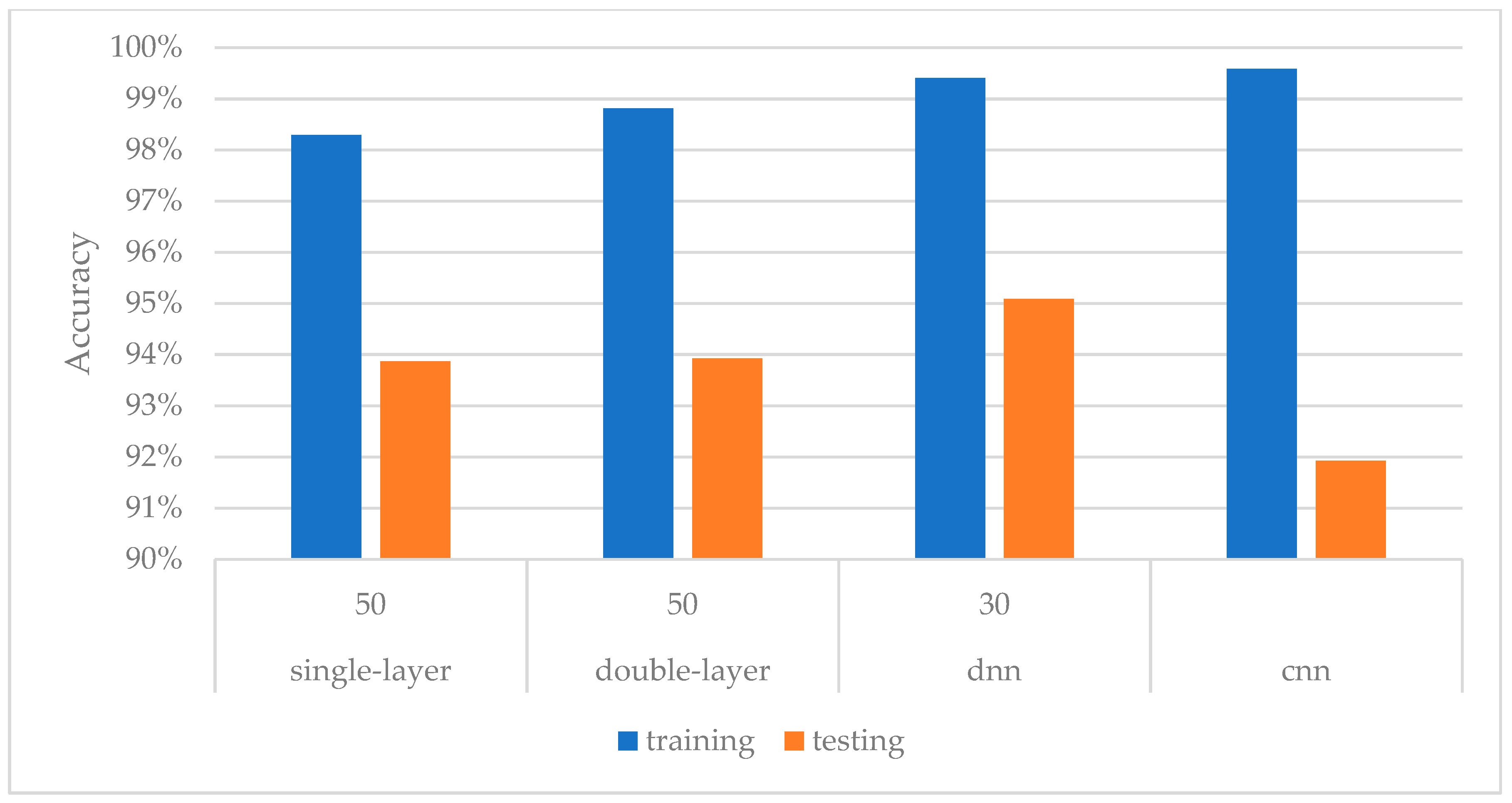

The feature extraction for recognition of ADL is examined for comparison among the four methods. Comparison is conducted using 3-fold cross-validation. As can be seen in Figure 5, all four methods have recognition accuracy rates greater than 98%. The DNN with a [30, 30, 30, 30, 30] classifier and the CNN system perform even better, reaching an accuracy rate of over 99%. Next, we carry out multi-angle tests using these four methods. In reality, it is not guaranteed that we can obtain front-facing video of every subject. We shoot side and front footage twice in each practice session, and then randomly mix these videos together for system recognition. As can be seen in Figure 6, the DNN with a [30, 30, 30, 30, 30] classifier and the CNN system have almost the same test results, with an accuracy rate of approximately 97.5%, slightly better than the multi-layer and single layer BPNNs. To check whether the ADL recognition process works for most subjects and meets the initial three requirements, we randomly select video footage of different subjects with different combinations of movement for 3-fold cross-validation. All the NN applications perform well for ADL recognition. As shown in Figure 7, all four systems have training accuracy rates greater than 98%. The DNN system with a [30, 30, 30, 30, 30] classifier has the highest testing accuracy rate among the four systems, around 95%. The results confirm that the DNN-based system performs the best for ADL recognition among the major NN applications.
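For readers unfamiliar with the procedure, 3-fold cross-validation can be sketched as follows. The sketch only produces the index splits; splitting at the level of whole videos, the fixed shuffle seed, and the function name are assumptions for illustration.

```python
import random

def k_fold_indices(n_samples, k=3, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]     # k nearly equal folds
    for i, test in enumerate(folds):
        # Train on every fold except the one held out for testing.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

# 40 training videos, as in the data collection step.
for train, test in k_fold_indices(40, k=3):
    print(len(train), len(test))
```

Each video is used for testing exactly once and for training in the other two rounds, and the reported accuracy is typically the average over the three rounds.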

An important step to ensure better efficiency and accuracy for the DNN-based recognition system is to determine the size of the sliding window. The frame in Figure 4 is an example of a 3-click window. A small window can reduce the computation/processing time but may not be able to yield high accuracy. On the other hand, a relatively large window may provide a higher accuracy rate but will usually cause system lags in recognition, sometimes significant enough to render the system impractical. Figure 8 exhibits a [30, 30, 30, 30, 30] DNN classifier with window sizes of 1, 3, 5, 7, and 9. As the click number increases, the larger window incurs a computational penalty that leads to lag, and too much information may contain enough noise to reduce the accuracy of the results. The best accuracy is obtained with a 7-click sliding window. The developed ADL recognition system therefore uses a [30, 30, 30, 30, 30] DNN classifier with a 7-click sliding window to filter out noise, facilitate recognition, increase efficiency, and yield higher accuracy.

5. Evaluation and Results

The proposed system is empirically evaluated using lengthier video footage, with more varied ADL, than that used in the previous section. A 5-min video containing all 10 types of the most common ADL was used. Each type of ADL appeared randomly 3 to 5 times in the footage. Each ADL was manually marked and compared with the evaluation results in Table 1. The evaluation results include the accuracy rate and misclassification rate. The average accuracy rate is 95.1%. The proposed system performs perfectly (100% accuracy rate) for 5 types of ADL: standing, raising one hand, raising two hands, sitting plus drinking, and standing plus drinking. The proposed system also works effectively, with a recognition accuracy rate greater than 95%, for the ADL of sitting, eating, and falling. In line with health care industry standards, the proposed system detects falling with a high accuracy rate of 97%, which matters because falling is especially dangerous for seniors. There are two types of ADL (bending and squatting) that are relatively easy for the proposed system to misclassify: bending can be misclassified as squatting or sitting, and squatting may be misclassified as sitting or bending. As can be seen by comparison with Figure 1, there was also one case where eating was misclassified as bending, perhaps due to the similarity between the bending of the arm(s) or leg(s) and the eating ADL while sitting. The misclassification mainly results from the similarity of skeletal positioning in certain postures, as shown in Table 1 and Figure 1. Our proposed methodology combines human behavior recognition, image processing, movement calculation, and DNN for smart care applications. The originality of our approach lies in the calculation of the limb joint angle, the angle between the limb and the horizontal axis, and the change in length from wrist to joint, and in using the sliding click method to filter out video noise. We have demonstrated the originality of our approach and its feasibility for both practical and academic applications.
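The per-class accuracy and misclassification figures of the kind reported in Table 1 can be derived from the manually marked labels and the system output as in the sketch below. The labels in the example are made up for illustration and are not the data from the evaluation; the function name is hypothetical.

```python
from collections import Counter, defaultdict

def per_class_report(true_labels, predicted_labels):
    """Return {class: (accuracy, {wrong_class: count})} from paired label lists."""
    totals = Counter(true_labels)
    correct = Counter()
    confusions = defaultdict(Counter)
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == pred:
            correct[truth] += 1
        else:
            confusions[truth][pred] += 1   # count what the class was mistaken for
    return {
        cls: (correct[cls] / totals[cls], dict(confusions[cls]))
        for cls in totals
    }

# Made-up labels for illustration only; these are not the paper's data.
truth = ["standing", "bending", "bending", "squatting", "falling", "bending"]
preds = ["standing", "bending", "squatting", "squatting", "falling", "sitting"]
report = per_class_report(truth, preds)
for cls, (accuracy, mistakes) in report.items():
    print(f"{cls}: accuracy={accuracy:.2f}, misclassified as {mistakes}")
```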

6. Conclusions

A feasible detection system for ADL is beneficial for practitioners, especially for health care and safety applications designed to protect seniors from falling. This research develops an NN-based system to detect the ten most common types of ADL, including standing, bending, squatting, sitting, eating, raising one hand, raising two hands, sitting plus drinking, standing plus drinking, and falling. The proposed system starts with ADL image collection and processing using the sliding window concept, followed by the skeletal recognition technique for identification of ADL. The four most common types of NN methods are compared to determine which one is optimal for ADL recognition. The results show that the DNN-based system has the best outcome, a 95% accuracy rate, in comparison to the single layer BPNN, multi-layer BPNN, and CNN systems. The empirical evaluation using lengthier footage containing all types of ADL randomly mixed together yields a high average accuracy rate of 95.1%. The proposed system even performs perfectly, with a 100% accuracy rate, for five types of ADL: standing, raising one hand, raising two hands, sitting plus drinking, and standing plus drinking.

This study describes a novel mechanism suitable for both practical and academic applications. Integrating easily accessible ADL images, data processing, and noise filtering concepts with the DNN method, we produce a camera-ready system for practical use. It has a high successful identification rate of over 95% for the recognition of the most common ADL, and in particular detects falling at 97%. The system does not require high-end equipment to obtain footage for recognition and can easily process the most common ADL. It is affordable and efficient enough to benefit the users. Suggestions for follow-up studies include more efficient algorithms that yield higher accuracy rates and more detailed posture recognition. The evaluation was conducted using 5-min footage containing all 10 types of the most common ADL. Although each type of ADL appeared randomly 3 to 5 times in the footage, a larger data set is needed for further evaluation of the method, such as lengthier videos and numerous videos with more complex or mixed ADL. Future studies may also focus on improving the relatively poor recognition rates for postures such as bending and squatting, either by providing higher-resolution video or by developing more advanced algorithms.

Author Contributions

Conceptualization, M.S.; Methodology, M.S. and S.T.; Validation, D.W.H. and J.C.; Resources, M.S. and S.T.; Writing – original draft preparation, D.W.H. and J.C.; Writing – review and editing, J.C. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was partly supported by the Ministry of Science and Technology (MOST), Taiwan, for promoting academic excellence of universities under grant numbers [MOST 109-2622-E-008-018-CC2]; [MOST 108-2221-E-008-002-MY3]; [MOST 109-2221-E-008-059-MY3]; [MOST 107-2221-E-008-084-MY2]; [MOST 109-2634-F-008-007]; [MOST 108-2634-F-008-001].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Department of Economic and Social Affairs. World Population Ageing 2019; United Nations: New York, NY, USA, 2020.

2. Landi, F.; Tua, E.; Onder, G.; Carrara, B.; Sgadari, A.; Rinaldi, C.; Gambassi, G.; Lattanzio, F.; Bernabei, R. Minimum data set for home care: A valid instrument to assess frail older people living in the community. Med. Care 2000, 38, 1184–1190.

3. Fortinsky, R.H.; Granger, C.V.; Seltzer, G.B. The use of functional assessment in understanding home care needs. Med. Care 1981, 19, 489–497.

4. Sainsbury, A.; Seebass, G.; Bansal, A.; Young, J.B. Reliability of the Barthel Index when used with older people. Age Ageing 2005, 34, 228–232.

5. Shah, S.; Vanclay, F.; Cooper, B. Improving the sensitivity of the Barthel Index for stroke rehabilitation. J. Clin. Epidemiol. 1989, 42, 703–709.

6. Alamri, A.; Eid, M.; Iglesias, R.; Shirmohammadi, S.; El-Saddik, A. Haptic Virtual Rehabilitation Exercises for Poststroke Diagnosis. IEEE Trans. Instrum. Meas. 2008, 57, 1876–1884.

7. Brennan, D.M.; Mawson, S.; Brownsell, S. Telerehabilitation: Enabling the remote delivery of healthcare, rehabilitation, and self management. Stud. Health Technol. Inform. 2009, 145, 48.

8. Gambi, E.; Temperini, G.; Galassi, R.; Senigagliesi, L.; De Santis, A. ADL Recognition Through Machine Learning Algorithms on IoT Air Quality Sensor Dataset. IEEE Sens. J. 2020, 20, 13562–13570.

9. Viard, K.; Fanti, M.P.; Faraut, G.; Lesage, J.J. Human activity discovery and recognition using probabilistic finite-state automata. IEEE Trans. Autom. Sci. Eng. 2020, 17, 2085–2096.

10. Howedi, A.; Lotfi, A.; Pourabdollah, A. An Entropy-Based Approach for Anomaly Detection in Activities of Daily Living in the Presence of a Visitor. Entropy 2020, 22, 845.

11. Rawashdeh, M.; Al Zamil, M.G.; Samarah, S.; Hossain, M.; Muhammad, G. A knowledge-driven approach for activity recognition in smart homes based on activity profiling. Future Gener. Comput. Syst. 2020, 107, 924–941.

12. Rafferty, J.; Nugent, C.; Liu, J.; Chen, L. An approach to provide dynamic, illustrative, video-based guidance within a goal-driven smart home. J. Ambient. Intell. Hum. Comput. 2016, 11, 3045–3056.

13. Kim, K.; Jalal, A.; Mahmood, M. Vision-Based Human Activity Recognition System Using Depth Silhouettes: A Smart Home System for Monitoring the Residents. J. Electr. Eng. Technol. 2019, 14, 2567–2573.

14. Jalal, A.; Kamal, S.; Kim, D. A Depth Video-based Human Detection and Activity Recognition using Multi-features and Embedded Hidden Markov Models for Health Care Monitoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 54–62.

15. Harikumar, R.; Vinothkumar, B. Performance analysis of neural networks for classification of medical images with wavelets as a feature extractor. Int. J. Imaging Syst. Technol. 2015, 25, 33–40.

16. Gupta, A.; Shreevastava, M. Medical diagnosis using back propagation algorithm. Int. J. Emerg. Technol. Adv. Eng. 2011, 1, 55–58.

17. Arulmurugan, R.; Anandakumar, H. Early detection of lung cancer using wavelet feature descriptor and feed forward back propagation neural networks classifier. VipIMAGE 2019 2018, 103–110.

18. Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1.

19. Shen, D.; Wu, G.; Suk, H. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

20. Markoff, J. How many computers to identify a cat? 16,000. New York Times, 25 June 2012.

21. Jouppi, N.; Young, C.; Patil, N.; Patterson, D. Motivation for and Evaluation of the First Tensor Processing Unit. IEEE Micro 2018, 38, 10–19.

22. Pandey, P.; Basu, P.; Chakraborty, K. Green TPU: Improving timing error resilience of a near-threshold tensor processing unit. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

23. Dean, J.; Patterson, D.; Young, C. A new golden age in computer architecture: Empowering the machine-learning revolution. IEEE Micro 2018, 38, 21–29.

24. Zhao, G.; Zhang, G.; Ge, Q.; Liu, X. Research advances in fault diagnosis and prognostic based on deep learning. In Proceedings of the 2016 Prognostics and System Health Management Conference (PHM-Chengdu), Chengdu, China, 19–25 October 2016; pp. 1–6.

25. Abu Ghosh, M.M.; Maghari, A.Y. A Comparative Study on Handwriting Digit Recognition Using Neural Networks. In Proceedings of the 2017 International Conference on Promising Electronic Technologies (ICPET), Deir El-Balah, Poland, 16–17 October 2017; pp. 77–81.

26. Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, L.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM international conference on Multimedia, Orlando, FL, USA, 21–25 October 2015; pp. 675–678.

27. Je, S.; Nguyen, H.; Lee, J. Image Recognition Method Using Modular Systems. In Proceedings of the 2015 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 7–9 December 2015; pp. 504–508.

28. Ignatov, A.; Timofte, R.; Chou, W.; Wang, K.; Wu, M.; Hartley, T.; Van Gool, L. AI Benchmark: Running Deep Neural Networks on Android Smartphones. In Proceedings of the Computer Vision, European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

29. Turchenko, V.; Luczak, A. Creation of a deep convolutional auto-encoder in caffe. In Proceedings of the 2017 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; pp. 651–659.

30. Ikeda, M.; Oda, T.; Barolli, L. A vegetable category recognition system: A comparison study for caffe and Chainer DNN frameworks. Soft Comput. 2017, 23, 3129–3136.

31. Hn, C.; Saito, T.; Kai, I. Functional disability in activities of daily living and instrumental activities of daily living among Nepalese Newar elderly. Public Health 2008, 122, 394–396.

32. Liu, Y.-H.; Chang, H.-J.; Huang, C.-C. The Unmet Activities of Daily Living (ADL) Needs of Dependent Elders and their Related Factors: An Approach from Both an Individual- and Area-Level Perspective. Int. J. Gerontol. 2012, 6, 163–168.

33. Wang, Q.; Tapia, G.; Jose, A.G. Household heating associated with disability in activities of daily living among Chinese middle-aged and elderly: A longitudinal study. Environ. Health Prev. Med. 2020, 25, 49.

34. Song, W.J.; Choi, S.G.; Lee, E.S. Prediction and Comparison of Electrochemical Machining on Shape Memory Alloy (SMA) using Deep Neural Network (DNN). J. Electrochem. Sci. Technol. 2018, 10, 276–283.

35. Wang, R.; Jiang, Y.; Lou, J.; Cheng, H.K. TDR: Two-stage deep recommendation model based on mSDA and DNN. Expert Syst. Appl. 2020, 145, 113116.

36. Zaki, A.U.M.; Hu, Y.; Jiang, X. Partial discharge localization in 3-D with a multi-DNN model based on a virtual measurement method. IEEE Access 2020, 8, 87434–87445.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).