Forecasting Irregular Seasonal Power Consumption. An Application to a Hot-Dip Galvanizing Process

Abstract

Featured Application

1. Introduction

2. Materials and Methods

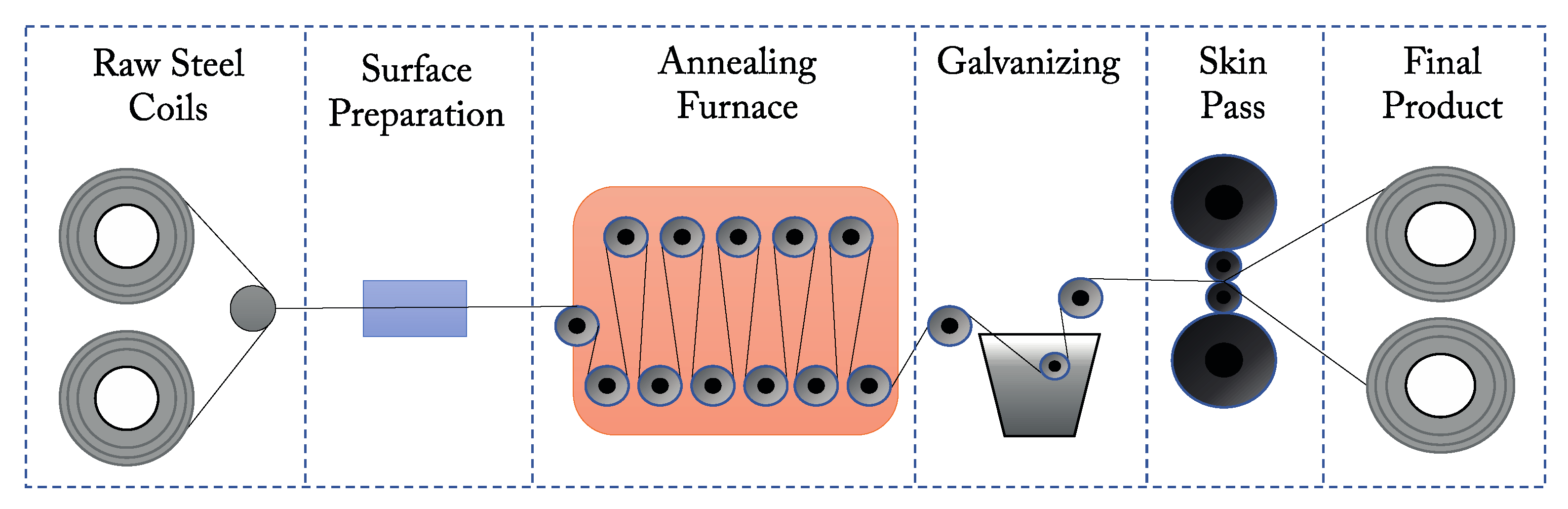

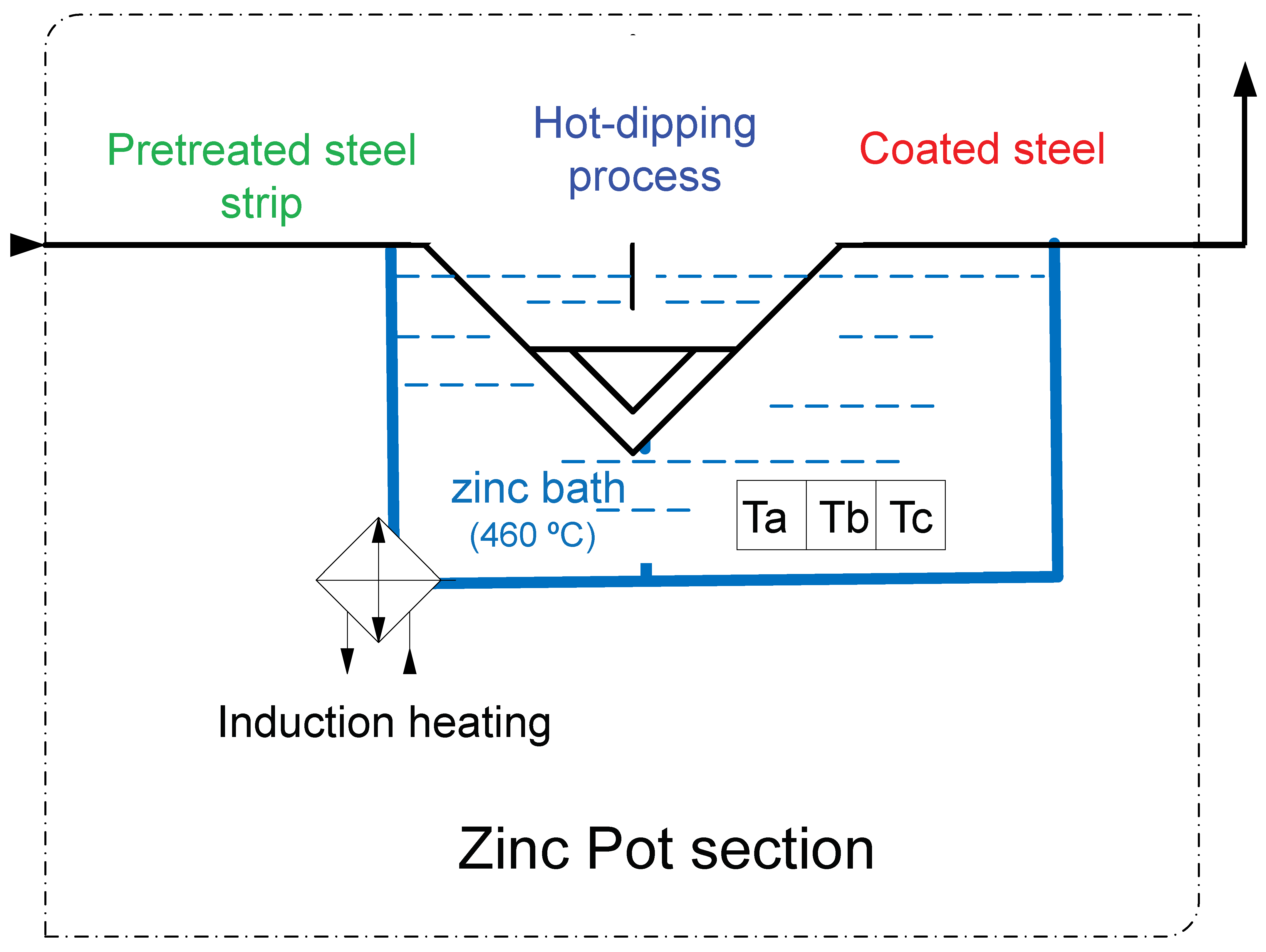

2.1. Study Area

2.2. Forecasting Methods

2.2.1. Artificial Neural Networks

2.2.2. ARIMA Models

2.2.3. Multiple Seasonal Holt-Winters Models

2.2.4. State Space Models

2.2.5. Multiple Seasonal Holt-Winters Models with Discrete Interval Moving Seasonalities

3. Results

3.1. Analysis of the Seasonality of the Series

3.2. Application of Models with Regular Seasonality

3.2.1. Application of ANN

- Previous hour’s average electricity consumption.

- Consumption of electricity from the previous hour.

- Timestamp (only in NARX model).
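The inputs listed above could be assembled from a regularly sampled load series roughly as follows. This is a minimal sketch, not the authors' implementation. The function name `build_ann_inputs`, the `samples_per_hour` parameter, the reading of "consumption from the previous hour" as the sample taken exactly one hour earlier, and the use of the sample index as a stand-in for the timestamp are all assumptions made for illustration.

```python
from statistics import mean


def build_ann_inputs(load, samples_per_hour, with_timestamp=False):
    """Build one input row per target sample (hypothetical helper).

    Each row holds:
      - the mean of the readings in the previous hour,
      - the reading taken one hour earlier (assumed interpretation),
      - optionally the sample index, standing in for the timestamp
        that only the NARX model receives.
    """
    rows, targets = [], []
    for t in range(samples_per_hour, len(load)):
        window = load[t - samples_per_hour:t]  # readings of the previous hour
        row = [mean(window), load[t - samples_per_hour]]
        if with_timestamp:
            row.append(t)
        rows.append(row)
        targets.append(load[t])
    return rows, targets


# Toy series with two samples per hour (illustrative values only).
rows, targets = build_ann_inputs([10, 20, 30, 40, 50], samples_per_hour=2)
```

Passing `with_timestamp=True` adds the third column, so the same helper can feed both network variants in this sketch.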

3.2.2. Application of ARIMA Models

3.2.3. Application of nHWT Models

3.2.4. Application of SSM Models

3.3. Application of Discrete Seasonality Models (nHWT-DIMS)

3.4. Model Fit Comparison

3.5. Forecasts Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial intelligence |
| Al | Aluminum |
| AIC, AICc | Akaike’s information criterion and its corrected form |
| ALLSSA | Anti-leakage least-squares spectral analysis |
| ANN | Artificial neural networks |
| AR(1) | Autoregressive model of order 1 |
| ARIMA | Autoregressive integrated moving average |
| ARMA | Autoregressive moving average |
| BATS | Exponential smoothing state space model with Box-Cox transformation, ARMA errors, trend and seasonal components |
| BIC | Bayesian information criterion |
| BRT | Bagged regression trees |
| DIMS | Discrete interval moving seasonalities |
| LASSO | Least absolute shrinkage and selection operator |
| LSWA | Least-squares wavelet analysis |
| LSWS | Least-squares wavelet spectrum |
| MSE | Mean squared error |
| MAPE | Mean absolute percentage error |
| MLP | Multilayer perceptron |
| NARX | Non-linear autoregressive neural networks with exogenous variables |
| nHWT | Multiple seasonal Holt-Winters |
| nHWT-DIMS | Multiple seasonal Holt-Winters with discrete interval moving seasonalities |
| NLR | Non-linear regression |
| RMSE | Root mean squared error |
| SARIMAX | Seasonal autoregressive integrated moving average exogenous model |
| SIC | Schwarz’s information criterion |
| SSM | State-space models |
| STL | Seasonal–trend decomposition procedure using Loess |
| SVM | Support vector machines |
| TBATS | Exponential smoothing state space model with Box-Cox transformation, ARMA errors, trend and trigonometric seasonal components |
| TDL | Tapped delay line |
| Zn | Zinc |

| Neural Network | Parameters | Fit RMSE |
|---|---|---|
| NARX | 1 input layer, 1 hidden layer with 20 neurons, 1 output layer, TDL = 3 | 33.95 |
| NLR | 1 input layer, 1 hidden layer with 20 neurons, 1 output layer | 33.21 |

| Parameter | Value | Fit RMSE |
|---|---|---|
| AR | 0.170 | 50.42 |
| MA | 0.174 | |
| SAR | −0.067 | |
| SMA | −0.065 | |

| Model Arguments | Parameters | Fit RMSE |
|---|---|---|
| BATS (0.717, 0, 5, 2, 10) | 0.717, 0.120, 0.206, −0.004, 0.945, −0.625, 0.022, 0.104, −0.500, −0.153, 0.515 | 46.77 |
| TBATS (0.756, 0, 5, 2, {10,1}) | 0.756, 0.862, 0.105, −0.0004, 0.0001, 1.293, −0.552, −0.359, 0.227, −0.1968, −1.358, 0.783 | 45.44 |

| DIMS | No. | Time Starts | Time Ends | Recursivity |
|---|---|---|---|---|
| DIMS a | 1 | 14th November at 05:42 am | 14th November at 08:24 am | – |
| | 2 | 14th at 04:00 pm | 14th at 06:42 pm | 618 min |
| | 3 | 15th at 12:18 am | 15th at 03:00 am | 498 min |
| | 4 | 15th at 07:30 am | 15th at 10:12 am | 432 min |
| | 5 | 15th at 06:42 pm | 15th at 09:24 pm | 672 min |
| | 6 | 16th at 07:24 pm | 16th at 10:06 pm | 1482 min |
| | 7 | 17th at 10:42 am | 17th at 01:24 pm | 918 min |
| | 8 | 18th at 06:06 am | 18th at 08:48 am | 1164 min |
| | 9 | 19th at 02:30 am | 19th at 05:12 am | 1224 min |
| | 10 | 19th at 08:48 pm | 19th at 11:30 pm | 1098 min |
| | 11 | 21st at 06:06 pm | 21st at 08:48 pm | 2718 min |
| DIMS b | 1 | 16th November at 07:06 am | 16th November at 09:48 am | – |
| | 2 | 20th at 05:06 am | 20th at 07:48 am | 5640 min |
| | 3 | 20th at 05:24 pm | 20th at 08:06 pm | 738 min |
| | 4 | 21st at 02:36 am | 21st at 05:18 am | 552 min |
| | 5 | 22nd at 02:24 am | 22nd at 05:06 am | 1428 min |
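The recursivity values in the table above match the number of minutes between the start of one DIMS occurrence and the start of the next. The sketch below reproduces the first few values for DIMS a under that reading. The helper name `recursivity_minutes` is hypothetical, and the year is not given in the table, so the one used here is an arbitrary placeholder.

```python
from datetime import datetime


def recursivity_minutes(starts):
    """Minutes between consecutive start times; None for the first occurrence."""
    gaps = [None]
    for prev, cur in zip(starts, starts[1:]):
        gaps.append(int((cur - prev).total_seconds() // 60))
    return gaps


# First four start times of DIMS a, taken from the table.
# The year (2019) is an assumption; only day and time appear in the table.
starts_a = [
    datetime(2019, 11, 14, 5, 42),
    datetime(2019, 11, 14, 16, 0),
    datetime(2019, 11, 15, 0, 18),
    datetime(2019, 11, 15, 7, 30),
]

print(recursivity_minutes(starts_a))  # [None, 618, 498, 432]
```

The irregular gaps (618, 498, 432 minutes, and so on) are what distinguish these occurrences from a regular seasonality with a fixed period.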

| Model | RMSE on Fit | Average MAPE for Forecasts |
|---|---|---|
| NARX | 33.95 | 26.63% |
| NN-NLR | 33.21 | 13.94% |
| ARIMA | 50.42 | 24.03% |
| nHWT | 54.93 | 18.55% |
| TBATS | 46.77 | 37.60% |
| BATS | 45.44 | 37.61% |
| nHWT-DIMS | 58.65 | 16.00% |
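The two accuracy measures in the table above, RMSE and MAPE, follow their standard definitions. The sketch below shows how they could be computed; the function names and the toy numbers are illustrative and are not taken from the study.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error, in the same units as the series."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Undefined when any actual value is zero, since each error is
    divided by the corresponding actual value.
    """
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


# Toy values for illustration only.
actual = [100.0, 200.0]
predicted = [110.0, 180.0]
fit_rmse = rmse(actual, predicted)
forecast_mape = mape(actual, predicted)
```

Because RMSE is scale-dependent and MAPE is relative, a model can rank well on one and poorly on the other, which is why the table reports both.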

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trull, O.; García-Díaz, J.C.; Peiró-Signes, A. Forecasting Irregular Seasonal Power Consumption. An Application to a Hot-Dip Galvanizing Process. Appl. Sci. 2021, 11, 75. https://doi.org/10.3390/app11010075
