1. Introduction

Logs are an essential part of computer systems. The main purpose of logging is to record the information generated while programs and systems run, and logs are widely used for runtime state recovery, performance analysis, failure tracing, and anomaly detection. Because logs are so important, the vast majority of publicly released software and systems provide some kind of logging service. For large-scale applications, such as software running in distributed environments, the generated log files may contain a very large amount of data. Analyzing such a large number of logs manually is time-consuming and error-prone. Automatic log analysis is therefore of great significance for reducing the workload of system maintainers and for tracing the causes of failures and anomalies.

To achieve automatic log analysis, many researchers have in recent years applied data mining methods to analyze logs and diagnose anomalies. For example, decision trees, autoencoders, and attention-based bidirectional recurrent neural networks have been used to diagnose anomalies from logs. These methods have achieved relatively good results. However, the first step of all of them is to classify and structure the logs so that features can be extracted and fed to the data mining method. Because this step is a common foundation, the more accurately the logs are classified, the better the downstream data mining task performs. Accurately classifying logs and extracting the important information from them is therefore very important.

Since developers are accustomed to using free text to record log messages, the original log messages are usually unstructured and flexible. The goal of log pattern extraction is to classify the unstructured parts of logs and to split each classified log into a constant part and a variable part. For example, consider the following Linux system log:

Sep, 30, 03:50:55, HostName, sshd, 309, Invalid user UserName from 0.0.0.0

If this log is split by commas, the first three fields represent the time, and the next three fields represent the hostname, daemon name, and PID, respectively. These fields belong to the structured part of the log and can be extracted simply with regular expressions. The log pattern extraction algorithm focuses on the content of the last field, which it transforms into a template as below:

Invalid user <∗> from <∗>

where Invalid user and from form the constant part of the log, and the sign <∗> represents the variable part.

In the study of log pattern extraction, Zhao et al. [1] proposed the Match algorithm, which uses the word matching rate to measure the similarity of two logs and thereby determine the log type. They also proposed a tree matching algorithm to classify logs. Later, they proposed the Lmatch algorithm [2], which improves the accuracy of the word matching rate. Lmatch treats each word in a log as a basic unit, counts the matching words through the longest common subsequence of the two logs, and compares this count with the total number of words in the two logs to obtain the word matching rate. Although these methods have achieved good results, some aspects can still be improved. First, the word matching algorithm is too simple and does not take into account the weights of different parts of a log. Second, the original method stores logs in a hash table keyed by the first word of the log pattern; given the complexity and variability of logs, partitioning them by a hash function alone is too coarse. Finally, the method for tuning the parameters of the log pattern extraction algorithm is not clear enough.

To address these challenges, this article describes the log pattern extraction algorithm LTmatch (LCS Tree match algorithm). When determining the word matching rate of two logs, the algorithm treats the constant part and the variable part differently by using a weighted matching rate. In this way, differences in the proportions of constant and variable parts across log types are taken into account during matching, which improves the matching result. Log patterns are stored in a tree structure built on log words, which further refines the partitioning of log categories. The template extraction rules distinguish the variable part from the constant part of the log and, by using two different variable symbols, also indicate whether a variable has a fixed or a changing length. This makes the final template more useful for subsequent analysis. For parameter optimization, a large number of experiments were carried out, and the best parameters were determined for a variety of log types. In general, this article makes the following two contributions:

First, the method in this paper optimizes three points of the workflow: the word matching rate, the log warehouse structure, and log template extraction.

Second, the log pattern extraction algorithm is evaluated with multi-dimensional experiments on open-source log datasets, and the experimental results demonstrate the advantages of the method.

In the following, we introduce related work in Section 2, describe our method in detail in Section 3, report experiments in Section 4, and conclude in Section 5.

3. Log Pattern Extraction Algorithm

This section introduces the proposed log pattern extraction algorithm and related optimal. As described in the introduction, the main focus of log pattern extraction is the unstructured part of the log. Therefore, unless otherwise specified, a log described later refers to the content of the unstructured part of the log (for example, the last field:

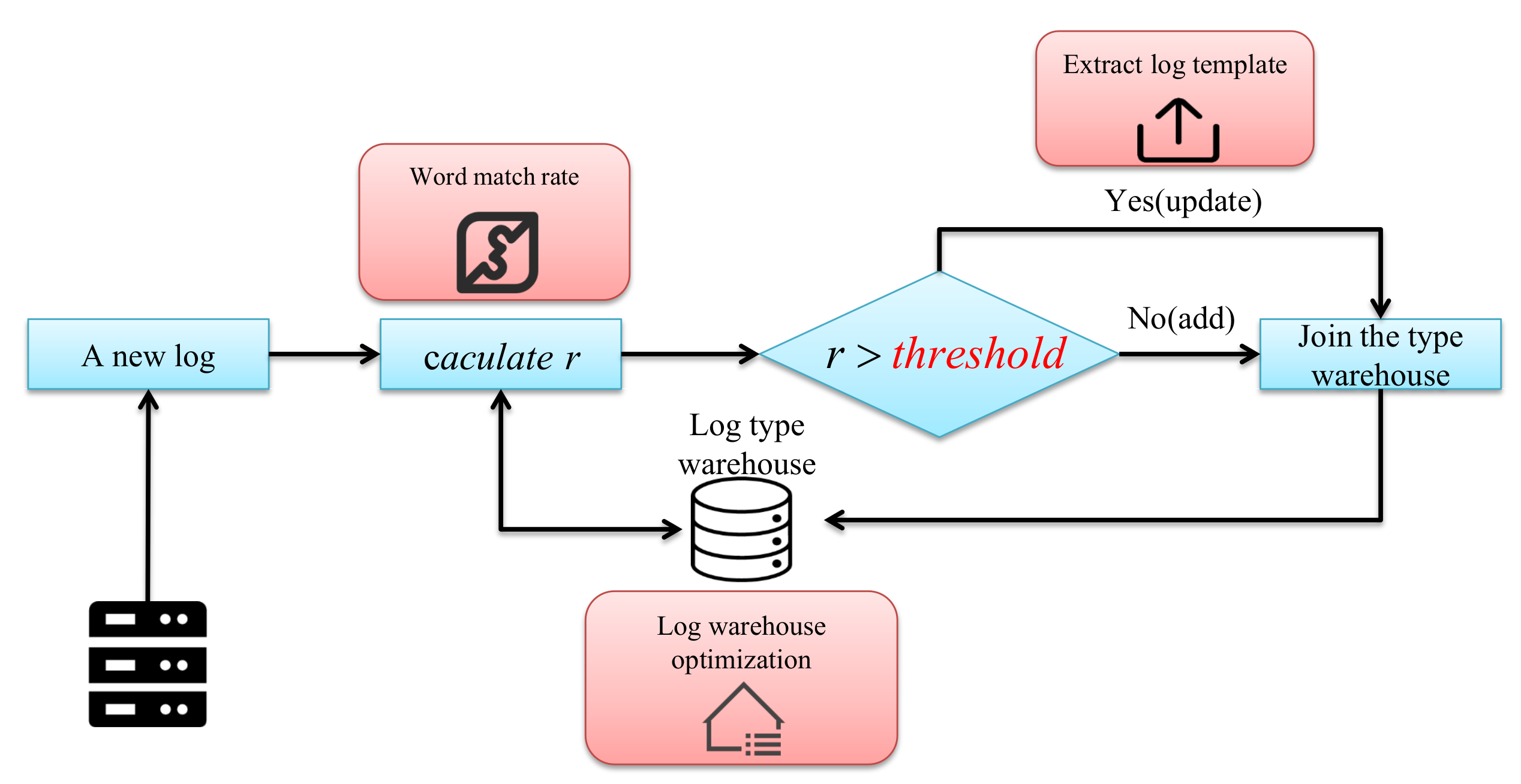

Invalid user UserName from 0.0.0.0 of the example log in the introduction part). The log pattern extraction algorithm is generally divided into online methods and offline methods. Offline methods usually require historical log data, and then cluster the historical logs through a round of traversal, after that it can extract a template for each type of log. Although this method is intuitive, it cannot form a new category in real-time if a new log does not meet the known category in actual applications. Therefore, the online log classification method is more valuable in the actual application. In general, the flow of the online log classification algorithm is shown in

Figure 1.

As shown in Figure 1, a log is obtained from the server, and its word matching rate is calculated against the existing logs in the log type warehouse. If the result is greater than the threshold, the log is considered to belong to that category, and the template of the category is updated. Otherwise, the log is added to the log warehouse as a new type. Three key issues must be solved in this process: first, how to determine the word matching rate of two logs; second, how to construct a log warehouse that is convenient for storing and searching log patterns; third, how to extract the log template. We introduce the word matching rate in Section 3.1, the log warehouse in Section 3.2, and the log template extraction method in Section 3.3. In Section 3.4, we walk through an example of the overall process.
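The flow described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the names `classify_online`, `toy_similarity`, and `toy_merge` are hypothetical, the warehouse is a plain list, and the similarity and template update functions are passed in as parameters so that any of the variants from Sections 3.1 to 3.3 could be substituted.

```python
from typing import Callable, List

Tokens = List[str]


def classify_online(
    logs: List[Tokens],
    similarity: Callable[[Tokens, Tokens], float],
    merge: Callable[[Tokens, Tokens], Tokens],
    threshold: float,
) -> List[dict]:
    """Assign each incoming log to the first cluster whose template is similar enough."""
    warehouse: List[dict] = []  # each entry: {"template": tokens, "size": count}
    for log in logs:
        for cluster in warehouse:
            if similarity(cluster["template"], log) > threshold:
                # Known type: update its template and occurrence count.
                cluster["template"] = merge(cluster["template"], log)
                cluster["size"] += 1
                break
        else:
            # No existing type matched: register the log as a new type.
            warehouse.append({"template": list(log), "size": 1})
    return warehouse


# Toy stand-ins (assumptions for illustration only): positional overlap as the
# similarity, and "<*>" wherever two equal-length logs disagree.
def toy_similarity(a: Tokens, b: Tokens) -> float:
    same = sum(x == y for x, y in zip(a, b))
    return 2 * same / (len(a) + len(b))


def toy_merge(a: Tokens, b: Tokens) -> Tokens:
    if len(a) != len(b):
        return a
    return [x if x == y else "<*>" for x, y in zip(a, b)]


logs = [s.split() for s in (
    "Invalid user alice from host1",
    "Invalid user bob from host2",
    "Starting session 12",
)]
clusters = classify_online(logs, toy_similarity, toy_merge, threshold=0.5)
for cluster in clusters:
    print(cluster["size"], " ".join(cluster["template"]))
```

With these toy inputs the first two logs fall into one cluster and the third opens a new one.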

3.1. The Word Matching Rate of Two Logs

3.1.1. Basic Word Matching Rate Algorithm

To determine whether two logs belong to the same pattern, the most intuitive approach is to check whether the two logs share enough words, so the word matching rate can be used to determine the log pattern. The algorithm treats each word in the log as a basic unit and matches words. Specifically, assuming that the original log is $l$, the log to be matched is $l'$, and the numbers of words in the two logs are $m$ and $n$ respectively, the word matching rate of the two logs is calculated as follows:

$$r(l, l') = \frac{2 \times |\mathrm{match}(l, l')|}{m + n} \qquad (1)$$

where $|\mathrm{match}(l, l')|$ represents the number of one-to-one matching words in the corresponding positions of the two logs. However, the word matching rate based on Formula (1) has a side effect: when the number of words in the variable part of the log is uncertain, the constant parts of the two logs become misaligned during matching, and the Match algorithm fails. To make up for this defect, the improved word matching rate is calculated as follows:

$$r(l, l') = \frac{2 \times |\mathrm{LCS}(l, l')|}{m + n} \qquad (2)$$

where $|\mathrm{LCS}(l, l')|$ represents the number of words matched when the longest common subsequence of the two logs is computed. The algorithm that uses this word matching rate for pattern refining is called the Lmatch algorithm.
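The difference between positional matching and LCS-based matching can be illustrated with a short sketch. It assumes that both rates take the form 2 × (matched words) / (m + n), which is what the discussion of Formula (2) implies; the function names and the two sample logs are hypothetical.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two word lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def positional_rate(a: List[str], b: List[str]) -> float:
    """Matching rate that counts only equal words at the same position."""
    same = sum(x == y for x, y in zip(a, b))
    return 2 * same / (len(a) + len(b))


def lcs_rate(a: List[str], b: List[str]) -> float:
    """Matching rate that counts the words of the longest common subsequence."""
    return 2 * lcs_length(a, b) / (len(a) + len(b))


a = "Failed password for invalid user alice from host1".split()
b = "Failed password for bob from host2".split()

# The variable part has three words in `a` and one in `b`, so the constant
# word "from" is misaligned for positional matching but is still found by LCS.
print(positional_rate(a, b), lcs_rate(a, b))
```

Here positional matching finds three common words while the LCS finds four, so the LCS-based rate is the higher of the two.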

3.1.2. Optimized Word Matching Rate Algorithm

Further analysis of Formula (2) shows that if the factor 2 in the numerator is moved to the denominator, the denominator becomes the arithmetic mean of the lengths of the original log and the log to be compared. Practical experience shows that the proportions of constant and variable parts differ slightly between types of logs. Therefore, to improve accuracy, the optimized word matching rate replaces the equal weighting of the two log lengths with an adjustable weight. Following this idea, Formula (2) is improved, and the final word matching rate is calculated as follows:

$$r(l, l') = \frac{|\mathrm{LCS}(l, l')|}{w \times m + (1 - w) \times n} \qquad (3)$$

where $|\mathrm{LCS}(l, l')|$ is the number of words in the longest common subsequence of the two logs, $m$ and $n$ are the lengths of the original log and the log to be compared, and $w$ represents the adjustment weight, a parameter that needs to be tuned for different training sets in practice. With $w = 0.5$, Formula (3) reduces to Formula (2). The algorithm that uses Formula (3) to refine the pattern is called the LTmatch algorithm.
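As a quick numerical check of the weighted rate, the sketch below assumes the form used in the worked example of Section 3.4, namely the LCS match count divided by w·m + (1 − w)·n. The function name `weighted_rate` and the restriction of w to [0, 1] are assumptions.

```python
def weighted_rate(lcs_len: int, m: int, n: int, w: float) -> float:
    """LCS match count over a weighted combination of the two log lengths."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w is assumed to lie in [0, 1]")
    return lcs_len / (w * m + (1.0 - w) * n)


# Numbers from the example in Section 3.4: 4 matched words,
# log lengths 11 and 6, weight 0.4, threshold 0.45.
r = weighted_rate(4, 11, 6, 0.4)
print(round(r, 3), r > 0.45)

# With w = 0.5 the weighted rate coincides with 2 * matches / (m + n).
print(weighted_rate(4, 8, 6, 0.5), 2 * 4 / (8 + 6))
```

A weight below 0.5 makes the length of the log to be compared count more than the length of the stored log, which is the knob that is tuned per dataset.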

3.2. Log Type Warehouse

3.2.1. Basic Log Type Warehouse

The basic unit of the log type warehouse is a log template string containing constant words and variable wildcards. Whenever a new log arrives for matching, its word matching rate must be calculated against all log template strings. The basic log type warehouse can therefore store the templates directly in a list, so that a simple loop over the list completes the matching calculation. The number of iterations of the loop equals the number of log patterns in the warehouse.

3.2.2. Optimized Log Type Warehouse Storage Structure

With the basic log type warehouse, the longest common subsequence of each new log must be computed against every known log pattern. As the number of log patterns grows, the amount of computation becomes larger and larger, so reducing the number of comparisons can significantly improve the overall efficiency of the algorithm. The optimized log type warehouse aims to solve this problem.

The optimization of the log warehouse in this article is inspired by Drain [16]. The log type warehouse in the LTmatch algorithm uses a tree-based storage form, in which each tree node stores the word at the corresponding position of the log. If a word contains a digit, the log is stored under the tree node holding the wildcard <∗>. The structure differs from that of Drain in one respect: the first layer of Drain's tree (the root node is considered to be layer 0 in this article) stores the length of the log. Here, the top-level classification cannot be performed by fixing the length of the log, because the optimized word matching rate is based on the longest common subsequence (LCS), and the LCS method naturally groups logs of different lengths that belong to the same category. Therefore, in the tree we use, the nodes of depth 1 already store words: the node at depth n stores the nth word of the log. A schematic diagram of a log pattern warehouse constructed in this way is shown in Figure 2.

The depth of the log warehouse tree constructed in Figure 2 is two. Because a leaf node stores a list of log clusters, its structure differs from that of the other nodes in the tree, so it does not count toward the value of the parameter “depth” in the program. Specifically, every branch node of the tree contains a depth parameter and a word. The depth of the root node is 0 and the word “Root” is used to represent it; the other branch nodes store actual words of the logs. As in Drain, if a word contains a digit, or if the number of nodes in the current layer exceeds the predefined value of the parameter “max_children”, the log template is placed under a special node “<∗>” in the current layer. Each leaf node of the tree stores a list whose elements are log cluster structures. Each structure contains a log template representing the log cluster and a size variable that records the number of occurrences of this log type.

During the construction of the tree, the LCS-based matching rules and template extraction may change the words of a template, which would make the position of the template in the tree unstable. To solve this problem, template extraction takes the tree depth into account: before building the log template, the function first reads the depth parameter and then keeps the first depth words unchanged when extracting the template. The specific algorithm is given in Section 3.3.2. This scheme is also reasonable for logs, because the variables of a log usually appear toward the end.

With the tree storage of Figure 2, when a new log arrives it can be routed to the corresponding subset of the log pattern warehouse according to the words at its beginning. The LCS then needs to be computed only between this log and the log templates in the list of that subset, which greatly reduces the number of log comparisons.
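The routing step can be sketched with a small tree of word nodes. This is a simplified illustration under stated assumptions, not the authors' code: the class `Node` and the function `descend` are hypothetical names, the cluster list is attached to the deepest branch node instead of a separate leaf object, and unseen words fall back to the wildcard branch during lookup.

```python
from typing import Dict, List, Optional

WILDCARD = "<*>"


class Node:
    """Branch node of the warehouse tree: a word, its depth, children, and clusters."""

    def __init__(self, word: str, depth: int) -> None:
        self.word = word
        self.depth = depth
        self.children: Dict[str, "Node"] = {}
        self.clusters: List[dict] = []  # used only on the deepest branch level


def has_digit(word: str) -> bool:
    return any(ch.isdigit() for ch in word)


def descend(root: Node, tokens: List[str], depth: int,
            max_children: int, create: bool = False) -> Optional[Node]:
    """Follow the first `depth` words of a log down the tree.

    With create=True missing nodes are added; otherwise None is returned
    when no matching branch exists.
    """
    node = root
    for word in tokens[:depth]:
        key = WILDCARD if has_digit(word) else word
        if key not in node.children:
            if create and key != WILDCARD and len(node.children) < max_children:
                node.children[key] = Node(key, node.depth + 1)
            else:
                # Word with a digit, layer overflow, or unseen word on lookup:
                # use the wildcard branch of this layer.
                key = WILDCARD
                if key not in node.children:
                    if not create:
                        return None
                    node.children[key] = Node(key, node.depth + 1)
        node = node.children[key]
    return node


root = Node("Root", 0)
first = "Failed password for alice".split()
leaf = descend(root, first, depth=2, max_children=100, create=True)
leaf.clusters.append({"template": first, "size": 1})

hit = descend(root, "Failed password for bob".split(), depth=2, max_children=100)
miss = descend(root, "Starting Session 12".split(), depth=2, max_children=100)
print(hit is leaf, miss)
```

Only the clusters stored under the node returned by `descend` need to be compared with the new log; a `None` result means a new branch and a new cluster have to be created.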

3.3. Log Template Extraction Method

3.3.1. Basic Log Template Extraction Algorithm

The goal of the log template extraction algorithm is to get the constant part of a log and use a special symbol to represent the variable part. Therefore, after obtaining the LCS of the logs, the basic log template extraction algorithm only needs to traverse each word in the LCS once. See Algorithm 1 for details.

| Algorithm 1 Log Template Extraction Algorithm |

| Input: log template in the warehouse, compared log l |

| Output: log template to return |

- 1: Initialize: set …
- 2: …
- 3: …
- 4: for … do
- 5:     if … then
- 6:         …
- 7:         …
- 8:     else
- 9:         …
- 10:     end if
- 11:     if … then
- 12:         break
- 13:     end if
- 14: end for
- 15: if … then
- 16:     …
- 17: end if

As Algorithm 1 shows, the basic log template extraction algorithm does not consider whether the first few words of the log are variables. It is designed for the basic log type warehouse, which stores all log templates in a list, so a change to a log template does not affect the position of the template in the list. The algorithm only attends to the correspondence between the LCS of the two logs and the positions of the words in the log to be matched.
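One plausible reading of the basic extraction step is sketched below. It is an assumption-laden illustration, not a transcription of Algorithm 1: the function names are hypothetical, the ASCII string "<*>" stands in for the wildcard sign, and the choice to walk the warehouse template (emitting one wildcard per non-matching word) is made because it reproduces the three-wildcard result of the example in Section 3.4.

```python
from typing import List

WILDCARD = "<*>"


def lcs(a: List[str], b: List[str]) -> List[str]:
    """Longest common subsequence of two word lists via dynamic programming."""
    m, n = len(a), len(b)
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            if a[i] == b[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])
    out, i, j = [], 0, 0
    while i < m and j < n:
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            i += 1
        else:
            j += 1
    return out


def basic_template(template: List[str], log: List[str]) -> List[str]:
    """Keep the LCS words as constants and emit one wildcard per other word."""
    common = lcs(template, log)
    if not common:
        return [WILDCARD]  # assumption: nothing in common collapses to a wildcard
    result, k, consumed = [], 0, 0
    for word in template:
        consumed += 1
        if word == common[k]:
            result.append(word)
            k += 1
        else:
            result.append(WILDCARD)
        if k == len(common):
            break  # every constant word has been placed
    if consumed < len(template):
        result.append(WILDCARD)  # trailing words after the last constant
    return result


old = "Failed password for invalid user UserNameA from <IP> port <NUM> ssh2".split()
new = "Failed password for UserNameB from <IP> port <NUM> ssh2".split()
print(" ".join(basic_template(old, new)))
```

Because every non-matching word produces its own wildcard, the length of the resulting template depends on how many words the variable part happens to contain.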

3.3.2. Optimized Log Template Extraction Algorithm

Algorithm 1 yields the log template for the basic log type warehouse. However, as described in Section 3.2.2, when the tree-structured warehouse is used, the basic template extraction algorithm needs to be improved so that the template stays at a stable position in the tree: the improved algorithm must ensure that the first depth words of the template do not change. In addition, an advantage of LCS is that it identifies the constant part of the log accurately, so the extent of each variable part can be derived by comparison with the constant part. To make full use of this advantage, the improved algorithm should also determine whether a variable position corresponds to a single variable or to a multivariate variable.

To meet these requirements, the log template extraction algorithm first considers the depth parameter. During the construction of the log template, if the loop has reached depth, the loop ends early and the first depth words of the log to be compared are added directly to the log template. In addition, so that the final template not only distinguishes variables from constants but also refines the variables, the algorithm uses two special signs for the variable part: “<∗>” expresses a single variable, and “<+>” expresses a multivariate variable. In practice, the longest common subsequence of the two logs to be matched is computed first, and then the lengths of the non-matching stretches are computed to decide which special sign to use. If the number of variable words between two constant words is greater than or equal to 2, the position is a multivariate variable and is described by the special sign <+>. The flow of the log pattern refining algorithm is summarized in Algorithm 2.

| Algorithm 2 Log Template Extraction Algorithm Based On Tree Structure |

| Input: log template in the warehouse, compared log l, the depth of tree |

| Output: log template to return |

- 1: Initialize: set …
- 2: …
- 3: if … then
- 4:     return …
- 5: end if
- 6: for … do
- 7:     if … then
- 8:         break
- 9:     end if
- 10:     if … then
- 11:         …
- 12:         …
- 13:     else
- 14:         if … then
- 15:             …
- 16:         else if … then
- 17:             …
- 18:             …
- 19:         else if … then
- 20:             continue
- 21:         else
- 22:             …
- 23:         end if
- 24:     end if
- 25: end for
- 26: …
- 27: …

With Algorithm 2, the template of any two logs can be obtained. The advantage of this algorithm is that it not only enriches the information in the log template but also makes the word matching rate more reasonable, because the length of the log template enters the word matching rate. A multivariate variable contributes a single position to the template length, so the template length does not change when the number of words in the variable changes.
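The depth-aware extraction with two variable signs can be sketched as follows. This is a hedged illustration of the rules stated in the text (keep the first depth words, use one sign per stretch of non-matching words, and choose the multivariate sign when a stretch has two or more words), not a transcription of Algorithm 2. The names `lcs_pairs`, `gap_symbol`, and `tree_template` are hypothetical, and taking the longer of the two stretches to decide the sign is an assumption.

```python
from typing import List, Tuple

SINGLE = "<*>"
MULTI = "<+>"


def lcs_pairs(a: List[str], b: List[str]) -> List[Tuple[int, int]]:
    """Index pairs (i, j) of one longest common subsequence of a and b."""
    m, n = len(a), len(b)
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            if a[i] == b[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])
    pairs, i, j = [], 0, 0
    while i < m and j < n:
        if a[i] == b[j]:
            pairs.append((i, j))
            i += 1
            j += 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            i += 1
        else:
            j += 1
    return pairs


def gap_symbol(t_gap: List[str], l_gap: List[str]) -> List[str]:
    """Choose the sign for one stretch of non-matching words."""
    if not t_gap and not l_gap:
        return []
    if MULTI in t_gap or max(len(t_gap), len(l_gap)) >= 2:
        return [MULTI]
    return [SINGLE]


def tree_template(template: List[str], log: List[str], depth: int) -> List[str]:
    """Keep the first `depth` words of the log, then merge the rest via LCS."""
    result = list(log[:depth])
    t_rest, l_rest = template[depth:], log[depth:]
    ti = li = 0
    for i, j in lcs_pairs(t_rest, l_rest):
        result += gap_symbol(t_rest[ti:i], l_rest[li:j])
        result.append(t_rest[i])
        ti, li = i + 1, j + 1
    result += gap_symbol(t_rest[ti:], l_rest[li:])
    return result


old = "Failed password for invalid user UserNameA from <IP> port <NUM> ssh2".split()
new = "Failed password for UserNameB from <IP> port <NUM> ssh2".split()
print(" ".join(tree_template(old, new, depth=2)))
```

On the two logs from the example in Section 3.4 the three-word stretch collapses into a single multivariate sign, so the template keeps the same length however many words the variable contains.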

Finally, if the basic versions of the word matching rate, the log type warehouse, and the log template extraction algorithm from the previous sections are plugged into the process of Figure 1, the resulting online log classification algorithm is the Lmatch algorithm used in the subsequent experiments. The improved algorithm LTmatch proposed in this paper is obtained by plugging the three optimizations into the process shown in Figure 1.

3.4. Example Procedure of the LTmatch Algorithm

In this subsection, we walk through an example to explain the whole log parsing process. Before the logs are fed to the log parsing algorithm, they are preprocessed by replacing frequently occurring variables (such as IP addresses or numbers) with special tokens surrounded by “<>”. Assume that three logs are fed into the log parsing algorithm. The original logs and the preprocessed logs are shown in the second and third columns of Table 1.
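A preprocessing step of this kind might look like the sketch below. The two regular expressions and the raw message are assumptions chosen for illustration; only the token names <IP> and <NUM> come from the example logs in this section.

```python
import re
from typing import List

# Order matters: IP addresses are replaced before bare numbers,
# otherwise each octet would be turned into <NUM>.
RULES = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b\d+\b"), "<NUM>"),
]


def preprocess(message: str) -> List[str]:
    """Replace frequent variable fields with tokens, then split into words."""
    for pattern, token in RULES:
        message = pattern.sub(token, message)
    return message.split()


tokens = preprocess("Failed password for UserNameB from 10.0.0.1 port 2222 ssh2")
print(" ".join(tokens))
```

The word boundary in the number rule leaves words such as ssh2 untouched, because the digit is not a standalone word.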

First, Log1 arrives at the online log classification algorithm. The algorithm searches the log warehouse and finds that it is empty, so the log is inserted into the log warehouse according to the depth parameter in Algorithm 2. Assuming that depth is set to 2, the log is inserted under the node “password”. The log warehouse is then as shown in Figure 3a.

Second, Log2 arrives. The algorithm searches the log warehouse by the first and second words of Log2 and finds that the nodes “Failed” and “password” exist. The word matching rate of Log2 is then calculated against the log clusters below the node “password” in turn until the result is greater than the preset threshold. In this example, there is only one log cluster below the node “password”, so Log2 is compared with the log cluster in Figure 3a. Assume that the threshold is 0.45 and the weight is 0.4. The first three words and the word “from” of Log1 and Log2 match, Log1 has eleven words, and Log2 has six words. Therefore, according to Formula (3), r(log1, log2) = 4/(0.4 × 11 + 0.6 × 6) = 0.5 > 0.45, so the two logs belong to one class, and the log cluster is updated. To illustrate the log template extraction method, the two logs to be merged are displayed below:

Failed password for invalid user UserNameA from <IP> port <NUM> ssh2

Failed password for UserNameB from <IP> port <NUM> ssh2

With Algorithm 1, the template of the two logs above is Failed password for <∗> <∗> <∗> from <IP> port <NUM> ssh2: because the variable part spans three words, three <∗> signs are added to the template. With Algorithm 2, the template is Failed password for <+> from <IP> port <NUM> ssh2, which is simpler and more reasonable. The log warehouse is then as shown in Figure 3b.

Third, Log3 arrives. The algorithm searches the log warehouse and finds that there is no node “Starting”, so the log is inserted into the log warehouse by constructing the nodes “Starting” and “Session”. The log warehouse is then as shown in Figure 3c.

Following the process described above, logs are added to the log warehouse one by one. We demonstrate the advantages of this algorithm through experiments in the next section.

4. Experiments

In this section, the accuracy and robustness of the LTmatch log parsing algorithm are evaluated on 16 log datasets of different types and compared with several state-of-the-art log pattern extraction algorithms.

4.1. Dataset

Real-world log data is relatively scarce, because the logs of companies and scientific research institutions usually contain private user information. Fortunately, Zhu [17] published the open-source dataset Loghub [18], which contains 16 types of logs generated by different systems and platforms. It contains more than 40 million logs in total, with a size of 77 GB. For a detailed introduction to the log content, please refer to the original paper. In our experiments, we derived two datasets from this public data and then conducted log classification experiments. The details are as follows:

Dataset 1: The sample published by the authors of that paper on GitHub. For each of the 16 types of logs, 2K logs were randomly selected and manually labeled by professionals to obtain the corresponding log patterns.

Dataset 2: Taken from the Loghub dataset published by the authors of that paper. 450,000 logs were selected from each of the BGL and HDFS logs, and the ground truth was labeled according to the log templates.

4.2. Evaluation Methods

To verify the method in this paper as thoroughly as possible, we conduct experiments with the following evaluation method and rules.

Accuracy: The ratio of correctly resolved log types to the total number of log types. After parsing, each log message corresponds to an event template, so each event template corresponds to a set of log messages. The parsing result for a log pattern is considered correct if and only if this set of messages is exactly the same as a set of log messages in the manually labeled ground truth.
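The exact-match criterion can be expressed compactly in code. The sketch below reads the definition literally at the level of log types and divides by the number of ground-truth types; that choice of denominator, like the function names, is an assumption. A message-level variant would instead count the messages that fall into correctly recovered groups.

```python
from collections import defaultdict
from typing import Hashable, List, Set, FrozenSet


def group_by(labels: List[Hashable]) -> Set[FrozenSet[int]]:
    """Turn a per-message label list into a set of message-index groups."""
    groups = defaultdict(set)
    for idx, label in enumerate(labels):
        groups[label].add(idx)
    return {frozenset(members) for members in groups.values()}


def type_accuracy(predicted: List[Hashable], truth: List[Hashable]) -> float:
    """Share of ground-truth types whose message set is reproduced exactly."""
    pred_groups = group_by(predicted)
    true_groups = group_by(truth)
    return len(pred_groups & true_groups) / len(true_groups)


truth = ["A", "A", "B", "B", "C"]
predicted = ["x", "x", "y", "z", "w"]  # type B was split into two clusters
print(type_accuracy(predicted, truth))
```

In this toy case types A and C are recovered exactly while B is split, so two of the three types count as correct.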

To reduce random error, we ran each group of experiments ten times and report the average. For a fair comparison, we applied the same preprocessing rules (e.g., IP or number replacement) to every log extraction algorithm. The parameters of all log extraction algorithms were tuned through grid search, and the best results are reported. All experiments were performed on a computer with 8 GB of memory and an Intel(R) Xeon(R) Gold 6148 CPU clocked at 2.40 GHz.

4.3. The Accuracy of Log Extracting Algorithms

This section evaluates the accuracy of the log pattern extraction algorithm. The experiment uses dataset 1 as the benchmark, and the comparison methods are the five methods with the highest average accuracy reported by Zhu [17]. The experimental results are shown in Table 2. The first five columns of the table are the five methods used for comparison, and the methods in the last two columns are the methods proposed in this paper. Among the comparison methods, LenMa uses a clustering algorithm from traditional machine learning to determine the log type. Spell uses the longest common subsequence together with a prefix tree to determine the log type; unlike the tree in this paper, its prefix tree stores the words of the longest common subsequences that have already appeared. AEL uses a heuristic algorithm to obtain the log type. IPLoM uses iterative partitioning to refine the types of logs. Drain uses a fixed-depth tree to store the log structure; the first layer of its tree stores the length of the log, so every comparison is guaranteed to be between logs of the same length.

In Table 2, each row represents one type of log, and each column represents a log parsing method. The last row of the table (except its last entry) shows the average accuracy of each method. The last column shows the best accuracy obtained on each log dataset, and its last value is the best average accuracy. For each dataset, the best accuracy is marked with the sign “*” and also appears in the last column of the table. In addition, all accuracy values greater than 0.9 are marked in bold, because these results can be considered excellent on these datasets.

Table 2 shows that LTmatch achieves the best average accuracy and also attains the best accuracy on the largest number of datasets. Compared with the Lmatch algorithm before optimization, the average accuracy is improved by 9.65%, which shows that the fine-grained partitioning of the tree structure improves the accuracy of log classification. Compared with the other log parsing algorithms, LTmatch is 2.67% better than Drain, the best of the other methods. This indicates that comparing logs by the weighted longest common subsequence identifies the log type more accurately.

4.4. Robustness

To verify the robustness of the proposed method, we run accuracy experiments with LTmatch on log datasets of different volumes, using the labeled logs in dataset 2. The comparison methods are the same as in the previous section, and the experimental results are shown in Figure 4.

First, we compare the proposed optimized method with the original method. Figure 4 shows that the initial results of Lmatch are good on the HDFS and BGL data, but beyond a certain number of logs its accuracy decreases significantly, which shows that directly using the LCS-based word matching rate has no obvious advantage in parsing log patterns. The accuracy of LTmatch remains stable as the log volume changes, indicating that the improved log pattern extraction algorithm generalizes better than the algorithm before the improvement.

Compared with the other log classification algorithms, Figure 4a shows that on the BGL dataset the initial accuracy of LenMa, IPLoM, and Spell is relatively high but has begun to decline by the time the number of logs reaches 0.45 million. Drain, AEL, and the proposed LTmatch maintain stable accuracy. On the HDFS dataset in Figure 4b, Spell and the proposed LTmatch maintain a high level of accuracy, while the other methods begin to decline at 0.15 million logs. The results on the two log datasets, HDFS and BGL, at different data volumes show that only LTmatch remains stable on both. None of the other log classification algorithms keeps a stable accuracy across different log volumes on both datasets. Therefore, the log classification method we propose generalizes best among the compared state-of-the-art methods.

4.5. Efficiency Analysis

To analyze the efficiency of the algorithm, this article uses the same dataset as the previous section, splits the logs into the same scales, and records the time each method takes to classify the patterns. The experimental results are shown in Figure 5. Note that, to make the result clearer, the maximum time shown in Figure 5a is limited to 145 s, so the curve of the LenMa algorithm is not fully displayed, but its trend of increasing time is already obvious.

First, the improved algorithm LTmatch is compared with the original algorithm Lmatch. LTmatch is more efficient on both the BGL data and the HDFS data, which shows that although the tree structure introduced for log type storage makes the container of the log templates more complex, it brings an efficiency advantage.

Compared with the other algorithms on the BGL dataset, the time consumption of every algorithm except LenMa, whose time grows faster, grows linearly, and the efficiency of the proposed LTmatch ranks in the top three. On the HDFS dataset, although the proposed algorithm ranks relatively low, its time consumption is stable and increases linearly. This is because the time complexity of the LTmatch algorithm for processing $N$ logs is $O(N(d + c \cdot m \cdot n))$, where $d$ is the depth of the parse tree, $c$ is the number of log templates contained in the leaf node currently searched, and $m$ and $n$ are the numbers of words in the two logs being matched. Obviously, $d$, $m$, and $n$ are constants. $c$ is also effectively a constant relative to the growth in the number of logs: the number of templates is much smaller than the total number of logs, and after the tree partitions them, the number of templates under each node is smaller still. It follows that the LTmatch algorithm has $O(N)$ time complexity. The time growth observed in Figure 5 is consistent with this. Therefore, the efficiency of LTmatch is acceptable given that it achieves the highest accuracy.

4.6. Discussion

In this section, we will further discuss the rationality of the LTmatch algorithm proposed in this article.

To illustrate the rationale for the weighted word matching rate in the LTmatch algorithm, we counted the ratio of constants to variables in all the log templates of dataset 1. The results are shown in Table 3. Table 3 shows that the variable part of a log template is generally shorter than the constant part. This is to be expected: in most cases, the variables of a log record only the key changing information of the program during operation, and a longer constant part helps the readability of the log. However, the proportions of constant and variable parts differ between logs, indicating that the templates of different log types have different proportions in practice. Therefore, the word matching rate is designed with a weight, so that the required weight can be tuned to the characteristics of the logs, thus improving the overall accuracy of the algorithm.
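Counting constants and variables in a template is straightforward once templates are split into words. The sketch below is illustrative only: the function name is hypothetical, and it assumes that variables are written as the wildcard tokens "<*>" or "<+>" separated by spaces.

```python
from typing import Tuple


def constant_variable_counts(template: str,
                             wildcards: Tuple[str, ...] = ("<*>", "<+>")) -> Tuple[int, int]:
    """Return (number of constant words, number of variable signs) in a template."""
    tokens = template.split()
    variables = sum(tok in wildcards for tok in tokens)
    return len(tokens) - variables, variables


constants, variables = constant_variable_counts("Invalid user <*> from <*>")
print(constants, variables)
```

Aggregating these counts over all templates of a dataset gives the kind of constant-to-variable ratio discussed above.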