Gesture Recognition of Sign Language Alphabet Using a Magnetic Positioning System

Abstract

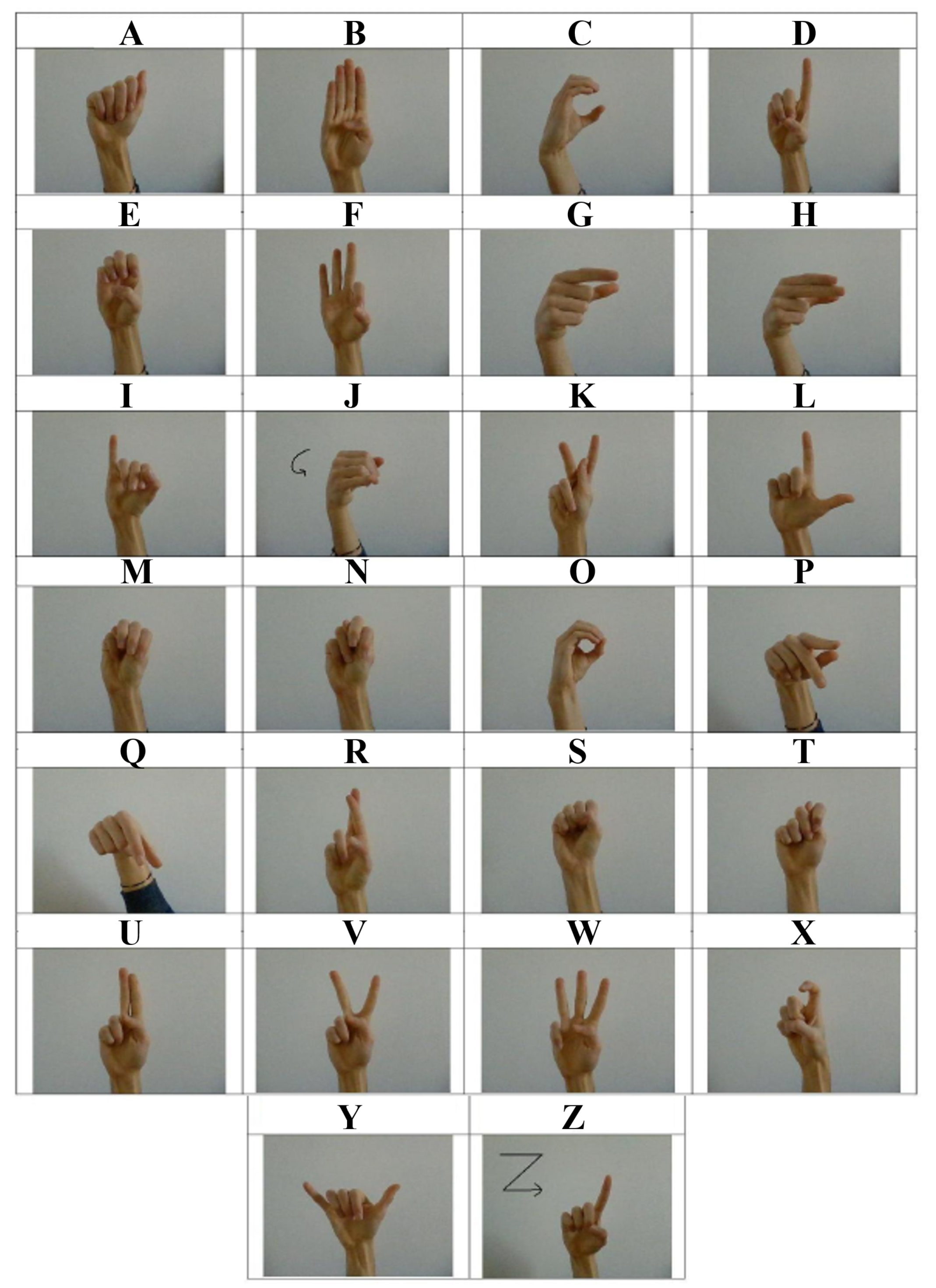

:1. Introduction

- Static or continuous letter/number gesture recognition (classification problem);

- Static or continuous single word recognition (classification problem);

- Sentence-level sign language recognition (NLP problem).

2. Materials and Methods

2.1. Magnetic Positioning System (MPS)

2.1.1. MPS System Architecture

2.1.2. Transmitting Nodes

2.2. Reconstruction of the Hand Gesture

2.3. Machine Learning Algorithm

2.3.1. Support Vector Machine

2.3.2. Description of Tools Used

- A palm detection model that takes as input a 2D image and returns an oriented bounding box that contains the detected hand;

- A hand landmark model that takes as input the output box from the previous model and returns a prediction of the hand skeleton with landmark points, a number indicating the probability of hand presence in the cropped image and a binary classification of handedness, i.e., if the detected hand is a left or a right hand.

2.3.3. Description of the Dataset

2.3.4. Models

- Model A.

- Only the fingertips, i.e., the points corresponding to labels 4, 8, 12, 16, 20 of Figure 10, are used as input. The reference system used is the one chosen by MediaPipe: the origin of the xy plane is placed at the top-left corner, while the origin of the z axis is placed at the wrist. The x and y are normalized to [0, 1] by dividing by image width and height, respectively, while z uses approximately the same scale as x [51]. The model is validated by using raw data extracted from six nodes of the magnetic positioning system. The hand kinematic model is not used. The additional node is the one placed at the wrist as it was necessary to determine the origin of the z axis.

- Model B.

- The coordinates of the 21 hand landmarks given by MediaPipe are used as the input. The reference system used is the same as Model A, but this time, the model is validated by using data from the magnetic positioning system that have been processed using the kinematic hand model described in Section 2.2. This allowed us to reconstruct the position of 21 points within the hand, while using only seven transmitting nodes in the MPS.

- Model C.

- The same features chosen for Model B are used, but a reference coordinate system aligned to the hand is considered. Specifically, the x versor has the same direction of the vector that connect the endpoints of themiddle metacarpal bone, the z versor is orthogonal to the plane in which metacarpal bones of the index finger and middle finger lie, and the y versor is the cross product between the x and z versor. The main advantage is that this model is also capable of recognizing gestures when the hand is rotated at an arbitrary orientation.

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Encyclopedia Britannica, “Sign Language”. 12 November 2020. Available online: https://www.britannica.com/topic/sign-language (accessed on 12 May 2021).

- Eberhard, D.M.; Simons, G.F.; Fennig, C.D. (Eds.) Ethnologue: Languages of the World, 24th ed.; SIL International: Dallas, TX, USA, 2021; Available online: https://www.ethnologue.com/subgroups/sign-language (accessed on 12 May 2021).

- World Federation of the Deaf. Available online: http://wfdeaf.org/our-work/ (accessed on 12 May 2021).

- Wadhawan, A.; Kumar, P. Sign Language Recognition Systems: A Decade Systematic Literature Review. Arch. Comput. Methods Eng. 2019, 28, 785–813. [Google Scholar] [CrossRef]

- Fingerspelling. Wikipedia. Available online: https://en.wikipedia.org/wiki/Fingerspelling (accessed on 12 May 2021).

- Cooper, H.; Holt, B.; Bowden, R. Sign Language Recognition. In Visual Analysis of Humans; Moeslund, T., Hilton, A., Krüger, V., Sigal, L., Eds.; Springer: London, UK, 2011. [Google Scholar]

- Dong, J.; Tang, Z.; Zhao, Q. Gesture recognition in augmented reality assisted assembly training. J. Phys. Conf. Ser. 2019, 1176, 032030. [Google Scholar] [CrossRef]

- Ascari Schultz, R.E.O.; Silva, L.; Pereira, R. Personalized interactive gesture recognition assistive technology. In Proceedings of the 18th Brazilian Symposium on Human Factors in Computing Systems, Vitória, Brazil, 22–25 October 2019. [Google Scholar] [CrossRef]

- Kakkoth, S.S.; Gharge, S. Real Time Hand Gesture Recognition and its Applications in Assistive Technologies for Disabled. In Proceedings of the Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018. [Google Scholar] [CrossRef]

- Simão, M.A.; Gibaru, O.; Neto, P. Online Recognition of Incomplete Gesture Data to Interface Collaborative Robots. IEEE Trans. Ind. Electron. 2019, 66, 9372–9382. [Google Scholar] [CrossRef]

- Ding, I.; Chang, C.; He, C. A kinect-based gesture command control method for human action imitations of humanoid robots. In Proceedings of the 2014 International Conference on Fuzzy Theory and Its Applications (iFUZZY2014), Kaohsiung, Taiwan, 26–28 November 2014; pp. 208–211. [Google Scholar] [CrossRef]

- Yang, S.; Lee, S.; Byun, Y. Gesture Recognition for Home Automation Using Transfer Learning. In Proceedings of the 2018 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Bangkok, Thailand, 21–24 October 2018; pp. 136–138. [Google Scholar] [CrossRef]

- Ye, Q.; Yang, L.; Xue, G. Hand-free Gesture Recognition for Vehicle Infotainment System Control. In Proceedings of the 2018 IEEE Vehicular Networking Conference (VNC), Taipei, Taiwan, 5–7 December 2018; pp. 1–2. [Google Scholar] [CrossRef]

- Akhtar, Z.U.A.; Wang, H. WiFi-Based Gesture Recognition for Vehicular Infotainment System—An Integrated Approach. Appl. Sci. 2019, 9, 5268. [Google Scholar] [CrossRef] [Green Version]

- Meng, Y.; Li, J.; Zhu, H.; Liang, X.; Liu, Y.; Ruan, N. Revealing your mobile password via WiFi signals: Attacks and countermeasures. IEEE Trans. Mob. Comput. 2019, 19, 432–449. [Google Scholar] [CrossRef]

- Cheok, M.J.; Omar, Z.; Jaward, M.H. A review of hand gesture and sign language recognition techniques. Int. J. Mach. Learn. Cyber. 2019, 10, 131–153. [Google Scholar] [CrossRef]

- Elakkiya, R. Machine learning based sign language recognition: A review and its research frontier. J. Ambient. Intell. Hum. Comput. 2020. [Google Scholar] [CrossRef]

- Rastgoo, R.; Kiani, K.; Escalera, S. Sign Language Recognition: A Deep Survey. Expert Syst. Appl. 2021, 164. [Google Scholar] [CrossRef]

- Sharma, S.; Kumar, K. ASL-3DCNN: American sign language recognition technique using 3-D convolutional neural networks. Multimed. Tools Appl. 2021. [Google Scholar] [CrossRef]

- Luqman, H.; El-Alfy, E.S.; BinMakhashen, G.M. Joint space representation and recognition of sign language fingerspelling using Gabor filter and convolutional neural network. Multimed. Tools Appl. 2021, 80, 10213–10234. [Google Scholar] [CrossRef]

- Shi, B.; Del Rio, A.M.; Keane, J.; Michaux, J.; Brentari, D.; Shakhnarovich, G.; Livescu, K. American sign language fingerspelling recognition in the wild. In Proceedings of the 2018 IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 145–152. [Google Scholar] [CrossRef] [Green Version]

- Jiang, X.; Zhang, Y.D. Chinese sign language fingerspelling via six-layer convolutional neural network with leaky rectified linear units for therapy and rehabilitation. J. Med. Imaging Health Inform. 2019, 9, 2031–2090. [Google Scholar] [CrossRef]

- Tao, W.; Leu, M.C.; Yin, Z. American Sign Language alphabet recognition using Convolutional Neural Networks with multiview augmentation and inference fusion. Eng. Appl. Artif. Intell. 2018, 76, 202–213. [Google Scholar] [CrossRef]

- Bird, J.J.; Ekárt, A.; Faria, D.R. British Sign Language Recognition via Late Fusion of Computer Vision and Leap Motion with Transfer Learning to American Sign Language. Sensors 2020, 20, 5151. [Google Scholar] [CrossRef] [PubMed]

- Barbhuiya, A.A.; Karsh, R.K.; Jain, R. CNN based feature extraction and classification for sign language. Multimed. Tools Appl. 2021, 80, 3051–3069. [Google Scholar] [CrossRef]

- Warchoł, D.; Kapuściński, T.; Wysocki, M. Recognition of Fingerspelling Sequences in Polish Sign Language Using Point Clouds Obtained from Depth Images. Sensors 2019, 19, 1078. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lee, C.K.M.; Ng, K.K.H.; Chen, C.-H.; Lau, H.C.W.; Chung, S.Y.; Tsoi, T. American sign language recognition and training method with recurrent neural network. Expert Syst. Appl. 2021, 167, 114403. [Google Scholar] [CrossRef]

- Rastgoo, R.; Kiani, K.; Escalera, S. Multi-Modal Deep Hand Sign Language Recognition in Still Images Using Restricted Boltzmann Machine. Entropy 2018, 20, 809. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Chong, T.-W.; Lee, B.-G. American Sign Language Recognition Using Leap Motion Controller with Machine Learning Approach. Sensors 2018, 18, 3554. [Google Scholar] [CrossRef] [Green Version]

- Pezzuoli, F.; Corona, D.; Corradini, M.L.; Cristofaro, A. Development of a Wearable Device for Sign Language Translation. In Human Friendly Robotics; Ficuciello, F., Ruggiero, F., Finzi, A., Eds.; Springer: Cham, Switzerland, 2019. [Google Scholar] [CrossRef]

- Yuan, G.; Liu, X.; Yan, Q.; Qiao, S.; Wang, Z.; Yuan, L. Hand Gesture Recognition Using Deep Feature Fusion Network Based on Wearable Sensors. IEEE Sensors J. 2020, 21, 539–547. [Google Scholar] [CrossRef]

- Ahmed, M.A.; Zaidan, B.B.; Zaidan, A.A.; Salih, M.M.; Al-qaysi, Z.T.; Alamoodi, A.H. Based on wearable sensory device in 3D-printed humanoid: A new real-time sign language recognition system. Measurement 2021, 108431. [Google Scholar] [CrossRef]

- Khomami, S.A.; Shamekhi, S. Persian sign language recognition using IMU and surface EMG sensors. Measurement 2021, 108471. [Google Scholar] [CrossRef]

- Siddiqui, N.; Chan, R.H.M. Hand Gesture Recognition Using Multiple Acoustic Measurements at Wrist. IEEE Trans. Hum. Mach. Syst. 2021, 51, 56–62. [Google Scholar] [CrossRef]

- Zhao, T.; Liu, J.; Wang, Y.; Liu, H.; Chen, Y. Towards Low-Cost Sign Language Gesture Recognition Leveraging Wearables. IEEE Trans. Mob. Comput. 2021, 20, 1685–1701. [Google Scholar] [CrossRef]

- Santoni, F.; De Angelis, A.; Moschitta, A.; Carbone, P. A Multi-Node Magnetic Positioning System with a Distributed Data Acquisition Architecture. Sensors 2020, 20, 6210. [Google Scholar] [CrossRef]

- Santoni, F.; De Angelis, A.; Skog, I.; Moschitta, A.; Carbone, P. Calibration and Characterization of a Magnetic Positioning System Using a Robotic Arm. IEEE Trans. Instrum. Meas. 2019, 68, 1494–1502. [Google Scholar] [CrossRef]

- Santoni, F.; De Angelis, A.; Moschitta, A.; Carbone, P. MagIK: A Hand-Tracking Magnetic Positioning System Based on a Kinematic Model of the Hand. IEEE Trans. Instrum. Meas. 2021, 70, 1–13. [Google Scholar] [CrossRef]

- Moschitta, A.; De Angelis, A.; Santoni, F.; Dionigi, M.; Carbone, P.; De Angelis, G. Estimation of the Magnetic Dipole Moment of a Coil Using AC Voltage Measurements. IEEE Trans. Instrum. Meas. 2018, 67, 2495–2503. [Google Scholar] [CrossRef]

- Cypress Semiconductor, “CYBLE-222014-01 EZ-BLE™ Creator Module”. Available online: https://www.cypress.com/file/230691/download (accessed on 20 April 2021).

- Heydon, R. Bluetooth Low Energy: The Developer’s Handbook; Prentice Hall: Indianapolis, IN, USA, 2013. [Google Scholar]

- Craig, J.J. Introduction to Robotics, Mechanics and Control, 3rd ed.; Pearson: London, UK, 1986. [Google Scholar]

- Greiner, T.M. Hand Anthropometry of U.S. Army Personnel; Technical Report Natick/TR-92/011; U.S. Army Natick Research, Development and Engineering Center Natick: Natick, MA, USA, 1991.

- Lee, J.; Kunii, T.L. Constraint-Based Hand Animation. In Models and Techniques in Computer Animation; Thalmann, N.M., Thalmann, D., Eds.; Springer: Tokyo, Japan, 1993; pp. 110–127. [Google Scholar]

- Rosenblatt, F. The Perceptron—A Perceiving and Recognizing Automaton; Technical Report 85-460-1; Cornell Aeronautical Laboratory: 1957. Available online: https://blogs.umass.edu/brain-wars/files/2016/03/rosenblatt-1957.pdf (accessed on 19 May 2021).

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Gunn, S.R. Support Vector Machines for Classification and Regression; Technical Report; University of Southampton: Southampton, UK, 1998. [Google Scholar]

- Zanaty, E.; Afifi, A. Support Vector Machines (SVMs) with Universal Kernels. Appl. Artif. Intell. 2011, 25, 575–589. [Google Scholar] [CrossRef]

- Jordan, M.; Thibaux, R. The Kernel Trick, CS281B/Stat241B: Advanced Topics in Learning and Decision Making. Available online: https://people.eecs.berkeley.edu/~jordan/courses/281B-spring04/lectures/lec3.pdf (accessed on 16 June 2021).

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.; Yong, M.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. 2019. Available online: https://arxiv.org/abs/1906.08172 (accessed on 19 May 2021).

- MediaPipe Hands. Available online: https://google.github.io/mediapipe/solutions/hands.html (accessed on 25 April 2021).

- Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.; Grundmann, M. MediaPipe Hands: On-Device Real-Time Hand Tracking. 2020. Available online: https://arxiv.org/abs/2006.10214 (accessed on 19 May 2021).

- ASL Sign Language Alphabet Pictures [Minus J, Z]. Available online: https://www.kaggle.com/signnteam/asl-sign-language-pictures-minus-j-z (accessed on 25 April 2021).

| Joint | a | d | |||

|---|---|---|---|---|---|

| 5 | DIP | 0 | 0 | ||

| 4 | PIP | 0 | 0 | ||

| 3 | MCP,f | 0 | 0 | ||

| 2 | MCP,a | 0 | |||

| 1 | CMC,f | 0 | 0 |

| Joint | a | d | |||

|---|---|---|---|---|---|

| d | IP | 0 | 0 | ||

| c | MCP | 0 | |||

| b | CMC,f | 0 | 0 | 0 | |

| a | CMC,a | 0 | 0 |

| Thumb | Index | Middle | Ring | Pinkie | |

|---|---|---|---|---|---|

| N.D. | N.D. | N.D. | N.D. | ||

| 0 | |||||

| N.D. | |||||

| N.D. | |||||

| c | i = I | II | III | IV | V |

|---|---|---|---|---|---|

| j= 1 | 0.332 | 0.316 | 0.328 | 0.326 | 0.364 |

| 2 | 0.279 | 0.387 | 0.360 | 0.359 | 0.332 |

| 3 | 0.273 | 0.153 | 0.173 | 0.165 | 0.140 |

| 4 | N.D | 0.192 | 0.186 | 0.200 | 0.218 |

| Model A | Model B | Model C | |

|---|---|---|---|

| Signer 1 | 97.01 | 97.92 | 76.77 |

| Signer 2 | 93.52 | 97.51 | 74.37 |

| Signer 3 | 94.84 | 94.68 | 81.47 |

| Global | 94.95 | 96.79 | 77.19 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rinalduzzi, M.; De Angelis, A.; Santoni, F.; Buchicchio, E.; Moschitta, A.; Carbone, P.; Bellitti, P.; Serpelloni, M. Gesture Recognition of Sign Language Alphabet Using a Magnetic Positioning System. Appl. Sci. 2021, 11, 5594. https://doi.org/10.3390/app11125594

Rinalduzzi M, De Angelis A, Santoni F, Buchicchio E, Moschitta A, Carbone P, Bellitti P, Serpelloni M. Gesture Recognition of Sign Language Alphabet Using a Magnetic Positioning System. Applied Sciences. 2021; 11(12):5594. https://doi.org/10.3390/app11125594

Chicago/Turabian StyleRinalduzzi, Matteo, Alessio De Angelis, Francesco Santoni, Emanuele Buchicchio, Antonio Moschitta, Paolo Carbone, Paolo Bellitti, and Mauro Serpelloni. 2021. "Gesture Recognition of Sign Language Alphabet Using a Magnetic Positioning System" Applied Sciences 11, no. 12: 5594. https://doi.org/10.3390/app11125594

APA StyleRinalduzzi, M., De Angelis, A., Santoni, F., Buchicchio, E., Moschitta, A., Carbone, P., Bellitti, P., & Serpelloni, M. (2021). Gesture Recognition of Sign Language Alphabet Using a Magnetic Positioning System. Applied Sciences, 11(12), 5594. https://doi.org/10.3390/app11125594