A Predictive Analytics Infrastructure to Support a Trustworthy Early Warning System

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. University Description

3.2. Data Source: The Universitat Oberta de Catalunya Data Mart

3.3. European ALTAI Guidelines

- Human agency and oversight;

- Technical robustness and safety;

- Privacy and data governance;

- Transparency;

- Diversity, non-discrimination, and fairness;

- Societal and environmental well-being;

- Accountability.

4. The Predictive Analytics Infrastructure and the Early Warning System

4.1. The Predictive Analytics Infrastructure

4.1.1. Microservices Infrastructure

- Microservices: Each service has been developed independently and scoped on a single purpose. Docker technology [66] has been used to containerize each service.

- Infrastructure as a code: Complete infrastructure is managed by Docker Compose [69] with a single configuration file obtaining the latest version of each service from the Docker Registry.

- Monitoring and Logging: Although Docker Compose provides a simple logging process to monitor the services, the client service provides several dashboards to supervise batch tasks run by the different microservices.

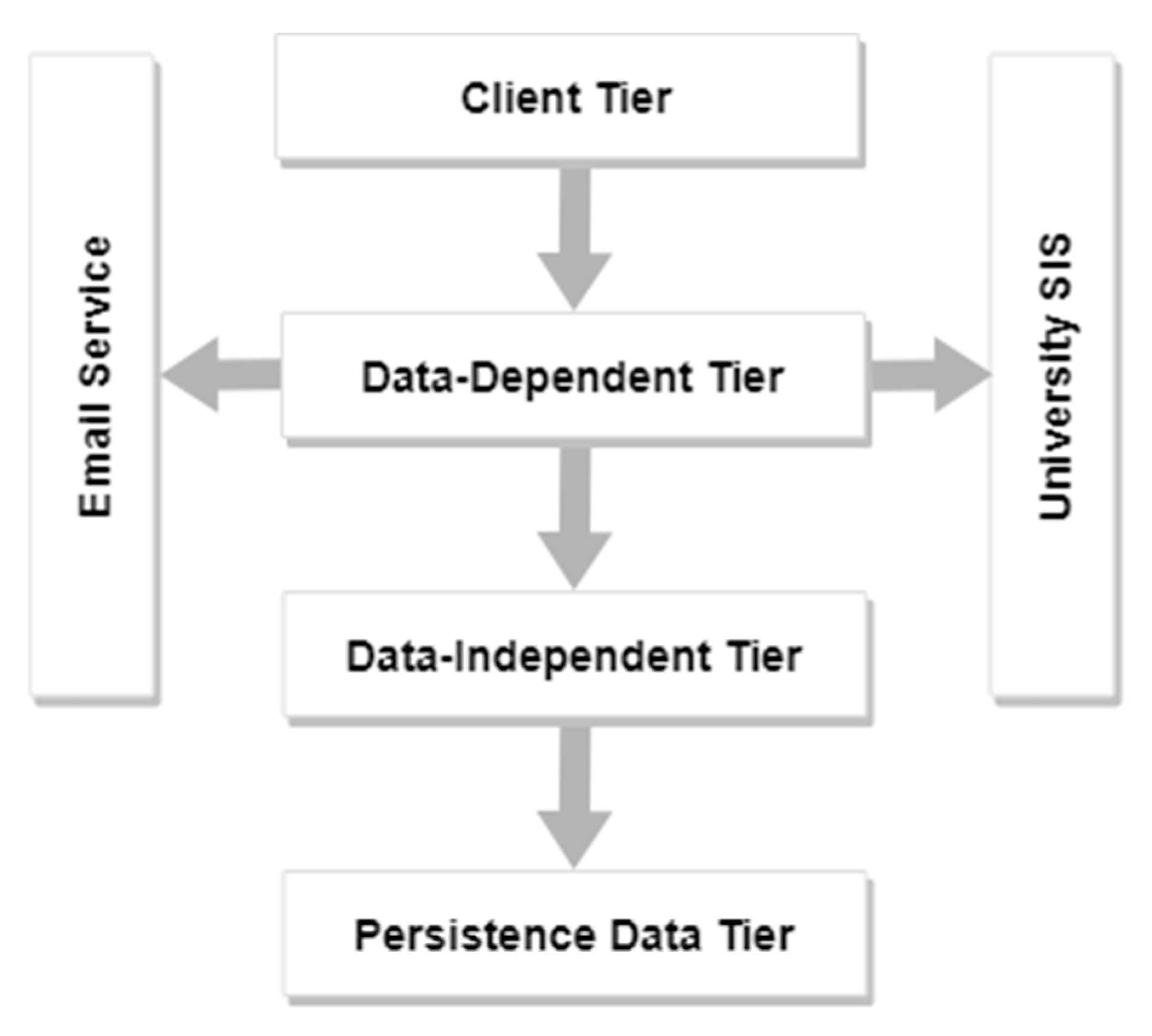

4.1.2. Four-Tier Architecture

4.1.3. UOC Predictive Analytics Infrastructure

- The learner can see the warning level of failing a course, and they have access to personalized recommendations.

- The instructor teacher guides and monitors the learner’s learning process throughout a specific online classroom in a course. They can also send recommendations and see the assigned warning levels in their classroom.

- The coordinating teacher designs the course and is ultimately responsible for guaranteeing that the learners receive the highest quality teaching. They can configure the system for the course, manage recommendations, and have a full view of the learners’ progress.

- The administrator manages the whole system. They can configure available models for courses and can access the logs and monitoring tools of the system.

- The developer manages the low-level details of the infrastructure. They can run specific operations on the DI tier, schedule tasks, check models, and access the logging information of the system.

4.1.4. JSON-Based Query Language for ETL Process

4.1.5. JSON-Based Query Language for Model Creation

4.2. The Early Warning System

4.2.1. Profiled Gradual At-Risk Model

4.2.2. Next Activity At-Risk Simulation

| PrAA1(Fail?) = (Profile, N) | → | Fail? = Yes |

| PrAA1(Fail?) = (Profile, D) | → | Fail? = Yes |

| PrAA1(Fail?) = (Profile, C−) | → | Fail? = Yes |

| PrAA1(Fail?) = (Profile, C+) | → | Fail? = Yes |

| PrAA1(Fail?) = (Profile, B) | → | Fail? = No |

| PrAA1(Fail?) = (Profile, A) | → | Fail? = No |

4.2.3. Warning Level Classification and Intervention Mechanism

5. Results and Discussion

5.1. Human Agency and Oversight

5.2. Technical Robustness and Safety

5.3. Privacy and Data Governance

5.4. Transparency

5.5. Diversity, Non-Discrimination, and Fairness

5.6. Societal and Environmental Well-Being

5.7. Accountability

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yu, T.; Jo, I.-H. Educational Technology Approach Toward Learning Analytics. In Proceedings of the Fourth International Conference on Learning Analytics And Knowledge—LAK ’14, Indianapolis, IN, USA, 24–28 March 2014; ACM Press: New York, NY, USA, 2014; pp. 269–270. [Google Scholar]

- Delgado Kloos, C.; Alario-Hoyos, C.; Estevez-Ayres, I.; Munoz-Merino, P.J.; Ibanez, M.B.; Crespo-Garcia, R.M. Boosting Interaction with Educational Technology. In Proceedings of the 2017 IEEE Global Engineering Education Conference, EDUCON, Athens, Greece, 25–28 April 2017; IEEE: New York, NY, USA, 2017; pp. 1763–1767. [Google Scholar]

- Craig, S.D. Tutoring and Intelligent Tutoring Systems; Nova Science Publishers, Incorporated: New York, NY, USA, 2018. [Google Scholar]

- Romero, C.; Ventura, S. Data mining in education. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2013, 3, 12–27. [Google Scholar] [CrossRef]

- Ratnapala, I.P.; Ragel, R.G.; Deegalla, S. Students behavioural analysis in an online learning environment using data mining. In Proceedings of the 7th International Conference on Information and Automation for Sustainability, Colombo, Sri Lanka, 22–24 December 2014; IEEE: New York, NY, USA, 2014; pp. 1–7. [Google Scholar]

- Romero, C.; Ventura, S.; Espejo, P.G.; Hervás, C. Data mining algorithms to classify students. In Proceedings of the Educational Data Mining 2008—1st International Conference on Educational Data Mining, Montreal, QC, Canada, 20–21 June 2008; Joazeiro de Baker, R.S., Barnes, T., Beck, J.E., Eds.; International Educational Data Mining Society: Boston, MA, USA, 2008; pp. 8–17. [Google Scholar]

- Wolff, A.; Zdrahal, Z.; Herrmannova, D.; Knoth, P. Predicting Student Performance from Combined Data Sources. In Educational Data Mining: Applications and Trends; Peña-Ayala, A., Ed.; Springer International Publisher: Cham, Switzerland, 2014; Volume 524, pp. 175–202. [Google Scholar]

- Marbouti, F.; Diefes-Dux, H.A.; Madhavan, K. Models for early prediction of at-risk students in a course using standards-based grading. Comput. Educ. 2016, 103, 1–15. [Google Scholar] [CrossRef]

- Xing, W.; Chen, X.; Stein, J.; Marcinkowski, M. Temporal predication of dropouts in MOOCs: Reaching the low hanging fruit through stacking generalization. Comput. Hum. Behav. 2016, 58, 119–129. [Google Scholar] [CrossRef]

- Sun, H. Research on the Innovation and Reform of Art Education and Teaching in the Era of Big Data. In Cyber Security Intelligence and Analytics 2021 International Conference on Cyber Security Intelligence and Analytics (CSIA 2021), 19–20 March, Shenyang, China; Xu, Z., Parizi, R.M., Loyola-González, O., Zhang, X., Eds.; Springer: Cham, Switzerland, 2021; Volume 1343, pp. 734–741. [Google Scholar]

- Holmes, W.; Porayska-Pomsta, K.; Holstein, K.; Sutherland, E.; Baker, T.; Shum, S.B.; Santos, O.C.; Rodrigo, M.T.; Cukurova, M.; Bittencourt, I.I.; et al. Ethics of AI in Education: Towards a Community-Wide Framework. Int. J. Artif. Intell. Educ. 2021, 31, 1–23. [Google Scholar]

- European Commission. Fostering a European Approach to Artificial Intelligence. Available online: https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=75790 (accessed on 31 May 2021).

- ANSI Standarization Emporwering AI-Enabled Systems in Healthcare: Workshop Report. Available online: https://share.ansi.org/Shared%20Documents/News%20and%20Publications/Links%20Within%20Stories/Empowering%20AI-Enabled%20Systems,%20Workshop%20Report.pdf (accessed on 31 May 2021).

- Stanton, B.; Jensen, T. Trust and Artificial Intelligence; NIST Interagency/Internal Report (NISTIR); National Institute of Standards and Technology: Gaithersburg, MD, USA, 2021. Available online: https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=931087 (accessed on 31 May 2021).

- The IEEE Global Initiative. Ethically Aligned Design: A Vision for Prioritizing Human Well-Being with Autonomous and Intelligent Systems; IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: New York, NY, USA, 2017. [Google Scholar]

- ICO. Guidance on the AI Auditing Framework: Draft Guidance for Consultation. Available online: https://ico.org.uk/media/about-the-ico/consultations/2617219/guidance-on-the-ai-auditing-framework-draft-for-consultation.pdf (accessed on 31 May 2021).

- High-Level Expert Group on Artificial Intelligence (AI HLEG). Assessment List for Trustworthy AI (ALTAI). Available online: https://ec.europa.eu/digital-single-market/en/news/assessment-list-trustworthy-artificial-intelligence-altai-self-assessment (accessed on 31 May 2021).

- International Organization for Standardization. Overview of Trustworthiness in Artificial Intelligence (ISO/IEC TR 24028:2020). Available online: https://www.iso.org/standard/77608.html (accessed on 31 May 2021).

- Karadeniz, A.; Bañeres Besora, D.; Rodríguez González, M.E.; Guerrero Roldán, A.E. Enhancing ICT Personalized Education through a Learning Intelligent System. In Proceedings of the Online, Open and Flexible Higher Education Conference, Madrid, Spain, 16–18 October 2019; pp. 142–147. [Google Scholar]

- Oates, B.J. Researching Information Systems and Computing; SAGE: London, UK, 2006. [Google Scholar]

- Vaishnavi, V.; Kuechler, W. Design Research in Information Systems. Available online: http://www.desrist.org/design-research-in-information-systems/ (accessed on 31 May 2021).

- Baneres, D.; Karadeniz, A.; Guerrero-Roldán, A.E.; Rodríguez, M.E. A Predictive System for Supporting At-Risk Students’ Identification. In Proceedings of the Future Technologies Conference (FTC), Vancouver, BC, Canada, 5–6 November 2020; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer: Cham, Switzerland, 2021; Volume 1288, pp. 891–904. [Google Scholar]

- Queiroga, E.M.; Lopes, J.L.; Kappel, K.; Aguiar, M.; Araújo, R.M.; Munoz, R.; Villarroel, R.; Cechinel, C. A Learning Analytics Approach to Identify Students at Risk of Dropout: A Case Study with a Technical Distance Education Course. Appl. Sci. 2020, 10, 3998. [Google Scholar] [CrossRef]

- Wan, H.; Liu, K.; Yu, Q.; Gao, X. Pedagogical Intervention Practices: Improving Learning Engagement Based on Early Prediction. IEEE Trans. Learn. Technol. 2019, 12, 278–289. [Google Scholar] [CrossRef]

- Kostopoulos, G.; Karlos, S.; Kotsiantis, S. Multiview Learning for Early Prognosis of Academic Performance: A Case Study. IEEE Trans. Learn. Technol. 2019, 12, 212–224. [Google Scholar] [CrossRef]

- The European Parliament and the Council of the European Union. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data. Off. J. Eur. Union 2016, 59, 1–88. [Google Scholar]

- Drachsler, H.; Greller, W. Privacy and analytics. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge—LAK ’16, Edinburgh, UK, 25–29 April 2016; ACM Press: New York, NY, USA, 2016; pp. 89–98. [Google Scholar]

- SEG Education Committee. Principles for the Use of University-held Student Personal Information for Learning Analytics at The University of Sydney. Available online: https://www.sydney.edu.au/education-portfolio/images/common/learning-analytics-principles-april-2016.pdf (accessed on 31 May 2021).

- The Open University. Policy on Ethical Use of Student Data for Learning Analytics. Available online: https://help.open.ac.uk/documents/policies/ethical-use-of-student-data/files/22/ethical-use-of-student-data-policy.pdf (accessed on 31 May 2021).

- Kazim, E.; Koshiyama, A. AI Assurance Processes. SSRN Electron. J. 2020. [Google Scholar] [CrossRef]

- Floridi, L. Establishing the rules for building trustworthy AI. Nat. Mach. Intell. 2019, 1, 261–262. [Google Scholar] [CrossRef]

- House of Lords. AI in the UK: Ready, Willing and Able? Available online: https://publications.parliament.uk/pa/ld201719/ldselect/ldai/100/100.pdf (accessed on 31 May 2021).

- Future of Life Institute. Asilomar AI Principles. Available online: https://futureoflife.org/ai-principles/ (accessed on 31 May 2021).

- Wiens, J.; Saria, S.; Sendak, M.; Ghassemi, M.; Liu, V.X.; Doshi-Velez, F.; Jung, K.; Heller, K.; Kale, D.; Saeed, M.; et al. Do no harm: A roadmap for responsible machine learning for health care. Nat. Med. 2019, 25, 1337–1340. [Google Scholar] [CrossRef]

- Thiebes, S.; Lins, S.; Sunyaev, A. Trustworthy artificial intelligence. Electron. Mark. 2020, 31, 1–18. [Google Scholar] [CrossRef]

- High-Level Independent Group on Artificial Intelligence (AI HLEG). Ethics Guidelines for Trustworthy AI. Available online: https://ec.europa.eu/digital (accessed on 31 May 2021).

- Sundaramurthy, A.H.; Raviprakash, N.; Devarla, D.; Rathis, A. Machine Learning and Artificial Intelligence. XRDS Crossroads ACM Mag. Stud. 2019, 25, 93–103. [Google Scholar]

- Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018, 28, 689–707. [Google Scholar] [CrossRef]

- Hsu, C.-C. Factors affecting webpage’s visual interface design and style. Procedia Comput. Sci. 2011, 3, 1315–1320. [Google Scholar] [CrossRef][Green Version]

- Sijing, L.; Lan, W. Artificial Intelligence Education Ethical Problems and Solutions. In Proceedings of the 2018 13th International Conference on Computer Science & Education (ICCSE), Colombo, Sri Lanka, 8–11 August 2018; IEEE: New York, NY, USA, 2018; pp. 1–5. [Google Scholar]

- Vincent-Lancrin, S.; van der Vlies, R. Trustworthy Artificial Intelligence (AI) in Education; In OECD Education Working Papers, No. 218; OECD Publishing: Paris, France, 2020. [Google Scholar]

- Persico, D.; Pozzi, F. Informing learning design with learning analytics to improve teacher inquiry. Br. J. Educ. Technol. 2015, 46, 230–248. [Google Scholar] [CrossRef]

- Franzoni, V.; Milani, A.; Mengoni, P.; Piccinato, F. Artificial Intelligence Visual Metaphors in E-Learning Interfaces for Learning Analytics. Appl. Sci. 2020, 10, 7195. [Google Scholar] [CrossRef]

- Williamson, B. The hidden architecture of higher education: Building a big data infrastructure for the ‘smarter university. Int. J. Educ. Technol. High. Educ. 2018, 15, 1–26. [Google Scholar] [CrossRef]

- Villegas-Ch, W.; Arias-Navarrete, A.; Palacios-Pacheco, X. Proposal of an Architecture for the Integration of a Chatbot with Artificial Intelligence in a Smart Campus for the Improvement of Learning. Sustainability 2020, 12, 1500. [Google Scholar] [CrossRef]

- Prandi, C.; Monti, L.; Ceccarini, C.; Salomoni, P. Smart Campus: Fostering the Community Awareness Through an Intelligent Environment. Mob. Netw. Appl. 2020, 25, 945–952. [Google Scholar] [CrossRef]

- Parsaeefard, S.; Tabrizian, I.; Leon-Garcia, A. Artificial Intelligence as a Service (AI-aaS) on Software-Defined Infrastructure. In Proceedings of the 2019 IEEE Conference on Standards for Communications and Networking (CSCN), Granada, Spain, 28–30 October 2019; IEEE: New York, NY, USA, 2019; pp. 1–7. [Google Scholar]

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education–Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39. [Google Scholar] [CrossRef]

- Li, X.; Zhang, T. An exploration on artificial intelligence application: From security, privacy and ethic perspective. In Proceedings of the 2017 IEEE 2nd International Conference on Cloud Computing and Big Data Analysis (ICCCBDA), Chengdu, China, 28–30 April 2017; IEEE: New York, NY, USA, 2017; pp. 416–420. [Google Scholar]

- Alonso, J.M.; Casalino, G. Explainable Artificial Intelligence for Human-Centric Data Analysis in Virtual Learning Environments. In Higher Education Learning Methodologies and Technologies Online; HELMeTO 2019. Communications in Computer and Information Science; Burgos, D., Cimitile, M., Ducange, P., Pecori, R., Picerno, P., Raviolo, P., Stracke, C.M., Eds.; Springer: Cham, Switzerland, 2019; Volume 1091, pp. 125–138. [Google Scholar]

- Anwar, M. Supporting Privacy, Trust, and Personalization in Online Learning. Int. J. Artif. Intell. Educ. 2020, 1–15. [Google Scholar] [CrossRef]

- Borenstein, J.; Howard, A. Emerging challenges in AI and the need for AI ethics education. AI Ethics 2021, 1, 61–65. [Google Scholar] [CrossRef]

- Lepri, B.; Oliver, N.; Letouzé, E.; Pentland, A.; Vinck, P. Fair, Transparent, and Accountable Algorithmic Decision-making Processes: The Premise, the Proposed Solutions, and the Open Challenges. Philos. Technol. 2018, 31, 611–627. [Google Scholar] [CrossRef]

- Dignum, V. Responsible Artificial Intelligence: Designing AI for Human Values. ICT Discov. 2017, 1, 1–8. [Google Scholar]

- Qin, F.; Li, K.; Yan, J. Understanding user trust in artificial intelligence-based educational systems: Evidence from China. Br. J. Educ. Technol. 2020, 51, 1693–1710. [Google Scholar] [CrossRef]

- Bogina, V.; Hartman, A.; Kuflik, T.; Shulner-Tal, A. Educating Software and AI Stakeholders About Algorithmic Fairness, Accountability, Transparency and Ethics. Int. J. Artif. Intell. Educ. 2021, 1–26. [Google Scholar]

- Minguillón, J.; Conesa, J.; Rodríguez, M.E.; Santanach, F. Learning analytics in practice: Providing E-learning researchers and practitioners with activity data. In Frontiers of Cyberlearning: Emerging Technologies for Teaching and Learning; Spector, J.M., Kumar, V., Essa, A., Huang, Y.-M., Koper, R., Tortorella, R.A.W., Chang, T.-W., Li, Y., Zhang, Z., Eds.; Springer: Singapore, 2018; pp. 145–167. [Google Scholar]

- del Blanco, A.; Serrano, A.; Freire, M.; Martinez-Ortiz, I.; Fernandez-Manjon, B. E-Learning Standards and Learning Analytics. Can Data Collection be Improved by Using Standard Data Models? In Proceedings of the 2013 IEEE Global Engineering Education Conference (EDUCON), Berlin, Germany, 13–15 March 2013; IEEE: New York, NY, USA, 2013; pp. 1255–1261. [Google Scholar]

- Anisimov, A.A. Review of the data warehouse toolkit. ACM SIGMOD Rec. 2003, 32, 101–102. [Google Scholar] [CrossRef]

- DeCandia, G.; Hastorun, D.; Jampani, M.; Kakulapati, G.; Lakshman, A.; Pilchin, A.; Sivasubramanian, S.; Vosshall, P.; Vogels, W. Dynamo: Amazon’s highly available key-value store. ACM SIGOPS Oper. Syst. Rev. 2007, 41, 205–220. [Google Scholar] [CrossRef]

- White, T. Hadoop: The Definitive Guide; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2012. [Google Scholar]

- Bass, L. The Software Architect and DevOps. IEEE Softw. 2017, 35, 8–10. [Google Scholar] [CrossRef]

- Balalaie, A.; Heydarnoori, A.; Jamshidi, P. Migrating to Cloud-Native Architectures Using Microservices: An Experience Report. In Advances in Service-Oriented and Cloud Computing; ESOCC 2015 Communications in Computer and Information Science; Celesti, A., Leitner, P., Eds.; Springer: Cham, Switzerland, 2016; Volume 567, pp. 201–215. [Google Scholar]

- Balalaie, A.; Heydarnoori, A.; Jamshidi, P. Microservices Architecture Enables DevOps: Migration to a Cloud-Native Architecture. IEEE Softw. 2016, 33, 42–52. [Google Scholar] [CrossRef]

- Pahl, C.; Jamshidi, P. Microservices: A Systematic Mapping Study. In Proceedings of the 6th International Conference on Cloud Computing and Services Science, Rome, Italy, 23–25 April 2016; SCITEPRESS: Setubal, Portugal, 2016; Volume 1, pp. 137–146. [Google Scholar]

- Merkel, D. Docker: Lightweight Linux containers for consistent development and deployment. Linux J. 2014, 2014, 2. [Google Scholar]

- Choudhury, P.; Crowston, K.; Dahlander, L.; Minervini, M.S.; Raghuram, S. GitLab: Work where you want, when you want. J. Organ. Des. 2020, 9, 1–17. [Google Scholar] [CrossRef]

- Siqueira, R.; Camarinha, D.; Wen, M.; Meirelles, P.; Kon, F. Continuous delivery: Building trust in a large-scale, complex government organization. IEEE Softw. 2018, 35, 38–43. [Google Scholar] [CrossRef]

- Jangla, K. Docker Compose. In Accelerating Development Velocity Using Docker; Apress: Berkeley, CA, USA, 2018; pp. 77–98. [Google Scholar]

- Grozev, N.; Buyya, R. Multi-cloud provisioning and load distribution for three-tier applications. ACM Trans. Auton. Adapt. Syst. 2014, 9, 1–21. [Google Scholar] [CrossRef]

- Sharma, R.; Mathur, A. Traefik for Microservices. In Traefik API Gateway for Microservices; Apress: Berkeley, CA, USA, 2021; pp. 159–190. [Google Scholar]

- Casters, M.; Bouman, R.; Dongen, J. Van Pentaho Kettle Solutions: Building Open Source ETL Solutions with Pentaho Data Integration; John Wiley: Hoboken, NJ, USA, 2010. [Google Scholar]

- Team, R.C. R Language Definition; R Foundation for Statistical Computing: Vienna, Austria, 2000. [Google Scholar]

- Etzkorn, L.H. RESTful Web Services; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2021. [Google Scholar]

- Leiba, B. Oauth web authorization protocol. IEEE Internet Comput. 2012, 16, 74–77. [Google Scholar] [CrossRef]

- da Silva, M.D.; Tavares, H.L. Redis Essentials; Packt Publishing Ltd.: Birmingham, UK, 2015. [Google Scholar]

- Bañeres, D.; Rodríguez, M.E.; Guerrero-Roldán, A.E.; Karadeniz, A. An early warning system to detect at-risk students in online higher education. Appl. Sci. 2020, 10, 4427. [Google Scholar] [CrossRef]

- Wang, H.; Park, S.; Fan, W.; Yu, P.S. ViSt: A Dynamic Index Method for Querying XML Data by Tree Structures. In Proceedings of the ACM SIGMOD International Conference on Management of Data, San Diego, CA, USA, 9–12 June 2003; ACM: New York, NY, USA, 2003; pp. 110–121. [Google Scholar]

- Fuhr, N.; Großjohann, K. XIRQL: An XML query language based on information retrieval concepts. ACM Trans. Inf. Syst. 2004, 22, 313–356. [Google Scholar] [CrossRef]

- Florescu, D.; Fourny, G. JSONiq: The history of a query language. IEEE Internet Comput. 2013, 17, 86–90. [Google Scholar] [CrossRef]

- Chamberlin, D.; Robie, J.; Florescu, D. Quilt: An XML Query Language for Heterogeneous Data Sources. In The World Wide Web and Databases. WebDB 2000. Lecture Notes in Computer Science; Goos, G., Hartmanis, J., Leeuwen, J.V., Suciu, D., Vossen, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; Volume 1997, pp. 1–25. [Google Scholar]

- Fraga, D.C.; Priyatna, F.; Perez, I.S. Virtual Statistics Knowledge Graph Generation from CSV files. In Proceedings of the Emerging Topics in Semantic Technologies—{ISWC} 2018 Satellite Events, Monterey, CA, USA, 1 October 2018; IOS Press: Amsterdam, The Netherlands, 2018; Volume 36, pp. 235–244. [Google Scholar]

- Sujan, M.; Furniss, D.; Grundy, K.; Grundy, H.; Nelson, D.; Elliott, M.; White, S.; Habli, I.; Reynolds, N. Human factors challenges for the safe use of artificial intelligence in patient care. BMJ Health Care Inform. 2019, 26, e100081. [Google Scholar] [CrossRef] [PubMed]

- Koulu, R. Proceduralizing control and discretion: Human oversight in artificial intelligence policy. Maastrich. J. Eur. Comp. Law 2020, 27, 720–735. [Google Scholar] [CrossRef]

- Chambers, J.M. Linear models. In Statistical Models in S; Chambers, J.M., Hastie, T., Eds.; Chapman and Hall: New York, NY, USA, 2017; pp. 95–144. [Google Scholar]

- Thilagam, P.S.; Ananthanarayana, V.S. Semantic Partition Based Association Rule Mining across Multiple Databases Using Abstraction. In Proceedings of the Sixth International Conference on Machine Learning and Applications (ICMLA 2007), Cincinnati, OH, USA, 13–15 December 2007; IEEE: Los Alamitos, CA, USA, 2007; pp. 81–86. [Google Scholar]

- Universitat Oberta de Catalunya. Privacy Policy. Available online: https://www.uoc.edu/portal/en/_peu/avis_legal/politica-privacitat/index.html (accessed on 31 May 2021).

- Carabantes, M. Black-box artificial intelligence: An epistemological and critical analysis. AI Soc. 2020, 35, 309–317. [Google Scholar] [CrossRef]

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Wix, J. Data classification. In Constructing the Future: nD Modelling, 1st ed.; Aouad, G., Lee, A., Wu, S., Eds.; Routledge: London, UK, 2006; pp. 209–225. [Google Scholar]

- Morrison, C.; Cutrell, E.; Dhareshwar, A.; Doherty, K.; Thieme, A.; Taylor, A. Imagining artificial intelligence applications with people with visual disabilities using tactile ideation. In Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility—ASSETS 2017, Baltimore, MD, USA, 20 October–1 November 2017; ACM Press: New York, NY, USA, 2017; pp. 81–90. [Google Scholar]

- Trewin, S. AI Fairness for People with Disabilities: Point of View. arXiv 2018, arXiv:1811.10670. [Google Scholar]

- WebAIM. Wave—WAVE is a free Web Accessibility Evaluation Tool. Available online: https://wave.webaim.org/sitewide (accessed on 31 May 2021).

- Iftikhar, S.; Guerrero-Roldán, A.E.; Mor, E.; Bañeres, D. User Experience Evaluation of an e-Assessment System. In Learning and Collaboration Technologies. Designing, Developing and Deploying Learning Experiences—HCII 2020; Springer: Cham, Switzerland, 2020; Volume 12205, pp. 77–91. [Google Scholar]

- Bañeres, D.; Karadeniz, A.; Guerrero-Roldan, A.E.; Rodriguez, M.E. Data legibility and meaningful visualization through a learning intelligent system dashboard. In Proceedings of the 14th International Technology, Education and Development Conference—INTED2020, Valencia, Spain, 2–4 March, 2020; IATED Academy: Valencia, Spain, 2020; Volume 1, pp. 5991–5995. [Google Scholar]

- Rodríguez, M.E.; Guerrero-Roldán, A.E.; Baneres, D.; Karadeniz, A. Towards an Intervention Mechanism for Supporting Learners Performance in Online Learning. In Proceedings of the 12th annual International Conference of Education, Research and Innovation—ICERI2019, Sevilla, Spain, 9–11 November 2019; pp. 5136–5145. [Google Scholar]

- Baneres, D.; Karadeniz, A.; Guerrero-Roldán, A.-E.; Rodríguez-Gonzalez, M.E.; Serra, M. Analysis of the accuracy of an early warning system for learners at-risk: A case study. In Proceedings of the 11th International Conference on Education and New Learning Technologies—EDULEARN19, Palma, Spain, 1–3 July 2019; IATED Academy: Valencia, Spain, 2019; Volume 1, pp. 1289–1297. [Google Scholar]

- Guerrero-Roldán, A.-E.; Rodríguez-González, M.E.; Bañeres, D.; Elasri, A.; Cortadas, P. Experiences in the use of an adaptive intelligent system to enhance online learners’ performance: A case study in Economics and Business courses. Int. J. Educ. Technol. High. Educ. 2021, in press. [Google Scholar]

- Vinichenko, M.V. The Impact of Artificial Intelligence on Behavior of People in the Labor Market. J. Adv. Res. Dyn. Control Syst. 2020, 12, 526–532. [Google Scholar] [CrossRef]

- Frank, M.R.; Autor, D.; Bessen, J.E.; Brynjolfsson, E.; Cebrian, M.; Deming, D.J.; Feldman, M.; Groh, M.; Lobo, J.; Moro, E.; et al. Toward understanding the impact of artificial intelligence on labor. Proc. Natl. Acad. Sci. USA 2019, 116, 6531–6539. [Google Scholar] [CrossRef]

- Selwyn, N. Should Robots Replace Teachers? AI and the Future of Education; John Wiley: Hoboken, NJ, USA, 2019. [Google Scholar]

- Cope, B.; Kalantzis, M.; Searsmith, D. Artificial intelligence for education: Knowledge and its assessment in AI-enabled learning ecologies. Educ. Philos. Theory 2020, 1–17. [Google Scholar] [CrossRef]

- Mahmoud, A.A.; Elkatatny, S.; Ali, A.Z.; Abouelresh, M.; Abdulraheem, A. Evaluation of the total organic carbon (TOC) using different artificial intelligence techniques. Sustainability 2019, 11, 5643. [Google Scholar] [CrossRef]

- García-Martín, E.; Rodrigues, C.F.; Riley, G.; Grahn, H. Estimation of energy consumption in machine learning. J. Parallel Distrib. Comput. 2019, 134, 75–88. [Google Scholar] [CrossRef]

- Mahajan, D.; Zong, Z. Energy efficiency analysis of query optimizations on MongoDB and Cassandra. In Proceedings of the 2017 Eighth International Green and Sustainable Computing Conference (IGSC), Orlando, FL, USA, 23–25 October 2017; IEEE: New York, NY, USA, 2017; pp. 1–6. [Google Scholar]

| Requirement | Self-Assessment | |

|---|---|---|

| Human agency and oversight | Human agency and autonomy |

|

| Human oversight |

| |

| Technical robustness and safety | Security |

|

| Reliability and reproducibility |

| |

| Accuracy |

| |

| Privacy and data governance | Privacy |

|

| Data governance |

| |

| Requirement | Self-Assessment | |

|---|---|---|

| Transparency | Traceability |

|

| Explainability |

| |

| Communication |

| |

| Diversity, non-discrimination, and fairness | Avoidance of unfair bias |

|

| Accessibility |

| |

| Stakeholder participation |

| |

| Societal and environmental well-being | Environmental well-being |

|

| Impact on work and skills |

| |

| Accountability | Auditability |

|

| Risk management |

| |

| Semester Timeline | 10% | 20% | 30% | 40% | 50% | 60% | 70% | 80% | 90% |

|---|---|---|---|---|---|---|---|---|---|

| ACC | 77.56 | 80.86 | 83.72 | 86.20 | 88.43 | 90.46 | 92.39 | 93.99 | 95.25 |

| TNR | 84.79 | 86.30 | 87.78 | 89.30 | 90.95 | 92.46 | 94.04 | 95.54 | 96.91 |

| TPR | 55.71 | 64.51 | 71.70 | 77.40 | 81.88 | 85.42 | 88.53 | 90.47 | 91.22 |

| F1.5 | 53.50 | 61.00 | 67.50 | 73.10 | 77.97 | 81.75 | 85.36 | 88.14 | 90.05 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Baneres, D.; Guerrero-Roldán, A.E.; Rodríguez-González, M.E.; Karadeniz, A. A Predictive Analytics Infrastructure to Support a Trustworthy Early Warning System. Appl. Sci. 2021, 11, 5781. https://doi.org/10.3390/app11135781

Baneres D, Guerrero-Roldán AE, Rodríguez-González ME, Karadeniz A. A Predictive Analytics Infrastructure to Support a Trustworthy Early Warning System. Applied Sciences. 2021; 11(13):5781. https://doi.org/10.3390/app11135781

Chicago/Turabian StyleBaneres, David, Ana Elena Guerrero-Roldán, M. Elena Rodríguez-González, and Abdulkadir Karadeniz. 2021. "A Predictive Analytics Infrastructure to Support a Trustworthy Early Warning System" Applied Sciences 11, no. 13: 5781. https://doi.org/10.3390/app11135781

APA StyleBaneres, D., Guerrero-Roldán, A. E., Rodríguez-González, M. E., & Karadeniz, A. (2021). A Predictive Analytics Infrastructure to Support a Trustworthy Early Warning System. Applied Sciences, 11(13), 5781. https://doi.org/10.3390/app11135781