CNN-Based Defect Inspection for Injection Molding Using Edge Computing and Industrial IoT Systems

Abstract

1. Introduction

2. Background and Related Work

2.1. Defect Detection for the Injection Molding Process

2.2. CNN

2.3. Edge Computing

2.4. Industrial IoT Systems

3. CNN-Based Defect Inspection for Injection Molding

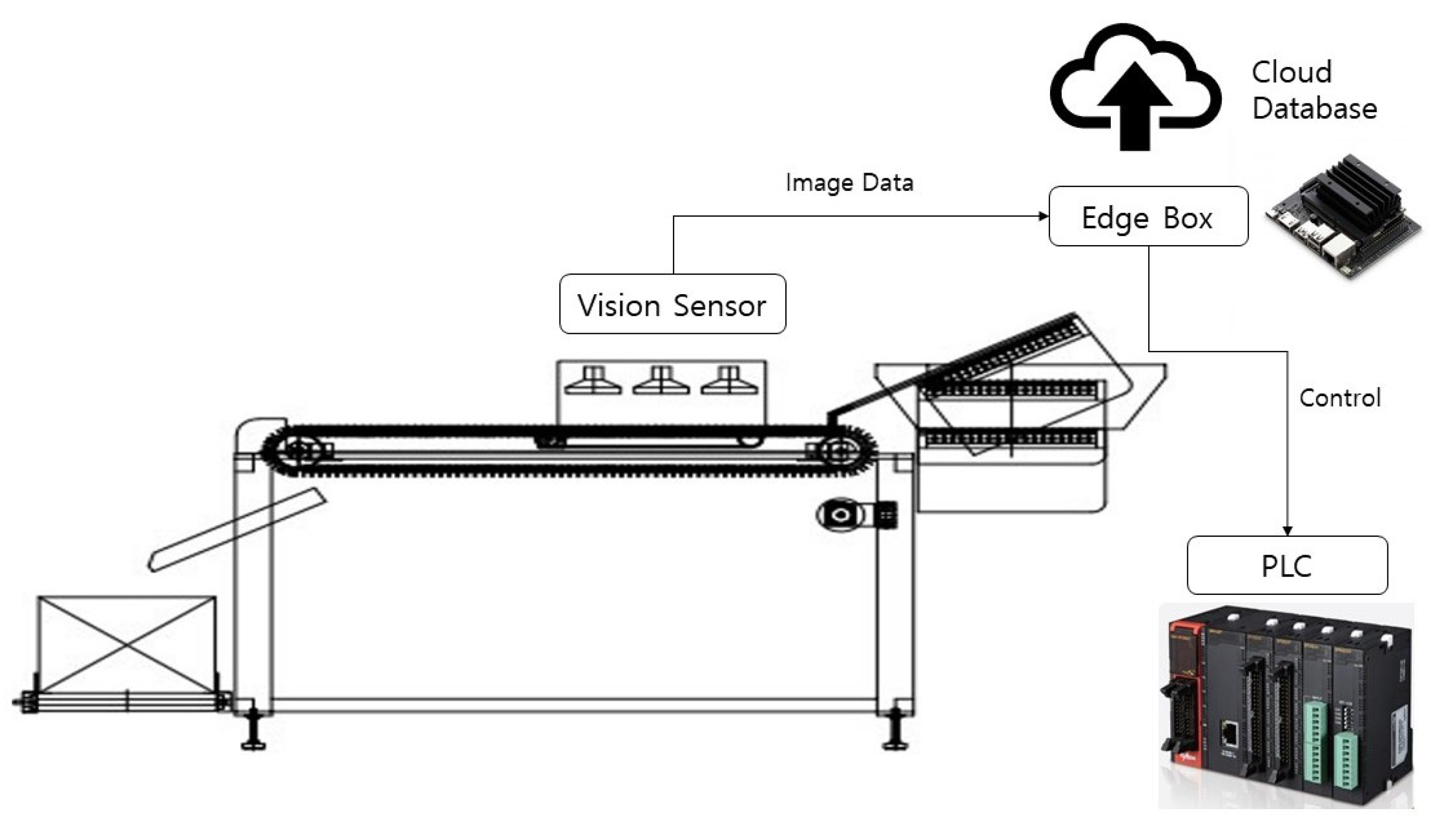

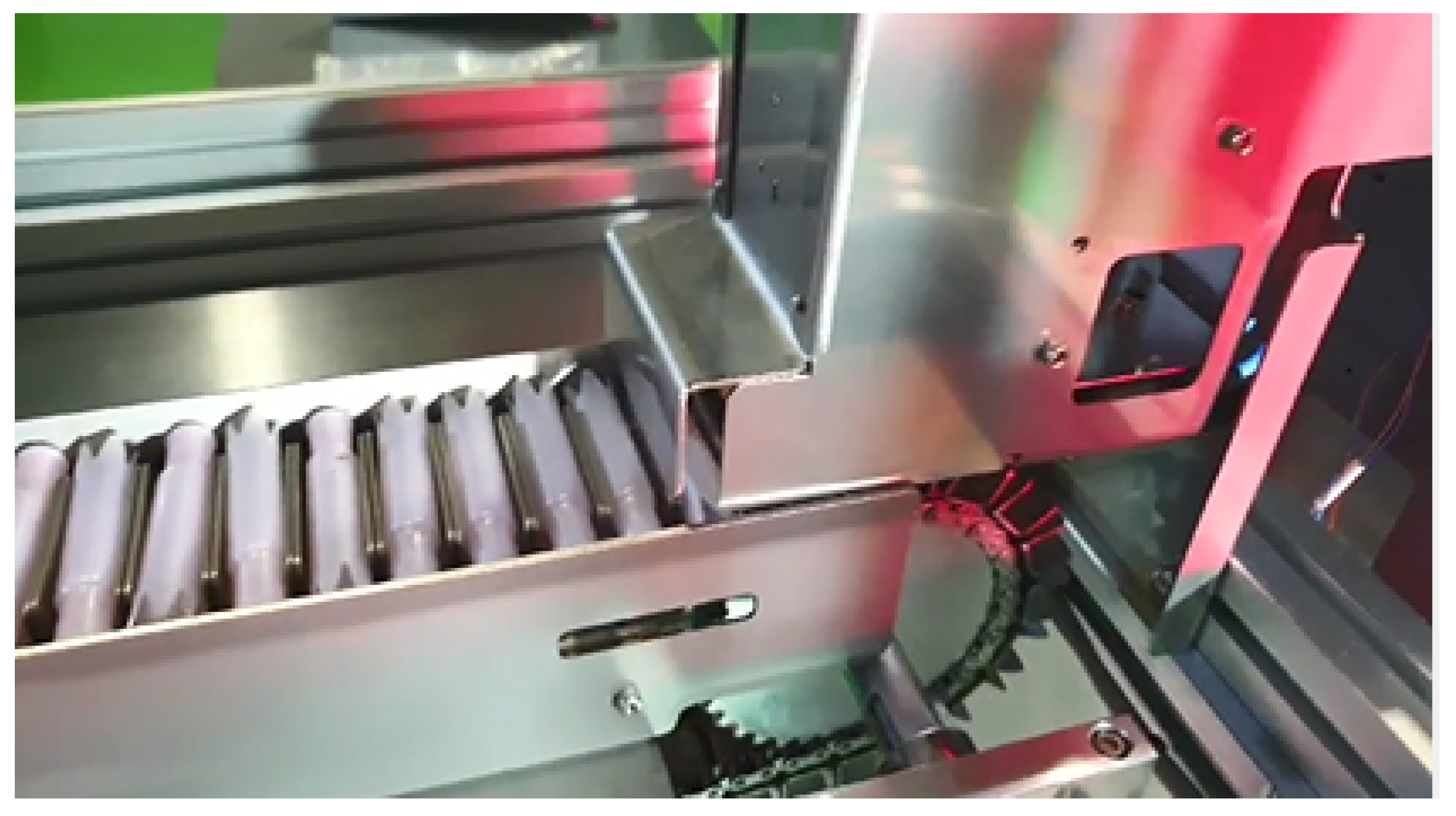

3.1. System Architecture

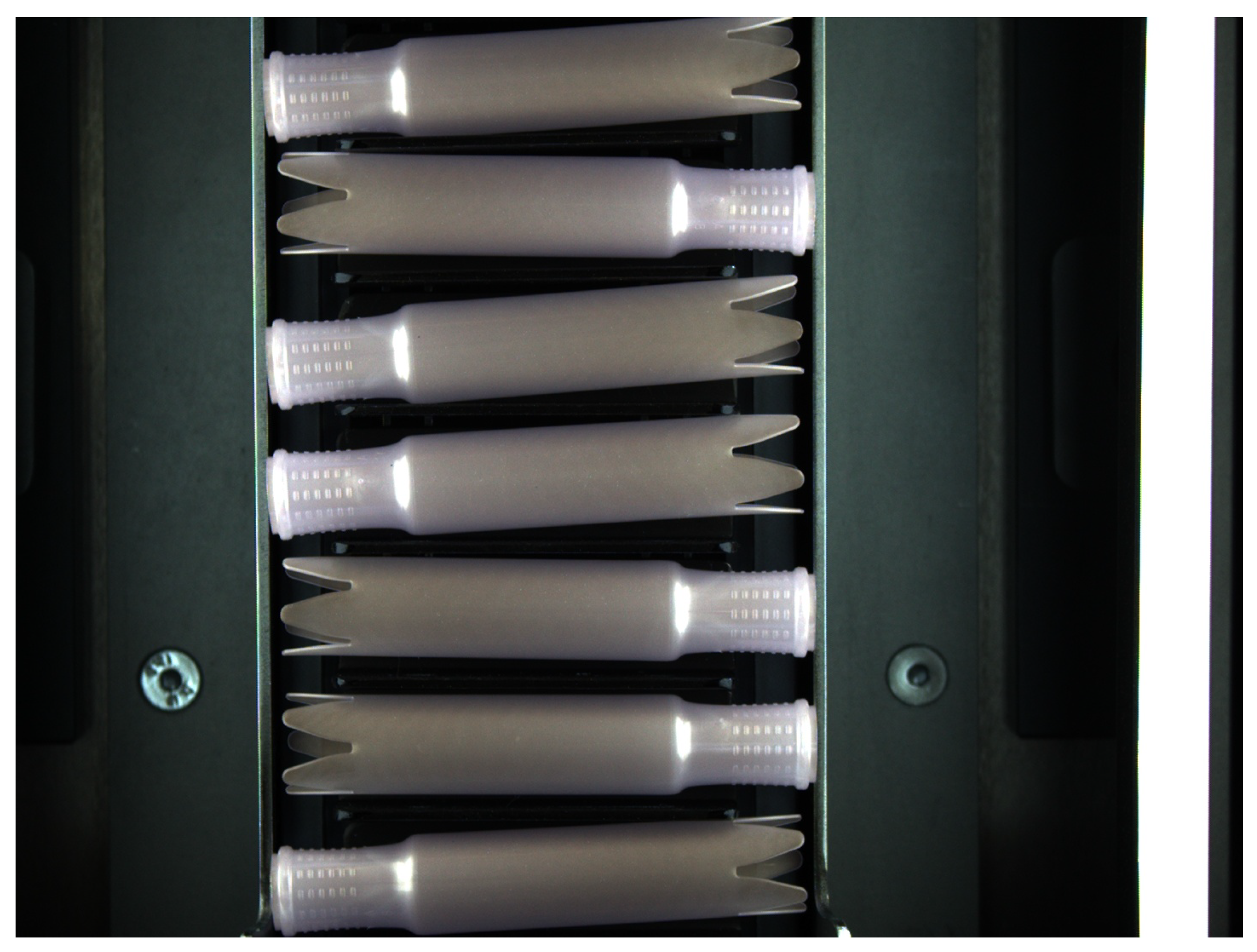

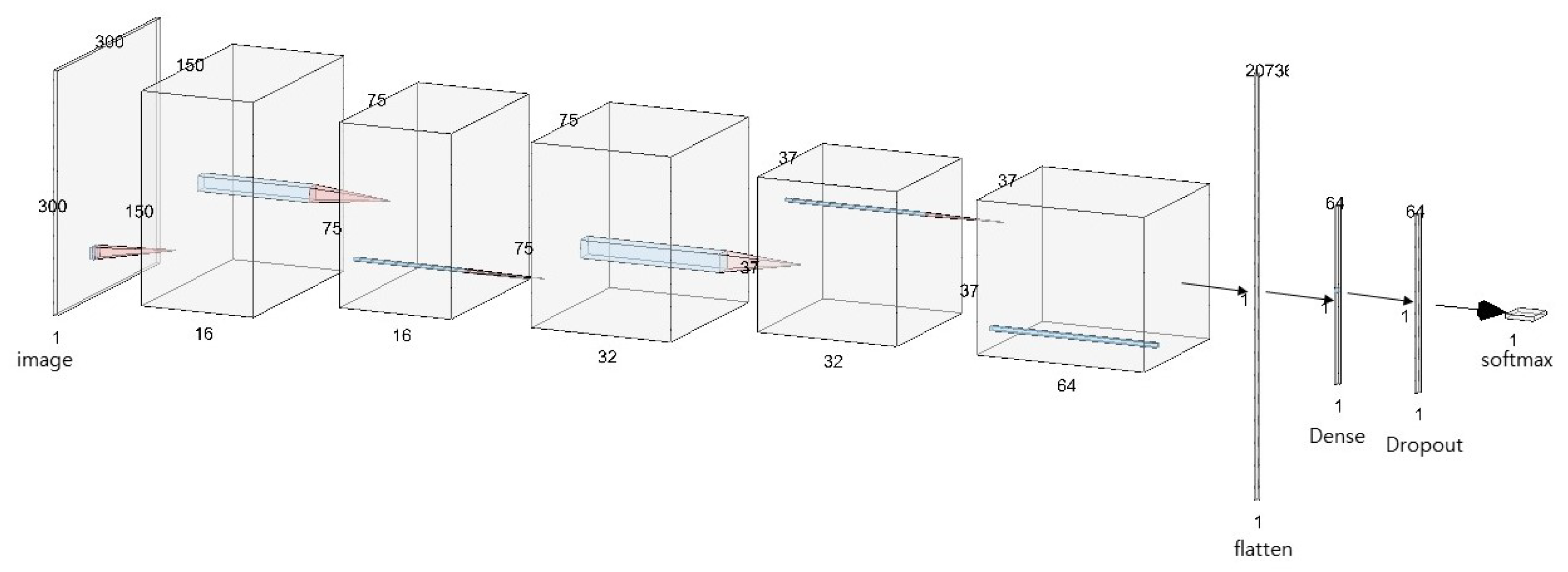

3.2. Defect Detection

4. Experiment and Result Analysis

4.1. Experiment Environment

4.2. Evaluation Metrics

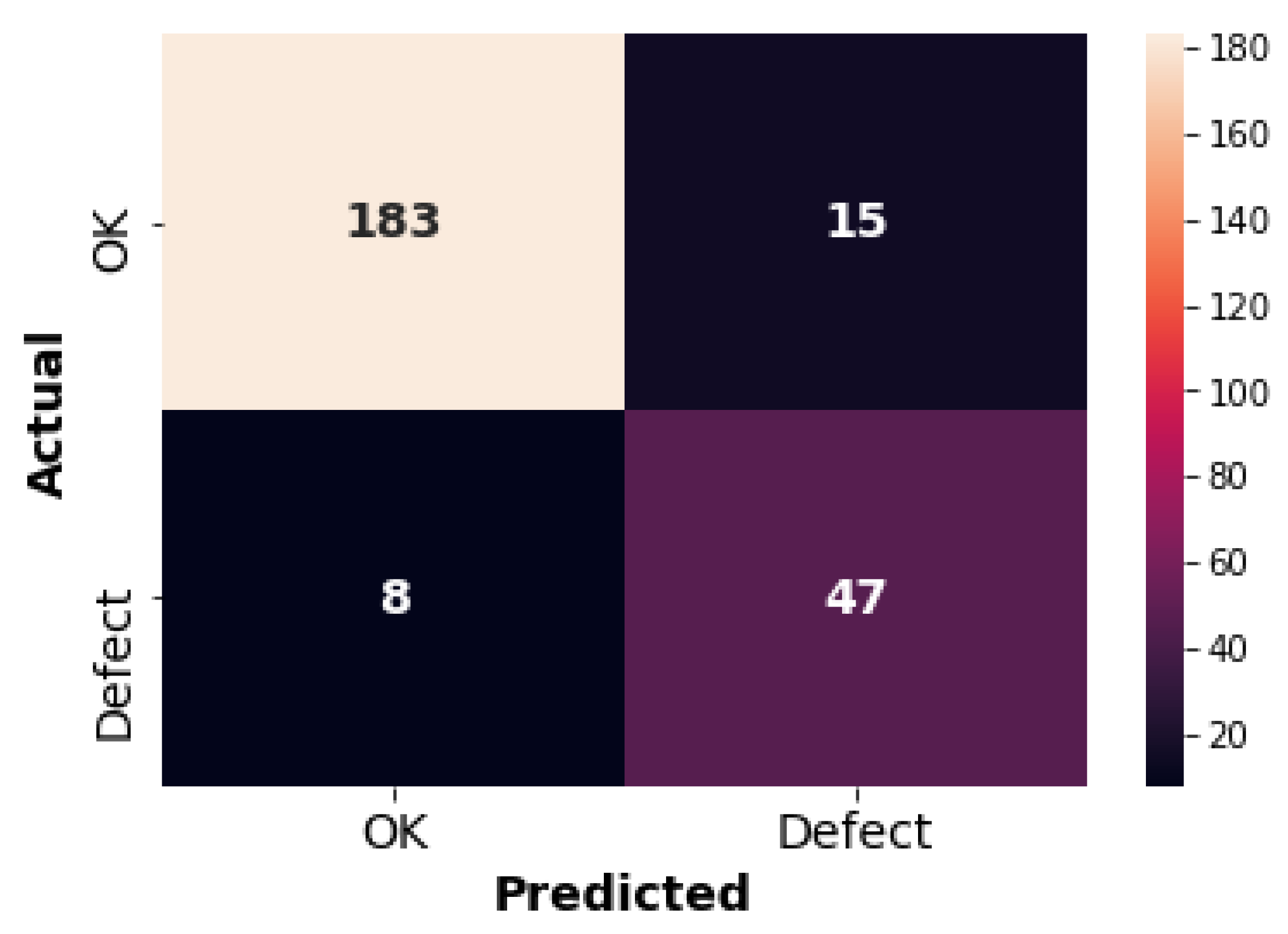

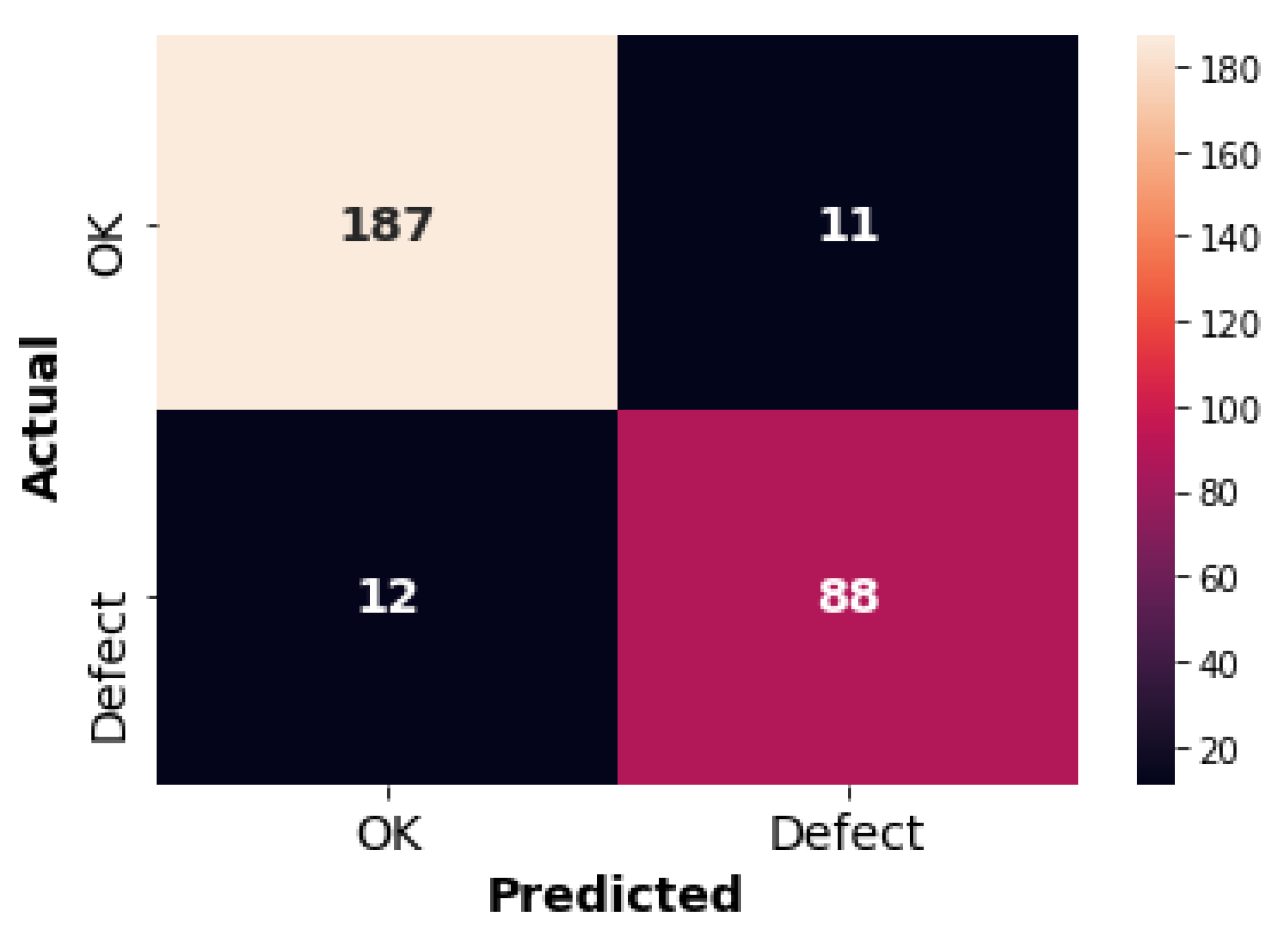

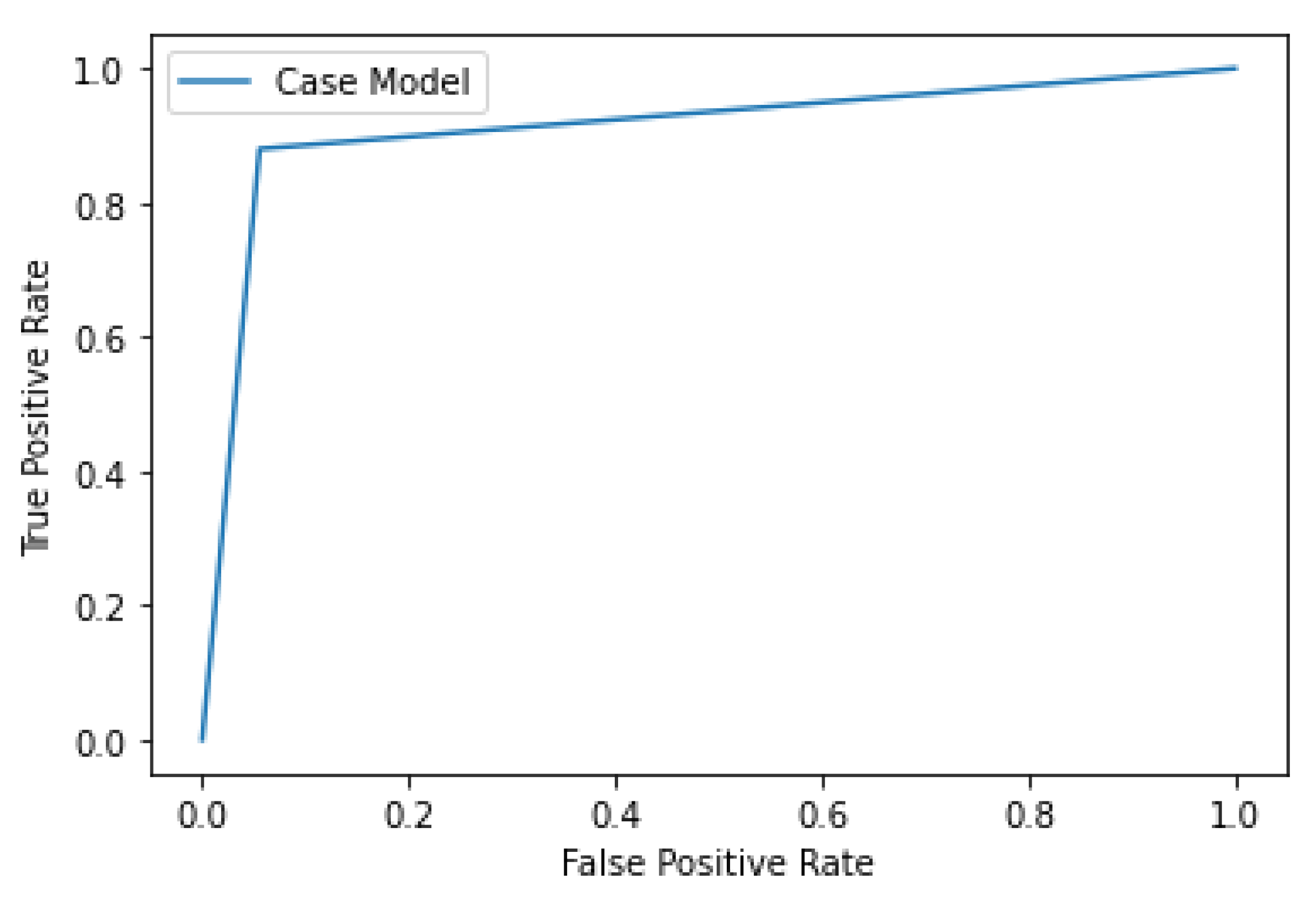

- Confusion Matrix: A matrix that compares the predicted class against the actual class for all samples at a glance;

- Positive (= Normal Status): The normal condition that the quality manager wants to maintain (OK);

- Negative (= Anomaly): An abnormal condition that requires the quality manager's intervention (NG);

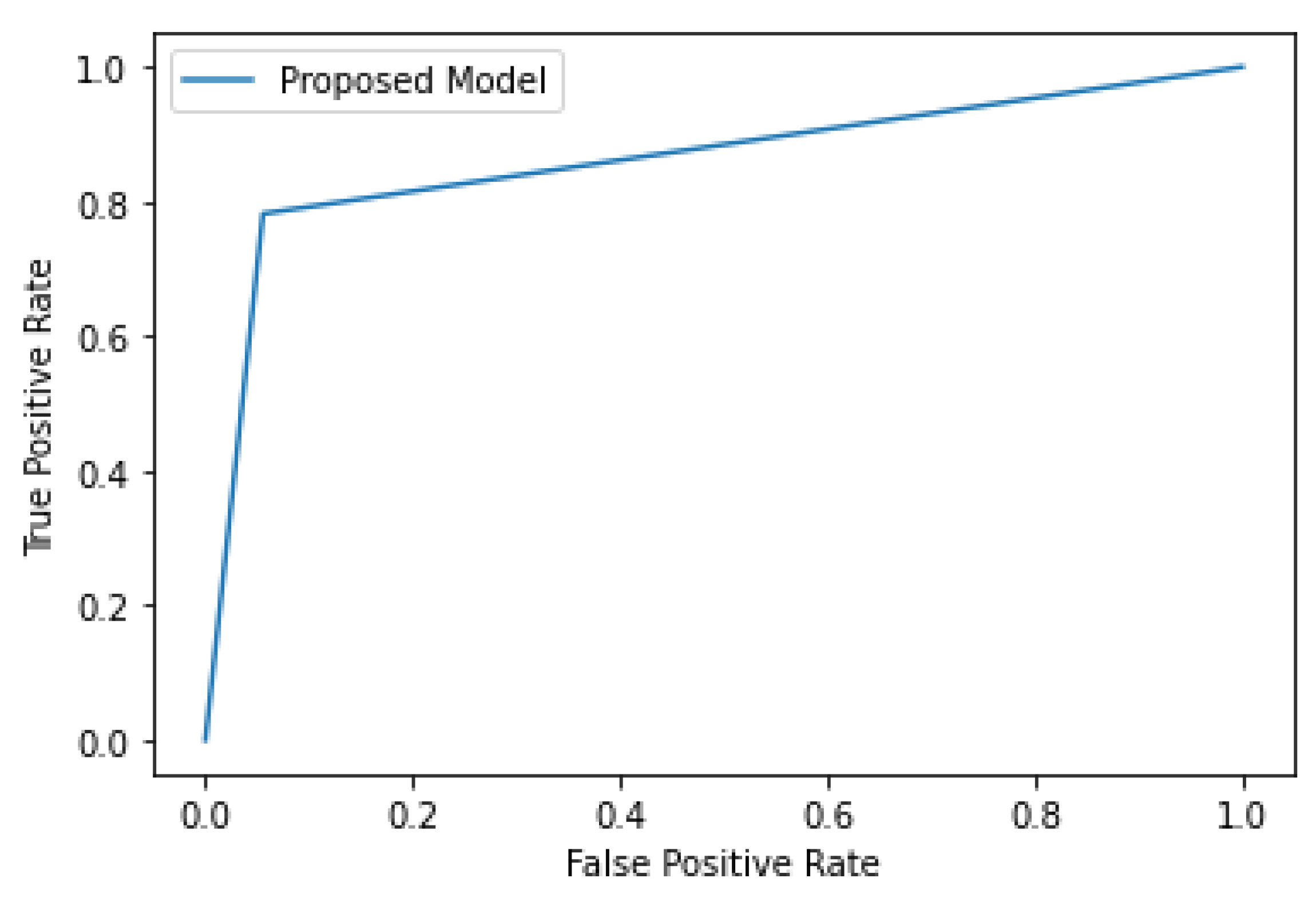

- False Positive (= Type I Error = Missing Error): The AI predicts normal although a defect has occurred, so the defect is missed; its rate is the false positive rate (FPR);

- False Negative (= Type II Error = False Alarm): The AI reports a defect although the part is normal; its rate is the false negative rate (FNR).
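
The definitions above treat the normal class as positive, which is the reverse of many defect-detection write-ups. A minimal sketch of the two error rates under that convention follows; the function name `error_rates` and the example counts are hypothetical and do not come from the experiments.

```python
def error_rates(tp, fn, fp, tn):
    """Error rates with positive = normal (OK) and negative = defect (NG).

    tp: normal parts predicted normal
    fn: normal parts predicted defect (false alarm, Type II error)
    fp: defective parts predicted normal (missed defect, Type I error)
    tn: defective parts predicted defect
    """
    fpr = fp / (fp + tn)  # share of actual defects that were missed
    fnr = fn / (fn + tp)  # share of actual normal parts flagged as defects
    return fpr, fnr


# Hypothetical counts, chosen only to illustrate the arithmetic.
fpr, fnr = error_rates(tp=90, fn=10, fp=5, tn=45)
print(f"FPR (missing rate): {fpr:.2f}")
print(f"FNR (false alarm rate): {fnr:.2f}")
```

Under this convention the FPR is the rate that matters most for outgoing quality, since it counts defective parts that pass inspection.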

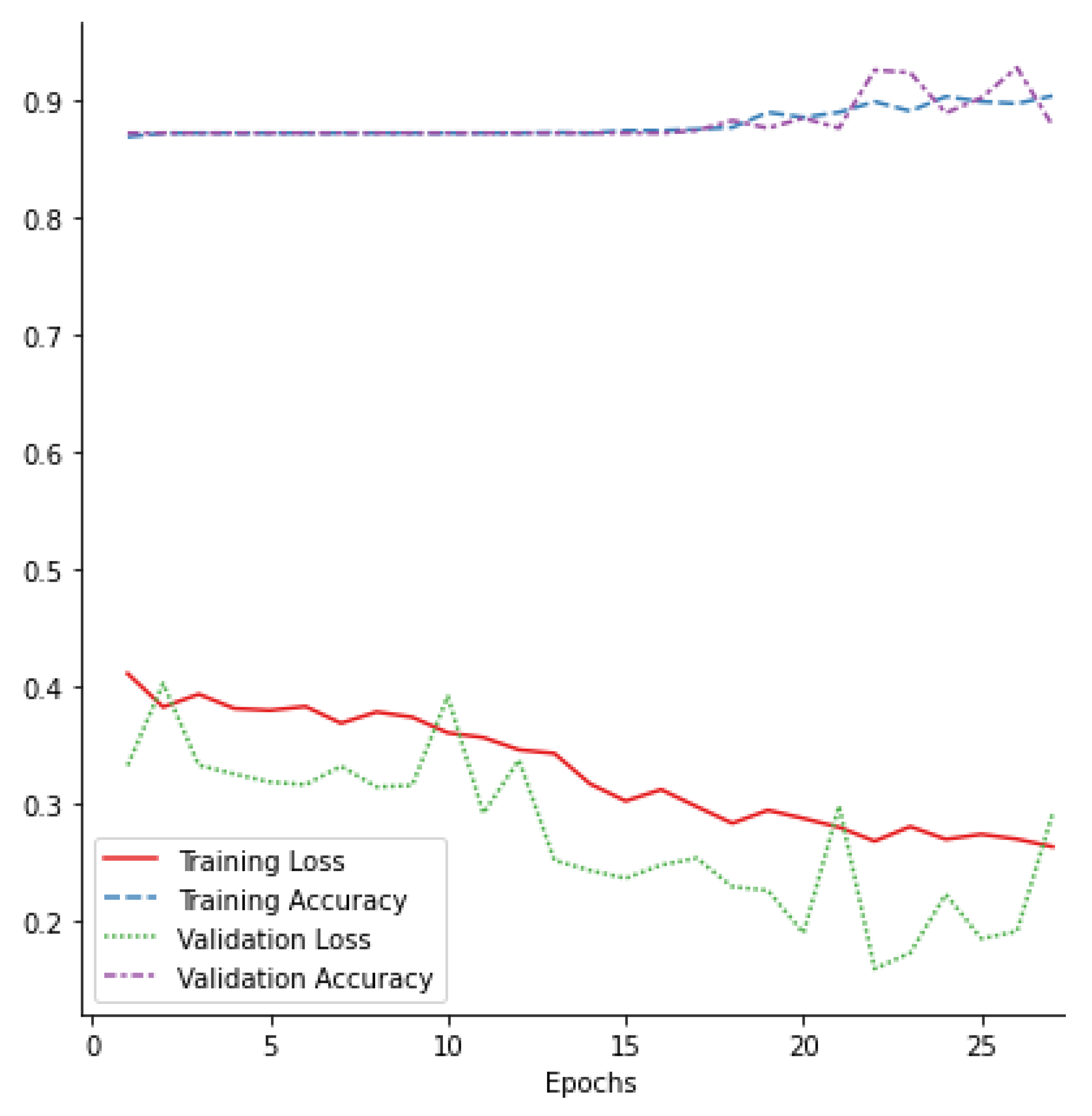

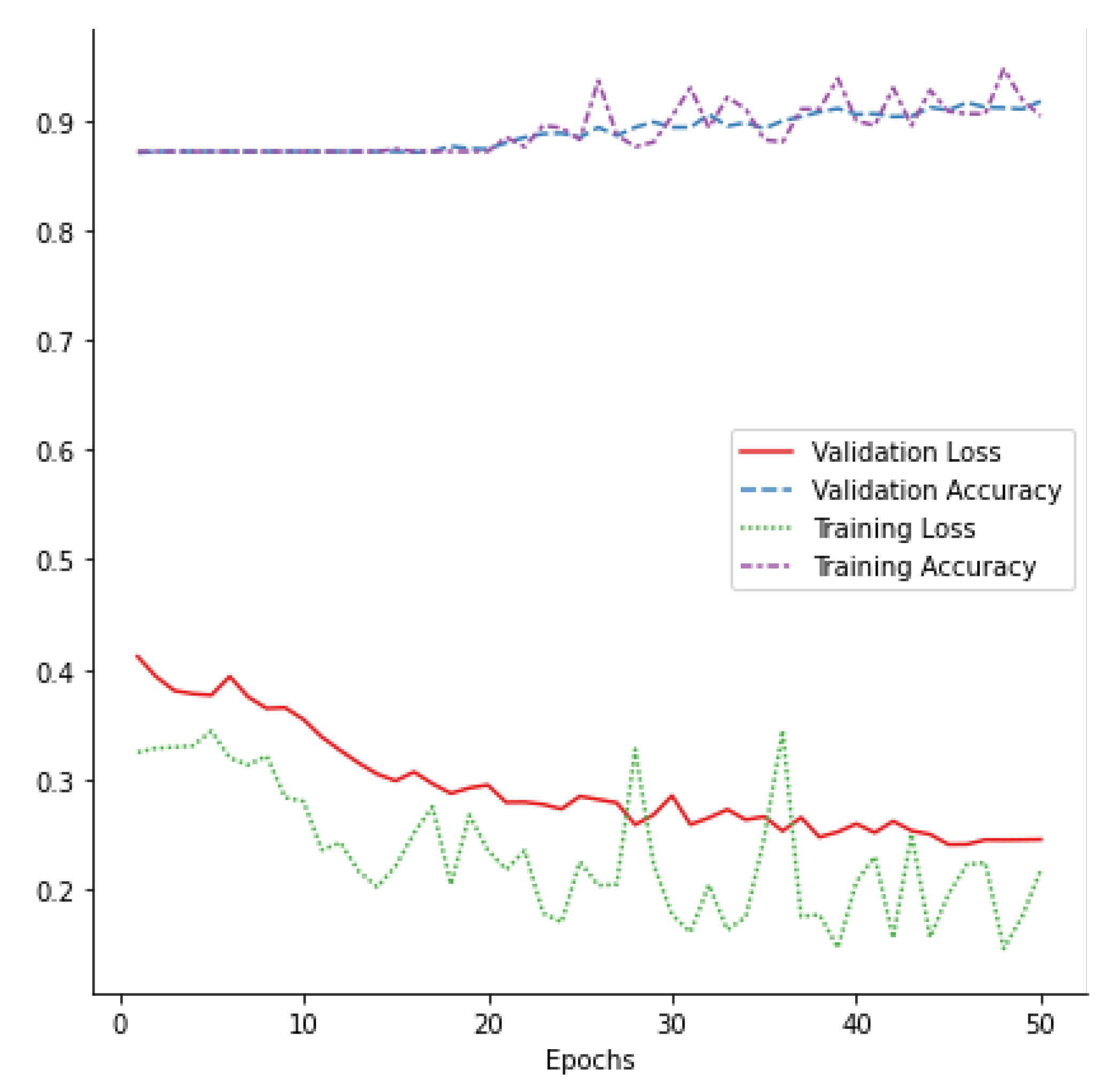

4.3. Experiment and Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Input | Output |
|---|---|---|
| Algorithm 1 | Raw image | Cropped image |
| Algorithm 2 | Cropped image | Number of the defective cell |
| Algorithm 3 | Number of the defective cell | Time from the n-th cell to discharge |

| Layer Name | Output Size | Network | Connected to |
|---|---|---|---|
| Input Layer | (300 × 300) | Conv2D | |
| Conv Layer1 | (150 × 150 × 16) | Conv2D, kernel size = 7 × 7 | Input Layer |
| Pool Layer1 | (75 × 75 × 16) | MaxPooling2D, size = 2 × 2 | Conv Layer1 |
| Conv Layer2 | (75 × 75 × 32) | Conv2D, kernel size = 3 × 3 | Pool Layer1 |
| Pool Layer2 | (37 × 37 × 32) | MaxPooling2D, size = 2 × 2 | Conv Layer2 |
| Conv Layer3 | (37 × 37 × 64) | Conv2D, kernel size = 3 × 3 | Pool Layer2 |
| Pool Layer3 | (18 × 18 × 64) | MaxPooling2D, size = 2 × 2 | Conv Layer3 |
| Flatten Layer | (20,736) | Flatten | Pool Layer3 |
| Dense Layer | (64) | Dense | Flatten Layer |
| Dropout Layer | (64) | Dropout, rate = 0.2 | Dense Layer |
| Softmax | (1) | Dense | Dropout Layer |
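
The spatial sizes in the layer table can be checked with a few lines of arithmetic. The sketch below assumes a stride of 2 with "same" padding for Conv Layer1, a stride of 1 with "same" padding for the other convolutions, and unpadded 2 × 2 pooling; the table does not state strides or padding, so these are assumptions chosen because they reproduce the listed sizes. The names `LAYERS` and `trace_shapes` are illustrative.

```python
import math

# (layer name, kind, output channels, stride).
# Strides and padding are assumptions; the table lists only kernel sizes.
LAYERS = [
    ("Conv Layer1", "conv", 16, 2),
    ("Pool Layer1", "pool", 16, 2),
    ("Conv Layer2", "conv", 32, 1),
    ("Pool Layer2", "pool", 32, 2),
    ("Conv Layer3", "conv", 64, 1),
    ("Pool Layer3", "pool", 64, 2),
]


def trace_shapes(size=300):
    """Return the (height, width, channels) output shape of each layer."""
    shapes = {}
    for name, kind, channels, stride in LAYERS:
        if kind == "conv":
            size = math.ceil(size / stride)  # 'same' padding
        else:
            size = size // stride            # pooling without padding
        shapes[name] = (size, size, channels)
    return shapes


shapes = trace_shapes()
height, width, channels = shapes["Pool Layer3"]
flatten_units = height * width * channels  # input width of the dense layer

for name, shape in shapes.items():
    print(name, shape)
print("Flatten Layer", flatten_units)
```

With these assumptions the last pooling output is 18 × 18 × 64, so the flattened vector has 18 × 18 × 64 = 20,736 elements.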

| Hardware Environment | Software Environment |
|---|---|
| CPU: Intel Core i7-8700K, 3.7 GHz, six cores, twelve threads, 16 GB | Windows, TensorFlow 2.0 framework |
| GPU: GeForce GTX 1080 Ti | Python 3.7 |

| | Normal | Defect |
|---|---|---|
| Training Data | 1714 | 200 |
| Validation Data | 316 | 100 |
| Test Data | 198 | 55 |

| | Precision | Recall | F1-Score |
|---|---|---|---|
| Normal | 0.9581 | 0.9242 | 0.9409 |
| Defect | 0.7581 | 0.8545 | 0.8034 |
| Accuracy | 0.9091 | | |
| Macro Average | 0.8581 | 0.8894 | 0.8721 |
| Weighted Average | 0.9146 | 0.9091 | 0.9110 |
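
The per-class scores above follow from four confusion-matrix counts. The counts in this sketch are not reported in the tables; they are inferred from the test-set sizes (198 normal, 55 defect) and the reported recall values, so treat them as an assumption. The helper `class_scores` is illustrative.

```python
def class_scores(tp, fp, fn):
    """Precision, recall, and F1 for one class from its own tp/fp/fn counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Inferred counts (assumption): rows are actual classes, columns predictions.
normal_as_normal, normal_as_defect = 183, 15   # 198 normal test images
defect_as_normal, defect_as_defect = 8, 47     # 55 defect test images

normal = class_scores(tp=normal_as_normal, fp=defect_as_normal, fn=normal_as_defect)
defect = class_scores(tp=defect_as_defect, fp=normal_as_defect, fn=defect_as_normal)
accuracy = (normal_as_normal + defect_as_defect) / (198 + 55)

print("Normal  ", [round(v, 4) for v in normal])
print("Defect  ", [round(v, 4) for v in defect])
print("Accuracy", round(accuracy, 4))
```

Read this way, 8 of the 55 defective parts pass as normal and 15 of the 198 normal parts raise a false alarm.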

| | Normal | Defect |
|---|---|---|
| Training Data | 3428 | 400 |
| Validation Data | 632 | 200 |
| Test Data | 198 | 100 |

| | Precision | Recall | F1-Score |
|---|---|---|---|
| Normal | 0.9632 | 0.9242 | 0.9433 |
| Defect | 0.8611 | 0.9300 | 0.8942 |
| Accuracy | 0.9262 | | |
| Macro Average | 0.9121 | 0.9271 | 0.9188 |
| Weighted Average | 0.9289 | 0.9262 | 0.9268 |
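
The two average rows differ only in how the classes are weighted. As a small sketch, the macro average is the plain mean of the per-class values, while the weighted average uses the number of test images per class (198 normal, 100 defect) as weights. The inputs are the rounded per-class values from the table, so the results agree with the reported averages only to within rounding; the variable names are illustrative.

```python
# Per-class (precision, recall, F1) as reported, and test-set support per class.
per_class = {
    "Normal": (0.9632, 0.9242, 0.9433),
    "Defect": (0.8611, 0.9300, 0.8942),
}
support = {"Normal": 198, "Defect": 100}

total = sum(support.values())
n_metrics = 3  # precision, recall, F1

# Macro average: every class counts equally.
macro = [
    sum(scores[i] for scores in per_class.values()) / len(per_class)
    for i in range(n_metrics)
]

# Weighted average: every class counts in proportion to its support.
weighted = [
    sum(per_class[name][i] * support[name] for name in per_class) / total
    for i in range(n_metrics)
]

print("Macro   ", [f"{v:.4f}" for v in macro])
print("Weighted", [f"{v:.4f}" for v in weighted])
```

Because the normal class has about twice the support of the defect class, the weighted averages sit closer to the normal-class scores than the macro averages do.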

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ha, H.; Jeong, J. CNN-Based Defect Inspection for Injection Molding Using Edge Computing and Industrial IoT Systems. Appl. Sci. 2021, 11, 6378. https://doi.org/10.3390/app11146378
