Towards Quantum 3D Imaging Devices

Abstract

1. Introduction

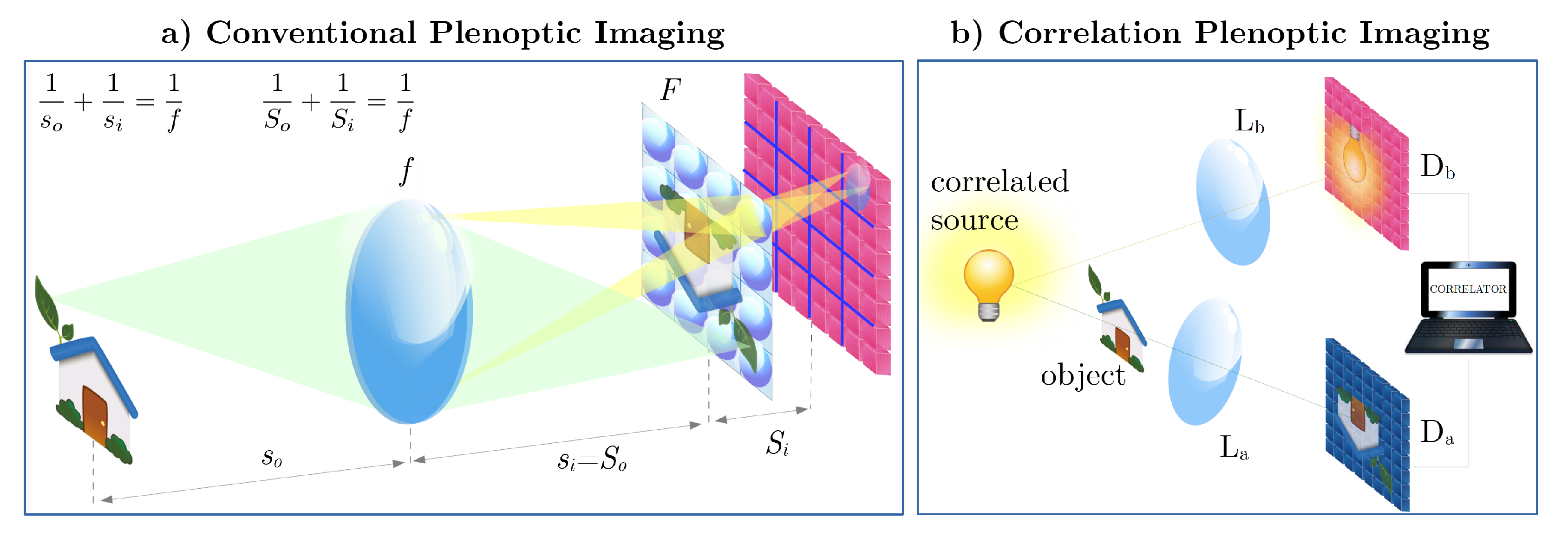

2. Plenoptic Imaging with Correlations: From Working Principle to Recent Advances

- Chaotic light sources, such as pseudothermal light, natural light, LEDs, gas lamps, and even fluorescent samples, operated either in the high-intensity regime or in the “two-photon” regime, in which an average of two photons per coherence area propagates through the setup. Chaotic light is well known to exhibit EPR-like correlations in both momentum and position variables [38,39]. These correlations must be exploited optimally to retrieve an accurate plenoptic correlation function in the shortest possible time. To retrieve spatio-temporal correlations efficiently, the source may need tight spectral filtering, so that the coherence time of the filtered light matches the response time of the SPAD arrays, which can be as short as 1 ns. Alternatively, one can employ pseudorandom sources with a controllable coherence time, obtained by shining laser light on a fast-switching digital micromirror device (DMD). Interestingly, recent studies have shown that, with chaotic illumination, the plenoptic properties of the correlation function do not strictly rely on ghost imaging: correlations can be measured between any two planes where ordinary images are formed (see Figure 1b) [35]. This finding has led to the intriguing result that the SNR of CPI improves when ghost imaging of the object is replaced by standard imaging [40]. In particular, excellent noise performance is expected when imaging birefringent objects placed between crossed polarizers. This kind of source is particularly relevant for applications in biomedical imaging (cornea, zebrafish, invertebrates, and biological phantoms such as starch dispersions), security (distance detection, DOF extension), and satellite imaging.

- Momentum–position entangled beams, generated by spontaneous parametric down-conversion (SPDC), which could combine QPI with sub-shot-noise imaging [41], thus enabling high-SNR imaging of low-absorbing samples, a challenging task in both biomedical imaging and security.
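Why the exposure should not exceed the coherence time can be illustrated with a toy Monte Carlo sketch. It rests on a textbook idealization, not on anything specific to this article: within each coherence time (or each pattern of a DMD-based pseudorandom source), one speckle grain of fully developed, polarized chaotic light has an exponentially distributed intensity, and successive intervals are independent. An exposure spanning M such intervals then sums M independent values, so the normalized correlation of intensity fluctuations between two pixels that receive the same speckle grain falls from about 1 to about 1/M. The function names are hypothetical.

```python
import random


def frame_intensity(rng: random.Random, intervals_per_exposure: int) -> float:
    """Intensity of one speckle grain integrated over one exposure (toy model).

    Assumption: each coherence interval (or DMD pattern) contributes an
    independent, exponentially distributed intensity with unit mean, as for
    fully developed, polarized speckle.
    """
    return sum(rng.expovariate(1.0) for _ in range(intervals_per_exposure))


def normalized_correlation(n_frames: int, intervals_per_exposure: int, seed: int = 0) -> float:
    """Estimate Gamma / (<I_a><I_b>) for two pixels fed by the same speckle grain."""
    rng = random.Random(seed)
    sum_a = sum_b = sum_ab = 0.0
    for _ in range(n_frames):
        intensity = frame_intensity(rng, intervals_per_exposure)
        # Ideal 50:50 beam splitter: both pixels get half of the same intensity;
        # the factor 0.5 cancels after normalization.
        i_a = i_b = 0.5 * intensity
        sum_a += i_a
        sum_b += i_b
        sum_ab += i_a * i_b
    mean_a = sum_a / n_frames
    mean_b = sum_b / n_frames
    gamma = sum_ab / n_frames - mean_a * mean_b  # covariance of the two pixels
    return gamma / (mean_a * mean_b)


if __name__ == "__main__":
    for m in (1, 10):
        print(m, round(normalized_correlation(50_000, m, seed=1), 3))
```

With one interval per exposure the estimate comes out close to 1, and with ten it comes out close to 0.1. In this idealized model the correlated part of the signal, relative to the product of the mean intensities, shrinks as 1/M, which is one way to see why a longer coherence time (tighter filtering) or a DMD switched no faster than the detector response can pay off.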

1. Collecting $N_f$ synchronized pairs of frames from a sensor $D_a$, with resolution $N_x^{(a)} \times N_y^{(a)}$, and a sensor $D_b$, with resolution $N_x^{(b)} \times N_y^{(b)}$. Synchronizing two separate digital sensors entails technical complications, which can be overcome by using two disjoint parts of the same sensor [16]. The total acquisition time is

   $$T = N_f\,(\tau + \tau_d),$$

   where $\tau$ is the frame exposure time, which must be shorter than the coherence time of the impinging light to make full use of the information carried by intensity fluctuations, and $\tau_d$ is the dead time between subsequent frames, usually fixed by the sensors employed.

2. Each acquired frame is transferred to a computer for processing. This step can take place either progressively, while the subsequent frames are being captured, or at the end of the acquisition process.

3. The collected frames are used to estimate the correlation of intensity fluctuations of Equation (1) as

   $$\Gamma(\boldsymbol{\rho}_a,\boldsymbol{\rho}_b) \simeq \frac{1}{N_f}\sum_{k=1}^{N_f} I_a^{(k)}(\boldsymbol{\rho}_a)\, I_b^{(k)}(\boldsymbol{\rho}_b) - \left[\frac{1}{N_f}\sum_{k=1}^{N_f} I_a^{(k)}(\boldsymbol{\rho}_a)\right]\left[\frac{1}{N_f}\sum_{k=1}^{N_f} I_b^{(k)}(\boldsymbol{\rho}_b)\right],$$

   where $I_j^{(k)}(\boldsymbol{\rho}_j)$, with $j = a, b$, is the intensity measured in frame $k$ at the pixel of detector $D_j$ centered on the coordinate $\boldsymbol{\rho}_j$. In this way, information initially encoded in $N_f\,(N_a + N_b)$ numbers, with $N_j = N_x^{(j)} N_y^{(j)}$, is used to reconstruct a correlation function determined by $N_a N_b$ values.
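The acquisition and estimation steps above can be sketched as a running (progressive) estimator: each synchronized frame pair updates a few running sums and can then be discarded, and the correlation of intensity fluctuations is obtained as the mean of the products minus the product of the means. This is a minimal sketch, assuming frames are already flattened into lists of pixel intensities (n_a pixels from the first sensor, n_b from the second); the class and function names are hypothetical, and plain Python is used only for readability.

```python
from typing import List, Sequence


def acquisition_time(n_frames: int, exposure_s: float, dead_time_s: float) -> float:
    """Total acquisition time: each frame costs one exposure plus one dead time."""
    return n_frames * (exposure_s + dead_time_s)


class CorrelationAccumulator:
    """Running estimate of Gamma(rho_a, rho_b) = <I_a I_b> - <I_a><I_b>.

    Only running sums are stored, so frames can be processed as they arrive
    instead of being kept in memory until the end of the acquisition.
    """

    def __init__(self, n_a: int, n_b: int) -> None:
        self.n_frames = 0
        self.sum_a = [0.0] * n_a                           # sum_k I_a^(k)(rho_a)
        self.sum_b = [0.0] * n_b                           # sum_k I_b^(k)(rho_b)
        self.sum_ab = [[0.0] * n_b for _ in range(n_a)]    # sum_k I_a^(k) I_b^(k)

    def add_frame_pair(self, frame_a: Sequence[float], frame_b: Sequence[float]) -> None:
        """Update the sums with one synchronized pair of frames."""
        if len(frame_a) != len(self.sum_a) or len(frame_b) != len(self.sum_b):
            raise ValueError("frame size does not match sensor resolution")
        self.n_frames += 1
        for i, ia in enumerate(frame_a):
            self.sum_a[i] += ia
            row = self.sum_ab[i]
            for j, ib in enumerate(frame_b):
                row[j] += ia * ib                          # n_a * n_b multiply-adds per frame
        for j, ib in enumerate(frame_b):
            self.sum_b[j] += ib

    def gamma(self) -> List[List[float]]:
        """Return the n_a x n_b matrix of estimated intensity-fluctuation correlations."""
        if self.n_frames == 0:
            raise ValueError("no frames accumulated")
        n = self.n_frames
        mean_a = [s / n for s in self.sum_a]
        mean_b = [s / n for s in self.sum_b]
        return [
            [self.sum_ab[i][j] / n - mean_a[i] * mean_b[j] for j in range(len(mean_b))]
            for i in range(len(mean_a))
        ]


if __name__ == "__main__":
    acc = CorrelationAccumulator(n_a=2, n_b=2)
    acc.add_frame_pair([1, 0], [1, 0])  # the same pixel lights up on both sensors
    acc.add_frame_pair([0, 1], [0, 1])
    print(acc.gamma())  # [[0.25, -0.25], [-0.25, 0.25]]
```

The input grows as the sum of the two sensor sizes per frame, while the output and the per-frame work grow as their product; this inner loop is where hardware acceleration would be expected to matter most.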

3. Hardware Speedup: Advanced Sensors and Ultra-Fast Computing Platforms

3.1. SPAD Arrays as High-Resolution Time-Resolved Sensors

3.2. Computational Hardware Platform

4. Quantum and Classical Image-Processing Algorithms

4.1. Compressive Sensing

4.2. Plenoptic Tomography

4.3. Quantum Tomography and Quantum Fisher Information

5. Perspectives

- a compact single-lens plenoptic camera for 3D imaging, based on the photon-number correlations of a dim chaotic light source;

- an ultra-low-noise plenoptic device, based on the correlation properties of entangled photon pairs emitted by spontaneous parametric down-conversion (SPDC), enabling 3D imaging of low-absorbing samples at or below the shot-noise limit.

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SPAD | Single-Photon Avalanche Diode |
| QPI | Quantum Plenoptic Imaging |
| fps | frames per second |
| GPU | Graphics Processing Unit |
| CPI | Correlation Plenoptic Imaging |
| SNR | Signal-to-Noise Ratio |
| DOF | Depth of Field |
| EPR | Einstein–Podolsky–Rosen |
| FPGA | Field-Programmable Gate Array |
| CPU | Central Processing Unit |
| GI | Ghost Imaging |
| CS | Compressive Sensing |
| DCT | Discrete Cosine Transform |

References

- Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation. Sensors 2009, 9, 568.

- Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics 2011, 3, 128.

- Hansard, M.; Lee, S.; Choi, O.; Horaud, R. Time of Flight Cameras: Principles, Methods, and Applications; Springer: Berlin, Germany, 2013.

- Mertz, J. Introduction to Optical Microscopy; Cambridge University Press: Cambridge, UK, 2019.

- Prevedel, R.; Yoon, Y.-G.; Hoffmann, M.; Pak, N.; Wetzstein, G.; Kato, S.; Schrödel, T.; Raskar, R.; Zimmer, M.; Boyden, E.S.; et al. Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat. Methods 2014, 11, 727.

- Hall, E.M.; Thurow, B.S.; Guildenbecher, D.R. Comparison of three-dimensional particle tracking and sizing using plenoptic imaging and digital in-line holography. Appl. Opt. 2016, 55, 6410–6420.

- Kim, M.K. Principles and techniques of digital holographic microscopy. SPIE Rev. 2010, 1, 018005.

- Zheng, G.; Horstmeyer, R.; Yang, C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat. Photonics 2013, 7, 739.

- Albota, M.A.; Aull, B.F.; Fouche, D.G.; Heinrichs, R.M.; Kocher, D.G.; Marino, R.M.; Mooney, J.G.; Newbury, N.R.; O’Brien, M.E.; Player, B.E.; et al. Three-dimensional imaging laser radars with Geiger-mode avalanche photodiode arrays. Lincoln Lab. J. 2002, 13, 351–370.

- Marino, R.M.; Davis, W.R. Jigsaw: A foliage-penetrating 3D imaging laser radar system. Lincoln Lab. J. 2005, 15, 23–36.

- Hansard, M.; Lee, S.; Choi, O.; Horaud, R.P. Time-of-Flight Cameras: Principles, Methods and Applications; Springer Science & Business Media: Berlin, Germany, 2012.

- McCarthy, A.; Krichel, N.J.; Gemmell, N.R.; Ren, X.; Tanner, M.G.; Dorenbos, S.N.; Zwiller, V.; Hadfield, R.H.; Buller, G.S. Kilometer-range, high resolution depth imaging via 1560 nm wavelength single-photon detection. Opt. Express 2013, 21, 8904–8915.

- McCarthy, A.; Ren, X.; Della Frera, A.; Gemmell, N.R.; Krichel, N.J.; Scarcella, C.; Ruggeri, A.; Tosi, A.; Buller, G.S. Kilometer-range depth imaging at 1550 nm wavelength using an InGaAs/InP single-photon avalanche diode detector. Opt. Express 2013, 21, 22098–22113.

- Altmann, Y.; McLaughlin, S.; Padgett, M.J.; Goyal, V.K.; Hero, A.O.; Faccio, D. Quantum-inspired computational imaging. Science 2018, 361, eaat2298.

- Mertz, J. Introduction to Optical Microscopy; Roberts and Company Publishers: Englewood, CO, USA, 2010; Volume 138.

- Pepe, F.V.; Di Lena, F.; Mazzilli, A.; Garuccio, A.; Scarcelli, G.; D’Angelo, M. Diffraction-limited plenoptic imaging with correlated light. Phys. Rev. Lett. 2017, 119, 243602.

- Zappa, F.; Tisa, S.; Tosi, A.; Cova, S. Principles and features of single-photon avalanche diode arrays. Sens. Actuators A 2007, 140, 103–112.

- Charbon, E. Single-photon imaging in complementary metal oxide semiconductor processes. Philos. Trans. R. Soc. A 2014, 372, 20130100.

- Antolovic, I.M.; Bruschini, C.; Charbon, E. Dynamic range extension for photon counting arrays. Opt. Express 2018, 26, 22234.

- Veerappan, C.; Charbon, E. A low dark count p-i-n diode based SPAD in CMOS technology. IEEE Trans. Electron Devices 2016, 63, 65.

- Antolovic, I.M.; Ulku, A.C.; Kizilkan, E.; Lindner, S.; Zanella, F.; Ferrini, R.; Schnieper, M.; Charbon, E.; Bruschini, C. Optical-stack optimization for improved SPAD photon detection efficiency. Proc. SPIE 2019, 10926, 359–365.

- Ulku, A.C.; Bruschini, C.; Antolovic, I.M.; Charbon, E.; Kuo, Y.; Ankri, R.; Weiss, S.; Michalet, X. A 512 × 512 SPAD image sensor with integrated gating for widefield FLIM. IEEE J. Sel. Top. Quantum Electron. 2019, 25, 6801212.

- Nguyen, A.H.; Pickering, M.; Lambert, A. The FPGA implementation of an image registration algorithm using binary images. J. Real Time Image Pr. 2016, 11, 799.

- Holloway, J.; Kannan, V.; Zhang, Y.; Chandler, D.M.; Sohoni, S. GPU Acceleration of the Most Apparent Distortion Image Quality Assessment Algorithm. J. Imaging 2018, 4, 111.

- Dadkhah, M.; Deen, M.J.; Shirani, S. Compressive Sensing Image Sensors-Hardware Implementation. Sensors 2013, 13, 4961.

- Chan, S.H.; Elgendy, O.A.; Wang, X. Images from Bits: Non-Iterative Image Reconstruction for Quanta Image Sensors. Sensors 2016, 16, 1961.

- Rontani, D.; Choi, D.; Chang, C.-Y.; Locquet, A.; Citrin, D.S. Compressive Sensing with Optical Chaos. Sci. Rep. 2016, 6, 35206.

- Gul, M.S.K.; Gunturk, B.K. Spatial and Angular Resolution Enhancement of Light Fields Using Convolutional Neural Networks. IEEE Trans. Image Process. 2018, 27, 2146.

- Motka, L.; Stoklasa, B.; D’Angelo, M.; Facchi, P.; Garuccio, A.; Hradil, Z.; Pascazio, S.; Pepe, F.V.; Teo, Y.S.; Rehacek, J.; et al. Optical resolution from Fisher information. EPJ Plus 2016, 131, 130.

- Řeháček, J.; Paúr, M.; Stoklasa, B.; Koutný, D.; Hradil, Z.; Sánchez-Soto, L.L. Intensity-based axial localization at the quantum limit. Phys. Rev. Lett. 2019, 123, 193601.

- QuantERA Call 2019. Available online: https://www.quantera.eu/calls-for-proposals/call-2019 (accessed on 22 March 2021).

- Raytrix. 3D Light Field Camera Technology. Available online: https://raytrix.de (accessed on 22 March 2021).

- D’Angelo, M.; Pepe, F.V.; Garuccio, A.; Scarcelli, G. Correlation Plenoptic Imaging. Phys. Rev. Lett. 2016, 116, 223602.

- Pepe, F.V.; Di Lena, F.; Garuccio, A.; Scarcelli, G.; D’Angelo, M. Correlation Plenoptic Imaging with Entangled Photons. Technologies 2016, 4, 17.

- Pepe, F.V.; Vaccarelli, O.; Garuccio, A.; Scarcelli, G.; D’Angelo, M. Exploring plenoptic properties of correlation imaging with chaotic light. J. Opt. 2017, 19, 114001.

- Di Lena, F.; Massaro, G.; Lupo, A.; Garuccio, A.; Pepe, F.V.; D’Angelo, M. Correlation plenoptic imaging between arbitrary planes. Opt. Express 2020, 28, 35857.

- Scagliola, A.; Di Lena, F.; Garuccio, A.; D’Angelo, M.; Pepe, F.V. Correlation plenoptic imaging for microscopy applications. Phys. Lett. A 2020, 384, 126472.

- D’Angelo, M.; Shih, Y.H. Quantum Imaging. Laser Phys. Lett. 2005, 2, 567–596.

- Gatti, A.; Brambilla, E.; Bache, M.; Lugiato, L.A. Ghost Imaging with Thermal Light: Comparing Entanglement and Classical Correlation. Phys. Rev. Lett. 2004, 93, 093602.

- Scala, G.; D’Angelo, M.; Garuccio, A.; Pascazio, S.; Pepe, F.V. Signal-to-noise properties of correlation plenoptic imaging with chaotic light. Phys. Rev. A 2019, 99, 053808.

- Brida, G.; Genovese, M.; Ruo Berchera, I. Experimental realization of sub-shot-noise quantum imaging. Nat. Photonics 2010, 4, 227.

- Ferri, F.; Magatti, D.; Lugiato, L.A.; Gatti, A. Differential Ghost Imaging. Phys. Rev. Lett. 2010, 104, 253603.

- Bruschini, C.; Homulle, H.; Antolovic, I.M.; Burri, S.; Charbon, E. Single-photon avalanche diode imagers in biophotonics: Review and outlook. Light Sci. Appl. 2019, 8, 87.

- Caccia, M.; Nardo, L.; Santoro, R.; Schaffhauser, D. Silicon photomultipliers and SPAD imagers in biophotonics: Advances and perspectives. Nucl. Instrum. Methods Phys. Res. A 2019, 926, 101–117.

- Ulku, A.; Ardelean, A.; Antolovic, M.; Weiss, S.; Charbon, E.; Bruschini, C.; Michalet, X. Wide-field time-gated SPAD imager for phasor-based FLIM applications. Methods Appl. Fluoresc. 2020, 8, 024002.

- Zanddizari, H.; Rajan, S.; Zarrabi, H. Increasing the quality of reconstructed signal in compressive sensing utilizing Kronecker technique. Biomed. Eng. Lett. 2018, 8, 239.

- Mertens, L.; Sonnleitner, M.; Leach, J.; Agnew, M.; Padgett, M.J. Image reconstruction from photon sparse data. Sci. Rep. 2017, 7, 42164.

- Katz, O.; Bromberg, Y.; Silberberg, Y. Compressive ghost imaging. Appl. Phys. Lett. 2009, 95, 131110.

- Jiying, L.; Jubo, Z.; Chuan, L.; Shisheng, H. High-quality quantum-imaging algorithm and experiment based on compressive sensing. Opt. Lett. 2010, 35, 1206–1208.

- Aßmann, M.; Bayer, M. Compressive adaptive computational ghost imaging. Sci. Rep. 2013, 3, 1545.

- Chen, Y.; Cheng, Z.; Fan, X.; Cheng, Y.; Liang, Z. Compressive sensing ghost imaging based on image gradient. Optik 2019, 182, 1021–1029.

- Liu, H.-C. Imaging reconstruction comparison of different ghost imaging algorithms. Sci. Rep. 2020, 10, 14626.

- Tibshirani, R. Regression shrinkage and selection via the lasso: A retrospective. J. R. Statist. Soc. B 2011, 73, 273–282.

- Řeháček, J.; Hradil, Z.; Zawisky, M.; Treimer, W.; Strobl, M. Maximum-likelihood absorption tomography. EPL 2002, 59, 694.

- Hradil, Z.; Rehacek, J.; Fiurasek, J.; Jezek, M. Maximum Likelihood Methods in Quantum Mechanics, in Quantum State Estimation. In Lecture Notes in Physics; Paris, M.G.A., Rehacek, J., Eds.; Springer: Berlin, Germany, 2004; pp. 59–112.

- Rehacek, J.; Hradil, Z.; Stoklasa, B.; Paur, M.; Grover, J.; Krzic, A.; Sanchez-Soto, L.L. Multiparameter quantum metrology of incoherent point sources: Towards realistic superresolution. Phys. Rev. A 2017, 96, 062107.

- Paur, M.; Stoklasa, B.; Hradil, Z.; Sanchez-Soto, L.L.; Rehacek, J. Achieving the ultimate optical resolution. Optica 2016, 3, 1144.

- Paur, M.; Stoklasa, B.; Grover, J.; Krzic, A.; Sanchez-Soto, L.L.; Hradil, Z.; Rehacek, J. Tempering Rayleigh’s curse with PSF shaping. Optica 2018, 5, 1177.

| Component | Jetson AGX Xavier |
|---|---|
| GPU | 512-core NVIDIA Volta™ GPU with 64 Tensor Cores |
| CPU | 8-core NVIDIA Carmel Arm®v8.2 64-bit CPU, 8 MB L2 + 4 MB L3 |
| Memory | 16 GB 256-bit LPDDR4x, 136.5 GB/s |
| PCIe | 1 × 8 + 1 × 4 + 1 × 2 + 2 × 1 (PCIe Gen4, Root Port & Endpoint) |
| DL Accelerator | 2× NVDLA Engines |
| Vision Accelerator | 7-Way VLIW Vision Processor |
| Connectivity | 10/100/1000 BASE-T Ethernet |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abbattista, C.; Amoruso, L.; Burri, S.; Charbon, E.; Di Lena, F.; Garuccio, A.; Giannella, D.; Hradil, Z.; Iacobellis, M.; Massaro, G.; et al. Towards Quantum 3D Imaging Devices. Appl. Sci. 2021, 11, 6414. https://doi.org/10.3390/app11146414
