Designing Reenacted Chatbots to Enhance Museum Experience

Abstract

1. Introduction

2. Background

2.1. Chatbot

2.2. Museum Experience

2.3. Chatbot in GLAMs (Galleries, Libraries, Archives, Museums)

3. Research Questions

- RQ1: What factors of the chatbot design influence the users’ interaction and museum experience?

- RQ2: What are the specific relationships between individuals’ learning styles and their museum experience?

4. Implementation

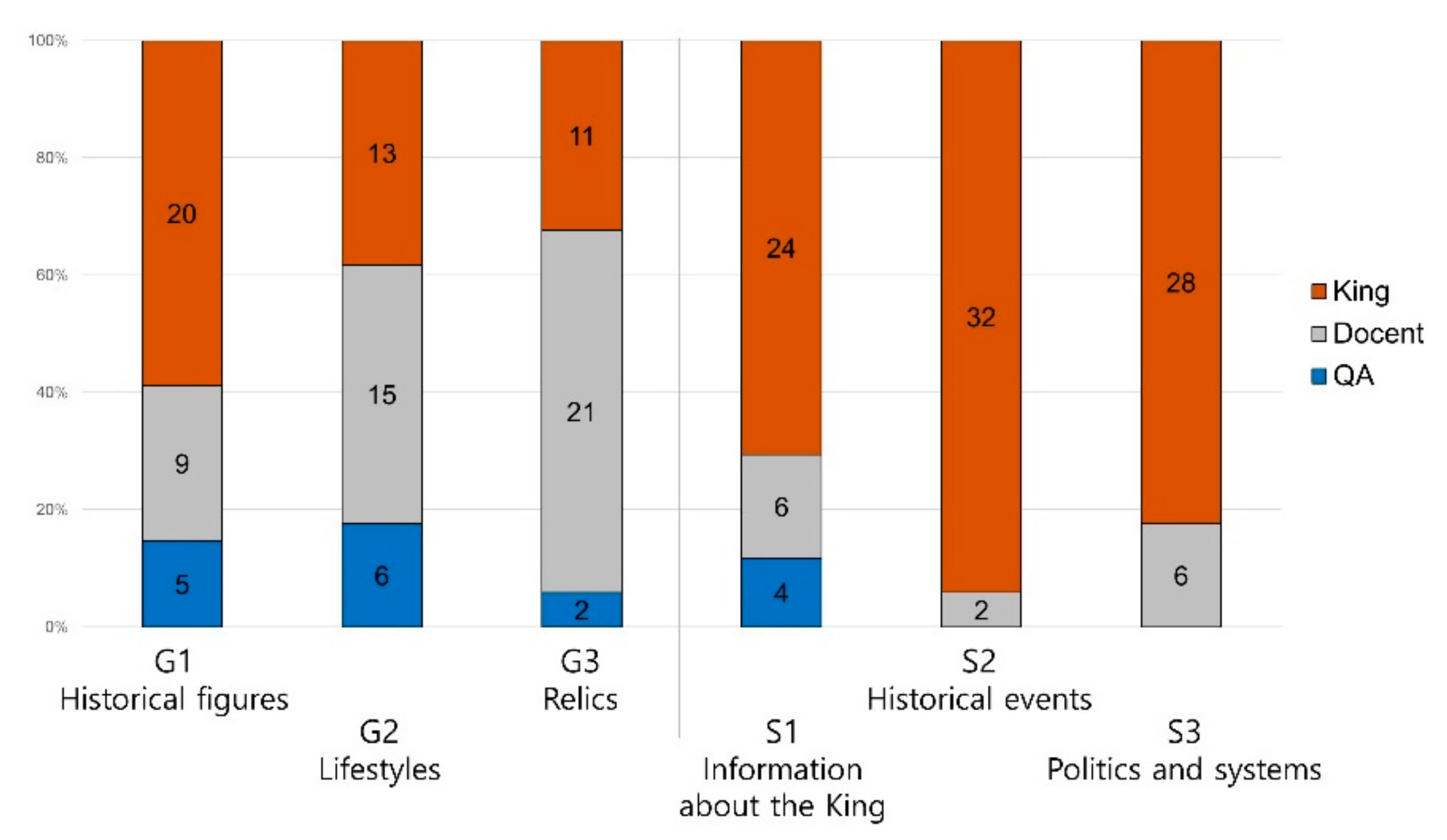

4.1. Content Design

4.2. Model Design

4.2.1. QA System

4.2.2. Docent

4.2.3. Historical Figure

5. Methodology

5.1. Participants’ Demographics

5.2. Measures

5.2.1. Kolb’s Learning Style

5.2.2. User Experience Questionnaire (UEQ)

5.2.3. Museum Experience Scale (MES)

5.3. Procedure

6. Analysis and Results

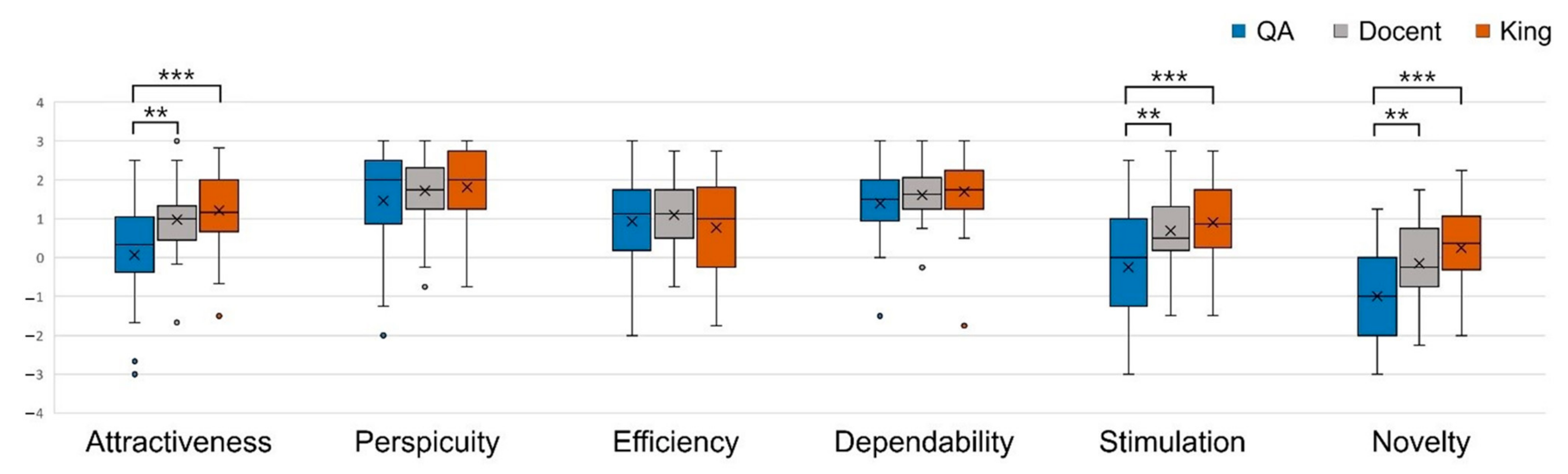

6.1. Chatbot Interaction: UEQ

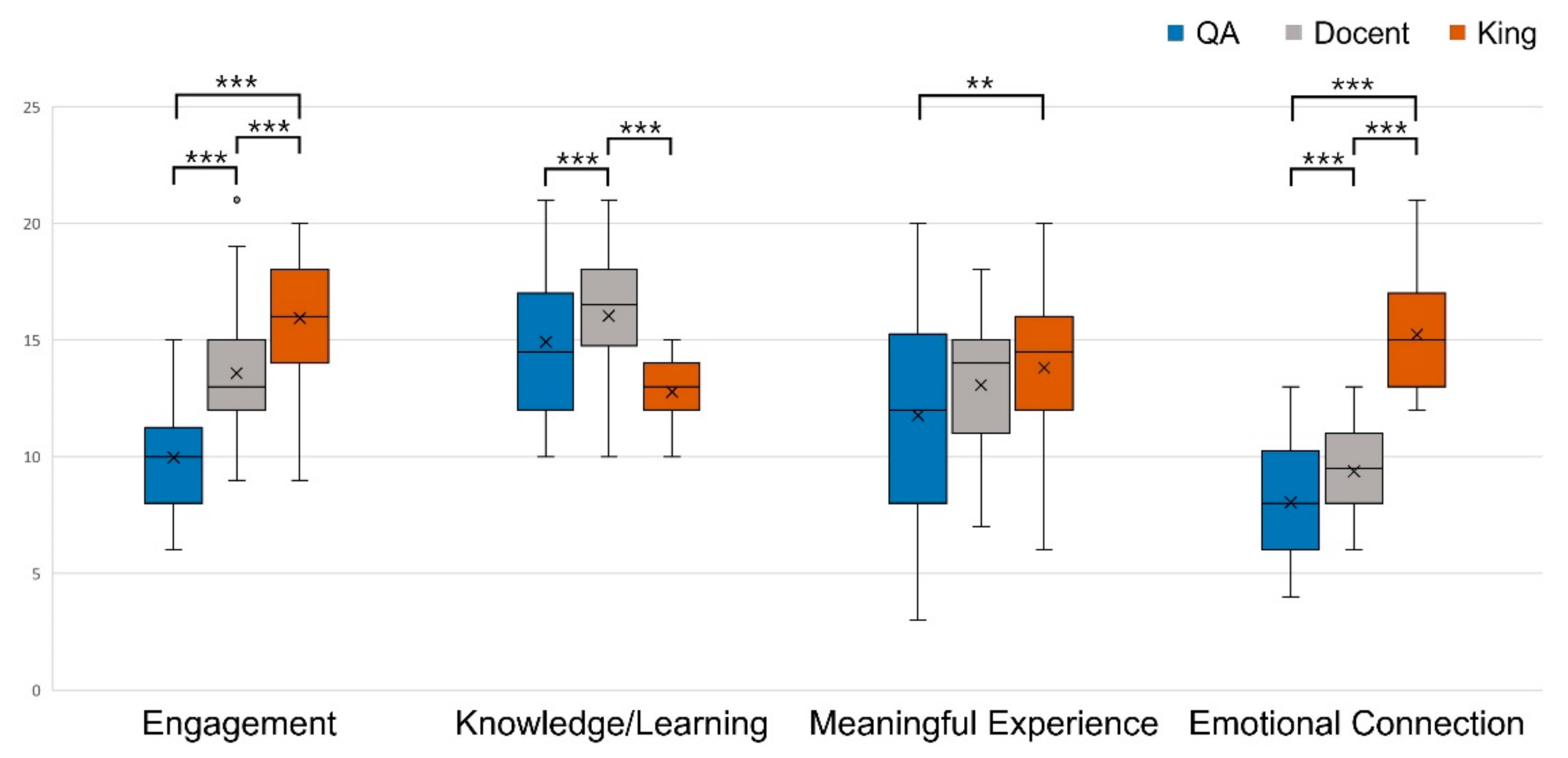

6.2. Museum Experience: MES

6.2.1. Chatbot

- Engagement:

- Knowledge/Learning:

- Meaningful Experience:

- Emotional Connection:

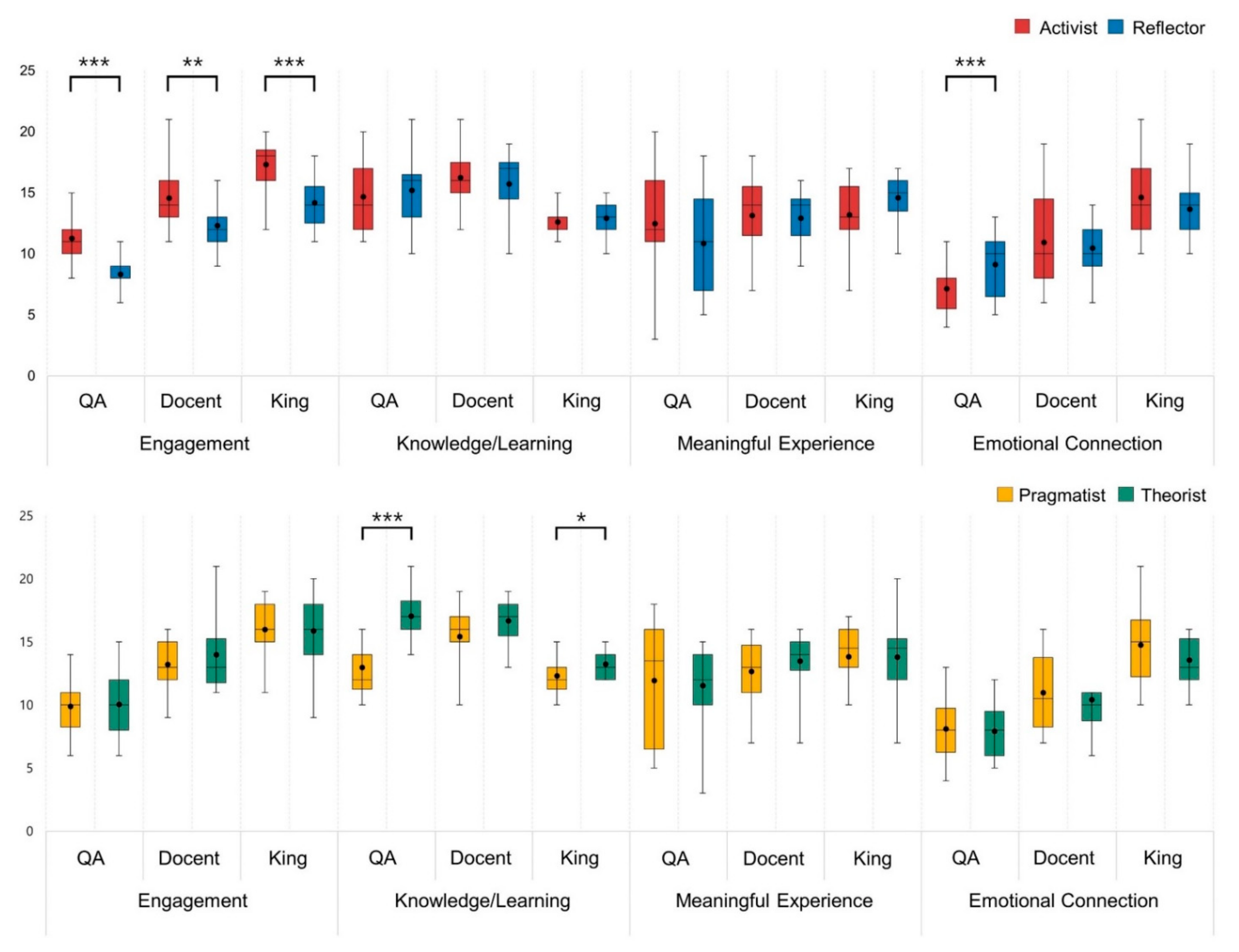

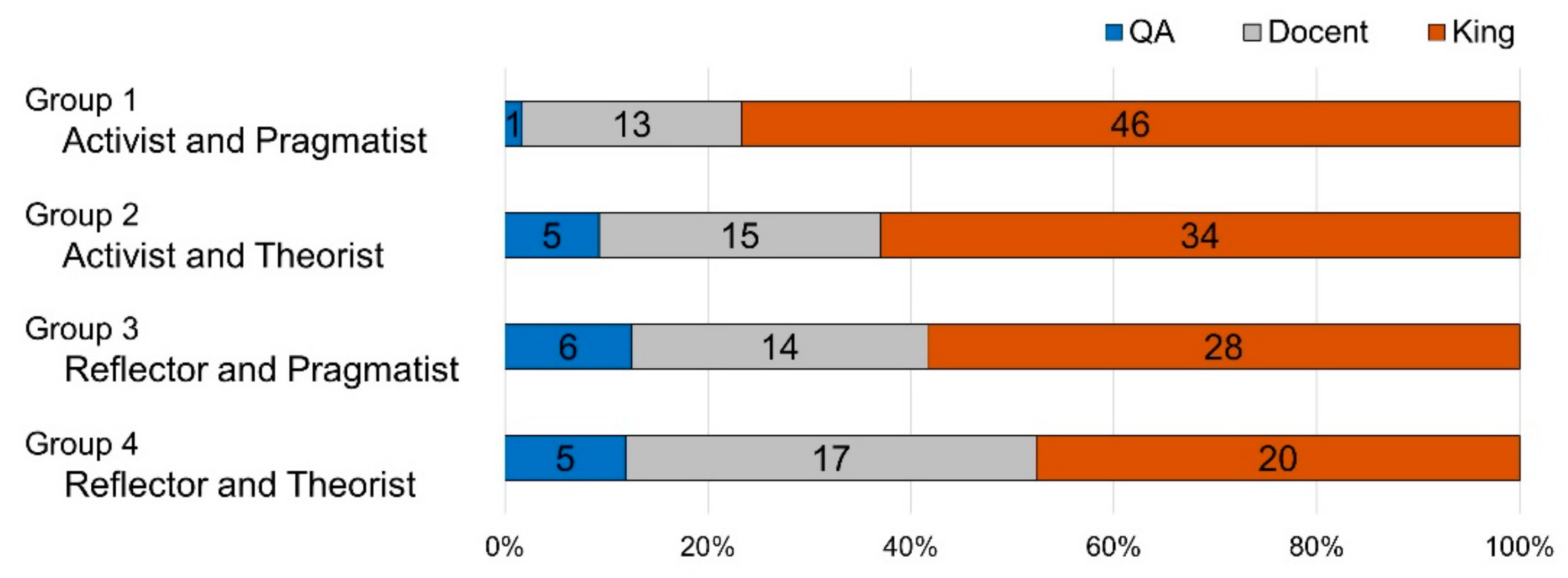

6.2.2. Chatbot and Learning Style

- Activist vs. Reflector:

- Pragmatist vs. Theorist:

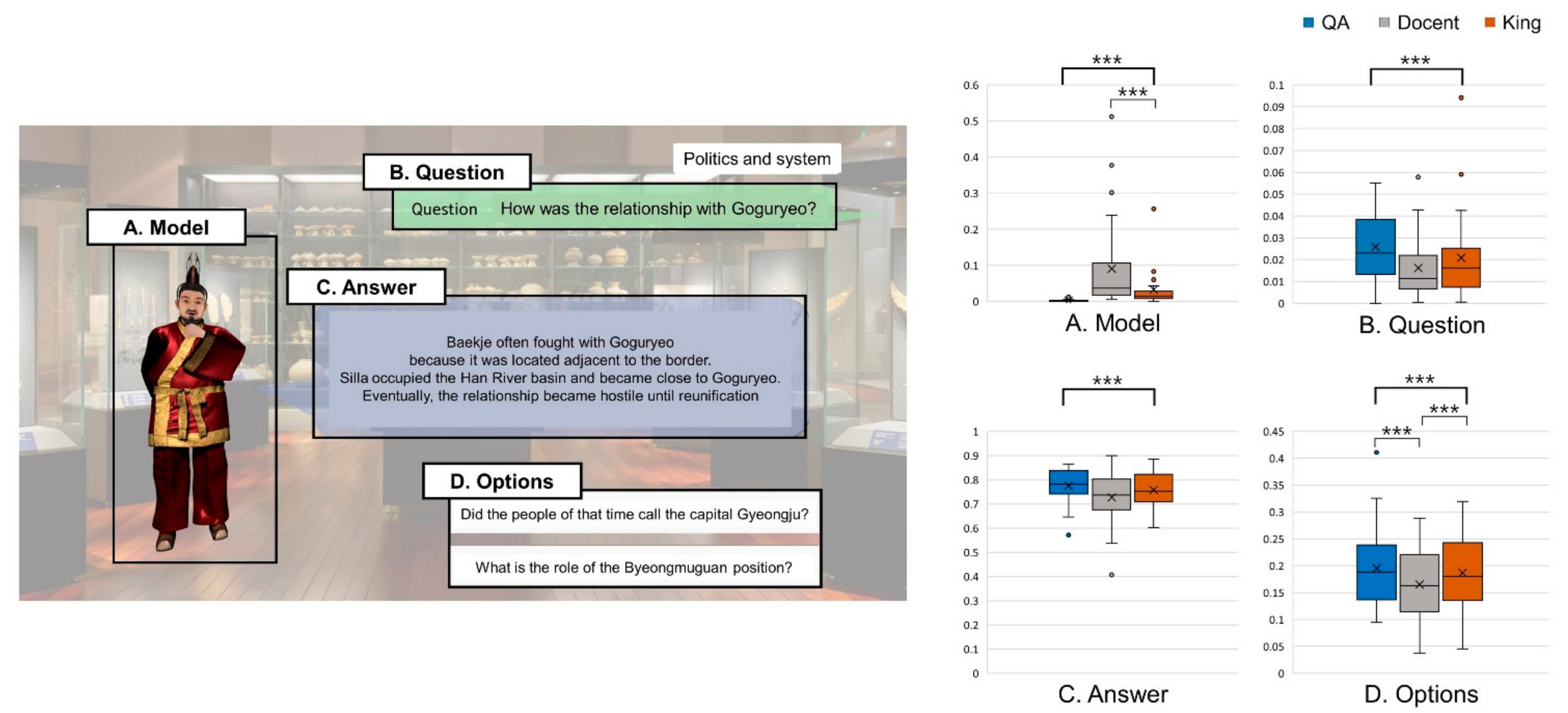

- Analysis with Gaze and Behavior Data:

7. Discussion

7.1. What User Type Best Matches the Chatbot Model?

7.2. User Behaviors while Interacting with the Chatbots

7.3. Concerns Regarding the Bias of Presenting a Historical Figure

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| General Knowledge (Kingdom of Silla) | Specific Knowledge (King Jinheung) |
| --- | --- |
| G1. Historical figures | S1. Information about the king |
| G2. Lifestyles | S2. Historical events |
| G3. Relics | S3. Politics and systems |

| Chatbot Model | Embodiment | Reenactment | Language Style (Point of View) | Language Style (Sentence Form) |
| --- | --- | --- | --- | --- |
| QA system | Not embodied | Not reenacted | Third-person point of view | Phrase |
| Docent | Embodied | Not reenacted | Third-person point of view | Modern sentence |
| Historical figure | Embodied | Reenacted | First-person point of view | Archaic sentence |
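The table above describes the three chatbot conditions as combinations of a few design attributes. The minimal Python sketch below encodes that table and lists which attributes change between two conditions, which makes the stepwise manipulation explicit: the docent differs from the QA system in embodiment and sentence form, and the historical figure additionally changes reenactment and point of view. The class, constant and function names are hypothetical illustrations, not the authors' implementation; only the attribute values come from the table.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ChatbotModel:
    """One chatbot condition; attribute values are taken from the model design table."""
    name: str
    embodied: bool
    reenacted: bool
    point_of_view: str   # "first-person" or "third-person"
    sentence_form: str   # "phrase", "modern sentence", or "archaic sentence"


# Hypothetical constants mirroring the three rows of the table.
QA_SYSTEM = ChatbotModel("QA system", False, False, "third-person", "phrase")
DOCENT = ChatbotModel("Docent", True, False, "third-person", "modern sentence")
HISTORICAL_FIGURE = ChatbotModel("Historical figure", True, True, "first-person", "archaic sentence")


def design_differences(a: ChatbotModel, b: ChatbotModel) -> list[str]:
    """Return the design attributes (ignoring the label) whose values differ between two models."""
    return [
        f.name
        for f in fields(ChatbotModel)
        if f.name != "name" and getattr(a, f.name) != getattr(b, f.name)
    ]


if __name__ == "__main__":
    print(design_differences(QA_SYSTEM, DOCENT))
    print(design_differences(DOCENT, HISTORICAL_FIGURE))
```

Running it prints `['embodied', 'sentence_form']` for the QA system versus the docent and `['reenacted', 'point_of_view', 'sentence_form']` for the docent versus the historical figure. A frozen dataclass is used so each condition is an immutable record that can be compared attribute by attribute.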

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Noh, Y.-G.; Hong, J.-H. Designing Reenacted Chatbots to Enhance Museum Experience. Appl. Sci. 2021, 11, 7420. https://doi.org/10.3390/app11167420
