Measuring Facial Illuminance with Smartphones and Mobile Devices

Abstract

Featured Application

1. Introduction

2. Materials and Methods

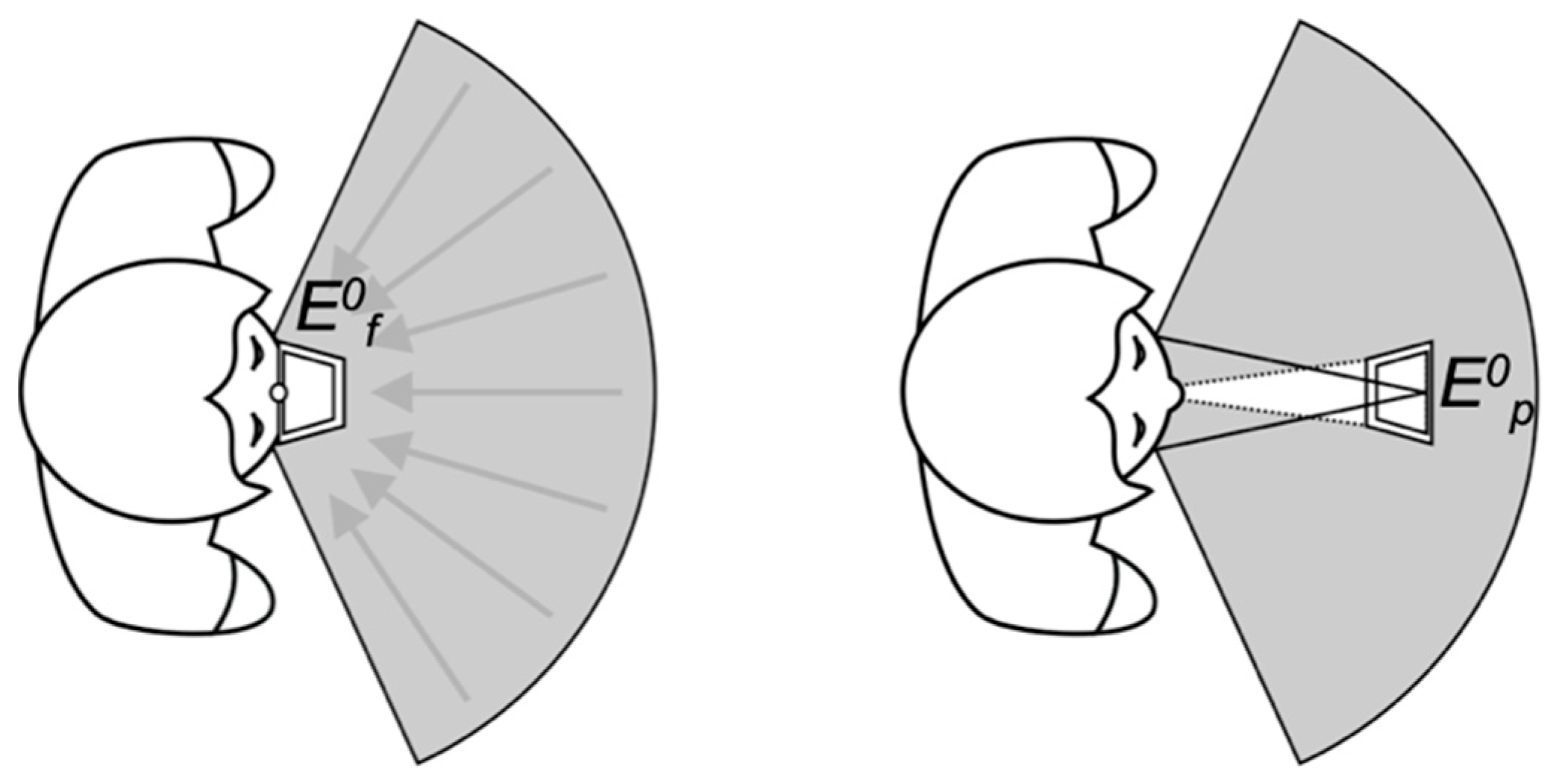

2.1. Quantification of Face Illuminance

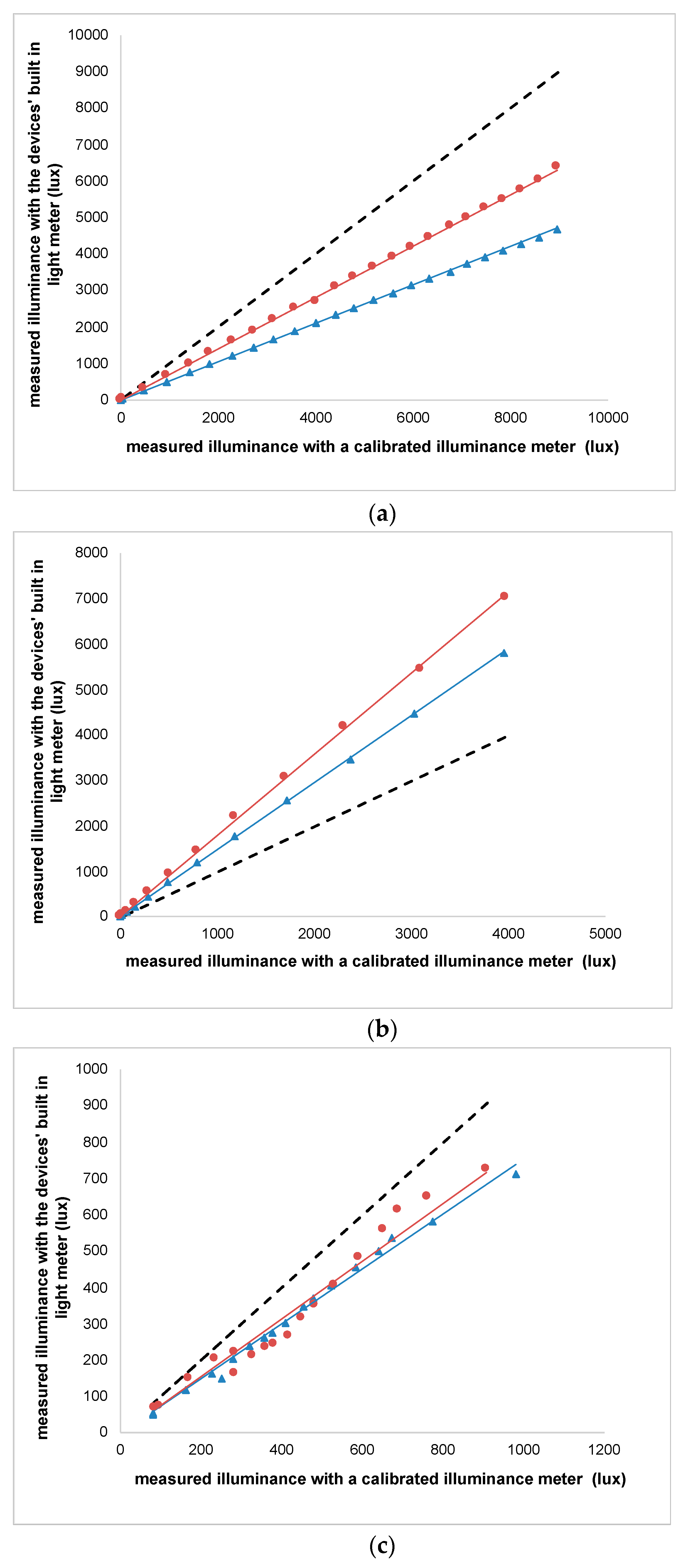

2.2. Calibration

2.3. Angular Selectivity of Device Ambient Light Sensors

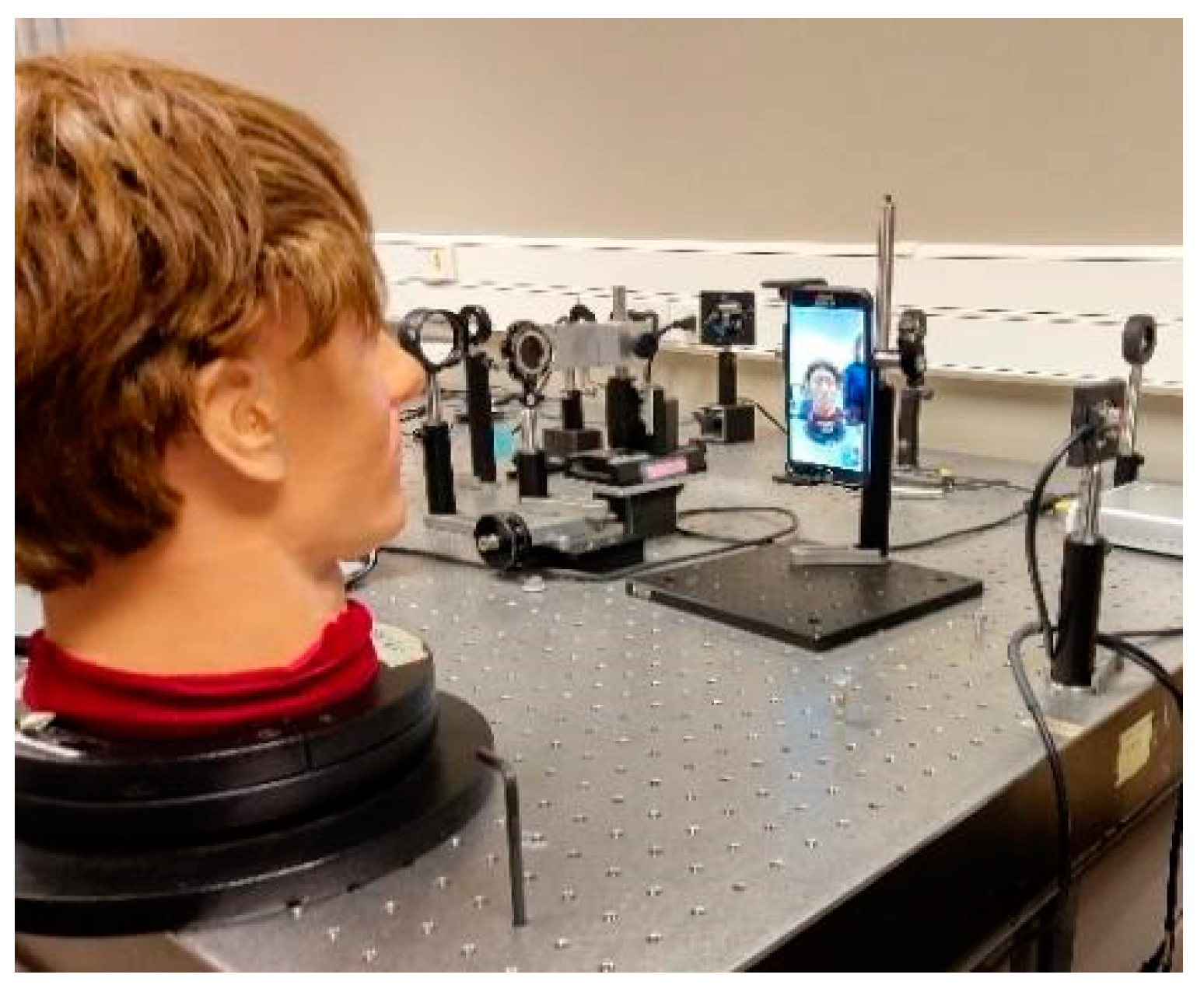

2.4. Measures of Face Illuminance Using the Camera

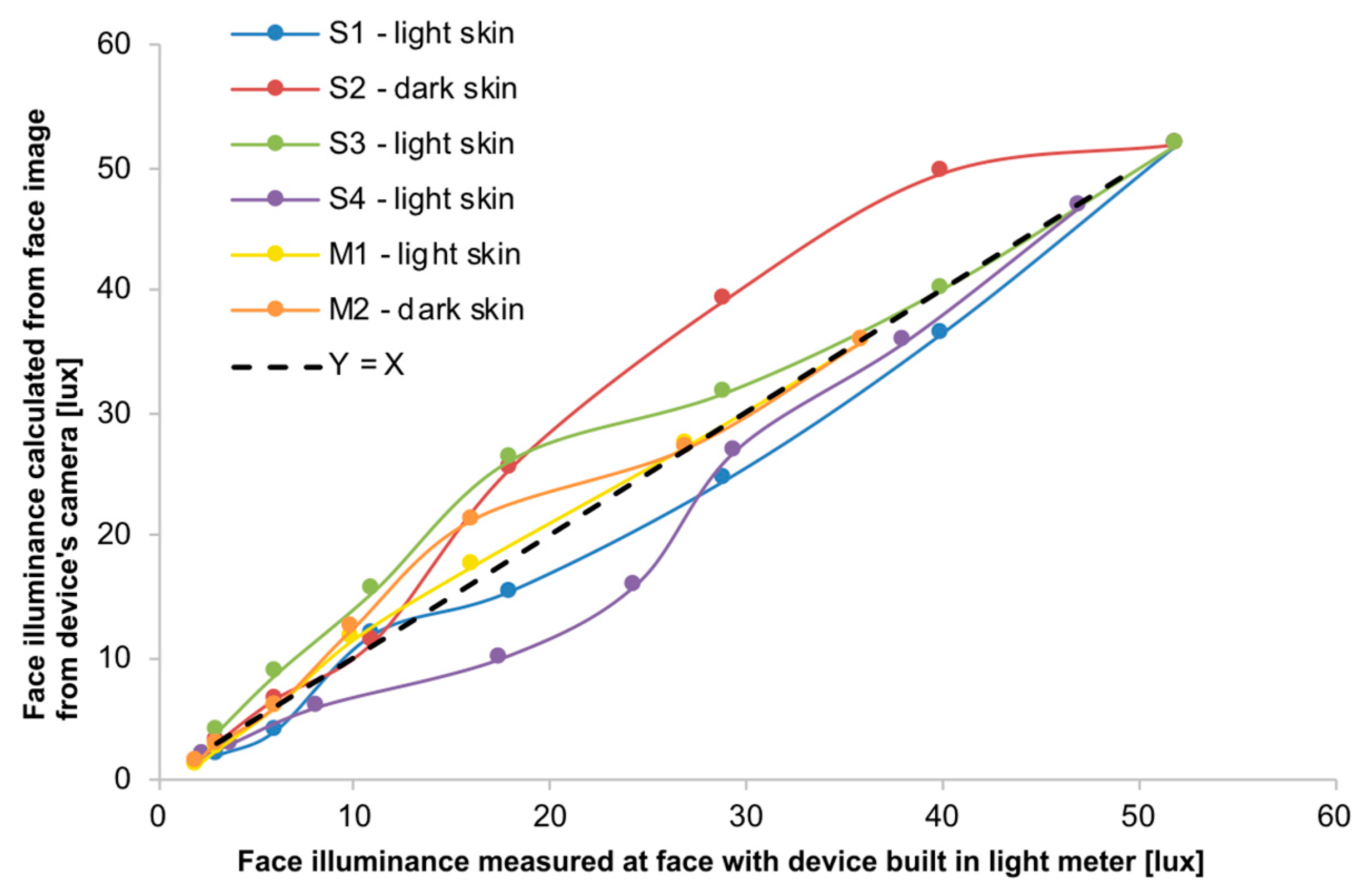

3. Results

4. Discussion

5. Conclusions

- (1) The illuminance reported by the light sensors built into the smartphones is highly linear and reveals a narrower spatial weighting than a full-hemifield lux meter.

- (2) A smartphone camera can be used to measure face illuminance in real time with an accuracy of around 80% once a one-time calibration has been performed using the device's built-in light sensor.
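The one-time calibration mentioned in conclusion (2) can be pictured as a single gain fit. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the camera yields one scalar signal per frame (a hypothetical exposure-normalized mean pixel value) that is proportional to face illuminance, and it fits the gain by least squares through the origin against paired readings from the built-in light sensor. All function names are illustrative.

```python
from typing import Sequence


def fit_gain_through_origin(camera_signal: Sequence[float],
                            sensor_lux: Sequence[float]) -> float:
    """Least-squares gain k for the no-intercept model sensor_lux ~ k * camera_signal.

    Assumption: the camera signal is proportional to illuminance, so a single
    gain (no offset) is enough. The source does not state the model used.
    """
    if len(camera_signal) != len(sensor_lux) or not camera_signal:
        raise ValueError("need equally sized, non-empty calibration samples")
    sxx = sum(s * s for s in camera_signal)
    if sxx == 0:
        raise ValueError("camera signal is all zeros; cannot fit a gain")
    sxy = sum(s * lux for s, lux in zip(camera_signal, sensor_lux))
    return sxy / sxx


def camera_to_lux(camera_signal: float, gain: float) -> float:
    """Convert a later camera reading to estimated illuminance in lux."""
    return gain * camera_signal


def relative_accuracy(estimate_lux: float, reference_lux: float) -> float:
    """1 minus the relative error; one possible reading of 'accuracy' (assumption)."""
    return 1.0 - abs(estimate_lux - reference_lux) / reference_lux


if __name__ == "__main__":
    # Made-up calibration pairs, for illustration only.
    gain = fit_gain_through_origin([10.0, 20.0, 40.0], [50.0, 100.0, 200.0])
    print(gain, camera_to_lux(30.0, gain))
```

Because the gain is fitted once and then reused, any later change in camera exposure settings would have to be normalized out before calling `camera_to_lux`; how the original work handles this is not shown in this section.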

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Device | Wide Angle Homogeneous Source | Small Angular Source | Office Environment |
|---|---|---|---|
| Galaxy Tab S2 | y = 0.7033x, R² = 0.9999 | y = 1.7879x, R² = 0.9997 | y = 0.7887x, R² = 0.9893 |
| Samsung S6 | y = 0.5247x, R² = 1 | y = 1.4747x, R² = 0.9999 | y = 0.7543x, R² = 0.9983 |
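The fits in the table above are straight lines through the origin, so each one can be inverted with a single division. The sketch below shows that inversion under a labeled assumption: that x is the reference lux-meter reading and y is the device's light-sensor reading. The table itself does not say which axis is which, so the mapping may need to be flipped. The dictionary keys and the function name are illustrative.

```python
# Slopes m of the fits y = m * x, copied from the table above.
# Keys are (device, lighting condition); the condition labels are shorthand.
FIT_SLOPES = {
    ("Galaxy Tab S2", "wide_angle"): 0.7033,
    ("Galaxy Tab S2", "small_source"): 1.7879,
    ("Galaxy Tab S2", "office"): 0.7887,
    ("Samsung S6", "wide_angle"): 0.5247,
    ("Samsung S6", "small_source"): 1.4747,
    ("Samsung S6", "office"): 0.7543,
}


def reference_lux(device: str, condition: str, device_reading: float) -> float:
    """Invert y = m * x to estimate the reference illuminance x.

    Assumption: y is the device reading and x is the lux-meter reading.
    """
    slope = FIT_SLOPES[(device, condition)]
    return device_reading / slope


if __name__ == "__main__":
    print(reference_lux("Galaxy Tab S2", "wide_angle", 703.3))
```

Under that assumption, a Galaxy Tab S2 reading of 703.3 with the wide-angle source corresponds to roughly 1000 lux on the reference meter. The spread of slopes across lighting conditions for the same device is why a single correction factor cannot be reused across source geometries.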

| Subject | Slope and Regression Coefficient |
|---|---|
| S1 | y = 0.9439x, R² = 0.9870 |
| S2 | y = 1.1478x, R² = 0.9458 |
| S3 | y = 1.0554x, R² = 0.9488 |
| S4 | y = 0.9073x, R² = 0.9506 |
| M1 | y = 1.0218x, R² = 0.9952 |
| M2 | y = 1.0482x, R² = 0.9714 |
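A quick way to read the per-subject table is to ask how far each slope sits from the ideal value of 1. The sketch below only does arithmetic on the slopes listed above. It is not the authors' accuracy metric, and the function name is illustrative.

```python
from statistics import mean

# Slopes copied from the per-subject table above.
SUBJECT_SLOPES = {
    "S1": 0.9439,
    "S2": 1.1478,
    "S3": 1.0554,
    "S4": 0.9073,
    "M1": 1.0218,
    "M2": 1.0482,
}


def summarize_slopes(slopes: dict[str, float]) -> tuple[float, str, float]:
    """Return (mean slope, label farthest from 1, its absolute deviation from 1)."""
    worst = max(slopes, key=lambda label: abs(slopes[label] - 1.0))
    return mean(slopes.values()), worst, abs(slopes[worst] - 1.0)


if __name__ == "__main__":
    avg, worst, deviation = summarize_slopes(SUBJECT_SLOPES)
    print(f"mean slope {avg:.4f}; largest deviation {deviation:.4f} ({worst})")
```

On these six values the mean slope is about 1.02, and the entry farthest from 1 is S2, at about 0.15.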

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Salmerón-Campillo, R.M.; Bradley, A.; Jaskulski, M.; López-Gil, N. Measuring Facial Illuminance with Smartphones and Mobile Devices. Appl. Sci. 2021, 11, 7566. https://doi.org/10.3390/app11167566
