Quantifying Perceived Facial Asymmetry to Enhance Physician–Patient Communications

Abstract

1. Introduction

2. Materials and Methods

2.1. Acquisition of 3D Facial Images and Pre-Process

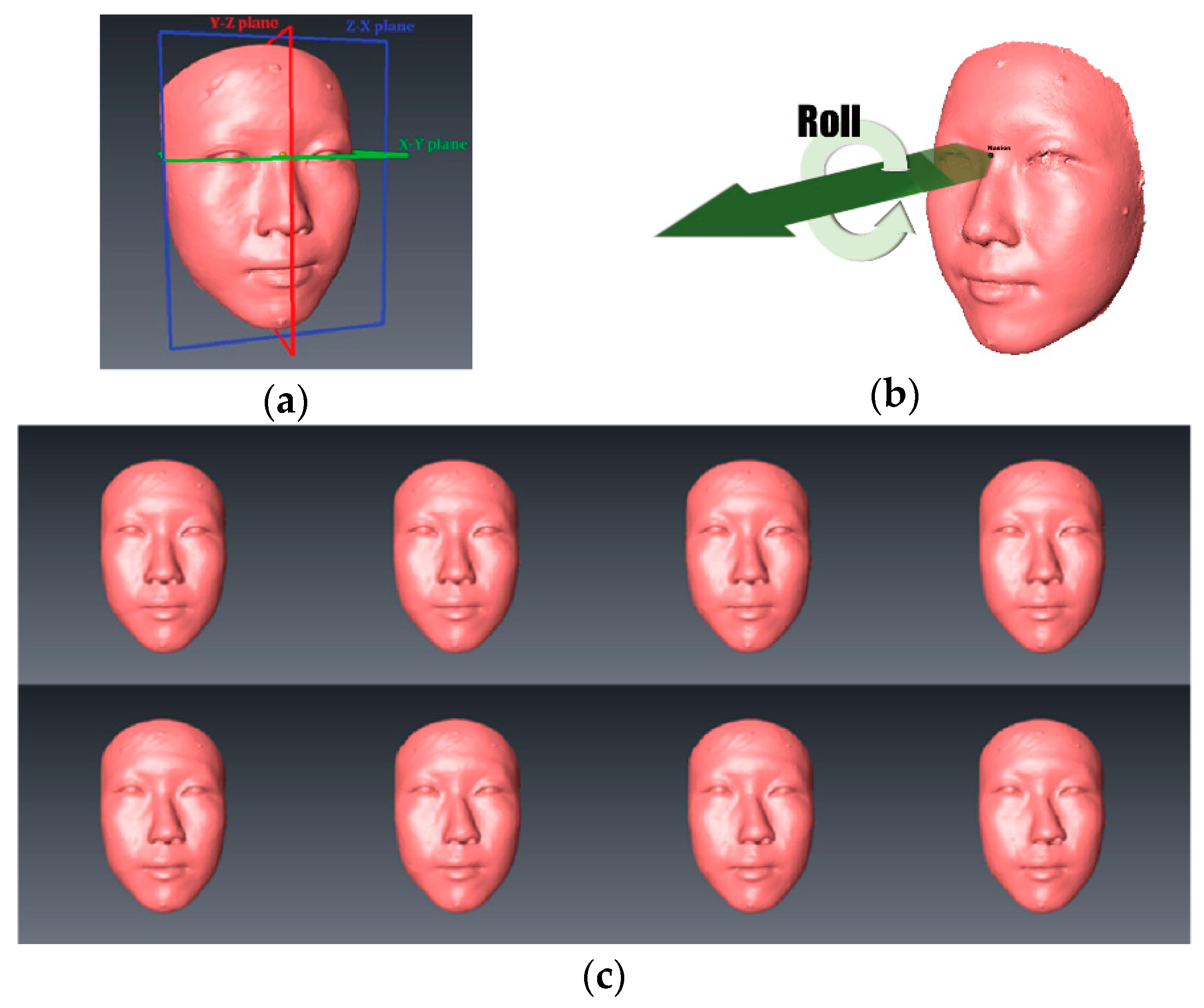

2.2. Facial Landmarks and the Corresponding 3D Coordinate System

2.3. Visual Questionnaire Surveys

2.4. Asymmetry Classifier

2.5. Overall Asymmetry Index (oAI) and Asymmetry Quadruple

3. Results

4. Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Landmarks | ID (i) | L(i) | Definition |
|---|---|---|---|
| Glabella | 1 | G | Most prominent midline point between eyebrows |
| Nasion | 2 | N | Deepest point of nasal bridge |
| Pronasale | 3 | Prn | Most protruded point of the apex nasi |
| Subnasale | 4 | Sn | Midpoint of angle at columella base |
| Labial (superius) | 5 | Ls | Midpoint of the upper vermilion line |
| Labial (inferius) | 6 | Li | Midpoint of the lower vermilion line |
| Stomion | 7 | Sto | Midpoint of the mouth orifice |
| Menton | 8 | Me | Most inferior point on chin |
| Exocanthion (left and right) | 9 * | Ex | Outer commissure of the eye fissure |
| Endocanthion (left and right) | 10 * | En | Inner commissure of the eye fissure |
| Alar curvature (left and right) | 11 * | Al | Most lateral point on alar contour |
| Cheilion (left and right) | 12 * | Ch | Point located at lateral labial commissure |
| Zygion (left and right) | 13 * | Zy | The most lateral extents of the zygomatic arches |
| Gonion (left and right) | 14 * | Go | The inferior aspect of the mandible at its most acute point |

| | Nose (n): θ (°) | Nose (n) | Chin (c): θ (°) | Chin (c) |
|---|---|---|---|---|
| 01 | 0.5 | 0.35 | 0.56 | 1.14 |
| 03 | 1.5 | 1.06 | 1.68 | 3.42 |
| 05 | 2.5 | 1.77 | 2.81 | 5.70 |
| 07 | 3.5 | 2.48 | 3.93 | 7.97 |
| 09 | 4.5 | 3.19 | 5.05 | 10.25 |
| 11 | 5.5 | 3.90 | 6.17 | 12.52 |
| 13 | 6.5 | 4.60 | 7.29 | 14.78 |
| 15 | 7.5 | 5.31 | 8.42 | 17.04 |

| 0.1572 | −0.9305 | 0.1164 | 0.1997 | −0.4651 | −0.6731 | 0.1136 | 0.2616 | −0.6093 | −1.0658 | −0.6998 | −0.3851 | −1.0148 | −0.4178 |
| 1.0199 | 1.0967 | −0.8636 | −0.8685 | 0.3984 | −1.3503 | −1.8537 | −1.8547 | 0.9310 | 0.0100 | 0.0306 | −2.0512 | 1.0864 | −1.0609 |
| −1.1120 | 0.1507 | −1.4890 | −2.3591 | −0.1408 | 0.9426 | 0.7155 | 1.1847 | −0.4150 | −0.4978 | −2.3130 | 0.2910 | −1.2981 | 0.2095 |
| 0.6825 | 0.0520 | −0.1678 | −1.4755 | 0.5084 | −0.6806 | 0.1095 | −0.5615 | 0.1468 | 0.4848 | −0.7662 | 0.4254 | 0.4785 | −0.5332 |
| 0.0814 | 0.2938 | −1.8753 | −2.6104 | −0.4818 | −2.1416 | −1.1659 | −1.1171 | 0.4416 | 0.4628 | −2.5384 | −2.0989 | 0.3153 | −2.1255 |
| −0.4233 | −0.3582 | 0.3842 | 0.4808 | 0.1527 | −1.2400 | −0.5632 | −1.4579 | −0.1468 | −0.3758 | 0.1567 | −0.7575 | 0.7093 | −1.7727 |
| 0.0191 | 0.2685 | 3.0053 | 2.9216 | 0.3100 | 0.3137 | −0.4040 | −1.0149 | 0.8525 | 0.5245 | 2.1812 | −0.4173 | −0.3188 | 0.1759 |
| 0.2811 | 0.9569 | 0.2252 | 0.0875 | 0.4633 | −0.2850 | −0.6547 | −0.4486 | 0.6601 | 0.1391 | 0.7050 | 0.9174 | 0.0115 | 0.1564 |
| 1.1533 | 0.9079 | 0.4449 | 0.8827 | 0.7849 | −0.9138 | −1.5615 | −0.8682 | 0.2965 | −0.2506 | 0.5101 | −1.6634 | 0.1175 | −0.9619 |
| 0.1950 | 0.1871 | 0.8557 | 0.6553 | −0.1134 | 0.0447 | 0.0944 | 0.4108 | −0.3876 | −0.7262 | 1.6998 | 0.3650 | −0.0469 | −0.0631 |

| 1.0615 | 0.4904 | 2.1091 | −1.0133 | −1.5437 | −0.6059 | 2.7268 | −1.1142 | −3.4308 | 1.6700 |
| 1.1757 | 4.4862 | −3.4968 | −1.2510 | −4.3407 | −4.1067 | −2.1687 | −1.2679 | −2.1432 | 0.6080 |

| Hidden | −1.2863 | −1.8825 | 1.2718 | −0.9064 | −0.3507 | −0.4427 | −0.3438 | 0.7215 | 0.8118 | 2.0438 |
| Output | 0.2226 | 2.4557 |

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L(i) | G | N | Prn | Sn | Ls | Li | Sto | Me | Ex | En | Al | Ch | Zy | Go |
| RIi (%) | 5.1 | 5.7 | 8.2 | 11.1 | 4.1 | 7.8 | 6.4 | 8.6 | 5.1 | 5.4 | 11.7 | 8.8 | 5.3 | 6.8 |
| Ranking | 13 | 9 | 5 | 2 | 14 | 6 | 8 | 4 | 12 | 10 | 1 | 3 | 11 | 7 |
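The relative-importance row above is the kind of quantity that can be derived from a network's connection weights. The surviving text does not state the formula, so the following is only a minimal sketch of one common formulation of Garson's connection-weight method for a single output neuron. The function name, the matrix orientation (one row per hidden neuron, one column per input), and the toy weights are assumptions made for illustration; how the two output neurons in the weight listing would be combined is not stated in the source and is not attempted here.

```python
def garson_relative_importance(w_input_hidden, w_hidden_output):
    """Relative importance (%) of each input for one output neuron.

    w_input_hidden: list of rows, one row per hidden neuron,
                    one column per input (assumed orientation).
    w_hidden_output: one weight per hidden neuron.
    """
    n_inputs = len(w_input_hidden[0])
    scores = [0.0] * n_inputs
    for row, v in zip(w_input_hidden, w_hidden_output):
        row_total = sum(abs(w) for w in row)
        for i, w in enumerate(row):
            # Share of this hidden neuron's incoming weight that belongs to
            # input i, scaled by how strongly the neuron drives the output.
            scores[i] += abs(w) / row_total * abs(v)
    total = sum(scores)
    return [100.0 * s / total for s in scores]


# Toy weights (hypothetical, NOT the values listed in this article):
# 2 hidden neurons, 2 inputs, 1 output.
toy_w_ih = [[1.0, 1.0], [1.0, 3.0]]
toy_w_ho = [1.0, 1.0]
ri = garson_relative_importance(toy_w_ih, toy_w_ho)
print([round(x, 1) for x in ri])  # [37.5, 62.5]
```

Because every score is normalized by the grand total, the returned percentages always sum to 100, which is also true (up to rounding) of the RIi row in the table.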

| (0.8182, , 0.88) | (2.2582, , 0.46) |
| (1.5815, , 0.74) | (3.0214, , 0.45) |
| (2.3462, , 0.53) | (3.7861, , 0.52) |
| (3.1108, , 0.51) | (4.5507, , 0.65) |
| (3.8749, , 0.73) | (5.3148, , 0.77) |
| (4.6382, , 0.86) | (6.0782, , 0.70) |
| (5.4006, , 0.83) | (6.8405, , 0.76) |
| (6.1618, PA, 0.93) | (7.6018, , 0.93) |
| (1.1772, , 0.80) | (2.6179, , 0.35) |
| (1.9404, , 0.79) | (3.3811, , 0.42) |
| (2.7051, , 0.51) | (4.1459, , 0.37) |
| (3.4697, , 0.53) | (4.9105, , 0.43) |
| (4.2338, , 0.60) | (5.6746, , 0.74) |
| (4.9972, , 0.73) | (6.4379, , 0.80) |
| (5.7595, , 0.91) | (7.2003, , 0.87) |
| (6.5208, , 0.92) | (7.9615, , 0.88) |
| (1.5375, , 0.66) | (2.9771, , 0.42) |
| (2.3007, , 0.46) | (3.7403, , 0.41) |
| (3.0654, , 0.50) | (4.5050, , 0.48) |
| (3.8300, , 0.53) | (5.2697, , 0.67) |
| (4.5941, , 0.53) | (6.0338, , 0.61) |
| (5.3575, , 0.87) | (6.7971, , 0.69) |
| (6.1199, , 0.91) | (7.5595, , 0.90) |
| (6.8811, , 0.91) | (8.3207, , 0.90) |
| (1.8979, , 0.54) | (3.3356, , 0.61) |
| (2.6612, , 0.56) | (4.0988, , 0.58) |
| (3.4259, , 0.43) | (4.8635, , 0.58) |
| (4.1905, , 0.51) | (5.6281, , 0.58) |
| (4.9546, , 0.52) | (6.3922, , 0.81) |
| (5.7179, , 0.82) | (7.1556, , 0.78) |
| (6.4803, , 0.86) | (7.9180, , 0.86) |
| (7.2415, , 0.89) | (8.6792, , 0.91) |

| | = 01 | = 03 | = 05 | = 07 | = 09 | = 11 | = 13 | = 15 |
|---|---|---|---|---|---|---|---|---|
| = 01 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 03 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 05 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 07 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 09 | PAN | PAN | PAN | PA | PA | PA | PA | PA |
| = 11 | PA | PAN | PAN | PAN | PA | PA | PA | PA |
| = 13 | PN | PAN | PA | PA | PA | PA | PA | PA |
| = 15 | PA | PA | PA | PA | PA | PA | PA | PA |

| PN | PAN | PA |
|---|---|---|
| 0.35 | 0.46 * | 0.19 |
| 0.33 | 0.32 | 0.35 * |
| 0.42 * | 0.21 | 0.36 |
| 0.15 | 0.43 * | 0.42 |
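In each row of the table above, the asterisk sits on the largest of the three values, which is consistent with assigning the row to whichever of PN, PAN, or PA scores highest. The sketch below reproduces that selection; reading the values as per-class scores and the asterisk as an arg-max marker is an assumption, since the table's caption and row labels did not survive extraction, and `top_class` is a hypothetical helper name.

```python
CLASSES = ("PN", "PAN", "PA")

# Rows copied from the table above, in column order PN, PAN, PA.
rows = [
    (0.35, 0.46, 0.19),
    (0.33, 0.32, 0.35),
    (0.42, 0.21, 0.36),
    (0.15, 0.43, 0.42),
]


def top_class(values, classes=CLASSES):
    """Return (label, value) for the largest entry in one row."""
    best = max(range(len(values)), key=lambda k: values[k])
    return classes[best], values[best]


picked = [top_class(r) for r in rows]
for row, (label, value) in zip(rows, picked):
    print(row, "->", label, value)
```

The selected labels (PAN, PA, PN, PAN) coincide with the starred cells. The second and fourth rows are decided by margins of 0.02 and 0.01, so a hard label hides how close those cases are.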

| | = 01 | = 03 | = 05 | = 07 | = 09 | = 11 | = 13 | = 15 |
|---|---|---|---|---|---|---|---|---|
| = 01 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 03 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 05 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 07 | PN | PN | PAN | PAN | PA | PA | PA | PA |
| = 09 | PN | PAN | PAN | PA | PA | PA | PA | PA |
| = 11 | PN | PAN | PAN | PA | PA | PA | PA | PA |
| = 13 | PA | PAN | PA | PA | PA | PA | PA | PA |
| = 15 | PA | PA | PA | PA | PA | PA | PA | PA |
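The grid directly above and the earlier grid with the same row and column labels differ in only a few cells. A short, hedged sketch of how the two can be compared cell by cell follows. What each grid represents (for example, survey outcome versus classifier output) is not stated in the surviving text, so the names `first_grid` and `second_grid` are neutral placeholders, and the row and column levels are identified only by their numeric labels.

```python
LEVELS = ["01", "03", "05", "07", "09", "11", "13", "15"]


def parse(rows):
    """Split each whitespace-separated row into a list of class labels."""
    return [r.split() for r in rows]


common = ["PN PN PAN PAN PA PA PA PA"] * 4   # rows 01, 03, 05, 07 are identical
last = ["PA PA PA PA PA PA PA PA"]            # row 15 is identical

# Earlier grid in the document.
first_grid = parse(common + [
    "PAN PAN PAN PA PA PA PA PA",   # row 09
    "PA PAN PAN PAN PA PA PA PA",   # row 11
    "PN PAN PA PA PA PA PA PA",     # row 13
] + last)

# Grid directly above this example.
second_grid = parse(common + [
    "PN PAN PAN PA PA PA PA PA",    # row 09
    "PN PAN PAN PA PA PA PA PA",    # row 11
    "PA PAN PA PA PA PA PA PA",     # row 13
] + last)

# (row level, column level, label in first grid, label in second grid)
mismatches = [
    (LEVELS[r], LEVELS[c], first_grid[r][c], second_grid[r][c])
    for r in range(8) for c in range(8)
    if first_grid[r][c] != second_grid[r][c]
]
agreement = 1 - len(mismatches) / 64

print(agreement)   # 0.9375
for m in mismatches:
    print(m)
```

The two grids agree on 60 of 64 cells. All four disagreements fall in rows 09 to 13, and three of them are in the first column.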

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wan, S.-Y.; Tsai, P.-Y.; Lo, L.-J. Quantifying Perceived Facial Asymmetry to Enhance Physician–Patient Communications. Appl. Sci. 2021, 11, 8398. https://doi.org/10.3390/app11188398
