In this section, the development of the ML model for scream detection in burning sites is discussed.

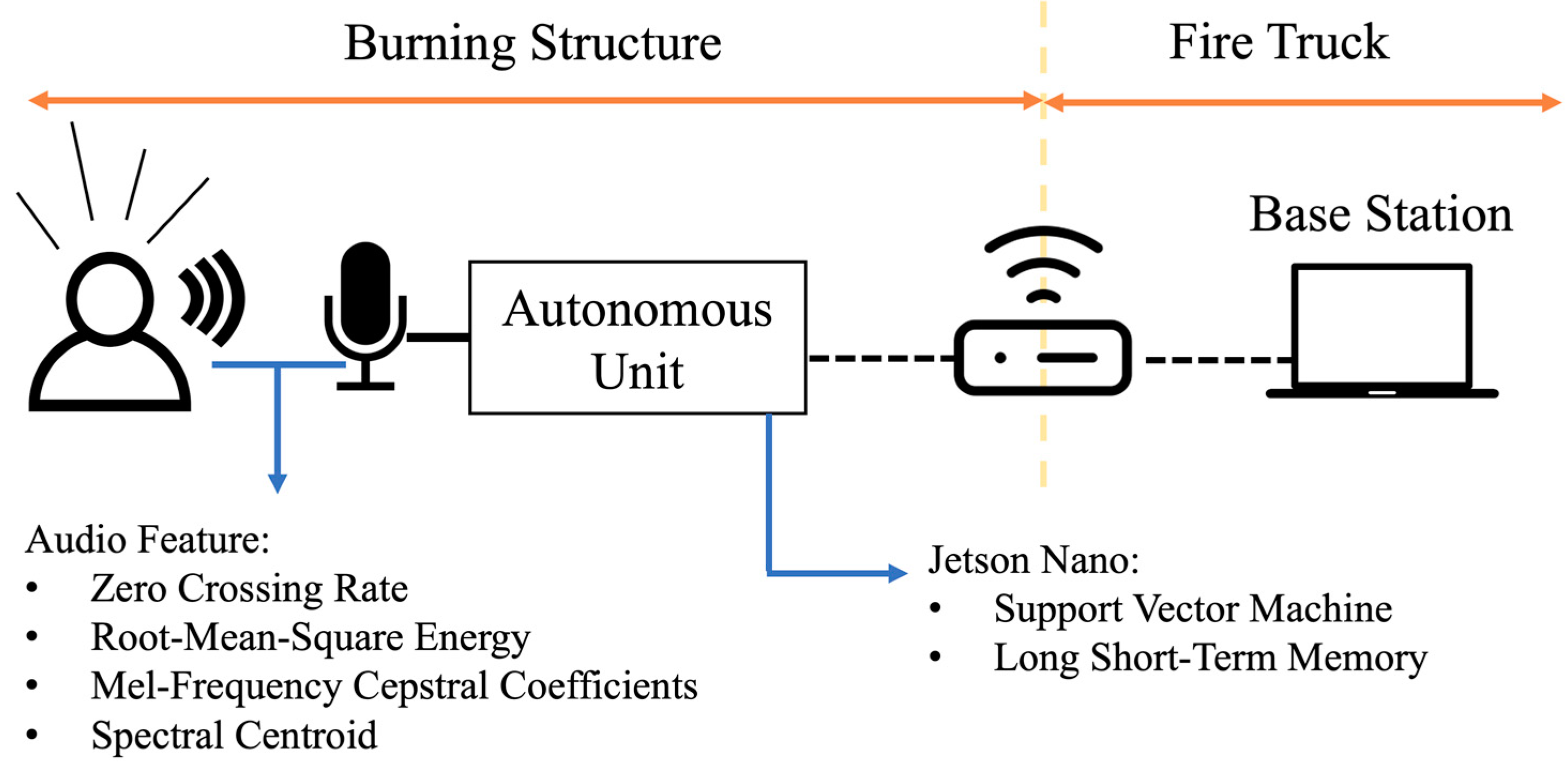

Figure 3 shows the architectural building blocks of the framework for preparing the model. We discuss below how the custom dataset used in this application was created. Raw audio files from the custom dataset go through an audio pre-processing block, followed by an audio feature extraction block that extracts the necessary features. The feature data are then split into three sets: ‘Training’, ‘Validation’, and ‘Testing’. Lastly, the ML classifier is trained and tested to produce the classification. To build an efficient model for classifying screams in the AESV, we evaluated SVM and LSTM networks as ML classifiers.

3.1. Custom Dataset

Due to the unavailability of a dataset focused on audio events appearing in burning sites, we developed our own custom dataset by collecting audio samples from multiple audio-based datasets. We considered five types of audio event that may appear in a fire scenario. These are the five classes of our dataset, labeled ‘scream’, ‘glass breaking’, ‘background’, ‘alarms’, and ‘conversations’, where ‘scream’ is our class of interest for this specific application. The ‘scream’ category contains both male and female screams. Audio samples of screams and glass breaking were collected from the MIVIA audio events dataset [42]. MIVIA also covers another surveillance event, gunshots; however, we excluded this event from our custom dataset because it is less relevant to burning-site noise. Audio samples for the background class were collected from CHiME [43]. This class includes audio recordings of human speakers, television, and household appliances in a domestic environment. Alarm samples were collected from the open-access ‘freesound’ [44] online dataset, which contains audio recordings of fire alarms, emergency alarms, sirens, and smoke detector alarms. Alarms and screams both have high pitch and high intensity (loudness); therefore, we included alarms as a class so that the model learns to differentiate between these two audio events. Lastly, we collected human conversation audio samples (recorded on the telephone and in a hallway, office, and canteen) from CHiME and the ‘Sound Privacy’ dataset [45]. The intention behind adding this class was to prevent the ML model operating in the AESV from mistaking normal conversation, from either the firefighters or people outside the building, for people still trapped inside. These collected samples are clean files with no added background noise.

Table 1 lists the number of collected audio files for each category and their source. These audio files have different durations ranging from 1 s to 3 s.

To mimic an actual fire scene, we added complex background noise (burning sounds, fire sounds, sirens, telephone ringing) to the clean dataset to create a noise-added dataset. These noise files were added to the clean signal files at nine different signal-to-noise ratio (SNR) levels (−10 dB, −5 dB, 0 dB, 5 dB, 10 dB, 15 dB, 20 dB, 25 dB, 30 dB). Here, SNR is the ratio of the power of the clean file to the power of the noise file. A higher SNR corresponds to a cleaner audio signal, and a lower SNR corresponds to a noisier audio signal. Varying the SNR level also covers the case of audio events occurring at various distances: an audio file with a higher SNR sounds like a source that is closer to the recording device, whereas an audio file with a lower SNR sounds like a source that is farther from it. We included different SNR conditions to develop a model that is robust to noise.

To create a noise-added file, we first randomly chose a background noise file and trimmed it to the length of the clean file. Then, the power is calculated for both the clean and the noise file. From these power values, the scale factor for the desired SNR level (in dB) is calculated using Equation (1).

Here, the two power terms in Equation (1) refer to the power of the clean and noise signals, respectively. The noise is then superimposed on the clean file using Equation (2).

Background noise was added to every file in the clean dataset at each of the nine SNR levels. The noise addition was done in MATLAB.
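The noise-mixing procedure can be illustrated with a minimal Python sketch. It is not the MATLAB code used in this work, and it assumes the conventional definition in which the noise is scaled by sqrt(P_clean / (P_noise · 10^(SNR/10))) before being added to the clean signal; the function name `mix_at_snr`, the random choice of the noise segment, and the tiling of short noise files are illustrative assumptions.

```python
import numpy as np

# The nine SNR levels (in dB) listed in the text.
SNR_LEVELS_DB = [-10, -5, 0, 5, 10, 15, 20, 25, 30]


def mix_at_snr(clean, noise, snr_db, rng=None):
    """Superimpose `noise` on `clean` so that the result has the target SNR (dB).

    Hypothetical helper: the segment selection and tiling are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Assumption: if the noise file is shorter than the clean file, repeat it.
    if len(noise) < len(clean):
        reps = int(np.ceil(len(clean) / len(noise)))
        noise = np.tile(noise, reps)

    # Trim the noise to the length of the clean file (random start position).
    start = rng.integers(0, len(noise) - len(clean) + 1)
    noise = noise[start:start + len(clean)]

    # Average power of each signal.
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(noise ** 2)

    # Choose the scale so that 10*log10(p_clean / (scale**2 * p_noise)) == snr_db.
    scale = np.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return clean + scale * noise
```

For example, calling `mix_at_snr(clean, noise, -10)` would yield a mixture in which the scaled noise carries ten times the power of the clean signal.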

Table 1 shows the final counts of noise added samples for each class.

Figure 4 shows the spectrograms of three audio samples from the ‘scream’ class in our custom dataset. The left spectrogram corresponds to a clean scream file (SNR 30 dB). The middle and right spectrograms represent the noise-added scream file at SNR of 5 dB and −10 dB, respectively. As can be seen from Figure 4, the spectral information is corrupted noticeably at 5 dB and even more so at −10 dB, especially at higher frequencies, making the scream detection task challenging under low SNR conditions.

3.2. Data Visualization with t-SNE Analysis

The t-distributed stochastic neighbor embedding (t-SNE) is an unsupervised, non-linear technique mainly used for high-dimensional data visualization [46]. The t-SNE algorithm optimizes a similarity measure between instances in the high- and low-dimensional spaces. Our input data are high dimensional, with sixteen dimensions. To visualize how the data samples cluster in the custom dataset, we applied t-SNE to it. To generate the plot, we used two components for 2-dimensional plotting, a perplexity of 50, and a learning rate of 100.
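As an illustration of these settings, the following sketch runs t-SNE with scikit-learn's `TSNE` class using two components, a perplexity of 50, and a learning rate of 100. The text does not state which t-SNE implementation was used, so the library choice is an assumption, and the data here are synthetic placeholders (five Gaussian blobs in sixteen dimensions) rather than the custom dataset.

```python
import numpy as np
from sklearn.manifold import TSNE

# Synthetic stand-in for the 16-dimensional feature vectors (NOT the real data):
# five Gaussian blobs, one per class.
rng = np.random.default_rng(0)
n_per_class, n_features, n_classes = 60, 16, 5
centers = rng.normal(scale=5.0, size=(n_classes, n_features))
X = np.vstack([c + rng.normal(size=(n_per_class, n_features)) for c in centers])
y = np.repeat(np.arange(n_classes), n_per_class)  # class labels 0..4

# Parameter values taken from the text; random_state is added for repeatability.
tsne = TSNE(n_components=2, perplexity=50, learning_rate=100, random_state=0)
embedding = tsne.fit_transform(X)  # shape: (n_samples, 2)
```

The two columns of `embedding` can then be drawn as a scatter plot colored by `y` to inspect how well the classes separate.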

Figure 5 shows the t-SNE plot obtained with the parameter settings mentioned above. Five different clusters can be seen in the plot, corresponding to the five classes of our dataset. The classes are labeled as follows: ‘scream’ as zero, ‘glass breaking’ as one, ‘background’ as two, ‘alarms’ as three, and ‘conversation’ as four. The clusters are separated from each other, apart from the edge samples. For instance, some of the ‘glass breaking’ samples overlap with the ‘scream’ samples, which are shown in red. Under low SNR conditions, the high level of noise makes the scream and glass breaking signals sound somewhat similar. Similar observations can be made for the ‘scream’ and ‘alarms’ audio samples. Interestingly, the ‘scream’ audio samples do not overlap with the ‘conversation’ and ‘background’ samples.

3.3. Audio Feature Extraction

Extracting features from audio files plays a crucial role in the classification process. For the proposed research, the goal is to develop a custom set of dominant features that can separate the classes reliably.

The feature extraction process for raw audio files includes windowing with an appropriate frame size, applying the short-time Fourier transform, and choosing an appropriate overlap and window function so that as much information as possible is retained while spectral leakage is reduced [47]. We used a sampling frequency of 16 kHz, a frame size of 512 samples (32 ms), and a 50% overlap (256 samples or 16 ms) between frames. A total of 16 features were extracted per 32 ms frame. The Python library ‘Librosa’ [48] was used to extract these features and store them in a comma-separated (.csv) file for use in the subsequent classification process. The following section discusses the different features used in this research work.
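The framing parameters above can be made concrete with a short NumPy sketch. It is not the Librosa pipeline used in this work: it only shows how a 16 kHz signal is cut into 512-sample frames with a 256-sample hop, and how a per-frame zero-crossing rate and a Hann-windowed magnitude spectrum could be computed. The function names, the choice of the Hann window, and the absence of edge padding are assumptions made for illustration.

```python
import numpy as np

SR = 16_000   # sampling frequency in Hz (from the text)
FRAME = 512   # frame size in samples, i.e. 32 ms at 16 kHz
HOP = 256     # hop in samples, i.e. 50% overlap (16 ms)


def frame_signal(y, frame_length=FRAME, hop_length=HOP):
    """Slice a 1-D signal into overlapping frames: (n_frames, frame_length).

    Assumes len(y) >= frame_length; trailing samples that do not fill a
    whole frame are dropped (no padding).
    """
    n_frames = 1 + (len(y) - frame_length) // hop_length
    starts = hop_length * np.arange(n_frames)[:, None]
    return y[starts + np.arange(frame_length)[None, :]]


def short_time_features(y):
    """Return per-frame zero-crossing rate and magnitude spectrum."""
    frames = frame_signal(y)

    # Zero-crossing rate: fraction of adjacent sample pairs whose sign differs.
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)

    # A tapered window (Hann here, as an assumption) reduces spectral leakage.
    window = np.hanning(FRAME)
    magnitude = np.abs(np.fft.rfft(frames * window, axis=1))
    return zcr, magnitude
```

With these settings, a one-second clip produces 61 frames, each with 257 frequency bins spaced 31.25 Hz apart.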

We also looked into two important features of scream, ‘pitch’ and ‘loudness’. However, the library function we used for feature extraction is unable to extract pitch and loudness correctly for low SNR conditions (−10 dB, −5 dB, 0 dB). Therefore, we decided not to use these features in our feature set.

Table 2 lists the features used in our proposed model, with a description and the number of each.

3.5. Classifier

The features extracted from the training set are then fed to the classifier to classify the audio source into the classes ‘scream’, ‘glass breaking’, ‘alarms’, ‘conversation’, and ‘background’. According to previous research on scream detection, machine learning and deep learning architectures have proven efficient. We examined the performance of support vector machines (SVM) and long short-term memory (LSTM) networks for our scream classification model. SVM was selected based on the previous research discussed in Section 2.3 and the review paper in [49], which suggests that SVM has outperformed neural networks in scream detection. Additionally, SVM is a much simpler model, which makes it suitable for implementation on the AESV [34]. Since this application deals with audio, which is time-series data, LSTM networks are a good fit because of their excellent performance in recurrent classification of time series. The research work presented in [53] shows that the LSTM network has outperformed DNN and convolutional neural networks (CNN) in scream detection in noisy environments. This is similar to our case, since fire emergency scenes tend to be extremely noisy. We experimented with and evaluated these two classifiers to achieve high scream classification accuracy.

3.5.1. Support Vector Machine

Support vector machine (SVM) is a supervised machine learning technique based on statistical learning theory, introduced by Cortes and Vapnik in 1995 [54]. The primary mechanism of SVM is to look for a hyperplane in an N-dimensional space that distinctly classifies the data points. The hyperplane acts as a decision boundary and is chosen to maximize the margin to the nearest data points of each class, which are called support vectors. Previous research work on scream detection using SVM reports a high detection rate in noisy environments. SVM can be extended to multi-class classification [55]; thus, it is a suitable classifier for our multi-class problem. This classifier also handles non-linear data efficiently by applying different types of kernels, such as linear, polynomial, radial basis function (RBF), and sigmoid kernels.

To feed the SVM model, all the features extracted per frame, except for ZCR, were averaged over the entire audio file. For ZCR, the average of the highest 10% of values over each audio file was calculated. This resulted in an input shape of (15,147, 16) for SVM classification. This step is required to reduce the number of features and thereby avoid overfitting. For this work, we used the built-in SVM model from the ‘scikit-learn’ library [56] for Python. We conducted several experiments to determine optimized hyperparameter values for the SVM classifier. The hyperparameters are:

Kernel type: ‘linear’, ‘rbf’, ‘polynomial’, and ‘sigmoid.’

Regularization parameter (C): For large values of C, the optimization will choose a smaller-margin hyperplane. Conversely, a very small value of C will cause the optimizer to look for a larger-margin separating hyperplane, even if that hyperplane misclassifies more points [57].

Degree: Degree of the polynomial kernel.

Gamma (γ): This is the kernel coefficient. For higher gamma values, the SVM tries to fit the training data set exactly [57].
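A search over these hyperparameters could be set up as in the following sketch, which uses scikit-learn's `SVC` and `GridSearchCV`. The candidate values for C, degree, and gamma, the 3-fold cross-validation, and the synthetic five-class data are all illustrative assumptions and are not the settings or data used in this work. Note that scikit-learn names the polynomial kernel `'poly'`.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic 5-class, 16-feature data standing in for the real feature table.
X, y = make_classification(
    n_samples=150, n_features=16, n_informative=8,
    n_classes=5, n_clusters_per_class=1, random_state=0,
)

# Hypothetical candidate values; degree only applies to the polynomial kernel,
# and gamma is not used by the linear kernel.
param_grid = [
    {"kernel": ["linear"], "C": [0.1, 1, 10]},
    {"kernel": ["rbf", "sigmoid"], "C": [0.1, 1, 10], "gamma": ["scale", 0.01]},
    {"kernel": ["poly"], "C": [0.1, 1, 10], "gamma": ["scale", 0.01],
     "degree": [2, 3]},
]

search = GridSearchCV(SVC(), param_grid, cv=3, scoring="accuracy")
search.fit(X, y)

best_params = search.best_params_   # best kernel/C/gamma/degree combination
best_score = search.best_score_     # mean cross-validated accuracy
```

Splitting the grid into one dictionary per kernel family avoids fitting combinations in which a parameter has no effect.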

The result and analysis section documents the best result and configuration of the SVM classifier for scream classification in burning structures.
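The per-file aggregation used to build the SVM input (averaging each per-frame feature over the file, and taking the mean of the highest 10% of ZCR values) can be sketched in NumPy as follows. The function name, the column position of ZCR, and the rounding rule used to pick the top 10% of frames are assumptions.

```python
import numpy as np


def aggregate_for_svm(frame_features, zcr_index):
    """Collapse per-frame features (n_frames, n_features) into one vector.

    Every feature is averaged over all frames, except the ZCR column
    (at the hypothetical position `zcr_index`), which is replaced by the
    mean of its highest 10% of values.
    """
    file_vector = frame_features.mean(axis=0)

    zcr = frame_features[:, zcr_index]
    n_top = max(1, int(round(0.10 * len(zcr))))  # at least one frame
    top_zcr = np.sort(zcr)[-n_top:]
    file_vector[zcr_index] = top_zcr.mean()
    return file_vector
```

Stacking one such 16-element vector per audio file gives a two-dimensional array of shape (number of files, 16), which matches the reported SVM input shape of (15,147, 16).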

3.5.2. Long Short-Term Memory (LSTM)

Long short-term memory (LSTM) networks are a type of recurrent neural network (RNN) that is efficient at learning order dependence in sequence prediction problems. The LSTM network was introduced to address the vanishing gradient problem that affects some RNNs [58]. LSTM cells are composed of multiple gates: an input gate (i), a forget gate (f), and an output gate (o). The functionalities of these gates are as follows: the forget gate suppresses irrelevant information while letting applicable information pass through, the input gate takes part in updating the cell state, and the output gate updates the value of the hidden units.
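For reference, the gate behavior described above corresponds to the commonly used LSTM formulation given below. The notation is the standard textbook one and is an assumption here, since the text does not state the equations: x_t is the input at time step t, h_t the hidden state, c_t the cell state, σ the logistic sigmoid, ⊙ the element-wise product, and W, U, b the learned weights and biases.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{(input gate)} \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{(output gate)} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{(hidden state)}
\end{aligned}
```

In this form, the forget gate scales the previous cell state, the input gate scales the new candidate, and the output gate determines how much of the cell state reaches the hidden units.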

We implemented the built-in LSTM network from the ‘Keras’ [59] library associated with TensorFlow [60]. Unlike SVM, LSTM requires feeding the features from one sequence of events to the next sequence. Therefore, no averaging function was applied to the extracted features. Since our audio files had different durations, we brought them all to the same length. To do so, we calculated the median number of frames over all the audio files in the custom dataset, using a sampling rate of 16 kHz, a frame length of 32 ms, and a hop length (that is, overlap) of 16 ms. The median number of frames was calculated to be 110, which becomes the number of time steps for our dataset. The audio files were clipped at 110 frames if they were longer, and zero padding was used, as in [61], for the audio files shorter than 110 frames. The next step was to extract sixteen features for each time step as a sequence of the data. The LSTM unit requires a three-dimensional input in the shape of (batch size, time steps, number of features). To develop the best-fitted model for the LSTM, we performed experimentation changing the hyperparameters as follows:

We documented the best settings from these experiments in the results and analysis section of LSTM.
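The clipping and zero-padding step that produces the three-dimensional LSTM input can be sketched in NumPy as follows. The function names are hypothetical, and padding at the end of the sequence (and clipping from the end) is an assumption, since the text does not state which side is padded or clipped.

```python
import numpy as np

TIME_STEPS = 110   # median number of frames reported in the text
N_FEATURES = 16    # features extracted per frame


def pad_or_clip(frame_features, time_steps=TIME_STEPS):
    """Force a (n_frames, n_features) array to exactly `time_steps` rows.

    Longer files are clipped after `time_steps` frames; shorter files are
    zero-padded at the end (assumed padding side).
    """
    n_frames, n_features = frame_features.shape
    out = np.zeros((time_steps, n_features), dtype=frame_features.dtype)
    n = min(n_frames, time_steps)
    out[:n] = frame_features[:n]
    return out


def build_lstm_input(per_file_features):
    """Stack per-file feature arrays into (batch, time_steps, n_features)."""
    return np.stack([pad_or_clip(f) for f in per_file_features])
```

If the per-file frame counts are available, the number of time steps could be derived as `int(np.median(frame_counts))` rather than hard-coded.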