Building a Reputation Attack Detector for Effective Trust Evaluation in a Cloud Services Environment

Abstract

1. Introduction

1.1. Problem Statement

1.2. Contributions

- Ensuring that customers of cloud services receive the strongest possible security, because they may be handling extremely sensitive data when using trust management services;

- Securing cloud services by effectively detecting malicious and inappropriate behaviors through trust algorithms that can identify on/off and collusion attacks;

- Making sure that the availability of the trust management service is adequate for the dynamic nature of cloud services;

- Providing dependable solutions that prevent reputation attacks and increase the precision of customers’ trust values by considering the significance of each interaction.

2. Related Works

3. Design and Methodology

3.1. On/Off Attack

Algorithm 1. On/off Attack Algorithm

3.2. Collusion Attack

Algorithm 2. Collusion Attack Algorithm

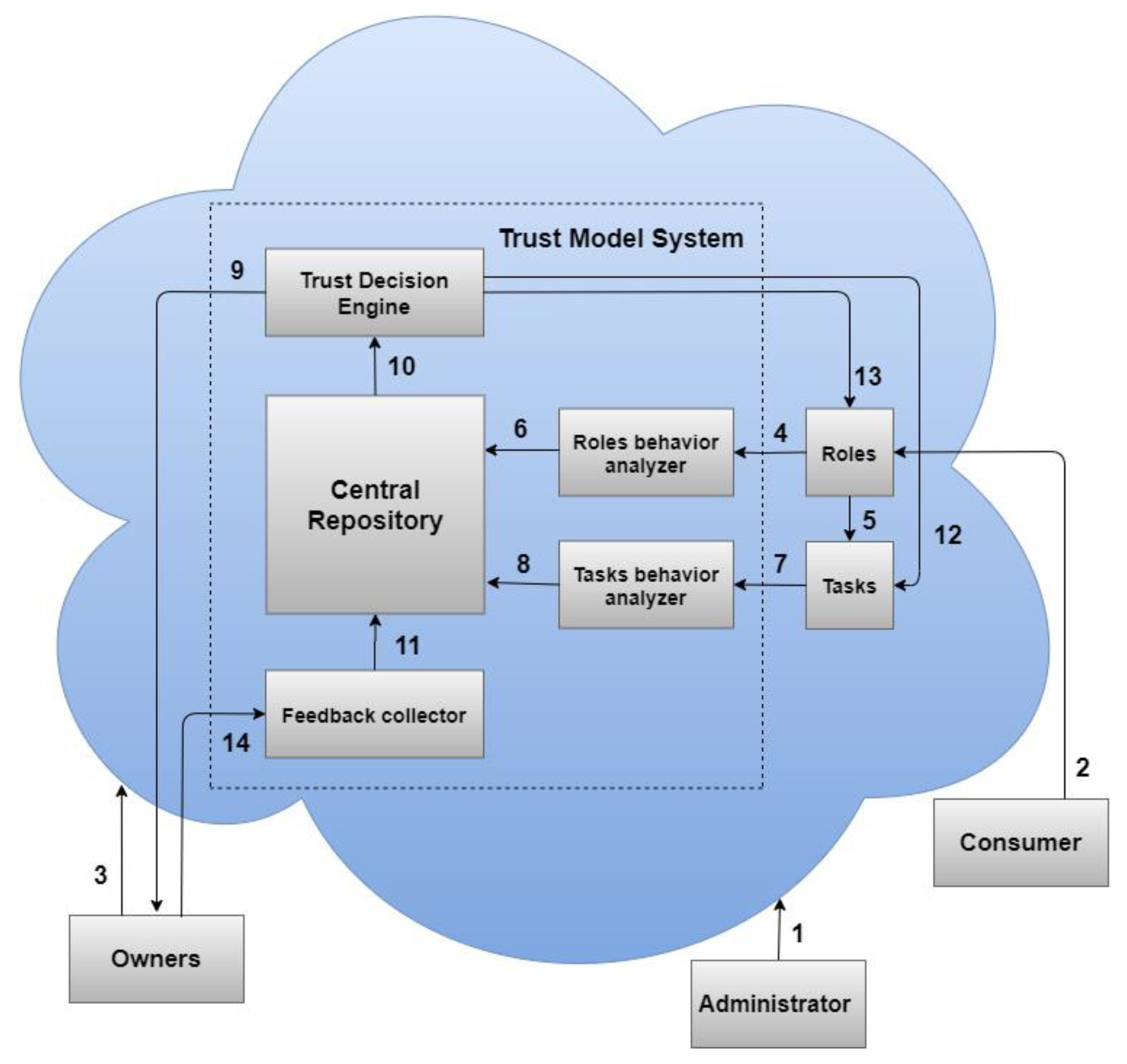

4. Proposed Framework Architecture

4.1. Trust Management System (TMS)

4.2. Proposed Method

- The administrator initiates the system and establishes its hierarchy of roles and tasks. The role and task parameters created for the system are uploaded to the cloud through Channel 1;

- When consumers want access rights to cloud data, they first submit an access request through Channel 2 based on their tasks and roles;

- If the consumer request is accepted, the role entity uses Channel 5 to transfer the request to the task entity. The cloud responds by providing consumers with a cryptographic task-role-based access control (T-RBAC) plan;

- The owner encrypts and uploads data through Channel 3 only if they consider the role or task trustworthy. The owner also informs the feedback collector of the consumers’ identities during this stage;

- When an owner discovers that their data has leaked because of an untrustworthy consumer, they should report the role or task to the feedback collector through Channel 14;

- When an approved owner provides new feedback, the feedback collector sends it through Channel 11 to the centralized database, which archives each confidence report and interaction record produced when the roles and tasks interact;

- The interaction logs stored in the centralized database are then passed through Channel 10 to the trust decision engine, which uses them to determine the trust value of roles and tasks;

- At any point, the role entity may approve trust assessments for the roles from the trust management system (TMS), whereupon the role entity responds to the TMS through Channel 13 to receive feedback from the trust decision engine;

- The role entity reviews the role parameters that determine a consumer’s role membership in the cloud until feedback is obtained from the trust decision engine, and the membership of any malicious consumer is terminated;

- When an owner provides negative feedback about a role as a result of data leakage, the role entity transfers the leakage details to the role behavior analyzer through Channel 4;

- At any point, the task entity may approve trust assessments for the tasks from the TMS, whereupon the task entity responds to the TMS through Channel 12 to receive feedback from the trust decision engine;

- The task entity reviews the task parameters that determine a consumer’s task membership in the cloud until feedback is obtained from the trust decision engine, and the membership of any malicious consumer is terminated;

- When an owner provides negative feedback about a task as a result of data leakage, the task entity transfers the leakage details to the task behavior analyzer through Channel 7;

- The analyzers then use Channels 6 and 8 to keep the trust details for the roles and tasks in the centralized database up to date;

- If an owner requests that their data be uploaded and encrypted in the cloud, the TMS performs a trust assessment. Once the TMS receives the request, it follows up with the owners through Channel 9;

- The trust decision engine provides the owners with the details of the trust assessment for their roles and tasks. Based on the results, the data owners then decide whether to allow consumers access to their services.
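The channel numbering in the steps above can be read as a small directed graph between the framework's components. The following is a minimal Python sketch of that reading, not the authors' implementation: the `Channel` type, the `CHANNELS` table, and the `route` helper are hypothetical names introduced here for illustration. Several endpoints are assumptions because the steps do not state them explicitly: the receiver of Channel 2, which analyzer uses Channel 6 and which uses Channel 8, and the use of the trust decision engine as the TMS-side endpoint of Channels 9, 12, and 13.

```python
from collections import deque
from typing import NamedTuple, Optional


class Channel(NamedTuple):
    number: int
    sender: str
    receiver: str
    purpose: str


# Endpoints follow the steps listed above; lines marked "assumed" are
# illustrative guesses that the text does not state explicitly.
CHANNELS = (
    Channel(1, "administrator", "cloud", "upload role and task parameters"),
    Channel(2, "consumer", "role entity", "access request"),  # receiver assumed
    Channel(3, "owner", "cloud", "encrypt and upload data"),
    Channel(4, "role entity", "role behavior analyzer", "role leakage details"),
    Channel(5, "role entity", "task entity", "forward accepted request"),
    Channel(6, "role behavior analyzer", "centralized database",
            "update role trust details"),  # pairing with Channel 6 assumed
    Channel(7, "task entity", "task behavior analyzer", "task leakage details"),
    Channel(8, "task behavior analyzer", "centralized database",
            "update task trust details"),  # pairing with Channel 8 assumed
    Channel(9, "trust decision engine", "owner",
            "trust assessment follow-up"),  # TMS endpoint assumed
    Channel(10, "centralized database", "trust decision engine",
            "interaction logs"),
    Channel(11, "feedback collector", "centralized database", "archive feedback"),
    Channel(12, "task entity", "trust decision engine",
            "task trust assessment"),  # TMS endpoint assumed
    Channel(13, "role entity", "trust decision engine",
            "role trust assessment"),  # TMS endpoint assumed
    Channel(14, "owner", "feedback collector", "report leakage"),
)


def route(start: str, goal: str) -> Optional[list]:
    """Breadth-first search over CHANNELS.

    Returns the channel numbers along a shortest path from start to goal,
    or None when no sequence of channels connects them.
    """
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for channel in CHANNELS:
            if channel.sender == node and channel.receiver not in seen:
                seen.add(channel.receiver)
                queue.append((channel.receiver, path + [channel.number]))
    return None


if __name__ == "__main__":
    # Path taken by an owner's leakage report until it can affect a trust value.
    print(route("owner", "trust decision engine"))
```

Under these assumptions, an owner's leakage report reaches the trust decision engine through Channels 14, 11, and 10, which matches the order in which the feedback collector, the centralized database, and the trust decision engine appear in the steps.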

5. Simulation Results

Comparison of Security and Accuracy

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| 6 | 21 | 32 | 12 | 36 | 1 | 18 |
| 126 | 126 | 126 | 126 | 126 | 126 | 126 |
| 0.05 | 0.17 | 0.25 | 0.10 | 0.29 | 0.01 | 0.14 |
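The row and column labels of the table above are not available here, but its arithmetic can be checked directly: the first row sums to 126, the value repeated in the second row, and each entry of the third row equals the first-row entry divided by 126, rounded to two decimals. The short Python sketch below reproduces that check; the variable names `counts`, `total`, and `ratios` are illustrative and do not come from the original table.

```python
# Values copied from the first row of the table above.
counts = [6, 21, 32, 12, 36, 1, 18]

# The second row repeats the sum of the first row.
total = sum(counts)

# The third row is each count divided by the total, rounded to two decimals.
ratios = [round(count / total, 2) for count in counts]

if __name__ == "__main__":
    print(total)
    print(ratios)
```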

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alshammari, S.T.; Alsubhi, K. Building a Reputation Attack Detector for Effective Trust Evaluation in a Cloud Services Environment. Appl. Sci. 2021, 11, 8496. https://doi.org/10.3390/app11188496
