A Multicast Routing Scheme for the Internet: Simulation and Experimentation in Large-Scale Networks

Abstract

:1. Introduction

- Multicast depends on an overlay routing executed over the unicast routing topology, which suffers in terms of scalability, as it maintains a high number of routing entries and forwarding states at each node;

- It adds a level of indirection as routers forward the multicast traffic to the multicast group, and hosts have to subscribe to that multicast group using a subscription protocol like Internet Group Management Protocol (IGMP) or Multicast Listener Discovery (MLD) in IPv6;

- It uses special address space structure (class D for IPv4 and prefix ff00::/8 for IPv6) which requires that firewalls and Network Address Translation (NAT) routers have to be upgraded and configured to support multicast addresses;

- Economic reasons, as there is a need to determine how upstream providers reimburse downstream providers for installing state and replication of data;

- Management and security concerns about setting up shared trees between domains.

- Provides a scalable routing architecture because each router stores in its routing table only the (direct) neighbour-related entries, rather than tree structures or network graph information;

- Can work with any addressing space structure like IPv4/IPv6, with identifiers like geometric coordinates or with data/information centric content, as it does not rely on the unicast routing information;

- Can support a variety of metrics to decide the optimal branching path from the multicast source to leaf nodes;

- Has a scope that is not limited to routers and thus can be initiated by any host in such a way that a real end-to-end multicast distribution tree is provided and the additional host-router subscription protocol is not needed anymore.

2. Related Work and Our Contribution

2.1. Brief Overview of Multicast Routing in the Internet

2.2. Our Contributions

3. The Greedy Compact Multicast Routing (GCMR) Scheme

3.1. Preliminaries

- The stretch of a routing scheme is defined as the maximum ratio between the cost of the path traversed by a packet and the cost of the shortest path considering all node pairs . For the multicast case, the definition of the stretch changes to the total cost of the edges of the MDT to reach a given group of leaf nodes is divided by the cost of the minimum tree, which is the one produced by solving the corresponding Steiner Tree (ST) problem for the same leaf group [28].

- The memory space (in bits) takes into account the information required to be stored at each node to properly treat the multicast packets. The storage required by the algorithm is directly related to routing system scalability because the less memory a router needs to store its entries, the more scalable the routing system would be. The following routing information bases are defined [16]:

- -

- The Tree Information Base (TIB) essentially stores the state of all multicast distribution trees necessary to forward multicast packets at a router;

- -

- The Unicast Routing Information Base (URIB) is the unicast control message routing and it is used to determine the next shortest hop and/or the path (e.g., like BGP) towards a node of the network;

- -

- The Multicast Routing Information Base (MRIB) is the multicast control message routing and it is used to determine the next-hop neighbour to which any Join/Leave message is sent (towards the tree root or source).

- The communication cost (in number of messages) is defined as the number of messages exchanged to build the MDT (it is also usually known as the message cost). This metric is directly related to the overhead of the protocol, i.e., the higher the communication cost the longer the time needed to construct and maintain the tree.

- The transmission cost (in Mbytes) is defined as the total cost of transmitting packets through the edges of the MDT to reach a given set of leaf nodes. Concretely, we are counting the number of additional bits required to transmit a single packet to all leaf nodes taking into account a 1500-byte IP packet to be transmitted along a ST as a reference.

- The recovery time is defined as the maximum time needed to receive back a multicast transmission at the leaf nodes once a failure occurs in a link or node of the MDT.

3.2. Basic Functionality

3.3. Examples

3.4. Summary

4. Experimental Platform

4.1. Quagga Routing Suite

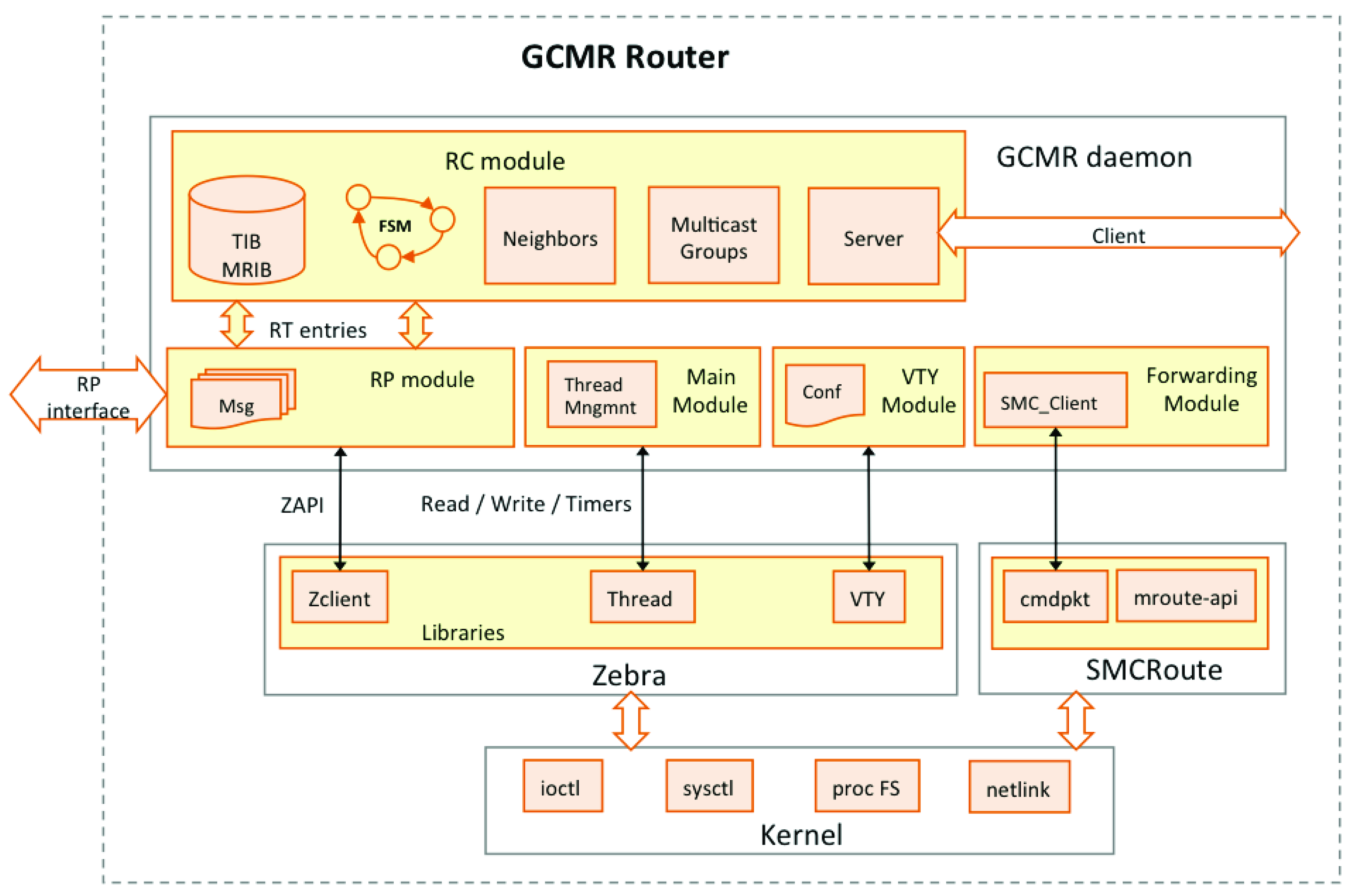

4.2. GCMR Implementation

- Idle: where GCMR initialises resources and structures;

- Local search: when a node sends a local R message to its neighbours or receives it from a neighbour;

- Global search: when local search fails, a node moves to the global search;

- Join: when a node receives a J message and remains in this state as long as a D message is received. In this case, the detach procedure is executed and the node is removed from MDT and returns to the idle state.

- Fail: when a node does not receive a S message from the source, it enters in the fail state and tries to recover it. It returns to the join state if the S message is received back.

4.3. iLab.t Virtual Wall Platform

5. Simulation Analysis

5.1. Reference Multicast Schemes

5.2. Scenario

5.3. Results

6. Experimental Analysis

6.1. Reference Multicast Scheme

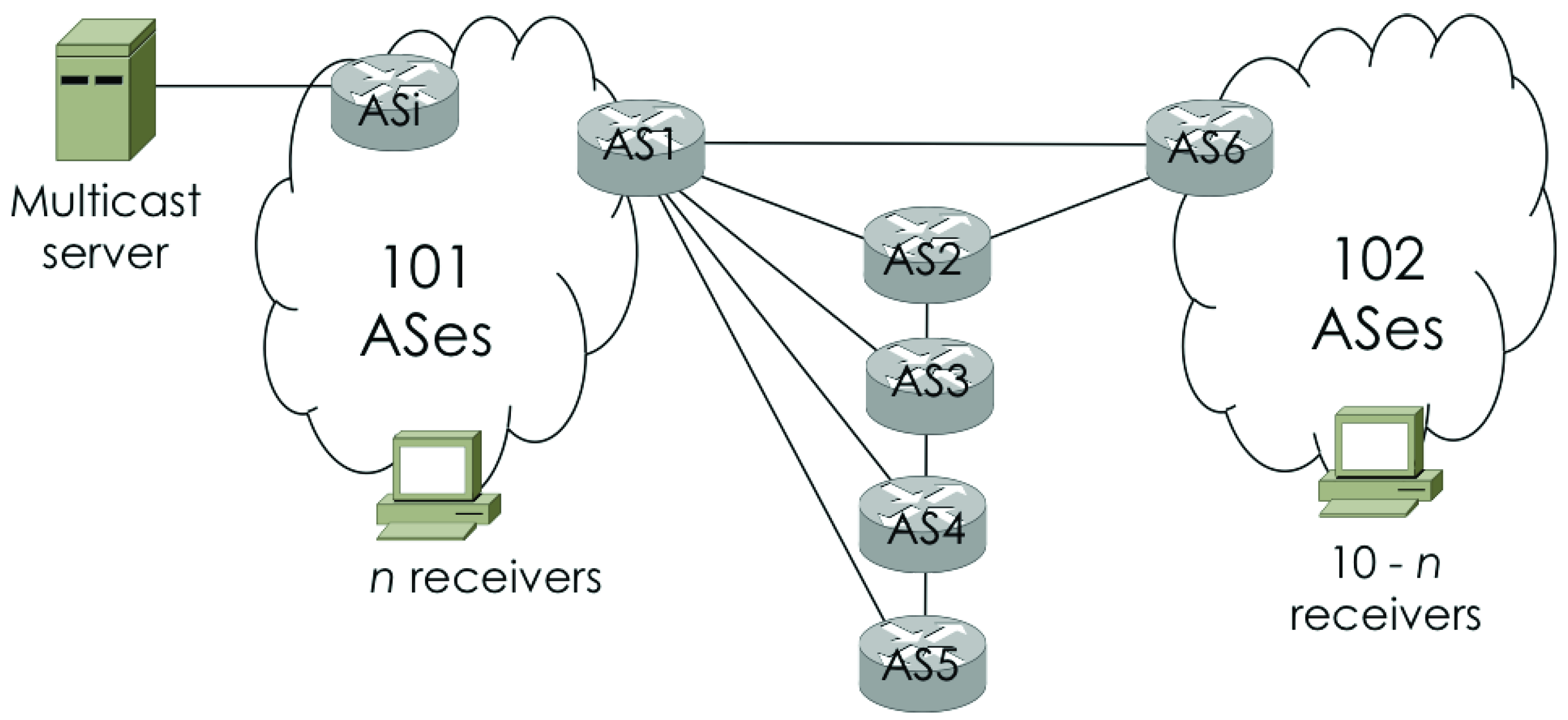

6.2. Scenario

6.3. Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AS | Autonomous System |

| BGP | Border Gateway Protocol |

| BIER | Bit Index Explicit Replication |

| BIRT | Bit Index Routing Table |

| GCMR | Greedy Compact Multicat Routing |

| IGMP | Internet Group Management Protocol |

| MDT | Multicast Ditribution Tree |

| MLD | Multicast Listener Discovery |

| MP-BGP | Multiprotocol extension Border Gateway Protocol |

| MRIB | Multicast Routing Information Base |

| PIM-SSM | Protocol Independent Multicat-Source Specific Mode |

| SPT | Shortest Path Tree |

| ST | Steiner Tree |

| TIB | Tree Information Base |

| URIB | Unicast Routing Information Base |

Appendix A

References

- Cheriton, D.R.; Deering, S. Host groups: A multicast extension for datagram internetworks. ACM SIGCOMM Comput. Commun. Rev. 1985, 15, 172–179. [Google Scholar] [CrossRef]

- Ratnasamy, S.; Ermolinskiy, A.; Shenker, S. Revisiting IP multicast. In Proceedings of the ACM SIGCOMM 2006 Conference, Pisa, Italy, 11–15 September 2006; pp. 15–26. [Google Scholar]

- Holland, J. Why Inter-Domain Multicast Now Makes Sense. APNIC Blog 2020. Available online: https://blog.apnic.net/2020/07/28/why-inter-domain-multicast-now-makes-sense/ (accessed on 30 July 2021).

- Wijnands, I.; Rosen, E.; Dolganow, A.; Przygienda, T.; Aldrin, S. Multicast Using Bit Index Explicit Replication (RFC 8279), Internet Engineering Task Force. 2017. Available online: https://tools.ietf.org/html/rfc8279 (accessed on 15 September 2019).

- Holland, J. IP Multicast: Next steps to make it real. In Proceedings of the NANOG 79, Virtual Meeting, 1–3 June 2020; Available online: https://www.nanog.org/events/nanog-79/ (accessed on 30 July 2021).

- Chen, M.; Hughes, G.L. Group Based Multicast Streaming Systems and Methods. U.S. Patent US8806522B2, 12 August 2014. [Google Scholar]

- Tombes, J. Multicast ABR, Low-Latency CMAF, CTE, and Optimized Video Playback; White Paper; Broadpeak’s nanoCDN. 2019. Available online: https://broadpeak.tv/solutions/multicast-abr/ (accessed on 30 July 2021).

- Ramp Holdings. Enterprise Multicast Is Alive and Well; White Paper; Ramp Multicast+. 2020. Available online: https://www.rampecdn.com/enterprise-video-delivery/multicast/ (accessed on 30 July 2021).

- Zarkower, J. How an Evolving Pay TV Infrastructure Can Coexist with OTT. Streaming Media, 6 March 2019; Available online: https://www.streamingmedia.com/Articles/ReadArticle.aspx?ArticleID=130383 (accessed on 30 July 2021).

- Diot, C.; Levine, B.; Lyles, B.; Kassem, H.; Balensiefen, D. Deployment issues for the IP multicast service and architecture. IEEE Netw. 2000, 14, 78–88. [Google Scholar] [CrossRef]

- Pardue, L. Some challenges with IP multicast deployment. In Proceedings of the IAB Design Expectations vs. Deployment Reality in Protocol Development Workshop, Helsinki, Finland, 4–5 June 2019. [Google Scholar]

- Pedroso, P.; Papadimitriou, D.; Careglio, D. Dynamic compact multicast routing on power-law graphs. In Proceedings of the IEEE Global Communications Conference (GLOBECOM 2011), Houston, TX, USA, 5–9 December 2011. [Google Scholar]

- Waitzman, D.; Partridge, C.; Deering, S. Distance Vector Multicast Routing Protocol (RFC 1075), Internet Engineering Task Force. 1988. Available online: https://tools.ietf.org/html/rfc1075 (accessed on 15 September 2019).

- Moy, J. Multicast Extensions to OSPF (RFC 1584), Internet Engineering Task Force. 1994. Available online: https://tools.ietf.org/html/rfc1584 (accessed on 15 September 2019).

- Ballardie, A. Core Based Trees (CBT Version 2) Multicast Routing (RFC 2189), Internet Engineering Task Force. 1997. Available online: https://tools.ietf.org/html/rfc2189 (accessed on 15 September 2019).

- Fenner, B.; Handley, M.; Holbrook, H.; Kouvelas, I.; Parekh, R.; Zhang, Z.; Zheng, L. Protocol Independent Multicast-Sparse Mode (PIM-SM): Protocol Specification (RFC 7761), Internet Engineering Task Force. 2016. Available online: https://tools.ietf.org/html/rfc7761 (accessed on 15 September 2019).

- Adams, A.; Nicholas, J.; Siadak, W. Protocol Independent Multicast-Dense Mode (PIM-SM): Protocol Specification (RFC 3973), Internet Engineering Task Force. 2005. Available online: https://tools.ietf.org/html/rfc3973 (accessed on 15 September 2019).

- Bhattacharyya, S. An Overview of Source-Specific Multicast (SSM) (RFC 3569), Internet Engineering Task Force. 2003. Available online: https://tools.ietf.org/html/rfc3569 (accessed on 15 September 2019).

- Holbrook, H.; Cain, B. Source-Specific Multicast for IP (RFC 4607), Internet Engineering Task Force. 2006. Available online: https://tools.ietf.org/html/rfc4607 (accessed on 15 September 2019).

- Carlsberg, K.; Crowcroft, J. Building shared trees using a one-to-many joining mechanism. ACM SIGCOMM Comput. Commun. Rev. 1997, 27, 5–11. [Google Scholar] [CrossRef]

- Yan, S.; Faloutsos, M.; Banerjee, A. QoS-aware multicast routing for the internet: The design and evaluation of QoSMIC. IEEE/ACM Trans. Netw. 2002, 10, 54–66. [Google Scholar]

- Wang, X.; Zhang, J.; Huang, M.; Yang, S. A green intelligent routing algorithm supporting flexible QoS for many-to-many multicast. Comput. Netw. 2017, 126, 229–245. [Google Scholar] [CrossRef]

- Kreutz, D.; Ramos, F.; Verissimo, P.E.; Rothenberg, C.E.; Azodolmolky, S.; Uhlig, S. Software-Defined Networking: A Comprehensive Survey. Proc. IEEE 2015, 103, 14–76. [Google Scholar] [CrossRef] [Green Version]

- Islam, S.; Muslim, N.; Atwood, J.W. A Survey on Multicasting in Software-Defined Networking. IEEE Commun. Surv. Tutor. 2018, 20, 355–387. [Google Scholar] [CrossRef]

- Al Saeed, Z.; Ahmad, I.; Hussain, I. Multicasting in software defined networks: A comprehensive survey. J. Netw. Comput. Appl. 2018, 104, 61–77. [Google Scholar] [CrossRef]

- Canonico, R.; Romano, S.P. Leveraging SDN to Improve the Performance of Multicast-Enabled IPTV Distribution Systems. IEEE Commun. Stand. Mag. 2017, 1, 42–47. [Google Scholar] [CrossRef]

- Peleg, D.; Upfall, E. A trade-off between space and efficiency for routing tables. J. ACM 1989, 36, 43–52. [Google Scholar] [CrossRef]

- Abraham, I.; Malkhi, D.; Ratajczak, D. Compact multicast routing. In Proceedings of the International Symposium on Distributed Computing (DISC’09), Elche, Spain, 23–25 September 2009; pp. 364–378. [Google Scholar]

- Faloutsos, M.; Faloutsos, P.; Faloutsos, C. On power-law relationships of the Internet topology. ACM Comput. Commun. Rev. 1999, 29, 251–262. [Google Scholar] [CrossRef]

- Choromański, K.; Matuszak, M.; Miękisz, J. Scale-free graph with preferential attachment and evolving Internal vertex structure. J. Stat. Phys. 2013, 151, 1175–1183. [Google Scholar] [CrossRef] [Green Version]

- Desmouceaux, Y.; Clausen, T.H.; Fuertes, J.A.C.; Townsley, W.M. Reliable multicast with BIER. J. Commun. Netw. 2018, 20, 182–197. [Google Scholar] [CrossRef] [Green Version]

- Desmouceaux, Y.; Fuertes, J.A.C.; Clausen, T.H. Reliable BIER with peer caching. IEEE Trans. Netw. Serv. Manag. 2019, 16, 1826–1839. [Google Scholar] [CrossRef] [Green Version]

- Merling, D.; Lindner, S.; Menth, M. P4-based implementation of BIER and BIER-FRR for scalable and resilient multicast. J. Netw. Comput. Appl. 2020, 169, 102764. [Google Scholar] [CrossRef]

- Zheng, L.; Zhang, J.; Parekh, R. Survey Report on Protocol Independent Multicast-Sparse Mode (PIM-SM) Implementations and Deployments (RFC 7063), Internet Engineering Task Force. 2013. Available online: https://tools.ietf.org/html/rfc7063 (accessed on 15 September 2019).

- Bates, T.; Chandra, R.; Rekhter, Y.; Katz, D. Multiprotocol Extensions for BGP-4 (RFC 4760), Internet Engineering Task Force. 2007. Available online: https://tools.ietf.org/html/rfc4760 (accessed on 15 September 2019).

- Wijnands, I.; Rosen, E.; Dolganow, A.; Tantsura, J.; Aldrin, S.; Meilik, I. Encapsulation for Bit Index Explicit Replication (BIER) in MPLS and Non-MPLS Networks (RFC 8296), Internet Engineering Task Force. 2018. Available online: https://tools.ietf.org/html/rfc8296 (accessed on 15 September 2019).

- Oliveira, R.; Zhang, B.; Pei, D.; Zhang, L. Quantifying Path Exploration in the Internet. IEEE/ACM Trans. Netw. 2009, 17, 445–458. [Google Scholar] [CrossRef]

- Cohen, N. Several Graph Problems and their Linear Program formulations. inria-00504914v2. 2019. Available online: https://hal.inria.fr/inria-00504914v2 (accessed on 15 September 2021).

| Stretch | Memory Space Ratio | |||||||

|---|---|---|---|---|---|---|---|---|

| 16k | 26k | 32k | 46k | 16k | 26k | 32k | 46k | |

| ST | 1 | 1 | 1 | 1 | 23.51 | 29.47 | 32.67 | 46.5 |

| PIM-SSM | 1.058 | 1.072 | 1.082 | 1.105 | 146.81 | 160.6 | 202.2 | 270.38 |

| BIER (best) | 1.083 | 1.143 | 1.250 | 1.364 | 9.54 | 14.98 | 20.17 | 25.7 |

| GCMR | 1.031 | 1.038 | 1.044 | 1.049 | 1 | 1 | 1 | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Careglio, D.; Agraz, F.; Papadimitriou, D. A Multicast Routing Scheme for the Internet: Simulation and Experimentation in Large-Scale Networks. Appl. Sci. 2021, 11, 8645. https://doi.org/10.3390/app11188645

Careglio D, Agraz F, Papadimitriou D. A Multicast Routing Scheme for the Internet: Simulation and Experimentation in Large-Scale Networks. Applied Sciences. 2021; 11(18):8645. https://doi.org/10.3390/app11188645

Chicago/Turabian StyleCareglio, Davide, Fernando Agraz, and Dimitri Papadimitriou. 2021. "A Multicast Routing Scheme for the Internet: Simulation and Experimentation in Large-Scale Networks" Applied Sciences 11, no. 18: 8645. https://doi.org/10.3390/app11188645