Prototyping IoT-Based Virtual Environments: An Approach toward the Sustainable Remote Management of Distributed Mulsemedia Setups

Abstract

1. Introduction

- to establish an agnostic specification for IoT simulation, as independent as possible of hardware and software, in order to reduce the complexity of delivering sustainably managed projects;

- to provide flexible, programming-oriented processes that ensure the scalability of devices and their respective functionalities;

- to design a general-purpose IoT simulation solution that can nevertheless meet the specific requirements of mulsemedia setups;

- to outline interfaces and engagement structures that enable the integration of digital-twin-based approaches.

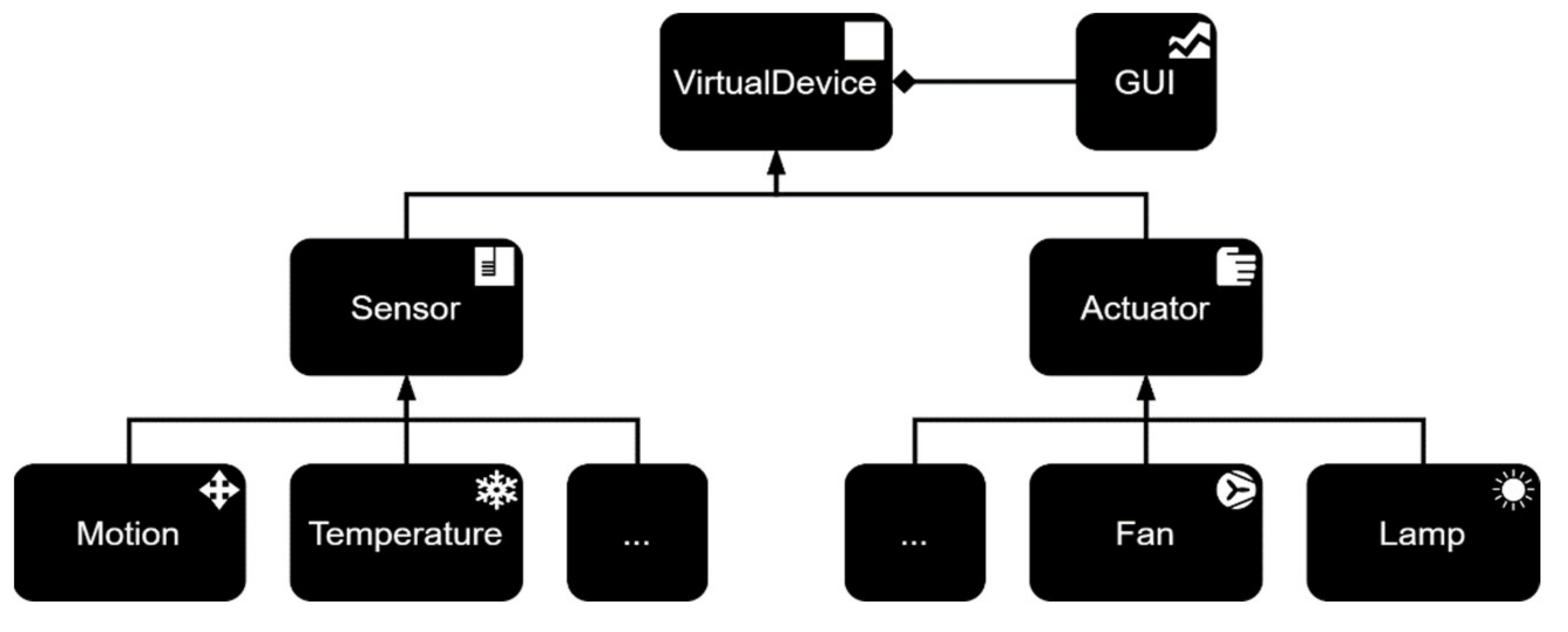

2. Prototyping Software Architecture

2.1. IoT Server

2.2. Virtual Environment

2.3. IoT Server/Virtual Environment Communication

- Devices request: retrieves a list of the endpoints, and their respective capabilities, associated with a given virtual environment coordinator;

- Control packet request: orders a state change of a virtual environment’s actuator;

- Actuators scheduling: configures a list of events, timed relative to the start of the schedule, that engages actuators’ capabilities in chronological order in a virtual environment;

- Sensor-based event triggering: configures an event listener that monitors the values of the virtual environment’s sensors. When a sensor reading meets the defined condition, an action is automatically triggered on the virtual environment’s actuators.
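Three of these request types correspond directly to the `/info`, `/out` and `/rule` topics listed in the topic table, each carrying a string message. The sketch below shows how such requests could be assembled, assuming one MQTT publication per request; the `Request` type and the helper names are hypothetical, and the `exp` key follows the rule examples of the 2D testing scenario. Actuator scheduling is not modelled separately because it amounts to publishing control requests at predefined time offsets.

```python
import json
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    """One MQTT publication: a topic plus its message payload (hypothetical helper type)."""
    topic: str
    payload: str


def devices_request(coordinator_id: str, timestamp: Optional[int] = None) -> Request:
    """Devices request: ask a coordinator for its endpoints and their capabilities.

    The payload names the topic on which the answer is expected (/response/#timestamp).
    """
    ts = int(time.time()) if timestamp is None else timestamp
    return Request(f"/info/{coordinator_id}", f"/response/{ts}")


def control_request(endpoint_id: str, feature_id: int, state_id: int, value: str) -> Request:
    """Control packet request: set one state of one actuator feature to `value`."""
    return Request(f"/out/{endpoint_id}/{feature_id}/{state_id}", value)


def rule_request(sensor_id: str, feature_id: int, state_id: int,
                 expression: str, trigger: Request) -> Request:
    """Sensor-based triggering: publish `trigger` whenever the sensor state satisfies `expression`."""
    body = {"exp": expression, "trigger": f"{trigger.topic} {trigger.payload}"}
    return Request(f"/rule/{sensor_id}/{feature_id}/{state_id}", json.dumps(body))


# Example: set fan A to Medium whenever the temperature sensor reads above 18.
fan_medium = control_request("E3-8D-9E-C2-6E-2D", 1, 1, "Medium")
temperature_rule = rule_request("6E-A4-07-13-FE-CD", 1, 1, ">18", fan_medium)
```

With an MQTT client library such as Eclipse Paho for Python, each `Request` could then be sent with `client.publish(req.topic, req.payload)`; broker address and QoS level are left open here.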

3. IoT Mulsemedia Environments Prototyping Software

3.1. MQTT Enhanced Web-Based Application

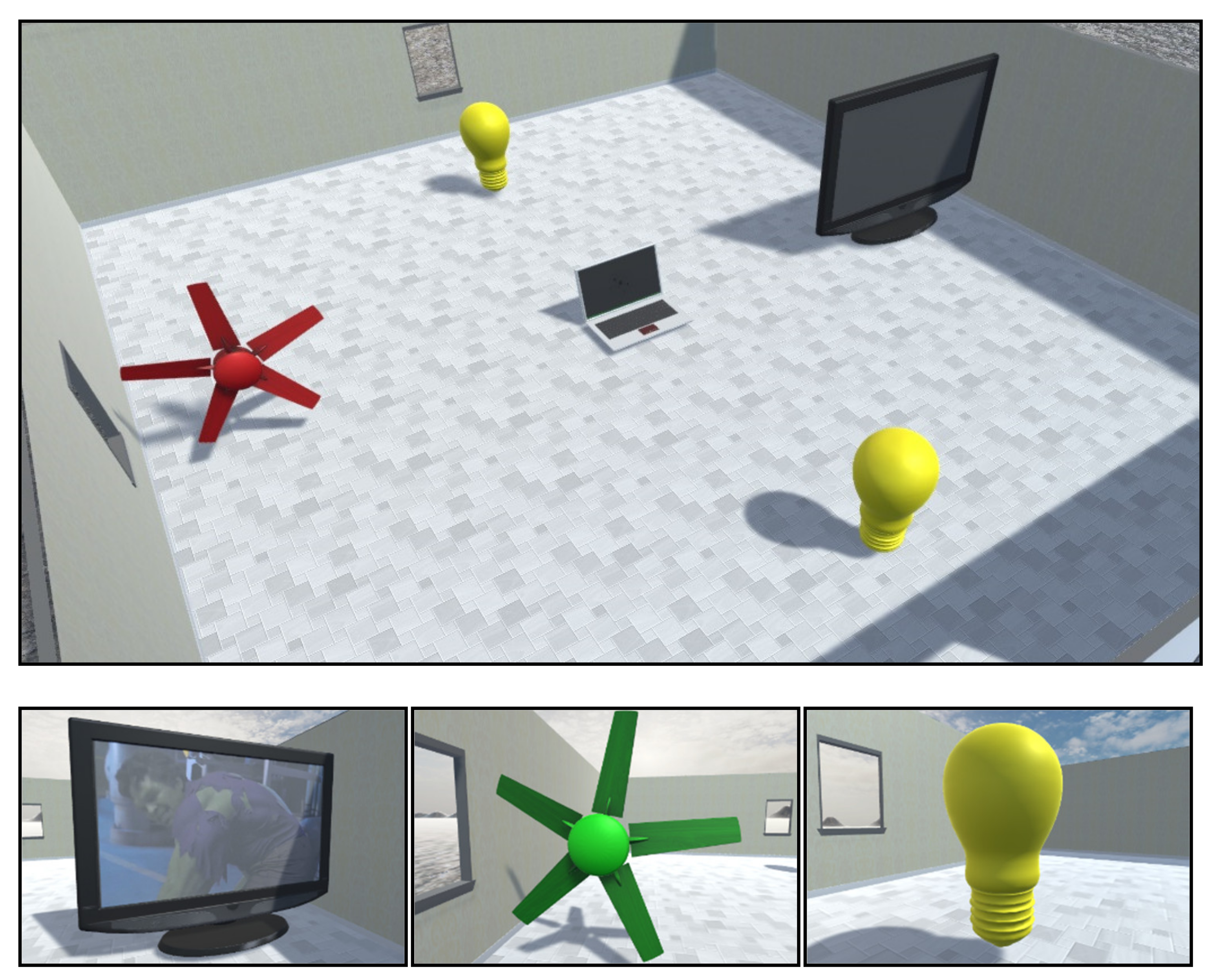

3.2. Virtual Environment

4. Results

4.1. Testing Scenario 1: 2D Virtual Environment Schedule-Oriented Behavior Modelling

4.2. Testing Scenario 2: 3D Things’ Virtual Environment

- Processor: 11th Gen Intel Core i7-11800H @ 2.30 GHz;

- Random Access Memory (RAM): 32 GB SODIMM @ 2933 MHz;

- Graphics Card: NVIDIA GeForce RTX 3080 Laptop GPU, 16 GB GDDR6;

- Storage: 1 TB, 3500 MB/s read, 3300 MB/s write;

- Operating System: Windows 10 Home 64-bit.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Topic | Message | Description |
|---|---|---|
| /info/{c} | /response/#timestamp | Requests the coordinator’s features, as well as its associated endpoints and their respective capabilities. |
| /out/{e}/{f}/{s} | #value \| /response/#timestamp [optional] | Requests a state change of a given virtual coordinator’s endpoint. |
| /rule/{e}/{f}/{s} | {"expression": #exp, "trigger": #trigger} \| /response/#timestamp | Specifies a rule for a given virtual coordinator that triggers an endpoint action in response to another endpoint’s behavior. |
| /query/{e}, /query/{e}/{f}, /query/{e}/{f}/{s} | /response/#timestamp \| #optional_time_range | Retrieves data (readings/actions) from a given coordinator/endpoint/feature, optionally framed by a time range. |
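To route incoming publications, a coordinator has to recover the topic family and its identifiers from the topic string. A minimal parsing sketch follows, assuming that `{c}`, `{e}`, `{f}` and `{s}` stand for the coordinator, endpoint, feature and state identifiers (as the `/out/<endpoint>/1/1` requests of the testing scenarios suggest) and that feature and state IDs are integers; `parse_topic` and `ParsedTopic` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class ParsedTopic(NamedTuple):
    kind: str               # "info", "out", "rule" or "query"
    target: str             # coordinator ID for /info, endpoint ID otherwise
    feature: Optional[int]
    state: Optional[int]


# /info/{c}, /out/{e}/{f}/{s}, /rule/{e}/{f}/{s}, /query/{e}[/{f}[/{s}]]
_TOPIC = re.compile(
    r"^/(?P<kind>info|out|rule|query)/(?P<target>[^/]+)"
    r"(?:/(?P<feature>\d+))?(?:/(?P<state>\d+))?$"
)


def parse_topic(topic: str) -> ParsedTopic:
    """Split a request topic into its family and identifiers, checking each family's arity."""
    m = _TOPIC.match(topic)
    if m is None:
        raise ValueError(f"unrecognised topic: {topic!r}")
    kind = m["kind"]
    feature = int(m["feature"]) if m["feature"] else None
    state = int(m["state"]) if m["state"] else None
    if kind == "info" and feature is not None:
        raise ValueError("/info takes only a coordinator identifier")
    if kind in ("out", "rule") and (feature is None or state is None):
        raise ValueError(f"/{kind} requires endpoint, feature and state identifiers")
    return ParsedTopic(kind, m["target"], feature, state)
```

Only `/query` accepts a variable number of levels, which is why the feature and state groups are optional in the pattern and the stricter arity of the other families is checked afterwards.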

| Profile ID | Profile Name | Feature ID | Feature Name | Feature Type | State ID | State Name | Value Set |
|---|---|---|---|---|---|---|---|
| 1 | Lamp | 1 | Power | Actuator | 1 | Switch | 1 = On; 0 = Off |
| 2 | Fan | 1 | Rotation | Actuator | 1 | Level | 0 = Off; 1 = Low; 2 = Medium; 3 = High |
| 3 | Display Comics | 1 | Power | Actuator | 1 | Switch | 1 = On; 0 = Off |
| | | 2 | Video | Actuator | 1 | Content | DC; Marvel |
| 4 | Temperature | 1 | Value | Sensor | 1 | Degrees | 13; 18; 25; 32 |
| 5 | Motion | 1 | Value | Sensor | 1 | Condition | 0 = inactive; 1 = active |
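The profile/feature/state hierarchy can be mirrored in a lookup structure that a simulator could consult to reject invalid control requests before publishing them. This is a hypothetical sketch (`PROFILE_TABLE` and `check_control` are illustrative names): value labels are transcribed from the table, and the rule that sensors never receive `/out` commands is an assumption drawn from the Actuator/Sensor distinction.

```python
from typing import Dict, List, NamedTuple, Tuple


class StateSpec(NamedTuple):
    feature_type: str   # "Actuator" or "Sensor", as in the Feature Type column
    values: List[str]   # admissible labels from the Value Set column


# (profile name, feature ID, state ID) -> specification, transcribed from the profile table.
PROFILE_TABLE: Dict[Tuple[str, int, int], StateSpec] = {
    ("Lamp", 1, 1): StateSpec("Actuator", ["On", "Off"]),
    ("Fan", 1, 1): StateSpec("Actuator", ["Off", "Low", "Medium", "High"]),
    ("Display Comics", 1, 1): StateSpec("Actuator", ["On", "Off"]),
    ("Display Comics", 2, 1): StateSpec("Actuator", ["DC", "Marvel"]),
    ("Temperature", 1, 1): StateSpec("Sensor", ["13", "18", "25", "32"]),
    ("Motion", 1, 1): StateSpec("Sensor", ["inactive", "active"]),
}


def check_control(profile: str, feature_id: int, state_id: int, value: str) -> None:
    """Raise if a control (/out) request does not fit the declared profile."""
    spec = PROFILE_TABLE.get((profile, feature_id, state_id))
    if spec is None:
        raise KeyError(f"{profile!r} has no feature {feature_id} / state {state_id}")
    if spec.feature_type != "Actuator":
        # Assumption: sensors only report values; they are never driven by /out requests.
        raise ValueError(f"{profile!r} feature {feature_id} is a sensor and cannot be controlled")
    if value not in spec.values:
        raise ValueError(f"{value!r} is not one of {spec.values}")
```

Keying the table by the (profile, feature, state) triple matches the `/out/{e}/{f}/{s}` topic layout, so a request can be validated once its endpoint has been resolved to a profile.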

| Environment | Designation | Type | Possible States |
|---|---|---|---|
| 2D/3D | Coordinator | Manager/Dispatcher | N/A |
| 2D/3D | Lamp | Actuator |  |
| 2D/3D | Fan | Actuator |  |
| 3D | Display | Actuator |  |
| 2D | Temperature | Sensor |  |
| 2D | Motion | Sensor |  |

| Name | Identifier |
|---|---|
| Test Room 2D | 56-DB-F6-6E-84-37 |
| Lamp A | A8-DD-A4-75-FF-EE |
| Lamp B | AE-85-48-8D-A8-F3 |
| Fan A | E3-8D-9E-C2-6E-2D |
| Fan B | 9B-F7-F4-42-16-1E |
| Temperature | 6E-A4-07-13-FE-CD |
| Motion | 7B-03-4D-95-AB-8E |

| Description | Request | JSON | Events | Trigger |
|---|---|---|---|---|
| Temperature Control | /rule/6E-A4-07-13-FE-CD/1/1 | {"exp":">18", "trigger": "/out/E3-8D-9E-C2-6E-2D/1/1 Medium"} |  |  |
| | /rule/6E-A4-07-13-FE-CD/1/1 | {"exp":"<=18", "trigger": "/out/E3-8D-9E-C2-6E-2D/1/1 Off"} |  |  |
| Motion Monitoring | /rule/7B-03-4D-95-AB-8E/1/1 | {"exp":"=1", "trigger": "/out/A8-DD-A4-75-FF-EE/1/1 On"} |  |  |
| | /rule/7B-03-4D-95-AB-8E/1/1 | {"exp":"=0", "trigger": "/out/A8-DD-A4-75-FF-EE/1/1 Off"} |  |  |
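Each rule pairs a comparison on a sensor reading with the control request to publish when the comparison holds. The sketch below evaluates such rules, assuming the expression grammar is limited to one comparison operator (`>`, `<`, `>=`, `<=`, `=`) followed by a number, as in the examples above; `matches`, `fired_triggers` and `split_trigger` are hypothetical names, not the authors' implementation.

```python
import json
import operator
import re
from typing import List, Tuple

# Supported comparisons; "=" denotes equality, as in the motion rules.
_OPS = {">=": operator.ge, "<=": operator.le, ">": operator.gt, "<": operator.lt, "=": operator.eq}
# An operator followed by a (possibly negative, possibly decimal) number.
_EXPRESSION = re.compile(r"^(>=|<=|>|<|=)\s*(-?\d+(?:\.\d+)?)$")

# Temperature rule payloads from the table, with JSON double quotes.
TEMPERATURE_RULES = [
    '{"exp":">18", "trigger": "/out/E3-8D-9E-C2-6E-2D/1/1 Medium"}',
    '{"exp":"<=18", "trigger": "/out/E3-8D-9E-C2-6E-2D/1/1 Off"}',
]


def matches(expression: str, reading: float) -> bool:
    """Evaluate a rule expression such as ">18" or "=1" against one sensor reading."""
    m = _EXPRESSION.match(expression.strip())
    if m is None:
        raise ValueError(f"unsupported expression: {expression!r}")
    return _OPS[m.group(1)](reading, float(m.group(2)))


def fired_triggers(rule_payloads: List[str], reading: float) -> List[str]:
    """Return the trigger of every rule whose expression holds for the reading."""
    triggers = []
    for payload in rule_payloads:
        rule = json.loads(payload)
        if matches(rule["exp"], reading):
            triggers.append(rule["trigger"])
    return triggers


def split_trigger(trigger: str) -> Tuple[str, str]:
    """Split "/out/<endpoint>/<feature>/<state> <value>" into (topic, value)."""
    topic, _, value = trigger.partition(" ")
    return topic, value
```

Because each pair of rules uses complementary conditions (`>18` and `<=18`, `=1` and `=0`), exactly one trigger fires per temperature reading or motion value, so the actuator always ends up in a defined state.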

| Description | Time (s) | Request | Output |
|---|---|---|---|
| Initialization | 0 | /out/AE-85-48-8D-A8-F3/1/1 Off |  |
| Initialization | 0 | /out/9B-F7-F4-42-16-1E/1/1 Off |  |
| Turn on lamp B | 5 | /out/AE-85-48-8D-A8-F3/1/1 On |  |
| Set fan B rotation to 1 | 10 | /out/9B-F7-F4-42-16-1E/1/1 Low |  |
| Set fan B rotation to 2 | 20 | /out/9B-F7-F4-42-16-1E/1/1 Medium |  |
| Set fan B rotation to 3 | 25 | /out/9B-F7-F4-42-16-1E/1/1 High |  |
| Turn off lamp B | 30 | /out/AE-85-48-8D-A8-F3/1/1 Off |  |
| Turn off fan B | 30 | /out/9B-F7-F4-42-16-1E/1/1 Off |  |
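A schedule of this kind is a list of (relative time, request) pairs that must be published in chronological order, keeping the listed order for requests sharing the same offset. One way to replay it, sketched with Python's standard `sched` module, is shown below; the `speed` factor (to compress the timeline during tests), `timeline`, `run` and `SCENARIO_2D` are hypothetical, and `publish` stands in for whatever MQTT client call is actually used.

```python
import sched
import time
from itertools import groupby
from typing import Callable, List, Tuple

Event = Tuple[float, str, str]  # (offset in seconds, topic, value)

# The 2D schedule above, transcribed as (time, request topic, message).
SCENARIO_2D: List[Event] = [
    (0, "/out/AE-85-48-8D-A8-F3/1/1", "Off"),
    (0, "/out/9B-F7-F4-42-16-1E/1/1", "Off"),
    (5, "/out/AE-85-48-8D-A8-F3/1/1", "On"),
    (10, "/out/9B-F7-F4-42-16-1E/1/1", "Low"),
    (20, "/out/9B-F7-F4-42-16-1E/1/1", "Medium"),
    (25, "/out/9B-F7-F4-42-16-1E/1/1", "High"),
    (30, "/out/AE-85-48-8D-A8-F3/1/1", "Off"),
    (30, "/out/9B-F7-F4-42-16-1E/1/1", "Off"),
]


def timeline(events: List[Event]) -> List[Tuple[float, List[Tuple[str, str]]]]:
    """Group events that share an offset; the stable sort keeps listed order on ties."""
    ordered = sorted(events, key=lambda e: e[0])
    return [(t, [(topic, value) for _, topic, value in group])
            for t, group in groupby(ordered, key=lambda e: e[0])]


def run(events: List[Event], publish: Callable[[str, str], None], speed: float = 1.0) -> None:
    """Replay the schedule in (optionally accelerated) real time, calling publish(topic, value)."""
    scheduler = sched.scheduler(time.monotonic, time.sleep)
    for order, (offset, topic, value) in enumerate(events):
        # Lower priority numbers run first when two events fall due at the same instant.
        scheduler.enter(offset / speed, order, publish, argument=(topic, value))
    scheduler.run()
```

For example, `run(SCENARIO_2D, print, speed=10)` prints the eight requests over roughly three seconds instead of thirty.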

| Name | Identifier |
|---|---|
| Test Room 3D | 6C-D5-1C-76-E3-85 |
| Lamp A | D6-BB-99-92-99-3A |
| Lamp B | E1-8C-83-02-28-DD |
| Fan | 1C-B2-89-0F-20-01 |
| Display | A9-94-43-C9-EB-8C |

| Action | Time (s) | Request | Output |
|---|---|---|---|
| Initialization | 0 | /out/D6-BB-99-92-99-3A/1/1 Off |  |
| Initialization | 0 | /out/E1-8C-83-02-28-DD/1/1 Off |  |
| Initialization | 0 | /out/1C-B2-89-0F-20-01/1/1 Off |  |
| Initialization | 0 | /out/A9-94-43-C9-EB-8C/1/1 Off |  |
| Turn on display | 1 | /out/A9-94-43-C9-EB-8C/1/1 On |  |
| Play DC video | 1 | /out/A9-94-43-C9-EB-8C/2/1 DC |  |
| Turn on lamp A | 1 | /out/D6-BB-99-92-99-3A/1/1 On |  |
| Set fan rotation to 1 | 1 | /out/1C-B2-89-0F-20-01/1/1 Low |  |
| Play Marvel video | 30 | /out/A9-94-43-C9-EB-8C/2/1 Marvel |  |
| Set fan rotation to 2 | 38 | /out/1C-B2-89-0F-20-01/1/1 Medium |  |
| Turn off lamp A | 45 | /out/D6-BB-99-92-99-3A/1/1 Off |  |
| Turn on lamp B | 45 | /out/E1-8C-83-02-28-DD/1/1 On |  |
| Turn off lamp B | 60 | /out/E1-8C-83-02-28-DD/1/1 Off |  |
| Turn off fan | 60 | /out/1C-B2-89-0F-20-01/1/1 Off |  |
| Turn off display | 60 | /out/A9-94-43-C9-EB-8C/1/1 Off |  |
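Since every request in the 3D schedule writes one absolute state value, the expected condition of each actuator at any offset can be reconstructed by replaying the list up to that instant. This could be useful, for instance, to check a virtual environment that joins mid-session. A minimal sketch under that assumption; `state_at` and `SCENARIO_3D` are hypothetical names, and the requests are transcribed from the table.

```python
from typing import Dict, List, Tuple

Event = Tuple[float, str, str]  # (offset in seconds, topic, value)

# The 3D schedule above, transcribed as (time, request topic, message).
SCENARIO_3D: List[Event] = [
    (0, "/out/D6-BB-99-92-99-3A/1/1", "Off"),
    (0, "/out/E1-8C-83-02-28-DD/1/1", "Off"),
    (0, "/out/1C-B2-89-0F-20-01/1/1", "Off"),
    (0, "/out/A9-94-43-C9-EB-8C/1/1", "Off"),
    (1, "/out/A9-94-43-C9-EB-8C/1/1", "On"),
    (1, "/out/A9-94-43-C9-EB-8C/2/1", "DC"),
    (1, "/out/D6-BB-99-92-99-3A/1/1", "On"),
    (1, "/out/1C-B2-89-0F-20-01/1/1", "Low"),
    (30, "/out/A9-94-43-C9-EB-8C/2/1", "Marvel"),
    (38, "/out/1C-B2-89-0F-20-01/1/1", "Medium"),
    (45, "/out/D6-BB-99-92-99-3A/1/1", "Off"),
    (45, "/out/E1-8C-83-02-28-DD/1/1", "On"),
    (60, "/out/E1-8C-83-02-28-DD/1/1", "Off"),
    (60, "/out/1C-B2-89-0F-20-01/1/1", "Off"),
    (60, "/out/A9-94-43-C9-EB-8C/1/1", "Off"),
]


def state_at(events: List[Event], t: float) -> Dict[str, str]:
    """Replay every event with offset <= t; the last write to each topic wins."""
    state: Dict[str, str] = {}
    for offset, topic, value in sorted(events, key=lambda e: e[0]):
        if offset > t:
            break
        state[topic] = value
    return state
```

At 40 s, for example, the replay yields lamp A on, lamp B off, the fan at Medium and the display on showing the Marvel video.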

| Video | Resolution (px) | Data Rate (kbps) | Total Bit Rate (kbps) | Frame Rate (fps) |
|---|---|---|---|---|
| Marvel.mp4 | 1280 × 720 | 1208 | 1334 | 30 |
| DC.mp4 | 1280 × 720 | 1942 | 2069 | 24 |
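The two rate columns differ by 126 kbps (Marvel) and 127 kbps (DC). Assuming, as in the file properties reported by Windows, that the data rate refers to the video stream alone and the total bit rate also includes audio, that difference would be the audio bit rate. The sketch below performs this arithmetic and estimates how much of each clip is delivered during the 3D schedule, assuming DC plays continuously from 1 s to 30 s (29 s) and Marvel from 30 s to 60 s (30 s); the function names are hypothetical.

```python
# Bit rates from the table, in kbps (1 kbps = 1000 bit/s).
VIDEOS = {
    "Marvel.mp4": {"data_kbps": 1208, "total_kbps": 1334, "fps": 30},
    "DC.mp4": {"data_kbps": 1942, "total_kbps": 2069, "fps": 24},
}


def non_video_kbps(name: str) -> int:
    """Total bit rate minus data rate; assumed here to be the audio stream."""
    v = VIDEOS[name]
    return v["total_kbps"] - v["data_kbps"]


def megabytes_for(name: str, seconds: float) -> float:
    """Volume in MB (10^6 bytes) delivered in `seconds` of playback at the total bit rate."""
    return VIDEOS[name]["total_kbps"] * 1000 * seconds / 8 / 1e6


for clip, seconds in (("DC.mp4", 29), ("Marvel.mp4", 30)):
    print(f"{clip}: {non_video_kbps(clip)} kbps non-video, {megabytes_for(clip, seconds):.2f} MB")
```

Under these assumptions, the schedule moves roughly 7.5 MB of DC footage and 5.0 MB of Marvel footage.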


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Adão, T.; Pinho, T.; Pádua, L.; Magalhães, L.G.; Sousa, J.J.; Peres, E. Prototyping IoT-Based Virtual Environments: An Approach toward the Sustainable Remote Management of Distributed Mulsemedia Setups. Appl. Sci. 2021, 11, 8854. https://doi.org/10.3390/app11198854
