Concealed Object Detection and Recognition System Based on Millimeter Wave FMCW Radar

Abstract

1. Introduction

2. Materials and Methods

2.1. Test Object Distance

2.2. Synthetic Aperture Radar (SAR) and Multiple-Input Multiple-Output (MIMO) Radar Antennas Technique

2.2.1. Radar Enabled Three Transmitting Antennas and Four Receiving Antennas

2.2.2. Actual Measurement Parameter Setting

2.2.3. Image Resolution

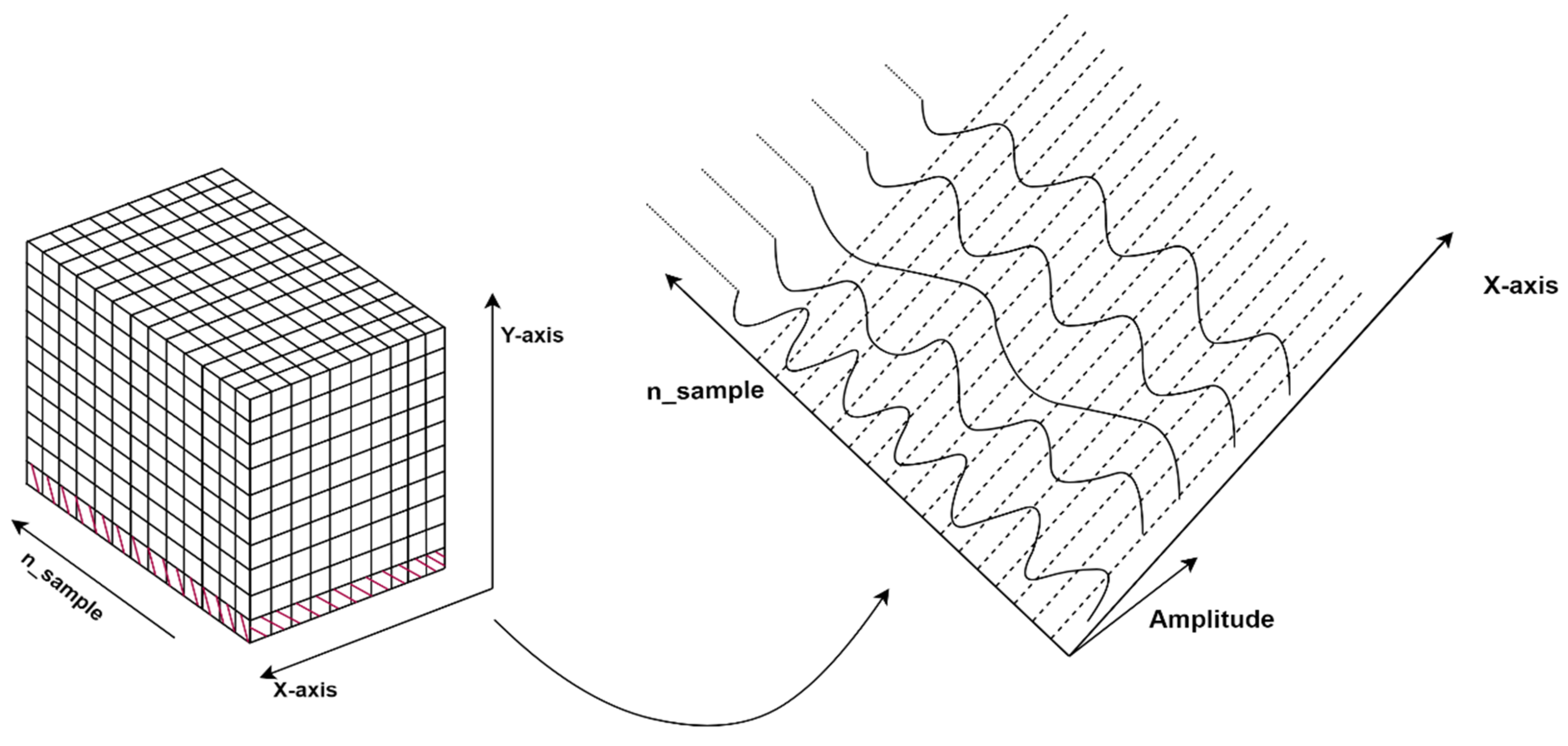

2.2.4. Building 3D Data Block

2.2.5. Reconstruction Image

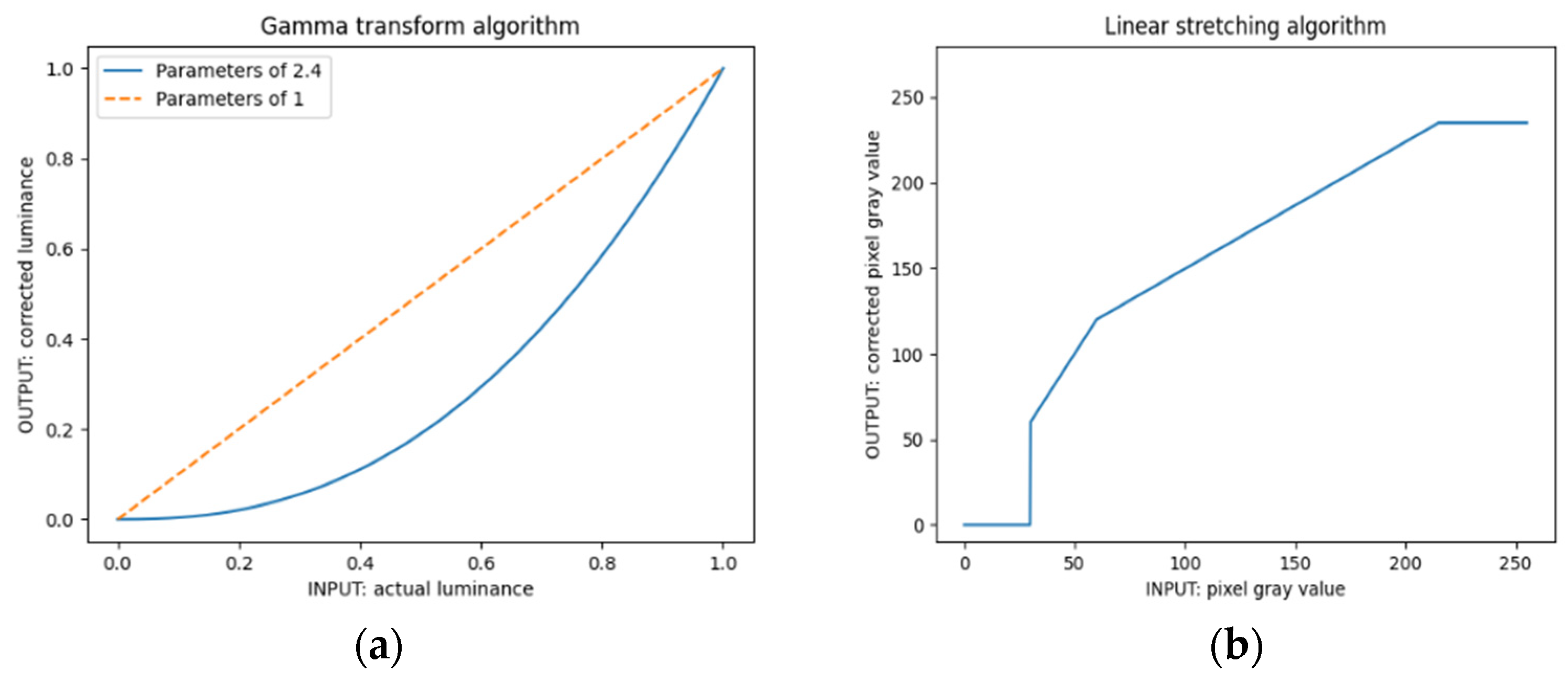

2.3. Image Preprocessing

2.4. SAR Image Recognition Algorithm

2.4.1. Lightweight Convolutional Neural Networks

- ShuffleNetV2

- MobileNetV3

- GhostNet

2.4.2. Two Optimization Algorithms of Attention Mechanism

- GhostNet_SEResNet56

3. Results and Discussion

4. Conclusions

- By using the MIMO-SAR radar, the aperture of the radar antenna is expanded to 90 mm in the X-axis direction. Eight virtual channels are established in the Y-direction, which widens the longitudinal aperture covered in each transverse scan. The image resolution reaches 1.90 mm in the X-direction and 1.73 mm in the Y-direction when the object is 90 mm away from the radar. The MIMO-SAR imaging system effectively reduces the scanning time and the system cost while improving the image resolution.

- A gamma transform with a coefficient of 2.4 and linear stretch processing are applied to the SAR images to remove the noise caused by distance error and to improve visual recognition, which lays a good foundation for the subsequent supervised learning network.

- The lightweight convolutional neural networks are small and occupy few resources, but their prediction accuracy is not high. After optimization with the SE and SK attention mechanisms, the prediction accuracy improves at the cost of a small increase in resource occupancy. Considering prediction accuracy, computational complexity (Madd, FLOPs) and memory occupation (MemR + W, parameters) together, GhostNet_SEResNet56 is the optimal prediction algorithm for the SAR data set: its prediction accuracy on the validation set is 98.18%, its computational complexity is 134.55 MMadd and 67.81 MFLOPs, and its memory occupation is 18.98 MB (MemR + W) with 0.45 M parameters.
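
The preprocessing named in the second conclusion can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the SAR image is a non-negative NumPy array, that the gamma transform has the power-law form s = r^γ with γ = 2.4 applied to magnitudes normalised to [0, 1], and that the linear stretch is a min-max rescaling to the 8-bit range. The function name `gamma_and_stretch` and the order of the normalisation steps are hypothetical.

```python
import numpy as np


def gamma_and_stretch(image, gamma=2.4, out_max=255.0):
    """Apply a gamma transform, then a min-max linear stretch.

    `image` is assumed to hold non-negative magnitudes; the result is a
    float array scaled to [0, out_max].
    """
    img = np.asarray(image, dtype=np.float64)
    peak = img.max()
    if peak <= 0:
        return np.zeros_like(img)          # empty scene: nothing to enhance
    normalised = img / peak                # map magnitudes to [0, 1]
    transformed = normalised ** gamma      # gamma > 1 pushes weak returns towards 0
    lo, hi = transformed.min(), transformed.max()
    if hi == lo:
        return np.zeros_like(img)          # flat image: stretch is undefined
    return (transformed - lo) / (hi - lo) * out_max


# Toy 2 x 2 "image": weak background values next to one strong reflector.
demo = np.array([[0.0, 0.1], [0.5, 1.0]])
stretched = gamma_and_stretch(demo)
```

With an exponent above 1, low-magnitude pixels are compressed towards zero while the strongest reflector keeps full scale, which is the kind of background suppression the conclusion describes.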

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Target Distance | 0.35 m | 0.5 m | 0.75 m |
|---|---|---|---|
| First round measurement | 0.36 m | 0.51 m | 0.76 m |
| | 0.34 m | 0.51 m | 0.76 m |
| | 0.35 m | 0.52 m | 0.75 m |
| Second round measurement | 0.34 m | 0.52 m | 0.75 m |
| | 0.35 m | 0.49 m | 0.76 m |
| | 0.35 m | 0.51 m | 0.74 m |
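
The ranging table can be summarised as an error per target distance. The sketch below is only an illustration of that arithmetic: the readings are transcribed from the table (with the fifth 0.5 m reading taken as 0.49 m), and the function name and the choice of mean error and maximum absolute error as summary statistics are assumptions, not something the source specifies.

```python
from statistics import mean

# Measured distances in metres, keyed by the true target distance.
# Each list holds the three first-round and three second-round readings.
measurements = {
    0.35: [0.36, 0.34, 0.35, 0.34, 0.35, 0.35],
    0.50: [0.51, 0.51, 0.52, 0.52, 0.49, 0.51],
    0.75: [0.76, 0.76, 0.75, 0.75, 0.76, 0.74],
}


def range_error_summary(data):
    """Return the mean error and maximum absolute error for each target distance."""
    summary = {}
    for target, readings in data.items():
        errors = [reading - target for reading in readings]
        summary[target] = {
            "mean_error_m": mean(errors),
            "max_abs_error_m": max(abs(e) for e in errors),
        }
    return summary


summary = range_error_summary(measurements)
for target, stats in summary.items():
    print(
        f"{target:.2f} m: mean error {stats['mean_error_m'] * 1000:+.1f} mm, "
        f"max |error| {stats['max_abs_error_m'] * 1000:.1f} mm"
    )
```

On these readings no measurement deviates from its target distance by more than 2 cm.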

| Parameter Name | Value/Unit |
|---|---|
| Num_horizontalScan | 180 points |
| Num_longitudinalScan | 13 points |
| Horizontal_scanSize_mm | 90 mm |
| Longitudinal_scanSize_mm | 98.67 mm |
| Horizontal_stepSize_mm | 0.500 mm |
| Longitudinal_stepSize_mm | 7.590 mm |
| Platform_Speed_mmps | 20 mm/s |
| Z0 | 90 mm |
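
The scan parameters are mutually consistent, and together with the target distance Z0 they reproduce the resolution figures quoted in the conclusions. The sketch below checks this under two stated assumptions that are not in the table: the common near-field SAR approximation for cross-range resolution, δ = λ·z0 / (2·D) with D the synthetic aperture length, and a centre frequency of 79 GHz, chosen here because it reproduces the quoted 1.90 mm and 1.73 mm. All function and variable names are hypothetical.

```python
C_MM_PER_S = 299_792_458_000.0  # speed of light in mm/s


def wavelength_mm(freq_hz):
    """Free-space wavelength in millimetres."""
    return C_MM_PER_S / freq_hz


def cross_range_resolution_mm(wavelength, distance, aperture):
    """Approximate cross-range resolution: lambda * z0 / (2 * D), all in mm."""
    return wavelength * distance / (2.0 * aperture)


# Values taken from the scan-parameter table.
horizontal_points, horizontal_step_mm = 180, 0.500
longitudinal_points, longitudinal_step_mm = 13, 7.590
z0_mm = 90.0
platform_speed_mmps = 20.0

# Aperture length = number of scan points x step size.
horizontal_aperture_mm = horizontal_points * horizontal_step_mm        # 90 mm
longitudinal_aperture_mm = longitudinal_points * longitudinal_step_mm  # about 98.67 mm

lam = wavelength_mm(79e9)  # ASSUMED centre frequency, not stated in the table
res_x_mm = cross_range_resolution_mm(lam, z0_mm, horizontal_aperture_mm)
res_y_mm = cross_range_resolution_mm(lam, z0_mm, longitudinal_aperture_mm)

# Time for one horizontal sweep at the stated platform speed.
sweep_time_s = horizontal_aperture_mm / platform_speed_mmps

print(f"X resolution: {res_x_mm:.2f} mm, Y resolution: {res_y_mm:.2f} mm")
print(f"One horizontal sweep: {sweep_time_s:.1f} s")
```

The step counts multiply out to the tabulated apertures (180 × 0.5 mm = 90 mm, 13 × 7.59 mm = 98.67 mm), and one horizontal sweep at 20 mm/s takes 4.5 s, excluding any time spent repositioning between sweeps.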

| Parameter | Value |
|---|---|
| RxToEnable | [1,2,3,4] |
| TxToEnable | [1,2,3] |
| Slope_MHzperus | 70.295 |
| Samples_per_Chirp | 256 |
| Sampling_Rate_ksps | 5000 |
| Num_Frames | 1 |
| Chirps_per_Frame | 1 |
| Frame_Repetition_Period_ms | 25.000 |
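
The chirp settings determine the sampled bandwidth and therefore the range resolution. The sketch below derives both from the table using the standard FMCW relations B = S·N/fs, ΔR = c/(2B) and f_b = 2·R·S/c. The function names are hypothetical, and the 90 mm example range is borrowed from the Z0 entry of the scan-parameter table as an illustration only.

```python
C = 299_792_458.0  # speed of light in m/s

# Values taken from the chirp-parameter table, converted to SI units.
slope_hz_per_s = 70.295e6 / 1e-6   # 70.295 MHz/us
samples_per_chirp = 256
sampling_rate_hz = 5000e3          # 5000 ksps


def sampled_bandwidth_hz(slope, n_samples, fs):
    """Bandwidth swept while the ADC is sampling: slope x sampling window."""
    return slope * n_samples / fs


def range_resolution_m(bandwidth_hz):
    """FMCW range resolution c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def beat_frequency_hz(range_m, slope):
    """Beat frequency produced by a point target at the given range."""
    return 2.0 * range_m * slope / C


bandwidth = sampled_bandwidth_hz(slope_hz_per_s, samples_per_chirp, sampling_rate_hz)
delta_r = range_resolution_m(bandwidth)
f_beat = beat_frequency_hz(0.090, slope_hz_per_s)  # example target at 90 mm

print(f"Sampled bandwidth: {bandwidth / 1e9:.3f} GHz")
print(f"Range resolution:  {delta_r * 100:.2f} cm")
print(f"Beat frequency at 90 mm: {f_beat / 1e3:.1f} kHz")
```

With 256 samples at 5000 ksps the sampling window is 51.2 µs, so the sampled bandwidth comes to roughly 3.6 GHz and the range resolution to a little over 4 cm.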

| Object Recognition Algorithm | Accuracy (Valid) | Madd (MMadd) | Parameters (M) | FLOPs (MFLOPs) | MemR + W (MB) |
|---|---|---|---|---|---|
| ShuffleNetV2 | 84.55% | 284.89 | 1.26 | 144.16 | 47.32 |
| ShuffleNetV2_SE | 87.27% | 285.15 | 1.40 | 144.30 | 47.85 |
| ShuffleNetV2_SK | 89.09% | 293.94 | 1.69 | 148.85 | 56.51 |
| MobileNetV3(SE) | 96.36% | 115.41 | 1.24 | 58.60 | 30.93 |
| MobileNetV3_SK | 98.18% | 119.98 | 1.30 | 60.99 | 56.51 |
| GhostNet_ResNet56 | 95.45% | 134.54 | 0.44 | 67.80 | 18.95 |
| GhostNet_SEResNet56 | 98.18% | 134.55 | 0.45 | 67.81 | 18.98 |
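
The trade-off in this comparison can be made explicit with a small ranking sketch. The data are transcribed from the table. The selection rule (highest validation accuracy first, then lowest memory traffic, then fewest parameters) is an assumption made for illustration and is not stated as the authors' procedure. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelStats:
    name: str
    accuracy_pct: float   # validation accuracy
    madd_m: float         # multiply-adds, millions
    params_m: float       # parameters, millions
    flops_m: float        # FLOPs, millions
    mem_rw_mb: float      # memory read + write, MB


MODELS = [
    ModelStats("ShuffleNetV2", 84.55, 284.89, 1.26, 144.16, 47.32),
    ModelStats("ShuffleNetV2_SE", 87.27, 285.15, 1.40, 144.30, 47.85),
    ModelStats("ShuffleNetV2_SK", 89.09, 293.94, 1.69, 148.85, 56.51),
    ModelStats("MobileNetV3(SE)", 96.36, 115.41, 1.24, 58.60, 30.93),
    ModelStats("MobileNetV3_SK", 98.18, 119.98, 1.30, 60.99, 56.51),
    ModelStats("GhostNet_ResNet56", 95.45, 134.54, 0.44, 67.80, 18.95),
    ModelStats("GhostNet_SEResNet56", 98.18, 134.55, 0.45, 67.81, 18.98),
]


def rank_models(models):
    """Sort by accuracy (descending), then memory traffic and parameters (ascending)."""
    return sorted(models, key=lambda m: (-m.accuracy_pct, m.mem_rw_mb, m.params_m))


ranking = rank_models(MODELS)
best = ranking[0]
print(f"Selected: {best.name} ({best.accuracy_pct}% accuracy, "
      f"{best.mem_rw_mb} MB MemR + W, {best.params_m} M parameters)")
```

GhostNet_SEResNet56 and MobileNetV3_SK tie on accuracy at 98.18%, so the tie-break decides the outcome: the memory-first rule used here selects GhostNet_SEResNet56, whereas a rule that put Madd or FLOPs first would favour MobileNetV3_SK.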

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Zhang, K.; Sun, Z.; Wu, Q.; He, W.; Wang, H. Concealed Object Detection and Recognition System Based on Millimeter Wave FMCW Radar. Appl. Sci. 2021, 11, 8926. https://doi.org/10.3390/app11198926
