1. Introduction

With the considerable increase in the number of patients with chronic conditions, disabilities or impaired mobility, virtual rehabilitation stands out as a modern alternative for treating these patients through virtual reality, augmented reality and motion capture technologies. In this type of rehabilitation, patients are placed in a virtual environment in which they perform the exercises prescribed for their treatment.

In addition, the COVID-19 pandemic, caused by the SARS-CoV-2 virus, has triggered an unprecedented crisis in all areas, with the health sector among those that have suffered the most. Understandably, many healthcare providers are now more focused on attending to urgent and life-threatening health needs.

One of the main measures taken worldwide in response to the pandemic has been the reduction of face-to-face activities at all levels, which has given rise to three main fields of action: implementation of online or remote consultation, using different platforms (technological or nontechnological) and formats; mobilization and support of health personnel; and attention to the health and well-being of patients.

In this context, the use of technologies that allow telecare, including rehabilitation treatments, is offering a safe alternative, and in many cases, the only possible one.

On the other hand, given patients’ lack of motivation to complete treatment at home, a new methodology based on gamification has begun to be used. Adding an element of entertainment through gamification can stimulate patients to perform the activities while playing a game. Games of this type that include physical activity are called “exergames” [1,2,3].

This new methodology makes use of exergames to increase the patient’s motivation to perform telerehabilitation exercises. Exergames aim to stimulate the mobility of the body through interactive environments with immersive experiences that simulate different sensations of presence and require physical effort to play [4]. Research indicates that they can produce improvements in fitness [5], posture [6], cognitive function [7], balance [8] and other nonphysical effects [9]. The fact that exergames are presented as games and gamify tasks gives them extra potential to increase motivation and, therefore, patients’ adherence to exercise or rehabilitation programs. Consequently, diverse exergames have been successfully applied to medical conditions such as stroke [10], autism [11] or motor function impairments [12,13], among many others.

There are different examples in the literature of the use of exergames in telerehabilitation [14]. Some of them have adopted video game console tools and technologies to monitor patient movements. Examples include [15,16,17], which use the Microsoft Kinect sensor [18] of the Xbox console. This sensor tracks the movements of the patient in 3D space, allowing the patient to interact with the application directly with their body, without additional controllers. The works in [19,20,21] use the Wii Remote or the Wii Balance Board of the Nintendo Wii console, although in these cases the patient must use control devices. In [22,23,24], virtual reality systems are used with promising results in rehabilitation; although these technologies differ from those proposed here, they are of interest to this work because they make use of gamification and vision systems. These referenced works required sensors such as LeapMotion, VR glasses (for example, Oculus Quest) and other complex sensors to visualize and track movements.

In recent years, these methodologies have been complemented with other technologies such as augmented reality. Augmented reality (AR) is the term used to describe the set of technologies that allow a user to view part of the real world through a technological device that adds graphic information to it. The device, or set of devices, adds virtual information to the existing physical information (that is, a virtual part appears in reality). In this way, tangible physical elements are combined with virtual elements, creating an augmented reality in real time. These technologies can be used in different formats and with various devices, from mobile phones to complex sensors [25].

However, most low-cost exergames, such as those using smartphones, promote healthy lifestyles rather than specific exercises [26]. Other research works are more in line with the proposal of this paper, in that they apply augmented reality mirrors. Sleeve AR [17] provides a platform for exercising the upper extremities, in which the patient is guided in real time by AR information projected on the patient’s arm and on the floor. Physio@Home [27] displays visual objects such as arrows and arm traces to guide patients through exercises and movements so that they can perform physical therapy exercises at home without a therapist present. Finally, the NeuroR [28] system applies an augmented reality mirror to exercise the upper limbs. The system overlays a virtual arm on the patient’s body and uses an electromyography device to control its movement. The problem with these approaches is that they need sensors, devices and technologies that are not easily accessible in a middle-class home.

Other proposals [29,30,31] use mobile devices such as smartphones or tablets, but most of them are intended as exergames for outdoor activities and were not designed with telerehabilitation in mind. Therefore, we think that the alternative is to develop exergames that allow patient movements to be monitored, especially indoors, and that are as accessible as possible. The most accessible option is to estimate the human pose using a single RGB camera.

Current advances in computer vision techniques and learning methods based on convolutional neural networks (CNNs) have improved the performance of human pose estimation and have therefore led to growing interest in it. Existing works can be classified according to the dimensionality of the resulting human pose; this work focuses on 2D pose detection, for which several libraries exist [32]. Among the most popular bottom-up approaches for estimating the pose of multiple people, several open-source and deep-learning-based tools stand out, among which we highlight the OpenPose library [33], DensePose [34] and the image recognition software wrnchAI [35]. As with many bottom-up approaches, OpenPose first detects parts (key points) that belong to each person in the image and then assigns parts to different individuals. WrnchAI is a high-performance deep-learning runtime engine, engineered to extract human motion and behavior from standard video. DensePose proposes DensePose-RCNN, a variant of Mask-RCNN that can densely return the UV coordinates of specific parts in each region of the human body at multiple frames per second. In [36], a recent comparison of the alternatives is presented, in which the compared options cover the needs and requirements of ExerCam. After testing the proposals, we finally decided to use OpenPose in our work, given its ease of integration with our project. What especially led us to opt for OpenPose is that it is an open-source library with a free license, whereas wrnchAI requires an annual license of USD 5000.

In this paper, we present ExerCam, an augmented reality mirror application based on 2D body pose detection. ExerCam superimposes augmented information on the video in real time. In this way, patients can see on the screen a virtual skeleton over their body, in which the areas to be treated are highlighted. Additionally, virtual objects are superimposed on the video (objects to hit, decoration, instructions, user interface, etc.), which allows a gamified scene to be created. ExerCam does not need any special controller or sensor, as it works with a simple webcam-type RGB camera, which makes the application accessible and low cost. Therefore, the contribution of our work is the presentation of ExerCam as a low-cost system based on 2D vision that could be an alternative tool for telerehabilitation therapies. A study with healthy subjects was conducted to assess the technical viability and usability of the developed application.

The paper is organized as follows: Section 2 explains the ExerCam application developed in depth. In Section 3, the results obtained are presented and discussed, highlighting the validity of the use of ExerCam as a tool for measuring the range of motion (ROM), necessary to be able to perform an evaluation of the evolution of the patients. Finally, Section 4 presents our conclusions.

2. Materials and Methods

For the purpose of this research, the ExerCam application was developed. The main objective of this system is to stimulate patients to perform the therapy prescribed by specialists. In addition, we want to offer specialists (doctors, therapists) a tool to track the progress of their patients.

ExerCam is designed as an application for telerehabilitation-based exergames. For this, we make use of the augmented reality mirror-type technology based on 2D body pose detection. The system, as seen in Figure 1, makes use of an RGB camera (for example, webcam type) installed in the patient’s computer. This camera provides the necessary video input to be able to apply techniques of human pose estimation and augmented reality performed in the ExerPlayer module, which allows the gamified scene to be created. ExerPlayer will show the video on the patient’s screen, along with the different virtual elements of augmented reality, the main element being a virtual skeleton superimposed on the patient’s body. Depending on the mode of use (calibration, game or task), other virtual objects (objects to hit, decoration, instructions, user interface, etc.) are also superimposed on the video. All the information about the results of the tasks, exercises and patient data is stored in a database on the cloud, together with the information of the tasks and exercises prescribed by the therapist, which will allow the information to be available and updated in both the ExerPlayer and ExerWeb modules. At the other end of the system is the ExerWeb module, where thanks to its web interface and the different algorithms implemented, the therapist can monitor the patient’s evolution from a distance and set new types of exercises or tasks.

In the following subsections, the libraries and modules used in the ExerCam architecture are explained in greater detail.

2.1. OpenPose 2D Library

As previously mentioned, the application is built on the OpenPose library [37], which performs 2D human pose estimation. In [38], an online, real-time multiperson 2D pose estimator and tracker is proposed. Figure 2 shows the network structure, where the part affinity fields (PAFs) and confidence maps are predicted iteratively. The PAFs predicted in the first stage are represented by L^t, and the confidence maps predicted in the second stage are represented by S^t. To achieve this, OpenPose uses a two-step process (see Figure 2).

First, it extracts features from the image using a VGG-19 model and passes those features through a pair of convolutional neural networks (CNNs) running in parallel. One CNN of the pair calculates confidence maps (S) to detect body parts. The other calculates a set of 2D vector fields called part affinity fields (L), which are used to combine the parts into the skeleton of each person’s pose. These parallel branches can be repeated many times to refine the PAF and confidence map predictions. Then, part confidence maps (S) and part affinity fields (L) are:
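For reference, in the notation used by the OpenPose authors [38], the two sets are usually written as follows. The symbols J (number of body parts), C (number of limbs) and w × h (image size) come from that formulation and are assumptions here, since they are not defined in the text above:

$$
S = (S_1, S_2, \dots, S_J), \qquad S_j \in \mathbb{R}^{w \times h}, \quad j \in \{1, \dots, J\}
$$

$$
L = (L_1, L_2, \dots, L_C), \qquad L_c \in \mathbb{R}^{w \times h \times 2}, \quad c \in \{1, \dots, C\}
$$

Each S_j is a per-pixel confidence that body part j is present, and each L_c stores a 2D vector per pixel that encodes the direction of limb c.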

2.2. ExerPlayer Module

ExerPlayer runs as a desktop application, installed on the patient’s computer. The central component of the ExerPlayer module is the OpenPose library, which allows the real-time human pose estimation to be obtained through the patient’s webcam. ExerPlayer makes use of the REST API to transmit and receive data regarding the tasks and exercises of patients. This module allows the patient to perform exercises from home thanks to the application developed as an augmented reality mirror type, where the patient is reflected on the screen, along with other virtual objects. In this way, it provides the means to stimulate physical activity, by inducing movement, or gross motor learning, as it is able to provide the three key principles in motor learning: repetition, feedback and motivation [39]. ExerPlayer has three types of virtual objects (see Figure 3a):

To access the ExerPlayer module, the patient must be registered in the system and enter their credentials. Once the application is accessed, the patient has several modes of use: (1) calibration mode, (2) task mode and (3) game mode.

2.2.1. ExerPlayer Calibration Mode

One of the main problems in vision systems is usually due to changes in the conditions of the environment (lighting, distances, camera orientation, etc.). For ExerCam, these problems are taken into account, and that is why in each session, both for performing tasks and for playing games, a previous calibration process should be carried out. ExerCam automatically launches an obligatory static calibration before executing a task or game.

In calibration mode, a static posture evaluation (SPE) [42] is performed for the patient. For this, the patient must adopt the neutral or zero position (looking forward, arms hanging at the sides of the body, thumbs directed forward and lower limbs side by side with knees in full extension), which allows the application to calibrate the exact measurements and characteristics of the patient. Mainly, the system records the distances between joints (the connection between two joints is known as a pair or limb) and possible malformations of the patient (for example, missing extremities). This information is sent in JavaScript Object Notation (JSON) format to the DB ExerCam database via the REpresentational State Transfer (REST) API [43].
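As an illustration of the calibration record described above, the following Python sketch computes limb lengths from 2D key points and serializes them to JSON. The key-point names, the limb list, the patient identifier and the JSON field names are all hypothetical and chosen only for this example; the actual ExerCam payload is not specified in the text.

```python
import json
import math

# Hypothetical limb definitions: each limb (pair) connects two named joints.
LIMBS = [("left_shoulder", "left_elbow"), ("left_elbow", "left_wrist")]


def limb_lengths(keypoints, limbs=LIMBS):
    """Return the pixel length of each limb, or None if a joint was not detected."""
    lengths = {}
    for a, b in limbs:
        pa, pb = keypoints.get(a), keypoints.get(b)
        if pa is None or pb is None:
            # A joint is missing (for example, occlusion or a missing extremity).
            lengths[f"{a}-{b}"] = None
        else:
            lengths[f"{a}-{b}"] = math.dist(pa, pb)
    return lengths


def calibration_payload(patient_id, keypoints):
    """Encode the calibration measurements as a JSON string (assumed field names)."""
    return json.dumps({"patient_id": patient_id, "limbs": limb_lengths(keypoints)})


# Example neutral pose in pixel coordinates; the wrist is undetected on purpose.
neutral_pose = {
    "left_shoulder": (320.0, 200.0),
    "left_elbow": (320.0, 300.0),
    "left_wrist": None,
}
payload = calibration_payload("patient-001", neutral_pose)
```

Recording a missing joint as `None` rather than dropping it keeps the record explicit about which limbs could not be measured in that session.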

To simplify the calibration, the application has visual aids to help patients place themselves in the right position and make sure that the camera is oriented in the right direction (see Figure 3a). This aid consists of a rectangle on the screen. Patients can place their body inside the rectangle, and in the case that patients move from their original position during the exercise, they can move back to it. In this way, the camera will have a different distance for each patient. It is also recommended that the camera is oriented orthogonally to the patient, although the system works correctly even with the camera oriented with a certain inclination angle. Once the system is calibrated and a task or game session is started, the camera orientation cannot be changed, neither can the distance between the patient and the camera (in the initial or resting position, during the game, they can move). For future works, a dynamic calibration is proposed, as opposed to static calibration, to avoid possible maladjustment in the measurements in the case of changes in the environment.

2.2.2. ExerPlayer Task Mode

The objective of the task mode is to provide the therapist with a mechanism to evaluate the range of motion (ROM) of specific joints and the reaction time of each patient. Additionally, it can be used as a goniometer. The task consists of performing a specific movement previously prescribed by the therapist (Figure 4 shows an example of the shoulder abduction task); for this, the application gives visual support through a band of targets and the virtual skeleton (see Figure 4a,b). The data of the tasks prescribed by the therapist are received through the REST API over HTTP: a GET request returns the exact positions of all the targets of the exercise.

The targets and their position are automatically generated by the target location algorithm (TLA). The TLA algorithm developed for ExerCam calculates the exact position where the targets should be and ensures that the movement performed is correct. The TLA algorithm adapts the distance and location of the targets to each patient and each session, taking into account the main joint of the task and the parameters collected in the calibration mode. The number of targets to be displayed is set by a parameter configurable by the therapist.

For the development of the TLA algorithm, the movements of the articulations included in the tasks were studied in depth. What all the tasks have in common is that joint movements describe a circular movement so that the targets will form a semicircle by taking as a central point the joint to be measured. In this sense, the placement algorithm will require a central point (it will be the main articulation of the task), a radius (distance between joints) and a start and end angle.

Table 1 shows, for each of the tasks implemented in ExerPlayer, the maximum and minimum values assigned for each joint. These values were obtained from theoretical values marked by the articular scales established in the R.D. 1971/1999 (based on the American Medical Association guide) [44]. As an aid, the TLA algorithm sets the initial position of the main joint of the task and shows a target of the color of the corresponding main joint (see Figure 4a). In this way, the patient can be positioned correctly.

For the example of the left shoulder abduction task, the TLA algorithm takes the patient’s shoulder joint (Center 2) as its central point and shows the shoulder target in the center of the positioning zone (see Figure 4a). For other joints, the target is drawn in other positions. The algorithm calculates the value of the radius r, as shown in Equation (3), where the radius is equal to the distance between the shoulder joint, represented by C(h,k), and the wrist, represented by P(h, k). The joints involved in each task are reflected in the Radius column of Table 1.

Once the radius is obtained, the incremental method of drawing a circle [45] is applied: given a constant angular step selected by the therapist (dθ), the coordinates of the centers of the targets are obtained incrementally. The calculation of two consecutive center points is shown in Equation (4).
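Equations (3) and (4) are not reproduced in this text. As a hedged illustration only, the Python sketch below assumes that the radius is the Euclidean distance between the main joint and the distal joint, and that consecutive target centers are obtained with the standard rotation recurrence of the incremental circle method. The function names and the angle convention (degrees, measured in a mathematical frame around the center) are assumptions of this example, not the authors’ implementation.

```python
import math


def radius(center, point):
    """Euclidean distance between the main joint (center) and the distal joint."""
    return math.hypot(point[0] - center[0], point[1] - center[1])


def target_centers(center, r, start_deg, end_deg, step_deg):
    """Place target centers on an arc by repeatedly rotating by a constant angle."""
    h, k = center
    d_theta = math.radians(step_deg)
    cos_d, sin_d = math.cos(d_theta), math.sin(d_theta)
    # Offset of the first target relative to the center of the arc.
    x = r * math.cos(math.radians(start_deg))
    y = r * math.sin(math.radians(start_deg))
    n_targets = int(round((end_deg - start_deg) / step_deg)) + 1
    centers = []
    for _ in range(n_targets):
        centers.append((h + x, k + y))
        # Incremental step: rotate the current offset by d_theta.
        x, y = x * cos_d - y * sin_d, x * sin_d + y * cos_d
    return centers


# Example: shoulder and wrist in pixel coordinates, targets every 10 degrees.
shoulder = (320.0, 200.0)
wrist = (320.0, 380.0)
arc = target_centers(shoulder, radius(shoulder, wrist), 0.0, 180.0, 10.0)
```

The incremental form evaluates the sine and cosine of the step once and reuses them for every target, which is the usual motivation for this method. In image coordinates the y axis points down, so an actual implementation would need to choose the sign of the rotation accordingly.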

Once the task is completed, the application generates a JSON file with all the data obtained (patient data, task data, time spent, targets reached, coordinates of the joints involved in the task). This file is sent via the REST API to the DB ExerCam database and is used later by the evaluation algorithm to show the results of the tasks (ROM calculation) to the therapist. The evaluation algorithm and the ROM calculation are explained in Section 2.3.1.

2.2.3. ExerPlayer Game Mode

As already mentioned, the use of rehabilitative devices and telerehabilitation reduces the cost of rehabilitation. However, patient motivation is critical for efficient recovery [46]. This is why ExerCam offers a game-based telerehabilitation service.

In the game mode, the patient accesses their section of games planned and customized by the therapist. A game is based on an augmented reality session, where virtual targets will appear strategically placed in the scene. The game mode executes the gamified activities, aimed at the realization of concrete movements and with a number of repetitions established by the therapist, with the aim of working different motor functions. When the patient tries to reach or avoid the targets (depending on the game), the specific movements of their therapy are performed. The objective of the game mode is to improve balance (both standing and sitting), coordination, load transfers, range and alignment of movements, strengthen the nondominant side and hand–eye coordination, reaction time and muscle strengthening. To design these games, we followed the indications of the therapist and the guidelines for the design of rehabilitation activities outlined by the Commissioning Guidance for Rehabilitation of NHS England [47].

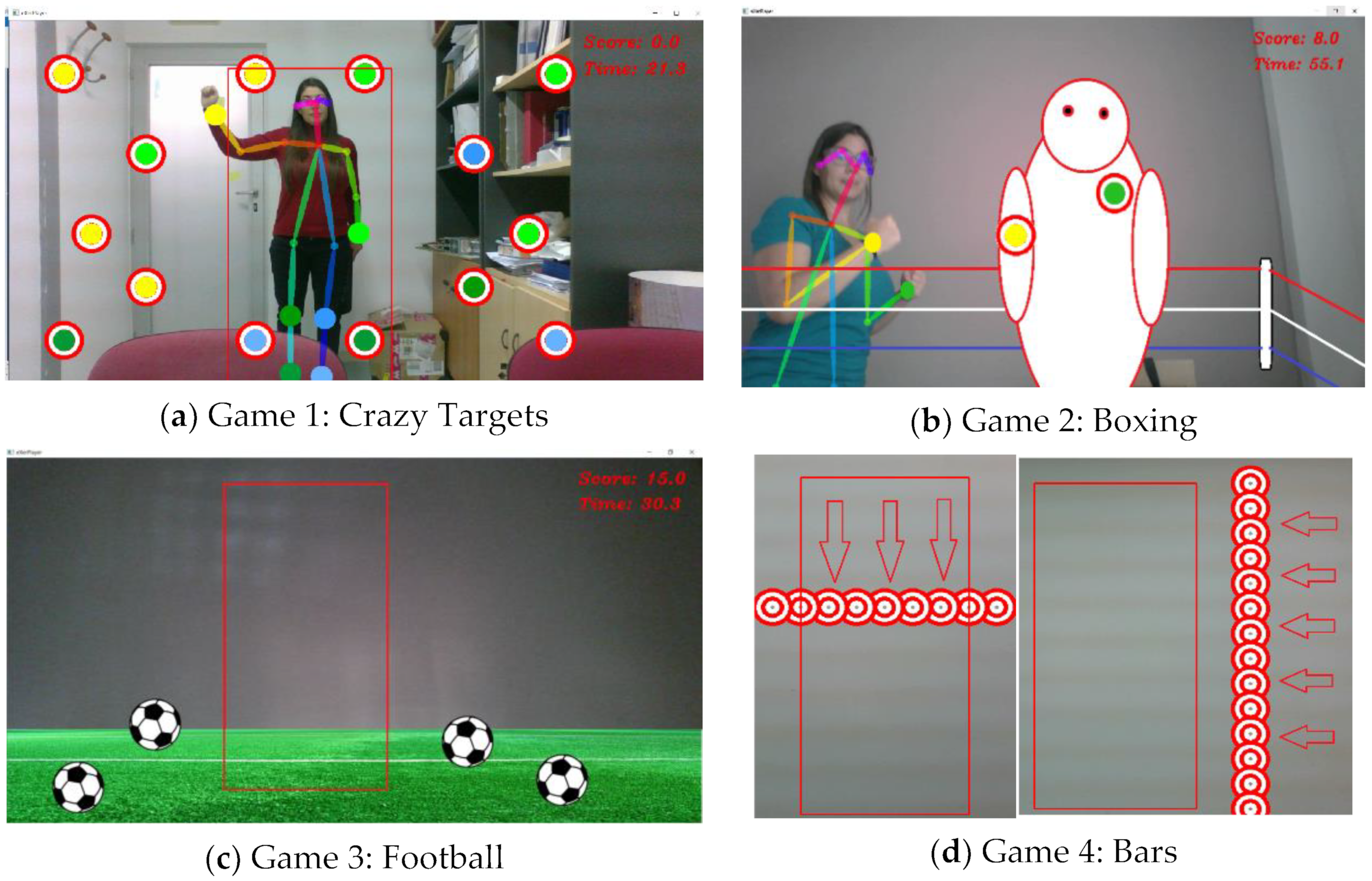

In game mode, as shown in Figure 5, four different games are available: two of them are oriented to the stimulation of the upper body and the other two to the stimulation of the lower body. The four games developed are the following:

Game 1: Crazy Targets. This is a very simple game in which the patient must touch the circular targets that appear on the scene. Although targets can be placed anywhere on the screen in this game, it is specially designed to stimulate the upper limbs. In addition, the game can be configured with different levels of difficulty, where the difficulty is set by applying movement to the targets (defining the trajectory and speed of the targets), specifying the joint with which the target must be reached (in this case the targets are shown in the same color as the assigned joint), limiting the lifetime of the target (the target disappears when it is hit or when it reaches the maximum lifetime) or limiting the time to complete the game.

Game 2: Boxing. This game is designed for stimulation of the upper extremities. In this game, the patient becomes a great boxer and must hit the key points of the opponent until the opponent is knocked out. The targets are static, and they appear in different positions, one by one, as they are hit. The level of difficulty can be increased by specifying the joint with which the target should be reached (in this case the targets are shown in the same color as the assigned joint), increasing the number of targets that appear at the same time, limiting the lifetime of the target or limiting the time to complete the game.

Game 3: Football. This game is designed for stimulation of the lower body in a football setting. In this game, the patient must "kick the ball." This game is similar to Game 1. The patient has to reach the targets that appear on the screen, but this time, the targets look like a soccer ball. As in Game 1, the targets can be configured to increase the level of difficulty by making the balls move, specifying the joint with which the target should be reached, limiting the lifetime of the target or limiting the time to complete the game.

Game 4: Bars. This game is designed for lower body stimulation. Unlike the other three games, in this game, the patient has to avoid being touched by the bars (formed by a set of aligned targets). The bars move around the screen, forcing the patient to make lateral displacements, jumps or squats. In this game, the difficulty can be increased by increasing the speed with which the bars move or by changing the ranges of the trajectories (start and end of the sweep performed by the bars).

For the placement of the targets in any of the four games, the therapist can select one of the predefined distributions for each game or can design a specific distribution for each prescribed session. After each game, a score is given so that the patient has immediate feedback about their performance.
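The target rules shared by the games (a target disappears when it is hit or when its lifetime expires, and it may require a specific joint) can be sketched as follows. This is a minimal Python illustration under stated assumptions: circular targets, a hit defined as a joint key point lying inside the circle, and hypothetical names (`Target`, `is_hit`, `update`) that do not come from ExerCam.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Target:
    x: float
    y: float
    radius: float
    lifetime: float               # seconds before the target disappears
    joint: Optional[str] = None   # joint required to hit it; None means any joint
    age: float = 0.0


def is_hit(target, keypoints):
    """True if an allowed, detected joint lies inside the target circle."""
    for name, pos in keypoints.items():
        if pos is None:
            continue  # joint not detected in this frame
        if target.joint is not None and name != target.joint:
            continue  # this target must be reached with another joint
        if math.dist(pos, (target.x, target.y)) <= target.radius:
            return True
    return False


def update(targets, keypoints, dt):
    """Advance one frame and return (remaining targets, number of hits)."""
    remaining, hits = [], 0
    for t in targets:
        t.age += dt
        if is_hit(t, keypoints):
            hits += 1             # the target disappears when it is hit
        elif t.age < t.lifetime:
            remaining.append(t)   # still alive and not yet reached
        # otherwise the target has expired and is dropped
    return remaining, hits
```

For an avoidance game such as Game 4, the same `is_hit` check could be counted as a penalty instead of a score; that choice is also an assumption of this sketch.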

It is important to note that in game mode the patient has greater freedom of movement: rather than perfectly repeating a certain movement, the idea is to encourage the exercise of a certain part of the body.

2.3. ExerWeb Module

The ExerWeb module provides a dynamic web interface (see Figure 6) from which the therapist can manage patients, prescribe tasks or games and consult or analyze the results remotely, from anywhere. Once the therapist is authenticated on the website, a top menu is accessible from any page. From this menu, the therapist can access the Therapist section and the enabled management mechanisms.

2.3.1. Patient Management

ExerWeb has an ExerCam Patient Management web page. This is the default page shown when a therapist enters the website (see Figure 7a). The Patient Management page offers a preview of the most relevant patient data in table format. This section includes links to create, edit and delete patients, access the results of tasks and games and prescribe tasks and games to the patient. Both the creation mechanisms and the prescription of tasks are managed through forms (see Figure 7b).

When the results of a patient are accessed, the history of tasks and games prescribed to that patient is shown (see Figure 7a,b). Each prescribed task or game has an associated results file, generated by the evaluation algorithm, that can be downloaded in different formats (txt, JSON or CSV). Likewise, two graphs (or fewer if there are not enough data) show the evolution of the last two tasks performed, and two graphs (or fewer if there are not enough data) show the evolution of the last two games performed.

The evaluation algorithm applied to the games consists of showing the degree of improvement set by the therapist when prescribing the game (time to overcome the activity, minimum necessary points, etc.). In the case of the tasks, the evaluation algorithm consists of calculating the ROM value.

For the calculation of the ROM value, two possible solutions were considered: (1) a first solution based on the targets reached and (2) a second solution based on the variation of joint coordinates. The operations carried out are the following:

For the example of shoulder abduction, the vectors involved correspond to those shown in Equations (9) and (10).
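The operations and Equations (9) and (10) are not reproduced in this text. The Python sketch below is therefore only a plausible reading of the two solutions, under explicit assumptions: for solution (1), the ROM is taken as the angular span covered by the targets reached; for solution (2), the ROM is taken as the angle between the limb vector at the start of the movement and the limb vector at maximum excursion, both drawn from the main joint. The function names are hypothetical.

```python
import math


def rom_from_targets(reached_angles_deg):
    """Solution (1), assumed form: angular span of the targets that were reached."""
    if not reached_angles_deg:
        return 0.0
    return float(max(reached_angles_deg) - min(reached_angles_deg))


def rom_from_coordinates(center, start_point, end_point):
    """Solution (2), assumed form: angle in degrees between two limb vectors."""
    ux, uy = start_point[0] - center[0], start_point[1] - center[1]
    vx, vy = end_point[0] - center[0], end_point[1] - center[1]
    dot = ux * vx + uy * vy
    cross = ux * vy - uy * vx
    # atan2 of |cross| and dot gives an unsigned angle in the range 0..180.
    return math.degrees(math.atan2(abs(cross), dot))


# Example for shoulder abduction in pixel coordinates (y grows downward):
shoulder = (320.0, 200.0)
wrist_start = (320.0, 380.0)   # arm hanging at the side
wrist_end = (500.0, 200.0)     # arm raised to the horizontal
rom = rom_from_coordinates(shoulder, wrist_start, wrist_end)
```

With targets, the result can only take values that are multiples of the angular step, whereas the coordinate-based value varies continuously, which is consistent with the precision difference discussed in the conclusions.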

2.3.2. Task Management

From the Task Management section, the therapist can design specific tasks to later prescribe them to patients. Both the creation of a new task and the prescription of tasks to patients are done through forms (see Figure 8b). When creating a new task, the therapist indicates the joint on which the task is carried out, the movement to be performed, the start angle, the end angle and the angular increment in degrees between targets. When prescribing a task, the therapist selects a task and a patient and indicates a deadline to complete the task, the maximum initial angle and the minimum final angle required to complete it. The result of each task prescribed to a patient can be consulted from the Patient Management section (see Figure 9b).

2.3.3. Game Management

Game management consists only of prescribing games to patients, as the system currently does not allow the creation of new games. When prescribing a game, the therapist selects one of the games and a patient, indicates a deadline to complete the game and customizes the game to the needs of the patient. A game can be customized by indicating the duration, repetitions, difficulty, number of targets and score needed to pass the game, and by adding a file with the positions in which the targets should appear.

Once exercises are performed by patients, ExerCam provides actionable information to therapists and patients through the detailed results. As with the tasks, the result of each game prescribed to a patient can be consulted from the Patient Management section (see Figure 9a).

On a patient’s results page, a list of the tasks prescribed to that patient is shown first, with an icon indicating whether each task has been passed (a task is considered successfully completed if the joint ROM exceeds the minimum set for that task). Following the list are up to two graphs with the historical results of the last two tasks. If the patient does not have results for two different tasks, a single graph is shown, and none is shown if there are no patient data. After the task results section, a section with the results of the games is shown, indicating in this case whether each game has been passed based on the score obtained.

4. Conclusions

This paper studies the feasibility of applying 2D body pose estimation to the development of augmented reality exergames. To that end, the ExerCam application was developed. It works as an augmented reality mirror that overlays virtual objects on images captured by a webcam. Compared with the other works discussed in Section 1, ExerCam offers a new telerehabilitation system for both patients and therapists. Patients can perform therapy from home using a laptop and carry out the tasks and exercises programmed by therapists. Therapists, in turn, can continuously and remotely evaluate the evolution of the patients. With a 3D system, patient information could be acquired and movements in game mode monitored more accurately, but a more complex system would then be needed. The novelty of the system resides in the use of a low-cost 2D vision system for human pose estimation to obtain an augmented reality mirror application. ExerCam can be run on a laptop (and, in the future, on a tablet) without advanced devices, which makes it accessible to most people.

On the other hand, it has been possible to adapt a series of exercises proposed with a gamified scene, which avoids the feeling of monotony and boredom of traditional rehabilitation and motivates the participants to continue performing therapy at home. Its use in specific medical conditions has not been studied, although the accuracy of the calculation of the ROM through ExerCam has been demonstrated. However, the application allows the creation of tasks that could promote physical exercise and gross motor skills.

As indicated in Section 3.1, in this first study, a distance of 10 degrees between targets was chosen for the tasks in task mode. This choice will be in the hands of the therapist who prescribes the tasks; however, for these first tests, 10 degrees was chosen because the maximum standardized error for ROM calculation applications is usually 12 degrees. In this way, the maximum error of the ROM calculated from the target positions in task mode is 10 degrees. If the distance between targets is decreased to 5 degrees, the maximum error in task mode with targets would be 5 degrees. It is important to note that the most precise ROM calculation obtained with ExerCam is, as expected, the ROM value derived from the variation of the joint coordinates.

In the results obtained from the ROM calculation using the variation of the joint coordinates, the measurement error made by ExerCam is less than 3%, so the system is valid as a resource for measuring and evaluating the evolution of participants. It should also be taken into account that manual measurements carry a bias error that tends to decrease as the sample size increases [51].

ExerCam, like other exergames, is a user-oriented application, and as such, user experience and satisfaction are important factors to take into account. For that reason, after the execution of the exercises, the SUS questionnaire was given to the participants and the therapist, allowing them to assess the usability of the system from the user’s perspective. The results of the questionnaire, shown in Table 6 and Table 7, indicate that most of the users found the application easy to use (with Items I2, I3 and I8 having scores greater than 4). According to I7, most of the participants think that other people would be able to learn to use the application quickly. From the answers to I10, they do not think that it is necessary to acquire new knowledge or skills in order to use the application properly. According to I5 and I6, participants also think that the application is well engineered. Finally, the results obtained for Item I4 are notable, as some of the users think that they would need the support of a technical person to be able to use the application, whereas other users do not. This is due to the heterogeneity of the study participants, particularly their generational differences: the oldest participants were those who answered that they would need support. Regarding the responses to the SUS test given by the therapist, Table 6 shows that the therapist regards ExerCam as useful and easy to use and would like to use the system frequently. The therapist’s answers also support the hypothesis that this system is useful as a telerehabilitation tool.

As shown in Figure 11, the total score obtained for each participant was positive, with an average of 83.5 (excellent according to the adjective scale method). This result is significant and provides a level of confidence in the use of ExerCam.
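For readers unfamiliar with how a per-participant total on the 0 to 100 scale is derived, the following Python sketch applies the standard System Usability Scale scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. It assumes the standard 10-item questionnaire with responses from 1 to 5; whether the study used exactly this item wording and scoring is not stated in this text, and the function name is hypothetical.

```python
def sus_score(responses):
    """Standard SUS score (0 to 100) from ten responses on a 1-to-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def mean_sus(all_responses):
    """Average SUS score over several participants."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

For example, a participant who answers 3 to every item obtains 50, which is the midpoint of the scale.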

It is also worth mentioning that a generic usability test was used because, at this stage, our application is a prototype intended to demonstrate the feasibility of 2D pose estimation in augmented reality exergames. Nevertheless, depending on the purpose of the developed application, other questionnaires should be used. For example, for virtual rehabilitation applications, the USEQ test is available [52].

It would also be interesting to carry out extensive and prolonged clinical studies that allow the effects of the rehabilitation system to be verified experimentally.

Furthermore, ExerCam can be used for different purposes, allowing trainers or therapists to define tasks based on the appearance of a virtual target on the screen, which the player has to reach.