Progressive Kernel Extreme Learning Machine for Food Image Analysis via Optimal Features from Quality Resilient CNN

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. General Architecture

3.2. Visual Features via Quality Resilient Convolutional Neural Network

3.3. Gradient/Deep Explainer Based Strategy for Feature Selection

Feature Selection Steps for High-Dimensional Class-Imbalanced Image Data

Algorithm 1. Feature selection steps based on Gradient/Deep explainer

Input:
- Feature vectors of training/test images extracted from the fully connected layer of the InceptionResNetV2 model.
- Training images.
- Ranked outputs.

Output: Indexes of the selected attributes.

1. Load the deep learning model (InceptionResNetV2).
2. for i = 1 to c (for each class)
3. for k = 1 to t (for each image)
4. For the input image, compute the SHAP score of the attributes at the max pooling layer using the gradient explainer, according to Equation (4).
5. Apply average pooling to obtain a single vector of SHAP values. If there is more than one ranked output, average them to obtain a single vector.
6. Add this vector of SHAP values to the running sum of the SHAP vectors of the previous images.
7. endfor
8. Compute the average SHAP score of each attribute over the feature vectors of the class using Equation (5).
9. endfor
10. Compute the mean score of each attribute by averaging its class-wise SHAP scores over all classes using Equation (6).
11. Determine the indexes of the attributes with the highest SHAP scores.
12. Select the features of the training/testing feature vectors at these indexes.
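Steps 6–12 of Algorithm 1 reduce the per-image SHAP vectors to one score per attribute and keep the top-ranked attributes. The Python sketch below illustrates only this aggregation. It assumes the per-image SHAP vectors have already been computed (for example with the shap library's GradientExplainer or DeepExplainer), average-pooled, and averaged over ranked outputs. The function names, the `top_k` parameter and the optional `use_abs` switch are illustrative assumptions and do not come from the authors' code.

```python
import numpy as np


def select_features_by_shap(shap_vectors, labels, num_classes, top_k, use_abs=False):
    """Rank attributes by class-averaged SHAP score (sketch of Algorithm 1, steps 6-12).

    shap_vectors: (n_images, n_attributes) array, one pooled SHAP vector per image
                  (assumed precomputed; steps 4-5 are not reproduced here).
    labels:       (n_images,) integer class indexes in [0, num_classes).
    use_abs:      hypothetical option to rank by mean |SHAP| instead of mean SHAP.
    """
    shap_vectors = np.asarray(shap_vectors, dtype=float)
    if use_abs:
        shap_vectors = np.abs(shap_vectors)
    labels = np.asarray(labels)
    class_means = []
    for c in range(num_classes):                          # outer loop over classes
        running_sum = np.zeros(shap_vectors.shape[1])
        members = shap_vectors[labels == c]
        for vec in members:                               # inner loop over images
            running_sum += vec                            # step 6: accumulate SHAP vectors
        if len(members) > 0:
            class_means.append(running_sum / len(members))  # step 8: class average
    attribute_scores = np.mean(class_means, axis=0)       # step 10: average over classes
    ranked = np.argsort(attribute_scores)[::-1]           # step 11: highest score first
    return ranked[:top_k]


def apply_selection(features, selected):
    """Step 12: keep only the selected attribute columns of a feature matrix."""
    return np.asarray(features)[:, selected]
```

Averaging within each class before averaging across classes weights every class equally. This matters for the class-imbalanced data targeted here: under a plain mean over all images, large classes would dominate the ranking.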

3.4. Progressive Learning for Food Category and Ingredient Classification

3.4.1. Adaptive Reduced Class Incremental Kernel Extreme Learning Machine

Initialization Phase

Adding New Class

Growing Hidden Neurons

Sequential Learning

3.4.2. Progressive Kernel Extreme Learning Machine

Initialization Phase

Progressive Learning

Testing Phase
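In its testing phase, the PKELM algorithm box computes a kernel matrix between an input and the mapping nodes (Equation (35)) and an output vector Y (Equation (36)). It then takes the arg-max of Y for food categories, or applies a zero threshold for ingredients (Equation (37)). The exact forms of those equations may differ from what follows. This sketch assumes a standard reduced-kernel ELM with the mapping nodes as kernel centers, output weights β = (KᵀK + I/C)⁻¹KᵀT, and targets coded in {−1, +1}. The RBF and exponential chi-squared kernels correspond to the two PKELM variants evaluated in Section 4. All function names and the parameters `gamma` and `C` are illustrative.

```python
import numpy as np


def rbf_kernel(A, B, gamma):
    """Gaussian (RBF) kernel exp(-gamma * ||a - b||^2) between rows of A and B."""
    sq_dist = (np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def chi2_kernel(A, B, gamma):
    """Exponential chi-squared kernel exp(-gamma * sum((a - b)^2 / (a + b))).

    Assumes non-negative features (e.g. post-ReLU CNN activations); terms with
    a + b == 0 contribute zero.
    """
    diff = A[:, None, :] - B[None, :, :]
    total = A[:, None, :] + B[None, :, :]
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = np.where(total > 0, diff**2 / total, 0.0)
    return np.exp(-gamma * terms.sum(axis=2))


def fit_output_weights(X, centers, T, kernel, gamma, C):
    """Regularized least squares for the output weights of a reduced kernel ELM."""
    K = kernel(X, centers, gamma)                 # (n_samples, n_nodes)
    reg = np.eye(K.shape[1]) / C                  # ridge term I / C
    return np.linalg.solve(K.T @ K + reg, K.T @ T)


def predict(X, centers, beta, kernel, gamma, multi_label=False):
    """Single-label: arg-max of Y. Multi-label: labels whose output exceeds 0."""
    Y = kernel(X, centers, gamma) @ beta
    return (Y > 0) if multi_label else np.argmax(Y, axis=1)
```

With ±1 targets, a zero threshold on Y is the natural multi-label decision rule. An output above zero means the sample lies closer to the positive target for that ingredient.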

Novelty/Noise Detection

4. Experiment and Results

4.1. Datasets

4.1.1. Food101

4.1.2. UECFOOD100

4.1.3. UECFOOD256

4.1.4. Pakistani Food

4.1.5. PFID-Baseline/PFID

4.1.6. VireoFood-172

| Dataset | Total Classes | Total Instances | Train/Test Instances |
|---|---|---|---|
| Food101 | 101 | 101,330 | 75,750/25,250 |
| UECFOOD100 | 100 | 14,361 | 12,864/1497 |
| UECFOOD256 | 256 | 31,148 | 28,033/3115 |
| PFID | 15 | 1388 | 1248/140 |
| PFID-Baseline | 61 | 1388 | 1248/140 |
| Pakistani Food | 100 | 4928 | 4448/480 |
| VireoFood-172 | 172 | 110,241 | 66,071/33,154 |

| Dataset | Total Ingredients | Total Instances |
|---|---|---|
| Food101 | 446 | 101,330 |
| Food101 (Simplified) | 227 | 101,330 |
| VireoFood-172 | 353 | 110,241 |

4.2. Implementation

4.3. Performance Measures

4.3.1. Accuracy

4.3.2. F1-Score

4.3.3. Recall

4.3.4. Precision

4.3.5. Precision

4.3.6. Recall

4.3.7. F1-Score
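Sections 4.3.1–4.3.7 list accuracy, precision, recall and F1-score. The food-category results are reported as Top-1/Top-3 accuracy together with macro-averaged precision, recall and F1-score. The Python sketch below computes these quantities. It assumes macro averaging is the unweighted mean of per-class scores, with macro F1 taken as the mean of per-class F1 scores (the scikit-learn convention). For the ingredient (multi-label) case it assumes micro averaging over all (sample, ingredient) pairs, because the averaging behind the multi-label table is not stated. Function names are illustrative.

```python
import numpy as np


def top_k_accuracy(scores, y_true, k):
    """Fraction of samples whose true class is among the k highest scores."""
    top_k = np.argsort(scores, axis=1)[:, ::-1][:, :k]
    return float(np.mean([y in row for y, row in zip(y_true, top_k)]))


def _prf(tp, fp, fn):
    """Precision, recall and F1 from raw counts (0 when undefined)."""
    p = tp / (tp + fp) if tp + fp > 0 else 0.0
    r = tp / (tp + fn) if tp + fn > 0 else 0.0
    f1 = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, f1


def macro_prf(y_true, y_pred, num_classes):
    """Unweighted mean of per-class precision, recall and F1 (multi-class)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    per_class = []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        per_class.append(_prf(tp, fp, fn))
    return tuple(float(v) for v in np.mean(per_class, axis=0))


def micro_prf_multilabel(Y_true, Y_pred):
    """Precision, recall and F1 pooled over all (sample, ingredient) pairs."""
    Y_true = np.asarray(Y_true, dtype=bool)
    Y_pred = np.asarray(Y_pred, dtype=bool)
    tp = np.sum(Y_true & Y_pred)
    fp = np.sum(~Y_true & Y_pred)
    fn = np.sum(Y_true & ~Y_pred)
    return tuple(float(v) for v in _prf(tp, fp, fn))
```

Macro averaging treats every food category equally regardless of its size. On balanced test splits, this is why macro recall is close to plain accuracy in several rows of the results tables.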

4.3.8. Intransigence

4.3.9. Forgetting

4.3.10. Base Session

4.3.11. New Session

4.3.12. All Session
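Sections 4.3.8–4.3.12 name the continual-learning measures. The sketch below rests on two assumptions:

- The base, new and all session measures follow Kemker et al. [41]. Each is a mean over sessions 2..T: of base-class accuracy normalized by an ideal offline accuracy, of new-class accuracy, and of all-class accuracy normalized by the same ideal accuracy.
- Forgetting and intransigence follow their widely used definitions. Forgetting is the average drop of each earlier session's accuracy from its best earlier value. Intransigence is the gap between a jointly trained reference model and the incremental model on the latest session.

These definitions, the accuracy-matrix layout and the function names are illustrative assumptions.

```python
import numpy as np


def kemker_omegas(base_acc, new_acc, all_acc, ideal_acc):
    """Omega_base, Omega_new, Omega_all over sessions 2..T (assumed definitions).

    base_acc[i], new_acc[i], all_acc[i]: accuracies measured after session i + 2
    on the base classes, the newly added classes, and all classes seen so far.
    ideal_acc: accuracy of an offline (jointly trained) model, used to normalize.
    """
    base = np.asarray(base_acc, dtype=float)
    new = np.asarray(new_acc, dtype=float)
    all_ = np.asarray(all_acc, dtype=float)
    return (float(np.mean(base / ideal_acc)),
            float(np.mean(new)),
            float(np.mean(all_ / ideal_acc)))


def average_forgetting(acc):
    """acc[k, j]: accuracy on session j's classes after training session k (j <= k)."""
    acc = np.asarray(acc, dtype=float)
    last = acc.shape[0] - 1
    if last == 0:
        return 0.0
    # Best accuracy on session j seen before the final session, minus final accuracy.
    drops = [acc[j:last, j].max() - acc[last, j] for j in range(last)]
    return float(np.mean(drops))


def intransigence(acc, reference_acc):
    """Gap between a jointly trained reference and the incremental model on the
    latest session; negative values mean the incremental model does better."""
    acc = np.asarray(acc, dtype=float)
    return float(reference_acc - acc[-1, -1])
```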

4.4. Results

4.4.1. Visual Features via Quality Resilient Convolutional Neural Network

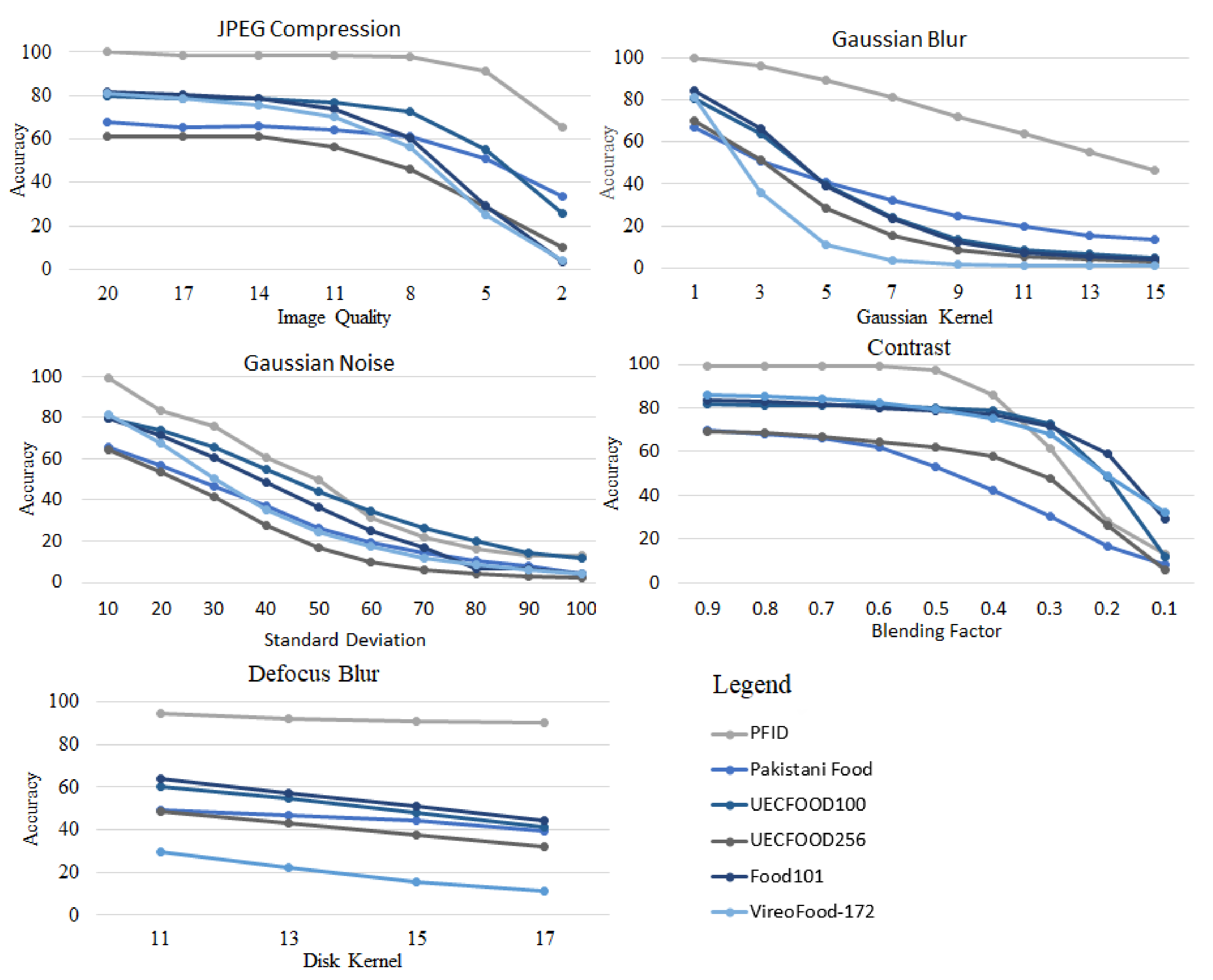

4.4.2. Impact of Food Image Quality on Visual Features from CNN

4.4.3. Random Iterative Mixup Data Augmentation during Fine-Tuning
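Section 4.4.3 applies mixup [29] during fine-tuning. Standard mixup draws λ ~ Beta(α, α) and trains on convex combinations x̃ = λxᵢ + (1 − λ)xⱼ and ỹ = λyᵢ + (1 − λ)yⱼ of shuffled pairs within a batch. The Python sketch below implements that standard form. The `apply_prob` switch, which mixes only on a random subset of iterations, is a hypothetical stand-in for the paper's "random iterative" schedule, whose exact rule may differ.

```python
import numpy as np


def mixup_batch(x, y_onehot, alpha, rng, apply_prob=1.0):
    """Standard mixup on one batch.

    apply_prob is a hypothetical knob: with probability 1 - apply_prob the batch
    is returned unchanged, so mixing happens only on randomly chosen iterations.
    Returns (mixed_x, mixed_y, lam).
    """
    if rng.random() >= apply_prob:
        return x, y_onehot, 1.0
    lam = rng.beta(alpha, alpha)                 # mixing coefficient in (0, 1)
    perm = rng.permutation(len(x))               # partner sample for each row
    x_mixed = lam * x + (1.0 - lam) * x[perm]
    y_mixed = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mixed, y_mixed, lam
```

The function accepts an explicit `numpy.random.Generator` (for example `np.random.default_rng(0)`) so that runs are reproducible. Small values of α concentrate λ near 0 or 1, which keeps most mixed images close to one of the originals.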

4.5. Gradient/Deep Explainer Based Strategy for Feature Selection

| Dataset | ReliefF | Deep Explainer | Gradient Explainer |
|---|---|---|---|
| Food101 | 85.54 | 85.09 | 86.05 |
| UECFOOD100 | 90.63 | 90.71 | 90.98 |
| UECFOOD256 | 75.85 | 79.56 | 79.34 |
| PFID | 100 | 100 | 100 |
| PFID-Baseline | 69.97 | 70.64 | 71.15 |
| Pakistani Food | 76.01 | 75.70 | 77.43 |
| VireoFood-172 | 91.88 | 91.97 | 92.09 |

4.6. Progressive Learning for Food Category and Ingredient Classification

4.6.1. Multi-Class Classification for Food Category

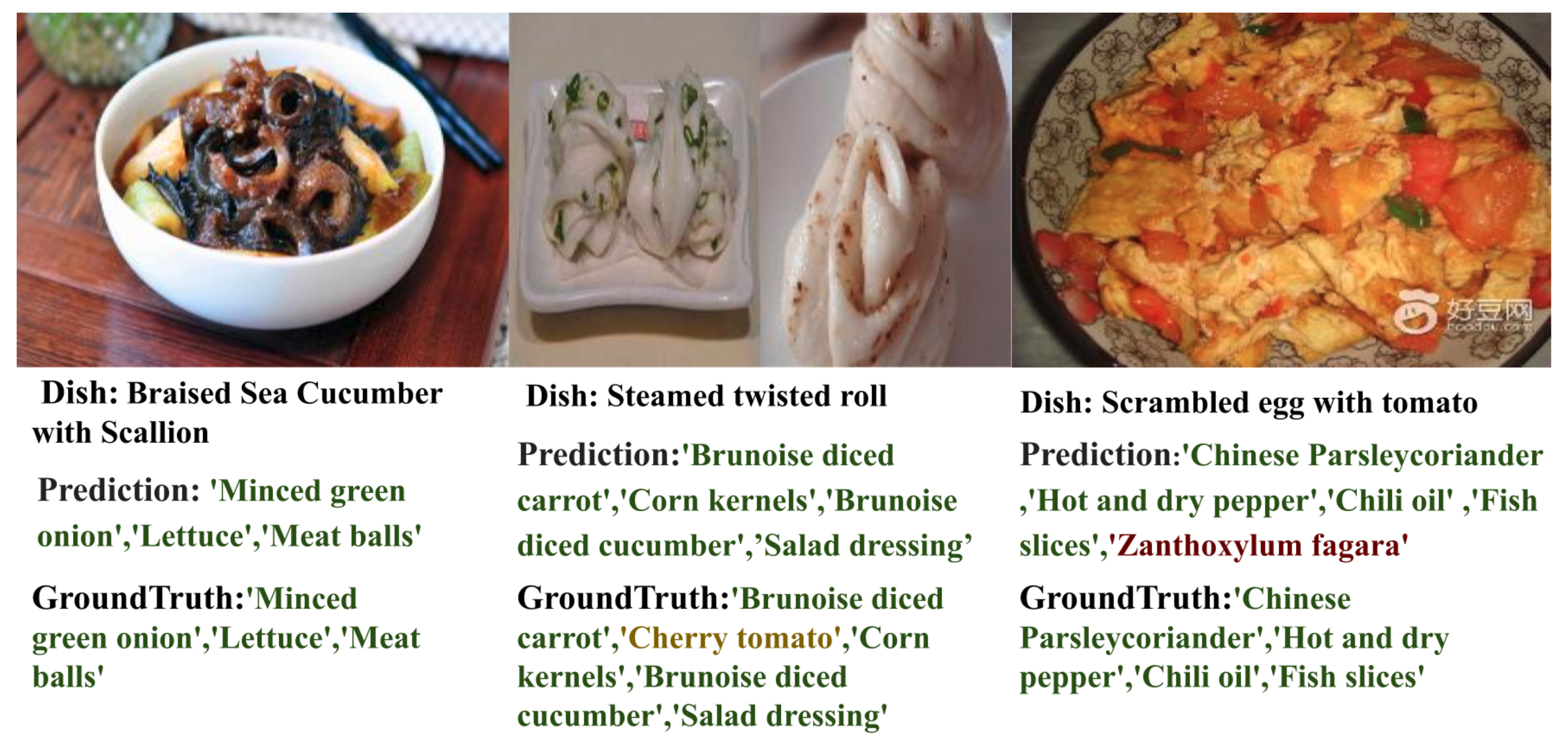

4.6.2. Multi-Label Classification for Ingredient Detection

| Dataset / Method | Precision | Recall | F1-Score |
|---|---|---|---|
| Food101 | | | |
| ResNet-50 | 83.42 | 59.34 | 69.35 |
| InceptionResNetV2 | 79.39 | 76.54 | 77.94 |
| PKELM (RBF) | 87.56 | 87.81 | 87.58 |
| PKELM (chi-squared) | 85.74 | 83.24 | 83.55 |
| Food101 (Simplified) | | | |
| ResNet-50 | 72.17 | 68.53 | 70.30 |
| InceptionResNetV2 | 76.10 | 76.41 | 76.25 |
| PKELM (RBF) | 82.89 | 83.45 | 83.02 |
| PKELM (chi-squared) | 83.52 | 77.89 | 78.71 |
| VireoFood-172 | | | |
| ResNet-50 | 70.38 | 70.93 | 70.65 |
| InceptionResNetV2 | 70.40 | 70.94 | 70.67 |
| PKELM (RBF) | 87.98 | 87.18 | 87.02 |
| PKELM (chi-squared) | 89.51 | 84.00 | 85.67 |

4.7. Comparison of Catastrophic Forgetting during Progressive Learning

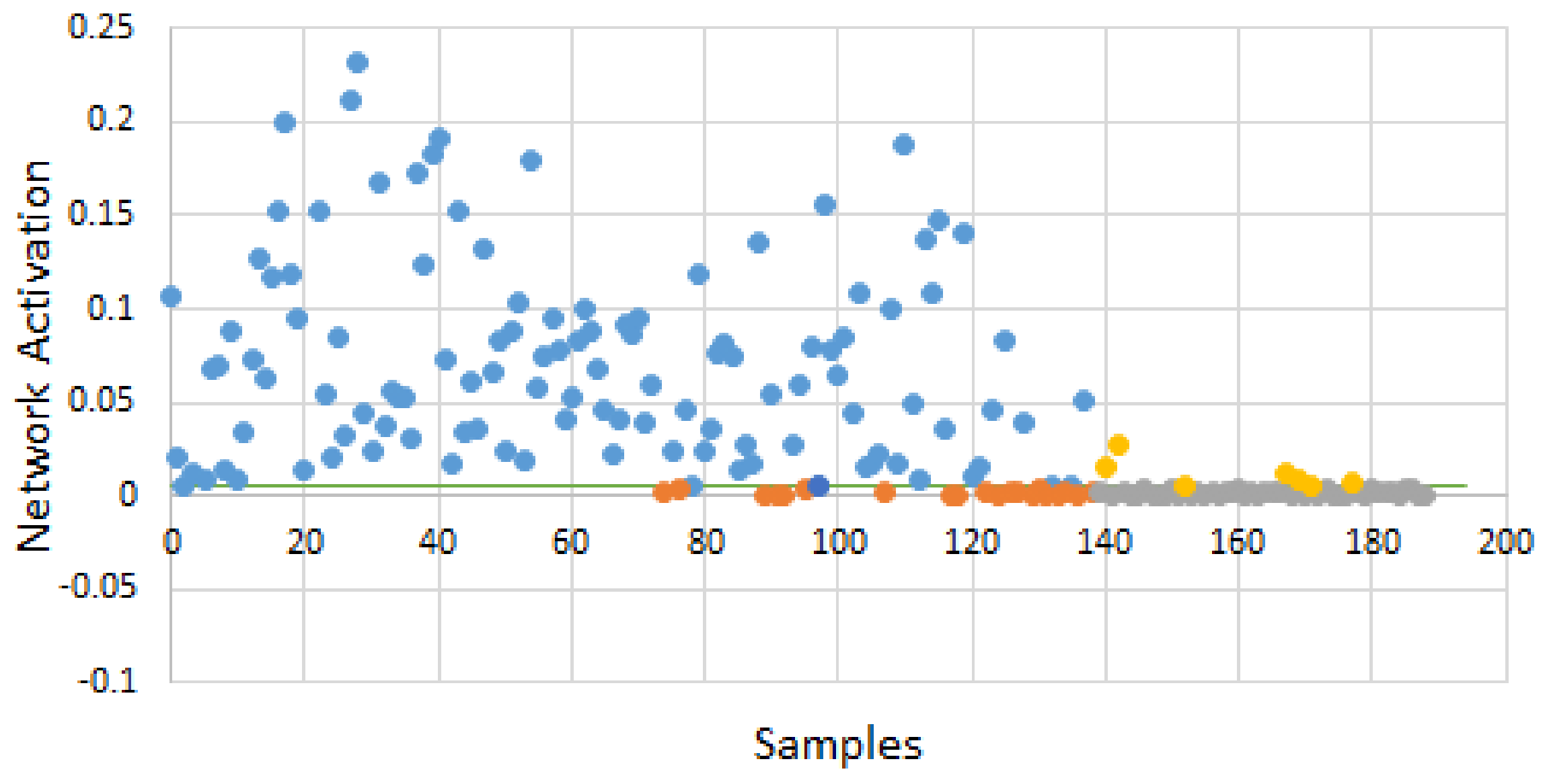

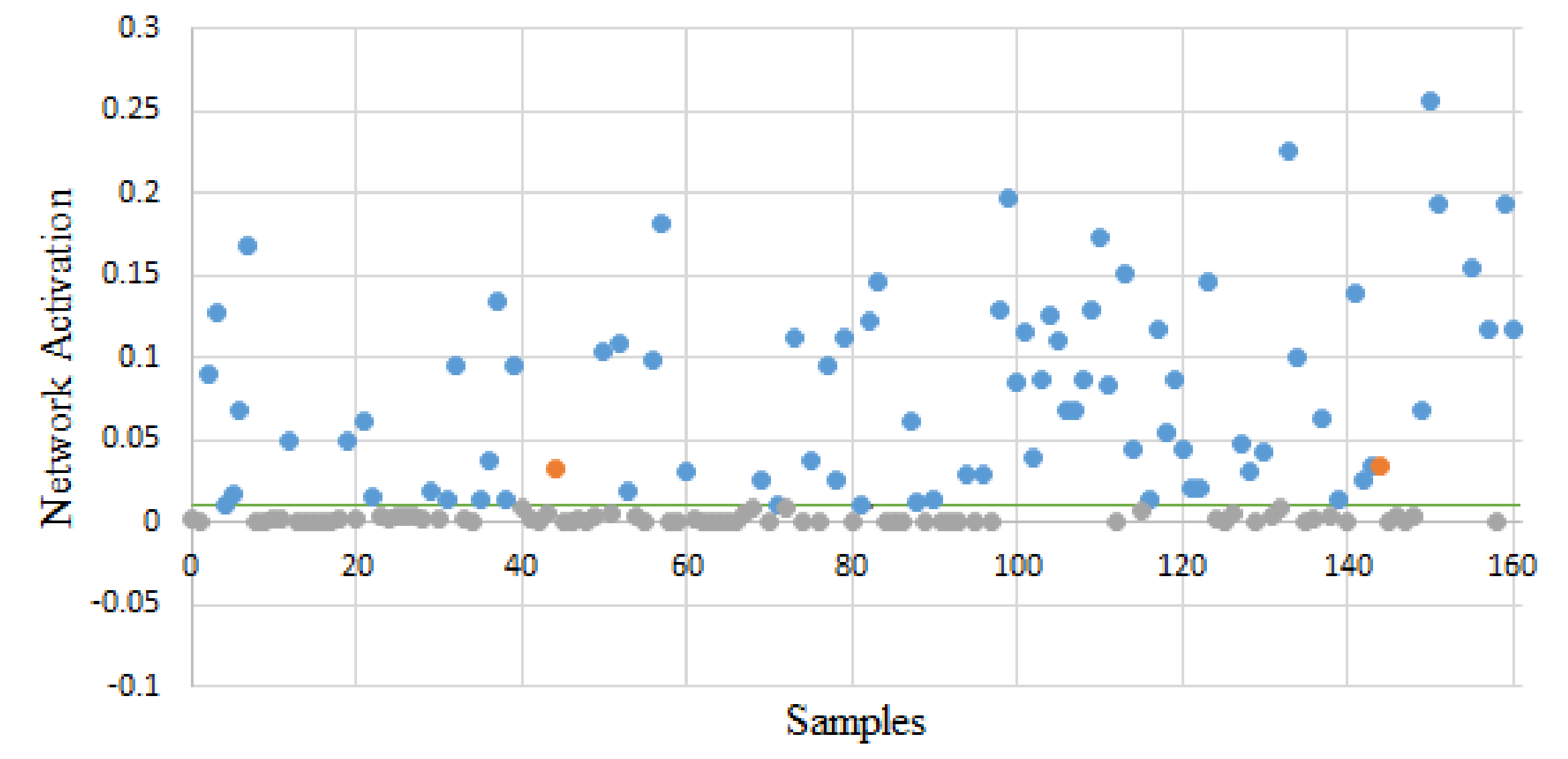

4.8. Novelty/Noise Detection during Online Learning

| Dataset / Method | | | | | |
|---|---|---|---|---|---|
| PFID-Baseline | | | | | |
| CIELM | 0.3532 | 0.5441 | 0.5932 | 0.2532 | 0.1306 |
| ACIELM | 0.0022 | 0.1445 | 0.8234 | 0.8279 | 0.8359 |
| ARCIKELM | 0.0395 | 0.6618 | 0.7570 | 0.1794 | 0.0889 |
| PKELM (RBF) | 0.5391 | 0.8067 | 0.7860 | 0.1002 | 0.0986 |
| PKELM (chi-squared) | 0.5391 | 0.8074 | 0.7901 | 0.0950 | 0.0972 |
| Pakistani Food | | | | | |
| CIELM | 0.7905 | 0.7330 | 0.7492 | 0.0663 | 0.1044 |
| ACIELM | 0.4026 | 0.4605 | 0.9482 | 0.5481 | 0.5399 |
| ARCIKELM | 0.8044 | 0.7969 | 0.8289 | 0.0240 | 0.0848 |
| PKELM (RBF) | 0.7860 | 0.8253 | 0.8670 | −0.0169 | 0.0943 |
| PKELM (chi-squared) | 0.8863 | 0.8409 | 0.8646 | −0.0158 | 0.0934 |
| Food101 | | | | | |
| CIELM | 0.9069 | 0.8654 | 0.8695 | 0.0512 | 0.0575 |
| ACIELM | 0.4677 | 0.5688 | 0.9897 | 0.4642 | 0.5861 |
| ARCIKELM | 0.9430 | 0.9055 | 0.9078 | 0.0051 | 0.0417 |
| PKELM (RBF) | 0.9335 | 0.9042 | 0.9109 | 0.0056 | 0.0473 |
| PKELM (chi-squared) | 0.9412 | 0.9168 | 0.9244 | −0.0332 | 0.0620 |
| UECFOOD100 | | | | | |
| CIELM | 0.9500 | 0.9123 | 0.8319 | 0.0410 | 0.0688 |
| ACIELM | 0.3714 | 0.5450 | 0.9759 | 0.5240 | 0.6520 |
| ARCIKELM | 0.9642 | 0.9254 | 0.8651 | 0.0128 | 0.0512 |
| PKELM (RBF) | 0.9533 | 0.9363 | 0.8910 | −0.0005 | 0.0755 |
| PKELM (chi-squared) | 0.9557 | 0.9379 | 0.8861 | −0.0063 | 0.0563 |
| UECFOOD256 | | | | | |
| CIELM | 0.5362 | 0.7227 | 0.7757 | 0.1059 | 0.1236 |
| ACIELM | 0.1006 | 0.4722 | 0.9482 | 0.5481 | 0.5399 |
| ARCIKELM | 0.8808 | 0.8454 | 0.8691 | −0.0049 | 0.0839 |
| PKELM (RBF) | 0.8880 | 0.8491 | 0.8647 | −0.0332 | 0.0755 |
| PKELM (chi-squared) | 0.8109 | 0.8495 | 0.8810 | −0.0106 | 0.0876 |

4.9. Comparison with Other Frameworks

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Tahir, G.A.; Loo, C.K. An Open-Ended Continual Learning for Food Recognition Using Class Incremental Extreme Learning Machines. IEEE Access 2020, 8, 82328–82346.
2. Urbanowicz, R.J.; Meeker, M.; La Cava, W.; Olson, R.S.; Moore, J.H. Relief-based feature selection: Introduction and review. J. Biomed. Inform. 2018, 85, 189–203.
3. Herranz, L.; Xu, R.; Jiang, S.; Luis, H. A probabilistic model for food image recognition in restaurants. In Proceedings of the 2015 IEEE International Conference on Multimedia and Expo (ICME), Turin, Italy, 29 June–3 July 2015; pp. 1–6.
4. Wanjeka, P.; Narongwut, K.; Rattapoom, W. Automatic sushi classification from images using color histograms and shape properties. In Proceedings of the 2014 3rd ICT International Senior Project Conference, ICT-ISPC 2014, Bangkok, Thailand, 26–27 March 2014; pp. 83–86.
5. Kong, F.; Tan, J. DietCam: Automatic dietary assessment with mobile camera phones. Pervasive Mob. Comput. 2012, 8, 147–163.
6. Anthimopoulos, M.M.; Gianola, L.; Scarnato, L.; Diem, P.; Mougiakakou, S.G. A Food Recognition System for Diabetic Patients Based on an Optimized Bag-of-Features Model. IEEE J. Biomed. Health Inform. 2014, 18, 1261–1271.
7. Zheng, J.; Wang, Z.J.; Ji, X. Superpixel-based image recognition for food images. In Proceedings of the 2016 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Vancouver, BC, Canada, 15–18 May 2016; pp. 1–4.
8. Miyano, R.; Uematsu, Y.; Saito, H. Food region detection using bag-of-features representation and color feature. In Proceedings of the International Conference on Computer Vision Theory and Applications, VISAPP 2012, Rome, Italy, 24–26 February 2012; pp. 709–713.
9. Nguyen, D.T.; Zong, Z.; Ogunbona, P.O.; Probst, Y.; Li, W. Food image classification using local appearance and global structural information. Neurocomputing 2014, 140, 242–251.
10. Farinella, G.M.; Allegra, D.; Moltisanti, M.; Stanco, F.; Battiato, S. Retrieval and classification of food images. Comput. Biol. Med. 2016, 77, 23–39.
11. Pouladzadeh, P.; Shirmohammadi, S.; Arici, T.; Arici, T. Intelligent SVM based food intake measurement system. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Milan, Italy, 15–17 July 2013; pp. 87–92.
12. Matsuda, Y.; Yanai, K. Multiple-food recognition considering co-occurrence employing manifold ranking. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 2017–2020.
13. Bettadapura, V.; Thomaz, E.; Parnami, A.; Abowd, G.D.; Essa, I. Leveraging Context to Support Automated Food Recognition in Restaurants. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 580–587.
14. Kusumoto, R.; Han, X.; Chen, Y.-W. Hybrid Aggregation of Sparse Coded Descriptors for Food Recognition. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 1490–1495.
15. He, H.; Kong, F.; Tan, J. DietCam: Multiview Food Recognition Using a Multikernel SVM. IEEE J. Biomed. Health Inform. 2015, 20, 848–855.
16. Luo, Y.; Ling, C.; Ao, S. Mobile-based food classification for Type-2 Diabetes using nutrient and textual features. In Proceedings of the 2014 International Conference on Data Science and Advanced Analytics (DSAA), Shanghai, China, 30 October–2 November 2014; pp. 563–569.
17. Tanno, R.; Okamoto, K.; Yanai, K. DeepFoodCam: A DCNN-based Real-time Mobile Food Recognition System. In Proceedings of the 2nd International Workshop on Multimedia Assisted Dietary Management, Amsterdam, The Netherlands, 16 October 2016; pp. 89–89.
18. Liu, C.; Cao, Y.; Luo, Y.; Chen, G.; Vokkarane, V.; Yunsheng, M.; Chen, S.; Hou, P. A New Deep Learning-Based Food Recognition System for Dietary Assessment on An Edge Computing Service Infrastructure. IEEE Trans. Serv. Comput. 2017, 11, 249–261.
19. Meyers, A.; Johnston, N.; Rathod, V.; Korattikara, A.; Gorban, A.; Silberman, N.; Guadarrama, S.; Papandreou, G.; Huang, J.; Murphy, K.P. Im2Calories: Towards an Automated Mobile Vision Food Diary. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1233–1241.
20. Sahoo, D.; Hao, W.; Ke, S.; Wu, X.W.; Le, H.; Achananuparp, P.; Lim, E.-P.; Hoi, S.C.H. FoodAI: Food Image Recognition via Deep Learning for Smart Food Logging. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2260–2268.
21. Ciocca, G.; Napoletano, P.; Schettini, R. Food Recognition: A New Dataset, Experiments, and Results. IEEE J. Biomed. Health Inform. 2016, 21, 588–598.
22. Heravi, E.J.; Aghdam, H.H.; Puig, D. Classification of Foods Using Spatial Pyramid Convolutional Neural Network. Artificial Intelligence Research and Development. In Proceedings of the 19th International Conference of the Catalan Association for Artificial Intelligence, Barcelona, Spain, 19–21 October 2016; Volume 288, pp. 163–168.
23. Jiang, S.; Min, W.; Liu, L.; Luo, Z. Multi-Scale Multi-View Deep Feature Aggregation for Food Recognition. IEEE Trans. Image Process. 2019, 29, 265–276.
24. Chen, J.; Ngo, C.-W. Deep-based Ingredient Recognition for Cooking Recipe Retrieval. In Proceedings of the 24th ACM International Conference on Multimedia (MM’16), Amsterdam, The Netherlands, 15–19 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 32–41.
25. Horiguchi, S.; Amano, S.; Ogawa, M.; Aizawa, K. Personalized Classifier for Food Image Recognition. IEEE Trans. Multimedia 2018, 20, 2836–2848.
26. Martinel, N.; Piciarelli, C.; Micheloni, C. A supervised extreme learning committee for food recognition. Comput. Vis. Image Underst. 2016, 148, 67–86.
27. Dodge, S.; Karam, L. Understanding how image quality affects deep neural networks. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; pp. 1–6.
28. Zhang, L.; Deng, Z.; Kawaguchi, K.; Ghorbani, A.; Zou, J.Y. How Does Mixup Help With Robustness and Generalization? arXiv 2021, arXiv:2010.04819.
29. Zhang, H.; Cissé, M.; Dauphin, Y.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412.
30. Borkar, T.S.; Karam, L.J. DeepCorrect: Correcting DNN Models Against Image Distortions. IEEE Trans. Image Process. 2019, 28, 6022–6034.
31. Zheng, S.; Song, Y.; Leung, T.; Goodfellow, I. Improving the Robustness of Deep Neural Networks via Stability Training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.
32. Sun, Z.; Ozay, M.; Zhang, Y.; Liu, X.; Okatani, T. Feature Quantization for Defending Against Distortion of Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7957–7966.
33. Lundberg, S.M.; Lee, S. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 4768–4777.
34. Marsland, S.; Shapiro, J.; Nehmzow, U. A self-organising network that grows when required. Neural Netw. 2002, 15, 1041–1058.
35. Rasmussen, C.E.; Williams, C.K.I. Gaussian Processes for Machine Learning; The MIT Press: Cambridge, MA, USA, 2006.
36. Zhang, J.; Marszalek, M.; Lazebnik, S.; Schmid, C. Local Features and Kernels for Classification of Texture and Object Categories: A Comprehensive Study. In Proceedings of the 2006 Conference on Computer Vision and Pattern Recognition Workshop (CVPRW’06), New York, NY, USA, 17–22 June 2006; p. 13.
37. Bossard, L.; Guillaumin, M.; Van Gool, L. Food-101 – Mining Discriminative Components with Random Forests. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 446–461.
38. Bolaños, M.; Ferrà, A.; Radeva, P. Food Ingredients Recognition Through Multi-label Learning. In New Trends in Image Analysis and Processing – ICIAP 2017; Lecture Notes in Computer Science; Battiato, S., Farinella, G., Leo, M., Gallo, G., Eds.; Springer: Cham, Switzerland, 2017; Volume 10590.
39. Kawano, Y.; Yanai, K. Automatic Expansion of a Food Image Dataset Leveraging Existing Categories with Domain Adaptation. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 3–17.
40. Chen, M.; Dhingra, K.; Wu, W.; Yang, L.; Sukthankar, R.; Yang, J. PFID: Pittsburgh fast-food image dataset. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 289–292.
41. Kemker, R.; McClure, M.; Abitino, A.; Hayes, T.; Kanan, C. Measuring Catastrophic Forgetting in Neural Networks. arXiv 2017, arXiv:1708.02072.
42. Liew, W.S.; Loo, C.K.; Gryshchuk, V.; Weber, C.; Wermter, S. Effect of Pruning on Catastrophic Forgetting in Growing Dual Memory Networks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.
43. Feurer, M.; Hutter, F. Hyperparameter Optimization. In Automated Machine Learning; The Springer Series on Challenges in Machine Learning; Hutter, F., Kotthoff, L., Vanschoren, J., Eds.; Springer: Cham, Switzerland, 2019.
44. Martinel, N.; Foresti, G.L.; Micheloni, C. Wide-Slice Residual Networks for Food Recognition. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 567–576.
45. Liu, C.; Cao, Y.; Luo, Y.; Chen, G.; Vokkarane, V.; Ma, Y. DeepFood: Deep Learning-Based Food Image Recognition for Computer-Aided Dietary Assessment. In Inclusive Smart Cities and Digital Health; ICOST 2016, Lecture Notes in Computer Science; Chang, C., Chiari, L., Cao, Y., Jin, H., Mokhtari, M., Aloulou, H., Eds.; Springer: Cham, Switzerland, 2016; Volume 9677.
46. Pandey, P.; Deepthi, A.; Mandal, B.; Puhan, N.B. FoodNet: Recognizing Foods Using Ensemble of Deep Networks. IEEE Signal Process. Lett. 2017, 24, 1758–1762.
47. Yanai, K.; Kawano, Y. Food Image Recognition Using Deep Convolutional Network with Pre-Training and Fine-Tuning. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June–3 July 2015; pp. 1–6.
48. McAllister, P.; Zheng, H.; Bond, R.; Moorhead, A. Combining deep residual neural network features with supervised machine learning algorithms to classify diverse food image datasets. Comput. Biol. Med. 2018, 95, 217–233.
49. Mandal, B.; Puhan, N.B.; Verma, A. Deep Convolutional Generative Adversarial Network-Based Food Recognition Using Partially Labeled Data. IEEE Sens. Lett. 2019, 3, 7000104.
50. Liu, C.; Liang, Y.; Xue, Y.; Qian, X.; Fu, J. Food and Ingredient Joint Learning for Fine-Grained Recognition. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 2480–2493.

Initialization Phase

1. Initialize the network and create two mapping nodes using a small chunk of the training data.
2. Set the connection set C to the empty set.
3. Compute the initial kernel matrix using Equation (12).
4. Estimate the initial output weight using Equation (13).

Progressive Learning (for each iteration of the algorithm)

5. Select a data sample from the input.
6. For each mapping node i in the network, compute its distance to the input (Equation (15)).
7. Select the first and second best matching nodes (Equation (16)).
8. Create a connection between the first and second best matching nodes if it does not already exist (Equation (17)).
9. Compute the activity of the best matching node. When new output neurons are added for a new food category (or for new ingredients in multi-label learning), update the beta matrix using Equation (19).
10. If the activity a is below the activity threshold and the firing counter of the best matching node is below the firing threshold:
    - a. Insert a new node into the model (Equation (21)).
    - b. Set the weight vector of the new node to the average of the best matching node and the input (Equation (22)).
    - c. Insert edges between the input node and the first and second best matching nodes (Equation (23)).
    - d. Remove the edge between the first and second best matching nodes (Equation (24)).
    - e. Compute the kernel matrix of the input vector with the nodes in the network using Equations (25) and (26).
    - f. Insert the new mapping node into the kernel matrix using the growing hidden neuron methodology (Equations (7)–(11)).
11. If no new mapping node is inserted, update the position of the first best match using Equation (27) and the positions of its connected neighbors using Equation (28).
12. Increment the age of all edges connected to the first best match by 1.
13. Compute the kernel matrix using Equation (30).
14. Update the output matrices G and B according to Equations (31) and (32).
15. Reduce the firing frequency of the winning node (Equation (33)) and of its neighbors (Equation (34)).
16. Remove mapping nodes whose age exceeds the threshold or that have no neighbors.
17. If new inputs arrive, go to step 1; otherwise, the model is already updated.

Testing Phase

1. Compute the distance between the input node and the mapping nodes.
2. Compute the kernel matrix using Equation (35).
3. Compute the output vector Y as in Equation (36).
4. Select the food category corresponding to the index of the highest value in Y.
5. For multi-label classification, threshold the output: select the labels whose entries in Y are greater than zero (Equation (37)).

Noise or Novelty Detection

1. Compute the activation value of the best matching neuron.
2. Compute the network response using Equation (40). If it is below the threshold, the sample is a novel concept or a noisy sample.

Labeling Data

1. Compute the activation value and check the response using Equation (40).
2. If the response is larger than the threshold, assign the label of the winning neuron.
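Steps 5–16 of the progressive learning phase follow the grow-when-required (GWR) network of Marsland et al. [34]:

- Find the two best-matching mapping nodes.
- When the winner's activity and firing counter are both below their thresholds, insert a node halfway between the winner and the input.
- Otherwise, move the winner and its neighbors toward the input.
- Then age the edges, habituate the firing counters, and prune.

The Python sketch below covers only this node-growing part plus the activity-threshold novelty test. It omits the kernel and output-weight updates (Equations (25)–(32)). In place of Equations (27), (28), (33) and (34), it assumes:

- activity a = exp(−‖x − w_b‖);
- the adaptation rule w ← w + ε·h·(x − w);
- a commonly used discrete habituation rule h ← h + τκ(1 − h) − τ.

The class name, thresholds and learning rates are hypothetical.

```python
import numpy as np


class GrowingMappingNodes:
    """Hypothetical GWR-style layer of mapping nodes (sketch, not the authors' code)."""

    def __init__(self, w1, w2, activity_threshold=0.8, firing_threshold=0.3,
                 eps_b=0.1, eps_n=0.01, tau_b=0.3, tau_n=0.1, kappa=1.05, max_age=50):
        self.W = [np.asarray(w1, float), np.asarray(w2, float)]  # step 1: two nodes
        self.h = [1.0, 1.0]          # firing counters (1 = never fired)
        self.edges = {}              # step 2: empty connection set, (i, j) -> age
        self.a_T, self.h_T = activity_threshold, firing_threshold
        self.eps_b, self.eps_n = eps_b, eps_n
        self.tau_b, self.tau_n, self.kappa = tau_b, tau_n, kappa
        self.max_age = max_age

    @staticmethod
    def _key(i, j):
        return (min(i, j), max(i, j))

    def _best_two(self, x):
        d = np.array([np.linalg.norm(x - w) for w in self.W])     # step 6
        order = np.argsort(d)
        return int(order[0]), int(order[1]), float(d[order[0]])  # step 7

    def _neighbors(self, b):
        return [j if i == b else i for (i, j) in self.edges if b in (i, j)]

    def activity(self, x):
        _, _, dist = self._best_two(np.asarray(x, float))
        return float(np.exp(-dist))

    def is_novel(self, x, threshold):
        """Novelty/noise test: the winner's activity is below a threshold."""
        return self.activity(x) < threshold

    def update(self, x):
        """One progressive-learning iteration; returns True if a node was inserted."""
        x = np.asarray(x, float)
        b, s, dist = self._best_two(x)
        self.edges[self._key(b, s)] = 0                           # step 8
        a = np.exp(-dist)                                         # step 9
        inserted = a < self.a_T and self.h[b] < self.h_T          # step 10
        if inserted:
            r = len(self.W)
            self.W.append((self.W[b] + x) / 2.0)                  # 10a-b
            self.h.append(1.0)
            self.edges[self._key(r, b)] = 0                       # 10c
            self.edges[self._key(r, s)] = 0
            del self.edges[self._key(b, s)]                       # 10d
        else:                                                     # step 11
            self.W[b] = self.W[b] + self.eps_b * self.h[b] * (x - self.W[b])
            for n in self._neighbors(b):
                self.W[n] = self.W[n] + self.eps_n * self.h[n] * (x - self.W[n])
        for e in self.edges:                                      # step 12
            if b in e:
                self.edges[e] += 1
        self.h[b] += self.tau_b * self.kappa * (1 - self.h[b]) - self.tau_b  # step 15
        for n in self._neighbors(b):
            self.h[n] += self.tau_n * self.kappa * (1 - self.h[n]) - self.tau_n
        self._prune()                                             # step 16
        return bool(inserted)

    def _prune(self):
        """Drop edges older than max_age, then nodes left without any edge."""
        self.edges = {e: age for e, age in self.edges.items() if age <= self.max_age}
        connected = sorted({i for e in self.edges for i in e})
        if len(connected) < 2 or len(connected) == len(self.W):
            return
        remap = {old: new for new, old in enumerate(connected)}
        self.W = [self.W[i] for i in connected]
        self.h = [self.h[i] for i in connected]
        self.edges = {(remap[i], remap[j]): age for (i, j), age in self.edges.items()}
```

The firing-counter condition delays insertion until a node has been trained on several samples. As a result, a single distant sample first adapts the existing winner rather than immediately creating a node. Isolated far-away inputs can still be flagged by `is_novel` without growing the network.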

| Dataset / Method | Top-1 Accuracy | Top-1 F1-Score (Macro) | Top-1 Precision (Macro) | Top-1 Recall (Macro) | Top-3 Accuracy | Top-3 F1-Score (Macro) | Top-3 Precision (Macro) | Top-3 Recall (Macro) |
|---|---|---|---|---|---|---|---|---|
| PFID-Baseline | | | | | | | | |
| ELM (batch) | 69.77 ± 0.285 | 66.13 ± 0.343 | 68.34 ± 0.395 | 69.77 ± 0.285 | 81.71 ± 0.146 | 79.54 ± 0.202 | 81.41 ± 0.227 | 81.71 ± 0.146 |
| RKELM (batch) | 52.41 ± 0.590 | 43.17 ± 0.672 | 40.80 ± 0.882 | 52.41 ± 0.590 | 60.63 ± 0.478 | 51.53 ± 0.622 | 48.78 ± 0.719 | 60.63 ± 0.478 |
| CIELM | 50.04 ± 1.414 | 44.63 ± 0.741 | 46.67 ± 0.442 | 50.07 ± 1.414 | 71.26 ± 2.570 | 67.54 ± 2.724 | 69.55 ± 2.653 | 71.26 ± 2.570 |
| ACIELM | 12.04 ± 1.18 | 3.33 ± 1.12 | 2.02 ± 1.09 | 12.04 ± 1.15 | 36.13 ± 1.18 | 29.35 ± 1.12 | 28.18 ± 1.09 | 36.13 ± 1.15 |
| ARCIKELM | 67.03 ± 1.34 | 62.47 ± 1.22 | 65.35 ± 0.70 | 67.03 ± 1.34 | 79.68 ± 2.187 | 75.87 ± 2.462 | 77.10 ± 1.363 | 79.68 ± 2.187 |
| PKELM (RBF) | 71.15 ± 0 | 66.78 ± 0 | 68.08 ± 0 | 71.15 ± 0 | 81.25 ± 0 | 77.83 ± 0 | 77.53 ± 0 | 81.25 ± 0 |
| PKELM (chi-squared) | 71.67 ± 0 | 67.57 ± 0 | 68.87 ± 0 | 71.67 ± 0 | 81.48 ± 0 | 78.63 ± 0 | 78.39 ± 0 | 81.48 ± 0 |
| Food101 | | | | | | | | |
| ELM (batch) | 86.79 ± 0.018 | 86.76 ± 0.014 | 86.88 ± 0.017 | 86.79 ± 0.016 | 94.61 ± 0.009 | 94.54 ± 0.009 | 94.62 ± 0.008 | 94.53 ± 0.009 |
| RKELM (batch) | 85.78 ± 0.019 | 85.74 ± 0.019 | 85.91 ± 0.019 | 85.78 ± 0.019 | 94.47 ± 0.010 | 94.43 ± 0.010 | 94.52 ± 0.010 | 94.42 ± 0.010 |
| CIELM | 80.06 ± 0.091 | 79.77 ± 0.084 | 80.38 ± 0.081 | 80.06 ± 0.091 | 90.04 ± 0.035 | 89.94 ± 0.057 | 90.19 ± 0.017 | 90.04 ± 0.035 |
| ACIELM | 41.84 ± 6.12 | 61.23 ± 5.91 | 92.73 ± 5.83 * | 41.64 ± 5.65 | 43.61 ± 8.11 | 62.31 ± 6.87 | 94.66 ± 5.73 * | 45.97 ± 6.66 |
| ARCIKELM | 85.95 ± 0.086 | 85.92 ± 0.092 | 86.03 ± 0.076 | 85.95 ± 0.086 | 94.31 ± 0.098 | 94.29 ± 0.095 | 94.36 ± 0.100 | 94.31 ± 0.098 |
| PKELM (RBF) | 86.05 ± 0 | 86.76 ± 0 | 86.06 ± 0 | 86.05 ± 0 | 94.83 ± 0 | 94.83 ± 0 | 94.88 ± 0 | 94.734 ± 0 |
| PKELM (chi-squared) | 86.79 ± 0 | 86.83 ± 0 | 87.24 ± 0 | 86.79 ± 0 | 95.13 ± 0 | 95.15 ± 0 | 95.24 ± 0 | 95.13 ± 0 |
| Pakistani Food | | | | | | | | |
| ELM (batch) | 76.62 ± 0.104 | 76.15 ± 0.114 | 78.66 ± 0.180 | 76.66 ± 0.105 | 88.58 ± 0.112 | 88.29 ± 0.120 | 89.88 ± 0.087 | 88.54 ± 0.111 |
| RKELM (batch) | 73.49 ± 0.058 | 72.40 ± 0.055 | 75.92 ± 0.163 | 73.43 ± 0.053 | 86.92 ± 0.160 | 86.18 ± 0.207 | 88.44 ± 0.254 | 86.81 ± 0.148 |
| CIELM | 69.60 ± 0.975 | 68.57 ± 1.247 | 71.99 ± 1.580 | 69.54 ± 1.090 | 84.96 ± 0.381 | 84.47 ± 0.309 | 86.97 ± 0.498 | 84.86 ± 0.299 |
| ACIELM | 26.42 ± 2.14 | 60.29 ± 1.68 * | 78.75 ± 1.42 * | 26.58 ± 1.53 | 11.44 ± 2.14 | 8.65 ± 1.68 | 8.41 ± 1.428 | 11.44 ± 1.53 |
| ARCIKELM | 74.63 ± 0.203 | 73.80 ± 0.223 | 77.36 ± 0.918 | 74.53 ± 0.190 | 88.00 ± 1.154 | 87.62 ± 1.124 | 89.58 ± 0.842 | 87.78 ± 1.147 |
| PKELM (RBF) | 77.43 ± 0 | 77.031 ± 0 | 80.56 ± 0 | 77.53 ± 0 | 90.48 ± 0 | 90.33 ± 0 | 91.71 ± 0 | 90.43 ± 0 |
| PKELM (chi-squared) | 78.20 ± 0 | 77.65 ± 0 | 80.85 ± 0 | 78.30 ± 0 | 91.10 ± 0 | 90.86 ± 0 | 92.66 ± 0 | 91.04 ± 0 |
| VireoFood-172 | | | | | | | | |
| ELM (batch) | 91.69 ± 0.003 | 91.66 ± 0.001 | 91.83 ± 0.003 | 91.64 ± 0.006 | 96.49 ± 0.008 | 96.52 ± 0.010 | 96.61 ± 0.015 | 96.48 ± 0.006 |
| RKELM (batch) | 92.01 ± 0.006 | 92.01 ± 0.011 | 92.30 ± 0.012 | 91.90 ± 0.010 | 96.92 ± 0.005 | 96.88 ± 0.005 | 97.08 ± 0.006 | 96.74 ± 0.004 |
| CIELM | 87.13 ± 0.335 | 86.37 ± 0.407 | 88.76 ± 0.312 | 85.70 ± 0.398 | 92.31 ± 0.196 | 92.16 ± 0.186 | 93.48 ± 0.124 | 91.50 ± 0.232 |
| ACIELM | 29.12 ± 3.12 | 60.69 ± 1.58 * | 78.32 ± 1.32 * | 29.88 ± 1.33 | 31.22 ± 2.14 | 64.17 ± 1.88 * | 80.46 ± 2.41 * | 30.88 ± 3.51 |
| ARCIKELM | 92.09 ± 0.023 | 92.07 ± 0.039 | 92.35 ± 0.038 | 91.97 ± 0.036 | 96.92 ± 0.025 | 96.88 ± 0.024 | 97.09 ± 0.032 | 96.73 ± 0.016 |
| PKELM (RBF) | 92.09 ± 0 | 92.09 ± 0 | 92.32 ± 0 | 92.04 ± 0 | 96.85 ± 0 | 96.60 ± 0 | 96.78 ± 0 | 96.49 ± 0 |
| PKELM (chi-squared) | 92.17 ± 0 | 92.19 ± 0 | 92.34 ± 0 | 92.16 ± 0 | 97.24 ± 0 | 97.25 ± 0 | 97.39 ± 0 | 97.15 ± 0 |
| UECFOOD100 | | | | | | | | |
| ELM (batch) | 90.46 ± 0.020 | 84.29 ± 0.051 | 86.35 ± 0.059 | 83.70 ± 0.061 | 97.05 ± 0.015 | 94.03 ± 0.023 | 95.53 ± 0.023 | 93.22 ± 0.020 |
| RKELM (batch) | 89.32 ± 0.025 | 83.32 ± 0.024 | 85.70 ± 0.090 | 82.64 ± 0.027 | 95.57 ± 0.025 | 92.31 ± 0.024 | 94.04 ± 0.090 | 91.50 ± 0.027 |
| CIELM | 87.37 ± 0.288 | 79.92 ± 0.188 | 83.42 ± 0.430 | 79.05 ± 0.321 | 95.04 ± 0.088 | 90.24 ± 0.441 | 92.54 ± 0.575 | 89.20 ± 0.292 |
| ACIELM | 38.28 ± 3.77 | 88.08 ± 2.18 * | 91.51 ± 3.06 * | 34.13 ± 2.26 | 42.36 ± 7.14 | 86.11 ± 3.09 * | 90.44 ± 4.16 * | 35.16 ± 3.31 |
| ARCIKELM | 89.20 ± 0.297 | 83.58 ± 0.679 | 85.91 ± 0.759 | 82.86 ± 0.587 | 95.68 ± 0.130 | 91.95 ± 0.283 | 93.79 ± 0.235 | 91.07 ± 0.360 |
| PKELM (RBF) | 90.98 ± 0 | 84.15 ± 0 | 86.04 ± 0 | 83.57 ± 0 | 96.76 ± 0 | 93.66 ± 0 | 94.99 ± 0 | 92.98 ± 0 |
| PKELM (chi-squared) | 91.18 ± 0 | 84.46 ± 0 | 86.58 ± 0 | 83.78 ± 0 | 96.56 ± 0 | 93.86 ± 0 | 95.21 ± 0 | 93.30 ± 0 |
| UECFOOD256 | | | | | | | | |
| ELM (batch) | 78.38 ± 0.087 | 77.60 ± 0.093 | 80.16 ± 0.010 | 78.64 ± 0.083 | 89.51 ± 0.016 | 89.18 ± 0.093 | 90.69 ± 0.010 | 89.55 ± 0.083 |
| RKELM (batch) | 79.00 ± 0.035 | 78.24 ± 0.048 | 80.85 ± 0.033 | 79.33 ± 0.035 | 90.50 ± 0.015 | 90.23 ± 0.014 | 91.65 ± 0.016 | 90.52 ± 0.013 |
| CIELM | 68.08 ± 1.090 | 68.97 ± 1.145 | 74.55 ± 1.196 | 69.55 ± 1.086 | 79.76 ± 1.542 | 78.99 ± 1.825 | 82.74 ± 1.467 | 79.71 ± 1.750 |
| ACIELM | 39.82 ± 4.14 | 69.76 ± 4.12 * | 88.11 ± 2.21 * | 39.83 ± 3.97 | 41.43 ± 5.11 | 69.61 ± 4.09 * | 89.11 ± 2.27 * | 40.91 ± 4.06 |
| ARCIKELM | 78.85 ± 0.131 | 77.89 ± 0.276 | 80.54 ± 0.532 | 79.02 ± 0.163 | 90.27 ± 0.131 | 89.99 ± 0.185 | 91.43 ± 0.198 | 90.28 ± 0.167 |
| PKELM (RBF) | 79.34 ± 0 | 78.83 ± 0 | 81.31 ± 0 | 79.49 ± 0 | 90.33 ± 0 | 90.12 ± 0 | 91.43 ± 0 | 90.29 ± 0 |
| PKELM (chi-squared) | 79.45 ± 0 | 78.97 ± 0 | 81.88 ± 0 | 79.75 ± 0 | 91.48 ± 0 | 90.98 ± 0 | 92.18 ± 0 | 91.14 ± 0 |

| Method | Accuracy | Average Accuracy of All Sessions |
|---|---|---|
| EnsembleNet [46] | 72.10% | - |
| ResNet-152+SVM-RBF [48] | 64.98% | - |
| Liu et al. [18] | 77.00% | - |
| GAN [49] | 75.30% | - |
| CNN+Inception [45] | 77.40% | - |
| SELC [26] | 55.89% | - |
| ARCIKELM [1] | 81.24% | - |
| QIF+SHAP+PKELM | 83.71% | 86.79% |

| Method | Accuracy | Average Accuracy of All Sessions |
|---|---|---|
| Liu et al. [18] | 54.50% | - |
| AlexNet [47] | 67.57% | - |
| ARCIKELM [1] | 69.32% | 75.85% |
| QIF+SHAP+PKELM | 71.12% | 79.45% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tahir, G.A.; Loo, C.K. Progressive Kernel Extreme Learning Machine for Food Image Analysis via Optimal Features from Quality Resilient CNN. Appl. Sci. 2021, 11, 9562. https://doi.org/10.3390/app11209562