The Effect of Task Complexity on Time Estimation in the Virtual Reality Environment: An EEG Study

Abstract

1. Introduction

1.1. Task Complexity and Time Estimation

1.2. Task Complexity and Electroencephalography

1.3. NASA-Task Load Index

2. Methods

2.1. Participants

2.2. Apparatus

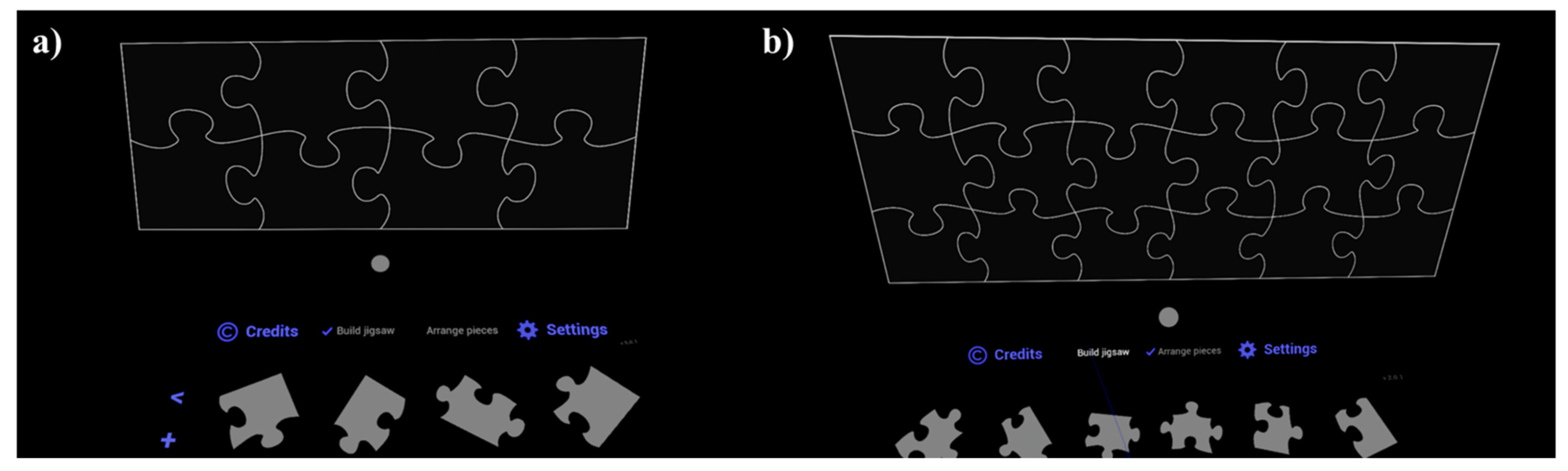

2.3. Experimental Design

2.4. Procedure

2.5. Data Analysis

2.5.1. Absolute Time Estimation Error

2.5.2. Relative Beta-Band Power

2.5.3. NASA-TLX

3. Results

3.1. Descriptive Statistics

3.2. The Effect of Task Complexity and Block Sequence on Dependent Variables

3.2.1. Absolute Time Estimation Error

3.2.2. Relative Beta-Band Power of EEG

3.2.3. Subjective Workload

3.3. Correlations among Responses

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Variables | Levels |
|---|---|
| Independent variables | Task complexity (low, high) |
| | Block sequence (first, second, third) |
| Dependent variables | Absolute time estimation error |
| | Relative beta-band power |
| | The NASA-TLX score |

| Responses | Low complexity: mean | Low complexity: 95% CI | High complexity: mean | High complexity: 95% CI |
|---|---|---|---|---|
| Task completion time | 47.74 | [45.00, 50.47] | 140.69 | [130.98, 150.40] |
| Estimated time | 56.78 | [50.32, 63.24] | 168.07 | [151.70, 184.44] |
| Absolute time estimation error | 18.95 | [14.92, 22.99] | 56.87 | [46.86, 66.89] |
| NASA-TLX score | 3.35 | [2.91, 3.79] | 5.56 | [5.04, 6.08] |
| Relative beta-band power at Cz | 0.20 | [0.19, 0.20] | 0.19 | [0.18, 0.20] |
| Relative beta-band power at Fz | 0.18 | [0.17, 0.19] | 0.17 | [0.16, 0.18] |
| Relative beta-band power at Pz | 0.20 | [0.19, 0.21] | 0.19 | [0.19, 0.20] |
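
A reader may notice that, in the descriptive statistics above, the mean absolute time estimation error under low complexity (18.95) is larger than the gap between the mean estimated time and the mean task completion time (56.78 − 47.74 = 9.04). The sketch below illustrates why that can happen, assuming the absolute error is computed per trial as |estimated time − task completion time| and then averaged. That definition, the function name, and the trial values are illustrative assumptions, not taken from the study.

```python
from statistics import mean


def absolute_estimation_error(estimated, actual):
    """Return |estimated - actual| for one trial (same time unit for both)."""
    return abs(estimated - actual)


# Hypothetical trials as (actual completion time, estimated time).
# These numbers are made up for illustration only.
trials = [(50.0, 70.0), (45.0, 30.0), (48.0, 52.0)]

# Per-trial absolute errors: over- and under-estimates both count as positive.
errors = [absolute_estimation_error(est, act) for act, est in trials]
mean_abs_error = mean(errors)

# Gap between the two means: over- and under-estimates partly cancel here.
mean_actual = mean(act for act, _ in trials)
mean_estimated = mean(est for _, est in trials)
gap_between_means = abs(mean_estimated - mean_actual)

print(f"mean absolute error: {mean_abs_error:.2f}")
print(f"gap between means:   {gap_between_means:.2f}")
```

Because over-estimates and under-estimates cancel in the difference of means but not in the per-trial absolute errors, the mean absolute error is never smaller than the gap between the means.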

| Variables | Coefficients | p-Value |
|---|---|---|
| Absolute time estimation error and relative beta-band power at Cz | −0.25 | 0.01 * |
| Absolute time estimation error and relative beta-band power at Fz | −0.16 | 0.11 |
| Absolute time estimation error and relative beta-band power at Pz | −0.17 | 0.08 |
| Absolute time estimation error and NASA-TLX score | 0.55 | <0.001 *** |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Kim, J.-E. The Effect of Task Complexity on Time Estimation in the Virtual Reality Environment: An EEG Study. Appl. Sci. 2021, 11, 9779. https://doi.org/10.3390/app11209779
