Integration of Sentinel 1 and Sentinel 2 Satellite Images for Crop Mapping

Abstract

1. Introduction

- Combine Sentinel 1 and Sentinel 2 time series data;

- Classify the majority of strategic national crops;

- Provide crop classification data with acceptable accuracy.
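
One common way to combine the two sensors is feature-level stacking: the per-month features of each sensor are concatenated into a single vector per parcel before classification. The following is a minimal sketch of that idea, not necessarily the procedure used in this study. The function name `stack_features`, the choice of NDVI for Sentinel 2 and VV/VH backscatter for Sentinel 1, and all numeric values are illustrative assumptions.

```python
from typing import Dict, List


def stack_features(
    s1: Dict[str, List[float]],
    s2: Dict[str, List[float]],
    months: List[str],
) -> List[float]:
    """Concatenate monthly Sentinel 2 and Sentinel 1 features for one parcel.

    The output holds all Sentinel 2 features in month order, followed by
    all Sentinel 1 features in month order.
    """
    vector: List[float] = []
    for month in months:
        vector.extend(s2[month])  # optical features (assumed here: NDVI)
    for month in months:
        vector.extend(s1[month])  # radar features (assumed here: VV, VH in dB)
    return vector


# Hypothetical parcel with two months of observations.
s1_parcel = {"March": [-11.2, -17.5], "April": [-10.4, -16.9]}
s2_parcel = {"March": [0.31], "April": [0.52]}

parcel_vector = stack_features(s1_parcel, s2_parcel, ["March", "April"])
print(parcel_vector)
```

Restricting `months` to a prefix of the growing season (for example March only, then March and April) gives one feature vector per cumulative time window.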

2. Materials and Methods

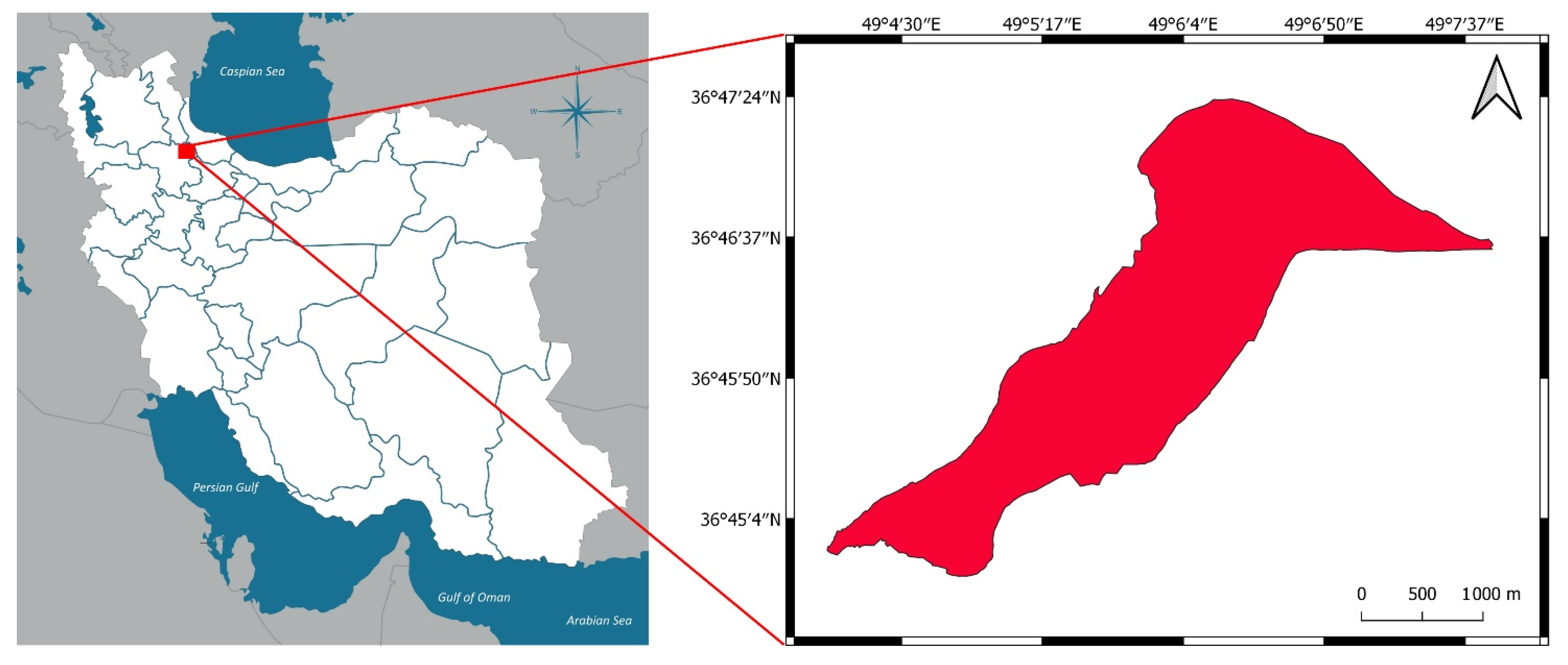

2.1. Study Area

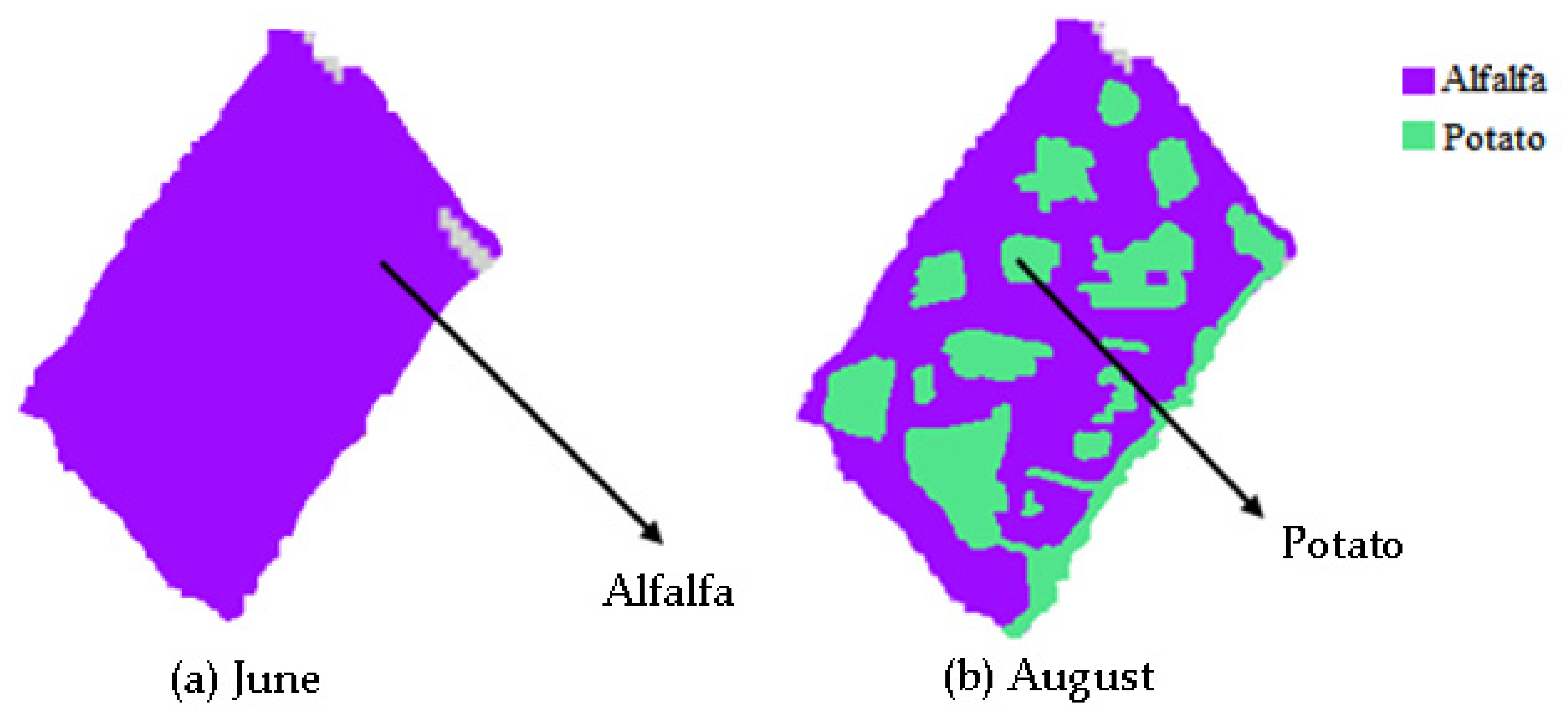

2.2. Field Data

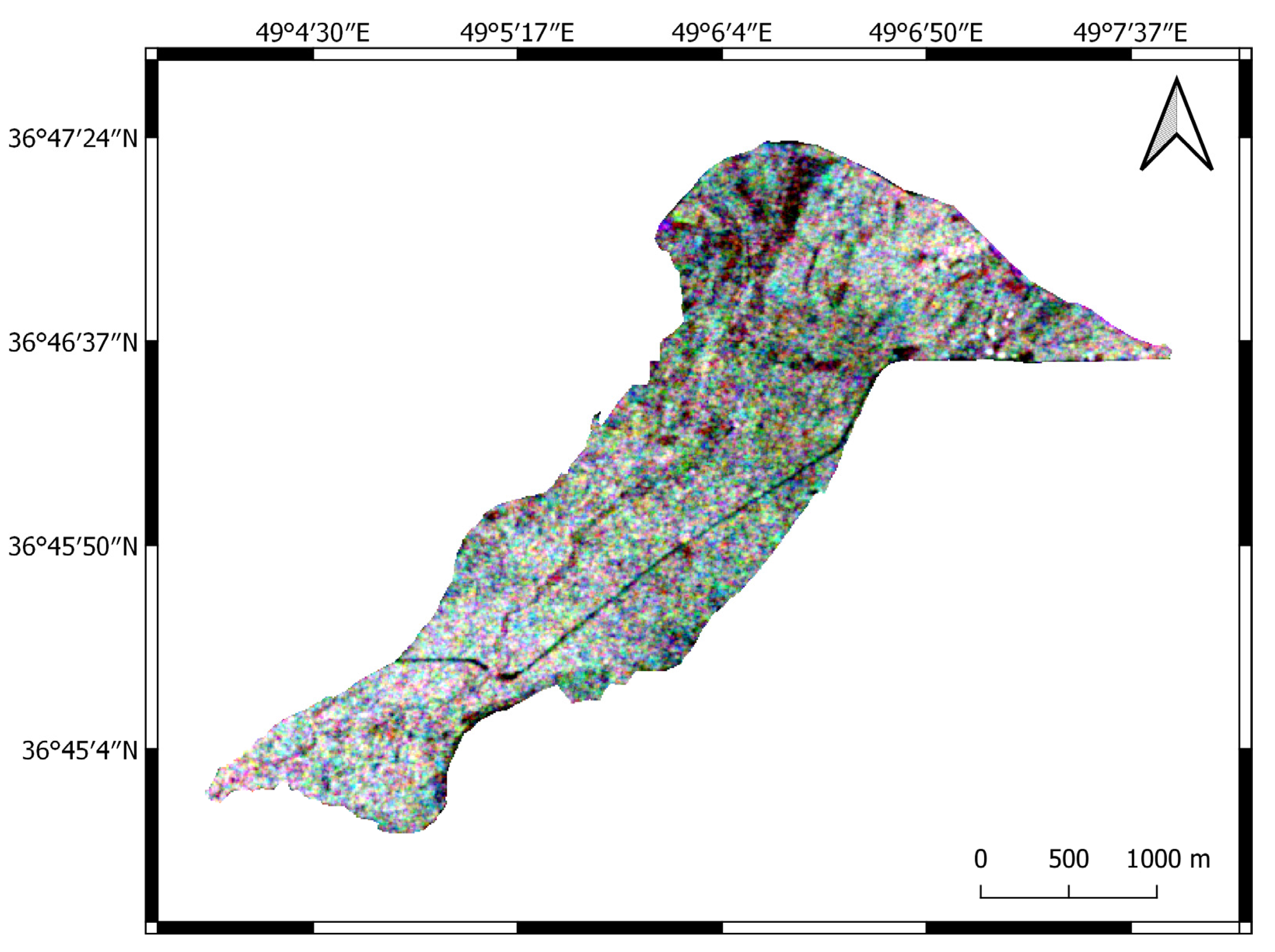

2.3. Sentinel 1 Data

2.4. Sentinel 2 Data

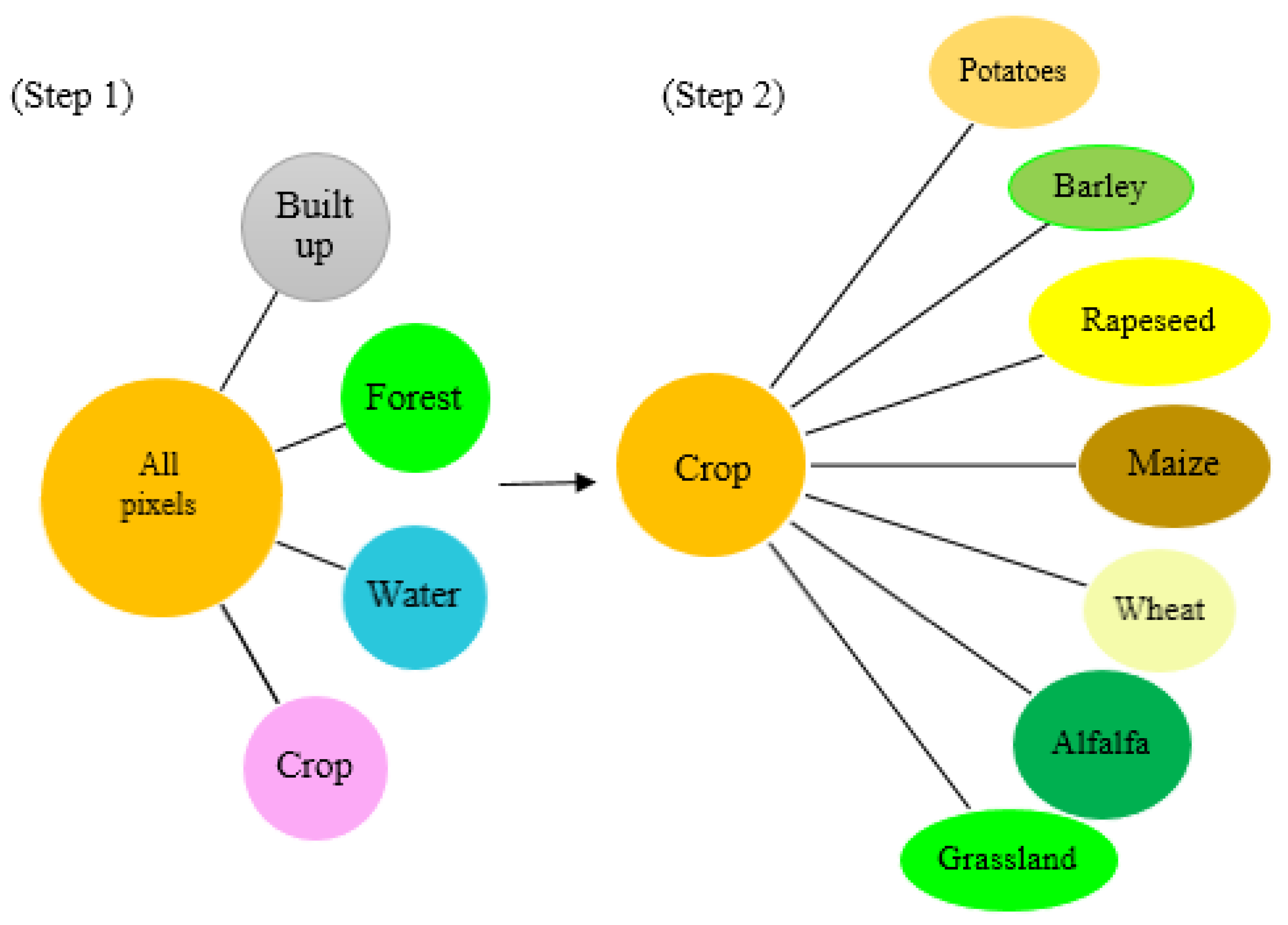

2.5. Hierarchical Random Forest Classification

2.6. Calibration and Validation Data

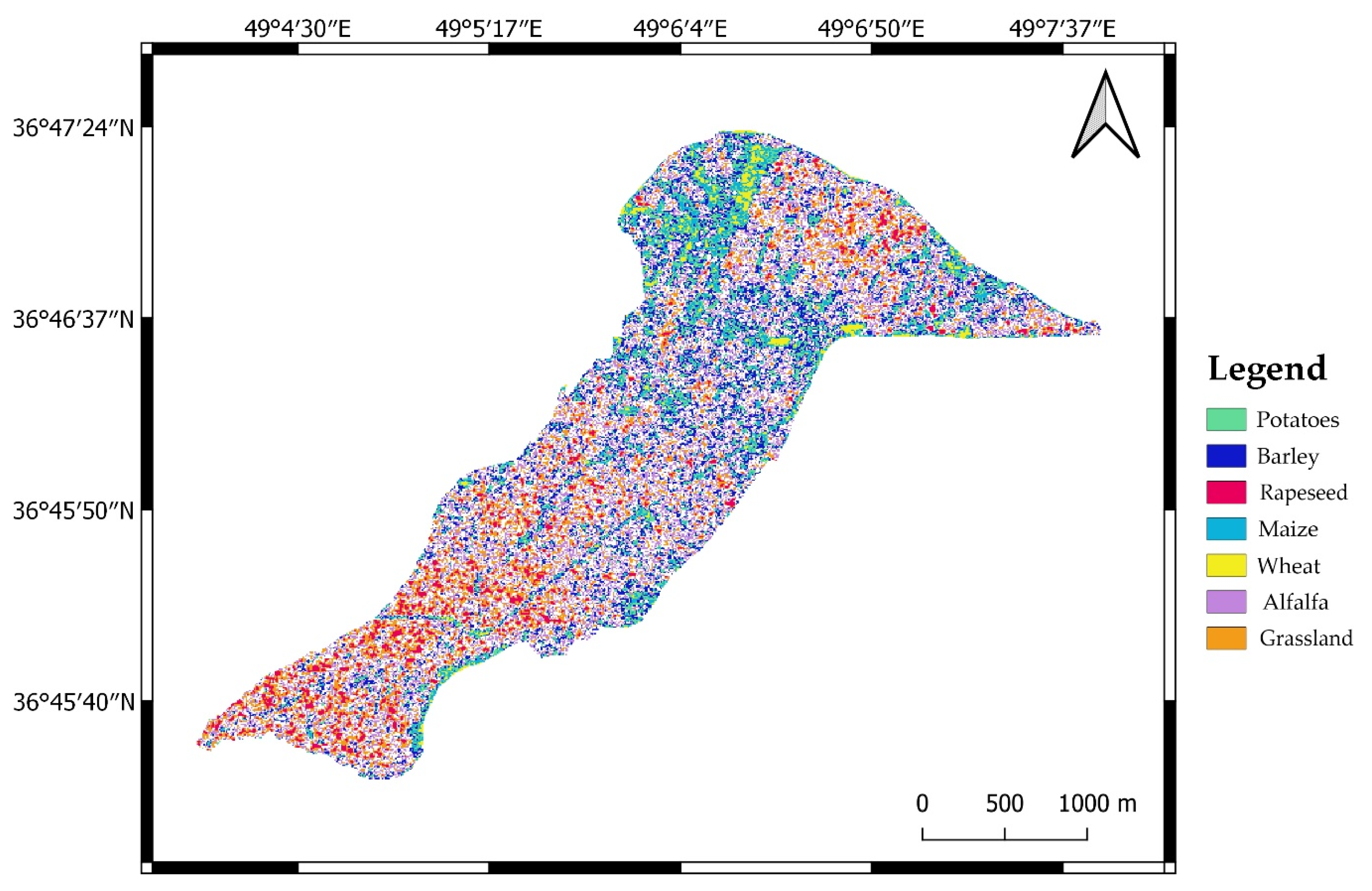

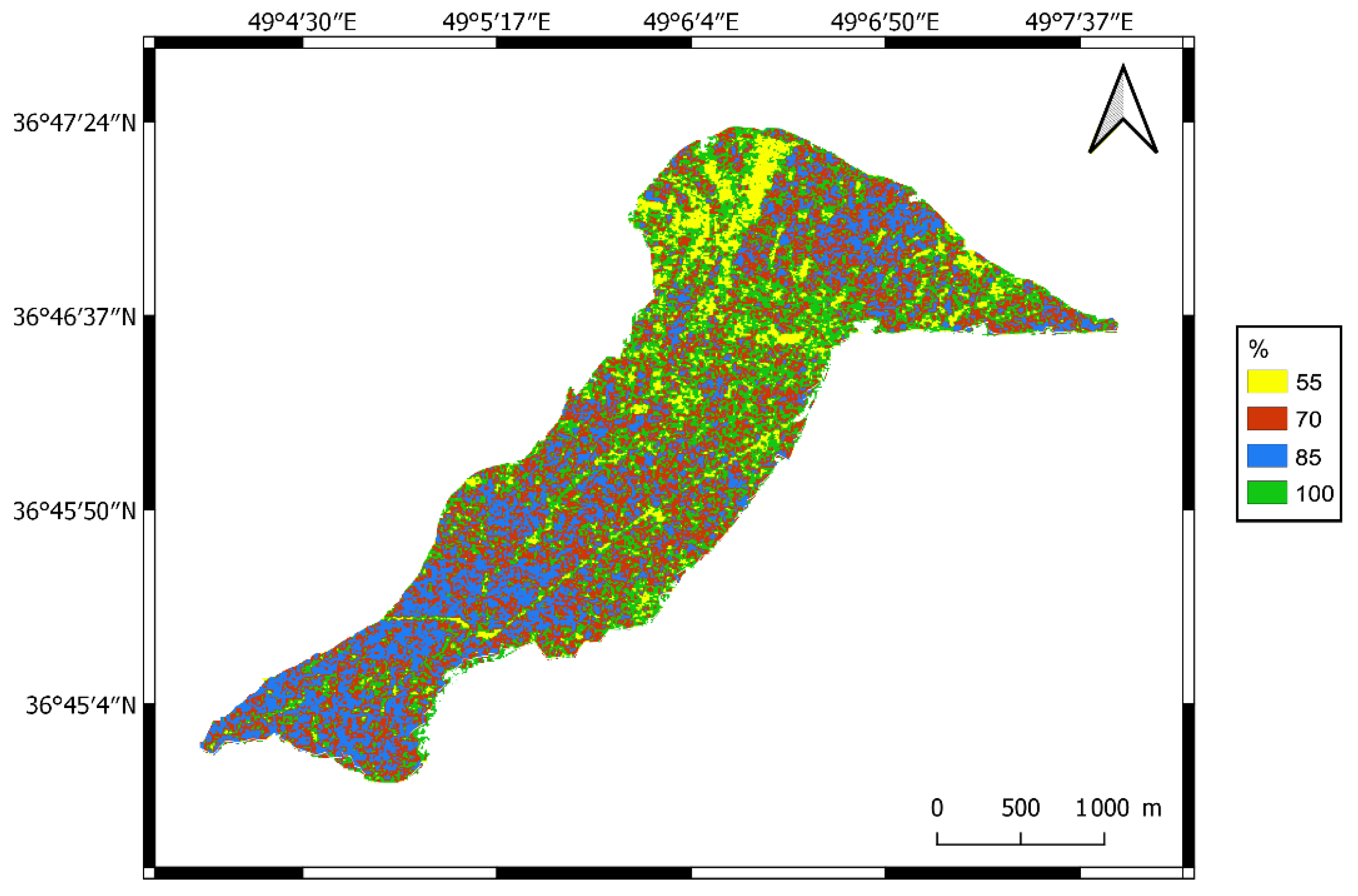

2.7. Classification Schemes

2.8. Classification Accuracy

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Code | Class | Calibration Parcels | Validation Parcels | Calibration Pixels | Validation Pixels |
|---|---|---|---|---|---|
| 1 | Potatoes | 613 | 5522 | 121,884 | 3,867,261 |
| 2 | Barley | 175 | 1590 | 31,189 | 1,333,238 |
| 3 | Rapeseed | 10 | 77 | 1520 | 50,159 |
| 4 | Maize | 1894 | 17,063 | 293,880 | 15,614,296 |
| 5 | Wheat | 848 | 7652 | 171,864 | 5,700,540 |
| 6 | Alfalfa | 352 | 3179 | 73,059 | 1,913,304 |
| 7 | Grassland | 2614 | 23,549 | 322,425 | 21,200,420 |
| - | Total | 7567 | 67,481 | 1,426,520 | 56,515,322 |
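
The parcel counts in the table suggest that roughly one parcel in ten of each class was used for calibration. As a minimal sketch, the share can be computed directly from the seven listed classes. The helper name `calibration_share` is hypothetical, and how the parcels were actually assigned to each set is not stated here.

```python
# (calibration parcels, validation parcels) per class, copied from the table.
parcels = {
    "Potatoes": (613, 5522),
    "Barley": (175, 1590),
    "Rapeseed": (10, 77),
    "Maize": (1894, 17063),
    "Wheat": (848, 7652),
    "Alfalfa": (352, 3179),
    "Grassland": (2614, 23549),
}


def calibration_share(calibration: int, validation: int) -> float:
    """Fraction of a class's parcels that fall in the calibration set."""
    return calibration / (calibration + validation)


shares = {
    name: calibration_share(cal, val) for name, (cal, val) in parcels.items()
}

for name, share in shares.items():
    print(f"{name:<10} {share:.3f}")
```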

| # | S2 March | S2 April | S2 May | S2 June | S2 July | S2 August | S1 March | S1 April | S1 May | S1 June | S1 July | S1 August | OA | κ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | X | | | | | | | | | | | | 0.44 | 0.33 |
| 2 | X | X | | | | | | | | | | | 0.60 | 0.52 |
| 3 | X | X | X | | | | | | | | | | 0.72 | 0.61 |
| 4 | X | X | X | X | | | | | | | | | 0.73 | 0.70 |
| 5 | X | X | X | X | X | | | | | | | | 0.72 | 0.64 |
| 6 | X | X | X | X | X | X | | | | | | | 0.78 | 0.70 |
| 7 | | | | | | | X | | | | | | 0.42 | 0.30 |
| 8 | | | | | | | X | X | | | | | 0.56 | 0.42 |
| 9 | | | | | | | X | X | X | | | | 0.70 | 0.57 |
| 10 | | | | | | | X | X | X | X | | | 0.73 | 0.65 |
| 11 | | | | | | | X | X | X | X | X | | 0.74 | 0.72 |
| 12 | | | | | | | X | X | X | X | X | X | 0.80 | 0.73 |
| 13 | X | | | | | | X | | | | | | 0.55 | 0.42 |
| 14 | X | X | | | | | X | X | | | | | 0.67 | 0.57 |
| 15 | X | X | X | | | | X | X | X | | | | 0.74 | 0.67 |
| 16 | X | X | X | X | | | X | X | X | X | | | 0.81 | 0.74 |
| 17 | X | X | X | X | X | | X | X | X | X | X | | 0.83 | 0.80 |
| 18 | X | X | X | X | X | X | X | X | X | X | X | X | 0.84 | 0.79 |
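
Overall accuracy (OA) and Cohen's kappa (κ) are conventionally derived from a confusion matrix: OA is the fraction of correctly classified samples, and κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement (equal to OA) and p_e is the agreement expected by chance from the row and column totals. The sketch below assumes those standard definitions. The function names and the three-class confusion matrix are hypothetical and are not taken from the study.

```python
from typing import List

Matrix = List[List[int]]


def overall_accuracy(cm: Matrix) -> float:
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm: Matrix) -> float:
    """Cohen's kappa: agreement beyond what is expected by chance."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = overall_accuracy(cm)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement from the marginal totals.
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical confusion matrix (rows: reference, columns: predicted).
example_cm = [
    [50, 5, 5],
    [4, 40, 6],
    [6, 4, 30],
]

oa = overall_accuracy(example_cm)
kappa = cohen_kappa(example_cm)
print(f"OA = {oa:.2f}, kappa = {kappa:.2f}")
```

Because κ discounts chance agreement, it is lower than OA whenever the classifier is imperfect, which matches the pattern in the table.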

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Felegari, S.; Sharifi, A.; Moravej, K.; Amin, M.; Golchin, A.; Muzirafuti, A.; Tariq, A.; Zhao, N. Integration of Sentinel 1 and Sentinel 2 Satellite Images for Crop Mapping. Appl. Sci. 2021, 11, 10104. https://doi.org/10.3390/app112110104
