Effect of Target Size, Location, and Input Method on Interaction in Immersive Virtual Reality

Abstract

1. Introduction

2. Related Work

2.1. Fitts’ Law

2.2. Virtual Reality Sickness Questionnaire

3. Method

3.1. Participants

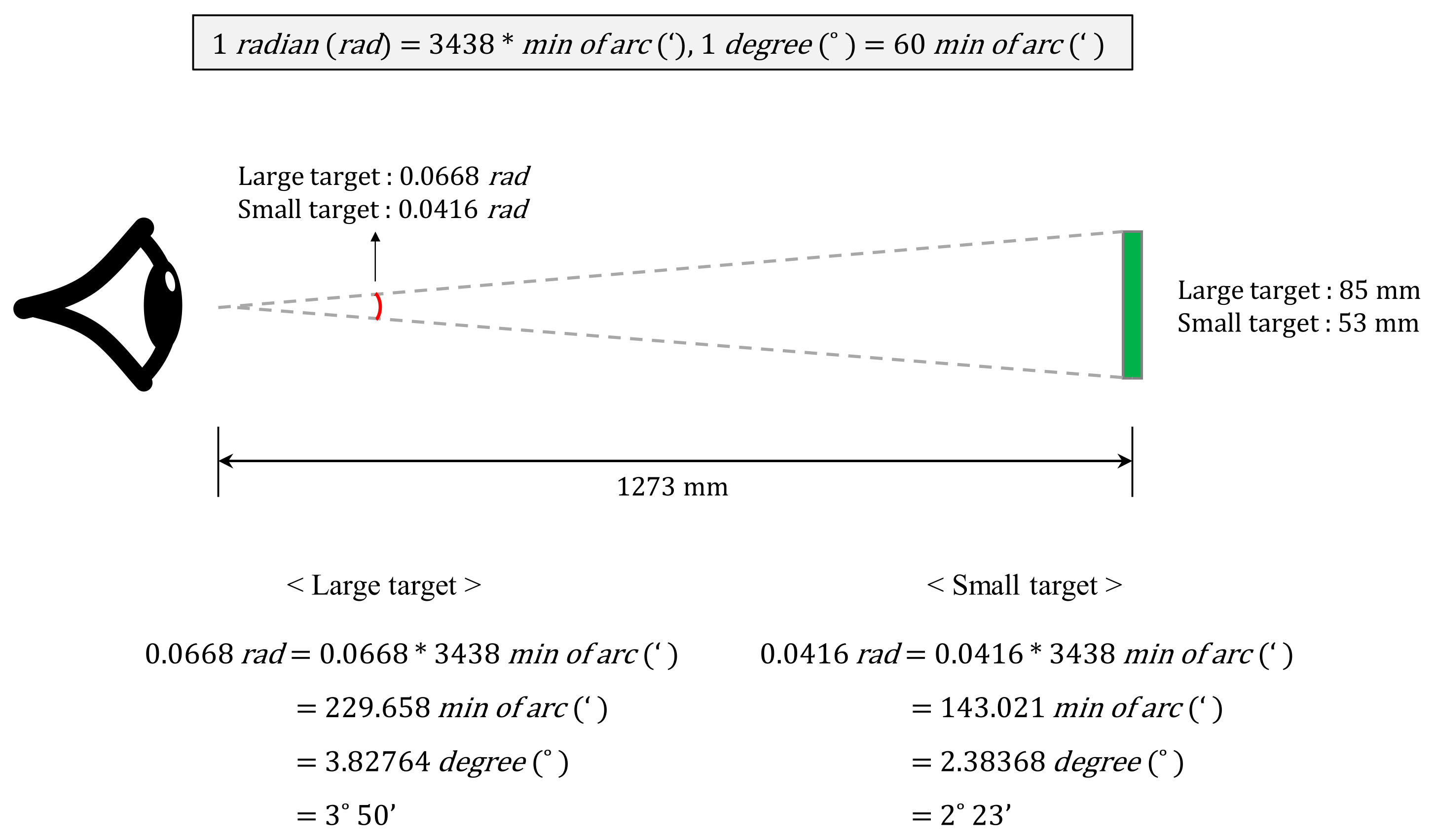

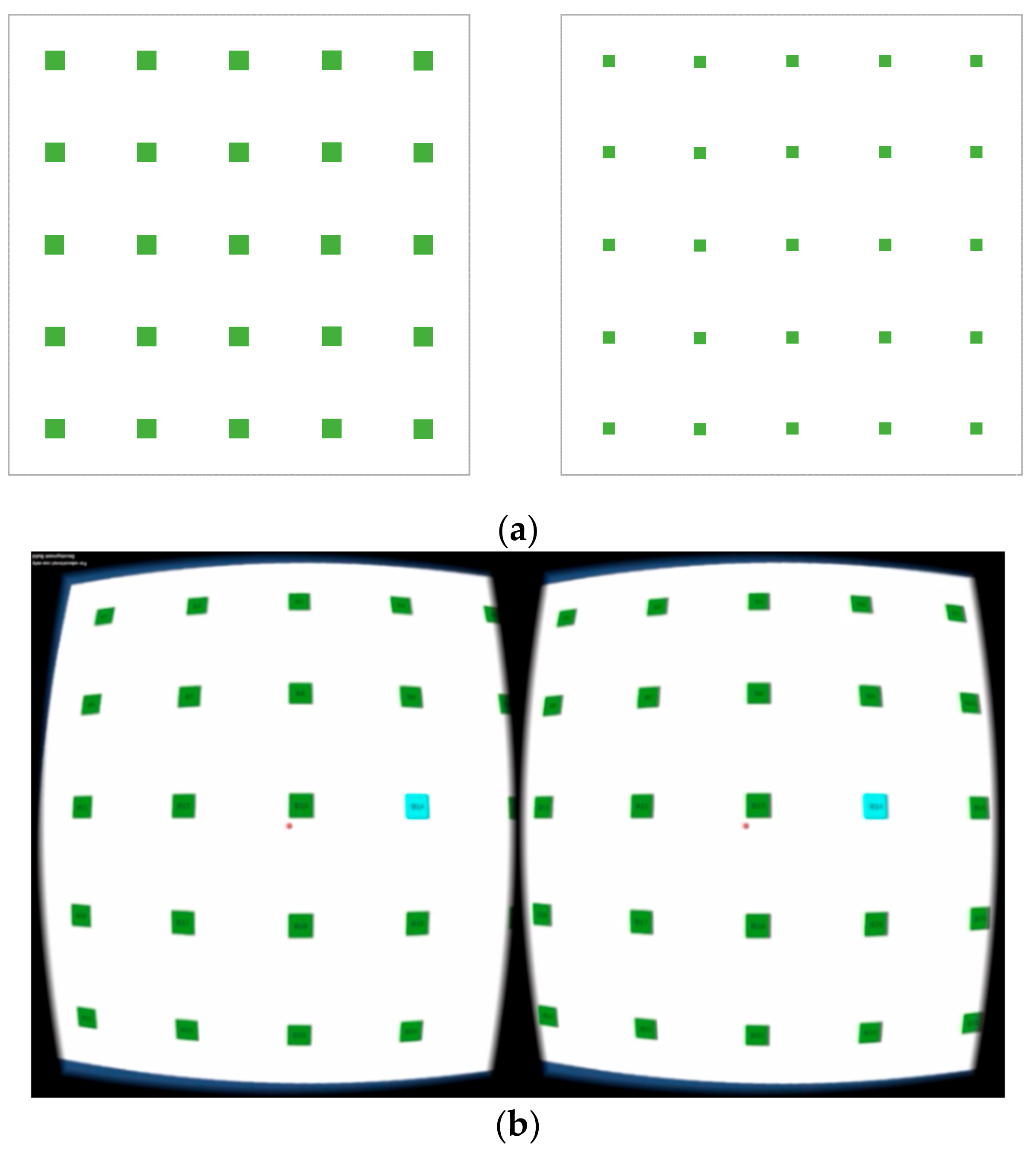

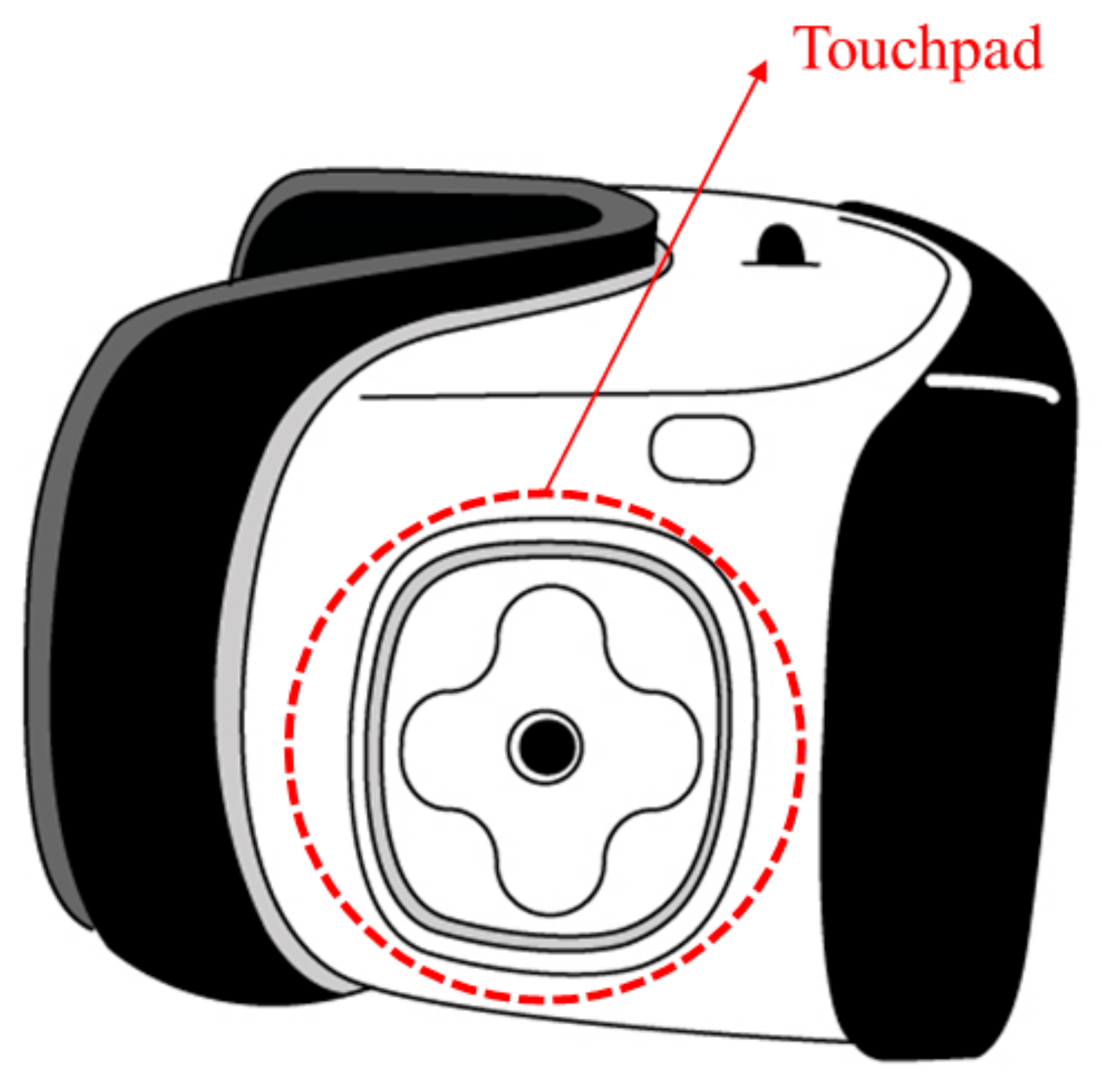

3.2. Apparatus and Application

3.3. Experimental Measurement

3.4. Procedure

4. Results

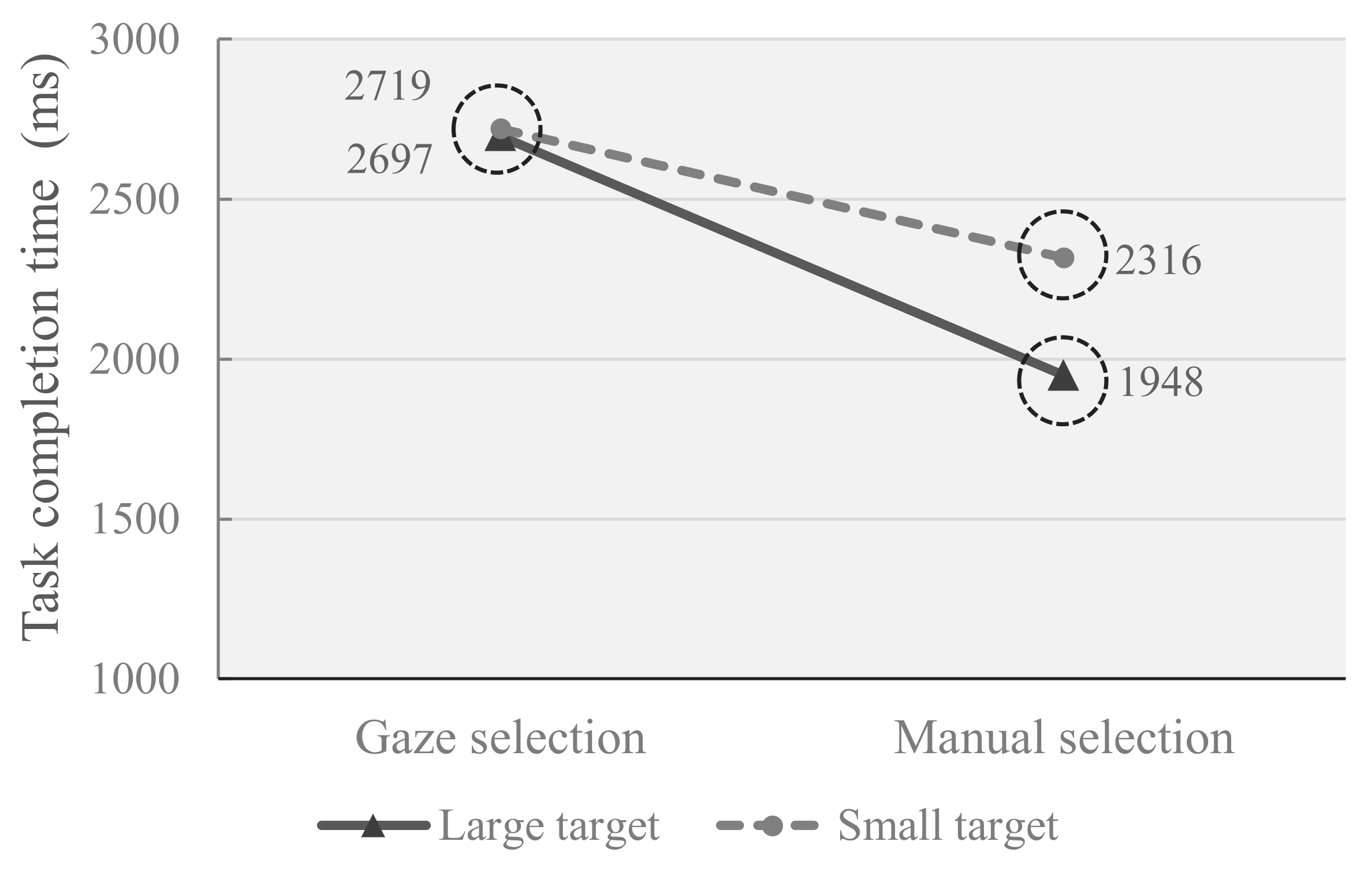

4.1. Task Completion Time

4.2. Error Rate

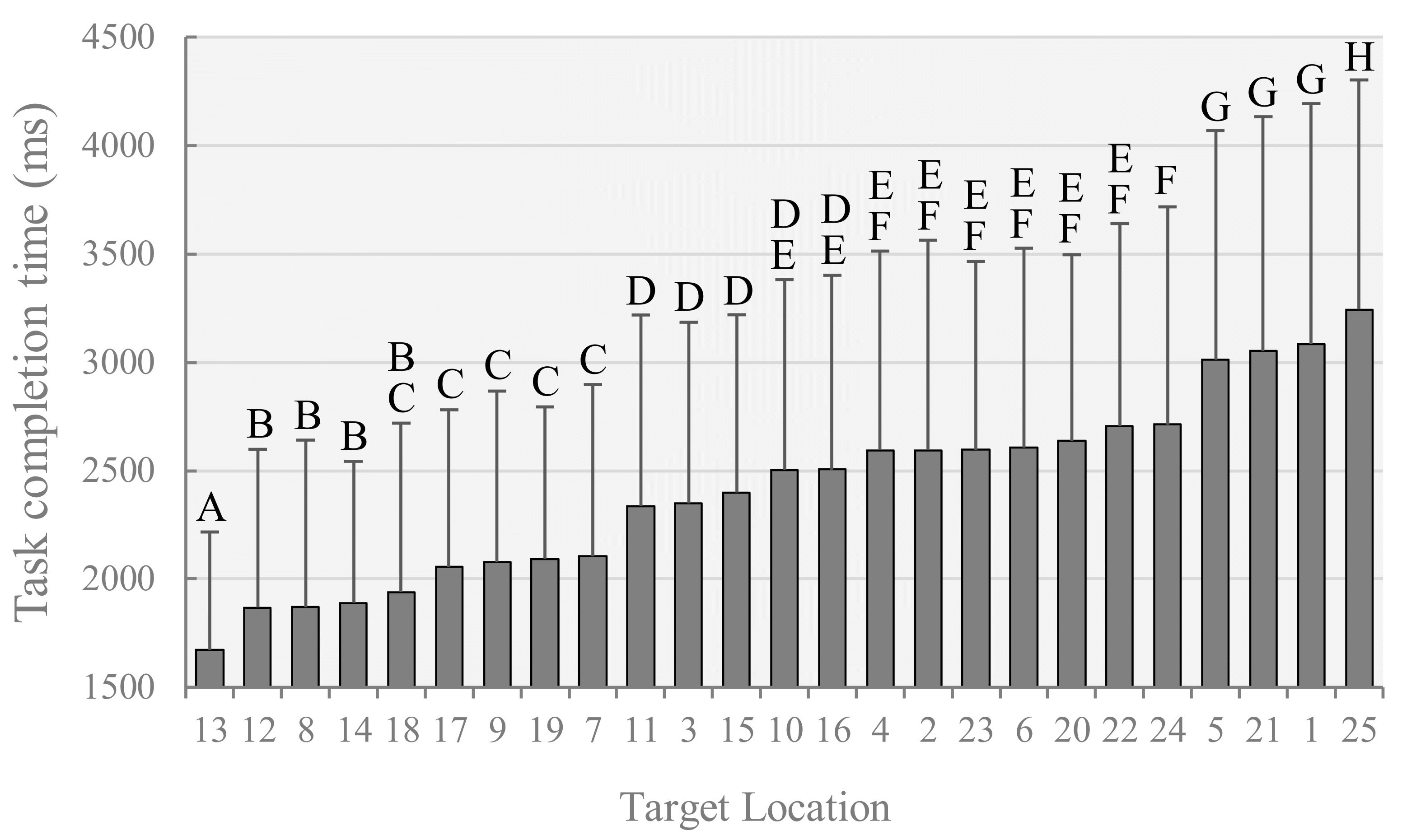

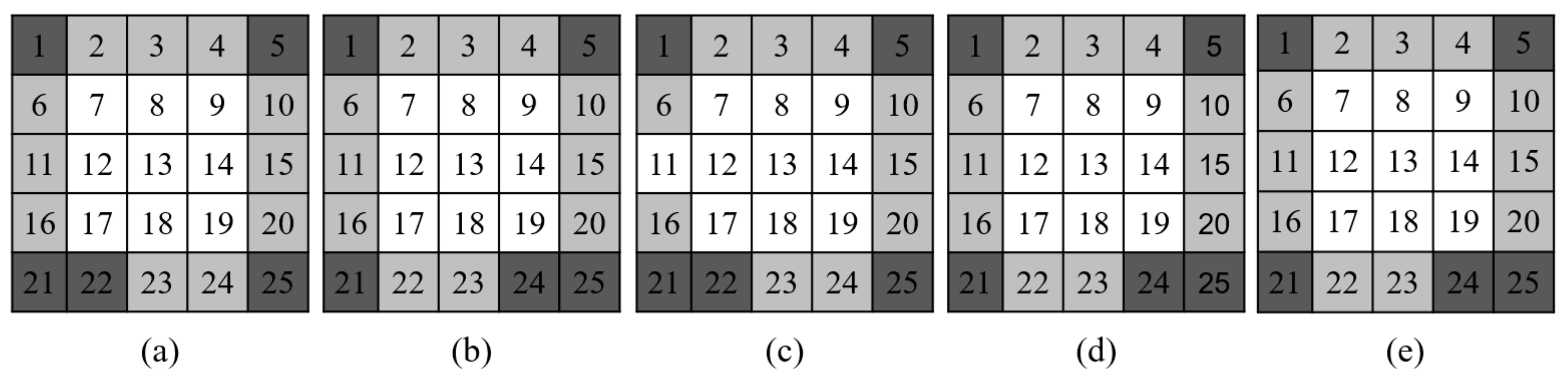

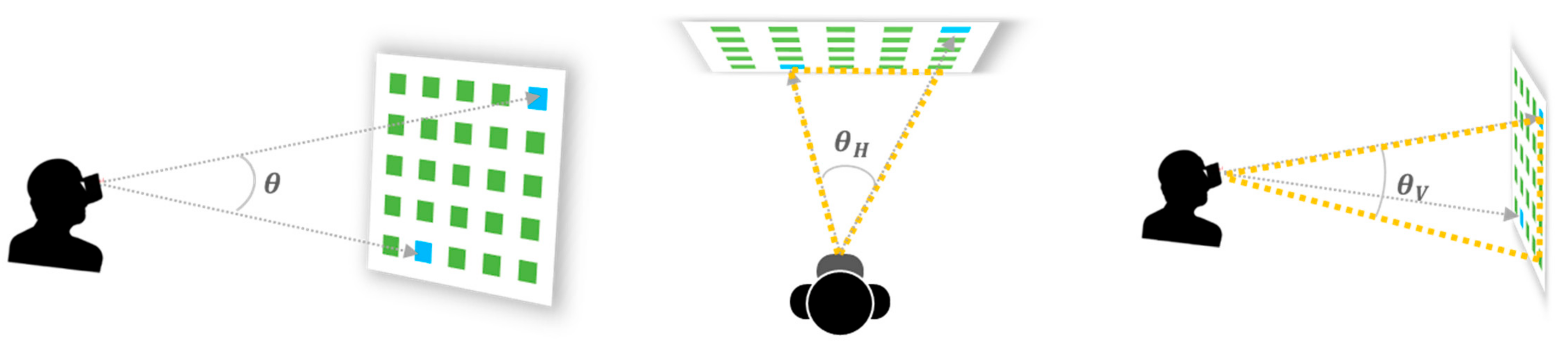

4.3. Target Location

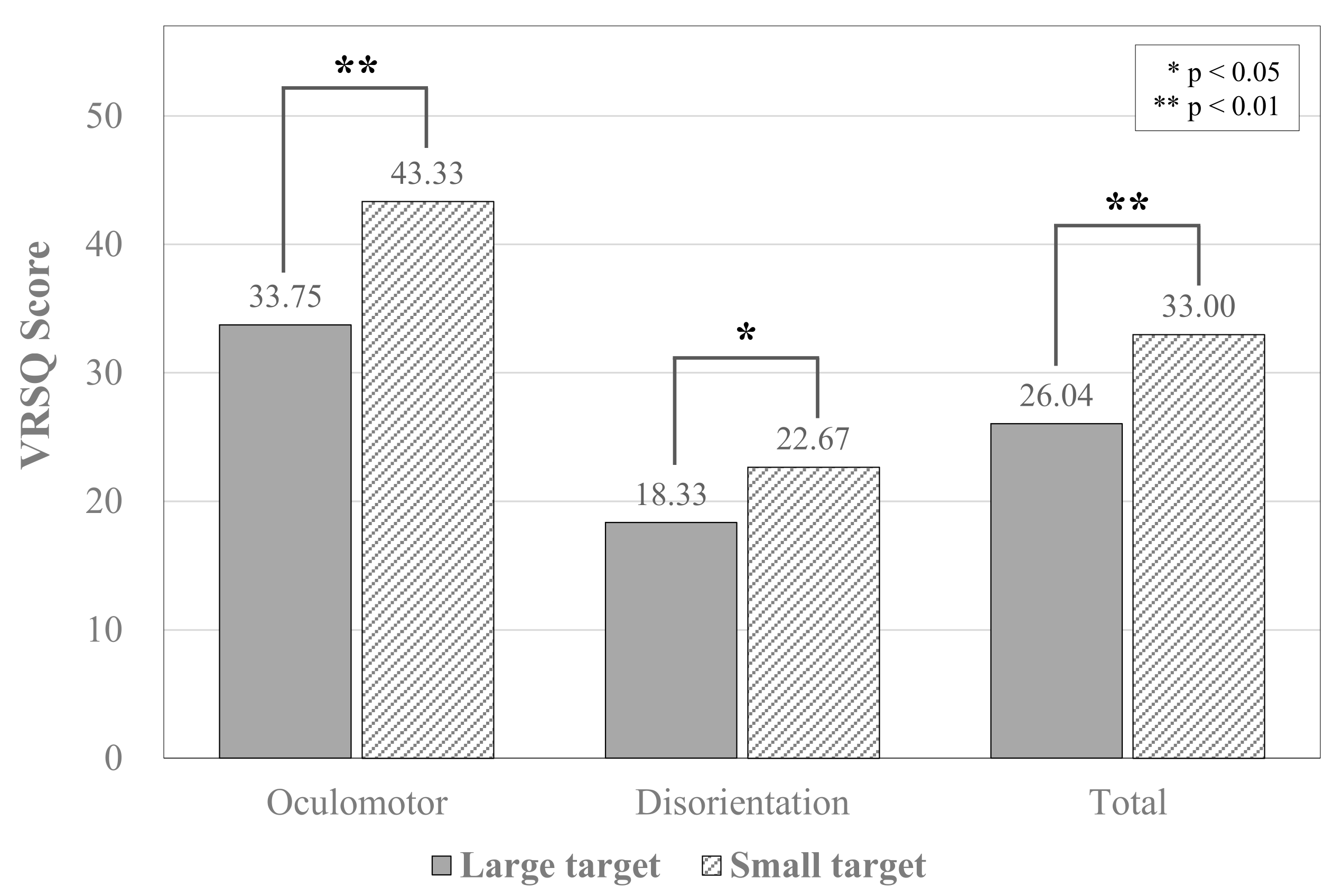

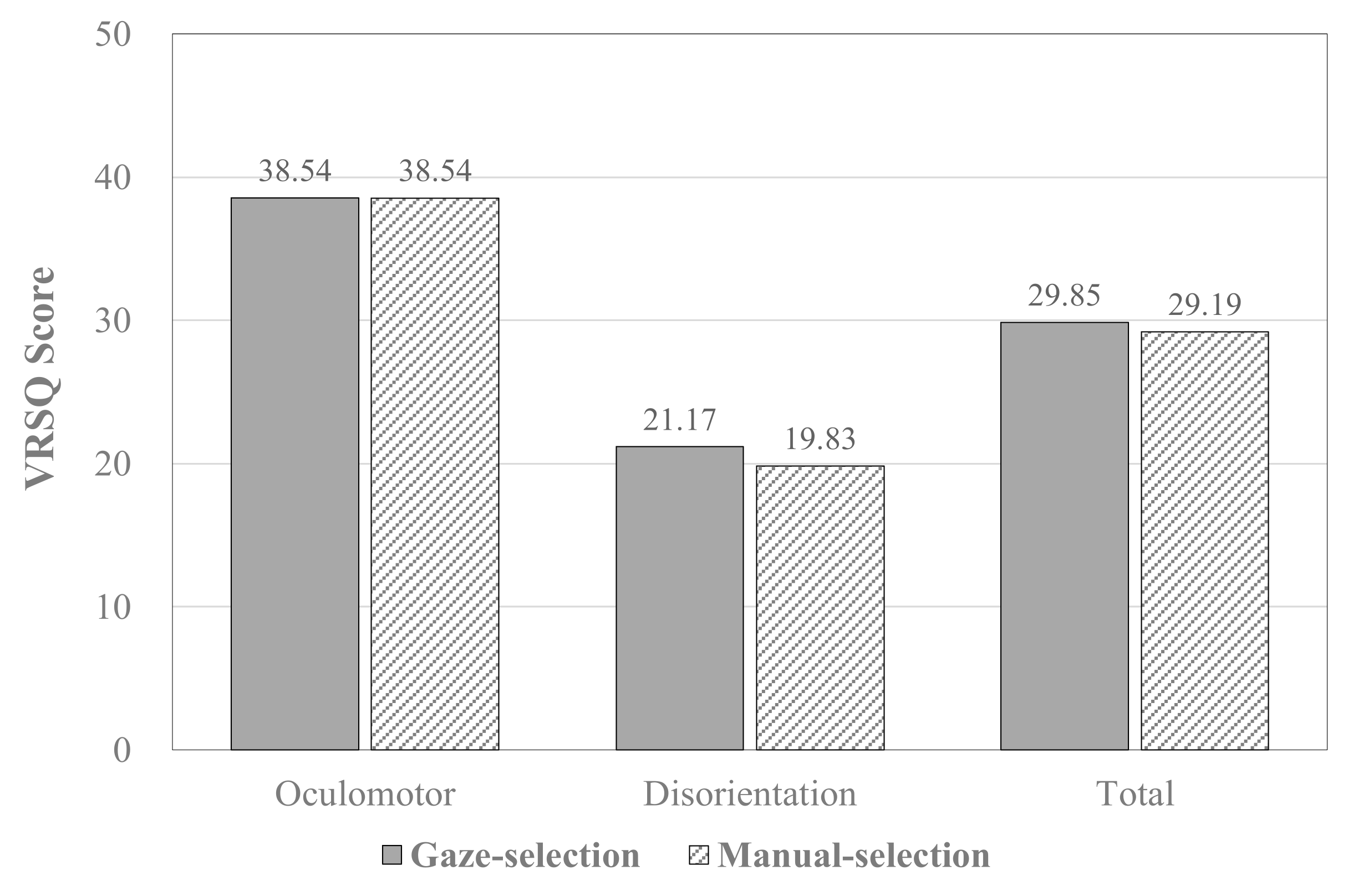

4.4. Virtual Reality Sickness

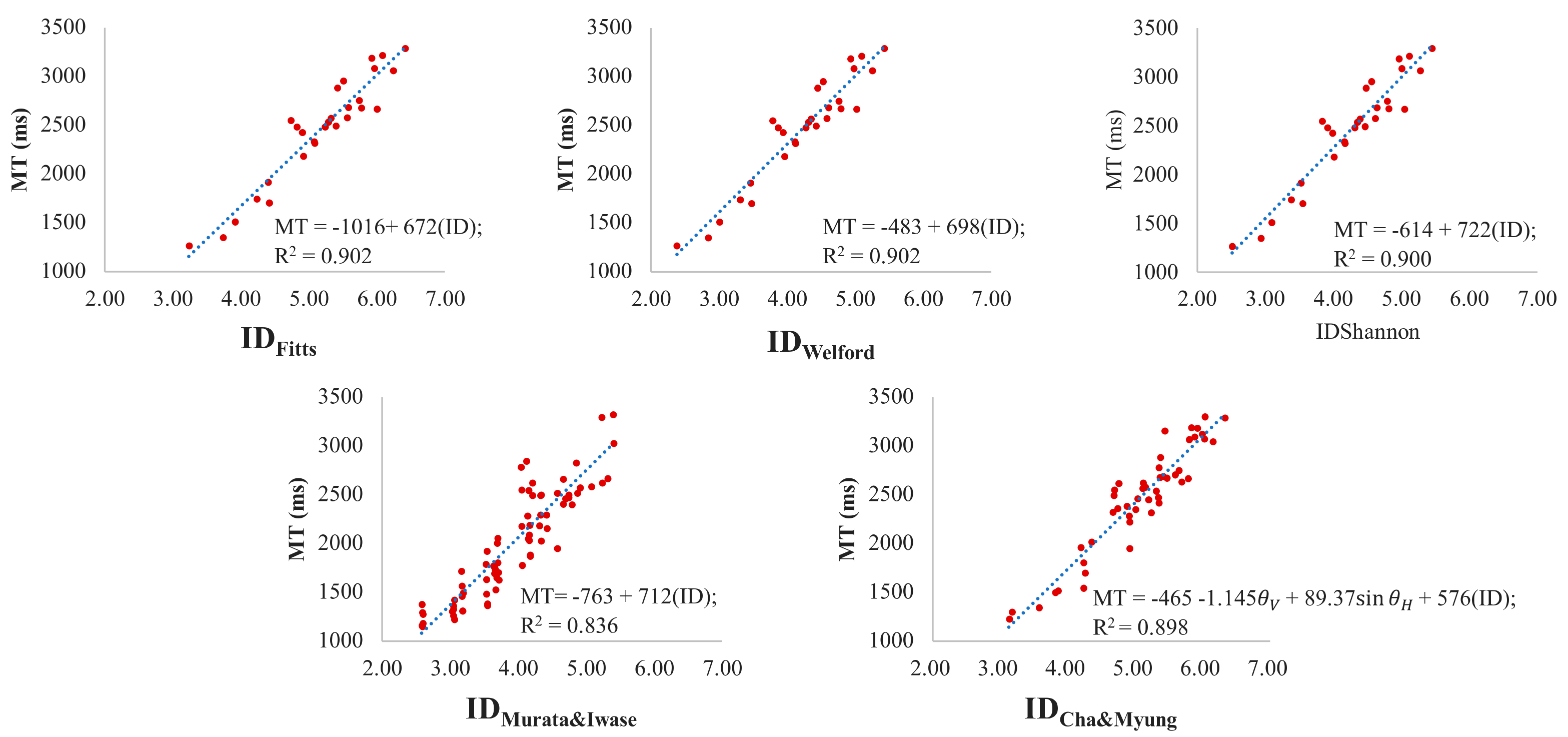

4.5. Application of Fitts’ Law

5. Discussion

5.1. Factors Affecting the Task Performance

5.2. Influence of Target Position

5.3. Application of Fitts’ Law

5.4. Measuring VR Sickness through VRSQ

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Porcino, T.M.; Clua, E.; Trevisan, D.; Vasconcelos, C.N.; Valente, L. Minimizing cyber sickness in head mounted display systems: Design guidelines and applications. In Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH), Perth, WA, Australia, 2–4 April 2017; pp. 1–6.

- Waltemate, T.; Senna, I.; Hülsmann, F.; Rohde, M.; Kopp, S.; Ernst, M.; Botsch, M. The impact of latency on perceptual judgments and motor performance in closed-loop interaction in virtual reality. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, Munich, Germany, 2–4 November 2016; pp. 27–35.

- Cevette, M.; Stepanek, J.; Galea, A. Galvanic Vestibular Stimulation System and Method of Use for Simulation, Directional Cueing, and Alleviating Motion-Related Sickness. U.S. Patent 8,718,796, 6 May 2014.

- Ruddle, R.A. The effect of environment characteristics and user interaction on levels of virtual environment sickness. In Proceedings of the IEEE Virtual Reality 2004, Chicago, IL, USA, 27–31 March 2004; pp. 141–285.

- Groen, E.L.; Bos, J.E. Simulator sickness depends on frequency of the simulator motion mismatch: An observation. Presence 2008, 17, 584–593.

- Lu, D. Virtual Reality Sickness during Immersion: An Investigation of Potential Obstacles towards General Accessibility of VR Technology. Master’s Thesis, Uppsala University, Uppsala, Sweden, 2016.

- Marks, S.; Estevez, J.E.; Connor, A.M. Towards the Holodeck: Fully immersive virtual reality visualisation of scientific and engineering data. In Proceedings of the 29th International Conference on Image and Vision Computing New Zealand, Hamilton, New Zealand, 19–21 November 2014; pp. 42–47.

- Hou, X.; Lu, Y.; Dey, S. Wireless VR/AR with edge/cloud computing. In Proceedings of the 2017 26th International Conference on Computer Communication and Networks (ICCCN), Vancouver, BC, Canada, 31 July–3 August 2017; pp. 1–8.

- Wagner, D. Motion-to-Photon Latency in Mobile AR and VR. Available online: https://medium.com/@DAQRI/motion-to-photon-latency-in-mobile-ar-and-vr-99f82c480926 (accessed on 13 October 2021).

- Kundu, R.K.; Rahman, A.; Paul, S. A Study on Sensor System Latency in VR Motion Sickness. J. Sens. Actuator Netw. 2021, 10, 53.

- Blum, T.; Wieczorek, M.; Aichert, A.; Tibrewal, R.; Navab, N. The effect of out-of-focus blur on visual discomfort when using stereo displays. In Proceedings of the 2010 IEEE International Symposium on Mixed and Augmented Reality, Seoul, Korea, 13–16 October 2010; pp. 13–17.

- Carnegie, K.; Rhee, T. Reducing visual discomfort with HMDs using dynamic depth of field. IEEE Comput. Graph. Appl. 2015, 35, 34–41.

- Fernandes, A.S.; Feiner, S.K. Combating VR sickness through subtle dynamic field-of-view modification. In Proceedings of the 2016 IEEE Symposium on 3D User Interfaces (3DUI), Greenville, SC, USA, 19–20 March 2016; pp. 201–210.

- Wienrich, C.; Weidner, C.K.; Schatto, C.; Obremski, D.; Israel, J.H. A virtual nose as a rest-frame: The impact on simulator sickness and game experience. In Proceedings of the 2018 10th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games), Würzburg, Germany, 5–7 September 2018; pp. 1–8.

- Buhler, H.; Misztal, S.; Schild, J. Reducing VR sickness through peripheral visual effects. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Tuebingen/Reutlingen, Germany, 18–22 March 2018; pp. 517–519.

- Whittinghill, D.M.; Ziegler, B.; Case, T.; Moore, B. Nasum virtualis: A simple technique for reducing simulator sickness. In Proceedings of the Games Developers Conference (GDC), San Francisco, CA, USA, 2–6 March 2015.

- Won, J.-H.; Kim, Y.S. A Study on Visually Induced VR Reduction Method for Virtual Reality Sickness. Appl. Sci. 2021, 11, 6339.

- Kim, J.-Y.; Son, J.-B.; Leem, H.-S.; Lee, S.-H. Psychophysiological alteration after virtual reality experiences using smartphone-assisted head mount displays: An EEG-based source localization study. Appl. Sci. 2019, 9, 2501.

- Grassini, S.; Laumann, K.; Luzi, A.K. Association of Individual Factors with Simulator Sickness and Sense of Presence in Virtual Reality mediated by head-mounted displays (HMDs). Multimodal Technol. Interact. 2021, 5, 7.

- So, R.H.; Griffin, M.J. Effects of a target movement direction cue on head-tracking performance. Ergonomics 2000, 43, 360–376.

- Jagacinski, R.J.; Monk, D.L. Fitts’ Law in Two dimensions with hand and head movements. J. Mot. Behav. 1985, 17, 77–95.

- Borah, J. Investigation of Eye and Head Controlled Cursor Positioning Techniques; Applied Science Labs: Bedford, MA, USA, 1 September 1995.

- Johnsgard, T. Fitts’ Law with a virtual reality glove and a mouse: Effects of gain. In Proceedings of the Graphics Interface, Canadian Information Processing Society, Banff, Alberta, Canada, 18–20 May 1994; pp. 8–15.

- Chen, J.; Or, C. Assessing the use of immersive virtual reality, mouse and touchscreen in pointing and dragging-and-dropping tasks among young, middle-aged and older adults. Appl. Ergon. 2017, 65, 437–448.

- D’Errico, F.; Leone, G.; Schmid, M.; D’Anna, C. Prosocial virtual reality, empathy, and EEG measures: A pilot study aimed at monitoring emotional processes in intergroup helping behaviors. Appl. Sci. 2020, 10, 1196.

- Baños, R.M.; Botella, C.; Rubió, I.; Quero, S.; García-Palacios, A.; Alcañiz, M. Presence and emotions in virtual environments: The influence of stereoscopy. Cyber Psychol. Behav. 2008, 11, 1–8.

- Diemer, J.; Alpers, G.W.; Peperkorn, H.M.; Shiban, Y.; Mühlberger, A. The impact of perception and presence on emotional reactions: A review of research in virtual reality. Front. Psychol. 2015, 6, 26.

- Cournia, N.; Smith, J.D.; Duchowski, A.T. Gaze- vs. hand-based pointing in virtual environments. In Proceedings of the CHI’03 Extended Abstracts on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003; pp. 772–773.

- Piumsomboon, T.; Lee, G.; Lindeman, R.W.; Billinghurst, M. Exploring natural eye-gaze-based interaction for immersive virtual reality. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 36–39.

- Lyons, D.M. System and Method for Permitting Three-Dimensional Navigation through a Virtual Reality Environment Using Camera-Based Gesture Inputs. U.S. Patent 6,181,343, 30 January 2001.

- Saenz-de-Urturi, Z.; Garcia-Zapirain Soto, B. Kinect-based virtual game for the elderly that detects incorrect body postures in real time. Sensors 2016, 16, 704.

- Kim, S.; Kim, G.J. Using keyboards with head mounted displays. In Proceedings of the 2004 ACM SIGGRAPH International Conference on Virtual Reality Continuum and Its Applications in Industry, Singapore, 16–18 June 2004; pp. 336–343.

- Scheggi, S.; Meli, L.; Pacchierotti, C.; Prattichizzo, D. Touch the virtual reality: Using the leap motion controller for hand tracking and wearable tactile devices for immersive haptic rendering. In Proceedings of the ACM SIGGRAPH 2015 Posters, Los Angeles, CA, USA, 9–13 August 2015; p. 1.

- Khademi, M.; Mousavi Hondori, H.; McKenzie, A.; Dodakian, L.; Lopes, C.V.; Cramer, S.C. Free-hand interaction with leap motion controller for stroke rehabilitation. In Proceedings of the CHI’14 Extended Abstracts on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 1663–1668.

- Sidorakis, N.; Koulieris, G.A.; Mania, K. Binocular eye-tracking for the control of a 3D immersive multimedia user interface. In Proceedings of the 2015 IEEE 1st Workshop on Everyday Virtual Reality (WEVR), Arles, Bouches-du-Rhône, France, 23 March 2015; pp. 15–18.

- Fitts, P.M. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. 1954, 47, 381.

- Welford, A.T. Fundamentals of Skill; Methuen & Co Ltd.: London, UK, 1968.

- MacKenzie, I.S. Fitts’ law as a research and design tool in human-computer interaction. Hum. Comput. Interact. 1992, 7, 91–139.

- Card, S.K.; English, W.K.; Burr, B.J. Evaluation of mouse, rate-controlled isometric joystick, step keys, and text keys for text selection on a CRT. Ergonomics 1978, 21, 601–613.

- Epps, B.W. Comparison of six cursor control devices based on Fitts’ law models. In Proceedings of the Human Factors Society Annual Meeting; SAGE Publications: Los Angeles, CA, USA, 1986; pp. 327–331.

- Poupyrev, I.; Okabe, M.; Maruyama, S. Haptic feedback for pen computing: Directions and strategies. In Proceedings of the CHI’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 1309–1312.

- Colle, H.A.; Hiszem, K.J. Standing at a kiosk: Effects of key size and spacing on touch screen numeric keypad performance and user preference. Ergonomics 2004, 47, 1406–1423.

- Parhi, P.; Karlson, A.K.; Bederson, B.B. Target size study for one-handed thumb use on small touchscreen devices. In Proceedings of the 8th Conference on Human-Computer Interaction with Mobile Devices and Services, Helsinki, Finland, 12–15 September 2006; pp. 203–210.

- Park, Y.S.; Han, S.H. Touch key design for one-handed thumb interaction with a mobile phone: Effects of touch key size and touch key location. Int. J. Ind. Ergon. 2010, 40, 68–76.

- Crossman, E.R. The Measurement of Perceptual Load in Manual Operations; University of Birmingham: Birmingham, UK, 1956.

- MacKenzie, I.S.; Buxton, W. Extending Fitts’ law to two-dimensional tasks. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Monterey, CA, USA, 3–7 May 1992; pp. 219–226.

- Hoffmann, E.R.; Sheikh, I.H. Effect of varying target height in a Fitts’ movement task. Ergonomics 1994, 37, 1071–1088.

- Murata, A.; Iwase, H. Extending Fitts’ law to a three-dimensional pointing task. Hum. Mov. Sci. 2001, 20, 791–805.

- Cha, Y.; Myung, R. Extended Fitts’ law for 3D pointing tasks using 3D target arrangements. Int. J. Ind. Ergon. 2013, 43, 350–355.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Kim, H.K.; Park, J.; Choi, Y.; Choe, M. Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Appl. Ergon. 2018, 69, 66–73.

- Plechatá, A.; Sahula, V.; Fayette, D.; Fajnerová, I. Age-related differences with immersive and non-immersive virtual reality in memory assessment. Front. Psychol. 2019, 10, 1330.

- Gough, C. VR and AR Ownership and Purchase Intent among U.S. Consumers 2017, by Age. Available online: https://www.statista.com/statistics/740760/vr-ar-ownership-usa-age/ (accessed on 13 October 2021).

- Bababekova, Y.; Rosenfield, M.; Hue, J.E.; Huang, R.R. Font size and viewing distance of handheld smart phones. Optom. Vis. Sci. 2011, 88, 795–797.

- Sesto, M.E.; Irwin, C.B.; Chen, K.B.; Chourasia, A.O.; Wiegmann, D.A. Effect of touch screen button size and spacing on touch characteristics of users with and without disabilities. Hum. Factors 2012, 54, 425–436.

- Shin, H.; Lim, J.M.; Lee, J.U.; Lee, G.; Kyung, K.U. Effect of tactile feedback for button GUI on mobile touch devices. ETRI J. 2014, 36, 979–987.

- Choe, M.; Choi, Y.; Park, J.; Kim, H.K. Comparison of gaze cursor input methods for virtual reality devices. Int. J. Hum. Comput. Interact. 2019, 35, 620–629.

- Kim, H.K.; Park, J.; Park, K.; Choe, M. Analyzing thumb interaction on mobile touchpad devices. Int. J. Ind. Ergon. 2018, 67, 201–209.

- Kim, H.K.; Choe, M.; Choi, Y.; Park, J. Does the Hand Anthropometric Dimension Influence Touch Interaction? J. Comput. Inf. Syst. 2019, 59, 85–96.

- Lin, J.-W.; Duh, H.B.-L.; Parker, D.E.; Abi-Rached, H.; Furness, T.A. Effects of field of view on presence, enjoyment, memory, and simulator sickness in a virtual environment. In Proceedings of the IEEE Virtual Reality, Orlando, FL, USA, 24–28 March 2002; pp. 164–171.

- Saredakis, D.; Szpak, A.; Birckhead, B.; Keage, H.A.; Rizzo, A.; Loetscher, T. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 2020, 14, 96.

- Hakkinen, J.; Vuori, T.; Paakka, M. Postural stability and sickness symptoms after HMD use. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Yasmine Hammamet, Tunisia, 6–9 October 2002; pp. 147–152.

- Arns, L.L.; Cerney, M.M. The relationship between age and incidence of cybersickness among immersive environment users. In Proceedings of the IEEE Proceedings. VR 2005. Virtual Reality, Bonn, North Rhine-Westphalia, Germany, 12–16 March 2005; pp. 267–268.

| Model | Index of Difficulty |
|---|---|
| Fitts [36] | |
| Welford [37] | |
| MacKenzie [38] | |
| Crossman [45] | |
| MacKenzie and Buxton [46] | |
| Hoffmann and Sheikh [47] | |
| Murata and Iwase [48] | |
| Cha and Myung [49] | |
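
The index-of-difficulty cells in the table above did not survive extraction. As a reminder of the three one-dimensional formulations as they are commonly stated in the Fitts' law literature (not recovered from this article), with $A$ the movement amplitude and $W$ the target width, both symbols being notation assumed here:

```latex
\begin{align}
  ID_{\text{Fitts}}     &= \log_2\!\left(\frac{2A}{W}\right) \\
  ID_{\text{Welford}}   &= \log_2\!\left(\frac{A}{W} + 0.5\right) \\
  ID_{\text{MacKenzie}} &= \log_2\!\left(\frac{A}{W} + 1\right)
\end{align}
```

The two- and three-dimensional extensions [45,46,47,48,49] add terms for target height or movement direction; their exact forms are left blank here rather than guessed.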

| VRSQ Symptom | Oculomotor | Disorientation |
|---|---|---|
| General discomfort | O | |
| Fatigue | O | |
| Eyestrain | O | |
| Difficulty focusing | O | |
| Headache | | O |
| Fullness of head | | O |
| Blurred vision | | O |
| Dizzy (eyes closed) | | O |
| Vertigo | | O |
| Total | [1] | [2] |
| Score | Oculomotor = ([1]/12) × 100; Disorientation = ([2]/15) × 100; Total Score = (Oculomotor score + Disorientation score)/2 | |
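
The scoring rule in the last row can be sketched in code. This is a minimal illustration, not the authors' implementation: it assumes each symptom is rated on a 0 to 3 scale, that the first four symptoms form the oculomotor component and the remaining five the disorientation component (which is what the denominators 12 and 15 suggest), and the item keys and the function name `vrsq_scores` are hypothetical.

```python
# Assumed grouping: 4 oculomotor items (max 4 * 3 = 12) and
# 5 disorientation items (max 5 * 3 = 15). Item keys are hypothetical.
OCULOMOTOR_ITEMS = (
    "general_discomfort", "fatigue", "eyestrain", "difficulty_focusing",
)
DISORIENTATION_ITEMS = (
    "headache", "fullness_of_head", "blurred_vision",
    "dizzy_eyes_closed", "vertigo",
)
MAX_RATING = 3  # assumed 0-3 rating per symptom


def vrsq_scores(ratings):
    """Return (oculomotor, disorientation, total) on a 0-100 scale."""
    for name in OCULOMOTOR_ITEMS + DISORIENTATION_ITEMS:
        if not 0 <= ratings[name] <= MAX_RATING:
            raise ValueError(f"rating for {name!r} must be in 0..{MAX_RATING}")
    oculomotor_sum = sum(ratings[n] for n in OCULOMOTOR_ITEMS)          # [1]
    disorientation_sum = sum(ratings[n] for n in DISORIENTATION_ITEMS)  # [2]
    oculomotor = oculomotor_sum / 12 * 100
    disorientation = disorientation_sum / 15 * 100
    total = (oculomotor + disorientation) / 2
    return oculomotor, disorientation, total
```

Because the two components are normalized separately before averaging, each contributes equally to the total even though they contain different numbers of items.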

| Input Method | Large Size | Small Size | Total |
|---|---|---|---|
| Manual selection | 1948 (±859) | 2316 (±997) | 2132 (±944) |
| Gaze selection | 2697 (±919) | 2719 (±885) | 2708 (±902) |
| Total | 2322 (±961) | 2518 (±963) | 2420 (±967) |

| Factor | Level | Fast-Speed | Medium-Speed | Slow-Speed |
|---|---|---|---|---|
| Target size (A) | Large | Under 2040 | From 2040 to 2560 | Over 2560 |
| | Small | Under 2242 | From 2242 to 2765 | Over 2765 |
| Input method (B) | Manual | Under 1977 | From 1977 to 2465 | Over 2465 |
| | Gaze | Under 2443 | From 2443 to 2987 | Over 2987 |
| A × B | | Under 2100 | From 2100 to 2700 | Over 2700 |
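
The speed bands above amount to a threshold lookup, which might be sketched as follows. The cut-off values come from the table; the dictionary keys, the function name `classify_speed`, and the reading of the values as task completion times (the table itself states no unit) are assumptions made for illustration.

```python
# (lower, upper) cut-offs copied from the table; keys are hypothetical labels.
SPEED_THRESHOLDS = {
    "size_large": (2040, 2560),
    "size_small": (2242, 2765),
    "input_manual": (1977, 2465),
    "input_gaze": (2443, 2987),
    "size_x_input": (2100, 2700),
}


def classify_speed(value, condition):
    """Map a completion-time value to 'fast', 'medium', or 'slow'."""
    lower, upper = SPEED_THRESHOLDS[condition]
    if value < lower:        # "Under <lower>"
        return "fast"
    if value <= upper:       # "From <lower> to <upper>", both ends included
        return "medium"
    return "slow"            # "Over <upper>"
```

For instance, `classify_speed(2200, "size_large")` falls in the medium band, while the same value under `"size_small"` counts as fast because the small-target cut-offs are higher.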

| ID (gaze) | TCT (gaze) | ID (gaze) | TCT (gaze) | ID (manual) | TCT (manual) | ID (manual) | TCT (manual) |
|---|---|---|---|---|---|---|---|
| 2.51 | 1.965 | 4.39 | 3.327 | 2.51 | 1.267 | 4.39 | 2.572 |
| 2.94 | 2.208 | 4.46 | 3.329 | 2.94 | 1.351 | 4.46 | 2.496 |
| 3.10 | 1.906 | 4.48 | 3.317 | 3.10 | 1.512 | 4.48 | 2.888 |
| 3.38 | 2.356 | 4.56 | 3.252 | 3.38 | 1.744 | 4.56 | 2.955 |
| 3.53 | 2.599 | 4.62 | 3.288 | 3.53 | 1.916 | 4.62 | 2.578 |
| 3.55 | 2.140 | 4.64 | 3.128 | 3.55 | 1.705 | 4.64 | 2.688 |
| 3.84 | 3.252 | 4.79 | 3.507 | 3.84 | 2.552 | 4.79 | 2.755 |
| 3.92 | 3.222 | 4.82 | 3.109 | 3.92 | 2.483 | 4.82 | 2.678 |
| 3.99 | 3.065 | 4.96 | 3.445 | 3.99 | 2.430 | 4.96 | 3.189 |
| 4.01 | 2.466 | 5.01 | 3.230 | 4.01 | 2.185 | 5.01 | 3.088 |
| 4.16 | 2.761 | 5.05 | 3.226 | 4.16 | 2.336 | 5.05 | 2.671 |
| 4.17 | 3.084 | 5.12 | 3.423 | 4.17 | 2.320 | 5.12 | 3.218 |
| 4.31 | 3.508 | 5.28 | 3.372 | 4.31 | 2.484 | 5.28 | 3.065 |
| 4.35 | 3.412 | 5.45 | 3.412 | 4.35 | 2.538 | 5.45 | 3.292 |
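
To illustrate how a Fitts' law model of the form TCT = a + b × ID can be fitted to the 28 (ID, TCT) pairs listed above for each input method, here is a minimal ordinary-least-squares sketch in plain Python. The data are copied from the table; the function name `fit_line` and the choice of simple linear regression are assumptions, since the analysis procedure itself is not shown in this section.

```python
# ID values are shared by both input methods (28 conditions).
ID = [2.51, 2.94, 3.10, 3.38, 3.53, 3.55, 3.84, 3.92, 3.99, 4.01,
      4.16, 4.17, 4.31, 4.35, 4.39, 4.46, 4.48, 4.56, 4.62, 4.64,
      4.79, 4.82, 4.96, 5.01, 5.05, 5.12, 5.28, 5.45]
GAZE_TCT = [1.965, 2.208, 1.906, 2.356, 2.599, 2.140, 3.252, 3.222,
            3.065, 2.466, 2.761, 3.084, 3.508, 3.412, 3.327, 3.329,
            3.317, 3.252, 3.288, 3.128, 3.507, 3.109, 3.445, 3.230,
            3.226, 3.423, 3.372, 3.412]
MANUAL_TCT = [1.267, 1.351, 1.512, 1.744, 1.916, 1.705, 2.552, 2.483,
              2.430, 2.185, 2.336, 2.320, 2.484, 2.538, 2.572, 2.496,
              2.888, 2.955, 2.578, 2.688, 2.755, 2.678, 3.189, 3.088,
              2.671, 3.218, 3.065, 3.292]


def fit_line(x, y):
    """Least-squares fit of y = a + b*x; returns (a, b, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return intercept, slope, r_squared


if __name__ == "__main__":
    for label, tct in (("gaze", GAZE_TCT), ("manual", MANUAL_TCT)):
        a, b, r2 = fit_line(ID, tct)
        print(f"{label}: TCT = {a:.3f} + {b:.3f} * ID, R^2 = {r2:.2f}")
```

A steeper slope means completion time grows faster as the task gets harder, so comparing the two slopes is one way to compare how the input methods scale with difficulty.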

| Input method | R² | F | df1 | df2 | p-Value | Intercept (a) | Slope (b) |
|---|---|---|---|---|---|---|---|
| Gaze-selection | 0.71 | 64.493 | 1 | 26 | <0.001 | 0.563 | 0.574 |
| Manual-selection | 0.90 | 234.270 | 1 | 26 | <0.001 | −0.614 | 0.722 |
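
Written out, and reading the last two columns of the regression table as the intercept and slope of TCT on ID (an interpretation that agrees with the tabulated ID and TCT values, not a labeling given in the table itself), the fitted models would be as follows. The second pair of lines is a consistency check that uses the standard identity $R^2 = F / (F + df_2)$ for a single-predictor regression.

```latex
\begin{align}
  TCT_{\text{gaze}}   &= 0.563 + 0.574 \cdot ID \\
  TCT_{\text{manual}} &= -0.614 + 0.722 \cdot ID \\[4pt]
  R^2_{\text{gaze}}   &= \frac{64.493}{64.493 + 26} \approx 0.71 \\
  R^2_{\text{manual}} &= \frac{234.270}{234.270 + 26} \approx 0.90
\end{align}
```

Both recomputed values match the reported $R^2$ column, so the F statistics and degrees of freedom in the table are mutually consistent.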

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choe, M.; Park, J.; Kim, H.K. Effect of Target Size, Location, and Input Method on Interaction in Immersive Virtual Reality. Appl. Sci. 2021, 11, 9846. https://doi.org/10.3390/app11219846
