Social Distance in Interactions between Children with Autism and Robots

Abstract

1. Introduction

1.1. HRI

1.2. HRI in Autism Therapy

1.3. HRI and Social Distance

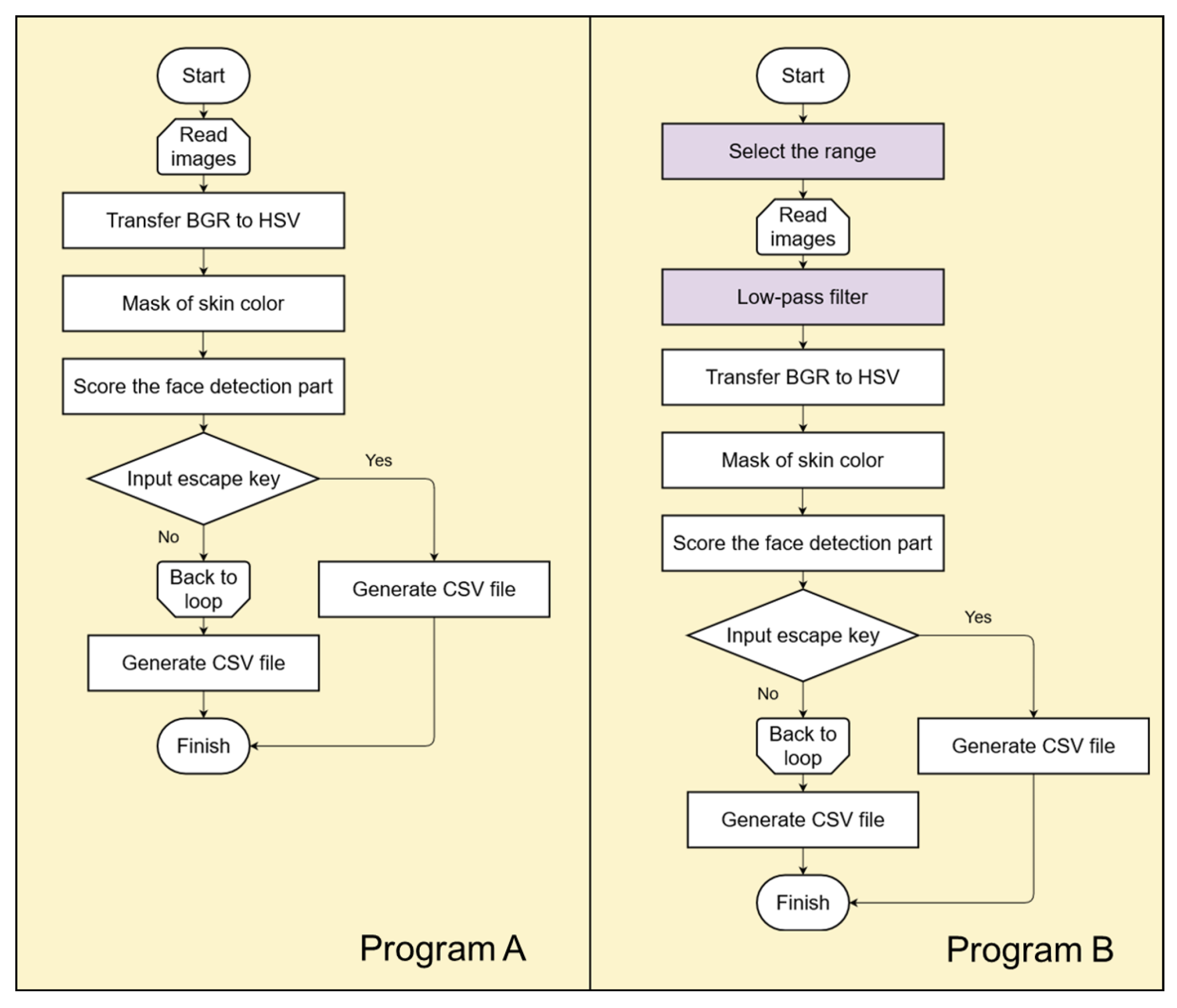

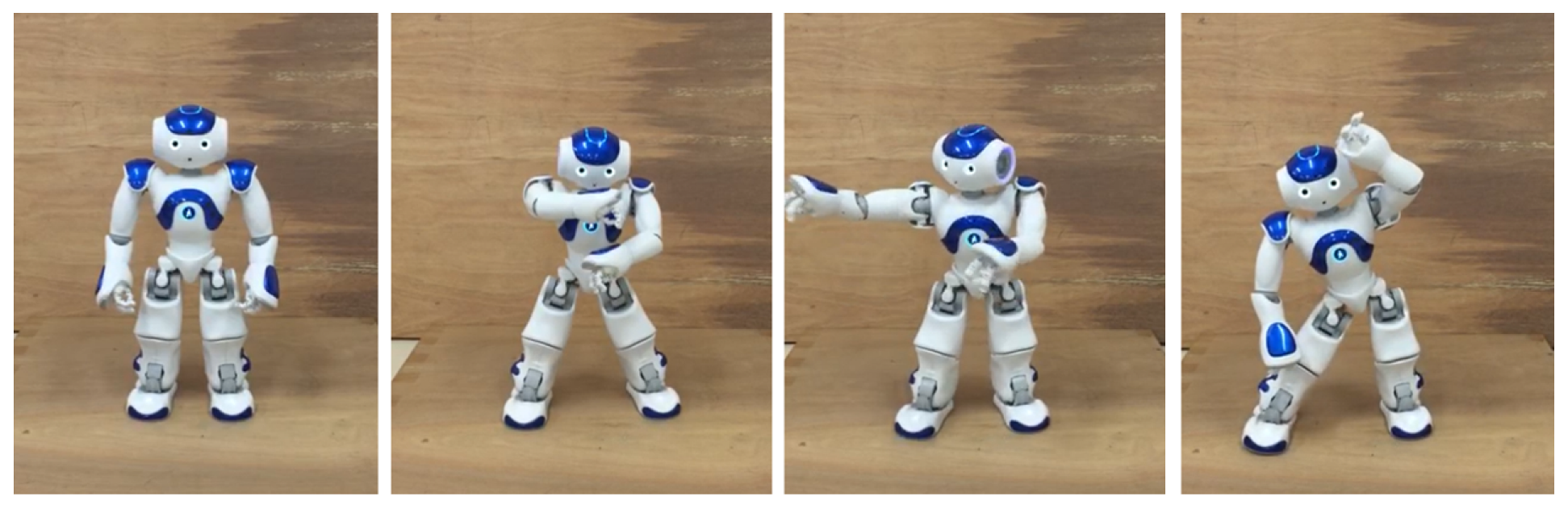

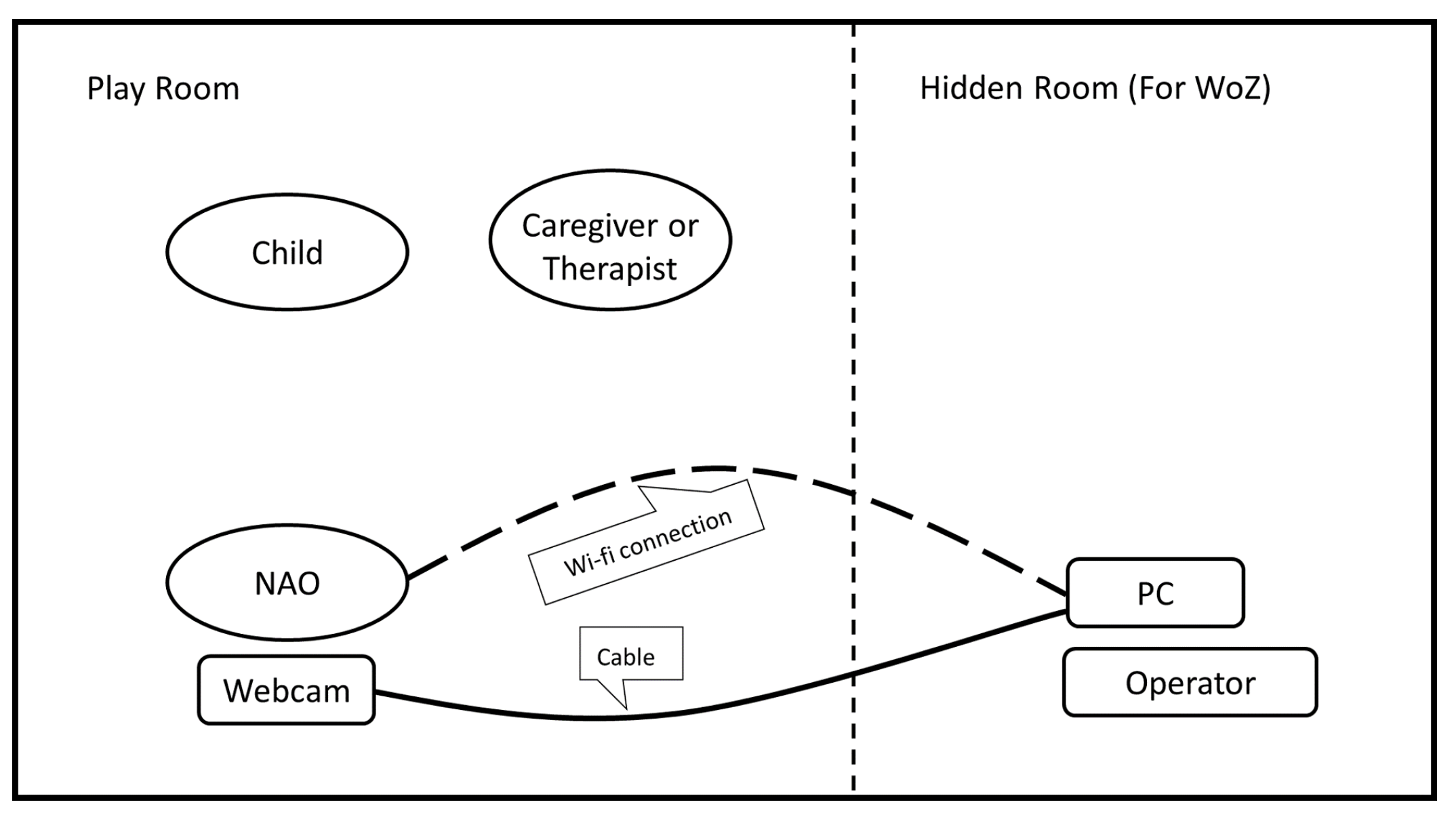

2. Methodology

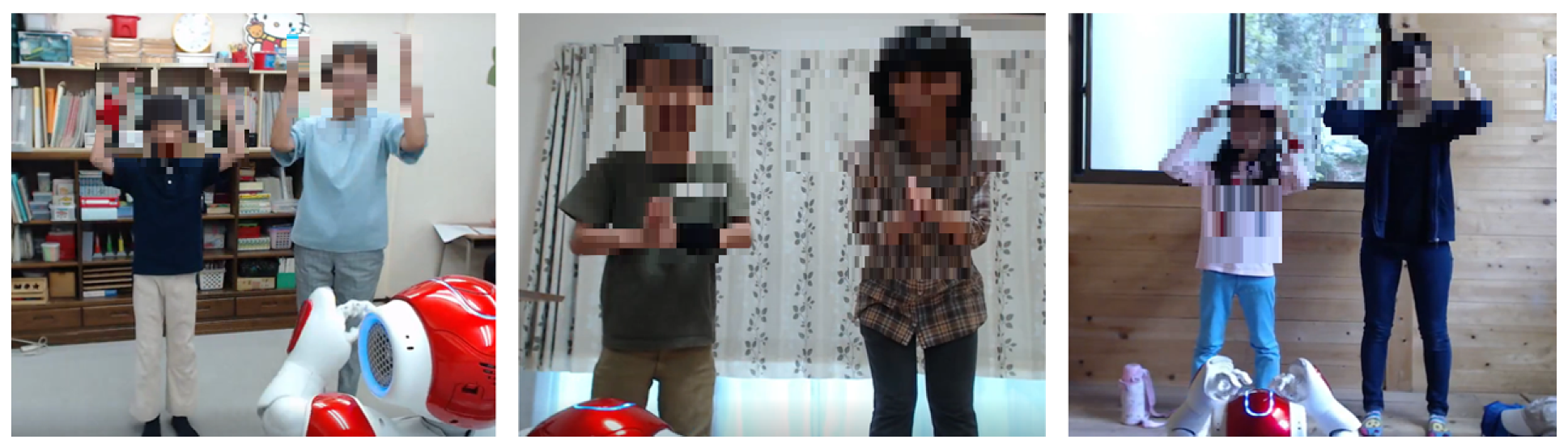

3. Experiments

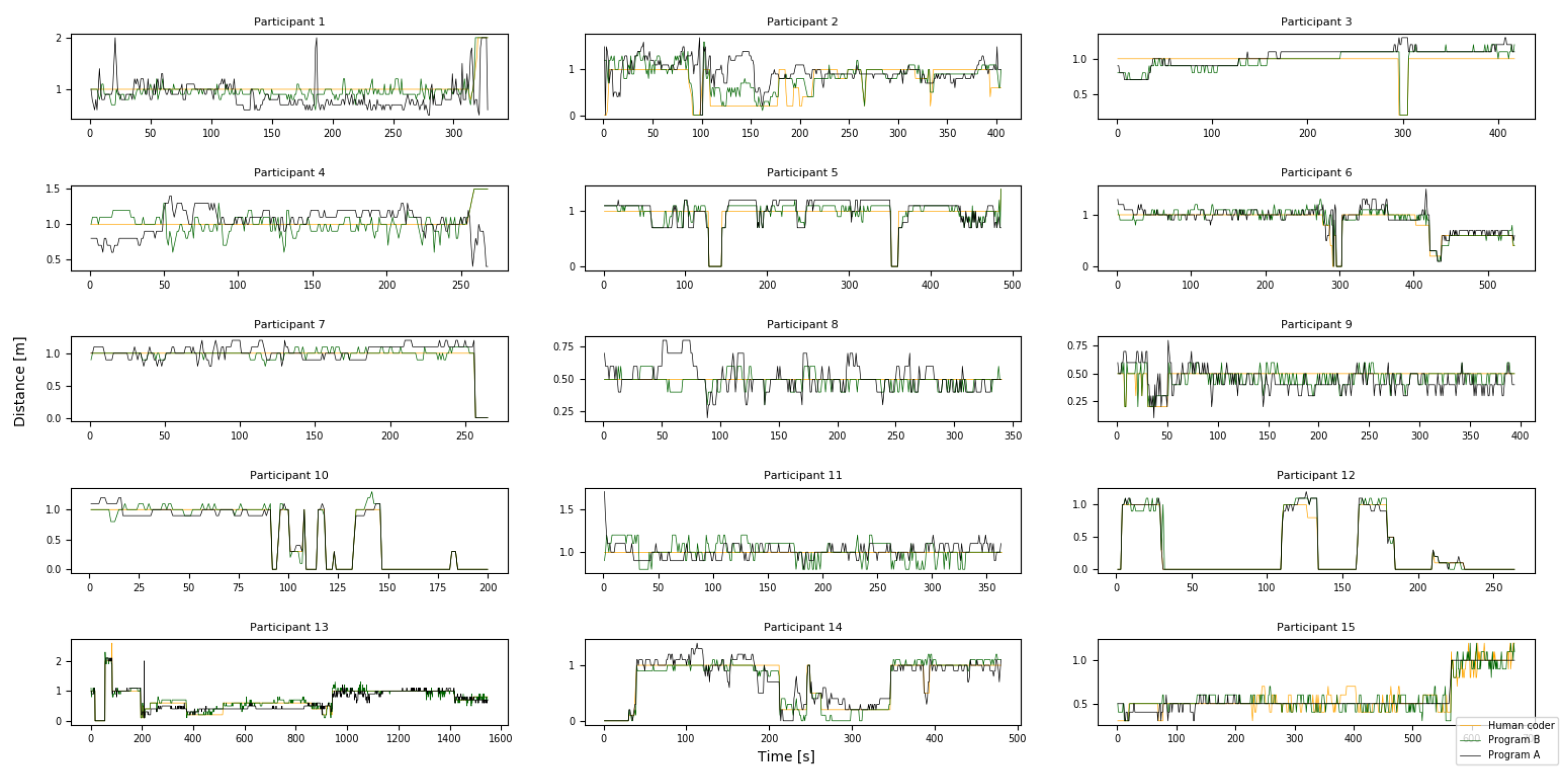

4. Results

Correlation between Social Distance and SRS

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| P | Age | Sex | | | | | | |
|---|---|---|---|---|---|---|---|---|
| P 1 | 7 | M | 75 | 70 | 72 | 77 | 64 | 73 |
| P 2 | 8 | F | 50 | 45 | 48 | 47 | 54 | 58 |
| P 3 | 6 | F | 73 | 80 | 71 | 74 | 50 | 76 |
| P 4 | 13 | M | 69 | 64 | 69 | 68 | 66 | 70 |
| P 5 | 13 | M | 62 | 67 | 66 | 57 | 58 | 61 |
| P 6 | 14 | M | 66 | 54 | 68 | 67 | 58 | 69 |
| P 7 | 7 | M | 68 | 73 | 74 | 67 | 54 | 66 |
| P 8 | 10 | M | 68 | 76 | 72 | 66 | 58 | 61 |
| P 9 | 9 | M | 60 | 45 | 55 | 57 | 64 | 68 |
| P 10 | 7 | M | 79 | 82 | 79 | 71 | 71 | 82 |
| P 11 | 5 | M | 55 | 57 | 57 | 54 | 54 | 52 |
| P 12 | 4 | M | 56 | 67 | 57 | 56 | 44 | 57 |
| P 13 | 10 | M | 70 | 67 | 76 | 67 | 64 | 66 |
| P 14 | 13 | M | 95 | 92 | 91 | 93 | 89 | 85 |
| P 15 | 14 | M | 81 | 64 | 85 | 81 | 73 | 78 |

| P | | Pro A(H) [m] | Pro B(H) [m] | Pro A(A) [m] | Pro B(A) [m] |
|---|---|---|---|---|---|
| P 1 | 75 | 1.0 | 0.6 | 0.1549 ± 0.26 | 0.0537 ± 0.10 |
| P 2 | 50 | 1.7 | 1.2 | −0.0847 ± 0.43 | −0.2173 ± 0.24 |
| P 3 | 73 | 1.1 | 0.9 | −0.0501 ± 0.20 | 0.0050 ± 0.13 |
| P 4 | 69 | 1.1 | 0.4 | −0.0164 ± 0.24 | 0.0078 ± 0.12 |
| P 5 | 62 | 0.7 | 0.3 | −0.0288 ± 0.18 | −0.0002 ± 0.13 |
| P 6 | 66 | 1.1 | 0.8 | −0.0438 ± 0.13 | −0.0274 ± 0.10 |
| P 7 | 68 | 0.2 | 0.2 | −0.026 ± 0.10 | 0.0023 ± 0.05 |
| P 8 | 68 | 0.3 | 0.2 | −0.0147 ± 0.11 | 0.0129 ± 0.06 |
| P 9 | 60 | 0.5 | 0.3 | 0.0437 ± 0.11 | 0.0218 ± 0.07 |
| P 10 | 79 | 0.2 | 0.3 | 0.0105 ± 0.07 | −0.0055 ± 0.06 |
| P 11 | 55 | 0.7 | 0.2 | −0.0085 ± 0.08 | −0.0127 ± 0.11 |
| P 12 | 56 | 0.3 | 0.3 | −0.0087 ± 0.06 | −0.0136 ± 0.09 |
| P 13 | 70 | 0.6 | 0.7 | 0.0428 ± 0.14 | −0.016 ± 0.08 |
| P 14 | 95 | 0.6 | 0.7 | −0.014 ± 0.16 | 0.0115 ± 0.12 |
| P 15 | 81 | 0.4 | 0.4 | 0.0025 ± 0.09 | −0.008 ± 0.10 |
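As a rough illustration of how a correlation between social distance and a per-participant score could be computed from the table above, the following sketch calculates a Pearson coefficient. It rests on several assumptions that the surviving text does not confirm: that the first numeric column (75, 50, 73, ...) is the SRS score, that the first distance column (1.0, 1.7, 1.1, ...) is a distance in metres, and that Pearson's r is an appropriate coefficient. The source does not state which statistic the authors used, and the names `pearson_r`, `score`, and `distance_m` are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Deviations of each value from its mean.
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Values copied from the table above, P 1 .. P 15.
# ASSUMPTION: first numeric column is the SRS score.
score = [75, 50, 73, 69, 62, 66, 68, 68, 60, 79, 55, 56, 70, 95, 81]
# ASSUMPTION: first distance column, in metres.
distance_m = [1.0, 1.7, 1.1, 1.1, 0.7, 1.1, 0.2, 0.3,
              0.5, 0.2, 0.7, 0.3, 0.6, 0.6, 0.4]

r = pearson_r(score, distance_m)
print(f"r = {r:.3f} over n = {len(score)} participants")
```

With only 15 participants, any coefficient obtained this way would be sensitive to individual outliers, so a rank-based measure such as Spearman's could reasonably be substituted.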

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, J.; Nagae, T. Social Distance in Interactions between Children with Autism and Robots. Appl. Sci. 2021, 11, 10520. https://doi.org/10.3390/app112210520