Abstract

Eye writing is a human–computer interaction tool that translates eye movements into characters using automatic recognition by computers. Eye-written characters are similar in form to handwritten ones, but their shapes are often distorted because of the biosignal’s instability or user mistakes. Various conventional methods have been used to overcome these limitations and recognize eye-written characters accurately, but reducing the error rates has proven difficult. This paper proposes a method using a deep neural network with inception modules and an ensemble structure. Preprocessing procedures, which are often used in conventional methods, were minimized in the proposed method. The proposed method was validated in a writer-independent manner using an open dataset of characters eye-written by 18 writers. The method achieved a 97.78% accuracy, and the error rates were reduced by almost half compared to those of conventional methods, which indicates that the proposed model successfully learned eye-written characters. Remarkably, the accuracy was achieved in a writer-independent manner, which suggests that a deep neural network model trained using the proposed method would be stable even for new writers.

1. Introduction

Keyboards, mice, and touchscreens have been the most popular input devices for human–computer interaction (HCI) in recent decades, and they are useful for general everyday purposes. Additionally, novel types of interfaces for computer systems have been developed for specialized applications, such as education, medical care, arts, controlling robots, and games, utilizing gestures [1], voices [2], pens [3], and other devices [4,5]. Biosignal processing is drawing attention as a basis for these novel interfaces because it enables direct interactions between body movements and a computer. Directly interacting with computers through biosignals could significantly improve user experience.

Biosignals used for HCI include electroencephalograms (EEG), electromyograms (EMG), and electrooculograms (EOG) [6,7,8]. EOGs are directly related to eye movements and can thus be used for eye tracking. Because of the difference in electric potential between the retina and cornea of the eye, the potential increases at the location that the cornea approaches as the eye moves [9]. Eye movements can also be measured using optical or infrared cameras [10,11,12,13]. Camera-based methods have higher accuracy than EOG methods but suffer from limitations such as their high cost, complicated setup, and inconsistent recognition rates because of the variability in eyelid/eyelash movements among different individuals and contrast differences depending on the surrounding environment [4].

EOG-based eye tracking is relatively cheap and is not affected by lighting or the physical condition of the eyelids. However, it is difficult to obtain clean data with this method because various signals from the body are measured together with EOG, and these are difficult to separate. Previous studies indicate that EOG-based eye-tracking often attempts to estimate eye movements during a very short period (less than 1 s) with simple and directional movements [14,15,16,17,18]. Yan et al. recognized a maximum of 24 patterns with this approach [19]. They classified eye movement in 24 directions with an average accuracy of 87.1%, but the performance was unstable, and the eyes needed to be turned up to 75°, which is unnatural and inconvenient.

Recently, the concept of eye writing was introduced to overcome the limitations of conventional EOG-based methods. Eye writing involves moving the eyes such that the gaze traces the form of a letter, which increases the amount of information transfer compared to conventional EOG-based eye tracking. The degree of freedom is as high as the number of letters we may write. Recent studies have shown that eye writing can be used to trace Arabic numerals, English alphabets, and Japanese katakana characters [20,21].

To recognize eye-written characters, various pattern-recognition algorithms have been proposed. Tsai et al. proposed a system for recognizing eye-written Arabic numerals and four arithmetic symbols [22]. The system was developed using a heuristic algorithm and achieved a 75.5% accuracy (believability). Fang et al. utilized a hidden Markov model to recognize 12 patterns of Japanese katakana and achieved an 86.5% accuracy in writer-independent validation [20]. Lee et al. utilized dynamic time warping (DTW) to achieve an 87.38% accuracy for 36 patterns of numbers and English letters [21]. Chang et al. showed that the accuracy could be increased by combining dynamic positional warping, a modification of DTW, with a support vector machine (SVM) [23]. They achieved a 95.74% accuracy for Arabic numerals, which was 3.33 percentage points higher than when only DTW was used for the same dataset.

Increasing the recognition accuracy is critical for eye writing when it is used as an HCI tool. This paper proposes a method that uses a deep neural network to improve on the accuracy of conventional methods. The proposed method minimizes the preprocessing procedures and automatically finds the features in convolutional network layers. Section 2 describes the dataset, preprocessing, and network structure of the proposed method, and Section 3 presents the experimental results. Section 4 concludes the study and describes future work.

2. Materials and Methods

2.1. Dataset

In this study, the dataset presented by Chang et al. [23] was used because it is one of the few open datasets of eye-written characters. It contains eye-written Arabic numerals collected from 18 participants (5 females and 13 males; mean age 23.5 ± 3.28 years). The majority of participants (17 out of 18) had no experience of eye writing before the experiment. The Arabic numeral patterns were specifically designed for eye writing to minimize user difficulty and reduce similarity among the patterns (Figure 1). The participants moved their eyes to follow the guide patterns in Figure 1 during the experiment.

Figure 1.

Pattern designs of Arabic numbers [23]. The red dots denote the starting points. Reprinted with permission.

The data were recorded at a sampling rate of 2048 Hz, and they comprised EOG signals at four locations around the eye (two on the left and right sides of the eyes, and two above and below the right eye). All 18 participants were healthy and did not have any eye diseases. The detailed experimental procedures can be found in [23].

The total number of eye-written characters was 540, with 10 Arabic numerals written three times by each participant. Figure 2 shows examples of eye-written characters in the dataset. The shapes in Figure 2 are very different from the original pattern designs because the recording started when the participants looked at the central point of a screen, and noise and artifacts were included during the experiments. There were also distortions caused by the participants’ mistakes during the eye writing.

Figure 2.

Eye-written characters [23].

2.2. Preprocessing

The data were preprocessed in four steps: (1) resampling, (2) eye-blink removal, (3) resizing, and (4) normalization (Figure 3). First, the signal was resampled to 64 Hz because high sampling rates are unnecessary for EOG analysis (the signal was originally recorded at 2048 Hz). Second, eye blinks in the signals were removed using the maximum summation of the first derivative within a window (MSDW) [24]. The MSDW filter generates two sequences: the filtered signal, which emphasizes eye blinks, and the selected window size ($W$) at each data point. The MSDW filter utilizes a set of simple filters, each known as an SDW (summation of the first derivative within a window). An SDW filter with a window size of $W$ is defined as follows:

$$\mathrm{SDW}_W(t) = s(t) - s(t - W),$$

where $s(t)$ is the $t$-th sample of the original signal and $W$ is the width of the sliding window. For every time $t$, the following steps are performed to obtain an MSDW output:

Figure 3.

Preprocessing flow.

- (1) Calculate SDWs with different window sizes $W$, considering a typical eye-blink range.

- (2) Choose the maximum SDW at time $t$ as the output of MSDW if it satisfies the conditions below.

  - a. The numbers of local minima and maxima are the same within the range of the window;

  - b. All the first derivatives over the interval given in [24] lie between the two bounding first-derivative values defined there.
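The core of the two steps above can be sketched in a few lines of Python. This is a simplified illustration rather than the filter of [24]: it only applies the "maximum SDW" rule, it does not check conditions (a) and (b), and the function names, the candidate window sizes, and the toy signal are all assumptions made for the example.

```python
def sdw(signal, t, window):
    """Summation of the first derivative within a window ending at t.

    The per-sample differences telescope, so the sum equals
    signal[t] - signal[t - window].
    """
    return sum(signal[k] - signal[k - 1] for k in range(t - window + 1, t + 1))


def msdw_simplified(signal, windows):
    """Return (outputs, chosen_windows) using only the 'maximum SDW' rule.

    Conditions (a) and (b) from the text are NOT checked here. Samples for
    which no candidate window fits get output 0.0 and window None.
    """
    outputs, chosen = [], []
    for t in range(len(signal)):
        best_value, best_window = 0.0, None
        for w in windows:
            if t - w < 0:
                continue  # window would start before the signal
            value = sdw(signal, t, w)
            if best_window is None or value > best_value:
                best_value, best_window = value, w
        outputs.append(best_value)
        chosen.append(best_window)
    return outputs, chosen


# Toy signal: flat baseline with one blink-like bump (hypothetical values).
toy = [0, 0, 0, 1, 4, 9, 4, 1, 0, 0]
out, win = msdw_simplified(toy, windows=[2, 3, 4])
```

The largest output appears at the peak of the bump, which is the sense in which the filter emphasizes eye blinks; the recorded window size is what the later range determination relies on.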

The ranges of the eye-blink regions were determined following [24], using the window size at each time point from the MSDW filter output together with the time points of the local maxima and minima; the integer parameter of the range equation is chosen to maximize the criterion defined in [24]. The detected regions were removed and interpolated using the beginning and end points of each range. Third, all the signals were resized to have the same length because the eye-written characters were recorded over varying time periods, from 1.69 to 23.51 s, and the use of convolutional layers requires all signals to have the same length. The common length was set from the mean of all signal lengths of the raw data. Finally, the signals were normalized such that they were within a 1 × 1 size box in 2D space, keeping the aspect ratio unchanged.
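The resizing and normalization steps can be illustrated as follows. This is a minimal sketch under stated assumptions: the text does not say which interpolation was used, so linear interpolation is assumed, and the normalization anchors the trace at the origin and divides both axes by the larger extent. The function names and the sample trace are hypothetical.

```python
def resize_linear(values, new_length):
    """Resample a 1-D sequence to new_length points by linear interpolation."""
    if new_length == 1 or len(values) == 1:
        return [values[0]] * new_length
    scale = (len(values) - 1) / (new_length - 1)
    resized = []
    for i in range(new_length):
        pos = i * scale
        lo = int(pos)
        hi = min(lo + 1, len(values) - 1)
        frac = pos - lo
        resized.append(values[lo] * (1 - frac) + values[hi] * frac)
    return resized


def normalize_to_unit_box(xs, ys):
    """Shift to the origin and scale both axes by the same factor so the
    larger extent becomes 1, which keeps the aspect ratio unchanged."""
    min_x, min_y = min(xs), min(ys)
    extent = max(max(xs) - min_x, max(ys) - min_y)
    if extent == 0:
        return [0.0] * len(xs), [0.0] * len(ys)
    return ([(x - min_x) / extent for x in xs],
            [(y - min_y) / extent for y in ys])


# Hypothetical trace: 5 samples of horizontal and vertical eye position.
h = [0.0, 2.0, 4.0, 2.0, 0.0]
v = [1.0, 1.0, 2.0, 3.0, 3.0]
h9, v9 = resize_linear(h, 9), resize_linear(v, 9)
hn, vn = normalize_to_unit_box(h9, v9)
```

Using one scale factor for both axes is what preserves the aspect ratio: in the example the horizontal extent fills the unit box while the vertical extent only reaches 0.5.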

The preprocessing procedure in [23] was used after the following simplification: the saccade detection and crosstalk removal steps were omitted. This is because convolutional networks can extract features by themselves, and additional feature extraction methods often decrease the accuracy.

2.3. Deep Neural Network

A deep neural network model was proposed to train and recognize the eye-written characters (Figure 4). The model was designed with an inception architecture inspired by GoogLeNet [25]. The inception model was simplified from its original form because complicated networks were easily overfitted, as the data size was limited (only 540 eye-written characters). As shown in the figure, the network structure consists of four convolution blocks followed by two fully connected blocks. A convolution block is assembled from four convolutional layers in parallel, a concatenation layer, and a max-pooling layer. The kernel sizes of the four convolutional layers in a convolution block were set to 1, 3, 5, and 7, and the numbers of filters were set differently according to the position of the block. The numbers of filters in the first and second blocks were set to 8 and 16, respectively; the numbers of filters in the third and fourth blocks were set to 32. The pooling and stride sizes were set to 3 for all the convolution blocks. The numbers of nodes in the first and second fully connected layers were set to 30 and 10, respectively, and a dropout layer with a rate of 0.5 was attached before each fully connected layer. Parameters such as filter size, number of filters, and dropout ratios were set experimentally.
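To make the block structure concrete, the following sketch traces how the sequence length and channel count would change through the four convolution blocks. Several points are assumptions, not statements from the paper: the filter counts are read as filters per parallel branch, the convolutions are assumed to use "same" padding, pooling is assumed to be unpadded, and the input of 400 samples with 2 channels (horizontal and vertical) is a hypothetical example.

```python
KERNEL_SIZES = (1, 3, 5, 7)          # from the text
FILTERS_PER_BLOCK = (8, 16, 32, 32)  # from the text, read as filters per branch
POOL = 3                             # pooling size and stride, from the text


def trace_shapes(length, channels):
    """Follow (length, channels) through the four convolution blocks.

    Assumes 'same' padding in the convolutions, so only pooling shortens
    the sequence, and unpadded pooling (floor division by the stride).
    """
    shapes = [(length, channels)]
    for filters in FILTERS_PER_BLOCK:
        channels = filters * len(KERNEL_SIZES)  # concatenation of 4 branches
        length = length // POOL                 # max pooling, size 3, stride 3
        shapes.append((length, channels))
    return shapes


def conv_block_parameters(in_channels, filters):
    """Weights plus biases of the four parallel 1-D convolutions."""
    return sum(k * in_channels * filters + filters for k in KERNEL_SIZES)


# Hypothetical input: 400 samples, 2 channels (horizontal and vertical).
shapes = trace_shapes(length=400, channels=2)
first_block_params = conv_block_parameters(in_channels=2, filters=8)
```

Under these assumptions the sequence shrinks by a factor of three per block, so only a handful of time steps remain before the fully connected layers, which keeps the number of trainable weights small for a 540-sample dataset.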

Figure 4.

Proposed network structure.

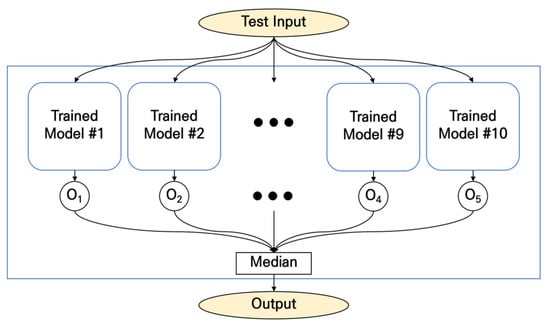

2.4. Ensemble Method

The training results varied in every trial because of the randomness of deep neural networks. Therefore, we employed an ensemble method to reduce the uncertainty and improve the accuracy of the networks. We trained the network 10 times using the same training data and obtained 10 models with different weights. In the testing/inference phase, one output was obtained from each of the 10 trained models when an eye-written character was input. The final output was defined as the median of the 10 outputs. Because each output was a vector with a length of 10, the median operator was applied to the scalars at the same positions. This mechanism is illustrated in Figure 5.
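The position-wise median described above can be written compactly. The sketch below is illustrative only: the function names are hypothetical, the example uses three models and three classes instead of ten of each to stay readable, and taking the index of the largest median score as the recognized numeral is an assumption about the final decision step.

```python
from statistics import median


def ensemble_median(outputs):
    """Element-wise median over a list of equally long output vectors."""
    return [median(column) for column in zip(*outputs)]


def predict_class(outputs):
    """Index of the largest median score (assumed decision rule)."""
    combined = ensemble_median(outputs)
    return max(range(len(combined)), key=combined.__getitem__)


# Hypothetical outputs of three models for one character (three classes).
model_outputs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],  # one model disagrees with the other two
    [0.6, 0.3, 0.1],
]
combined = ensemble_median(model_outputs)
label = predict_class(model_outputs)
```

The dissenting model does not move the result, which is the robustness the median offers over a plain mean. With an even number of models, as in the 10-model ensemble, `statistics.median` returns the average of the two middle values.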

Figure 5.

Ensemble method.

2.5. Evaluation

The proposed method was evaluated in a writer-independent manner using a leave-one-subject-out validation approach. This enabled us to maximize the amount of training data while completely separating the test data from the training data. We used the characters written by 17 writers as training data and those of the remaining writer as the test data. This was repeated 18 times such that each writer’s characters were used as the test data once.
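The splitting scheme can be sketched as a small generator. The tuple layout and function name are assumptions for illustration, and the dataset below is a placeholder that only reproduces the sizes given in Section 2.1 (18 writers, 10 numerals, 3 repetitions); it contains no signals.

```python
def leave_one_writer_out(samples):
    """Yield (held_out_writer, train, test) for every writer in samples.

    samples is a list of (writer_id, label, signal) tuples.
    """
    writers = sorted({writer for writer, _, _ in samples})
    for held_out in writers:
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        yield held_out, train, test


# Placeholder dataset: the signal field is None because no data is bundled.
dataset = [(w, d, None) for w in range(18) for d in range(10) for _ in range(3)]
folds = list(leave_one_writer_out(dataset))
```

Each fold trains on 510 characters and tests on the 30 characters of the held-out writer, so no writer ever appears on both sides of a split.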

The method was evaluated with recognition accuracy, precision, recall, and F1 score, which were calculated as follows:

$$\mathrm{Accuracy} = \frac{TP}{P},$$

$$\mathrm{Precision} = \frac{TP}{TP + FP},$$

$$\mathrm{Recall} = \frac{TP}{TP + FN},$$

$$\mathrm{F1\ score} = \frac{2TP}{2TP + FP + FN},$$

where P denotes the total number of characters, and TP (true positives) denotes the number of correctly classified characters. The accuracy was utilized to evaluate the overall classification performance, and the other metrics were utilized to evaluate the classification performance for each character. For these per-character metrics, TP was counted within the target letter group, and FP (false positives) and FN (false negatives) were calculated for each target letter group. FP was the number of characters in the other letter groups that were classified as the target letter, and FN was the number of characters in the target group that were classified as other letter groups by the trained network.
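As an illustration of these definitions, the sketch below computes the four metrics from a confusion matrix. The function names and the 3-class matrix are hypothetical and are not taken from the reported results; rows are assumed to index the true class and columns the recognized class.

```python
def per_class_metrics(confusion, target):
    """Precision, recall and F1 for one class.

    confusion[i][j] counts characters of true class i recognized as class j.
    """
    n = len(confusion)
    tp = confusion[target][target]
    fp = sum(confusion[i][target] for i in range(n) if i != target)
    fn = sum(confusion[target][j] for j in range(n) if j != target)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return precision, recall, f1


def accuracy(confusion):
    """Correctly classified characters divided by all characters."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


# Hypothetical 3-class confusion matrix with 10 characters per class.
toy = [
    [9, 1, 0],
    [0, 10, 0],
    [1, 0, 9],
]
overall = accuracy(toy)
class0 = per_class_metrics(toy, 0)
class1 = per_class_metrics(toy, 1)
```

Class 1 in the example is always recognized correctly (recall of 1) but still has a precision below 1, because one character of class 0 was assigned to it.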

The Adam optimizer was used with the AMSGrad option [26], and the learning rate was set to 0.001. The training was repeated for over 200 epochs with a batch size of 128. The learning rate and number of epochs were derived experimentally using data from all the writers. This does not mean that we optimized the parameters, but we found that the accuracy was stable with the parameters after a number of trials.

3. Results

The proposed method achieved a 97.78% accuracy for 10 Arabic numbers in the writer-independent validation, showing 12 errors among 540 characters. Table 1 compares the results of the proposed method and the conventional methods in the literature. This indicates that the proposed method increased the accuracy by employing a deep neural network, reducing the error rates by approximately half, from 4.26% as reported in [23] to 2.22%. We can directly compare the results because the same dataset was used in [23] and in this study.
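The quoted figures can be checked with a short calculation from the stated counts (12 errors among 540 characters) and the 4.26% error rate reported in [23]. Values are rounded as shown, and the relative reduction is a derived quantity that does not appear in the original text.

```latex
\begin{align*}
\text{Accuracy} &= \frac{540 - 12}{540} = \frac{528}{540} \approx 97.78\%, \\
\text{Error rate} &= \frac{12}{540} \approx 2.22\%, \\
\text{Relative error reduction} &= \frac{4.26 - 2.22}{4.26} \approx 47.9\%.
\end{align*}
```

A relative reduction of about 48% is what the text summarizes as reducing the error rates by approximately half.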

Table 1.

Recognition accuracies of eye-written characters with different methods.

It is difficult to compare the current results to those of previous studies other than [23] because of the different experimental conditions. Instead of a direct comparison, we indirectly compared the results using the accuracy difference between DTW and the deep neural network (DNN) on the dataset from [23], because DTW was also used in previous studies. Notably, the error rate dropped substantially, from 7.59% with DTW to 2.22% with the proposed DNN. Although the number of patterns in [20,21] was larger than that in our dataset, the accuracy also increased when we employed DNN instead of DTW.

It is remarkable that the proposed method achieved higher accuracies than the previous methods in a writer-independent manner. The network was trained on the data from 17 writers and tested with the data from an unseen writer, which means that a similar accuracy can be expected when a new writer’s data are tested with the pretrained model. This supports the reliability of the results because the training and test datasets were independent of each other. Deep neural networks are commonly trained with a large amount of training data, which suggests that the accuracy could be improved with a bigger dataset.

Table 2 and Figure 6 show the accuracy of the proposed method for each character. Number 2 shows the lowest F1 score of 95.41%, and numbers 0, 3, 5, and 6 show accuracies of over 99.0%. The errors are not concentrated in a certain pair of characters, but they are distributed broadly over the confusion matrix.

Table 2.

Accuracies of the proposed method over characters.

Figure 6.

Confusion matrix of the proposed method.

Figure 7 shows an entire list of misrecognized characters. Many of the misrecognized characters had additional eye movements (a, b, h, i, j, k) or long-term fixations (c, d, e, j, k) at certain points in the middle. Some characters were written differently to their references and had distorted shapes (d, i, k).

Figure 7.

Misrecognized eye-written characters from (a) to (k). A→B in each subfigure denotes the number A misclassified as B.

Table 3 summarizes the recognition accuracies of the participants with different classifiers. The proposed method improved the accuracy for most of the participants. There were two cases in which the accuracy decreased: participants #10 (Figure 7c–e) and #17 (Figure 7i,j). These errors were caused by long-term fixation of the eyes during eye writing, as shown in Figure 7.

Table 3.

Recognition accuracy for each participant with different classifiers.

It is evident that the accuracy increased through the trials (Table 4). Across all participants, there were eight errors in the first trial but only two in each of the second and third trials. This suggests that the participants became accustomed to the eye-writing process after a short practice, which is beneficial for an HCI tool.
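The per-trial accuracies implied by these error counts can be worked out directly. The sketch assumes that each trial contains 180 characters (18 writers × 10 numerals, from Section 2.1) and that the second and third trials had two errors each, which is consistent with the 12 errors reported in total; the variable names are illustrative.

```python
CHARACTERS_PER_TRIAL = 18 * 10  # 18 writers x 10 numerals, from Section 2.1
errors_per_trial = [8, 2, 2]    # first, second and third trial

# Accuracy of each trial in percent.
accuracies = [
    100 * (CHARACTERS_PER_TRIAL - errors) / CHARACTERS_PER_TRIAL
    for errors in errors_per_trial
]

# Overall accuracy across the three trials in percent.
total_characters = CHARACTERS_PER_TRIAL * len(errors_per_trial)
overall = 100 * (total_characters - sum(errors_per_trial)) / total_characters

for trial, value in enumerate(accuracies, start=1):
    print(f"trial {trial}: {value:.2f}%")
print(f"overall: {overall:.2f}%")
```

Under these assumptions the overall value matches the 97.78% reported for the full dataset.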

Table 4.

Number of errors and accuracies through the trials.

4. Conclusions

This paper proposed a method with a deep neural network model and ensemble structure to recognize eye-written characters for eye-based HCI. The proposed method achieved a 97.78% accuracy for Arabic numerals eye-written by 18 healthy participants, which reduced the error rates to approximately half those of the conventional methods. The proposed method is expected to remain effective for new users outside of our dataset because the validation was conducted in a writer-independent manner. Similarly, the accuracy is expected to increase if additional data are used to train the network.

One of the limitations of this study is that the proposed method was validated with a single dataset of Arabic numbers only. In future work, the proposed method should be validated with different datasets such as English alphabets and other complicated characters such as Japanese and Korean characters. An automatic triggering system for eye writing is another potential research topic for eye-based HCI.

Author Contributions

Conceptualization, J.S. and W.-D.C.; methodology, W.-D.C.; implementation, W.-D.C.; experimental validation, J.-H.C.; investigation, J.S.; writing—original draft preparation, W.-D.C. and J.-H.C.; writing—review and editing, W.-D.C. and J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant from the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2020R1F1A1077162); and Ministry of Trade, Industry & Energy (MOTIE, Korea) under Industrial Technology Innovation Program. No.20016150, development of low latency multi-functional microdisplay controller for eye-wear devices.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sonoda, T.; Muraoka, Y. A letter input system based on handwriting gestures. Electron. Commun. Jpn. Part III Fundam. Electron. Sci. (Engl. Transl. Denshi Tsushin Gakkai Ronbunshi) 2006, 89, 53–64.

2. Lee, K.-S. EMG-based speech recognition using hidden markov models with global control variables. IEEE Trans. Biomed. Eng. 2008, 55, 930–940.

3. Shin, J. On-line cursive hangul recognition that uses DP matching to detect key segmentation points. Pattern Recognit. 2004, 37, 2101–2112.

4. Chang, W.-D. Electrooculograms for human–computer interaction: A review. Sensors 2019, 19, 2690.

5. Sherman, W.R.; Craig, B.A. Input: Interfacing the Participants with the Virtual World Understanding. In Virtual Reality; Morgan Kaufmann: Cambridge, MA, USA, 2018; pp. 190–256. ISBN 9780128183991.

6. Wolpaw, J.R.; McFarland, D.J.; Neat, G.W.; Forneris, C.A. An EEG-based brain-computer interface for cursor control. Electroencephalogr. Clin. Neurophysiol. 1991, 78, 252–259.

7. Han, J.-S.; Bien, Z.Z.; Kim, D.-J.; Lee, H.-E.; Kim, J.-S. Human-machine interface for wheelchair control with EMG and its evaluation. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE cat. No. 03CH37439), Cancun, Mexico, 17–21 September 2003; IEEE: Manhattan, NY, USA, 2003; Volume 2, pp. 1602–1605.

8. Jang, S.-T.; Kim, S.-R.; Chang, W.-D. Gaze tracking of four direction with low-price EOG measuring device. J. Korea Converg. Soc. 2018, 9, 53–60.

9. Malmivuo, J.; Plonsey, R. Bioelectromagnetism: Principles and Applications of Bioelectric and Biomagnetic Fields; Oxford University Press: New York, NY, USA, 1995.

10. Sáiz-Manzanares, M.C.; Pérez, I.R.; Rodríguez, A.A.; Arribas, S.R.; Almeida, L.; Martin, C.F. Analysis of the learning process through eye tracking technology and feature selection techniques. Appl. Sci. 2021, 11, 6157.

11. Scalera, L.; Seriani, S.; Gallina, P.; Lentini, M.; Gasparetto, A. Human–robot interaction through eye tracking for artistic drawing. Robotics 2021, 10, 54.

12. Wöhle, L.; Gebhard, M. Towards robust robot control in cartesian space using an infrastructureless head-and eye-gaze interface. Sensors 2021, 21, 1798.

13. Dziemian, S.; Abbott, W.W.; Aldo Faisal, A. Gaze-based teleprosthetic enables intuitive continuous control of complex robot arm use: Writing & drawing. In Proceedings of the 6th IEEE International Conference on Biomedical Robotics and Biomechatronics, Singapore, 26–29 June 2016; IEEE: Singapore, 2016; pp. 1277–1282.

14. Barea, R.; Boquete, L.; Mazo, M.; López, E. Wheelchair guidance strategies using EOG. J. Intell. Robot. Syst. Theory Appl. 2002, 34, 279–299.

15. Wijesoma, W.S.; Wee, K.S.; Wee, O.C.; Balasuriya, A.P.; San, K.T.; Soon, K.K. EOG based control of mobile assistive platforms for the severely disabled. In Proceedings of the IEEE Conference Robotics and Biomimetics, Shatin, China, 5–9 July 2005; pp. 490–494.

16. LaCourse, J.R.; Hludik, F.C.J. An eye movement communication-control system for the disabled. IEEE Trans. Biomed. Eng. 1990, 37, 1215–1220.

17. Kim, M.R.; Yoon, G. Control signal from EOG analysis and its application. World Acad. Sci. Eng. Technol. Int. J. Electr. Electron. Sci. Eng. 2013, 7, 864–867.

18. Kaufman, A.E.; Bandopadhay, A.; Shaviv, B.D. An Eye Tracking Computer User Interface. In Proceedings of the IEEE Symposium on Research Frontiers in Virtual Reality, San Jose, CA, USA, 23–26 October 1993; pp. 120–121.

19. Yan, M.; Tamura, H.; Tanno, K. A study on gaze estimation system using cross-channels electrooculogram signals. In Proceedings of the International MultiConference of Engineers and Computer Scientists, Hong Kong, China, 12–14 March 2014; Volume I, pp. 112–116.

20. Fang, F.; Shinozaki, T. Electrooculography-based continuous eye-writing recognition system for efficient assistive communication systems. PLoS ONE 2018, 13, e0192684.

21. Lee, K.-R.; Chang, W.-D.; Kim, S.; Im, C.-H. Real-time “eye-writing” recognition using electrooculogram (EOG). IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 37–48.

22. Tsai, J.-Z.; Lee, C.-K.; Wu, C.-M.; Wu, J.-J.; Kao, K.-P. A feasibility study of an eye-writing system based on electro-oculography. J. Med. Biol. Eng. 2008, 28, 39–46.

23. Chang, W.-D.; Cha, H.-S.; Kim, D.Y.; Kim, S.H.; Im, C.-H. Development of an electrooculogram-based eye-computer interface for communication of individuals with amyotrophic lateral sclerosis. J. Neuroeng. Rehabil. 2017, 14, 89.

24. Chang, W.-D.; Cha, H.-S.; Kim, K.; Im, C.-H. Detection of eye blink artifacts from single prefrontal channel electroencephalogram. Comput. Methods Programs Biomed. 2016, 124, 19–30.

25. Szegedy, C.; Reed, S.; Sermanet, P.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–12.

26. Reddi, S.J.; Kale, S.; Kumar, S. On the convergence of Adam and beyond. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–23.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).