1. Introduction

A shadow is a phenomenon that occurs when light from a source is totally or partially obstructed by an object [1]. According to their location, shadows can be classified into cast shadows (the part cast onto the ground or other objects by tall objects) and self-shadows (the part of the object itself that is not illuminated) [2]. With the continuing development of remote sensing technology, the shadow effect has become more noticeable in aerial imaging, which, together with higher spatial resolution, brings new challenges to the image preprocessing step. In particular, unmanned aerial vehicles (UAVs) have been increasingly used over the last few years and have recently been applied to object detection [3,4,5], agriculture [6,7,8], and urban zone analysis [9,10,11]. In applications focused on urban areas, cast shadows and self-shadows in aerial images can also cause shape distortion of objects and loss of color information [12], since urban surface features are complex and contain a great variety of shadows resulting from occlusion by buildings, bridges, and trees [13]. It is also known that, in color aerial images, color characteristics are useful descriptors that simplify the identification of features in visual interpretation applications [14]; this reinforces the need for new shadow removal methodologies that can retrieve color and texture information from aerial images.

Shadow removal methodologies aim to obtain shadow-free images, which can improve the performance of tasks such as object recognition, object tracking, and information enhancement [15]; consequently, several shadow removal methods have been developed. Such methods have been applied to close-shot images [16,17,18], video surveillance [19,20,21], and aerial images [22,23,24]. Numerous methods address shadow removal in outdoor scenes, and one of the leading strategies in this setting is illumination correction [25,26,27]. Notable examples in the published literature are methods that perform shadow removal in UAV aerial images using retinex theory [28] as the basis for computing the illumination correction; for instance, Guo et al. [29] compute an improved luminance image and perform an illumination correction based on the color transfer formula. Another recently published methodology is based on image matting: Amin et al. [30] propose a relighting method that does not rely on user interaction and maximizes the natural appearance of the relighted output images. Although the results are acceptable, the number of erosion-dilation iterations is set empirically for the tested dataset, which mainly consists of close-up camera shots. Illumination correction methods usually show high consistency in color restoration and at shadow boundaries. However, these methods usually retain the chromatic features of shadowed regions, leading to color differences, especially in urban aerial scenes. Color transfer [31] is an alternative approach to shadow removal. Local color transfer methods use spatial similarity to establish a relationship between the pixel features of the shadowed and non-shadowed regions of a single image in order to restore the shadowed regions; this relationship predominantly consists of a statistical correlation [32,33] that can be computed in different color spaces or on a single color feature.
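A common choice for this statistical relationship is to match the per-channel mean and standard deviation, as in the global color transfer of Reinhard et al. The following Python sketch assumes that choice and applies it locally within one image: the shadowed pixels are rescaled to the statistics of the lit pixels. The function names are hypothetical, and the cited methods [32,33] may rely on other statistics.

```python
import numpy as np


def transfer_statistics(shadow_px: np.ndarray, lit_px: np.ndarray,
                        eps: float = 1e-6) -> np.ndarray:
    """Match the per-channel mean and std of shadow_px to those of lit_px.

    Both inputs have shape (num_pixels, channels), e.g. CIE L*a*b* values.
    """
    mu_s, sd_s = shadow_px.mean(axis=0), shadow_px.std(axis=0)
    mu_t, sd_t = lit_px.mean(axis=0), lit_px.std(axis=0)
    # Standardize with the shadow statistics, then rescale with the lit ones.
    return (shadow_px - mu_s) / (sd_s + eps) * sd_t + mu_t


def correct_shadows(image: np.ndarray, shadow_mask: np.ndarray) -> np.ndarray:
    """Apply the transfer inside a boolean (H, W) mask of an (H, W, C) image."""
    out = image.astype(np.float64).copy()
    mask = shadow_mask.astype(bool)
    # Boolean indexing flattens the masked pixels to shape (N, C).
    out[mask] = transfer_statistics(out[mask], out[~mask])
    return out
```

The small `eps` keeps the division finite when a shadowed region is perfectly flat; in that case every corrected pixel simply takes the lit mean.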

Recently, convolutional neural network (CNN) architectures have been used to enhance the results of these methods [34]. Furthermore, Inoue et al. [35] use generative adversarial networks (GANs) to perform shadow detection and removal; they proposed SynShadow, a large-scale dataset of shadow/shadow-free/matte image triplets, together with a pipeline to synthesize diverse and realistic triplets. An adversarial neural network (ANN)-based solution was recently proposed by Tang et al. [36]. The authors aimed at a shadow detection and removal procedure that preserves image color consistency in the mask silhouette region. This problem is tackled with the multiscale and global feature (MSGF) and direction feature (DF) algorithms, which improve the balance error rate (BER) for shadow detection and the root mean square error (RMSE) for shadow removal, both measured against ground-truth images of the image shadow triplets dataset (ISTD) and the Stony Brook University (SBU) public datasets. ANN solutions require a comprehensive training dataset, sometimes produced by a manual image transformation process that can demand expert time. For a shadow removal ANN, shadow masks can be created manually or automatically with a specific algorithm. GAN-based solutions, such as the one published by Ding et al. [37], describe training as a semi-supervised task that relies less heavily on supervised data to set the model parameters; this solution uses a multi-step, coarse-to-fine shadow removal image generation process. However, deep learning solutions require a large amount of computing power for training, since the number of generations, the number of training images, and the input data size are substantially large. In addition, ANN models have pre-established input and output image sizes, so images must be resized at both ends to compute shadow detection and removal.
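For reference, the two metrics mentioned above can be computed as sketched below. BER averages the error rates on shadow and non-shadow pixels, so a small shadow class is not swamped by the background, and it is commonly reported as a percentage. The RMSE function compares a result with its ground-truth image pixel by pixel; published benchmarks differ in the color space and masking they use, so this plain version is an assumption rather than the exact protocol of [36].

```python
import numpy as np


def balance_error_rate(pred: np.ndarray, gt: np.ndarray) -> float:
    """BER (in %) between two binary shadow masks; lower is better."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    tp = np.sum(pred & gt)      # shadow pixels correctly detected
    tn = np.sum(~pred & ~gt)    # non-shadow pixels correctly rejected
    fp = np.sum(pred & ~gt)
    fn = np.sum(~pred & gt)
    # Mean of the per-class accuracies, turned into an error percentage.
    return float(100.0 * (1.0 - 0.5 * (tp / (tp + fn) + tn / (tn + fp))))


def rmse(result: np.ndarray, ground_truth: np.ndarray) -> float:
    """Root mean square error over all pixels and channels."""
    diff = result.astype(np.float64) - ground_truth.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))
```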

Since complex scenes can involve a heterogeneous mix of materials and textures, algorithms need to adapt to each case. The study presented by Fan et al. [38] addresses this issue in two key steps: first, a filtering process removes the textures; second, depth cue data are incorporated into the preprocessed input. The second step is advantageous because no extra information or special-purpose hardware, such as a LiDAR capture device, is required, which reduces the implementation cost and allows the method to handle more scenarios. Aerial imaging is one of the emerging topics in shadow removal, where methods are often based on illumination feature correction [14] and local color transfer [39]. Nevertheless, applications in UAV imaging still lack accurate color correction and texture preservation in shadowed regions; this is especially noticeable in urban scenes, which often contain several heterogeneous regions. Additionally, aerial images are captured in outdoor scenes, where the environment is mainly illuminated by two light sources with different spectral power distributions: a direct source (the sun) and a diffuse source (the sky); skylight has components in the 450 to 495 nm (blue) range of the visible spectrum [40]. Shadows are perceived where direct sunlight is blocked and a region is illuminated only by skylight, so all regions covered by shadows appear more bluish. This phenomenon is especially noticeable in materials that show low color saturation under sunlight, such as concrete and asphalt; images captured with standard cameras also record these bluish regions in a way that is both visually and numerically discernible [41].
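To make the bluish-cast argument measurable, one can compare the mean b* coordinate of CIE L*a*b* (the blue–yellow axis, where negative values are bluer) between shadowed and sunlit pixels of the same material. The sketch below assumes sRGB input in [0, 1] with a D65 white point and uses the standard sRGB-to-CIELAB formulas; the helper names are hypothetical and not part of the original method.

```python
import numpy as np

# Linear sRGB (D65) to CIE XYZ matrix and D65 reference white.
_M = np.array([[0.4124, 0.3576, 0.1805],
               [0.2126, 0.7152, 0.0722],
               [0.0193, 0.1192, 0.9505]])
_WHITE = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb) -> np.ndarray:
    """Convert sRGB values in [0, 1] (shape (..., 3)) to CIE L*a*b*."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB gamma curve to get linear light.
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE          # XYZ normalized by the white point
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def mean_b_star(rgb_image: np.ndarray, mask: np.ndarray) -> float:
    """Mean b* of the pixels selected by a boolean (H, W) mask."""
    lab = srgb_to_lab(rgb_image)
    return float(lab[mask.astype(bool)][:, 2].mean())
```

Comparing `mean_b_star(img, shadow_mask)` with `mean_b_star(img, lit_mask)` on a concrete or asphalt patch should give a lower (more negative) value for the shadowed side if the bluish cast described above is present.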

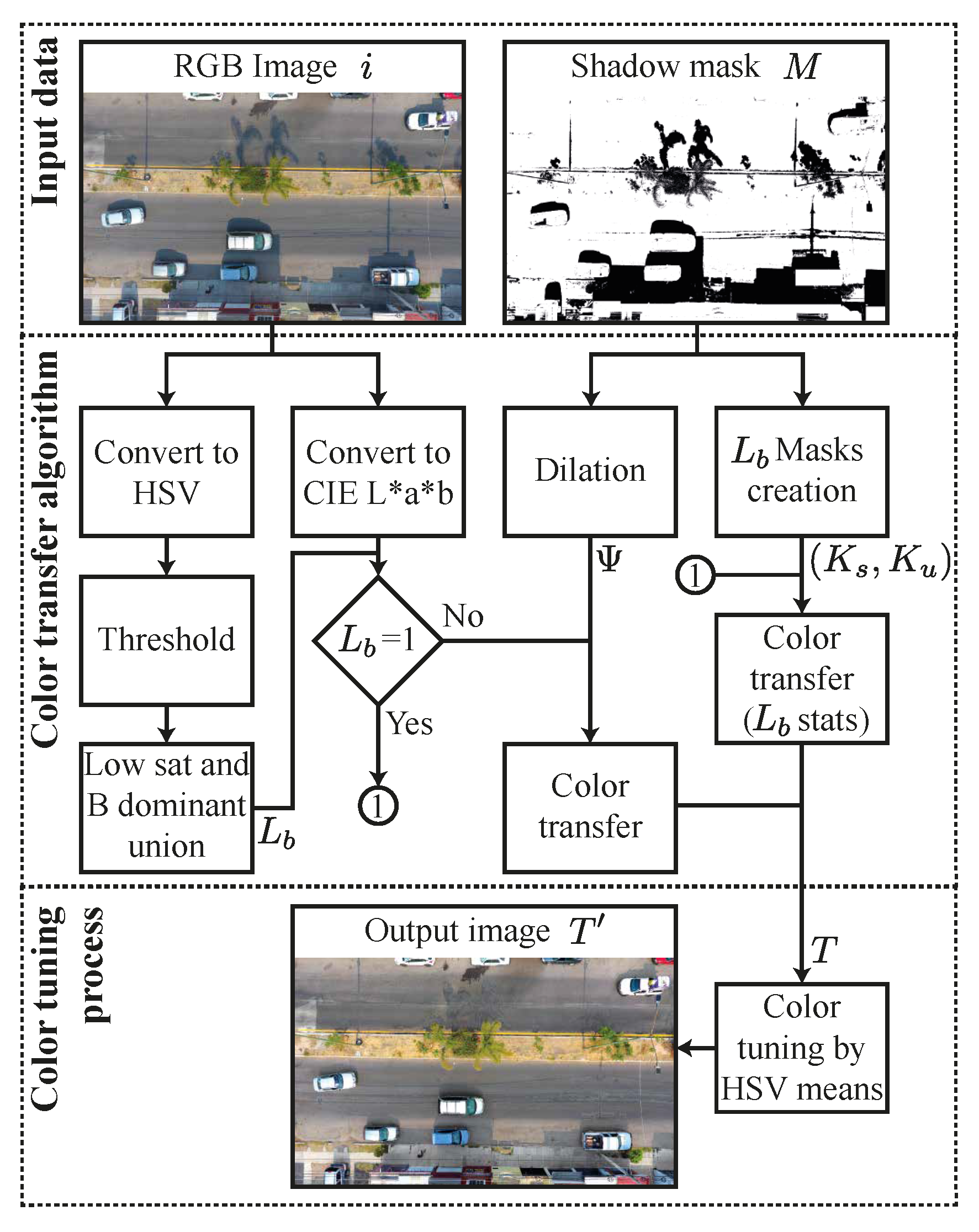

In this work, we present a novel shadow removal method based on the characteristics of the CIE L*a*b* color space (lightness L* and the chromatic components a* and b*) and the HSV (hue, saturation, and value) color space. The proposed work aims to correct the chromatic discrepancies found in concrete and asphalt areas in UAV-captured images under sunlit and shadowed conditions. The method consists of a local color transfer algorithm that performs color correction by separating the image's color statistics according to chromatic features, a dilation of the shadow mask, and a final color tuning step that enhances the local color transfer results. The proposal offers a shadow removal tool that improves on the results of local color transfer algorithms for UAV-captured images. Moreover, this work considers both cast shadows and self-shadows. The proposed final color tuning step reduces the discontinuities between similar regions that lie under shadows. In addition, the algorithm has a relatively low computational load and, owing to the independence of its functions, is suitable for implementation in a parallel computational model. The methodology was tested on aerial urban RGB (red, green, and blue) images captured with a standard drone. The study cases were captured in urban scenes containing mainly asphalt and concrete regions, covered by shadows at different ratios, in order to test the proposed color correction method. The proposed work was also compared against state-of-the-art algorithms, qualitatively by visual analysis and quantitatively using the shadow standard deviation index (SSDI). The experiments showed an improvement in color correction in asphalt and concrete regions while accurately preserving texture information.
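As an illustration of separating color statistics by chromatic features, the sketch below splits shadowed and lit pixels into a low-saturation group (candidate concrete and asphalt) and a remaining group, so that each shadow group could later be matched only with its lit counterpart. Thresholding HSV saturation is our assumption, not the authors' stated rule, and the 0.2 threshold and function names are hypothetical.

```python
import numpy as np


def hsv_saturation(rgb) -> np.ndarray:
    """HSV saturation S = (max - min) / max, defined as 0 for black pixels."""
    rgb = np.asarray(rgb, dtype=np.float64)
    cmax = rgb.max(axis=-1)
    cmin = rgb.min(axis=-1)
    return np.divide(cmax - cmin, cmax, out=np.zeros_like(cmax), where=cmax > 0)


def chromatic_groups(rgb, shadow_mask, sat_threshold: float = 0.2) -> dict:
    """Boolean masks for low-saturation and other pixels, in shadow and in light.

    The threshold is a hypothetical value; a real pipeline would tune it,
    especially because skylight adds some blue saturation to gray surfaces.
    """
    low = hsv_saturation(rgb) < sat_threshold
    shadow_mask = np.asarray(shadow_mask, dtype=bool)
    return {
        "shadow_low": shadow_mask & low,
        "shadow_other": shadow_mask & ~low,
        "lit_low": ~shadow_mask & low,
        "lit_other": ~shadow_mask & ~low,
    }
```

Pairing `shadow_low` with `lit_low` (and `shadow_other` with `lit_other`) before any mean and standard deviation matching keeps gray surfaces from borrowing the statistics of a dominant colored class.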
The remainder of this paper is organized as follows: Section 2 describes the proposed method and its algorithmic details; Section 3 presents the experimental results; Section 4 discusses them; and Section 5 states the conclusions.

3. Results

The shadow removal results obtained by each tested method for HS images are displayed in Figure 6, where, for brevity, Cun et al.'s method is referred to as SMGAN, Inoue et al.'s method as SynShadow, Luo et al.'s method as Illumination correction, and Murali and Govindan's method as Color transfer.

When shadow removal is performed on the tested HS images, the results are clearly hindered by the large amount of land covered by shadows. Self-shadows, typically found in trees and shrubs, also make color recovery difficult. Figure 6a–c displays the results of the Illumination correction method. This algorithm loses color recovery accuracy where shadows cover asphalt and concrete, which appear bluish after correction. This makes shadow boundaries evident despite the boundary correction proposed in the tested work. Additionally, in the marked regions, green areas lose color accuracy and texture information. Figure 6d–f depicts the results of the SMGAN method, which keeps the texture features in the corrected regions but lacks accurate color correction and reduces the image resolution to lower the computational load. Figure 6g–i depicts the results of the Color transfer method. Despite being based on the CIE L*a*b* color space, this method leaves concrete and asphalt regions bluish or gives them a tone similar to other dominant unshadowed regions. This is especially noticeable in the region marked with a yellow rectangle in Figure 6i, where the corrected shadows on asphalt become greenish. Additionally, the grass regions highlighted with arrows look blurred. In Figure 6j–l, the SynShadow method shows visually accurate results with smoother contours than the traditional methods, but the corrected regions are still evident owing to chromatic differences, mainly in the asphalt areas signaled by the yellow rectangle; moreover, corrected green areas are over-illuminated. Finally, Figure 6m–o depicts the results of the proposed method. Although boundaries are still noticeable, the color in asphalt areas is visually consistent with the unshadowed asphalt, as marked by the yellow rectangles, and the highlighted grass areas keep their texture information. As Figure 6 shows, HS images complicate the shadow removal task because of the limited information contained in unshadowed regions. Despite this, the proposed method shows visual consistency in color and texture in grass and asphalt regions. The next set of results, for SS images, is shown in Figure 7.

As shown in Figure 7a–c, the Illumination correction algorithm tends to produce bluish colors in asphalt and concrete. In Figure 7d–f, the SMGAN method modifies the unshadowed areas, which completely alters the image information. The results displayed in Figure 7g–i show that the Color transfer method also tends to leave concrete and asphalt regions bluish or to give them a tone similar to the other statistically dominant regions. Figure 7j–l shows that the SynShadow results present accurate color correspondence, although the corrected regions are slightly over-illuminated; this is especially noticeable in the regions signaled with a yellow rectangle. Figure 7m–o depicts the results of the proposed method: the color of the asphalt and concrete areas is recovered with visual accuracy, but some corrected regions are darker than the contiguous unshadowed regions. Summarizing the results in Figure 7, the regions marked with yellow rectangles show that the proposed method improves color consistency and that its shadow boundaries are less evident than those of the other tested methods. Furthermore, the self-shadows on the highlighted vegetation are corrected with notably improved texture preservation. As seen in Figure 6 and Figure 7, the proposed method delivers accurate visual results, with color correction and texture preservation being the principal issues addressed in this work. To complement the quantitative analysis, a visual analysis of specific zones was also performed; the comparison is shown in Figure 8.

The analyzed zones are delimited with blue and red squares in Figure 8, and the qualitative criteria used to evaluate the shadow removal results are color correction and texture preservation. As observed in Figure 8b,g, the Illumination correction method provides results in which color and texture are not visually consistent with unshadowed regions of similar land cover, and the texture of green areas is blurred. In Figure 8c,h, a resolution loss caused by the SMGAN method is noticeable; the end-to-end process also shows poor shadow detection in the analyzed study case. In Figure 8d,i, the Color transfer method accurately preserves texture information. However, its colors are visually inconsistent with unshadowed regions of the same land cover; specifically, Figure 8d shows over-saturated colors with acceptable texture preservation, and the asphalt in Figure 8i displays bluish colors in the corrected regions. The SynShadow results shown in Figure 8e,j depict an over-illuminated correction in green areas (see Figure 8e), and the corrected asphalt regions show color inconsistency at the shadow boundaries, as shown in Figure 8j. Additionally, the image resolution is evidently reduced in these results. Figure 8f,k shows the results obtained with the proposed method; the color correspondence in the corrected asphalt regions (see Figure 8k) is improved compared with the other tested methods. Although shadow boundaries are still visible, the color difference between unshadowed and corrected regions is smaller than with the rest of the methods. Moreover, as noticed in Figure 8f, green areas present accurate texture and color compared with the other works. Overall, Figure 8 shows that, in the qualitative analysis, the proposed work yields more visually accurate results than the tested methods.

As mentioned above, the quantitative analysis was performed using the SSDI; Table 1 displays the results obtained in each study case for all compared methods. In study case 1, the proposed work improves the SSDI by up to 19 units compared with the Illumination correction method. In test 2, however, the SynShadow method presents a lower SSDI value. On average, the Illumination correction, Color transfer, and SMGAN methods obtain similar values, while the averages of the SynShadow method and the proposed method are numerically close, with our proposal lower by about 0.25. According to the qualitative and quantitative analyses, the proposed work provides accurate color correction and texture preservation, improving on the other tested methods. These results support the proposed method as an alternative solution for automatically performing shadow removal in urban aerial images without resizing the input image.

4. Discussion

As shown in the previous section, shadow removal in images of urban aerial scenes remains a challenging task. In the experiments, the Illumination correction method produced noticeable bluish colors in corrected zones that include asphalt and concrete; this is especially visible in Figure 6a–c. This result is mainly caused by the lack of chromatic correction in shadowed regions containing elements that have low color saturation under sunlight and appear bluish under skylight. The Color transfer method does perform chromatic correction. However, as observed mainly in Figure 6i and Figure 7i, the corrected shadows display a slight green tint, which originates in the color transfer process: the unshadowed low-saturation regions are classified together with the rest of the unshadowed colors in the image. This ambiguous classification leads to corrected regions whose tone resembles the dominant region (green in the experiments performed).
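The green tint can be reproduced with toy numbers. The sketch below is a simplified illustration, not the Color transfer method's actual classification step: the ambiguous classification is modeled by pooling all lit pixels into one set of statistics, and the grouped alternative keeps only the low-saturation lit pixels. All pixel values are invented for illustration.

```python
import numpy as np


def match_mean_std(src: np.ndarray, ref: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Per-channel mean/std matching of src (N, C) to ref (M, C)."""
    return (src - src.mean(0)) / (src.std(0) + eps) * ref.std(0) + ref.mean(0)


# Toy RGB data in [0, 1]: two bluish dark-gray shadow pixels on concrete/asphalt.
shadow_gray = np.array([[0.20, 0.22, 0.28],
                        [0.24, 0.26, 0.32]])
# Lit reference pixels: grass dominates (7 pixels), concrete is a minority (3).
lit_grass = np.tile([0.20, 0.50, 0.20], (7, 1))
lit_concrete = np.array([[0.58, 0.58, 0.58],
                         [0.62, 0.62, 0.62],
                         [0.60, 0.60, 0.60]])
lit_all = np.vstack([lit_grass, lit_concrete])

# Pooled statistics: the gray shadow inherits the green-dominated mean.
pooled = match_mean_std(shadow_gray, lit_all)
# Grouped statistics: low-saturation shadow pixels use low-saturation lit pixels.
grouped = match_mean_std(shadow_gray, lit_concrete)

print("pooled mean :", pooled.mean(0).round(3))
print("grouped mean:", grouped.mean(0).round(3))
```

With these values the pooled result has a mean of about (0.32, 0.53, 0.32), that is, it is shifted toward green, whereas the grouped result stays neutral at about 0.6 in every channel.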

Figure 6d–f and Figure 7d–f show that the SMGAN method produces varied results: in Figure 6f, the corrected regions show accurate color and texture, but this does not extend to the rest of the images. Additionally, in Figure 7d–e, the method modifies the colors of the entire image. Lastly, the SynShadow results are depicted in Figure 6j–l and Figure 7j–l; the SynShadow method clearly performs acceptable boundary smoothing. Nevertheless, the color-corrected regions are still visually perceptible, and the correction of shadowed green areas tends to produce over-illuminated pixels, which can be observed mainly in Figure 6j,l and in detail in Figure 8. Deep learning methods offer an innovative and functional methodology able to execute such tasks as an end-to-end process, and deep learning-based methods produce visibly smoother boundaries than traditional methods. Nevertheless, the results can vary depending on the training process and method design, and the computational load requires resizing the image, which can lead to data loss. In addition, although the shadow boundaries are smoothed, the color correction results still leave visual evidence of the corrected regions.

The results of this study's experiments are shown in Figure 6m–o and Figure 7m–o. They show that grouping the colors of regions containing concrete and asphalt by chromatic class enhances the color correction results and avoids the statistical misclassification of such regions. They also show that dilating the shadow mask improves the statistical relation between shadowed and unshadowed regions; this can be appreciated in the improved texture preservation, especially in green areas, as detailed in Figure 8. The relatively low computational load allows the method to be executed on high-resolution images. Nonetheless, boundary smoothing is still deficient in most of the tested study cases, which leaves room to improve the present results through boundary processing. The present work provides an alternative tool that can be combined with any shadow detection algorithm to perform the automatic or semi-automatic process end-to-end.
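Shadow masks from detection algorithms often stop short of the penumbra, so dilating the mask is one way to keep those transition pixels out of the lit statistics. The NumPy sketch below implements binary dilation with a 3 × 3 square structuring element and extracts the band of pixels added by the dilation. The structuring element, the iteration count, and the function names are assumptions for illustration; the exact dilation used in this work is not stated in this section.

```python
import numpy as np


def dilate(mask, iterations: int = 1) -> np.ndarray:
    """Binary dilation with a 3 x 3 square structuring element."""
    out = np.asarray(mask, dtype=bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(out)
        # OR together the 9 shifted copies covered by the 3 x 3 window.
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        out = grown
    return out


def boundary_band(shadow_mask, iterations: int = 2) -> np.ndarray:
    """Pixels inside the dilated mask but outside the original shadow mask."""
    shadow_mask = np.asarray(shadow_mask, dtype=bool)
    return dilate(shadow_mask, iterations) & ~shadow_mask
```

For example, lit statistics could be taken from `~dilate(mask, k)` so that penumbra pixels adjacent to the shadow do not bias them. SciPy's `ndimage.binary_dilation` offers the same operation if that dependency is acceptable.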

5. Conclusions

In this work, a methodology for removing cast shadows and self-shadows was presented. The proposed approach offers a color transfer-based tool for correcting the color of shadowed regions in urban aerial scenes captured with UAVs. The work was tested on different urban scenes containing roads, concrete sidewalks, and green areas, with different percentages of shadow coverage and different darkness levels; texture features were also considered. The qualitative analysis demonstrated the advantages of this work over the other tested methods. Although shadow boundaries remain visible, color consistency and texture preservation provided visually accurate results; this is mainly noticeable in vegetation, road, and sidewalk textures, which were successfully conserved. According to the SSDI results, the proposed method obtained the lowest average value among the tested methods, although SynShadow achieved a lower value in one study case and its average was within about 0.25 of ours. The qualitative and quantitative results support this work as a valuable and affordable tool for shadow removal in aerial urban areas. Additionally, the methodology is helpful as a preprocessing step for remote sensing, pattern recognition, and image segmentation tasks. Further work on this topic will focus on improving the quality of shadow removal at shadow boundaries, which remains challenging. Likewise, in future work, photogrammetric processing of the corrected images will be performed for specific applications.