Can ADAS Distract Driver’s Attention? An RGB-D Camera and Deep Learning-Based Analysis

Abstract

1. Introduction

2. Materials and Methods

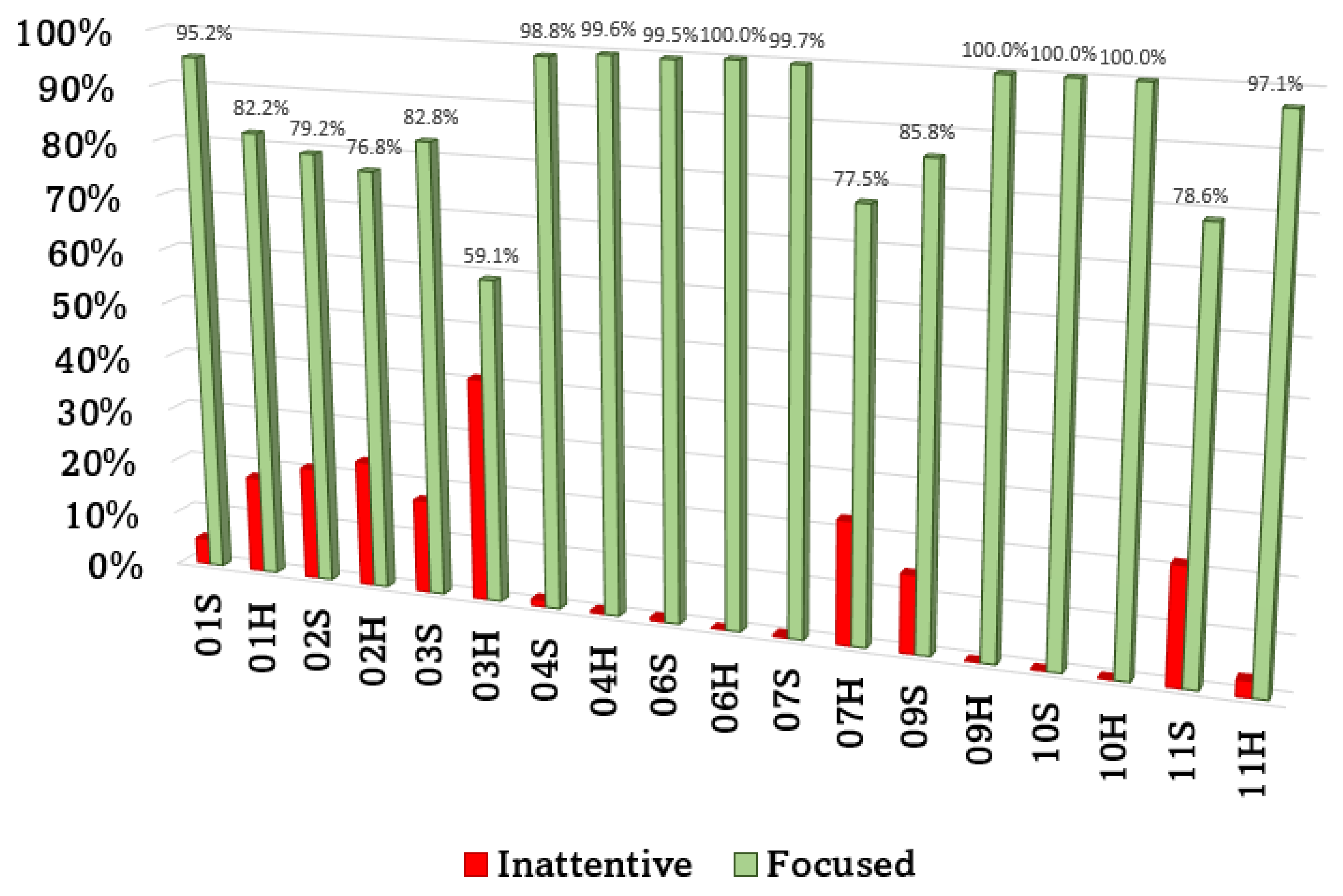

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dua, I.; Nambi, A.U.; Jawahar, C.V.; Padmanabhan, V. AutoRate: How attentive is the driver? In Proceedings of the 14th IEEE International Conference on Automatic Face and Gesture Recognition, FG 2019, Lille, France, 14–18 May 2019. [Google Scholar]

- Fang, J.; Yan, D.; Qiao, J.; Xue, J.; Yu, H. DADA: Driver Attention Prediction in Driving Accident Scenarios. IEEE Trans. Intell. Transp. Syst. 2021. [Google Scholar] [CrossRef]

- Xia, Y.; Zhang, D.; Kim, J.; Nakayama, K.; Zipser, K.; Whitney, D. Predicting Driver Attention in Critical Situations. In Asian Conference on Computer Vision; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11365, pp. 658–674. [Google Scholar]

- Ziebinski, A.; Cupek, R.; Grzechca, D.; Chruszczyk, L. Review of advanced driver assistance systems (ADAS). AIP Conf. Proc. 2017, 1906, 120002. [Google Scholar] [CrossRef]

- Gaspar, J.; Carney, C. The Effect of Partial Automation on Driver Attention: A Naturalistic Driving Study. Hum. Factors 2019, 61, 1261–1276. [Google Scholar] [CrossRef]

- Ruscio, D.; Bos, A.J.; Ciceri, M.R. Distraction or cognitive overload? Using modulations of the autonomic nervous system to discriminate the possible negative effects of advanced assistance system. Accid. Anal. Prev. 2017, 103, 105–111. [Google Scholar] [CrossRef] [PubMed]

- Shi, Y.; Bordegoni, M.; Caruso, G. User studies by driving simulators in the era of automated vehicle. Comput. Aided. Des. Appl. 2020, 18, 211–226. [Google Scholar] [CrossRef]

- Bozkir, E.; Geisler, D.; Kasneci, E. Assessment of driver attention during a safety critical situation in VR to generate VR-based training. In Proceedings of the SAP 2019: ACM Conference on Applied Perception, Barcelona, Spain, 19–20 September 2019; ACM: New York, NY, USA, 2019. [Google Scholar]

- Caruso, G.; Shi, Y.; Ahmed, I.S.; Ferraioli, A.; Piga, B.; Mussone, L. Driver’s behaviour changes with different LODs of Road scenarios. In Proceedings of the European Transport Conference, Milan, Italy, 9–11 September 2020. [Google Scholar]

- Gaweesh, S.M.; Khoda Bakhshi, A.; Ahmed, M.M. Safety Performance Assessment of Connected Vehicles in Mitigating the Risk of Secondary Crashes: A Driving Simulator Study. Transp. Res. Rec. J. Transp. Res. Board 2021. [Google Scholar] [CrossRef]

- Khoda Bakhshi, A.; Gaweesh, S.M.; Ahmed, M.M. The safety performance of connected vehicles on slippery horizontal curves through enhancing truck drivers’ situational awareness: A driving simulator experiment. Transp. Res. Part F Traffic Psychol. Behav. 2021, 79, 118–138. [Google Scholar] [CrossRef]

- Jha, S.; Marzban, M.F.; Hu, T.; Mahmoud, M.H.; Al-Dhahir, N.; Busso, C. The Multimodal Driver Monitoring Database: A Naturalistic Corpus to Study Driver Attention. IEEE Trans. Intell. Transp. Syst. 2021. [Google Scholar] [CrossRef]

- Nishigaki, M.; Shirakata, T. Driver attention level estimation using driver model identification. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference, ITSC 2019, Auckland, New Zealand, 27–30 October 2019; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019; pp. 3520–3525. [Google Scholar]

- Yang, D.; Li, X.; Dai, X.; Zhang, R.; Qi, L.; Zhang, W.; Jiang, Z. All in One Network for Driver Attention Monitoring. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; Volume 2020, pp. 2258–2262. [Google Scholar]

- Ulrich, L.; Vezzetti, E.; Moos, S.; Marcolin, F. Analysis of RGB-D camera technologies for supporting different facial usage scenarios. Multimed. Tools Appl. 2020, 79, 29375–29398. [Google Scholar] [CrossRef]

- Ceccacci, S.; Mengoni, M.; Andrea, G.; Giraldi, L.; Carbonara, G.; Castellano, A.; Montanari, R. A preliminary investigation towards the application of facial expression analysis to enable an emotion-aware car interface. In International Conference on Human-Computer Interaction; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2020; Volume 12189, pp. 504–517. [Google Scholar]

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200. [Google Scholar] [CrossRef]

- Cowen, A.S.; Keltner, D. Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proc. Natl. Acad. Sci. USA 2017, 114, E7900–E7909. [Google Scholar] [CrossRef] [Green Version]

- Grafsgaard, J.F.; Wiggins, J.B.; Boyer, K.E.; Wiebe, E.N.; Lester, J.C. Automatically recognizing facial expression: Predicting engagement and frustration. In Proceedings of the 6th International Conference on Educational Data Mining, (EDM 2013), Memphis, TN, USA, 6–9 July 2013. [Google Scholar]

- Borghi, G. Combining Deep and Depth: Deep Learning and Face Depth Maps for Driver Attention Monitoring. arXiv 2018, arXiv:1812.05831. [Google Scholar]

- Craye, C.; Karray, F. Driver distraction detection and recognition using RGB-D sensor. arXiv 2015, arXiv:1502.00250. [Google Scholar]

- Kowalczuk, Z.; Czubenko, M.; Merta, T. Emotion monitoring system for drivers. IFAC-PapersOnLine 2019, 52, 440–445. [Google Scholar] [CrossRef]

- Tornincasa, S.; Vezzetti, E.; Moos, S.; Violante, M.G.; Marcolin, F.; Dagnes, N.; Ulrich, L.; Tregnaghi, G.F. 3D facial action units and expression recognition using a crisp logic. Comput. Aided. Des. Appl. 2019, 16. [Google Scholar] [CrossRef]

- Dubbaka, A.; Gopalan, A. Detecting Learner Engagement in MOOCs using Automatic Facial Expression Recognition. In Proceedings of the IEEE Global Engineering Education Conference, EDUCON, Porto, Portugal, 27–30 April 2020; Volume 2020, pp. 447–456. [Google Scholar]

- Roohi, S.; Takatalo, J.; Matias Kivikangas, J.; Hämäläinen, P. Neural network based facial expression analysis of game events: A cautionary tale. In Proceedings of the CHI PLAY 2018 Annual Symposium on Computer-Human Interaction in Play, Melbourne, VIC, Australia, 28–31 October 2018; Association for Computing Machinery, Inc.: New York, NY, USA, 2018; pp. 59–71. [Google Scholar]

- Sharma, P.; Esengönül, M.; Khanal, S.R.; Khanal, T.T.; Filipe, V.; Reis, M.J.C.S. Student concentration evaluation index in an E-learning context using facial emotion analysis. In Proceedings of the International Conference on Technology and Innovation in Learning, Teaching and Education, Thessaloniki, Greece, 20–22 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; Volume 993, pp. 529–538. [Google Scholar]

- Meyer, O.A.; Omdahl, M.K.; Makransky, G. Investigating the effect of pre-training when learning through immersive virtual reality and video: A media and methods experiment. Comput. Educ. 2019, 140, 103603. [Google Scholar] [CrossRef]

- Varao-Sousa, T.L.; Smilek, D.; Kingstone, A. In the lab and in the wild: How distraction and mind wandering affect attention and memory. Cogn. Res. Princ. Implic. 2018, 3, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Aksan, N.; Sager, L.; Hacker, S.; Marini, R.; Dawson, J.; Anderson, S.; Rizzo, M. Forward Collision Warning: Clues to Optimal Timing of Advisory Warnings. SAE Int. J. Transp. Saf. 2016, 4, 107–112. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dingus, T.A.; Guo, F.; Lee, S.; Antin, J.F.; Perez, M.; Buchanan-King, M.; Hankey, J. Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proc. Natl. Acad. Sci. USA 2016, 113, 2636–2641. [Google Scholar] [CrossRef] [Green Version]

- Lemaire, P.; Ardabilian, M.; Chen, L.; Daoudi, M. Fully automatic 3D facial expression recognition using differential mean curvature maps and histograms of oriented gradients. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013. [Google Scholar]

- Li, H.; Sun, J.; Xu, Z.; Chen, L. Multimodal 2D+3D Facial Expression Recognition with Deep Fusion Convolutional Neural Network. IEEE Trans. Multimed. 2017, 19, 2816–2831. [Google Scholar] [CrossRef]

- Sui, M.; Zhu, Z.; Zhao, F.; Wu, F. FFNet-M: Feature Fusion Network with Masks for Multimodal Facial Expression Recognition. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6. [Google Scholar]

- Li, H.; Sui, M.; Zhu, Z.; Zhao, F. MFEViT: A Robust Lightweight Transformer-based Network for Multimodal 2D+3D Facial Expression Recognition. arXiv 2021, arXiv:2109.13086. [Google Scholar]

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Shams, Z.; Naderi, H.; Nassiri, H. Assessing the effect of inattention-related error and anger in driving on road accidents among Iranian heavy vehicle drivers. IATSS Res. 2021, 45, 210–217. [Google Scholar] [CrossRef]

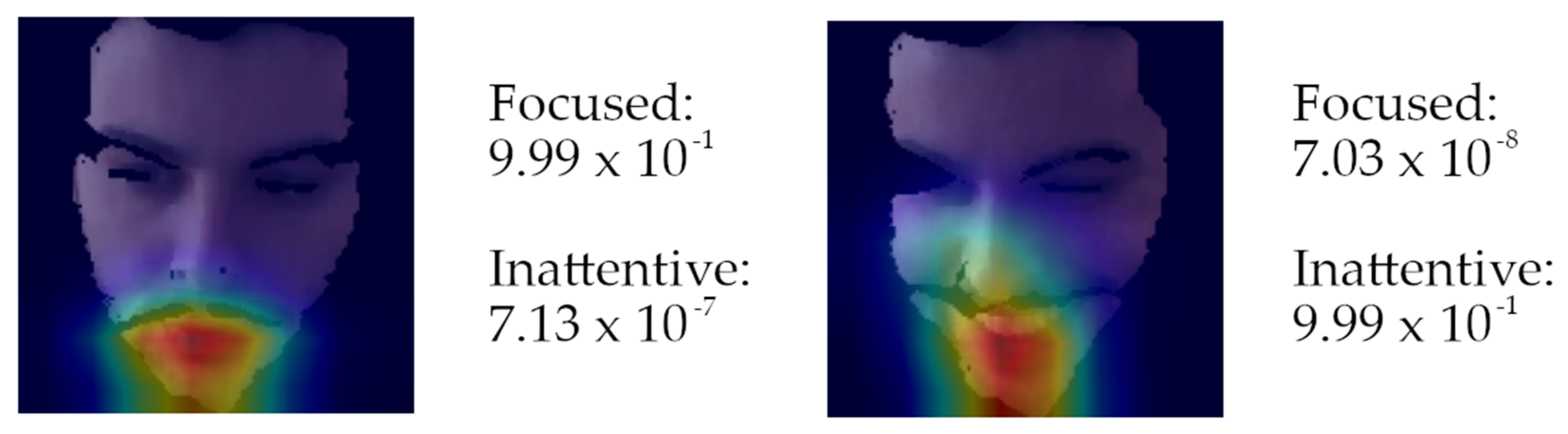

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359. [Google Scholar] [CrossRef] [Green Version]

- Hungund, A.P.; Pai, G.; Pradhan, A.K. Systematic Review of Research on Driver Distraction in the Context of Advanced Driver Assistance Systems. Transp. Res. Rec. J. Transp. Res. Board 2021. [Google Scholar] [CrossRef]

| Experience ID | Number of Accidents | ADAS Activation |
|---|---|---|
| 01S | 2 | 2.58% |
| 01H | 2 | 0.77% |
| 02S | 2 | 9.28% |
| 02H | 1 | 32.15% |
| 03S | 2 | 17.48% |
| 03H | 1 | 7.30% |
| 04S | 1 | 1.59% |
| 04H | 0 | 0% |
| 06S | 0 | 24.68% |
| 06H | 1 | 22.79% |
| 07S | 1 | 10.38% |
| 07H | 1 | 21.49% |
| 09S | 0 | 15.01% |
| 09H | 1 | 15.28% |
| 10S | 0 | 5.20% |
| 10H | 0 | 8.01% |
| 11S | 1 | 28.78% |
| 11H | 2 | 9.05% |
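
As a reading aid for the table above, the following minimal Python sketch groups the reported ADAS activation percentages by number of accidents and averages each group. It is an illustrative summary under stated assumptions, not the authors' analysis: the names `TABLE`, `activation_by_accidents`, and `summary` are hypothetical, and grouping by accident count is chosen here only for convenience.

```python
from collections import defaultdict
from statistics import mean

# Rows copied from the table above:
# (experience ID, number of accidents, ADAS activation in percent).
TABLE = [
    ("01S", 2, 2.58), ("01H", 2, 0.77),
    ("02S", 2, 9.28), ("02H", 1, 32.15),
    ("03S", 2, 17.48), ("03H", 1, 7.30),
    ("04S", 1, 1.59), ("04H", 0, 0.0),
    ("06S", 0, 24.68), ("06H", 1, 22.79),
    ("07S", 1, 10.38), ("07H", 1, 21.49),
    ("09S", 0, 15.01), ("09H", 1, 15.28),
    ("10S", 0, 5.20), ("10H", 0, 8.01),
    ("11S", 1, 28.78), ("11H", 2, 9.05),
]


def activation_by_accidents(rows):
    """Return the mean ADAS activation (%) for each accident count."""
    groups = defaultdict(list)
    for _exp_id, accidents, activation in rows:
        groups[accidents].append(activation)
    # Sort by accident count so the summary reads 0, 1, 2.
    return {count: mean(values) for count, values in sorted(groups.items())}


# Hypothetical summary for illustration only.
summary = activation_by_accidents(TABLE)
for accidents, avg in summary.items():
    print(f"{accidents} accident(s): mean ADAS activation {avg:.2f}%")
```

With only 18 sessions, such group means are descriptive at best and should not be read as evidence of a relationship between ADAS activation and accidents.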

| Experience ID | Event-CNN |
|---|---|
| 01S | 0.248 |
| 01H | 0.942 |
| 02S | 0.630 |
| 02H | 0.469 |
| 03S | 0.114 |
| 03H | 0.466 |
| 04S | - |
| 04H | −0.008 |
| 06S | 0.698 |
| 06H | - |
| 07S | 0.202 |
| 07H | 0.695 |
| 09S | 0.991 |
| 09H | - |
| 10S | - |
| 10H | - |
| 11S | 0.422 |
| 11H | 0.148 |

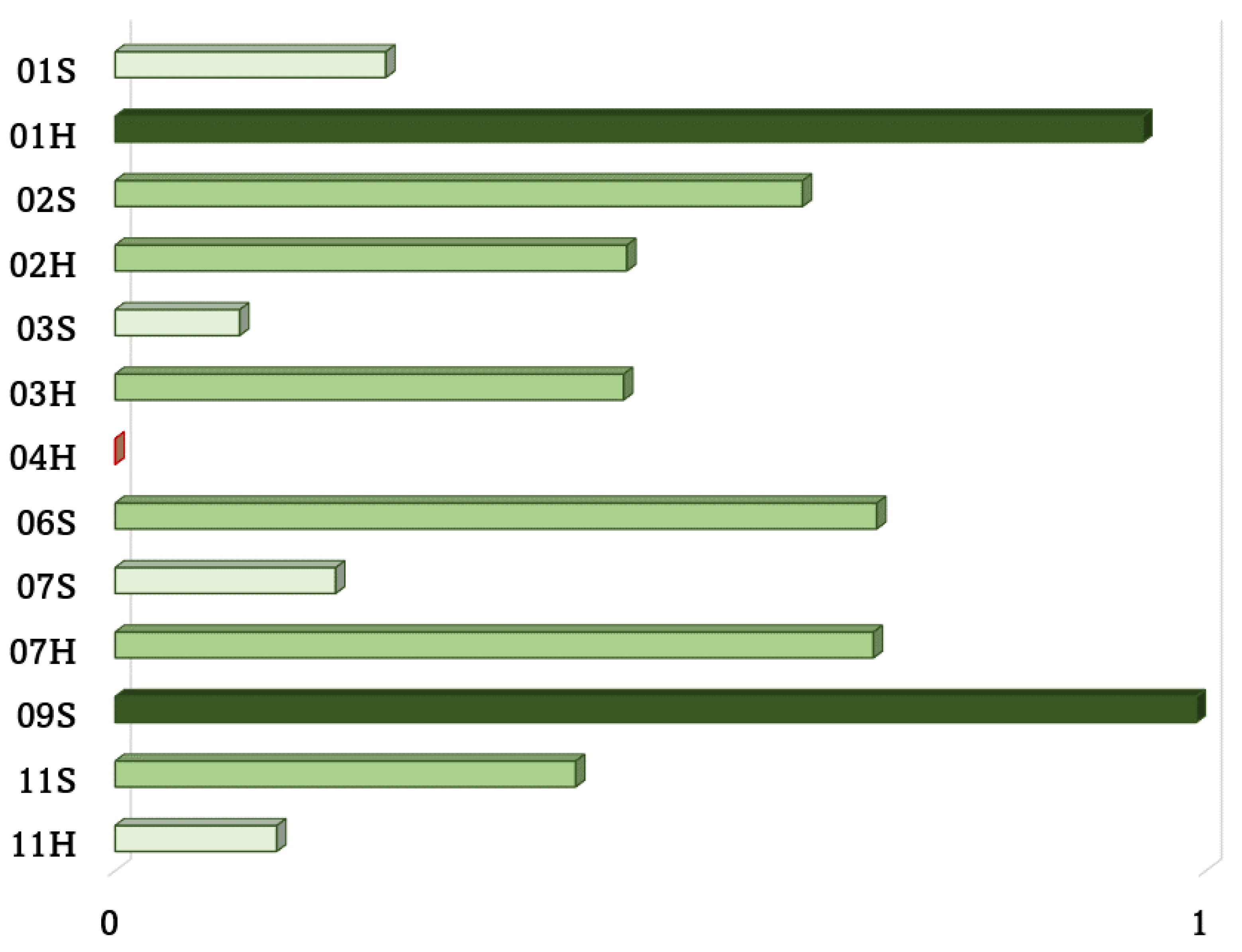

| Experience ID | ADAS-CNN |
|---|---|
| 01S | −0.020 |
| 01H | 0.024 |
| 02S | −0.346 |
| 02H | 0.124 |
| 03S | −0.027 |
| 03H | 0.012 |
| 04S | - |
| 04H | 0.008 |
| 06S | −0.04 |
| 06H | - |
| 07S | −0.030 |
| 07H | −0.179 |
| 09S | −0.172 |
| 09H | - |
| 10S | - |
| 10H | - |
| 11S | −0.165 |
| 11H | −0.110 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ulrich, L.; Nonis, F.; Vezzetti, E.; Moos, S.; Caruso, G.; Shi, Y.; Marcolin, F. Can ADAS Distract Driver’s Attention? An RGB-D Camera and Deep Learning-Based Analysis. Appl. Sci. 2021, 11, 11587. https://doi.org/10.3390/app112411587
