A Study on Highway Driving Assist Evaluation Method Using the Theoretical Formula and Dual Cameras

Abstract

1. Introduction

2. Theoretical Background

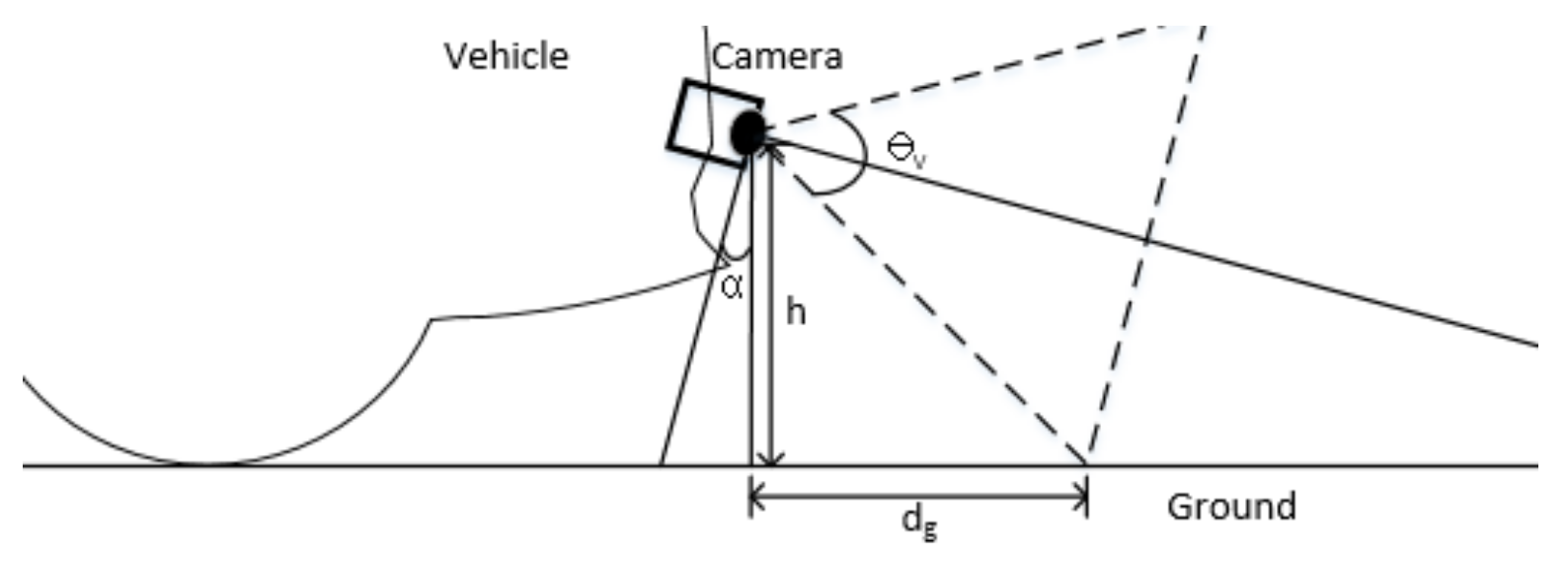

2.1. Proposal of Theoretical Formulas That Can Calculate Distance

2.2. Selecting the Optimal Installation Location for Dual Camera

2.3. Calculate the Lane Distance Using Dual Cameras

2.3.1. Variables of Camera Image

2.3.2. Geometrical Variables of the Vehicle

2.3.3. Proposal for Theoretical Formula

2.4. Design Standards for Highways

3. Actual Vehicle Test

3.1. Test Vehicle

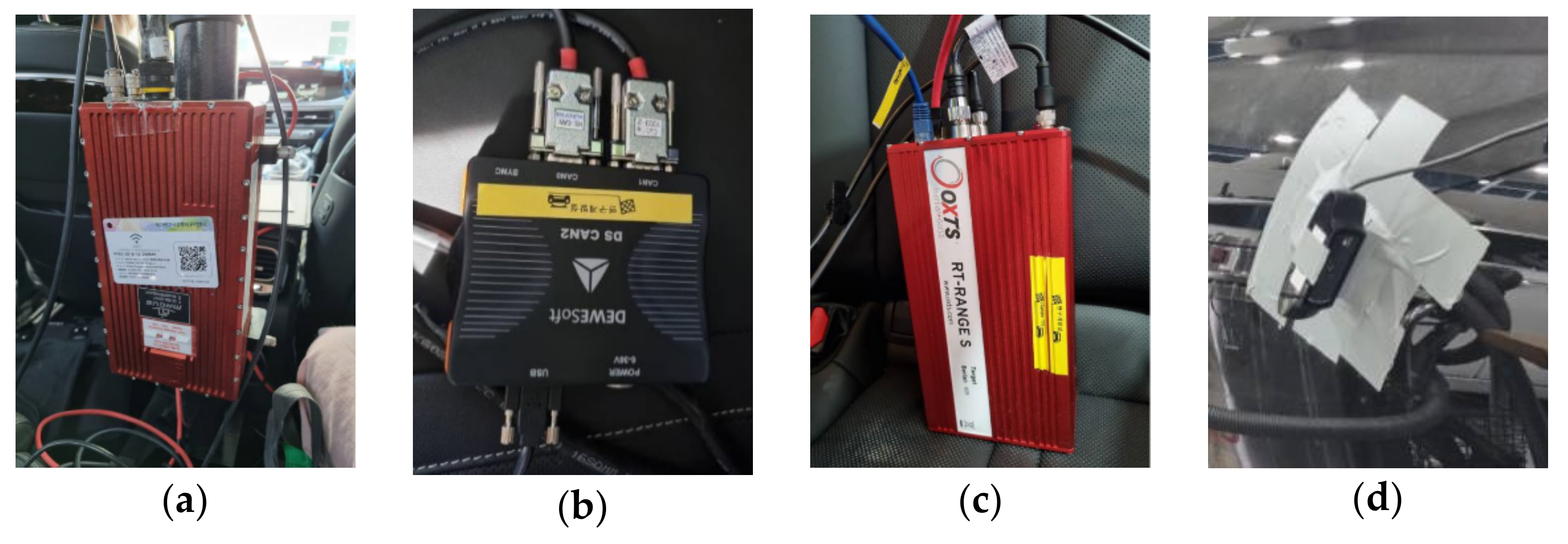

3.2. Test Equipment

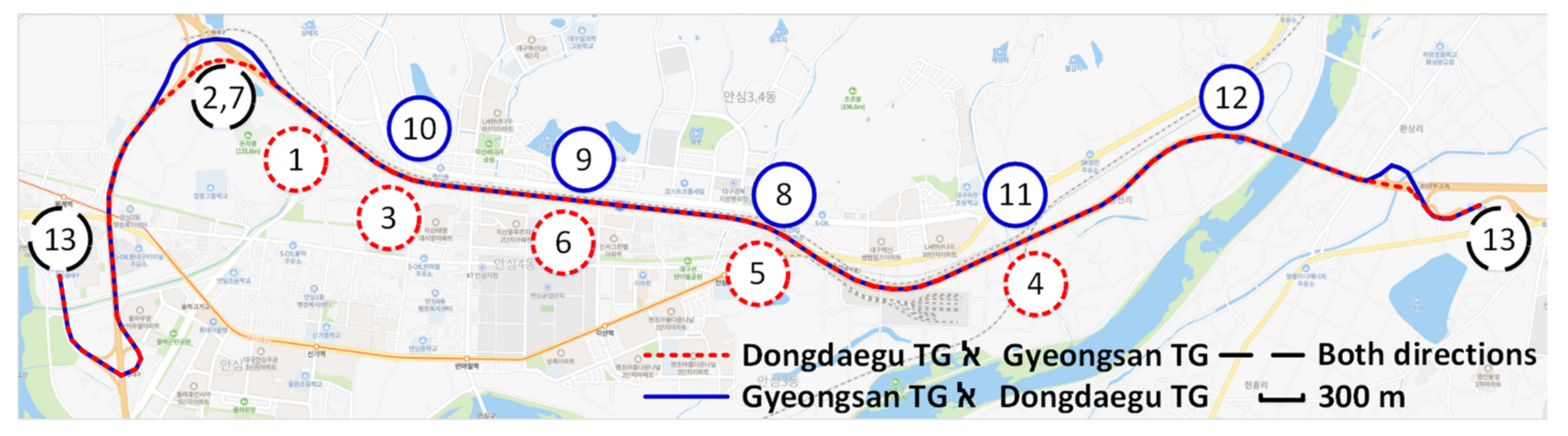

3.3. Test Location and Road Conditions

4. Test Results and Analysis

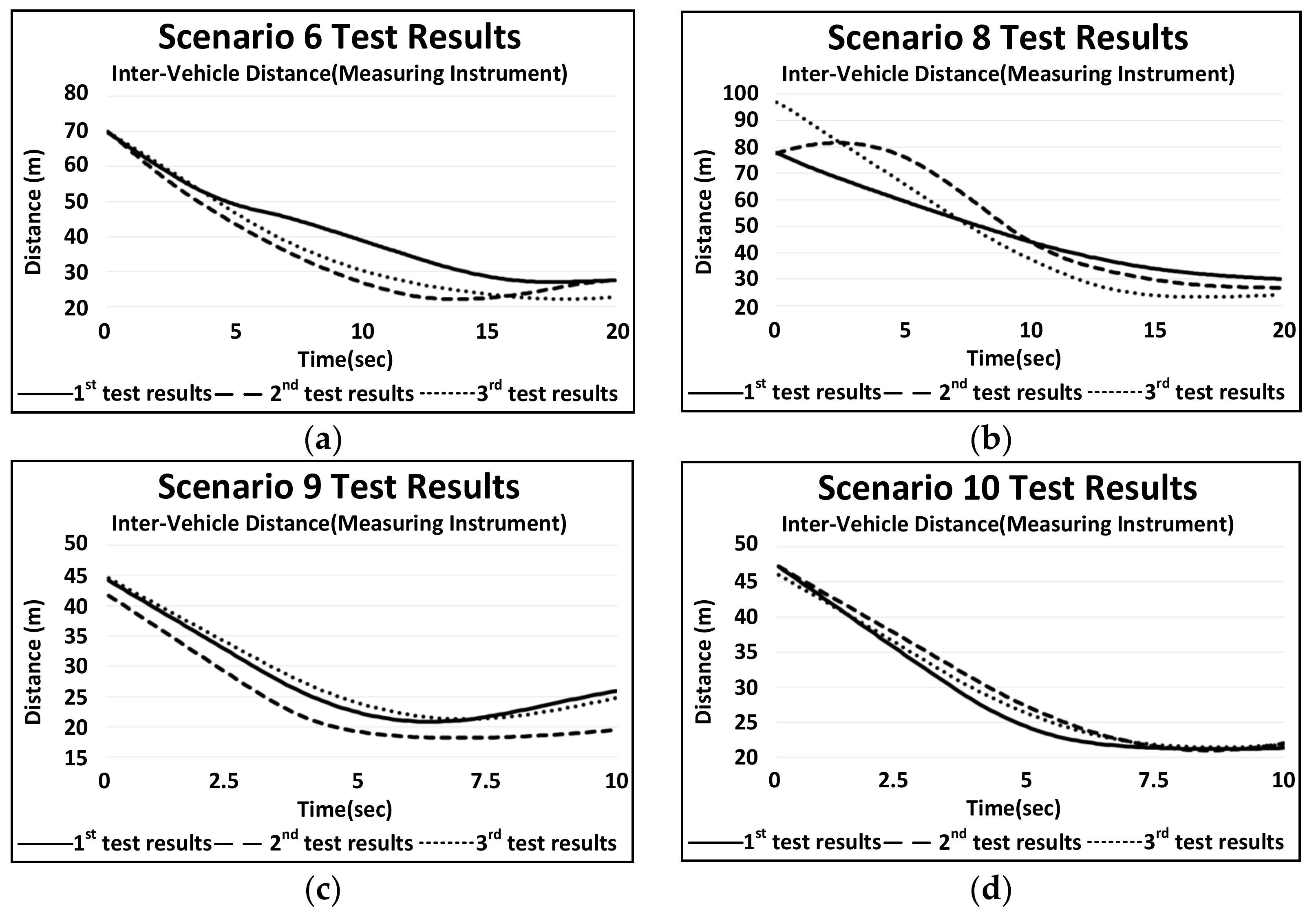

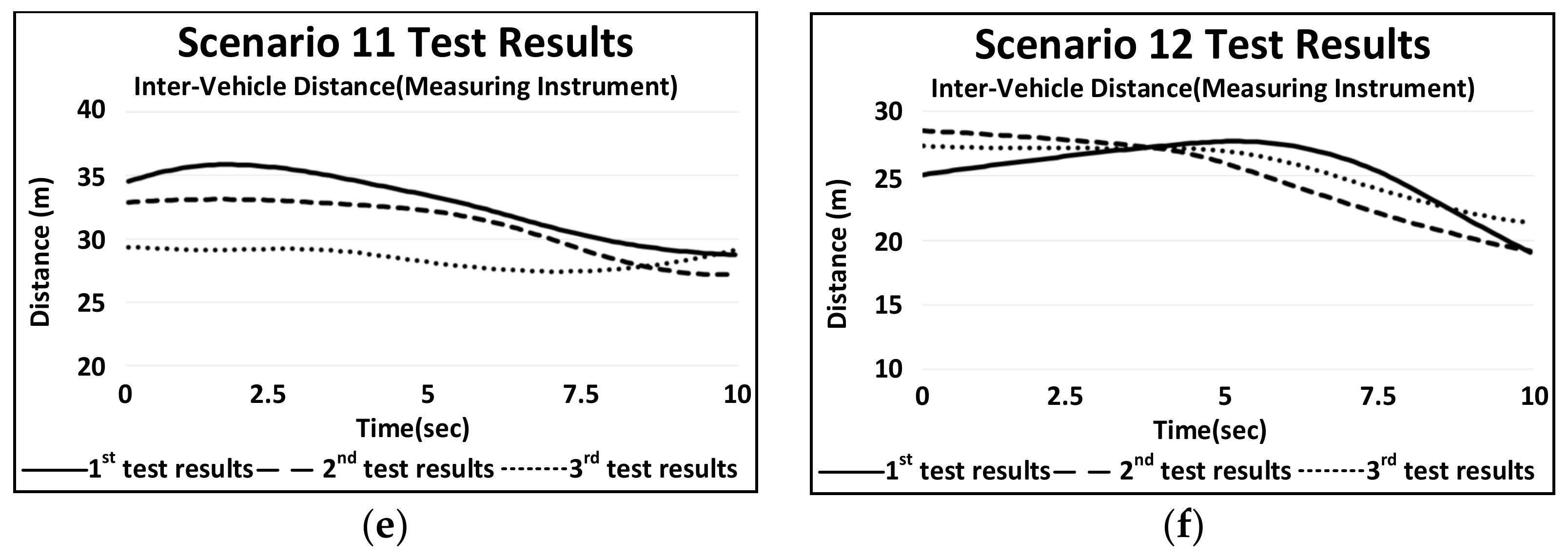

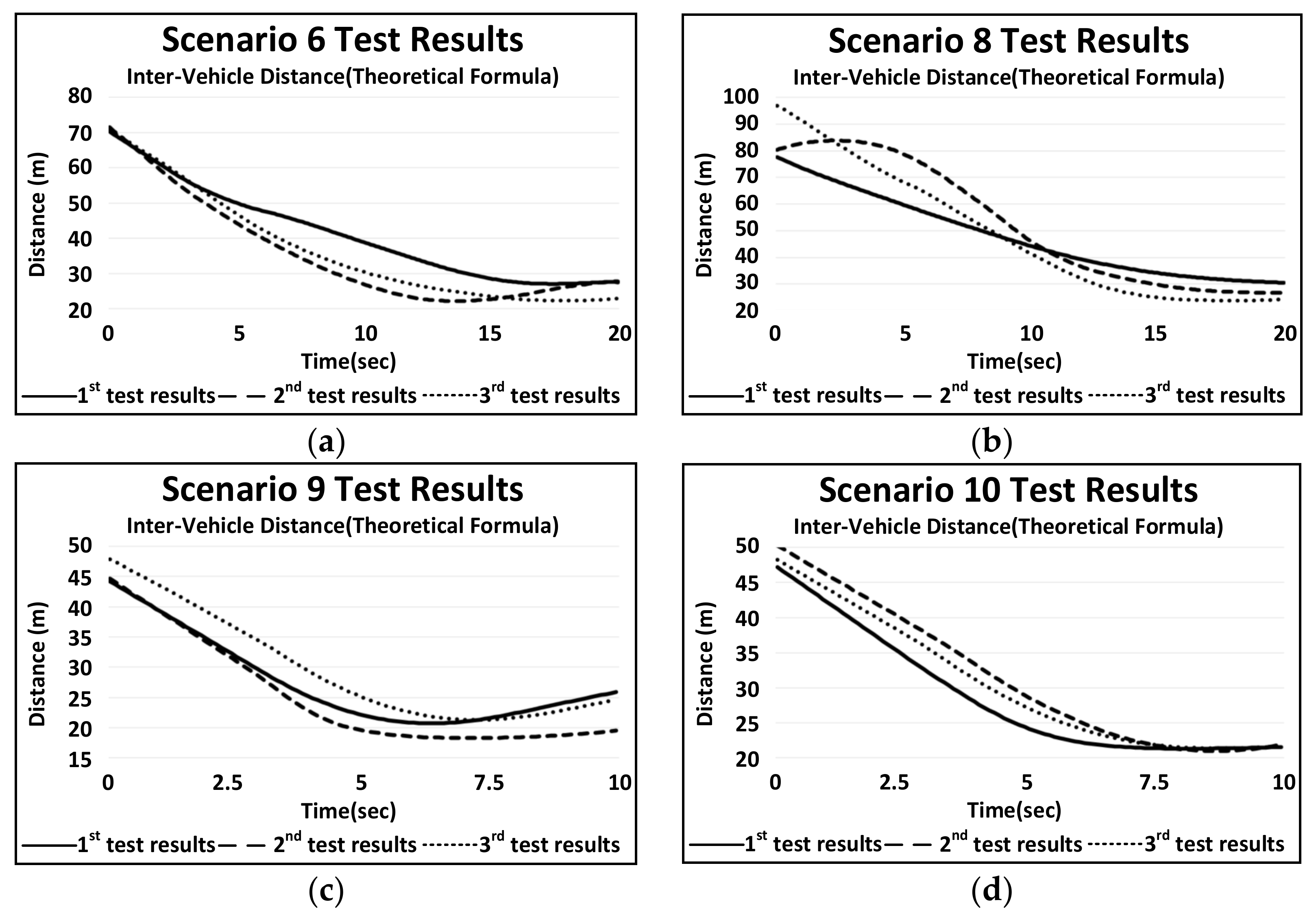

4.1. Results of the Actual Vehicle Test Using Measuring Instruments

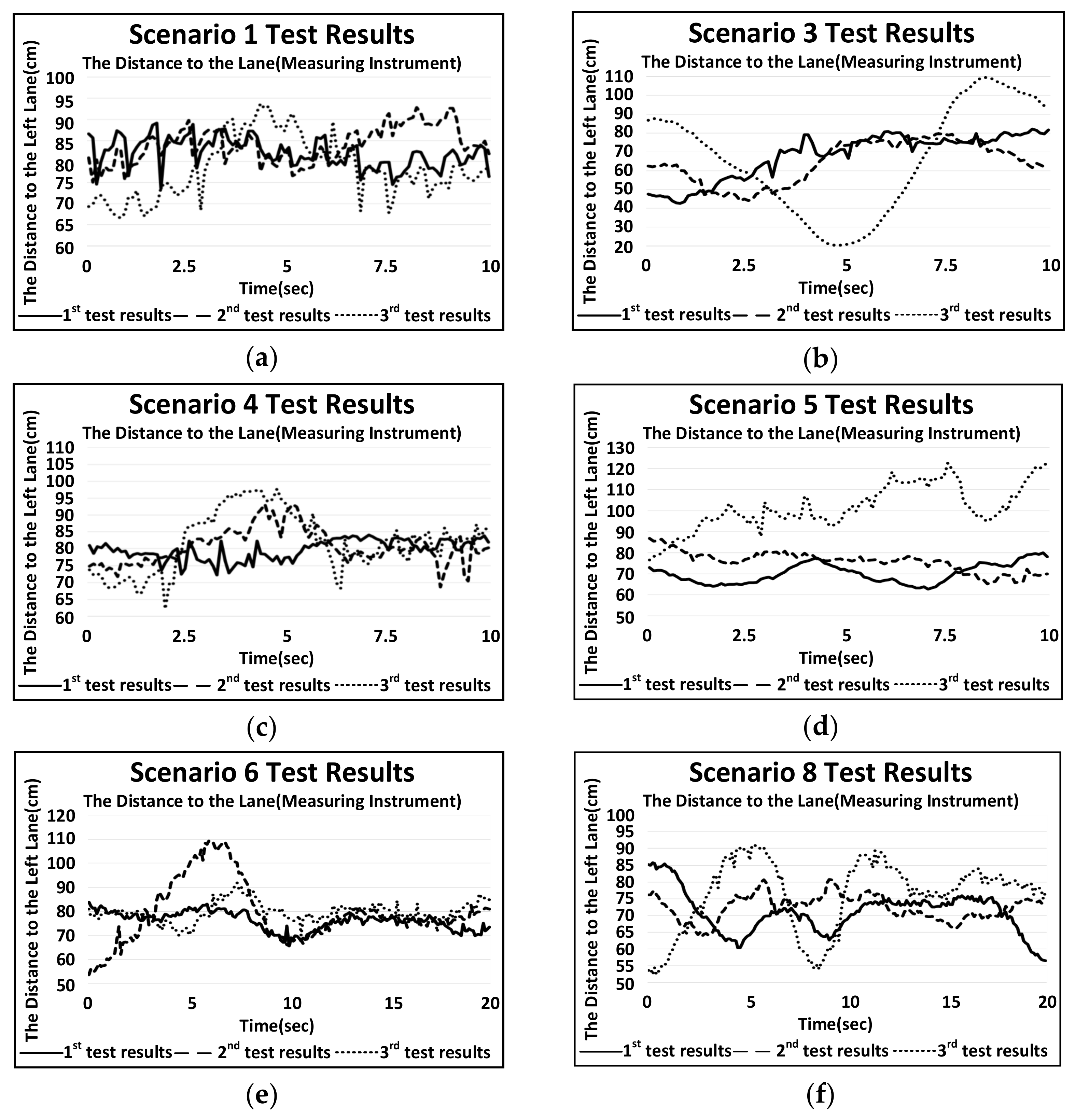

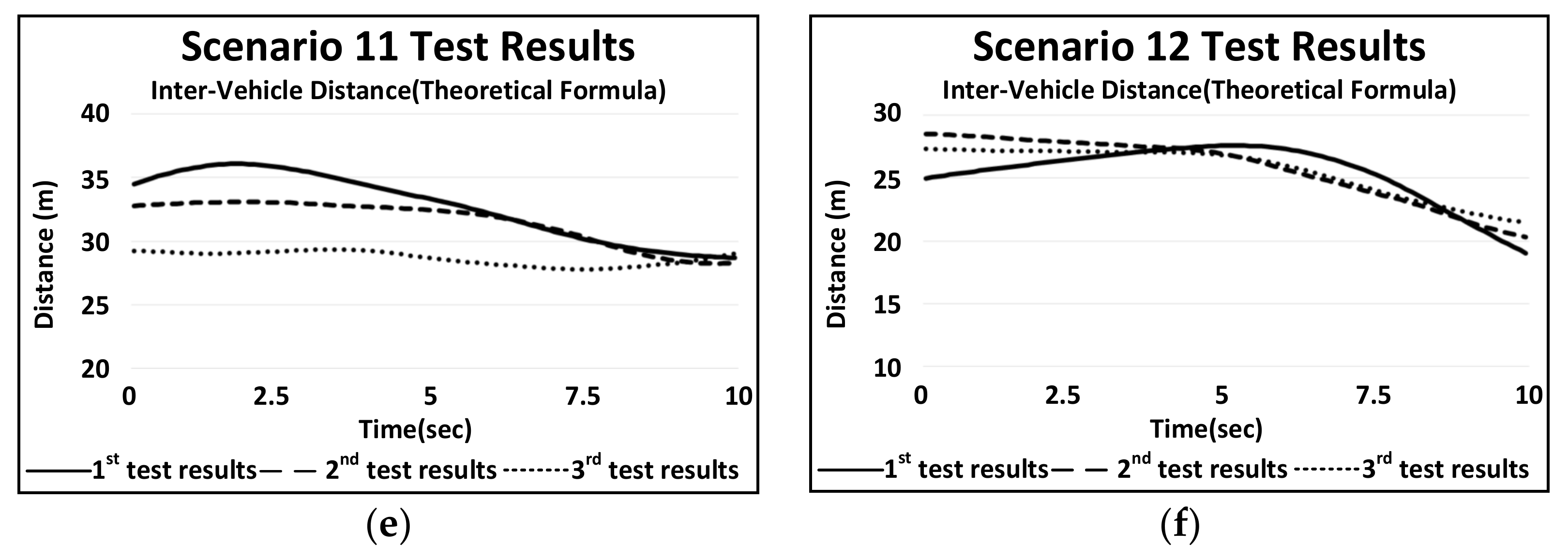

4.2. Results of the Actual Vehicle Test Using Theoretical Formulas

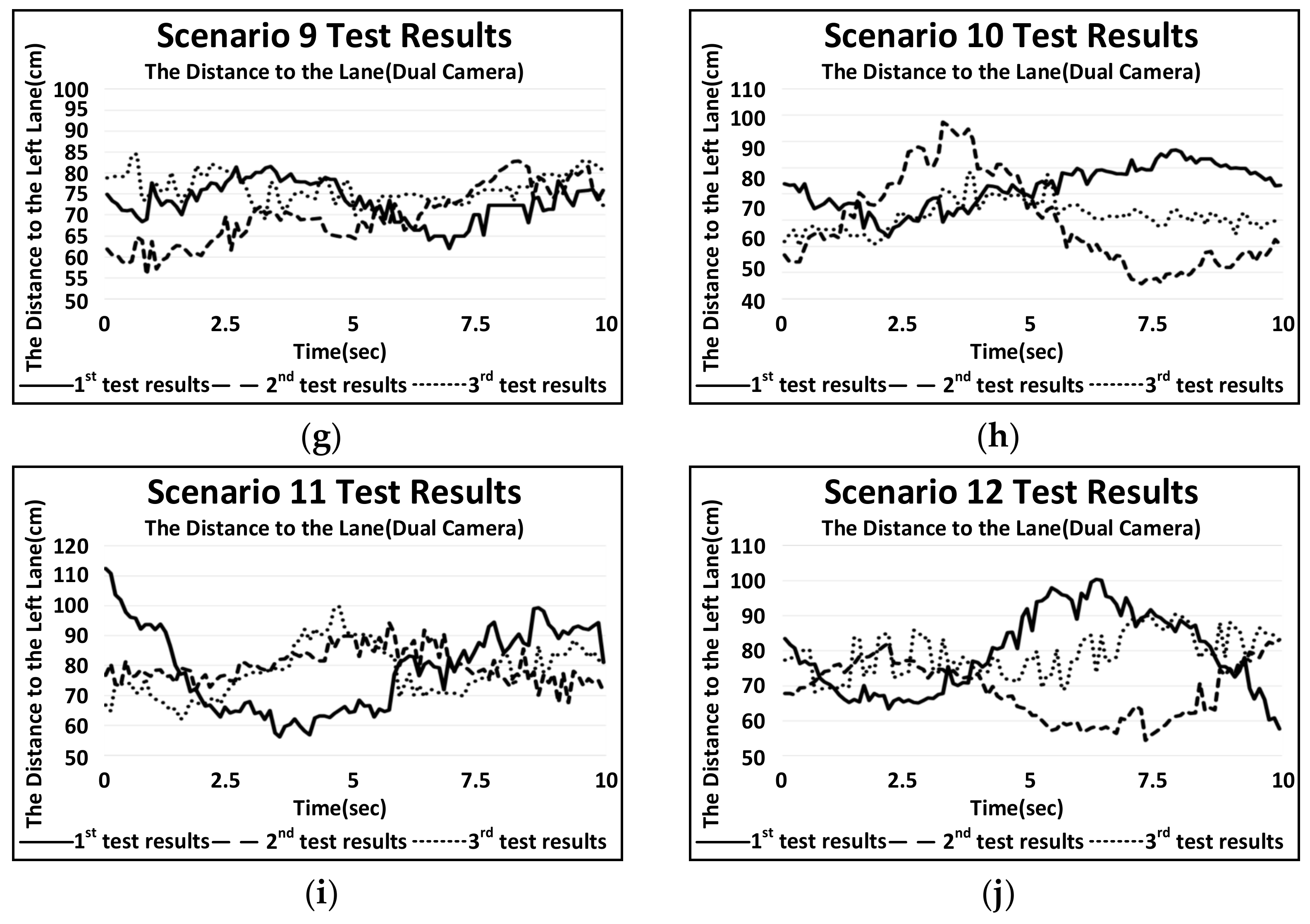

4.3. Results of the Actual Vehicle Test Using Dual Cameras

4.4. Comparative Analysis of the Results of the Actual Vehicle Test

4.4.1. Comparative Analysis of Inter-Vehicle Distance Results

4.4.2. Comparative Analysis of the Results of the Distance to the Lane

5. Conclusions

- (1) A new theoretical formula applicable to the safety evaluation of HDA was proposed. The evaluation uses a longitudinal theoretical formula, newly proposed here, that calculates the inter-vehicle distance, and a lateral theoretical formula, cited from previous studies, that calculates the distance to the lane using dual cameras.

- (2) The actual vehicle test was conducted on the Dongdaegu TG–Gyeongsan TG section of the Gyeongbu Expressway in the Republic of Korea. To secure the objectivity of the results, each test was repeated three times with the same number of occupants, the same equipment, and the same location. A GENESIS G90 from company H** was used as the subject vehicle.

- (3) HDA test and evaluation scenarios were selected according to the characteristics of each highway section, and the results were analyzed. The analysis compared the proposed longitudinal theoretical formula and the cited lateral theoretical formula against the values measured by the measurement equipment during the actual vehicle test on the highway.

- (4) The test results were compared scenario by scenario. For the relative distance to the lead vehicle, most maximum error rates were within 10% and the average error rates were mostly within 5%; in the second and third test cases of scenario 7, the maximum error rate momentarily reached 10% to 12%. For the distance to the lane, the maximum error rates were within 10% and the average error rates were within 6%.

- (5) Compared with the precision measuring instrument in the actual vehicle test, the theoretical formulas yielded longitudinal error rates from a minimum of 0.005% to a maximum of 9.798%, and lateral error rates from a minimum of 0.007% to a maximum of 9.997%. With the proposed theoretical formulas, safety trends of an HDA system can be identified before development, and its reliability can be predicted in environments where actual testing of the system is impossible.
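Items (4) and (5) report error rates of the formula output relative to the precision measuring instrument. The exact definition is not restated in this section, so the following Python sketch assumes the common relative-error form, |estimate - reference| / |reference| × 100, and computes the minimum, maximum, and average per test case, mirroring the columns of the result tables. The function names and sample distances are hypothetical, not data from the study.

```python
from statistics import mean


def error_rate_percent(estimated: float, measured: float) -> float:
    """Relative error of an estimate against the reference instrument, in percent.

    Assumed definition: |estimated - measured| / |measured| * 100.
    """
    if measured == 0:
        raise ValueError("reference measurement must be non-zero")
    return abs(estimated - measured) / abs(measured) * 100.0


def summarize_case(estimates, measurements):
    """Return (minimum, maximum, average) error rate over the samples of one test case."""
    if not estimates or len(estimates) != len(measurements):
        raise ValueError("need equally sized, non-empty sequences")
    rates = [error_rate_percent(e, m) for e, m in zip(estimates, measurements)]
    return min(rates), max(rates), mean(rates)


# Hypothetical samples (not data from the study): formula output vs. instrument reading, in metres.
formula_m = [30.2, 29.5, 31.0, 28.0]
instrument_m = [30.0, 30.0, 30.0, 28.0]
low, high, avg = summarize_case(formula_m, instrument_m)
print(f"min {low:.3f}%  max {high:.3f}%  avg {avg:.3f}%")
```

Applied to every sample of a run, the three returned values correspond to one row of the minimum/maximum/average result tables.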

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Marsden, G.; McDonald, M.; Brackstone, M. Towards an understanding of adaptive cruise control. Transp. Res. Part C Emerg. Technol. 2001, 9, 33–51.

- Kim, S.; Tomizuka, M.; Cheng, K. Smooth motion control of the adaptive cruise control system by a virtual lead vehicle. Int. J. Automot. Technol. 2012, 13, 77–85.

- Kim, N.; Lee, J.; Soh, M.; Kwon, J.; Hong, T.; Park, K. Improvement of Longitudinal Safety System’s Performance on Near Cut-In Situation by Using the V2V. Korean Soc. Automot. Eng. 2013, 747–755.

- Moon, C.; Lee, Y.; Jeong, C.; Choi, S. Investigation of objective parameters for acceptance evaluation of automatic lane change system. Int. J. Automot. Technol. 2018, 19, 179–190.

- Ulbrich, S.; Maurer, M. Towards Tactical Lane Change Behavior Planning for Automated Vehicles. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 989–995.

- Butakov, V.; Ioannou, P. Personalized Driver/Vehicle Lane Change Models for ADAS. IEEE Trans. Veh. Technol. 2015, 64, 4422–4431.

- SAE International. SAE J3016: Levels of Driving Automation. Available online: https://www.sae.org/news/2019/01/sae-updates-j3016-automated-driving-graphic (accessed on 1 October 2021).

- Bae, G.H.; Kim, B.J.; Lee, S.B. A study on evaluation method of the HDA test in domestic road environment. J. Auto-Veh. Saf. Assoc. 2019, 11, 39–49.

- Bae, G.H.; Lee, S.B. A Study on the Evaluation Method of Highway Driving Assist System Using Monocular Camera. Appl. Sci. 2020, 10, 6443.

- Kim, B.J.; Lee, S.B. A Study on Evaluation Method of the Adaptive Cruise Control. J. Drive Control 2017, 14, 8–17.

- Kim, B.J.; Lee, S.B. A Study on Evaluation Method of ACC Test Considering Domestic Road Environment. J. Korean Auto-Veh. Saf. Assoc. 2017, 9, 38–47.

- Bae, G.H.; Lee, S.B. A Study on the Test Evaluation Method of LKAS Using a Monocular Camera. J. Auto-Veh. Saf. Assoc. 2020, 12, 34–42.

- Hwang, J.; Huh, K.; Na, H.; Jung, H.; Kang, H.; Yoon, P. Evaluation of Lane Keeping Assistance Controllers in HIL Simulations. In Proceedings of the 17th IFAC World Congress, Seoul, Korea, 6–11 July 2008.

- Lee, K.; Li, S.E.; Kum, D. Synthesis of Robust Lane Keeping Systems: Impact of Controller and Design Parameters on System Performance. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3129–3141.

- Bae, G.H.; Lee, S.B. A Study on Calculation Method of Distance with Forward Vehicle Using Single-Camera. Proc. Symp. Korean Inst. Commun. Inf. Sci. 2019, 256–257.

- Lee, S.H.; Kim, B.J.; Lee, S.B. Study on Image Correction and Optimization of Mounting Positions of Dual Cameras for Vehicle Test. Energies 2021, 14, 2857.

- Kalaki, A.S.; Safabakhsh, R. Current and adjacent lanes detection for an autonomous vehicle to facilitate obstacle avoidance using a monocular camera. In Proceedings of the 2014 Iranian Conference on Intelligent Systems (ICIS), Bam, Iran, 4–6 February 2014.

- Jiangwei, C.; Lisheng, J.; Lie, G.; Rongben, W. Study on method of detecting preceding vehicle based on monocular camera. In Proceedings of the 2004 IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004.

- Ravi, K.S.; Eshed, O.B.; Jinhee, L.; Hohyon, S.; Mohan, M.T. On-road vehicle detection with monocular camera for embedded realization: Robust algorithms and evaluations. In Proceedings of the 2014 International SoC Design Conference (ISOCC), Jeju, Korea, 3–6 November 2014.

- Shu, Y.; Tan, Z. Vision based lane detection in autonomous vehicle. In Proceedings of the Fifth World Congress on Intelligent Control and Automation, Hangzhou, China, 15–19 June 2004.

- Koo, S.M. Forward Collision Warning (FCW) System with Single Camera using Deep Learning and OBD-2. Master’s Thesis, Dankook University, Seoul, Korea, 2018.

- Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H.K. Fusion of 3D LIDAR and Camera Data for Object Detection in Autonomous Vehicle Applications. IEEE Sens. J. 2020, 20, 4901–4913.

- Yamaguti, N.; OE, S.; Terada, K. A method of distance measurement by using monocular camera. In Proceedings of the 36th SICE Annual Conference, International Session Papers, Tokushima, Japan, 29–31 July 1997.

- Song, Y.H. Real-time Vehicle Path Prediction based on Deep Learning Using Monocular Camera. Master’s Thesis, Hanyang University, Seoul, Korea, 2020.

- Heo, S.M. Distance and Speed Measurements of Moving Object Using Difference Image in Stereo Vision System. Master’s Thesis, Kwangwoon University, Seoul, Korea, 2002.

- Abduladhem, A.A.; Hussein, A.H. Distance estimation and vehicle position detection based on monocular camera. In Proceedings of the 2016 Al-Sadeq International Conference on Multidisciplinary in IT and Communication Science and Applications, Baghdad, Iraq, 9–10 May 2016.

- Korea Legislation Research Institute. Road Act, Article 47 (Rules on Structure and Facilities Standards of Roads). Available online: http://www.law.go.kr/lsInfoP.do?lsiSeq=215507&efYd=20200306#0000 (accessed on 1 October 2021).

| Height (cm) | Baseline (cm) | Angle (°) | Average Error Rate (%) | Maximum Error Rate (%) |
|---|---|---|---|---|
| 30 | 10 | 3 | 13.28 | 48.62 |
| 30 | 10 | 7 | 14.07 | 45.36 |
| 30 | 10 | 12 | 14.15 | 53.04 |
| 30 | 20 | 3 | 6.89 | 23.66 |
| 30 | 20 | 7 | 9.64 | 27.3 |
| 30 | 20 | 12 | 4.14 | 10.53 |
| 30 | 30 | 3 | 10.34 | 35.83 |
| 30 | 30 | 7 | 9.5 | 33.35 |
| 30 | 30 | 12 | 8.34 | 33.99 |
| 40 | 10 | 3 | 5.55 | 13.77 |
| 40 | 10 | 7 | 7.84 | 15.38 |
| 40 | 10 | 12 | 8.18 | 26.9 |
| 40 | 20 | 3 | 4.93 | 15.19 |
| 40 | 20 | 7 | 2.38 | 6.17 |
| 40 | 20 | 12 | 5.49 | 19.52 |
| 40 | 30 | 3 | 3.34 | 13.9 |
| 40 | 30 | 7 | 1.88 | 5.69 |
| 40 | 30 | 12 | 0.86 | 2.15 |
| 50 | 10 | 3 | 10.62 | 32.49 |
| 50 | 10 | 7 | 3.77 | 10.94 |
| 50 | 10 | 12 | 8.19 | 27.67 |
| 50 | 20 | 3 | 2.47 | 5.81 |
| 50 | 20 | 7 | 2.45 | 5.84 |
| 50 | 20 | 12 | 5.23 | 18.39 |
| 50 | 30 | 3 | 2.15 | 4.65 |
| 50 | 30 | 7 | 2.45 | 8 |
| 50 | 30 | 12 | 1.32 | 2.34 |
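Reading the mounting-position table programmatically makes the selection criterion explicit. The Python sketch below ranks the 27 configurations by average error rate and breaks ties by maximum error rate; this ranking rule and the names used are assumptions for illustration, not necessarily the criterion applied in the study.

```python
# (height_cm, baseline_cm, angle_deg): (average_error_pct, maximum_error_pct), transcribed from the table above.
MOUNTING_RESULTS = {
    (30, 10, 3): (13.28, 48.62), (30, 10, 7): (14.07, 45.36), (30, 10, 12): (14.15, 53.04),
    (30, 20, 3): (6.89, 23.66), (30, 20, 7): (9.64, 27.30), (30, 20, 12): (4.14, 10.53),
    (30, 30, 3): (10.34, 35.83), (30, 30, 7): (9.50, 33.35), (30, 30, 12): (8.34, 33.99),
    (40, 10, 3): (5.55, 13.77), (40, 10, 7): (7.84, 15.38), (40, 10, 12): (8.18, 26.90),
    (40, 20, 3): (4.93, 15.19), (40, 20, 7): (2.38, 6.17), (40, 20, 12): (5.49, 19.52),
    (40, 30, 3): (3.34, 13.90), (40, 30, 7): (1.88, 5.69), (40, 30, 12): (0.86, 2.15),
    (50, 10, 3): (10.62, 32.49), (50, 10, 7): (3.77, 10.94), (50, 10, 12): (8.19, 27.67),
    (50, 20, 3): (2.47, 5.81), (50, 20, 7): (2.45, 5.84), (50, 20, 12): (5.23, 18.39),
    (50, 30, 3): (2.15, 4.65), (50, 30, 7): (2.45, 8.00), (50, 30, 12): (1.32, 2.34),
}


def best_configuration(results):
    """Return the key with the lowest (average, maximum) error pair, compared lexicographically."""
    return min(results, key=lambda cfg: results[cfg])


cfg = best_configuration(MOUNTING_RESULTS)
print(cfg, MOUNTING_RESULTS[cfg])  # (40, 30, 12) (0.86, 2.15)
```

With these values the same configuration (40 cm height, 30 cm baseline, 12°) also has the lowest maximum error, so the tie-break rule does not change the outcome here.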

| Spec. (m) | Width | Height | Length | Wheelbase | Minimum Turning Radius |
|---|---|---|---|---|---|
| Semi-trailer | 2.5 | 4.0 | 16.7 | Front: 4.2, Rear: 9.0 | 12.0 |

| Classification According to Function of Road | Road Type | Design Speed, Local, Flat (km/h) | Design Speed, Local, Hill (km/h) | Design Speed, Local, Mountain (km/h) | Design Speed, City (km/h) |
|---|---|---|---|---|---|
| Main arterial | Highway | 120 | 110 | 100 | 100 |

| Design Speed (km/h) | Minimum Lane Width, Local (m) | Minimum Lane Width, City (m) | Minimum Lane Width, Compact Roadway (m) |
|---|---|---|---|
| Over 100 | 3.50 | 3.50 | 3.25 |

| Design Speed (km/h) | Minimum Curve Radius at 6% Superelevation (m) | Minimum Curve Radius at 7% Superelevation (m) | Minimum Curve Radius at 8% Superelevation (m) |
|---|---|---|---|
| 120 | 710 | 670 | 630 |
| 110 | 600 | 560 | 530 |
| 100 | 460 | 440 | 420 |
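The tabulated minimum radii are consistent with the usual point-mass balance between superelevation e, side friction f, and centripetal demand, R = V²/(127(e + f)), with V in km/h and R in m. In the Python sketch below, the side-friction coefficients (0.10 at 120 and 110 km/h, 0.11 at 100 km/h) are assumptions chosen because they reproduce every tabulated radius to within 10 m; they are not quoted from the Road Act, whose table remains authoritative.

```python
# Side-friction coefficients per design speed: ASSUMED values, not given in this section.
ASSUMED_SIDE_FRICTION = {120: 0.10, 110: 0.10, 100: 0.11}
SUPERELEVATIONS = (0.06, 0.07, 0.08)


def min_curve_radius(speed_kmh: float, superelevation: float, side_friction: float) -> float:
    """Point-mass minimum radius R = V^2 / (127 (e + f)), with V in km/h and R in m.

    The constant 127 is approximately 3.6**2 * 9.81 (km/h to m/s conversion times gravity).
    """
    return speed_kmh ** 2 / (127.0 * (superelevation + side_friction))


for v, f in ASSUMED_SIDE_FRICTION.items():
    radii = [min_curve_radius(v, e, f) for e in SUPERELEVATIONS]
    print(v, [round(r) for r in radii])
```

The computed radii are then rounded in the design standard, which is why they differ from the table by a few metres.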

| Design Speed (km/h) | Maximum Longitudinal Slope, Highway, Flat (%) | Maximum Longitudinal Slope, Highway, Hill (%) |
|---|---|---|
| 120 | 3 | 4 |
| 110 | 3 | 5 |
| 100 | 3 | 5 |

| Scenario No. | Lead Vehicle Velocity (km/h) | Subject Vehicle Velocity (km/h) | Road Curvature (m) | Note |
|---|---|---|---|---|
| 1 | No lead vehicle | 90 | 0 | Straight |
| 2 | No lead vehicle | 90 | 350 | Ramp |
| 3 | No lead vehicle | 90 | 750 | Curve |
| 4 | 70 | 90 | 0 | Side lane |
| 5 | 70 | 90 | 750 | Curve |
| 6 | 70 | 90 | 0 | Main lane, Straight |
| 7 | 70 | 90 | 350 | Main lane, Ramp |
| 8 | 70 | 90 | 750 | Main lane, Curve |
| 9 | 70 | 90 | 0 | Cut-in, Straight |
| 10 | 70 | 90 | 750 | Cut-in, Curve |
| 11 | 70 | 90 | 0 | Cut-out, Straight |
| 12 | 70 | 90 | 750 | Cut-out, Curve |
| 13 | No lead vehicle | 90 | 0 | Tollgate |
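For a sense of the dynamic load each scenario geometry imposes, the steady-state lateral acceleration a = v²/R and the closing speed on a 70 km/h lead vehicle can be computed directly from the scenario table. This Python sketch is an illustrative calculation, not part of the study's method, and it assumes that the "Road Curvature (m)" column gives the radius of curvature, with 0 denoting a straight road.

```python
def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def lateral_acceleration(speed_kmh: float, radius_m: float) -> float:
    """Steady-state lateral acceleration v^2 / R in m/s^2; a radius of 0 is treated as a straight road."""
    if radius_m == 0:
        return 0.0
    v = kmh_to_ms(speed_kmh)
    return v * v / radius_m


SUBJECT_SPEED_KMH = 90  # subject-vehicle speed in every scenario
LEAD_SPEED_KMH = 70     # lead-vehicle speed where a lead vehicle is present

for radius in (0, 350, 750):
    print(radius, round(lateral_acceleration(SUBJECT_SPEED_KMH, radius), 3))

# Closing speed of the subject vehicle on the lead vehicle, in m/s
print(round(kmh_to_ms(SUBJECT_SPEED_KMH - LEAD_SPEED_KMH), 3))
```

At 90 km/h this gives roughly 1.79 m/s² on the 350 m ramp and 0.83 m/s² on the 750 m curve, with a closing speed of about 5.56 m/s.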

| Name | Specification |
|---|---|
| RT-3002 | Single antenna model; velocity accuracy: 0.05 km/h RMS; roll, pitch: 0.03°; heading: 0.1°; GPS accuracy: 2 cm RMS |
| RT-range | Operational temperature: 10 to 50 °C; lateral distance to lane: ±30 m, 0.02 m RMS; lateral velocity to lane: ±20 m/s, 0.02 m/s RMS; lateral acceleration to lane: ±30 m, 0.1 m/s² RMS |
| DAQ | Interface data rate: up to 1 Mbit/s; special protocols: OBD-II, J1939, CAN out; sampling rate: >10 kHz per channel, software selectable |
| Camera | Height: 43.3 mm; width: 94 mm; depth: 71 mm; field of view: 78°; horizontal field of view: 70.42°; vertical field of view: 43.3°; image resolution: 1920 × 1080 pixels; focal length: 3.67 mm |
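The camera specification lists a 3.67 mm focal length, but converting it to pixels would require the sensor's pixel pitch, which is not given. A minimal Python sketch can instead derive the focal length in pixels from the 1920-pixel image width and the 70.42° horizontal field of view under an ideal pinhole model, and then apply the textbook rectified-stereo relation Z = f·B/d. This is a generic illustration, not the study's lateral formula from Section 2.3, which is not reproduced in this section; the 0.30 m baseline and the 40-pixel disparity are assumed values.

```python
import math

IMAGE_WIDTH_PX = 1920  # image resolution from the camera specification
HFOV_DEG = 70.42       # horizontal field of view from the camera specification


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Ideal pinhole focal length in pixels: f = (W / 2) / tan(HFOV / 2)."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Rectified-stereo depth Z = f * B / d (textbook relation, not the study's formula)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


f_px = focal_length_px(IMAGE_WIDTH_PX, HFOV_DEG)
# Hypothetical: 0.30 m baseline between the two cameras, 40 px disparity on a lane marking
print(round(f_px, 1), round(stereo_depth_m(f_px, 0.30, 40.0), 2))
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters more for distant points, which is one reason a wider baseline tends to help at range.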

| Curvature | Condition | Friction Coefficient |
|---|---|---|
| 0.750 m | Flat, dry, clean, asphalt | 1.079 |

| Scenario | Case | Minimum Error Rate (%) | Maximum Error Rate (%) | Average Error Rate (%) |
|---|---|---|---|---|
| 6 | 1 | 0.025 | 9.135 | 2.481 |
| 6 | 2 | 0.014 | 9.707 | 3.568 |
| 6 | 3 | 0.005 | 9.665 | 3.004 |
| 8 | 1 | 0.028 | 9.487 | 3.275 |
| 8 | 2 | 0.074 | 9.798 | 3.493 |
| 8 | 3 | 0.013 | 9.351 | 3.228 |
| 9 | 1 | 0.049 | 9.453 | 2.935 |
| 9 | 2 | 0.740 | 8.418 | 4.487 |
| 9 | 3 | 0.063 | 8.933 | 1.987 |
| 10 | 1 | 0.351 | 9.367 | 4.162 |
| 10 | 2 | 0.024 | 9.471 | 3.484 |
| 10 | 3 | 0.164 | 8.213 | 4.017 |
| 11 | 1 | 0.009 | 9.128 | 2.910 |
| 11 | 2 | 0.084 | 8.969 | 5.128 |
| 11 | 3 | 0.013 | 9.484 | 4.040 |
| 12 | 1 | 0.205 | 9.391 | 4.222 |
| 12 | 2 | 0.806 | 9.074 | 6.720 |
| 12 | 3 | 0.003 | 8.794 | 3.874 |

| Scenario | Case | Minimum Error Rate (%) | Maximum Error Rate (%) | Average Error Rate (%) |
|---|---|---|---|---|
| 1 | 1 | 0.053 | 9.970 | 4.250 |
| 1 | 2 | 0.013 | 9.414 | 3.845 |
| 1 | 3 | 0.162 | 9.939 | 4.469 |
| 3 | 1 | 0.089 | 9.939 | 5.007 |
| 3 | 2 | 0.214 | 9.946 | 5.455 |
| 3 | 3 | 0.012 | 9.979 | 5.680 |
| 4 | 1 | 0.022 | 9.520 | 3.622 |
| 4 | 2 | 0.020 | 9.948 | 4.299 |
| 4 | 3 | 0.044 | 9.976 | 4.544 |
| 5 | 1 | 0.037 | 9.872 | 4.960 |
| 5 | 2 | 0.024 | 9.861 | 4.880 |
| 5 | 3 | 0.044 | 9.919 | 5.014 |
| 6 | 1 | 0.035 | 9.943 | 4.576 |
| 6 | 2 | 0.123 | 9.821 | 4.532 |
| 6 | 3 | 0.016 | 9.751 | 3.881 |
| 8 | 1 | 0.046 | 9.930 | 3.870 |
| 8 | 2 | 0.005 | 9.993 | 3.359 |
| 8 | 3 | 0.123 | 9.951 | 4.695 |
| 9 | 1 | 0.045 | 9.519 | 3.575 |
| 9 | 2 | 0.022 | 9.397 | 3.610 |
| 9 | 3 | 0.022 | 9.885 | 3.458 |
| 10 | 1 | 0.229 | 9.975 | 5.912 |
| 10 | 2 | 0.011 | 9.883 | 4.579 |
| 10 | 3 | 0.138 | 9.933 | 4.701 |
| 11 | 1 | 0.120 | 9.781 | 4.063 |
| 11 | 2 | 0.035 | 9.347 | 3.615 |
| 11 | 3 | 0.007 | 9.929 | 4.832 |
| 12 | 1 | 0.271 | 9.966 | 4.727 |
| 12 | 2 | 0.134 | 9.949 | 5.832 |
| 12 | 3 | 0.045 | 9.997 | 5.481 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, B.-J.; Lee, S.-B. A Study on Highway Driving Assist Evaluation Method Using the Theoretical Formula and Dual Cameras. Appl. Sci. 2021, 11, 11903. https://doi.org/10.3390/app112411903
