Hybrid Spine Simulator Prototype for X-ray Free Pedicle Screws Fixation Training

Abstract

1. Introduction

- A “Virtual X-Ray Visualization”, simulating X-ray images of the anatomy to train for the uniplanar fluoroscopic targeting of pedicles without any exposure to harmful radiation.

- An “AR Visualization”, allowing the observation of the torso with overlaid virtual content to assist in the implantation of the screws at the proper anatomical targets (vertebral pedicles).

2. Materials and Methods

2.1. Hardware Components

2.2. Software Architecture

2.2.1. ArUco 3D Tracking

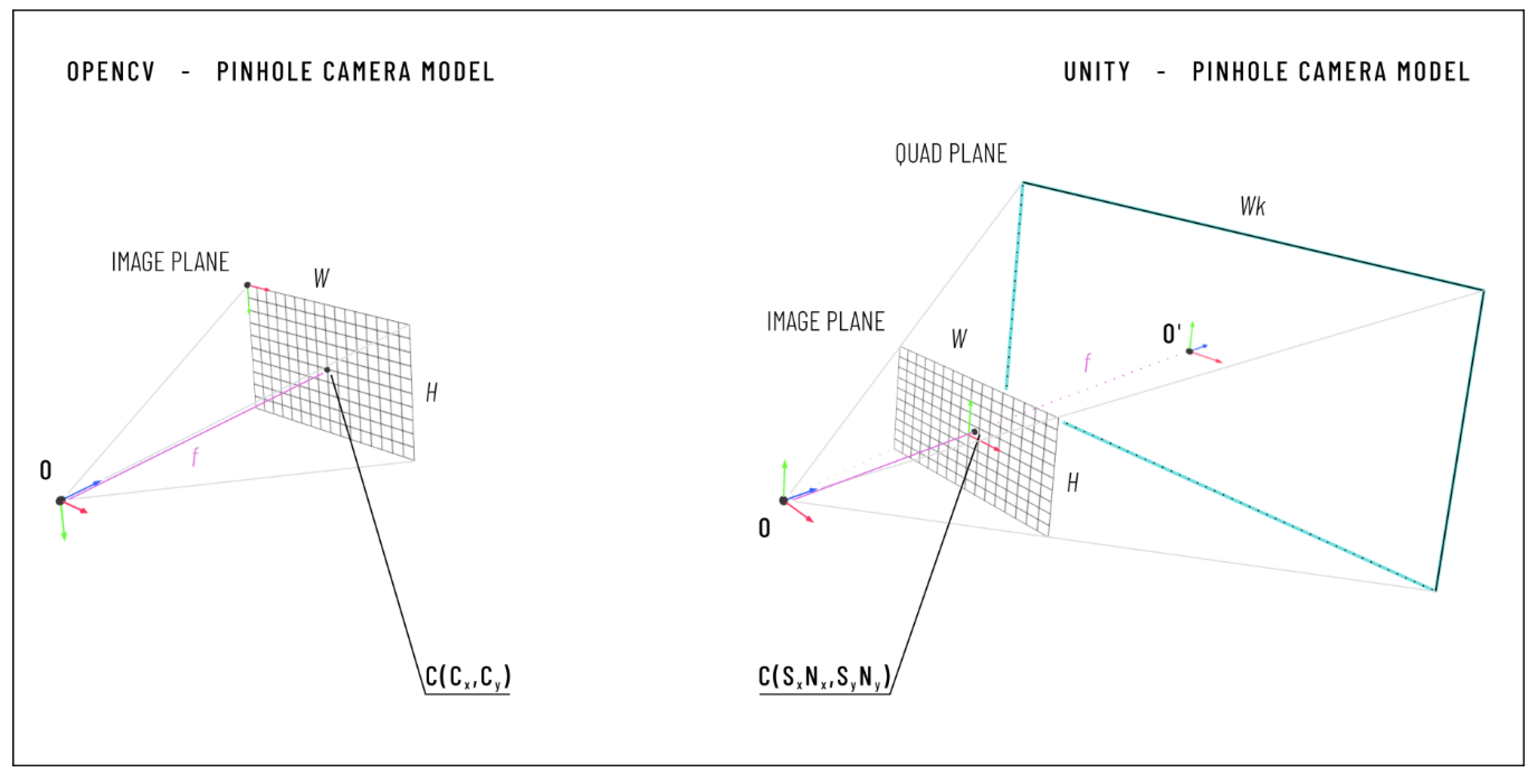

2.2.2. Camera Calibration

- the horizontal and vertical focal length expressed in pixels (fx, fy);

- the coordinates of the principal point in pixels (cx, cy);

- two radial distortion coefficients (K1 and K2); and

- the input image size in pixels (Nx, Ny).
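As an illustration of how the parameters listed above fit together, the following minimal sketch builds a pinhole intrinsic matrix and projects a 3D point to pixel coordinates. It assumes the common radial polynomial distortion model, x_d = x(1 + K1 r² + K2 r⁴), which the text does not spell out. The function names and the numeric values in the usage note are hypothetical.

```python
import numpy as np

def camera_matrix(fx, fy, cx, cy):
    """Build the 3x3 pinhole intrinsic matrix from the focal lengths
    (fx, fy) and the principal point (cx, cy), all in pixels."""
    return np.array([[fx, 0.0, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])

def project_point(p_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point given in the camera frame (Z > 0) to pixels.

    Assumption: radial distortion is applied to the normalized image
    coordinates as a polynomial in r^2 with coefficients k1 and k2.
    """
    x = p_cam[0] / p_cam[2]          # normalized image coordinates
    y = p_cam[1] / p_cam[2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    u = fx * x * radial + cx
    v = fy * y * radial + cy
    return np.array([u, v])

def in_image(uv, nx, ny):
    """Check that a pixel lies inside an image of size (nx, ny)."""
    return bool(0 <= uv[0] < nx and 0 <= uv[1] < ny)
```

For example, with the hypothetical values fx = fy = 800, cx = 320, cy = 240 and no distortion, a point on the optical axis projects onto the principal point, and `in_image` confirms that it falls inside a 640 × 480 image.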

1. The calibration cube is positioned inside the field of view (FOV) of both cameras, and CamLat and CamTop simultaneously acquire the diamond markers.

2. ArUco libraries are used to estimate the pose of each diamond marker in the reference system of the corresponding tracking camera. This yields the position vector from the origin of the CamTop reference system to the origin of the DiamondTop reference system, and the position vector from the origin of the CamLat reference system to the origin of the DiamondLat reference system (see Figure 2b).

3. The position of the cube center is estimated in each camera reference system from the position of the corresponding diamond marker, the orientation of its Z-axis expressed in the tracking camera reference system, and the size (l) of the ArUco Diamond marker, according to Equations (1) and (2). The result is the position vector from the origin of each camera reference system (CamTop and CamLat) to the calibration cube center.

4. Steps 1–3 are repeated at least three times, moving the cube within the camera FOV, to collect two clouds of n positions (n ≥ 3).

5. A rigid point cloud registration algorithm based on singular value decomposition (SVD) is used to calculate the transformation matrix (TopTLat in Figure 2b) between the reference systems of the two cameras from the two collected clouds, i.e., the n positions of the center of the calibration cube expressed in each camera reference system.
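The calibration steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: `cube_center` assumes that the marker Z-axis points out of the cube face and that the cube center lies l/2 behind the marker origin along that axis, while `rigid_registration` uses the standard SVD-based (Kabsch) least-squares solution. Both function names are hypothetical.

```python
import numpy as np

def cube_center(p_marker, z_axis, l):
    """Estimate the cube center in the camera frame.

    Assumption: the marker Z-axis points outward from the cube face,
    so the center is l/2 behind the marker origin along that axis.
    """
    z = np.asarray(z_axis, dtype=float)
    z = z / np.linalg.norm(z)
    return np.asarray(p_marker, dtype=float) - 0.5 * l * z

def rigid_registration(src, dst):
    """Least-squares rigid transform T (4x4) such that dst ~ R @ src + t.

    src, dst: (n, 3) arrays of corresponding points, n >= 3 and not
    collinear. Uses the SVD of the cross-covariance matrix (Kabsch).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    c_src = src.mean(axis=0)
    c_dst = dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)      # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

Under these assumptions, passing the cloud of cube centers expressed in the CamLat reference system as `src` and the cloud expressed in the CamTop reference system as `dst` returns a matrix that maps CamLat coordinates to CamTop coordinates, which is the role TopTLat plays in the text.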

2.2.3. AR Visualization

2.2.4. Virtual X-ray Visualization

2.2.5. User Interface

2.3. Simulator Testing

2.3.1. Evaluation of the Camera Calibration and AR Visualization

2.3.2. Evaluation of the Total Error

3. Results

4. Discussion and Conclusions

- patient-specific modeling to improve the realism of the simulated surgical pathology;

- rapid prototyping for the manufacturing of synthetic vertebral models;

- AR to enrich the simulated surgical scenario and help the learner to carry out the procedure;

- VR functionalities for simulating X-ray images of the anatomy to train for the uniplanar fluoroscopic targeting of pedicles without any exposure to harmful radiation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Camera | Metric | Statistic | Pos 1 | Pos 2 | Pos 3 | Pos 4 | Pos 5 | Pos 6 | Pos 7 | Pos 8 | Pos 9 | Pos 10 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CamLat | TVE2D (pixel) | µ | 8.1 | 9.1 | 10.1 | 10 | 6.5 | 8.8 | 7.4 | 7.5 | 5.5 | 6.6 | 8 |
| | | σ | 7.7 | 7.8 | 9.9 | 9.2 | 5.5 | 9.2 | 8.4 | 8.5 | 6.1 | 6.4 | 4.1 |
| | TVE3D (mm) | µ | 0.7 | 0.9 | 1 | 1 | 0.6 | 0.9 | 0.8 | 0.8 | 0.6 | 0.7 | 0.8 |
| | | σ | 0.3 | 0.4 | 0.4 | 0.7 | 0.3 | 0.4 | 0.3 | 0.4 | 0.3 | 0.5 | 0.4 |
| CamTop | TVE2D (pixel) | µ | 5.9 | 6.5 | 4.9 | 4.7 | 4.5 | 6.6 | 6.6 | 3.9 | 4.1 | 6.8 | 5.5 |
| | | σ | 2.8 | 3.8 | 3.2 | 3.6 | 1.2 | 3.4 | 3.2 | 2.2 | 2.2 | 3.1 | 3 |
| | TVE3D (mm) | µ | 1.7 | 1.9 | 1.5 | 1.4 | 1.4 | 2 | 2 | 1.1 | 1.3 | 2 | 1.6 |
| | | σ | 0.8 | 1.1 | 0.9 | 1.1 | 0.4 | 1 | 1 | 0.6 | 0.7 | 0.9 | 0.4 |

| Metric | Component | Statistic | Vertebra 1 | Vertebra 2 | Vertebra 3 | Vertebra 4 | Total |
|---|---|---|---|---|---|---|---|
| Targeting Error | LL (mm) | µ | 1.9 | 2.2 | 2.1 | 2.1 | 2.1 |
| | | σ | 0.8 | 0.6 | 1.1 | 1.3 | 1.0 |
| | AP (mm) | µ | 2.5 | 1.7 | 1.6 | 1.8 | 1.9 |
| | | σ | 0.5 | 0.8 | 0.4 | 1.4 | 0.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Condino, S.; Turini, G.; Mamone, V.; Parchi, P.D.; Ferrari, V. Hybrid Spine Simulator Prototype for X-ray Free Pedicle Screws Fixation Training. Appl. Sci. 2021, 11, 1038. https://doi.org/10.3390/app11031038
