1. Inference in Service Robots

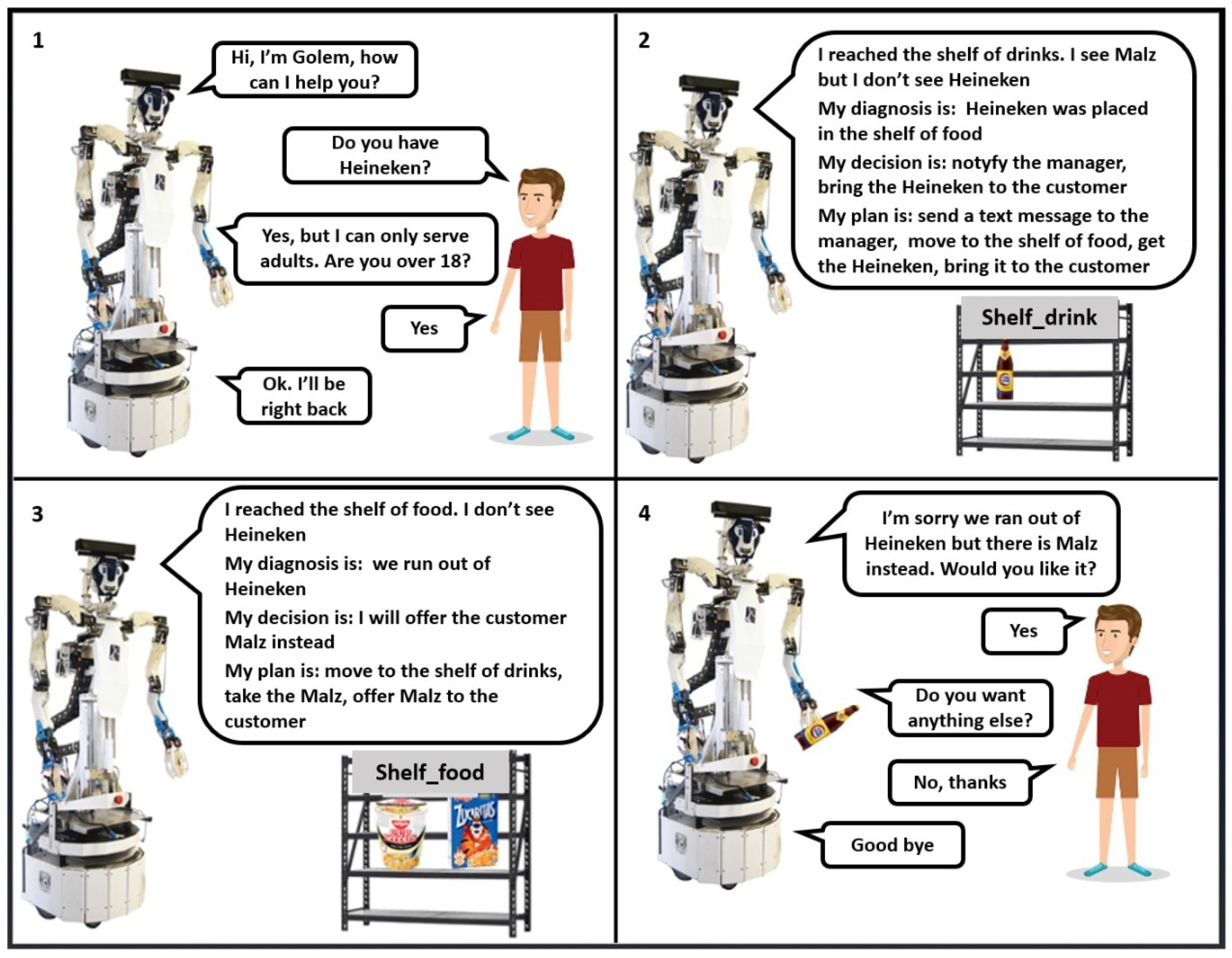

Fully autonomous service robots intended to support people in common daily tasks require competence in a wide range of faculties, such as perception, language, thought, and motor behavior, whose deployment must be highly coordinated during the execution of service tasks. A hypothetical scenario in which a general-purpose service robot performs as a supermarket assistant is shown in the storyboard in Figure 1. The overall purposes of the robot in this scenario are (i) to attend to the customer’s information and action requests or commands; (ii) to keep the supermarket in order; and (iii) to keep the manager informed about the state of the inventory in the stands. The basic behavior can be specified schematically; but if the world varies in unexpected ways, due to the spontaneous behavior of other agents or to unexpected natural events, the robot has to reason to complete the service tasks successfully.

In box 1 (top left), the robot greets the customer and offers help, and the customer asks for a beer. The command is performed as an indirect speech act in the form of a question. The robot has conceptual knowledge stored in its knowledge-base, including the obligations and preferences of the agents involved; in this case, the restriction that alcoholic beverages can only be served to people over eighteen. This prompts the robot to issue an information request to confirm the age of the customer. When the customer does so, the robot is ready to accomplish the task. The robot has a scheme to deliver the order and also knowledge about the kinds of objects in the supermarket, including their locations, so if everything is as expected, the robot can accomplish the task by executing the scheme. With this information, the robot moves to the drinks stand, where the beer should be found. However, in the present scenario, the Heineken is not there, the scheme breaks down, and the robot needs to reason in order to proceed. As reasoning is an expensive resource, it should be employed on demand.

The reasoning process involves three main kinds of inference:

An abductive inference process to the effect of diagnosing the cause of the current state of the world which differs from the expected one.

A decision-making inference to the effect of deciding what to do to produce the desired state on the basis of the diagnosis and the overall purposes of the agent in the task.

A planning inference, in which the decision made becomes the goal of a plan that has to be induced and carried out to produce the desired state of the world.

We refer to this cycle as the common sense daily-life inference cycle. The world may also change during the task, or the robot may fail to perform some actions of the plan, so the robot needs to check the state of the world along the way: if the world is as expected, the execution of the plan continues; but if something is wrong, the robot engages in the daily-life inference cycle again, repeatedly, until the task is completed or the robot gives up.
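The cycle described above can be sketched in a few lines of Python. This is only an illustrative toy, not the authors’ implementation: the function names (inference_cycle, diagnose, decide, plan, act), the dictionary-based world model, and the fixed rule "search the food shelf, then assume the item is out of stock" are all assumptions made for the example.

```python
# Hypothetical toy model of the supermarket scenario; every name is illustrative.

def inference_cycle(world, goal, diagnose, decide, plan, act, max_cycles=3):
    """Repeat diagnosis -> decision -> planning until the goal holds or we give up."""
    log = []
    for _ in range(max_cycles):
        if goal(world):
            return "completed", log
        hypothesis = diagnose(world)      # abductive step: explain the unexpected state
        decision = decide(hypothesis)     # choose what to achieve, given the diagnosis
        if decision is None:              # nothing sensible left to try
            return "gave_up", log
        log.append((hypothesis, decision))
        for step in plan(decision):       # the decision becomes the goal of a plan
            if not act(world, step):      # a failed action sends us back to diagnosis
                break
    return ("completed" if goal(world) else "gave_up"), log


def goal(world):
    return world["delivered"] is not None

def diagnose(world):
    # Assumed rule: the item was misplaced on the food shelf, else it is out of stock.
    if "food" not in world["searched"]:
        return ("misplaced", "food")
    return ("out_of_stock", None)

def decide(hypothesis):
    kind, shelf = hypothesis
    if kind == "misplaced":
        return ("heineken", shelf)
    return ("malz", "drinks")             # offer the substitute

def plan(decision):
    item, shelf = decision
    return [("take", item, shelf), ("deliver", item)]

def act(world, step):
    if step[0] == "take":
        _, item, shelf = step
        world["searched"].add(shelf)
        if item not in world["shelves"][shelf]:
            return False                  # the object is not where the plan assumed
        world["shelves"][shelf].remove(item)
        world["holding"] = item
        return True
    # "deliver": hand over whatever the robot is holding
    world["delivered"], world["holding"] = world["holding"], None
    return True


# The drinks shelf was already searched by the schematic behavior that broke down.
world = {"shelves": {"drinks": ["malz"], "food": []},
         "searched": {"drinks"}, "holding": None, "delivered": None}
status, log = inference_cycle(world, goal, diagnose, decide, plan, act)
```

In this toy run the first cycle hypothesizes a misplaced beer and fails at the food shelf, and the second cycle concludes that the beer is out of stock and delivers the Malz, mirroring boxes 2 to 4 of the scenario.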

The first instance of the daily-life inference cycle in the scenario in Figure 1 is shown in box 2. The inference is prompted by the robot’s goal-directed visual behavior, which fails to recognize the intended object. This failure is reported through the declarative speech act “I see Malz, but I don’t see the Heineken”. Then, the robot performs a diagnosis inference to determine what went wrong. This kind of reasoning proceeds from an observation to its causes, has an abductive character, and is non-monotonic. In this case, the robot hypothesizes where the beer should be and what caused this state (i.e., the Heineken was placed on the food shelf). The decision about what to do next involves two goals: informing the manager of the state of the inventory of the drinks stand through a text message (not illustrated in the figure) and delivering the beer to the customer; a plan to this effect is induced and executed.

The robot carries on with the plan but fails to find the beer in the food stand, and the daily-life inference cycle is invoked again, as shown in Figure 1, box 3. The diagnosis this time is that the supermarket ran out of beer, and the decision is to offer the customer the Malz instead. The plan consists of moving back to the drinks shelf, getting the Malz (this action is not shown in the figure), making the offer, and concluding the task, as illustrated in Figure 1, box 4.

The implementation of the scenario relies on two conceptual engines that work together. The first is a methodology and programming environment for specifying and interpreting conversational protocols, which here we call dialogue models, that carry out the schematic behavior by issuing and interpreting the relevant speech acts during the execution of the task. Dialogue models have two dual aspects and represent both the task structure and the communication structure, which proceed in tandem. The second consists of the inferential machinery that is used on demand, which is called upon within the interpretation of the input and output speech acts. In this paper, we show the conceptual model and physical machinery that support the conversational protocols and inference capabilities required to achieve the kind of service tasks illustrated in Figure 1.

The present framework is used to model two inference strategies to support this kind of task, one of which we call deliberative inference and the other conceptual inference, each yielding a form or style of interaction. In the first, the inference conforms to the standard specification of a problem space that is explored through symbolic search, in which overt diagnosis, decision-making, and planning inferences are performed. This strategy is useful in scenarios in which the robot is given a complex command and is expected to execute it successfully and robustly, dealing with open real-world environments in real time, such as the General Purpose Service Robot (GPSR) test of the RoboCup@Home competition [1]. The second conforms to situations in which the robot carries out a service task that involves close interaction in natural language with the user, the use of general and particular concepts, and the dynamic specification and interpretation of user beliefs and preferences, which better reflect the needs of socially assistive robotics [2]. Although this scenario also involves diagnosis, decision-making, and planning, these inferences are implicit and result from the interplay between the speech act protocols specified in the dialogue models and the use of the knowledge-base service; nevertheless, they achieve effects similar to those of the first scenario.

The structure of this paper is as follows: A summary of the relevant work on deliberative and conceptual inference in service robots is presented in Section 2. Next, in Section 3, we describe the conceptual model and architecture required to support the inferences deployed by the robot in the present scenario. In Section 4, the SitLog programming language for the specification of dialogue models, or interaction protocols in which inference is used on demand, is reviewed. SitLog supports the definition of robotics tasks and behaviors, which is the subject of Section 5. The non-monotonic service used to perform conceptual inferences is reviewed in Section 6. With this machinery in hand, we present the two strategies to implement the daily-life inference cycle. First, in Section 8, we show the pipeline strategy involving the definition of an explicit problem space and heuristic search. We describe a full demonstration scenario in which the robot Golem-III performs as a supermarket assistant. A previous version of this demo was performed successfully at the final of the RoboCup German Open 2018 in the @Home category. Then, in Section 9, we describe the second scenario, in which Golem-III performs as a home assistant. Finally, in Section 10, we discuss the advantages and limitations of both approaches and suggest further work to better model the common sense inferences made by people in practical tasks.

2. Related Work

Service robotics research has traditionally focused on tackling specific functionalities or carrying out tasks that integrate such functionalities (e.g., navigation [3,4], manipulation [5,6], or vision [7,8]). However, relatively few efforts have been made to articulate an integrated concept of a service robot. For instance, Rajan and Saffiotti [9] organize the field along the dimensions of three cognitive abilities (knowing, reasoning, and cooperating) versus four challenges (uncertainty, incomplete information, complexity, and hybrid reasoning).

In this section, we briefly review related work on high-level programming languages and knowledge representation and reasoning systems for service robots. High-level task programming has been widely studied in robotics, and several domain-specific languages and specialized libraries, as well as extensions to general-purpose programming languages, have been developed for this purpose. Many of these approaches are built upon finite state machines and extensions [10,11,12], although situation calculus [13,14,15] and other formalisms are also common. Notable instances of domain-specific languages are the Task Description Language [16], the Extensible Agent Behavior Specification Language (XABSL) [10], XRobots [11], and ReadyLog [13]. More recently, specialized frameworks and libraries, such as TREX [5] and SMACH [12], have become attractive alternatives for high-level task programming.

Reasoning is an essential ability for service robots to operate autonomously in realistic scenarios, robustly handling their inherent uncertainty and complexity. Most existing reasoning systems in service robots are employed for task planning and decision-making, commonly taking into account spatial and temporal information, in order to allow more adaptable and robust behavior and to facilitate development and deployment (e.g., References [17,18,19]). These systems typically exploit logical (e.g., References [20,21,22,23]) or probabilistic inference (e.g., Partially Observable Markov Decision Processes and variants [24,25,26,27,28]), or combinations of both (e.g., References [29,30,31]), and have been demonstrated on a wide variety of applications, including manipulation [25], navigation [24], collaborative [23], and interactive [32] tasks. An overview and classification of the so-called “deliberative functions”, including planning, acting, monitoring, observing, and learning, is given by Ingrand and Ghallab [33], and the need to integrate deliberative functions with planning and reasoning is emphasized by Ghallab et al. [34].

Reasoning systems rely on knowledge-bases to store and retrieve knowledge about the world, which can be obtained beforehand or dynamically by interacting with the users and/or the environment. One of the most prominent knowledge-base systems for service robots has been KnowRob (Knowledge processing for Robots) [35,36], which is implemented in Prolog and uses the Web Ontology Language (OWL) [37]. KnowRob has multiple knowledge representation and reasoning capabilities and has been successfully deployed in complex tasks, such as identifying missing items on a table [38], operating containers [39], multi-robot coordination [40], and semantic mapping [41]. Non-monotonic knowledge representation and reasoning systems are typically based on Answer Set Programming (ASP) (e.g., References [29,42,43]), and extensions of OWL-DL that allow the use of incomplete information have been defined (e.g., Reference [44]), some of which have been demonstrated in different complex tasks [42,44,45,46,47,48]. Awaad et al. [44] use OWL-DL to model preferences and functional affordances for establishing socially accepted behaviors and guidelines to improve human-robot interaction and to carry out tasks in real-world scenarios. In addition, an application of dynamic knowledge acquisition through interaction with a teacher is provided by Berlin et al. [49].

In this work, we present a general framework for deliberative and conceptual inference in service robots that is integrated within an interaction-oriented cognitive architecture and accessed through a domain-specific task programming language. The framework allows modeling the common-sense daily-life inference cycle, which consists of diagnosis, decision-making, and planning, to endow service robots with robust and flexible behavior. It also implements a light and dynamic knowledge-base system that enables non-monotonic reasoning and the specification of preferences.

3. Conceptual Model and Robotics Architecture

To address the complexity described in Section 1, we have developed an overall conceptual model of service robots [50] and the Interaction-Oriented Cognitive Architecture (IOCA) [51] for its implementation, which is illustrated in Figure 2. IOCA has a number of functional modules and an overall processing strategy that remain fixed across domains and applications, conforming to the functionality of standard cognitive architectures [52,53,54]. The conceptual, inferential, and linguistic knowledge is specific to domains and applications but is used in a regimented fashion by the system interpreters, which are also fixed. The conceptual model is inspired by Marr’s hierarchy of system levels [55] and consists of the functional, the algorithmic, and the implementation system levels. The functional level is related to what the robot does from the point of view of the human user and focuses on the definition of tools and methodologies for the declarative specification and interpretation of robotic tasks and behaviors; the algorithmic level consists of the specification of how behaviors are performed and focuses on the development of robotics algorithms and devices; finally, the implementation level focuses on system programming, operating systems, and the specification and coordination of software agents.

The present conceptual model provides a simple but powerful way to integrate symbolic AI, which is the focus of the functional system level, and sub-symbolic AI, in which perception, machine learning, and action tasks are specified and executed at the algorithmic system level. The robotics algorithms and devices that have a strong implementation component are defined and integrated at the algorithmic and implementation system levels. In this way, we share the concern of bringing AI and robotics efforts closer [9,56].

The core of IOCA is the interpreter of SitLog [57]. This is a programming language for the declarative specification and interpretation of the robot’s communication and task structure. SitLog defines two main abstract data-types: the situation and the dialogue model (DM). A situation is an information state defined in terms of the expectations of the robot, and a DM is a directed graph of situations representing the task structure. Situations can be grounded, corresponding to an actual spatial and temporal state of the robot in which concrete perceptual and motor actions are performed, or recursive, consisting of a full dialogue model, possibly including further recursive situations, which permits the definition of large abstractions of the task structure.

Dialogue models have a dual interpretation as conversational or speech act protocols that the robot performs during the execution of a task. From this perspective, expectations are the speech acts that can potentially be expressed by an interlocutor, either human or machine, in the current situation. Actions are thought of as the speech acts performed by the robot as a response to such interpretations. Events in the world that can occur in the situation are also considered expectations that give rise to intentional action by the robot. For this reason, dialogue models represent the communication or interaction structure, and they correspond to the task structure.

Knowledge and inference resources are used on demand within the conversational context. These “thinking” resources are also actions, but, unlike perceptual and motor actions, which are directed to the interaction with the world, thinking is an internal action that mediates the input and output, permitting the robot to anticipate and cope better with the external world. The communication and interaction cycle is then the center of the conceptual architecture and is oriented to interpreting and acting upon the world but also to managing the thinking resources that are embedded within the interaction, and the interpreter of SitLog coordinates the overall intentional behavior of the robot.

The present architecture supports rich and varied but schematic or stereotyped behavior. Task structure can proceed as long as at least one expectation in the current situation is met. However, schematic behavior can easily break down in dynamic worlds when none, or more than one, of the expectations is satisfied in the situation. When this happens, the interpretation context is lost, and the robot needs to recover it to continue with the task. There are two strategies to deal with such contingencies: (1) to invoke domain-independent dialogue models for task management, which here we refer to as recovery protocols, or (2) to invoke inference strategies to recover the ground. In the latter case, the robot needs to make an abductive inference or diagnosis to find out why none of its expectations were met, decide the action needed to recover the ground on the basis of this diagnosis, in conjunction with a given set of preferences or obligations, and induce and execute a plan to achieve this goal. Here, we refer to the cycle of diagnosis, decision-making, and planning as the daily life inference cycle, which is specified in Section 7. This cycle is invoked by the robot when schematic behavior cannot proceed and a recovery protocol that is likely to recover the ground is not available.
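The choice between the two recovery strategies can be made concrete with a minimal Python sketch. It is a hedged illustration only: the function select_strategy, the failure labels "none_met" and "ambiguous", and the protocol name in the usage example are hypothetical and do not come from the authors’ system. The sketch follows the break-down condition stated above, so it proceeds only when exactly one expectation is met.

```python
def select_strategy(expectations, observations, recovery_protocols):
    """Decide how to continue when the current situation is interpreted.

    expectations:       what the robot expects in the current situation
    observations:       what was actually perceived
    recovery_protocols: maps a failure kind to a recovery dialogue model (assumed)
    """
    met = [e for e in expectations if e in observations]
    if len(met) == 1:
        return ("proceed", met[0])            # schematic behavior continues
    failure = "none_met" if not met else "ambiguous"
    if failure in recovery_protocols:
        return ("recovery_protocol", recovery_protocols[failure])
    # No suitable protocol: fall back to diagnosis, decision-making, and planning.
    return ("inference_cycle", failure)


protocols = {"ambiguous": "ask_user_to_repeat"}   # hypothetical protocol name
```

For example, select_strategy(["heineken"], ["malz"], protocols) falls through to the inference cycle because nothing expected was seen and no protocol covers that failure, whereas an ambiguous observation is routed to the recovery protocol.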

The architecture includes a low-level reactive cycle involving low-level recognition and rendering of behaviors that are managed by the Autonomous Reactive System directly. This cycle is embedded within the communication or interaction cycle, which has SitLog’s interpreter as its center; the interpreter performs the interpretation of the input and the specification of the output in relation to the current situation and dialogue model. The reactive and communication cycles normally proceed independently and continuously, the former working at least one order of magnitude faster than the latter, although there are times in which one needs to take full control of the task to perform a particular process, and there is a loose coordination between the two cycles.

The perceptual interpreters are modality specific and receive the output of the low-level recognition process bottom-up but also the expectations in the current situation top-down, narrowing the possible interpretations of the input. There is one perceptual interpreter for each input modality which instantiates the expectation that is meaningful in relation to the context. Expectations are, hence, representations of interpretations. The perceptual interpreters promote sub-symbolic information produced by the input modalities into a fully articulated representation of the world, as seen by the robot in the situation. Standard perception and action robotics algorithms are embedded within modality specific perceptual interpreters for the input and for specifying the external output, respectively.

The present conceptual model supports the so-called deliberative functions [33] embodied in our robot Golem-III, such as planning, acting, monitoring, observing, and acquiring knowledge through language, which is a form of learning, but also other higher-level cognitive functions, such as performing diagnosis and decision-making dynamically, and carrying on intentional dialogues based on speech act protocols. Golem-III is an in-house development including the design and construction of the torso, arms, hands, neck, head, and face, using a PatrolBot base built by MobileRobots Inc., Amherst, NH, USA, 2014 (https://www.generationrobots.com/media/PatrolBot-PTLB-RevA.pdf).

4. The SitLog Programming Language

The overall intelligent behavior of the robot in the present framework depends on the synergy of intentional dialogues oriented to achieve the goals of the task and the inference resources that are used on demand within such purposeful interaction. The code implementing the SitLog programming language is available as a GitHub repository at https://github.com/SitLog/source_code/.

4.1. SitLog’s Basic Abstract Data-Types

The basic notion of SitLog is the situation. A situation is an information state characterized by the expectations of an intentional agent, such that the agent, i.e., the robot, remains in the situation as long as its expectations are the same. This notion provides a large spatial and temporal abstraction of the information state because, although there may be large changes in the world or in the knowledge that the agent has in the situation, its expectations may nevertheless remain the same.

A situation is specified as a set of expectations. Each expectation has an associated action that is performed by the agent when the expectation is met in the world, and a situation that is reached as a result of that action. If the set of expectations of the robot after performing such an action remains the same, the robot recurs to the same situation. Situations, actions, and next situations may be specified concretely, but SitLog also allows these knowledge objects to be specified through functions, possibly higher-order, that are evaluated in relation to the interpretation context. The results of such an evaluation are concrete interpretations and actions that are performed by the robot, as well as the concrete situation that is reached next in the robotics task. Hence, a basic task can be modeled with a directed graph with a moderate and normally small set of situations. Such a directed graph is referred to in SitLog as a Dialogue Model. Dialogue models can have recursive situations including a full dialogue model, providing the means for expressing large abstractions and modeling complex tasks. A situation is specified in SitLog as an attribute-value structure, as shown in Listing 1.

SitLog’s interpreter is programmed fully in Prolog (we used SWI-Prolog 6.6.6, https://www.swi-prolog.org/) and subsumes Prolog’s notation. Following Prolog’s standard conventions, strings starting with lower- and upper-case letters are atoms and variables, respectively, and ==> is an operator relating an attribute with its corresponding value. Values are expressions of a functional language, including constants, variables, predicates, and operators, such as unification, variable assignment, and the apply operator for dynamic binding and evaluation of functions. The functional language also supports the expression of higher-order functions. The interpretation of a situation by SitLog consists of the interpretation of all its attributes from top to bottom. The attributes id, type, and arcs are mandatory. The value of prog is a list of expressions of the functional language, and, if this attribute is defined, it is evaluated unconditionally before the arcs attribute.

Listing 1. Specification of SitLog’s Situation

    [
      id ==> Situation_ID(Arg_List),
      type ==> Situation_Type_ID,
      in_arg ==> In_Arg,
      out_arg ==> Out_Arg,
      prog ==> Expression,
      arcs ==> [
        Expect1:Action1 => Next_Sit1,
        Expect2:Action2 => Next_Sit2,
        ...
        Expectn:Actionn => Next_Sitn
      ]
    ]

A dialogue model is defined as a set of situations. Each DM has a designated initial situation and at least one final situation. A SitLog program consists of a set of DMs, one of which is designated as the main DM. This may include a number of situations of type recursive, each containing a full DM. SitLog’s interpreter unfolds a concrete graph of situations, starting from the initial situation of the main DM, and generates a Recursive Transition Network (RTN) of concrete situations. Thus, the basic expressive power of SitLog corresponds to a context-free grammar. SitLog’s interpreter consists of the coordinated tandem operation of the RTN’s interpreter, which unfolds the graph of situations, and the functional language, which evaluates the attributes’ values.

All links of the arcs attribute are interpreted during the interpretation of a situation. Expectations are sent to the perceptual interpreter top-down, which instantiates the expectation that is met by the information provided by the low-level recognition processes and sends this expectation back, bottom-up, to SitLog’s interpreter. From the perspective of the declarative specification of the task, Expectn contains the information provided by perception. Once an expectation is selected, the corresponding action and next situation are processed. In this way, SitLog abstracts over the external input, and this interface is transparent for the user in the declarative specification of the task. Expectations and actions may be empty, in which case a transition between situations is performed unconditionally.
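The interpretation of the arcs attribute can be illustrated with a small Python sketch. It is not SitLog and does not reproduce its interpreter; the dictionary layout, the function names, and the scripted stand-in for the perceptual interpreter are assumptions made for the example. Each arc is a triple of expectation, action, and next situation, mirroring the Expect:Action => Next_Sit form of the links.

```python
def interpret_situation(situation, perceive):
    """Pick the arc whose expectation is met; return (action, next_situation)."""
    expectations = [expectation for expectation, _, _ in situation["arcs"]]
    met = perceive(expectations)          # expectations go top-down to perception
    for expectation, action, next_situation in situation["arcs"]:
        if expectation == met:            # perception sends the met expectation back
            return action, next_situation
    raise LookupError("no expectation met in situation %r" % situation["id"])


def run_dialogue_model(situations, perceive, initial="is"):
    """Unfold the graph of situations from the initial one until a final one."""
    by_id = {s["id"]: s for s in situations}
    current, trace = initial, []
    while by_id[current]["type"] != "final":
        action, next_situation = interpret_situation(by_id[current], perceive)
        trace.append((current, action))
        current = next_situation
    return current, trace


# A hypothetical three-situation dialogue model; type names other than
# "final" are invented for the example.
dm = [
    {"id": "is", "type": "listening",
     "arcs": [("hello", "greet", "order"), ("bye", "farewell", "fs")]},
    {"id": "order", "type": "listening",
     "arcs": [("beer", "fetch_beer", "fs"), ("bye", "farewell", "fs")]},
    {"id": "fs", "type": "final", "arcs": []},
]

heard = iter(["hello", "beer"])           # scripted input instead of real perception

def scripted_perception(expectations):
    utterance = next(heard)
    return utterance if utterance in expectations else None

final, trace = run_dialogue_model(dm, scripted_perception)
```

The perceptual stand-in only ever returns one of the expectations it was given, which is the sense in which expectations narrow the possible interpretations of the input.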

4.2. SitLog’s Programming Environment

SitLog’s programming environment includes the specification of a set of global variables that have scope over the whole program, as well as a set of local variables that have scope over the situations of a particular dialogue model. SitLog’s variables are also defined as attribute-value pairs, the attribute being a Prolog atom and its value a standard Prolog variable. SitLog variables can have arbitrary Prolog expressions as their values. All variables can have default values and can be updated through the standard assignment operator, which is defined in SitLog’s functional language.

Dialogue models and situations can also have arguments, whose values are handled by reference in the programming environment, and dialogue models and situations allow input and output values that can propagate through the graph by means of these interfaces.

The programming environment also includes a pipe, a global communication structure that provides an input to the main DM and propagates through all concrete DMs and situations that unfold in the execution of the task. This channel is stated through the attributes in_arg and out_arg, whose definition is optional. The value of out_arg is not specified when the situation is called upon (i.e., it is a variable) and can be given a value in the body of the situation through a variable assignment or through unification. If there is no such assignment, the input and output pipes are unified when the interpretation of the situation is concluded. The value of in_arg can also be underspecified and given a value within the body of the situation. If these attributes are not stated explicitly, the value of in_arg propagates to out_arg by default, as mentioned above.
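The default propagation of the pipe can be mimicked in Python as follows. This is a rough analogy under stated assumptions: Python has no unification, so a sentinel object named UNSET stands in for an unbound out_arg, and the function names are hypothetical.

```python
UNSET = object()   # stands in for an out_arg left as an unbound variable


def interpret(body, in_arg):
    """Run one situation body; out_arg defaults to in_arg when the body leaves it unset."""
    out_arg = body(in_arg)
    return in_arg if out_arg is UNSET else out_arg


def run_pipe(bodies, in_arg):
    """Chain situations so that each out_arg becomes the next in_arg."""
    for body in bodies:
        in_arg = interpret(body, in_arg)
    return in_arg


def pass_through(in_arg):
    return UNSET                 # never sets out_arg, so the input keeps flowing


def add_malz(in_arg):
    return in_arg + ["malz"]     # sets out_arg explicitly


result = run_pipe([pass_through, add_malz, pass_through], ["beer"])
```

Situations that do not touch the pipe are transparent to it, which is why a value set early in a task can still be read by a much later situation.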

Global and local variables, as well as the values of the pipe, have scope over the local program and within each link of the arcs attribute, and their values can be changed through SitLog’s assignment operator or through unification. However, a local program and links are encapsulated local objects that have no scope outside their particular definition. Hence, Prolog variables defined in prog and in different links of the arcs attribute are not bound to each other, even if they have the same name. The strict locality of these programs has proven to be very effective for the definition of complex applications.

The programming environment also includes a history of the task, made up of the stack structure of all dialogue models and situations, with their corresponding concrete expectation, action, and next situation, unfolded during the execution of the task. The current history can be consulted through a function of the functional language and can be used not only to report what happened before but also to make a decision about the future course of action.

The elements of the programming environment augment the expressive power of SitLog, which corresponds overall to a context-sensitive grammar. The representation of the task is, hence, very expressive but still preserves the graph structure, and provides a very good compromise between expressiveness and computational cost.

4.3. SitLog’s Diagrammatic Representation

SitLog programs have a diagrammatic representation, as illustrated in Figure 3 (the full commented code of the present SitLog program is given in Appendix A). DMs are bounded by large dotted ovals, which include the corresponding graph of situations (i.e., main and wait). Situations are represented by circles with labels indicating the situation’s ID and type. In the example, the main DM has three situations, whose IDs are is, fs, and rs. The situation identifier is optional and provided by the user, except for the initial situation, which has the mandatory ID is. The type IDs are also optional, with the exception of final and recursive, as these are used by SitLog to control the stack of DMs. The links between situations are labeled with pairs of the form expectation:action. When the next situation is specified concretely, the arrow linking the two situations is stated directly; however, if the next situation is stated through a function (e.g., h), there is a large bold dot after the expectation:action pair with two or more exit arrows. This indicates that the next situation depends on the value of h in relation to the current context, and there is a particular next situation for each particular value. For instance, the edge leaving the situation is in the DM main has a label whose expectation is a list formed by the value of the local variable day and the function f, and whose action is the function g; this edge is followed by a large black dot labeled by the function h, which has two possible values, one cycling back into is and the other leading to the recursive situation rs. That is, when the expectation met at the initial situation matches the value of the local variable day and the value of function f, the action defined by the value of function g is performed, and the next situation is the value of function h.

The circles representing recursive situations also have large internal dots representing control return points from embedded dialogue models. The dots mark the origin of the exit links that have to be traversed whenever the execution of an embedded DM is concluded, when the embedding DM is popped from the stack and resumes execution. The labels of the expectations of such arcs and the names of the corresponding final states of the embedded DM are the same, depicting that the expectations of a recursive situation correspond to the designated final states of the embedded DM. This is the only case in which an expectation is made available internally to the interpreter of SitLog and is not provided by a perceptual interpreter as a result of an observation from the external world.
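The return mechanism of recursive situations can be sketched in Python as a recursive interpreter in which the ID of the final situation reached by an embedded DM is used as the expectation met in the embedding situation. The sketch is illustrative only: the DM and situation names echo the main and wait example, but the arcs, the type name "neutral", the embedded_dm key, and the scripted inputs are invented and do not reproduce Figure 3.

```python
def run_dm(dms, name, inputs, trace):
    """Interpret the DM `name` and return the ID of the final situation reached."""
    dm = dms[name]
    current = "is"                                   # mandatory initial situation ID
    while True:
        situation = dm[current]
        trace.append((name, current))
        if situation["type"] == "final":
            return current                           # becomes the caller's expectation
        if situation["type"] == "recursive":
            # Python's call stack stands in for the stack of DMs.
            met = run_dm(dms, situation["embedded_dm"], inputs, trace)
        else:
            met = next(inputs)                       # stand-in for perception
        current = situation["arcs"][met]             # KeyError: no expectation met


dms = {
    "main": {
        "is": {"type": "neutral", "arcs": {"start": "rs"}},
        # The expectations of rs are exactly the final situations of wait.
        "rs": {"type": "recursive", "embedded_dm": "wait",
               "arcs": {"fs1": "fs", "fs2": "is"}},
        "fs": {"type": "final"},
    },
    "wait": {
        "is": {"type": "neutral", "arcs": {"ok": "fs1", "abort": "fs2", "loop": "is"}},
        "fs1": {"type": "final"},
        "fs2": {"type": "final"},
    },
}

trace = []
result = run_dm(dms, "main", iter(["start", "loop", "abort", "start", "ok"]), trace)
```

In this run the embedded DM first ends in fs2, which sends main back to its initial situation, and then ends in fs1, which lets main reach its own final situation.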

Finally, the bold arrows depict the information flow between dialogue models. The output bold arrow leaving main at the upper right corner denotes the value of out_arg when the task is concluded. The bold arrow from main to wait denotes the corresponding pipe connection, such that the value of out_arg of the situation rs in main is the same as the value of in_arg in the initial situation of wait. The diagram also illustrates that the value of in_arg propagates back to main through the value of out_arg in both final situations fs1 and fs2; since the attribute out_arg is never set within the DM wait, the original value of in_arg keeps passing on through all the situations, including the final ones. The expectations of the arcs of the situation is in the DM wait take their input from the perceptual interpreter, which is either the value of in_arg or the atom loop.

5. Specification of Task Structure and Robotics Behaviors

The functional system level addresses the tools and methodologies to define the robot’s competence. In the present model, such competence depends on a set of robotics behaviors and a composition mechanism to specify complex tasks. Behaviors rely on a set of primitive perceptual and motor actions. There is a library of such basic actions, each associated to a particular robotics algorithm. Such algorithms constitute the “innate” capabilities of the robot.

In the present framework, robotics behaviors are SitLog programs whose purpose is to achieve a specific goal by executing one or more basic actions within the behavior's specific logic. Examples of such behaviors are move, see, see_object, approach, take, grasp, deliver, relieve, see_person, detect_face, memorize_face, recognize_face, point, follow, guide, say, ask, etc. The SitLog code of grasp, for instance, is available in the GitHub repository at https://bit.ly/grasp-dm.

Behaviors are parametric abstract units that can be used as atomic objects but can also be defined as structured objects using other behaviors. For instance, take is a composite behavior using approach and grasp and deliver uses move and relieve. Another example is see, which uses see_object, see_person and see_gesture to generally interpret a visual scene.

All behaviors have a number of termination statuses. If the behavior is executed successfully, the status is ok; however, there may be a number of failure conditions, particular to the behavior, that may prevent its correct termination, and each is associated with a particular error status. The dialogue model at the application layer should consider all possible statuses of all behaviors in order to improve the robot's reliability.
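The composition of behaviors and the propagation of their termination statuses can be illustrated with a minimal Python sketch. This is not the authors' SitLog code: the failure statuses `path_blocked` and `not_reachable`, the `world` dictionary, and the helper `compose` are hypothetical names introduced only for illustration.

```python
from typing import Callable, Dict

# A behavior is modelled here as a function that returns a termination status.
Behavior = Callable[[Dict], str]

def approach(world: Dict) -> str:
    # Hypothetical failure condition: the path to the object is blocked.
    return "path_blocked" if world.get("path_blocked") else "ok"

def grasp(world: Dict) -> str:
    # Hypothetical failure condition: the object cannot be reached by the arm.
    return "ok" if world.get("object_reachable", True) else "not_reachable"

def compose(*steps: Behavior) -> Behavior:
    """Build a composite behavior that runs its steps in order and
    terminates with the first status that is not ok."""
    def composite(world: Dict) -> str:
        for step in steps:
            status = step(world)
            if status != "ok":
                return status
        return "ok"
    return composite

# take is a composite behavior that uses approach and grasp.
take = compose(approach, grasp)
```

In this sketch the composite behavior exposes the union of the error statuses of its parts, so a dialogue model that calls `take` has to handle each of them.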

Through these mechanisms, complex behaviors can be defined, such as find, which, given a search path and a target object or person, enables the robot to explore the space using the scan and tilt behaviors to move its head and make visual observations at different positions and orientations. The full SitLog code of find is also provided in the GitHub repository at https://bit.ly/find-dm.

Behaviors should be quite general, robust and flexible, so they can be used in different tasks and domains. There is a library of behaviors that provide the basic capabilities of the robot from the perspective of the human-user. This library evolves with practice and experience and constitutes a rich empirical resource for the construction and application of service robots [50].

The composition mechanism is provided by SitLog, too, which allows the specification of dialogue models that represent the tasks and communication structure. Situations in these latter dialogue models represent stages of a task, which can be partitioned into sub-tasks. So, the task as a whole can be seen as a story-board, where each situation corresponds to a picture.

For example, if the robot performs as a supermarket assistant, the structure of the tasks can be construed as (1) take an order from the human customer; (2) find and take the requested product; and (3) deliver the product to the customer. These tasks correspond to the situations in the application layer, as illustrated in Figure 4. Situations can be further refined into several sub-tasks specified as more specific dialogue models embedded in the situations of upper levels, and the formalism can be used to model complex tasks quite effectively.

The dotted lines from the application to the behaviors layer in Figure 4 illustrate that behaviors are used at the application layer as abstract units at different degrees of granularity. For instance, find is used as an atomic behavior, but detect_face can also be used directly by a situation at the level of the task structure, even though detect_face is itself used by find. The task structure at the application layer can be partitioned into subordinated tasks, too. For this, SitLog supports the recursive specification of dialogue models and situations, enhancing the expressive power of the formalism.

Although both task structure and behaviors are specified through SitLog programs, these correspond to two different levels of abstraction. The top level specifies the final application task-structure, and is defined by the final user, while the lower level consists of the library of behaviors, which should be generic and potentially useful in diverse application domains.

From the point of view of an ideal division of labor, the behaviors layer is the responsibility of the robot's development team, while the application layer is the focus of teams oriented to the development of final service robot applications.

The General Purpose Service Robot

Prototypical or schematic robotic tasks can be defined through dialogue models directly. However, the structure of the task has to be known in advance, and there are many scenarios in which this information is not available. For this, in the present framework, we define a general purpose mechanism that translates speech acts performed by the human-user into a sequence of behaviors, which is interpreted by a behavior dispatcher one behavior at a time, and finishes the task when the list has been emptied [50]. We refer to this mechanism as the General Purpose Service Robot, or simply GPSR.

In the basic case, all the behaviors in the list terminate with the status ok. However, whenever a behavior terminates with a different status, something in the world was unexpected, or the robot failed, and the dispatcher must take an appropriate action. We consider two main types of error situations. The first is a general but common and known failure, in which case a recovery protocol is invoked; these protocols are implemented as standard SitLog dialogue models and follow a procedure that is specific to fixing the error; when this is accomplished, they return control to the dispatcher, which continues with the task. The second type consists of errors that cannot be prevented; to recover from them, the robot needs to engage in the daily-life inference cycle, as discussed in Section 1 and elaborated upon in Section 7, Section 8 and Section 9.
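The dispatching scheme can be sketched in Python as follows. This is an illustrative stand-in rather than the GPSR implementation: the function `dispatch`, the status names `door_closed` and `not_found`, the `state` dictionary, and the decision to retry a behavior after its recovery protocol are all assumptions made for the example.

```python
def dispatch(behaviors, recovery, infer):
    """Run the behaviors one at a time until the list is empty.

    behaviors: list of functions that return a termination status.
    recovery:  maps a known error status to a recovery protocol.
    infer:     called with (status, remaining) for any other error;
               returns the new list of behaviors to execute.
    """
    pending = list(behaviors)
    log = []
    while pending:
        behavior = pending.pop(0)
        status = behavior()
        log.append((behavior.__name__, status))
        if status == "ok":
            continue
        if status in recovery:
            recovery[status]()           # known failure: run its protocol
            pending.insert(0, behavior)  # assumption: retry the behavior
        else:
            pending = infer(status, pending)  # otherwise, inference is needed
    return log

# Hypothetical world state and behaviors, for illustration only.
state = {"door_open": False, "beer_on_shelf": False}

def move():
    return "ok" if state["door_open"] else "door_closed"

def take():
    return "ok" if state["beer_on_shelf"] else "not_found"

def deliver():
    return "ok"

def open_door():
    state["door_open"] = True

def inference_cycle(status, remaining):
    # Stand-in for diagnosis, decision-making and planning: pretend that
    # the object was located and schedule take again before the rest.
    state["beer_on_shelf"] = True
    return [take] + remaining

log = dispatch([move, take, deliver], {"door_closed": open_door}, inference_cycle)
```

Here the closed door is handled by a recovery protocol, while the missing object falls through to the inference stand-in, which replaces the remaining list of behaviors.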

6. Non-Monotonic Knowledge-Base Service

The specification of a service robot's tasks requires an expressive, robust but flexible knowledge-base service. The robot may need to represent, reason about, and maintain terminological or linguistic knowledge, as well as general and particular concepts about the world and about the application domain. There may also be defaults, exceptions and preferences, which can be acquired and updated incrementally during the specification and execution of a task, so a non-monotonic KB service is required. Inferences about this kind of knowledge are referred to here as conceptual inferences.

To support such functionality, we developed a non-monotonic knowledge-base service based on the specification of a class hierarchy. This system supports the representation of classes and individuals, which can have general or specific properties and relations [58, 59]. Classes and individuals are the primitive objects and constitute the ontology. There is a main or top class which includes all the individuals in the universe of discourse; this can be divided into a finite number of mutually exclusive partitions, each corresponding to a subordinated or subsumed class. Subordinated classes can be further partitioned into subordinated mutually exclusive partitions giving rise to a strict hierarchy of an arbitrary depth, and classes are related through a proper inclusion relation. Individual objects can be specified at any level in the taxonomy and the relation between individuals and classes is one of set membership. Classes and individuals can have arbitrary properties and relations, which have generic or specific interpretations, respectively.

The taxonomy has a diagrammatic representation, as illustrated in Figure 5. Classes are represented by circles, and individuals are represented by boxes. The inclusion relation between classes is represented by a directed edge or arrow pointing to the circle representing the subordinated class, and the membership relation is represented by a bold dot pointing to the box representing the corresponding individual. Properties and relations are represented through labels associated with the corresponding circles and boxes; expressions of the form $\alpha$ => $\beta$ stand for a property or a relation, where $\alpha$ stands for the name of the property or relation, and $\beta$ stands for its corresponding value. The properties or relations are bounded within the scope of their associated circle or box. Classes and individuals can also be labeled with expressions of the form $\alpha$ =>> $\beta$, $w$, standing for implications, where $\alpha$ is an expression of the form $\alpha_1, \alpha_2, \ldots, \alpha_n$ for $n \geq 1$, such that $\alpha_i$ is a property or a relation, and $\beta$ stands for an atomic property or relation with a weight $w$, such that $\beta$ holds for the corresponding class or individual with priority $w$, if all $\alpha_i$ in $\alpha$ hold. The KB service allows objects of relations and values of properties to be left underspecified, augmenting its flexibility and expressive power.

For instance, the class animals at the top in Figure 5 is partitioned into fishes, birds, and mammals, where the class of birds is further partitioned into eagles and penguins. The label fly stands for a property that all birds have and can be interpreted as an absolute default holding for all individuals of such class and its subsumed classes. The label eat => animals denotes a relation between eagles and animals such that all eagles eat animals, and the question do eagles eat animals? is answered yes, without specifying which particular eagle eats and which particular animal is eaten. The properties and relations within the scope of a class or an individual, represented by circles and boxes, have such class or individual as the subject of the corresponding proposition, but these are not named explicitly. For instance, like => mexico within the box for Pete is interpreted as the proposition Pete likes Mexico. In the case of classes, such an individual is unspecified, but, in the case of individuals, it is determined. Likewise, the labels work(y) =>> live(y), 3; born(y) =>> live(y), 5; and like(y) =>> live(y), 6, within the scope of birds stand for implications that hold for all unnamed individuals x of the class birds and some individual y, which is the value of the corresponding property or the object of the relation, e.g., if x works at y, then x lives at y. Such implications are interpreted as conditional defaults, preferences, or abductive rules holding for all birds that work at, were born in, or like y. The integer numbers are the weights or priorities of such preferences, with the convention that the lower the value, the higher its priority. Labels without weights are assumed to have a weight of 0 and represent the absolute properties or relations that classes or individuals have. The label size => large denotes the property size of pete and its corresponding value, which is large. The labels work => mexico; born => argentina; and like => mexico denote relations of Pete with their corresponding objects (México and Argentina). The system also supports the negation operator not, so all atoms can have a positive or a negative form (e.g., fly, not(fly)).

Class inclusion and membership are interpreted in terms of the inheritance relation such that all classes inherit the properties, relations, and preferences of their subsuming or dominant classes, and individuals inherit the properties, relations, and preferences of their class. Hence, the extension or closure of the KB is the knowledge specified explicitly, plus the knowledge stated implicitly, through the inheritance relation.

The addition of the not operator allows the expression of incomplete knowledge, as opposed to the Closed World Assumption (CWA). Hence, queries are interpreted in relation to strong negation and may be answered yes, no and not known. For instance, the questions do birds fly?, do birds swim?, and do fish swim? in relation to Figure 5 are answered yes, no, and I don't know. (If the CWA were assumed, answers would be right only if complete knowledge about the domain were available, but they could be wrong otherwise. For instance, the queries do fish swim? and do mammals swim? would both be answered no in relation to the CWA, which would be wrong for the former but generally right for the latter.)

Properties, relations and preferences can be thought of as defaults that hold in the current class and over all the subsumed classes, as well as for the individual members of such classes. Such defaults can be positive, e.g., birds fly, but also negative, e.g., birds do not swim; defaults can have exceptions, such as penguins, which are birds that do not fly but do swim.

The introduction of negation augments the expressive power of the representational system and allows for the definition of exceptions, but it also allows the expression of contradictions, such as that penguins can and cannot fly, and swim and do not swim. To support this expressiveness and coherently reason about this kind of concept, we adopt the principle of specificity, which states that, in case of conflicts of knowledge, the more specific propositions are preferred. Subsumed classes are more specific than subsuming classes, and individuals are more specific than their classes. Hence, in the present example, the answers to do penguins fly?, do penguins swim?, and does Arthur swim? are no, yes, and yes.
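A minimal Python sketch of three-valued queries under the principle of specificity is given next. It is not the authors' Prolog service: the dictionaries `CLASSES` and `INDIVIDUALS` and the function `query` are hypothetical, and the taxonomy is a hand-written transcription of the example, with `True` standing for a positive atom and `False` for its negation.

```python
# class -> (subsuming class, {atom: True for positive, False for negated})
CLASSES = {
    "top": (None, {}),
    "animals": ("top", {}),
    "fish": ("animals", {}),
    "birds": ("animals", {"fly": True, "swim": False}),
    "mammals": ("animals", {}),
    "eagles": ("birds", {}),
    "penguins": ("birds", {"swim": True, "fly": False}),
}

# individual -> (class, own properties)
INDIVIDUALS = {"pete": ("eagles", {}), "arthur": ("penguins", {})}

def query(entity, atom):
    """Answer 'yes', 'no' or 'unknown' for a class or an individual,
    preferring the most specific statement about the atom."""
    if entity in INDIVIDUALS:
        cls, own = INDIVIDUALS[entity]
        if atom in own:                  # the individual is the most specific
            return "yes" if own[atom] else "no"
    else:
        cls = entity
    while cls is not None:               # climb from subsumed to subsuming class
        parent, props = CLASSES[cls]
        if atom in props:
            return "yes" if props[atom] else "no"
        cls = parent
    return "unknown"                     # no closed-world assumption
```

Because the search stops at the first class that mentions the atom, the statements on penguins override those inherited from birds, and an atom that is never mentioned yields `unknown` rather than `no`.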

The principle of specificity chooses a consistent extension of a set of atomic propositions, positive and negative, that can be produced out of the empty set by obtaining two extensions or branches, one with the positive proposition and the other with its negation, one proposition at a time, for all end nodes of each branch and for all atomic propositions that can be formed with the atoms in the theory. These extensions give rise to a binary tree of extended theories in which each path represents a consistent theory, but all different paths are mutually inconsistent. In the present example, the principle of specificity chooses the branch including {not(fly(penguins)), swim(penguins), not(fly(arthur)), swim(arthur)}. The set of possible theories that can be constructed in this way are referred to as multiple extensions [60].

The principle of specificity is a heuristic for choosing a particular consistent theory among all possible extensions. Its utility is that the extension at each particular state of the ontology is determined directly by the structure of the tree, or the strict hierarchy. Changing the ontology, i.e., adding or deleting classes or individuals, or changing their properties or relations, changes the current theory; some propositions may change their truth value, and some attributes may change their values, but the inference engine always chooses the corresponding consistent theory or the coherent extension.

Preferences can be thought of as conditional defaults that hold in the current class and over all subsumed classes, as well as for their individual members, if their antecedents hold. However, this additional expressiveness gives rise to contradictions or incoherent theories, this time due to the implication. In the present example, the preferences work(y) =>> live(y), 3 and born(y) =>> live(y), 5 of birds are inherited by Pete, who works in México but was born in Argentina; it would follow that he lives both in México and in Argentina, which is incoherent. This problem is solved by the present KB service through the weight value or priority, and as this is 3 for México and 5 for Argentina, the answer to where does Pete live? is México.

Preferences can also be seen as abductive rules that provide the most likely explanation for an observation. For instance, if the property live=>mexico is added within the scope of Pete, the question of why does Pete live in México can be answered because he works in México, i.e., work(y) =>> live(y), 3, which is preferred over the alternative because he likes México, i.e., like(y) =>> live(y), 6, since the former preference has a lower weight and hence a higher priority. This kind of rule can also be used to diagnose the causes or reasons of arbitrary observations and constitutes a rich conceptual resource to deal with unexpected events that happen in the world.
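The two uses of weighted preferences, as conditional defaults and as abductive rules, can be sketched in Python as follows. The names `PREFERENCES`, `PETE`, `infer`, and `explain` are hypothetical, and the sketch covers only single-antecedent preferences like those in the example; it is not the authors' Prolog implementation.

```python
# (antecedent relation, consequent relation, weight); lower weight wins.
PREFERENCES = [("work", "live", 3), ("born", "live", 5), ("like", "live", 6)]

# Relations stated explicitly for Pete.
PETE = {"work": "mexico", "born": "argentina", "like": "mexico"}

def infer(facts, relation):
    """Conditional default: return (value, supporting antecedent) from the
    applicable preference with the lowest weight, or None if none applies."""
    applicable = [(weight, ante, facts[ante])
                  for ante, cons, weight in PREFERENCES
                  if cons == relation and ante in facts]
    if not applicable:
        return None
    _, ante, value = min(applicable)
    return value, ante

def explain(facts, relation, observed):
    """Abduction: return the antecedent of the lowest-weight preference
    that would account for the observed value, or None."""
    candidates = [(weight, ante)
                  for ante, cons, weight in PREFERENCES
                  if cons == relation and facts.get(ante) == observed]
    return min(candidates)[1] if candidates else None
```

With these definitions, `infer(PETE, "live")` selects México on the strength of the work preference, and `explain(PETE, "live", "mexico")` prefers work over like as the explanation.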

The KB is specified as a list of Prolog clauses with five arguments: (1) the class id; (2) the subsuming or mother class; (3) the list of properties of the class; (4) the list of relations of the class; and (5) the list of individual objects of the class. Every individual is specified as a list, with its id, the list of its properties and the list of its relations. Each property and relation is also specified as a list, including the property or relation itself and its corresponding weight. Thus, preferences of classes and individuals may be included in both the property list and the relation list, suggesting that they constitute conditional properties and relations. IDs, properties, and relations are specified as attribute-value pairs, such that values can be objects of well-defined Prolog forms. The actual code of the KB illustrated in Figure 5 is given in Listing 2.

Listing 2. Full code of example taxonomy.

    [
    % The 'top' class is mandatory
    class(top,none,[],[],[]),
    class(animals,top,[],[],[]),
    class(fish,animals,[],[],[]),
    % birds: absolute properties (weight 0) and weighted preferences
    class(birds,animals,[[fly,0],
                         [not(swim),0],
                         [work=>'-'=>>live=>>'-',3],
                         [born=>'-'=>>live=>>'-',5],
                         [like=>'-'=>>live=>>'-',6]],
          [],[]),
    class(mammals,animals,[],[],[]),
    % eagles: one relation and the individual pete
    class(eagles,birds,[],[[eat=>animals,0]],
          [[id=>pete,[[size=>large,0]],
                     [[work=>mexico,0],
                      [born=>argentina,0],
                      [like=>mexico,0]
                     ]]
          ]),
    % penguins: exceptions to the defaults of birds, and the individual arthur
    class(penguins,birds,[[swim,0],[not(fly),0]],[],[[id=>arthur,[],[]]])
    ]

The KB service provides eight main services for retrieving information from the non-monotonic KB over the closure of the inheritance relations [58], as follows:

class-extension(Class, Extension) provides the set of individuals in the argument class. If this is top, this service provides the full set of individuals in the KB.

property-extension(Property, Extension) provides the set of individuals that have the argument property in the KB.

relation-extension(Relation, Extension) provides the set of individuals that stand as subjects in the argument relation in the KB.

explanation_extension(Property/Relation, Extension) provides the set of individuals with an explanation supporting why such individuals have the argument property/relation in the KB.

classes_of_individual(Argument, Extension) provides the set of mother classes of the argument individual.

properties_of_individual(Argument, Extension) provides the set of properties that the argument individual has.

relations_of_individual(Argument, Extension) provides the set of relations in which the argument individual stands as subject.

explanation_of_individual(Argument, Extension) provides the supporting explanations of the conditional properties and relations that hold for the argument individual.
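To make the notion of retrieval over the inheritance closure concrete, the following Python sketch mimics two of these services on the example taxonomy. It is an analogy only: the dictionaries `PARENT` and `MEMBERS` are hypothetical, and the functions return values instead of binding an `Extension` argument as the Prolog services do.

```python
# Subsumed class -> subsuming class, and class -> individuals declared in it.
PARENT = {
    "animals": "top",
    "fish": "animals",
    "birds": "animals",
    "mammals": "animals",
    "eagles": "birds",
    "penguins": "birds",
}
MEMBERS = {"eagles": ["pete"], "penguins": ["arthur"]}

def classes_of_individual(individual):
    """Return the classes of an individual, from the most specific up to top."""
    cls = next(c for c, members in MEMBERS.items() if individual in members)
    chain = [cls]
    while cls in PARENT:
        cls = PARENT[cls]
        chain.append(cls)
    return chain

def class_extension(cls):
    """Return the individuals of a class and of all its subsumed classes."""
    extension = list(MEMBERS.get(cls, []))
    for child, parent in PARENT.items():
        if parent == cls:
            extension.extend(class_extension(child))
    return extension
```

Asking for the extension of `top` in this sketch collects every individual in the taxonomy, in line with the description of the first service.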

These services provide the full extension of the KB at a particular state. There are, in addition, services to update the values of the KB, as well as services to change, add, or delete all objects in the KB, including classes and individuals, with their properties and relations. Hence, the KB can be developed incrementally and also updated during the execution of a task, and the KB service always provides a coherent value. The full Prolog code of the KB service is available at https://bit.ly/non-monotonic-kb.

The KB services are manipulated by dialogue models as SitLog user functions. These services are included as standard SitLog programs that are used on demand during the interpretation of SitLog situations. Such services are commonly part of larger programs representing input and output speech acts that are interpreted within structured dialogues defined through dialogue models. Hence, conceptual inferences made on demand during the performance of linguistic and interaction behavior constitute the core of our conceptual model of service robots.

The non-monotonic KB-Service is general and allows the specification of non-monotonic taxonomies, including the expression of preferences and abductive rules in a simple declarative format for arbitrary domains. The system allows the expression of classes with properties and relations, which correspond to roles in Description Logics [61], but also of individuals with particular properties and relations. However, most description logics are monotonic, such as OWL [37], and the expressive power of our system should be compared to Answer Set Programming [62] and systems that can handle incomplete information, such as the use of OWL-DL for modeling preferences and functional affordances [44]. There are ontological assumptions and practical considerations that distinguish our approach from alternative representation schemes, but such discussion and comparative evaluation are beyond the scope of the present paper.

7. The Daily-Life Inference Cycle

Next, we address the specification and interpretation of the daily-life inference cycle, as described in Section 1. This cycle is studied from two different perspectives: the first consists of the pipe-line execution of a diagnosis, a decision-making, and a planning inference, and it involves the explicit definition of a problem space and heuristic search; the second is modeled through the interaction of appropriate speech-act protocols and the extensive use of preferences. We refer to these two approaches as deliberative and conceptual inference strategies. The former is illustrated with a supermarket scenario, where the robot plays the role of an assistant, and the latter with a home scenario, where the robot plays the role of a butler, as described in Section 8 and Section 9, respectively. The actors play analogous roles in both settings, e.g., attending commands and information requests related to a service task, and bringing objects involved in such requests or placing objects in their right locations, but each scenario emphasizes a particular aspect of the kind of support that can be provided by service robots.

The robot behaves cooperatively and must satisfy a number of cognitive, conversational, and task obligations, as follows:

Cognitive obligations:

- update its KB whenever it realizes that it has a false belief;

- notify the human user of such changes, so he or she can be aware of the beliefs of the robot;

Conversational obligations: to attend successfully the action directives or information requests expressed by the human user;

Task obligations: to position the misplaced objects in their corresponding shelves or tables.

The cognitive obligations manage the state of beliefs of the robot and its communication to the human user. These are associated to perception and language and are stated for the specific scenario. Conversational and task obligations may have some associated cognitive obligations, too, that must be fulfilled in conjunction with the corresponding speech acts or actions.

In both the supermarket and home scenarios, there is a set of objects that belong to a specific class, e.g., food, drinks, bread, snacks, etc., and each shelf or table should hold objects of a designated class. Let , and be the sets of observed, unseen/missing and misplaced objects, respectively, on the shelf or the table in a particular observation at time in relation to the current state of the KB. We assume that the behavior inspects the whole shelf or table in every single observation, and these three sets can be computed directly. must hold, and all objects in should belong to the class associated to the shelf .

Let be the set of objects of the class that are believed to be misplaced in other shelves at the observation and the full set of believed misplaced objects in the KB at any given time. Let be ; i.e., the set of objects of the shelf’s class that the robot does not know where are placed at the time of the particular observation .

Whenever an observation is made, the robot has the cognitive obligation of verifying whether it is consistent with the current state of the KB, and of correcting the false beliefs, if any, as follows:

For every object in , state the exception in the KB, i.e., that the object is not in its corresponding shelf; notify the exception and that the robot does not know where such an object is!

For every object in , verify that the object is marked in the KB as misplaced at the current shelf; otherwise, update the KB accordingly, and notify the exception.
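The verification of an observation against the KB can be sketched with plain set operations in Python. Everything named here is an assumption for illustration: the function `check_observation`, the dictionaries `shelf_class`, `object_class`, and `believed_location`, the use of `None` for an unknown location, and the wording of the notifications. In particular, the missing set is taken to be every object believed to be on the shelf but not seen.

```python
def check_observation(shelf, seen, shelf_class, object_class, believed_location):
    """Compare the objects seen on a shelf with the beliefs in the KB,
    correct false beliefs, and return (missing, misplaced, notifications)."""
    believed_here = {obj for obj, loc in believed_location.items() if loc == shelf}
    missing = believed_here - seen
    misplaced = {obj for obj in seen if object_class[obj] != shelf_class[shelf]}

    notifications = []
    for obj in sorted(missing):
        # Exception: the object is not on this shelf and its place is unknown.
        believed_location[obj] = None
        notifications.append(f"{obj} is not on {shelf}; its location is unknown")
    for obj in sorted(misplaced):
        # Record the misplacement only if the KB does not already hold it.
        if believed_location.get(obj) != shelf:
            believed_location[obj] = shelf
            notifications.append(f"{obj} is misplaced on {shelf}")
    return missing, misplaced, notifications

# Hypothetical scenario data.
shelf_class = {"drinks_shelf": "drinks", "snacks_shelf": "snacks"}
object_class = {"coke": "drinks", "heineken": "drinks", "crisps": "snacks"}
believed = {"coke": "drinks_shelf", "heineken": "drinks_shelf",
            "crisps": "snacks_shelf"}

missing, misplaced, notes = check_observation(
    "drinks_shelf", {"coke", "crisps"}, shelf_class, object_class, believed)
```

In this run the robot sees the coke and a bag of crisps on the shelf of drinks, so the beer is recorded as missing and the crisps as misplaced, and both changes are reported.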

The conversational obligations are associated with the linguistic interaction with the human user. For instance, if he or she expresses a fetch command, the robot should move to the expected location of the requested object, grasp it, move back to the location where the user is expected to be, and hand the object over to him or her. The command can be simple, such as bring me a coke or place the coke in the shelf of drinks; or composite, such as bring me a coke and a bag of crisps.

A core functionality of the GPSR is to interpret the speech acts in relation to the context and produce the appropriate sequence of behaviors, which is taken to be the meaning of the speech act. Such a list of behaviors can also be seen as a schematic plan that needs to be executed to satisfy the command successfully. The general problem-solving strategy is defined along the lines of the GPSR as described above [50].

The task obligations are generated along the execution of a task, when the world is not as expected and should eventually be fixed. For instance, the behavior produces, in addition to its cognitive obligations, the task obligations of placing the objects in the sets , , and in their right places. These are included in the list .

All behaviors have a termination status indicating whether the behavior was accomplished successfully or whether there was an error and, in the latter case, its type. Every behavior also has an associated manager that handles the possible termination statuses; if the status is ok, the behavior's manager finishes and passes control back to the dialogue manager or the GPSR dispatcher.

However, when the behavior terminates with an error, the manager executes the action corresponding to the status type. There are two main cases: (i) when the status can be handled with a recovery protocol and (ii) when inference is required. An instance of case (i) is the behavior that may fail because there is a person blocking the robot’s path, or a door is closed and needs to be opened. The recovery protocols may ask the person to move away and, in the latter situation, either ask someone around to open the door or execute the open-door behavior instead, if the robot does have such behavior in its behaviors library. An instance of case (ii) is when the behavior, which includes a behavior, fails to find the object in its expected shelf. This failure prompts the execution of the daily-life inference cycle.

Whenever the expectations of the robot are not met in the environment, the robot first needs to make a diagnosis inference to find a potential explanation for the failure, and then to make a decision dynamically about how to proceed; this decision becomes the goal for the induction and execution of a plan. The present model makes extensive use of diagnosis and decision-making and contrasts in this regard with models that focus mostly on planning [34, 44, 63, 64, 65], as discussed in relation to the deliberative functions [33].

8. Deliberative Inference

This inference strategy is illustrated with the supermarket scenario in Figure 1. This has the following elements:

The supermarket consists of a finite set of shelves at their corresponding locations , each having an arbitrary number of objects or entities } of a particular designated class , the set of classes; for instance, ;

The human client, who may require assistance;

The robot, which has a number of conversational, task, and cognitive obligations;

A human supermarket assistant whose job is to bring the products from the supermarket’s storage and place them on their corresponding shelves.

The cognitive, conversational, and task obligations are as stated above. A typical command is bring me a coke, which is interpreted as [, , , ], where is the composite behavior , , , and .

In this scenario, the priority is to satisfy the customer, and a sensible strategy is to achieve the action as soon as possible and complete the execution of the command, and use the idle time to carry on with the . These two goals interact, and the robot may place some misplaced objects along the way if the actions deviate little from the main conversational obligation. If the sought object is placed at its right shelf, the command can be performed directly; otherwise, the robot must engaged in the daily-life inference cycle to find the object, take it, and deliver it to the customer. These conditions are handled by the behavior’s manager of the behavior , which, in turn, uses the behavior , with its associated cognitive obligations.

The arguments of the inference procedure are:

The current behavior;

The list of shelves already inspected by the robot, including the objects placed on them, which corresponds to the states of the shelves as arranged by the human assistant when the scenario was created, as discussed below in Section 8.1; this list is initially empty;

The set of objects already put in their right locations by previous successful place actions performed by the robot in the current inference cycle; this set is initially empty.

The inference cycle proceeds as follows:

Perform a diagnosis inference in relation to the actual observations already made by the robot; this inference renders the assumed actions made by the human assistant when he or she filled up the stands including the misplaced objects ;

Compute the in relation to the current goal, e.g., , and possibly other subordinated place actions in the current ;

Induce the plan consisting of the list of behaviors to achieved ;

Execute the plan in ; this involves the following actions:

- (a)

update every time the robot sees a new shelf;

- (b)

update the KB whenever an object is placed on its right shelf, and accordingly update the current ; and update ;

- (c)

if the is not found at its expected shelf when the goal is executed, invoke the inference cycle recursively with the same goal and the current values of and which may not be empty.
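The control structure of the cycle, including its recursive invocation when the sought object is not found, can be sketched in Python. This is a heavily simplified stand-in: the function `inference_cycle`, the `world` and `beliefs` dictionaries, and the action tuples are hypothetical, the diagnosis is reduced to listing the shelves not yet inspected, the decision is a fixed policy instead of a heuristic search, and the place actions for misplaced objects are omitted.

```python
def inference_cycle(target, world, beliefs, inspected=None):
    """Diagnose, decide, plan and execute; recurse if the target is not found.
    Returns the list of actions executed by the robot."""
    inspected = dict(inspected or {})

    # 1. Diagnosis (simplified): the target may be on any uninspected shelf.
    candidates = [shelf for shelf in world if shelf not in inspected]
    if not candidates:
        return [("report", f"{target} is not available")]

    # 2. Decision (assumed policy): the believed shelf first, else the next one.
    believed = beliefs.get(target)
    goal = believed if believed in candidates else candidates[0]

    # 3. Plan: the list of behaviors that achieves the decision.
    plan = [("move", goal), ("see", goal)]

    # 4. Execution: (a) record the inspected shelf and its content.
    inspected[goal] = set(world[goal])
    if target in world[goal]:
        beliefs[target] = goal
        return plan + [("take", target), ("deliver", target)]

    # (c) Not found: correct the belief and invoke the cycle recursively,
    # passing on the shelves already inspected.
    beliefs[target] = None
    return plan + inference_cycle(target, world, beliefs, inspected)

# Hypothetical scenario: the beer was misplaced on the shelf of snacks.
world = {"drinks": {"coke"}, "snacks": {"crisps", "heineken"}, "bread": {"loaf"}}
beliefs = {"heineken": "drinks"}
actions = inference_cycle("heineken", world, beliefs)
```

The recursion terminates because every call inspects one new shelf, so the set of candidates shrinks until the object is found or no shelf remains.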

8.1. Diagnosis Inference

The diagnosis inference model is based on a number of assumptions that are specific to the task and the scenario, as follows:

The objects, e.g., drinks, food, and bread products, were placed in their corresponding shelves by the human assistant who can perform the actions —move to of from its current location—and , i.e., place on the shelf at the current location. The assistant can start the delivery path at any of arbitrary shelf, can carry as many objects as needed in every move action, and he or she places all the objects in a single round.

The believed content of the shelves is stored in the robot’s KB. This information can be provided in advance or by the human assistant through a natural language conversation, which may be defined as a part of the task structure.

If an object is not found by the robot in its expected shelf, it is assumed that it was originally misplaced in another shelf by the human assistant. Although, in actual supermarkets, there is an open-ended number of reasons for objects to be misplaced, in the present scenario, this is the only reason considered.

The robot performs local observations and can only see one shelf at a time, but it sees all the objects on the shelf in a single observation.

The diagnosis consists of the set of move and place actions that the human assistant is assumed to have performed to fill up all the unseen shelves, given the current and possibly previously observed shelves. Whenever there are mismatches between the state of the KB and the observed world, a diagnosis is rendered by the inferential machinery. (Even the states of observed shelves are hypothetical, as there may have been visual precision and/or recall errors, i.e., objects may have been wrongly recognized or missed; when this happens, the robot can recover only later, when it realizes that the state of the world is not consistent with its expectations and has to reconsider previous diagnoses.)

The diagnosis inference is invoked when the at shelf within the behavior fails. The KB is updated according to the current observation, and contains the beliefs of the robot about the content of the current and the remaining shelves. The current observation renders the set of missing objects and of missing and misplaced objects at the current shelf . If the sought object is of the class of the shelf, it must be within or the supermarket has run out of that object; otherwise, the robot believed that the sought object was already misplaced in a shelf of a different class , but the failed observation showed that such belief was false. Consequently, the sought object must be included in , and the KB must be updated with a double exception: that the object is not in the current shelf and was not in its corresponding shelf; hence, it must be on one of the shelves that remain to be inspected in the current inference cycle. This illustrates that negative propositions increase the knowledge productively, as the uncertainty is reduced.

The diagnosis procedure involves extending the believed content of all unseen shelves with the content of , avoiding repetitions. The content of the shelves seen in previous observations is already known.

There are many possible heuristics to make such an assignment; here, we simply assume that is the closest unseen shelf—in metrical distance—to the current shelf and distribute the remaining objects of in the remaining unseen shelves randomly. The procedure renders the known state of shelf , unless there were visual perception errors, and the assumed or hypothetical states of the remaining unseen shelves.

The diagnosis is then rendered directly by assuming that the human assistant moved to each shelf and placed on it all the objects in its assumed and known states. There may be more than one known state because the assumption made at a particular inference cycle may have turned out to be wrong, and the diagnosis may have been invoked with a list of previously observed shelves whose states are already known.
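The assignment heuristic and the rendering of the diagnosis described above can be sketched as follows. This is only an illustrative sketch, not the implementation used in the robot: the function names, the representation of shelves as 2D coordinates, and the action labels `move` and `place` are assumptions made for the example.

```python
import math
import random

def assign_missing(missing, sought, unseen, current_pos, believed, rng=None):
    """Hypothesize the content of the unseen shelves.

    unseen:   dict shelf name -> (x, y) position (assumed representation)
    believed: dict shelf name -> set of objects believed to be there
    The sought object is assumed to be on the closest unseen shelf;
    the other missing objects are spread randomly, avoiding repetitions.
    """
    rng = rng or random.Random()
    hypothesis = {s: set(believed.get(s, ())) for s in unseen}
    closest = min(unseen, key=lambda s: math.dist(current_pos, unseen[s]))
    # The sought object goes to the closest unseen shelf (metric distance).
    for objs in hypothesis.values():
        objs.discard(sought)
    hypothesis[closest].add(sought)
    for obj in missing:
        if obj == sought:
            continue
        if any(obj in objs for objs in hypothesis.values()):
            continue  # already believed to be on an unseen shelf
        hypothesis[rng.choice(sorted(unseen))].add(obj)
    return hypothesis

def diagnosis_actions(states):
    """Render the diagnosis: the assistant moved to each shelf and
    placed on it every object in its known or assumed state."""
    actions = []
    for shelf, objs in states.items():
        actions.append(("move", shelf))
        actions.extend(("place", obj) for obj in sorted(objs))
    return actions
```

For instance, calling `assign_missing` with two unseen shelves places the sought object on the nearer one, and `diagnosis_actions` then lists one `move` per shelf followed by one `place` per object.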

8.2. Decision-Making Inference

In the present model, deciding what to do next depends on the task obligation that invoked the inference cycle in the first place , e.g., , and the current . Let the set . Compute the set consisting of all subsets of that include .

The model could also consider other parameters, such as the mood of the customer or whether he or she is in a hurry, which can be thought of as constraints in the decision-making process; here, we state a global parameter that is interpreted as the maximum cost that can be afforded for the completion of the task.

We also consider that each action performed by the robot has an associated cost in time, e.g., the parameters associated to the behaviors and , and a probability to be achieved successfully, e.g., the parameters associated to a action. The total cost of an action is computed by a restriction function r.
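One possible reading of these ingredients is sketched below: enumerate every subset of pending actions that contains the goal obligation, cost each subset, and keep the largest one that fits the maximum affordable cost. The form of the restriction function (time divided by success probability), the selection rule, and all names are assumptions made for illustration; the text does not specify them.

```python
from itertools import combinations

def candidate_sets(pending, goal):
    """All subsets of the pending actions that include the goal."""
    others = [p for p in pending if p != goal]
    return [
        frozenset((goal,) + combo)
        for k in range(len(others) + 1)
        for combo in combinations(others, k)
    ]

def r(time_cost, success_prob):
    """Hypothetical restriction function: expected time, assuming the
    action is retried until it succeeds."""
    return time_cost / success_prob

def choose(pending, goal, costs, max_cost):
    """Pick the largest candidate set whose total cost is affordable.

    costs: dict action -> (time, success probability).
    Falls back to the goal alone when nothing fits (assumption)."""
    affordable = [
        c for c in candidate_sets(pending, goal)
        if sum(r(*costs[a]) for a in c) <= max_cost
    ]
    return max(affordable, key=len, default=frozenset({goal}))
```

With the goal costing 10, a second action costing 40, a third costing 5, and a maximum cost of 20, this sketch selects the goal together with the third action.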

The decision-making module in relation to proceeds as follows:

8.3. Plan Inference

The planning module searches for the most efficient way to solve a set of and . Each element of implies a realignment in the position of the objects in the scenario, either carrying an object to another shelf or delivering it to a client.

Each is transformed into a list of basic actions of the form:

,

and each is transformed into a list of basic actions of the form:

,

where is the shelf containing the object according to the diagnosis module, and is the correct shelf, where should be according to the KB. All the lists are joined in a multiset of basic actions B.

The initial state of the search tree contains:

The current location of the robot ().

The actual state of the right hand (free or carrying the object ).

The actual state of the left hand (free or carrying the object ).

The list R of remaining to solve.

The multiset B of basic actions to solve the elements in R.

The list of basic actions of the plan P (still empty at this point).

The initial state is put on a list F of all the unexpanded nodes in the frontier of the tree. The search algorithm proceeds as follows:

Select one node to expand from F. The selection criterion is depth-first search (DFS). The cost and probability of each action in the current plan P of the node are used to compute a score.

When a node has been selected, a rigorous analysis of B is performed. For each basic action in B, check if the following preconditions are satisfied:

Two subsequent navigation moves are banned. If the action is a or a , discard if the last action of P is a or a .

Only useful observations. If the action is a , discard if the last action of P is a , , or if the robot currently holds objects in both hands.

Only deliveries after taking. If the action is , the action should be included previously in the plan.

Only take actions if at least one hand is free.

For each basic action of B not discarded by the preconditions, generate a successor node as follows:

If the basic action is or change the current location of the robot to s or the user position, respectively. If not, the current location of the robot in is the same as .

Update the state of the right and left hand if the basic action is a or a .

If the basic action was a , delete the associated element in the list R of remaining . If the list gets empty, a solution has been found.

Remove the basic action used to create this node from B.

Add the basic action to the plan P.

Return to step 1 to select a new node.
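The search loop above can be illustrated with a minimal depth-first sketch. The action labels (`move`, `move_user`, `search`, `take`, `deliver`), the `State` tuple, and the simplified preconditions are assumptions for the example; the score based on cost and probability is omitted, and the sketch does not check that an object is taken at the right location.

```python
from collections import namedtuple

# location, hands (two slots), remaining obligations R, multiset B, plan P
State = namedtuple("State", "location hands remaining actions plan")

NAV = {"move", "move_user"}

def allowed(action, state):
    """Simplified versions of the preconditions listed above."""
    kind, arg = action
    last = state.plan[-1][0] if state.plan else None
    if kind in NAV:                       # no two navigation moves in a row
        return last not in NAV
    if kind == "search":                  # only useful observations
        return last != "search" and None in state.hands
    if kind == "deliver":                 # holding it implies it was taken
        return arg in state.hands
    if kind == "take":                    # at least one hand must be free
        return None in state.hands
    return True

def successor(action, state):
    kind, arg = action
    location, hands, remaining = state.location, list(state.hands), state.remaining
    if kind == "move":
        location = arg
    elif kind == "move_user":
        location = "user"
    elif kind == "take":
        hands[hands.index(None)] = arg
    elif kind == "deliver":
        hands[hands.index(arg)] = None
        remaining = remaining - {arg}     # obligation solved
    actions = list(state.actions)
    actions.remove(action)                # consume one copy from B
    return State(location, tuple(hands), remaining, tuple(actions),
                 state.plan + (action,))

def plan_dfs(start):
    frontier = [start]                    # list F, used as a stack (DFS)
    while frontier:
        state = frontier.pop()
        if not state.remaining:           # R is empty: solution found
            return list(state.plan)
        children = [successor(a, state)
                    for a in dict.fromkeys(state.actions)
                    if allowed(a, state)]
        frontier.extend(reversed(children))
    return None
```

Because every successor removes one action from B, the depth of the tree is bounded by the size of B and the search always terminates.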

When a solution has been found in the tree, the plan P is post-processed to generate a list of actions specified in terms of basic behaviors, which can be used by the dispatcher. A video showing a demo of the robot Golem-III performing as a supermarket assistant, including all the features described in this section, is available at http://golem.iimas.unam.mx/inference-in-service-robots. The KB-system and the full Sitlog’s code are also available at https://bit.ly/deliberative-inference.

We could have employed a ready-to-use planning system for the implementation (e.g., Reference [65]) instead of the particular planning algorithm presented here, which we acknowledge is tailored to this particular scenario; however, our model is stated at the functional system level, as opposed to the particular algorithms used at the algorithmic level, and these two system levels should not be confused.

9. Conceptual Inference

This inference strategy is illustrated with a home scenario in which the robot plays the role of a butler, as follows:

The home has a number of rooms ; a finite set of shelves at their corresponding locations , which may be within any arbitrary room, each having an arbitrary number of objects of a particular designated class , the set of classes;

The human user, who may require assistance;

The human user has a child that plays at home and misplaces objects on wrong tables;

The robot has a number of conversational, task, and cognitive obligations, which are the same as in the supermarket scenario, but, in addition, it may provide the user plausible explanations about the causes of unexpected observations;

The user has a number of preferences that are stored in the robot’s KB, which can be changed dynamically; in addition, the robot may know about general health recommendations that can be deployed along the execution of a task.

The details of the implementation of the home scenario, including the use of the conditional defaults in the KB and the way they are managed, are explained in Appendix B. The roles played by the agents and the speech acts involved are analogous to those of the supermarket scenario. Additionally, in the home scenario, the robot is familiar with the human user, has knowledge about his or her preferences and/or habits, and may use this knowledge to assist him or her better. The robot’s behavior is proactive, and the task relies on a more extensive use of cooperative dialogues, where the robot may have conversational initiatives. A task-oriented conversation in this setting between the robot Golem-III and a human user named Luis is as follows:

1. Golem: Hi, Luis.

2. Golem: Please tell me what do you like best: malz or coke?

3. Luis: Hi, Golem. I like malz.

4. Golem: Excellent! Malz is much healthier than the coke!

5. Golem: What do you like best: noodles or biscuits?

6. Luis: I like noodles best.

7. Golem: Great! I will recall your choice!

8. Golem: Do you have any more preferences?

9. Luis: No thanks, Golem; that is ok.

10. Golem: Thank you, Luis.

At this point Luis goes to work and takes some time to be back; when he does Golem meets him at the door.

11. Golem: Hi, Luis, how was your day?

12. Luis: I had a bad day!

13. Golem: Sorry to hear that; do you want me to do something for you?

14. Luis: Bring me something to drink and biscuits.

15. Golem: Ok. I will bring you malz, your favorite drink.

16. Luis: Yes.

17. Golem: But you like noodles better than biscuits! Shall I bring you the biscuits?

18. Luis: No, Golem, noodles are ok.

19. Golem: Ok. I will bring you the malz and the noodles. I will get the malz.

Golem goes to the expected locations of the offered objects since they should be at their respective shelves, although there may be exceptions. So, Golem reaches the shelf of drinks where the malz should be placed and finds it.

20. Golem: Attempting to grab the malz with my left arm.

Golem grabs the malz.

21. Golem: I took the malz.

22. Golem: I will get the noodles.

Golem goes to the expected location of noodles, which is the table for food; however, the noodles are not there.

23. Golem: The noodles are not in the shelf of food.

Golem goes to the next preferred shelf for noodles, which is snacks; the noodles are found there.

24. Golem: Attempting to grab the noodles with my right arm.

Golem grabs the noodles.

25. Golem: I took the noodles.

Golem goes to the location where Luis is expected to be, which is the living room since he is back from work and tired after a bad day. Golem finds him there.

26. Golem: Here is the noodles.

Golem hands the noodles to Luis.

27. Golem: Here is the malz.

Golem hands the malz to Luis.

28. Golem: I found the noodles on the shelf of snacks, but it should be on the shelf of food; do you want me to change the preferred location of noodles to the shelf of snacks?

29. Luis: Yes, Golem, please.

30. Golem: Ok. I updated my KB with your new preference.

31. Golem: I also noticed that the coke is not in its right place.

32. Golem: I think that the explanation for this is that the coke was misplaced there by your child.

33. Golem: Do you want me to take it to its right shelf?

34. Luis: Yes, Golem, please.

35. Golem: Ok. I will take it to the shelf of drinks.

Golem goes to the shelf of snacks and takes the coke.

36. Golem: Attempting to grab the coke with my right arm.

37. Golem: I took the coke.

Golem goes to the shelf of drinks, places the coke there, and goes back to the living room with Luis.

38. Golem: I put the coke on its right shelf.

39. Golem: All the objects are placed on their right shelves.

40. Golem: The task is finished.

41. Golem: Goodbye.

This scenario could be implemented with the

, too; however, here, we use an alternative strategy that offers an additional perspective of the framework. This is based on the direct specification of speech act protocols defined in

. These are intentional structures in which performing a speech act establishes a number of conversational obligations that must be fulfilled before the dialogue proceeds to the next transaction. For instance, a command must be executed, and a question must be answered. The dialogue models are designed considering the user’s preferences, and the whole task-oriented conversation is modeled as the interpretation of one main protocol that embeds the goals of the task. The design of the dialogue models is loosely based on the notion of balanced transactions of the DIME-DAMSL annotation scheme [66].
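The idea that a speech act creates obligations that must be discharged before the dialogue moves on can be illustrated with a small sketch. The class, the obligation table, and the labels are hypothetical and serve only to show the notion of a balanced transaction; they are not the protocol specification used by the system.

```python
class Transaction:
    """Tracks the conversational obligations opened by speech acts."""

    # Assumed mapping from speech act type to the obligation it creates.
    OBLIGATIONS = {"command": "execute", "question": "answer"}

    def __init__(self):
        self.pending = []

    def perform(self, speech_act):
        """Register a speech act and the obligation it establishes."""
        obligation = self.OBLIGATIONS.get(speech_act)
        if obligation is not None:
            self.pending.append(obligation)

    def fulfil(self, response):
        """Discharge one matching obligation, if any is pending."""
        if response in self.pending:
            self.pending.remove(response)

    @property
    def balanced(self):
        """The dialogue may proceed only when nothing is pending."""
        return not self.pending
```

In this sketch, a question leaves the transaction unbalanced until an answer is registered, after which the dialogue can proceed to the next transaction.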