An Improved VGG19 Transfer Learning Strip Steel Surface Defect Recognition Deep Neural Network Based on Few Samples and Imbalanced Datasets

Abstract

1. Introduction

1.1. The Significance and Development of Strip Surface Defect Detection

1.2. The Practical Difficulties of Using the Machine Vision Surface Detection Technique on Imbalanced Datasets

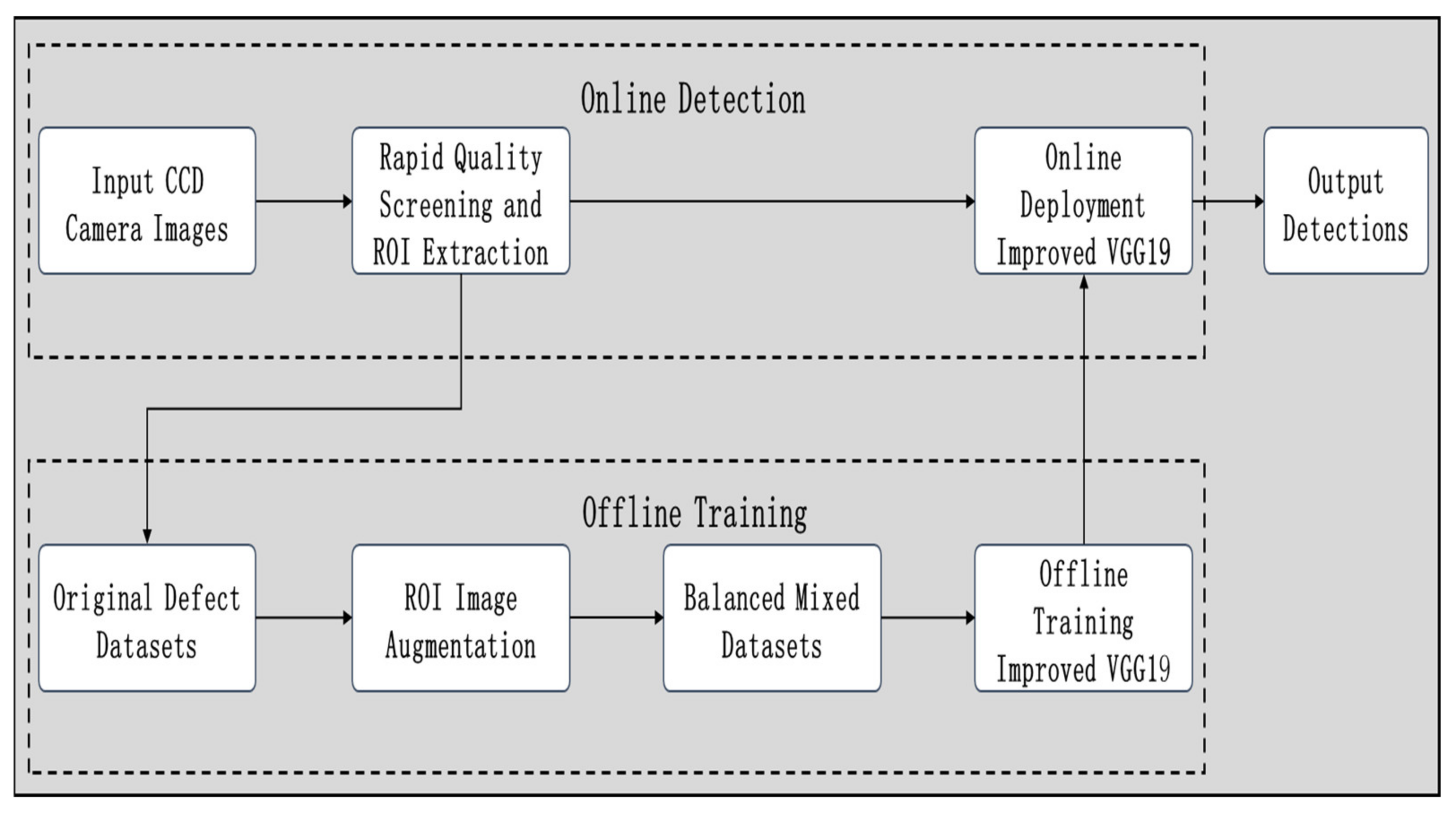

1.3. Scope of Our Work and Contribution

2. Related Work

2.1. Categories of Surface Defect Detection Algorithms

2.2. Levels of Imbalanced Learning Algorithms

2.3. The Development of Deep Learning on Few Samples and Imbalanced Datasets

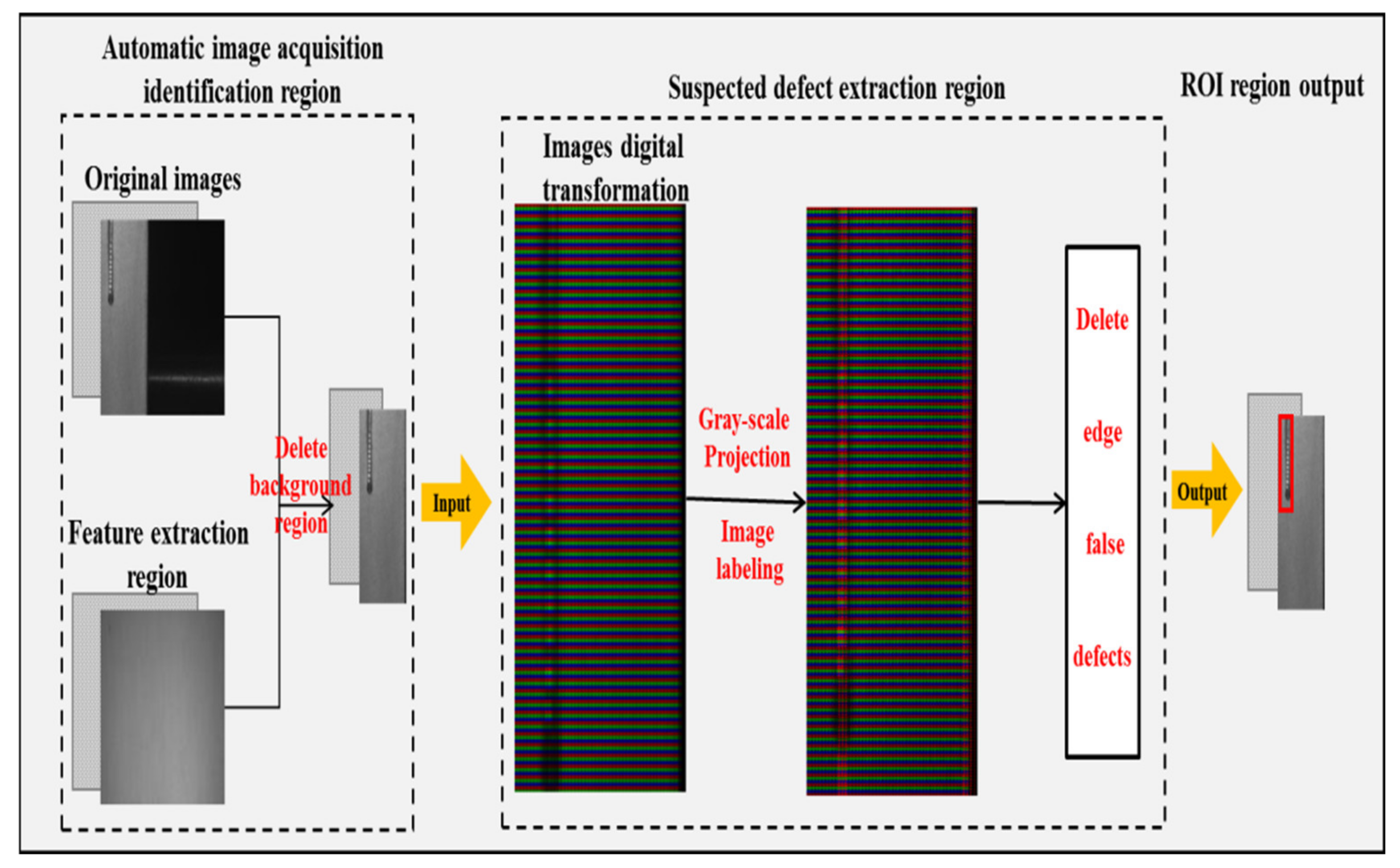

3. Rapid Quality Screening and Defect Feature Extraction Algorithm on the Strip Steel Surface

3.1. Rapid Quality Screening Problems on the Strip Steel Surface

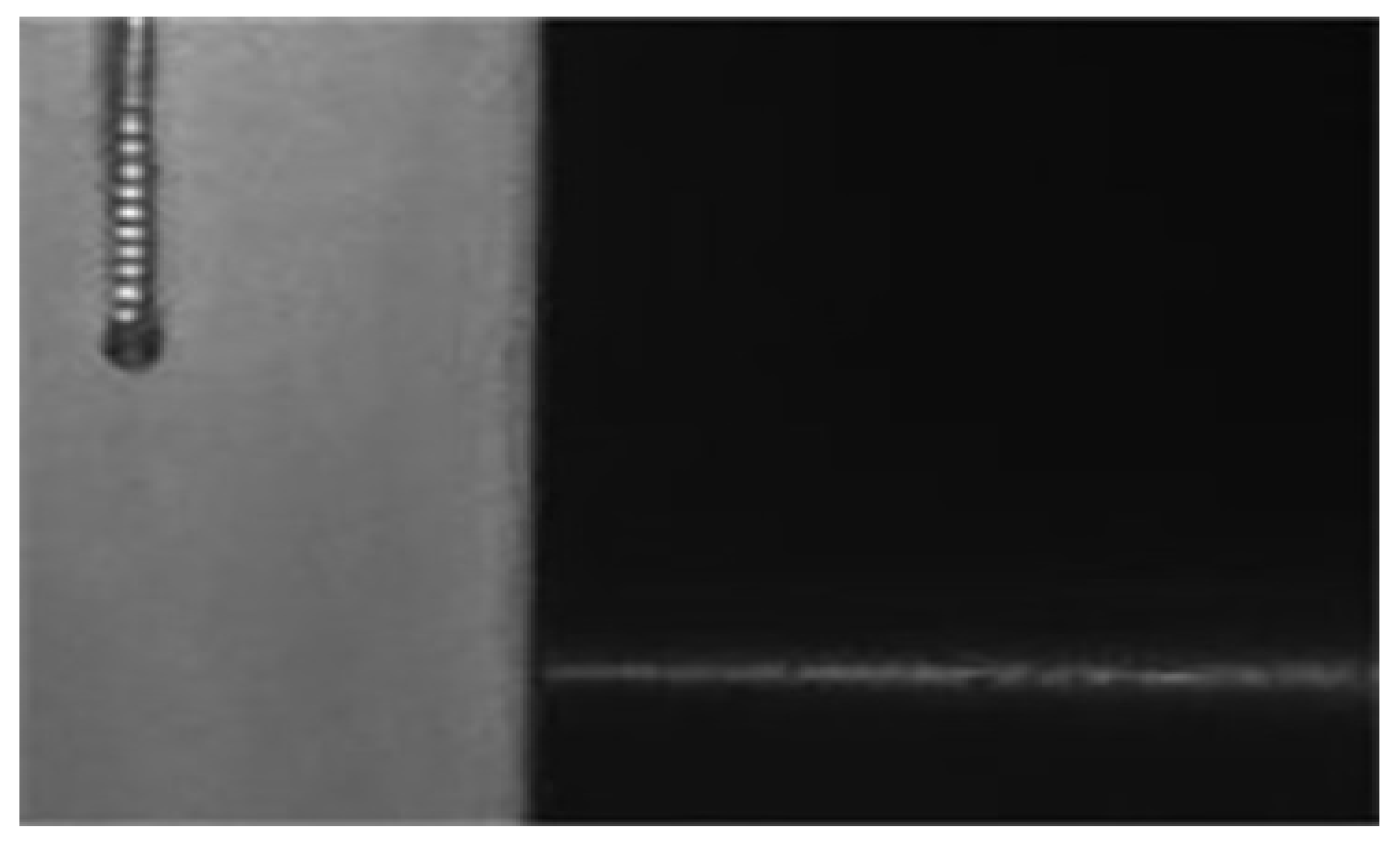

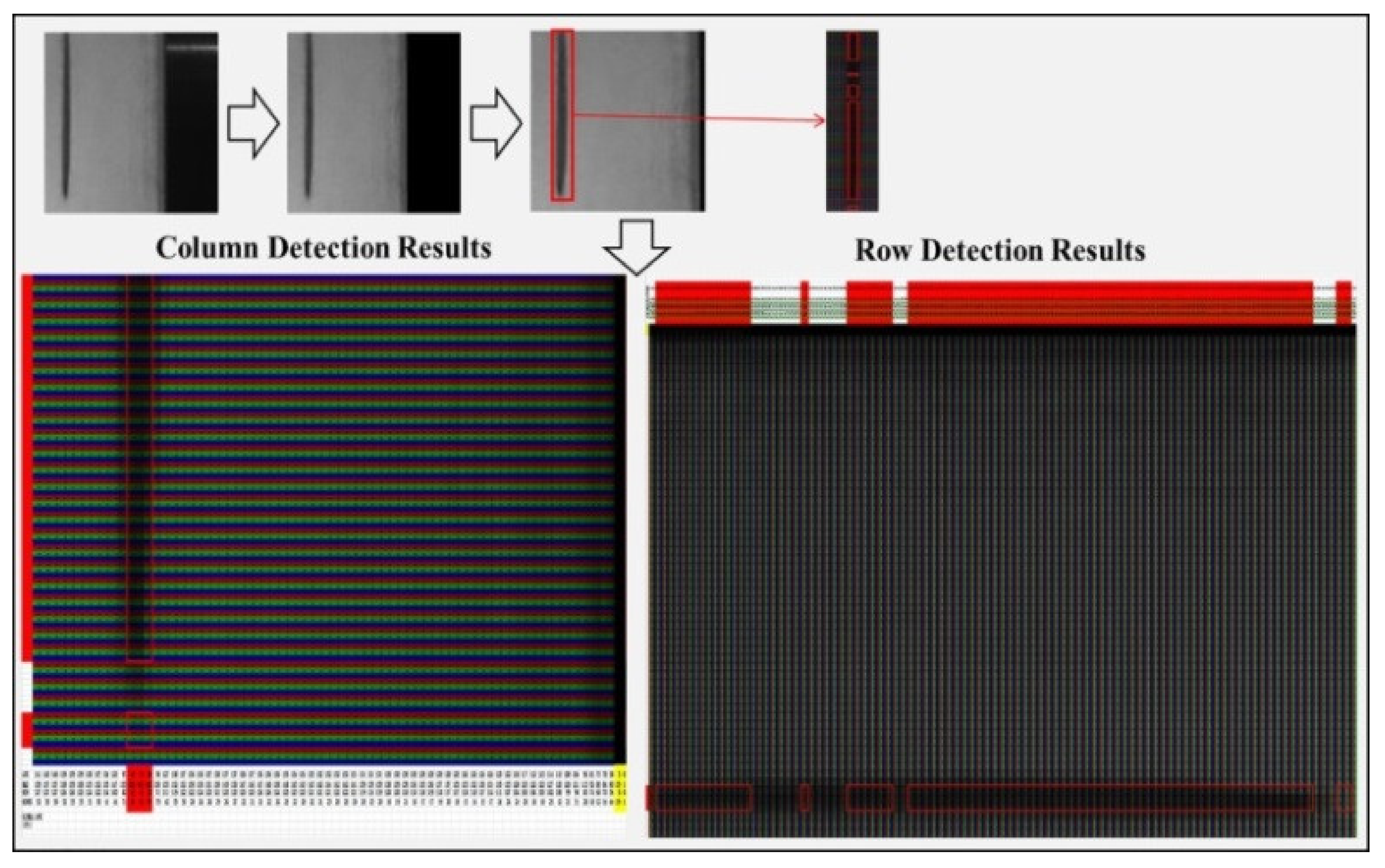

3.2. Strip Steel Edge and Background Region Automatic Detection

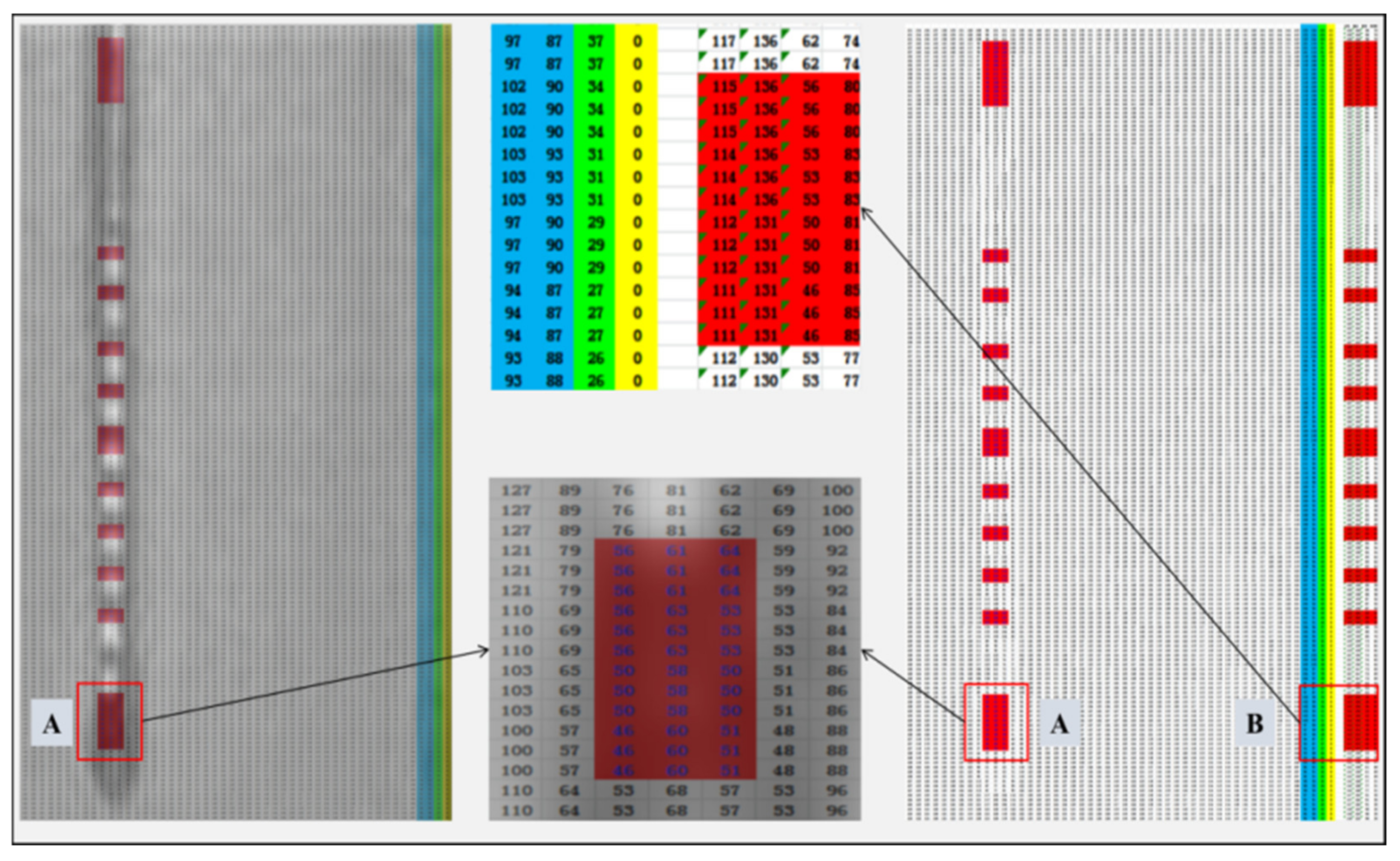

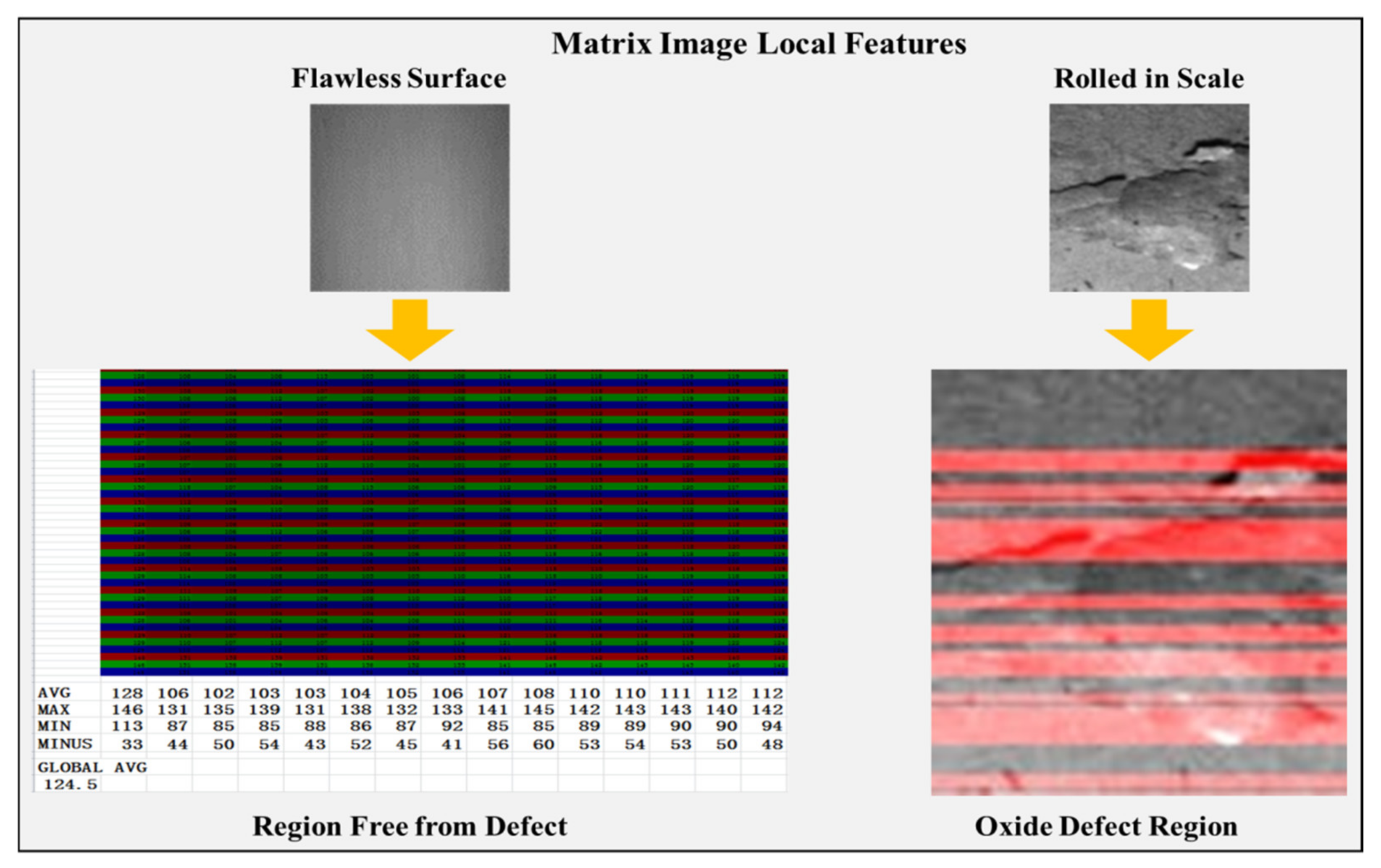

3.3. An Improved Strip Steel Surface Rapid Quality Screening and Defect Feature Extraction Algorithm Based on Gray-Scale Projection

| Algorithm 1 Rapid Quality Screening and Defect Feature Extraction Algorithm |
|---|
| Input: original image matrix f(i, j), Size = M × N, i ∈ [0, M − 1], j ∈ [0, N − 1] |
| Output: ROI Defect Region |
| Initialize: empty matrix R[M], Size = M × 1; empty matrix C[N], Size = 1 × N |
| Step 1. Calculate Initial Values |
| for int i = 0; i ≤ M − 1; i++ △ Traverse the matrix row by row (fix the row first) |
| for int j = 0; j ≤ N − 1; j++ △ Iterate through the columns of row i |
| f(i, j), Size = 1 × N △ The gray levels of row i |
| RMax = Max(f(i, j)), Size = 1 × 1 |
| RMin = Min(f(i, j)), Size = 1 × 1 |
| RAvg = Sum(f(i, j))/N, Size = 1 × 1 |
| Store RAvg in R[i] |
| Return R[M], Size = M × 1 |
| △ The same procedure applied column by column (CMax, CMin, CAvg = Sum(f(i, j))/M) yields C[N], Size = 1 × N |
| GlobalAvg[M, N] = Sum(R[M])/M or Sum(C[N])/N, Size = 1 × 1 |
| Step 2. Confirm Detection Region |
| if RAvg ≪ GlobalAvg[M, N] or CAvg ≪ GlobalAvg[M, N] △ The row or column belongs to the background |
| Delete the row or column with the low projection value |
| Add the remaining area to the Confirmed Detection Region |
| Step 3. Suspected Defect Region |
| △ Contains the Defect Region, the Transform Region, and the False Defect Region |
| if (1 − µ)·GlobalAvg[M, N] ≤ RMax − RMin ≤ (1 + µ)·GlobalAvg[M, N] |
| or (1 − µ)·GlobalAvg[M, N] ≤ CMax − CMin ≤ (1 + µ)·GlobalAvg[M, N] |
| else if 1.5·GlobalAvg[M, N] ≤ RAvg ≤ 2·GlobalAvg[M, N] or 1.5·GlobalAvg[M, N] ≤ CAvg ≤ 2·GlobalAvg[M, N] |
| Find the Highlighted Defective ROI Region |
| Add the remaining area to the Suspected Defect Region |
| Step 4. Detect Transform Region |
| if RAvg < (1 − 2µ)·GlobalAvg[M, N] or CAvg < (1 − 2µ)·GlobalAvg[M, N] |
| Find the Transform Region (T-Region) △ The region between the strip steel edge and the background |
| Delete the T-Region |
| Step 5. False Defect Region |
| Locate the T-Region |
| Extend the T-Region to twice its length along the horizontal and vertical coordinates |
| Delete the False Defect Region |
| Step 6. Output ROI Defect Region = the final remaining area + the Highlighted Defective ROI Region |
| return ROI Defect Region |
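Steps 1 to 4 of Algorithm 1 can be sketched in pure Python as follows. The sketch classifies each row index, and the same function can be applied to the column statistics. Several choices here are assumptions, not values given by the algorithm: µ = 0.2; the "≪" test of Step 2 is read as Avg < 0.5·GlobalAvg (the hypothetical parameter `bg_ratio`); and the if-branch of Step 3 is read as marking a line as suspected. Steps 5 and 6, which extend the T-Region to remove false defects and assemble the final ROI, are left out.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]           # gray levels f(i, j): M rows x N columns
Stats = Tuple[float, float, float]  # (Max, Min, Avg) of one row or column


def projection_stats(img: Image) -> Tuple[List[Stats], List[Stats], float]:
    """Step 1: gray-scale projection statistics for every row and column."""
    m, n = len(img), len(img[0])
    rows = [(max(r), min(r), sum(r) / n) for r in img]   # R[M]: RMax, RMin, RAvg
    cols = []
    for j in range(n):
        col = [img[i][j] for i in range(m)]
        cols.append((max(col), min(col), sum(col) / m))   # C[N]: CMax, CMin, CAvg
    global_avg = sum(avg for _, _, avg in rows) / m        # GlobalAvg[M, N]
    return rows, cols, global_avg


def screen_axis(stats: List[Stats], g: float, mu: float = 0.2,
                bg_ratio: float = 0.5) -> Dict[str, List[int]]:
    """Steps 2-4 applied to the rows (or the columns) of one image.

    mu and bg_ratio are hypothetical defaults: Algorithm 1 gives no value
    for mu, and bg_ratio stands in for the unquantified "<<" of Step 2.
    """
    out: Dict[str, List[int]] = {
        "background": [], "transform": [], "suspected": [], "highlighted": []}
    for k, (mx, mn, avg) in enumerate(stats):
        if avg < bg_ratio * g:
            out["background"].append(k)               # Step 2: background line
        elif avg < (1 - 2 * mu) * g:
            # Step 4: T-Region line; tested before Step 3 so it is never
            # reported as suspected (same result as deleting it afterwards).
            out["transform"].append(k)
        elif (1 - mu) * g <= mx - mn <= (1 + mu) * g:
            out["suspected"].append(k)                # Step 3: suspected line
        elif 1.5 * g <= avg <= 2 * g:
            out["highlighted"].append(k)              # Step 3: highlighted ROI
    return out


# Example: a 6 x 4 image with a dark background row, an edge row,
# a row containing a defect, and a bright row.
img = [[0] * 4, [55] * 4, [120] * 4, [70, 170, 120, 120], [160] * 4, [120] * 4]
rows, cols, g = projection_stats(img)
print(round(g, 2), screen_axis(rows, g))
# → 95.83 {'background': [0], 'transform': [1], 'suspected': [3], 'highlighted': [4]}
```

On real frames, each per-line statistic is a single NumPy reduction (`img.max(axis=1)`, `img.min(axis=1)`, `img.mean(axis=1)`, with `axis=0` for columns). Because each one is a single pass over the pixels, this screening step stays cheap.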

3.4. Strip Steel Surface Rapid Quality Screening and Defect Feature Extraction Experiment

4. ROI Image Augmentation Algorithm for Strip Steel Defects

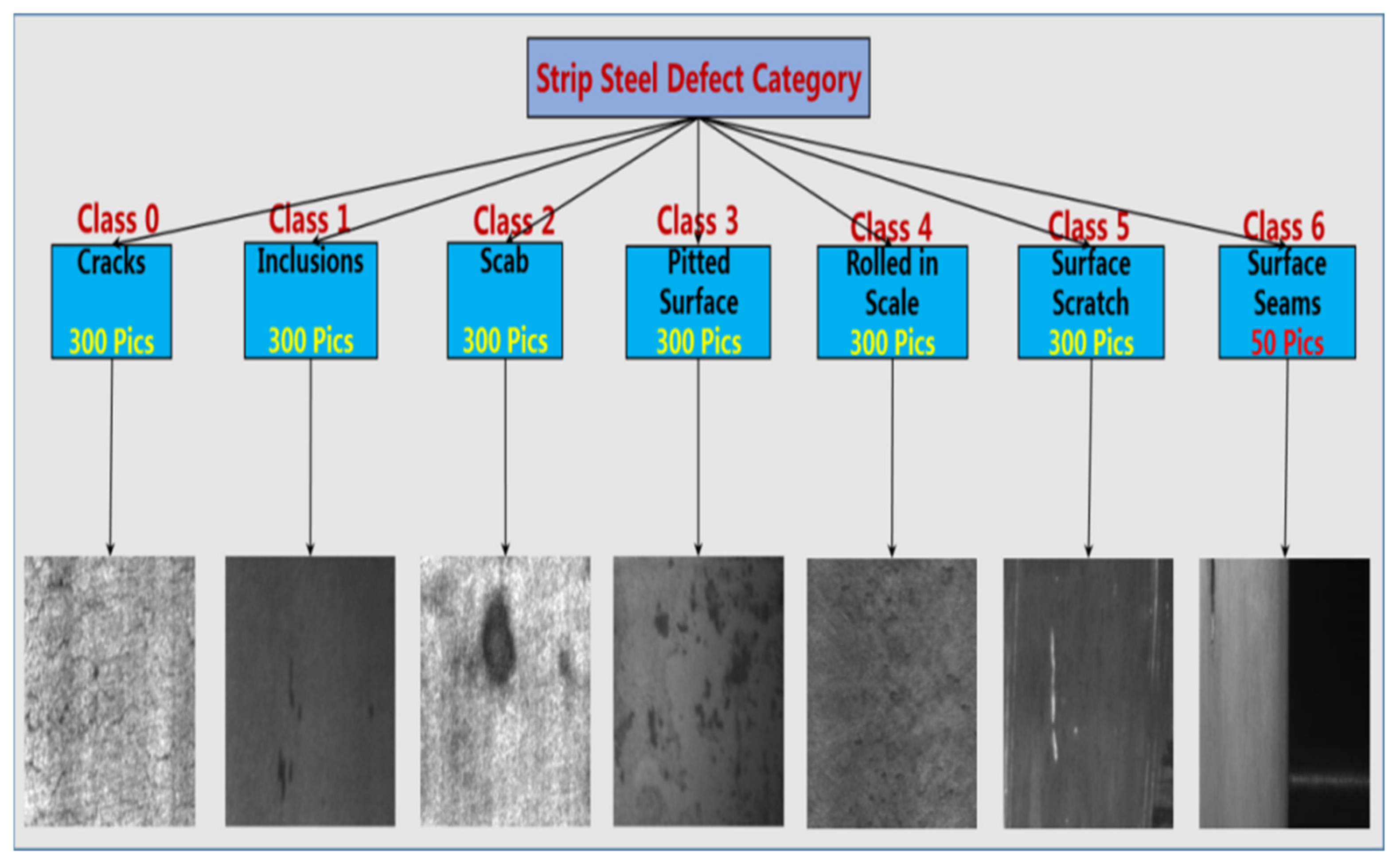

4.1. Category Imbalance Problem for Strip Steel Surface Defects

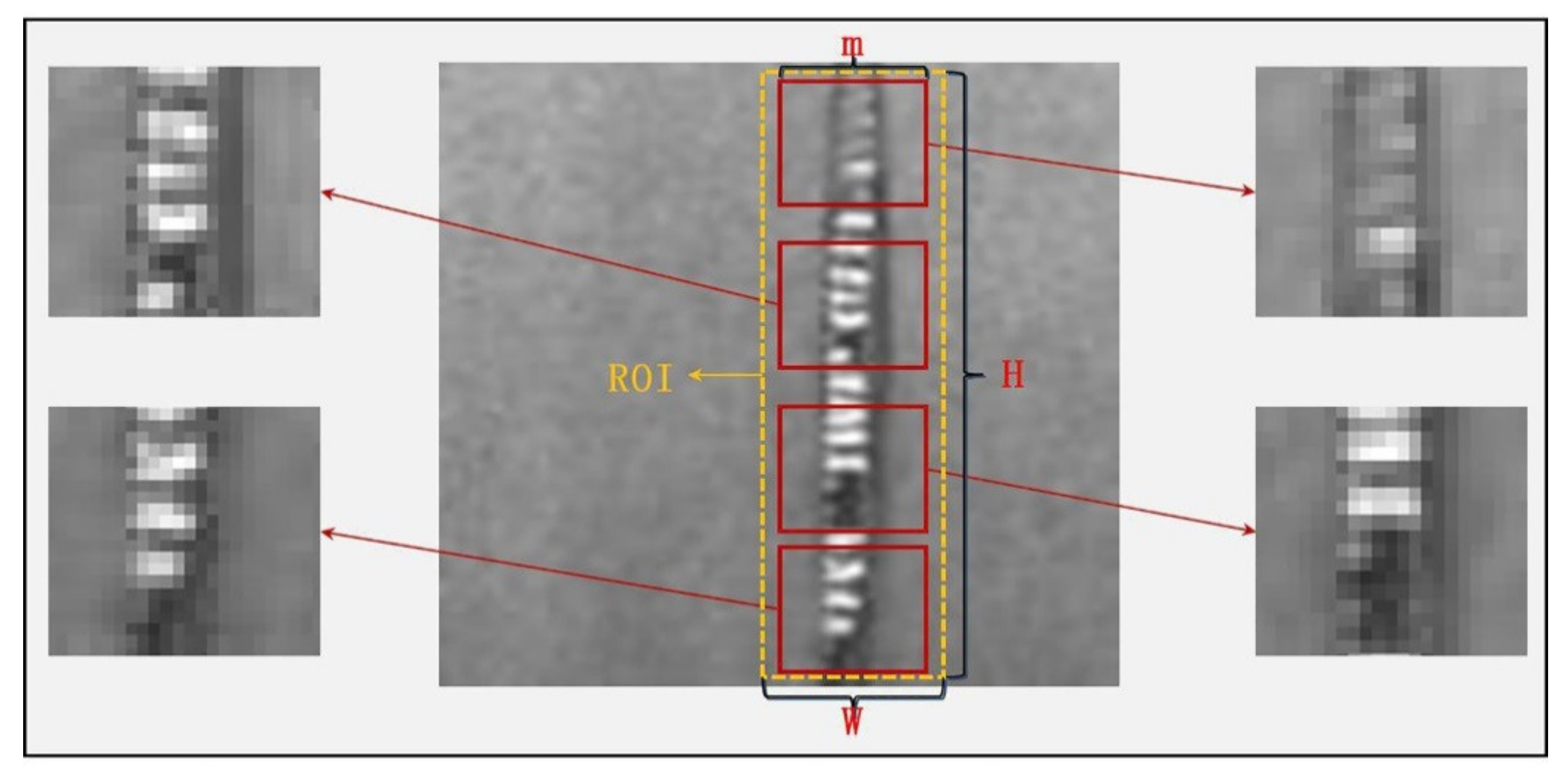

4.2. Industrial Image Data Augmentation Algorithm Based on ROI Region Random Cropping

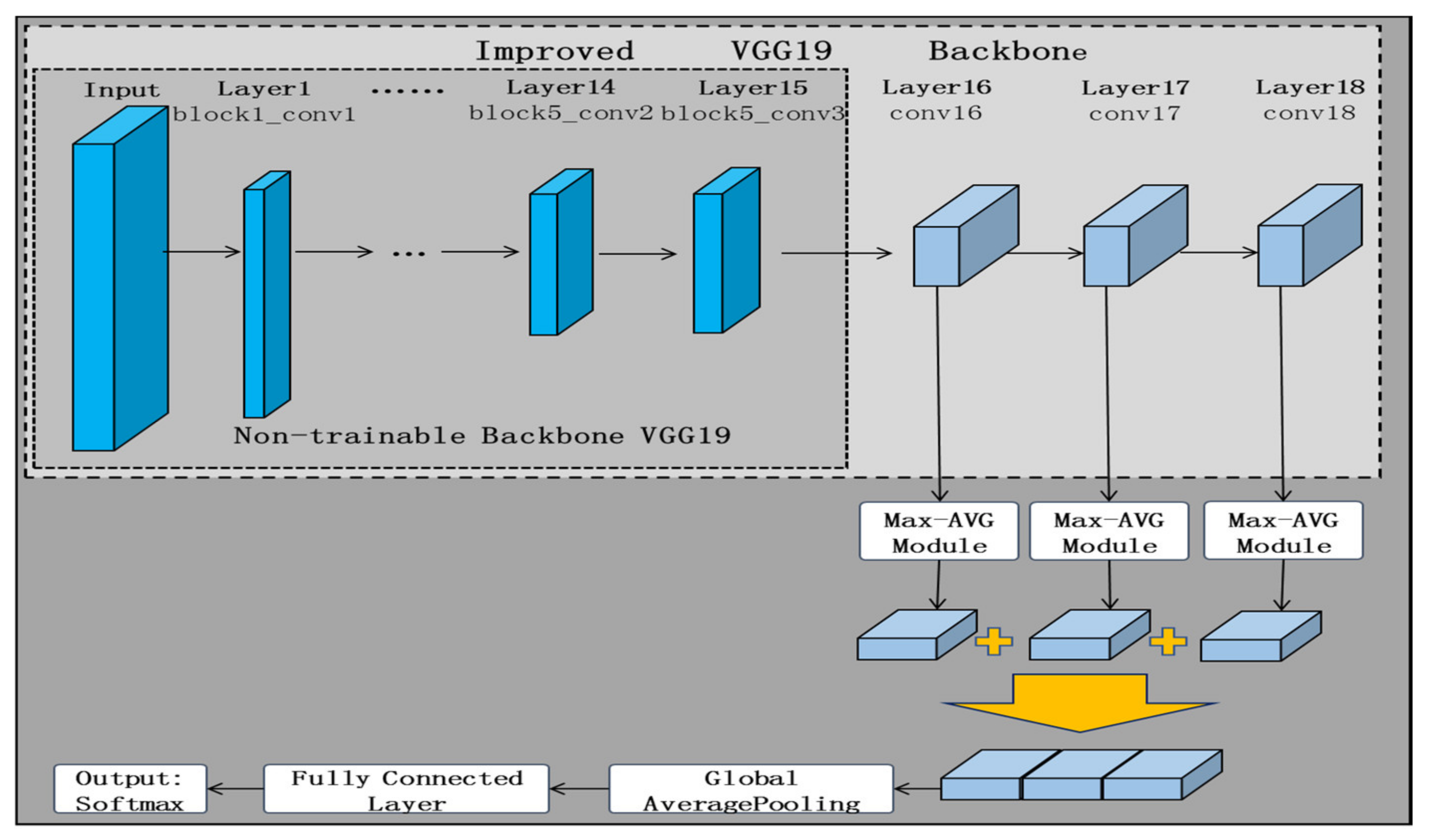

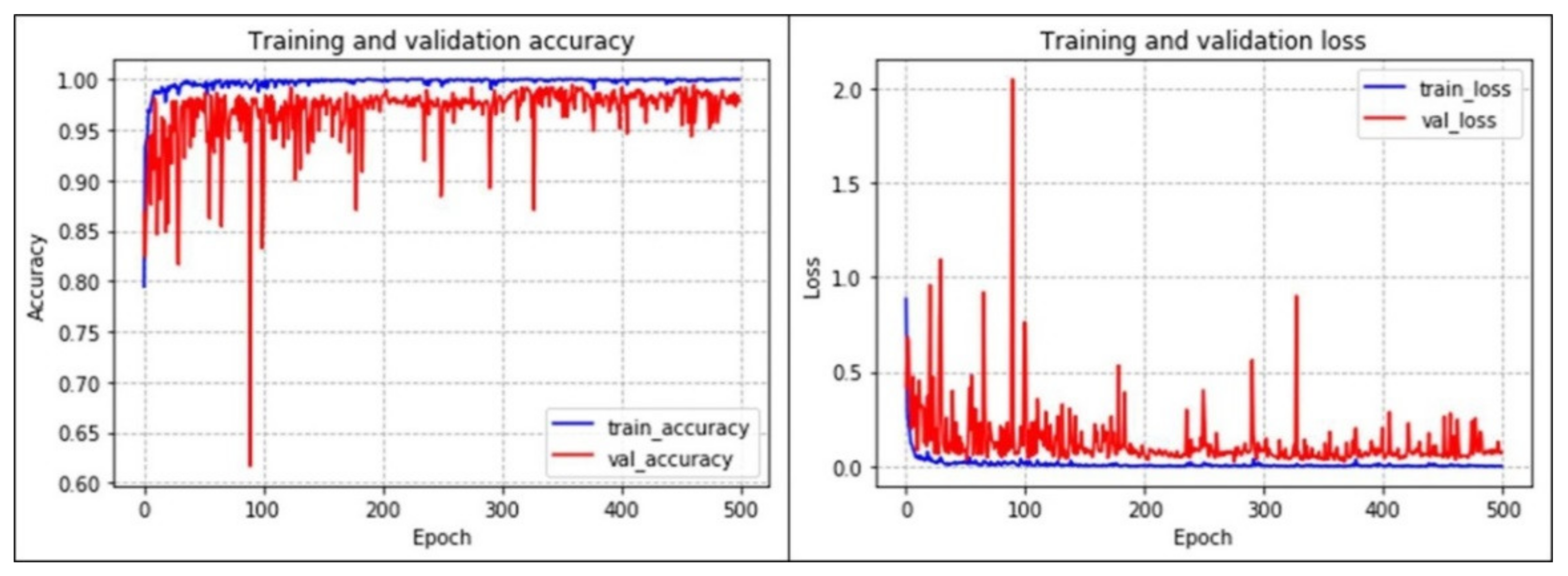
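ROI-based random cropping can be sketched as follows. This reading is an assumption rather than the paper's stated procedure. Fixed-size windows are cut at random positions, with the constraint that every window fully contains the defect ROI (for instance, the ROI Defect Region produced by Algorithm 1). Each crop is therefore a new training image that still shows the whole defect. The helper name, the box convention, and the sizes in the example are hypothetical.

```python
import random
from typing import Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def roi_random_crop(img_h: int, img_w: int, roi: Box, crop_h: int,
                    crop_w: int, rng: random.Random) -> Box:
    """Sample a crop_h x crop_w window that stays inside the image and fully
    contains the ROI box. Hypothetical helper, not the paper's procedure."""
    top, left, bottom, right = roi
    if not (0 <= top < bottom <= img_h and 0 <= left < right <= img_w):
        raise ValueError("ROI must lie inside the image")
    if bottom - top > crop_h or right - left > crop_w:
        raise ValueError("crop is smaller than the ROI")
    if crop_h > img_h or crop_w > img_w:
        raise ValueError("crop is larger than the image")
    # Feasible top-left corners: the crop must start at or before the ROI,
    # end at or after it, and not cross the image border.
    y = rng.randint(max(0, bottom - crop_h), min(top, img_h - crop_h))
    x = rng.randint(max(0, right - crop_w), min(left, img_w - crop_w))
    return y, x, y + crop_h, x + crop_w


# Example: five augmented windows around one defect ROI (illustrative sizes).
rng = random.Random(0)
crops = [roi_random_crop(200, 200, (80, 90, 120, 150), 64, 96, rng)
         for _ in range(5)]
print(crops)
```

The sketch samples only among feasible corners instead of rejecting crops that cut through the ROI, so each sample costs exactly one draw. One ROI yields at most (crop_h − ROI height + 1) × (crop_w − ROI width + 1) distinct windows, so crops only slightly larger than the ROI add little variety.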

5. Strip Steel Surface Defect Recognition Deep Neural Network Based on Transfer Learning

5.1. Image Detection Problem with Low Resolution and Few Samples

5.2. Transfer Learning Deep Neural Network Based on VGG19

5.3. A Transfer Learning Deep Neural Network Based on Improved VGG19

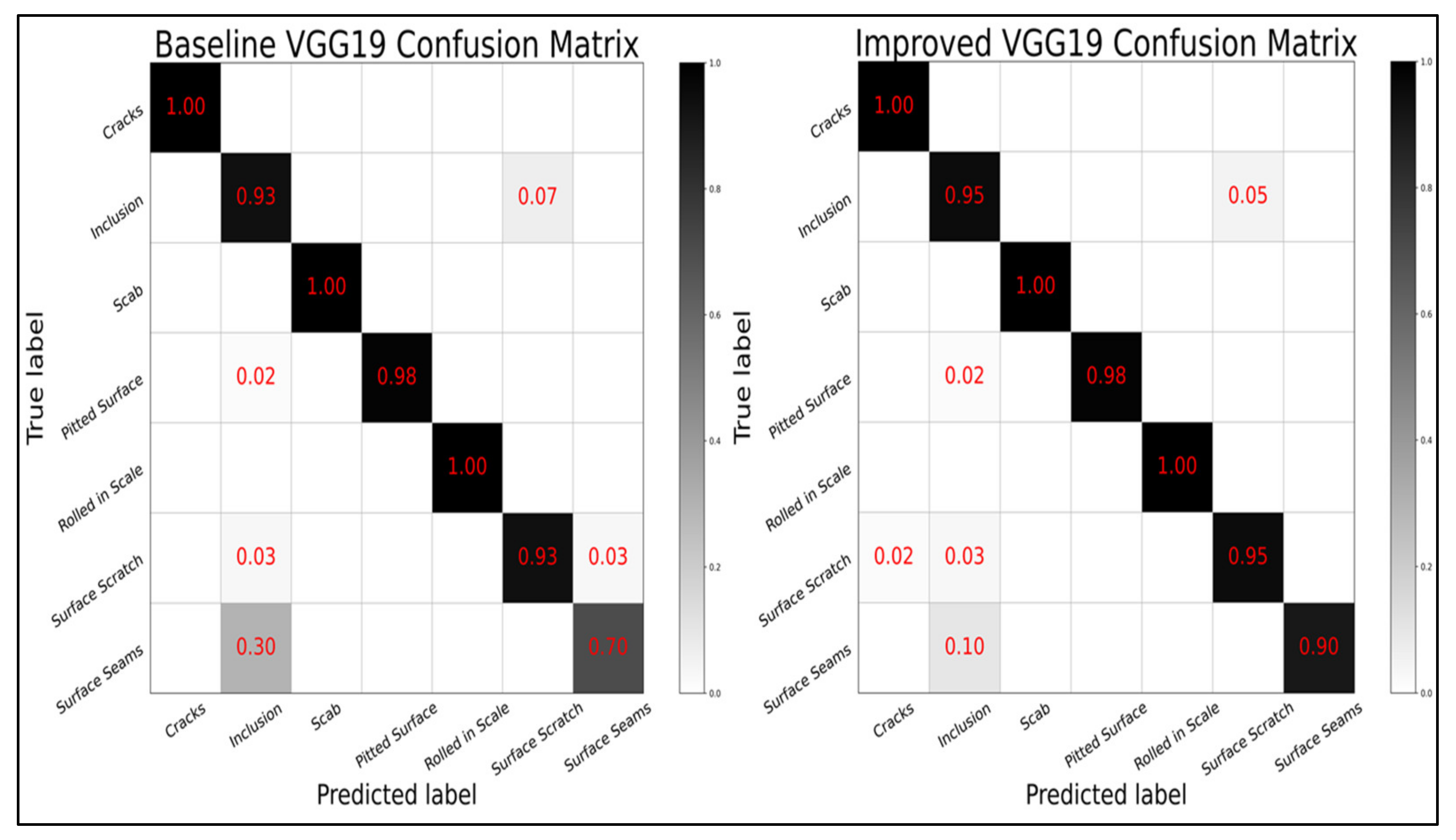

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| A-Fast-RCNN | Adversarial Fast Region-based Convolutional Neural Network |
| BMP | Bitmap |
| CCD | Charge-Coupled Device |
| CNN | Convolutional Neural Network |
| CAE-SGAN | Convolutional Autoencoder Extract and Semi-supervised Generative Adversarial Networks |
| DNN | Deep Neural Network |
| FCN | Fully Convolutional Network |
| FPS | Frames Per Second |
| GANs | Generative Adversarial Networks |
| GHM | Gradient Harmonizing Mechanism |
| HSVM-MC | Multi-label Classifier with Hyper-sphere Support Vector Machine |
| HCGA | Hybrid Chromosome Genetic Algorithm |
| MFN | Multilevel Feature Fusion Network |
| NEU | Northeastern University |
| M-Pooling CNN | Max-Pooling Convolutional Neural Network |
| OHEM | Online Hard Example Mining |
| PLCNN | Convolutional Neural Network based on Pseudo-Labels |
| ROI | Region of Interest |
| ResNet | Residual Network |
| RAdam | Rectified Adam |
| S-OHEM | Stratified Online Hard Example Mining |
| SVM | Support Vector Machine |
| SGD | Stochastic Gradient Descent |
| VGG | Very Deep Convolutional Networks designed by the Visual Geometry Group |
| YOLO | You Only Look Once |

References

- Wu, P.; Lu, T.; Wang, Y. Nondestructive testing technique for strip surface defects and its applications. Nondestruct. Test. 2000, 22, 312–315.

- Tian, S.; Xu, K. An Algorithm for Surface Defect Identification of Steel Plates Based on Genetic Algorithm and Extreme Learning Machine. Metals 2017, 7, 311.

- Srinivasan, K.; Dastoor, P.H.; Radhakrishnaiah, P.; Jayaraman, S. FDAS: A Knowledge-based Framework for Analysis of Defects in Woven Textile Structures. J. Text. Inst. 1992, 83, 431–448.

- Yin, X.; Yu, X.; Sohn, K.; Liu, X.; Chandraker, M. Feature Transfer Learning for Face Recognition with Under-Represented Data. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5697–5706.

- Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-Balanced Loss Based on Effective Number of Samples. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9260–9269.

- Fang, X.; Luo, Q.; Zhou, B.; Li, C.; Tian, L. Research Progress of Automated Visual Surface Defect Detection for Industrial Metal Planar Materials. Sensors 2020, 20, 5136.

- Shi, T.; Kong, J.; Wang, X.; Liu, Z.; Zheng, G. Improved sobel algorithm for defect detection of rail surfaces with enhanced efficiency and accuracy. J. Cent. South. Univ. 2016, 23, 2867–2875.

- Ma, Y.; Li, Q.; Zhou, Y.; He, F.; Xi, S. A surface defects inspection method based on multidirectional gray-level fluctuation. Int. J. Adv. Robot. Syst. 2017, 14, 1–17.

- Ojala, T.; Pietikainen, M.; Harwood, D. A comparative study of texture measures with classification based on feature distributions. Pattern Recognit. 1996, 29, 51–59.

- Jeon, Y.; Yun, J.; Choi, D.; Kim, S.W. Defect detection algorithm for corner cracks in steel billet using discrete wavelet transform. In Proceedings of the ICROS-SICE International Joint Conference, Fukuoka, Japan, 18–21 August 2009; pp. 2769–2773.

- Hu, H.; Liu, Y.; Liu, M.; Nie, L. Surface Defect Classification in Large-scale Strip Steel Image Collection via Hybrid Chromosome Genetic Algorithm. Neurocomputing 2016, 181, 86–95.

- Yazdchi, M.; Yazdi, M.; Mahyari, A.G. Steel surface defect detection using texture segmentation based on multifractal dimension. In Proceedings of the International Conference on Digital Image Processing (ICDIP), Bangkok, Thailand, 7–9 March 2009; pp. 346–350.

- Mandriota, C.; Nitti, M.; Ancona, N.; Stella, E.; Distante, A. Filter-based feature selection for rail defect detection. Mach. Vis. Appl. 2004, 15, 179–185.

- Tang, B. Steel Surface Defect Recognition Based on Support Vector Machine and Image Processing. China Mech. Eng. 2011, 22, 1402–1405.

- Chu, M.; Zhao, J.; Gong, R.; Liu, L. Steel surface defects recognition based on multi-label classifier with hyper-sphere support vector machine. In Proceedings of the Control and Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 3276–3281.

- Kwon, B.K.; Won, J.S.; Kang, D.J. Fast defect detection for various types of surfaces using random forest with VOV features. Int. J. Precis. Eng. Manuf. 2015, 16, 965–970.

- Masci, J.; Meier, U.; Ciresan, D.; Schmidhuber, J.; Fricout, G. Steel defect classification with Max-Pooling Convolutional Neural Networks. In Proceedings of the 2012 International Joint Conference on Neural Networks (IJCNN), Brisbane, QLD, Australia, 10–15 June 2012; pp. 1–6.

- Gao, Y.; Gao, L.; Li, X.; Yan, X. A semi-supervised convolutional neural network-based method for steel surface defect recognition. Robot. Comput. Integr. Manuf. 2020, 61, 101825.

- Li, J.; Su, Z.; Geng, J.; Yin, Y. Real-time Detection of Steel Strip Surface Defects Based on Improved YOLO Detection Network. IFAC-Pap. 2018, 51, 76–81.

- Geirhos, R.; Rubisch, P.; Michaelis, C.; Bethge, M.; Wichmann, F.A.; Brendel, W. ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. In Proceedings of the International Conference on Learning Representations (ICLR 2019), New Orleans, LA, USA, 6–9 May 2019.

- Kim, S.; Kim, W.; Noh, Y.K.; Park, F.C. Transfer learning for automated optical inspection. In Proceedings of the International Joint Conference on Neural Networks, Anchorage, AK, USA, 14–19 May 2017; pp. 2517–2524.

- He, Y.; Song, K.; Meng, Q.; Yan, Y. An End-to-End Steel Surface Defect Detection Approach via Fusing Multiple Hierarchical Features. IEEE Trans. Instrum. Meas. 2020, 69, 1493–1504.

- Dung, C.V.; Anh, L.D. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2018, 99, 52–58.

- Han, T.; Liu, C.; Yang, W.; Jiang, D. Deep transfer network with joint distribution adaptation: A new intelligent fault diagnosis framework for industry application. ISA Trans. 2020, 97, 269–281.

- Mun, S.; Shin, M.; Shon, S.; Kim, W.; Han, D.K.; Ko, H. DNN Transfer learning based non-linear feature extraction for acoustic event classification. IEICE Trans. Inf. Syst. 2017, 100, 2249–2252.

- Qureshi, A.S.; Khan, A.; Zameer, A.; Usman, A. Wind power prediction using deep neural network based meta regression and transfer learning. Appl. Soft Comput. 2017, 58, 742–755.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.

- Zhang, J.; Mani, I. KNN Approach to Unbalanced Data Distributions: A Case Study Involving Information Extraction. In Proceedings of the ICML’2003 Workshop on Learning from Imbalanced Datasets, Washington, DC, USA, 21 August 2003.

- He, H.; Bai, Y.; Garcia, E.A.; Li, S. ADASYN: Adaptive Synthetic Sampling Approach for Imbalanced Learning. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 1322–1328.

- Batista, G.E.; Prati, R.C.; Monard, M.C. A study of the behavior of several methods for balancing machine learning training data. ACM Sigkdd Explor. Newsl. 2004, 6, 20.

- Elkan, C. The foundations of cost-sensitive learning. In Proceedings of the Seventeenth International Joint Conference on Artificial Intelligence (IJCAI), Seattle, WA, USA, 4–10 August 2001; pp. 973–978.

- Liu, X.Y.; Zhou, Z.H. The Influence of Class Imbalance on Cost-Sensitive Learning: An Empirical Study. In Proceedings of the 6th IEEE International Conference on Data Mining (ICDM 2006), Hong Kong, China, 18–22 December 2006.

- Wang, S.; Yao, X. Diversity Analysis on Imbalanced Data Sets by Using Ensemble Models. In Proceedings of the IEEE Symposium on Computational Intelligence and Data Mining, CIDM 2009, part of the IEEE Symposium Series on Computational Intelligence 2009, Nashville, TN, USA, 30 March–2 April 2009.

- Liu, X.Y.; Wu, J.; Zhou, Z.H. Exploratory Undersampling for Class-Imbalance Learning. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2009, 39, 539–550.

- Shrivastava, A.; Gupta, A.; Girshick, R. Training Region-Based Object Detectors with Online Hard Example Mining. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 761–769.

- Li, M.; Zhang, Z.; Yu, H.; Chen, X.; Li, D. S-OHEM: Stratified Online Hard Example Mining for Object Detection. In Proceedings of the Chinese Conference on Computer Vision, Tianjin, China, 11–14 October 2017.

- Wang, X.; Shrivastava, A.; Gupta, A. A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3039–3048.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Li, B.; Liu, Y.; Wang, X. Gradient Harmonized Single-stage Detector. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI2019), Hilton Hawaiian Village, Honolulu, HI, USA, 27 January–1 February 2019.

- NEU Surface Defect Database. Available online: http://faculty.neu.edu.cn/yunhyan/NEU_surface_defect_database.html (accessed on 8 January 2021).

- Springer Nature Editorial. More accountability for big-data algorithms. Nature 2016, 537, 449.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. DropBlock: A regularization method for convolutional networks. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 2–8 December 2018.

- Singh, K.K.; Lee, Y.J. Hide-and-Seek: Forcing a Network to be Meticulous for Weakly-supervised Object and Action Localization. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Zhao, Z.; Li, B.; Dong, R. A Surface Defect Detection Method Based on Positive Samples. In Proceedings of the 15th Pacific Rim International Conference on Artificial Intelligence (PRICAI), Nanjing, China, 28–31 August 2018.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting Unreasonable Effectiveness of Data in Deep Learning Era. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Liu, L.; Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Han, J. On the Variance of the Adaptive Learning Rate and Beyond. In Proceedings of the International Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020.

- He, D.; Xu, K.; Zhou, P.; Zhou, D. Surface defect classification of steels with a new semi-supervised learning method. Opt. Lasers Eng. 2019, 117, 40–48.

- Kostenetskiy, P.; Alkapov, R.; Vetoshkin, N.; Chulkevich, R.; Napolskikh, I.; Poponin, O. Real-time system for automatic cold strip surface defect detection. FME Trans. 2019, 47, 765–774.

| Defects | cracks | inclusions | scab | pitted surface | rolled in scale | surface scratch | surface seams |
|---|---|---|---|---|---|---|---|
| Samples | 240 | 240 | 240 | 240 | 240 | 240 | 40 |
| Accuracy | 83.8% | 0.83% | 2.5% | 1.7% | 1.3% | 87.5% | 90.0% |

| Defects | Precision | Recall | F1-Score | Samples |
|---|---|---|---|---|
| Baseline VGG19 Network | | | | |
| Cracks | 1.0000 | 1.0000 | 1.0000 | 60 |
| Inclusion | 0.9032 | 0.9333 | 0.9180 | 60 |
| Scab | 1.0000 | 1.0000 | 1.0000 | 60 |
| Pitted Surface | 1.0000 | 0.9833 | 0.9916 | 60 |
| Rolled in Scale | 1.0000 | 1.0000 | 1.0000 | 60 |
| Surface Scratch | 0.9333 | 0.9333 | 0.9333 | 60 |
| Surface Seams | 0.7778 | 0.7000 | 0.7368 | 10 |
| Weighted Avg | 0.9675 | 0.9676 | 0.9674 | 370 |
| Improved VGG19 Network | | | | |
| Cracks | 0.9836 | 1.0000 | 0.9917 | 60 |
| Inclusion | 0.9344 | 0.9500 | 0.9421 | 60 |
| Scab | 1.0000 | 1.0000 | 1.0000 | 60 |
| Pitted Surface | 1.0000 | 0.9833 | 0.9916 | 60 |
| Rolled in Scale | 1.0000 | 1.0000 | 1.0000 | 60 |
| Surface Scratch | 0.9500 | 0.9500 | 0.9500 | 60 |
| Surface Seams | 1.0000 | 0.9000 | 0.9474 | 10 |
| Weighted Avg | 0.9786 | 0.9784 | 0.9784 | 370 |
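The weighted-average rows can be re-derived from the per-class rows. Each class's F1 is the harmonic mean 2PR/(P + R), and the weighted average weights each class by its number of test samples (support). This matches the definition scikit-learn's `classification_report` uses for its "weighted avg" row. The short Python check below uses only the Baseline VGG19 numbers from the table above. Recomputing from the rounded four-decimal values reproduces the reported weighted row to four decimals.

```python
from typing import Dict, Tuple

# (precision, recall, support) per defect class, copied from the
# "Baseline VGG19 Network" rows of the table above.
BASELINE: Dict[str, Tuple[float, float, int]] = {
    "Cracks": (1.0000, 1.0000, 60),
    "Inclusion": (0.9032, 0.9333, 60),
    "Scab": (1.0000, 1.0000, 60),
    "Pitted Surface": (1.0000, 0.9833, 60),
    "Rolled in Scale": (1.0000, 1.0000, 60),
    "Surface Scratch": (0.9333, 0.9333, 60),
    "Surface Seams": (0.7778, 0.7000, 10),
}


def f1(p: float, r: float) -> float:
    """F1 score: harmonic mean of precision and recall (0 if both are 0)."""
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


def weighted_avg(table: Dict[str, Tuple[float, float, int]]
                 ) -> Tuple[float, float, float, int]:
    """Support-weighted precision, recall and F1, plus the total support."""
    total = sum(s for _, _, s in table.values())
    wp = sum(p * s for p, _, s in table.values()) / total
    wr = sum(r * s for _, r, s in table.values()) / total
    wf = sum(f1(p, r) * s for p, r, s in table.values()) / total
    return wp, wr, wf, total


wp, wr, wf, n = weighted_avg(BASELINE)
print(f"{wp:.4f} {wr:.4f} {wf:.4f} {n}")  # → 0.9675 0.9676 0.9674 370
```

Because the weights are supports, Surface Seams (10 of 370 test samples) carries only about 2.7% of the weighted average. Its F1 gain from 0.7368 to 0.9474 therefore moves the weighted F1 by roughly 0.006. On an imbalanced test set, this is why a macro (unweighted) average is often reported alongside the weighted one.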

| Algorithm | Task | Accuracy | Average Detection Time per Image | Samples |
|---|---|---|---|---|
| M-Pooling CNN [17] (Masci et al., 2012) | DNN Classification | 93.03% | 0.0062 s (161.3 FPS) | 2927 |
| HCGA [11] (Hu et al., 2015) | Traditional Classification | 95.04% | 0.158 s (6.3 FPS) | 351 |
| HSVM-MC [15] (Chu et al., 2017) | Traditional Classification | 95.18% | 1.1044 s (0.9 FPS) | 900 |
| Improved YOLO [19] (Li et al., 2018) | DNN Classification and Localization | 97.55% | 0.012 s (83.3 FPS) | 4655 |
| CAE-SGAN [47] (He et al., 2019) | Traditional Classification | 98.20% | not reported | 10,800 |
| Ours | DNN Classification | 97.75% | 0.0183 s (54.6 FPS) | 1850 |

| Algorithm | Accuracy | Time per Image |
|---|---|---|
| M-Pooling CNN [17] (Masci et al., 2012) | 93.37% | 0.007 s (142.9 FPS) |
| CNN [48] (Kostenetskiy et al., 2019) | 98.10% | 0.0021 s (476.2 FPS) |
| PLCNN [18] (Gao et al., 2020) | 94.74% | 0.00865 s (115.6 FPS) |
| ResNet50+MFN [22] (He et al., 2020) | 99.70% | 0.165 s (6.1 FPS) |
| ResNet34+MFN [22] (He et al., 2020) | 99.20% | 0.115 s (8.7 FPS) |
| Ours | 97.62% | 0.0192 s (52.1 FPS) |
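In the two comparison tables above, every per-image time is paired with an FPS figure equal to its reciprocal, rounded to one decimal. The short Python check below confirms that reading using only the tabulated numbers. Interpreting FPS as 1/latency assumes images are processed one at a time, so these figures are not batched-throughput measurements.

```python
# (method, seconds per image, FPS as printed) from the two comparison tables.
REPORTED = [
    ("M-Pooling CNN [17] (first table)", 0.0062, 161.3),
    ("HCGA [11]", 0.158, 6.3),
    ("HSVM-MC [15]", 1.1044, 0.9),
    ("Improved YOLO [19]", 0.012, 83.3),
    ("Ours (first table)", 0.0183, 54.6),
    ("M-Pooling CNN [17] (second table)", 0.007, 142.9),
    ("CNN [48]", 0.0021, 476.2),
    ("PLCNN [18]", 0.00865, 115.6),
    ("ResNet50+MFN [22]", 0.165, 6.1),
    ("ResNet34+MFN [22]", 0.115, 8.7),
    ("Ours (second table)", 0.0192, 52.1),
]


def fps(seconds_per_image: float) -> float:
    """Throughput implied by a per-image latency, assuming one image at a time."""
    return 1.0 / seconds_per_image


for name, t, printed in REPORTED:
    ok = round(fps(t), 1) == printed
    print(f"{name:34s} {fps(t):7.1f} FPS  matches table: {ok}")
```

By this measure, the proposed network (0.0192 s per image) is roughly 9× slower than the CNN of [48] (0.0021 s) and about 6 to 9× faster than the MFN variants of [22]. Timings reported by different papers are not necessarily measured on the same hardware.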

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wan, X.; Zhang, X.; Liu, L. An Improved VGG19 Transfer Learning Strip Steel Surface Defect Recognition Deep Neural Network Based on Few Samples and Imbalanced Datasets. Appl. Sci. 2021, 11, 2606. https://doi.org/10.3390/app11062606