Continuous-Time Fast Motion of Explosion Fragments Estimated by Bundle Adjustment and Spline Representation Using HFR Cameras

Abstract

1. Introduction

- Radial expansion of the shell;

- A crack appears somewhere in the shell;

- Material leakage, resulting in a pressure difference between the inside and outside of the shell;

- The shell breaks randomly into irregular fragments, which are scattered with a relatively high initial velocity.

2. Bundle Adjustment for Motion Estimation Using HFR Cameras

2.1. Bundle Adjustment Reconstructs Motion’s Trajectory

| Algorithm 1: Bundle adjustment for motion estimation | |
|---|---|
| Input: Series of observed points from the image sequences of all cameras | |
| Output: Camera parameters and all captured positions | |
| 1: | V; |
| 2: | |
| 3: | =; g :=; |
| 4: | found := ; |
| 5: | while not found and do |
| 6: | k := k + 1; |
| 7: | Solve Equation (5) |
| 8: | |
| 9: | found := true; |
| 10: | else |
| 11: | ; |
| 12: | then |
| 13: | ; ; |
| 14: | else |
| 15: | using Equations (1)–(9) |
| 16: | ; g := |
| 17: | |
| 18: | |
| 19: | end if |
| 20: | end if |
| 21: | end while |
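Algorithm 1 has the structure of a Levenberg–Marquardt loop: a damping factor, a `found` flag, an iteration counter, one linear solve per iteration, and an accept/reject branch. The following is a minimal Python sketch of the textbook Levenberg–Marquardt iteration with Nielsen's damping update, assuming Algorithm 1 follows that standard form; it is not the authors' implementation. To stay self-contained it uses forward-difference Jacobians instead of the analytic Jacobians of Sections 2.2 and 2.3, and a hypothetical rectified two-camera model (`project_two_cameras`, with an assumed focal length and baseline) so that the only unknown is a single 3D point. The names `levenberg_marquardt`, `tau`, `eps1`, `eps2`, and `k_max` are illustrative.

```python
import math


def solve_linear(A, b):
    """Solve A x = b for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def levenberg_marquardt(f, p0, x_obs, tau=1e-3, eps1=1e-9, eps2=1e-12, k_max=200):
    """Minimize ||x_obs - f(p)||^2 with a standard LM loop (Nielsen damping update)."""
    p = list(p0)
    n = len(p)

    def jacobian(q):
        # Forward differences stand in for analytic Jacobians (not reproduced here)
        f0 = f(q)
        J = [[0.0] * n for _ in f0]
        for j in range(n):
            h = 1e-6 * max(1.0, abs(q[j]))
            qh = list(q)
            qh[j] += h
            fh = f(qh)
            for i in range(len(f0)):
                J[i][j] = (fh[i] - f0[i]) / h
        return J

    def normal_equations(q):
        J = jacobian(q)
        e = [xo - fo for xo, fo in zip(x_obs, f(q))]  # residual eps = x - f(p)
        A = [[sum(row[a] * row[b] for row in J) for b in range(n)] for a in range(n)]
        g = [sum(row[a] * ei for row, ei in zip(J, e)) for a in range(n)]
        return A, g, e

    A, g, e = normal_equations(p)
    mu, nu = tau * max(A[i][i] for i in range(n)), 2.0  # initial damping factor
    found = max(abs(gi) for gi in g) <= eps1
    k = 0
    while not found and k < k_max:
        k += 1
        # Augmented normal equations: (A + mu I) delta = g
        aug = [[A[a][b] + (mu if a == b else 0.0) for b in range(n)] for a in range(n)]
        delta = solve_linear(aug, g)
        norm_d = math.sqrt(sum(d * d for d in delta))
        norm_p = math.sqrt(sum(v * v for v in p))
        if norm_d <= eps2 * (norm_p + eps2):
            found = True  # step too small: treat as converged
        else:
            p_new = [v + d for v, d in zip(p, delta)]
            e_new = [xo - fo for xo, fo in zip(x_obs, f(p_new))]
            # Gain ratio: actual reduction / reduction predicted by the linearized model
            predicted = sum(d * (mu * d + gi) for d, gi in zip(delta, g))
            rho = (sum(v * v for v in e) - sum(v * v for v in e_new)) / predicted
            if rho > 0:  # accept the step and relax the damping
                p = p_new
                A, g, e = normal_equations(p)
                found = max(abs(gi) for gi in g) <= eps1
                mu *= max(1.0 / 3.0, 1.0 - (2.0 * rho - 1.0) ** 3)
                nu = 2.0
            else:  # reject the step and increase the damping
                mu *= nu
                nu *= 2.0
    return p, k


def project_two_cameras(P, focal=800.0, baseline=0.2):
    """Hypothetical rectified stereo pair: camera 2 is shifted by `baseline` along x."""
    X, Y, Z = P
    return [focal * X / Z, focal * Y / Z, focal * (X - baseline) / Z, focal * Y / Z]
```

Given noise-free observations of a known point and a rough initial guess, the sketch should recover that point. In a full bundle adjustment the parameter vector would also contain the camera parameters and every fragment position, and the Jacobian would be sparse rather than dense.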

2.2. Jacobian Matrix for Stereo HFR Cameras

2.3. Jacobian Matrix for HFR Cameras with Frame-Rate Multiplication

3. Continuous-Time Spline Representation for Fast Motion Estimation

3.1. B-Spline Interpolation

3.2. Cubic B-Spline Representation for Continuous-Time Motion Estimation

3.3. Accuracy Evaluation of B-Spline Representation

4. Experiments and Evaluation

4.1. Experiments Setup of Explosion Simulation

- The balloons are inflated in the inner shell, and the inflation is mainly radial;

- Once their elastic limit is exceeded, the balloons break;

- Because of the elastic contraction of the balloons, a pressure difference between the inside and outside builds up at the same time;

- The balloon shells break randomly, and the coffee beans are distributed randomly during inflation. After the balloons break, the coffee beans between the two balloons are scattered with a high initial velocity due to the elasticity and the pressure difference.

4.2. Evaluation

- (a)

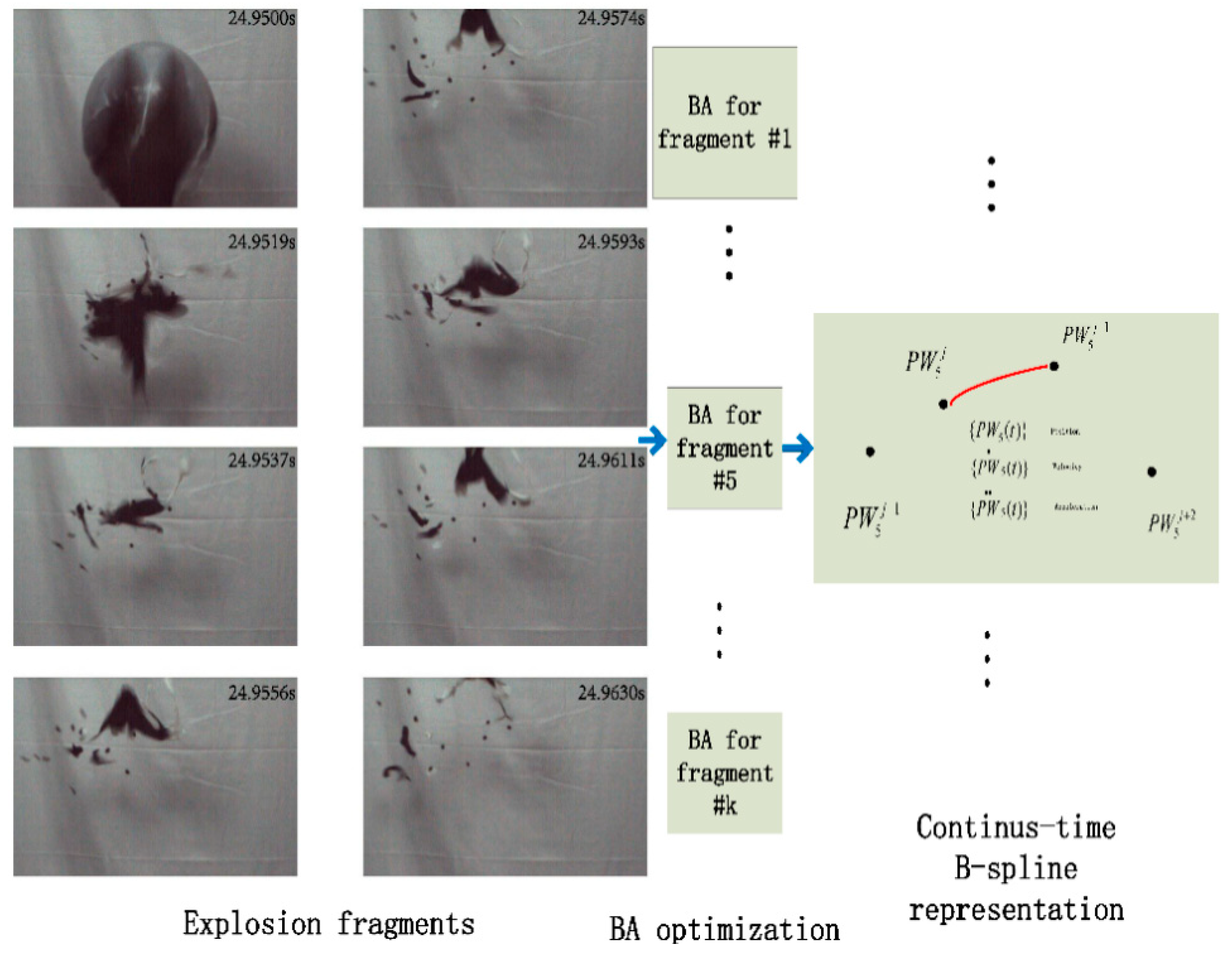

- Explosion fragments’ motion are captured by the HFR cameras with a related frame-rate setting strategy (synchronization or multiplication); the large FOV LWIR cameras monitor the explosion (shown in Figure 6a)

- (b)

- With recording images from both HFR cameras, we use the dense optical flow: the Farneback method [27] is used to extract the motion area of the images at each time stamp j. The pixel motion of different fragments are analyzed (shown in Figure 6b). Then we combine the HOG feature extraction and the connected component at every extracted motion area from the optical flow estimation. The image position (area) of fragments at each captured frame are extracted, i.e., the dataset of each camera’s observed position of fragment k is established;

- (c)

- The bundle adjustment is optimized based on the HFR cameras’ working strategy, which has been detailed in the previous section. Figure 6c demonstrates the variation of three key parameters (damping factor, norm of reprojection error , and , from top to bottom) of the BA along the iteration step. From the BA optimization, the 3D positions of fragment at each captured time stamp j are established.

- (d)

- With optimized 3D positions, the continuous-time motion (position, velocity, acceleration) of each fragment is estimated by cubic B-spline representation.
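Step (b) turns dense optical flow into per-fragment image positions. The sketch below covers only the thresholding and connected-component part of that step. It assumes a dense flow field is already available (for example from OpenCV's `cv2.calcOpticalFlowFarneback`), marks pixels whose flow magnitude exceeds a hypothetical threshold `mag_thresh`, labels 4-connected regions, and reports each region's centroid and area as one observed fragment position. The HOG feature stage described in the text is omitted, and `min_area` is an assumed speckle filter, not a value from the paper.

```python
import math
from collections import deque


def fragment_blobs(flow, mag_thresh=1.0, min_area=3):
    """Label 4-connected regions whose flow magnitude exceeds mag_thresh.

    flow: H x W nested list of (dx, dy) pixel displacements, e.g. from dense
    Farnebäck flow. Returns [(cx, cy, area), ...] in raster-scan order.
    """
    h, w = len(flow), len(flow[0])
    moving = [[math.hypot(*flow[y][x]) > mag_thresh for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y0 in range(h):
        for x0 in range(w):
            if not moving[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill of one connected motion region
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and moving[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:  # discard isolated noisy pixels
                cx = sum(px for px, _ in pixels) / len(pixels)
                cy = sum(py for _, py in pixels) / len(pixels)
                blobs.append((cx, cy, len(pixels)))
    return blobs
```

Using the centroid is one simple choice of "image position"; the text describes extracting a position (area) per fragment, so a bounding box or the full pixel set could be kept instead.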
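Step (d) evaluates position, velocity, and acceleration from a cubic B-spline. Below is a minimal sketch using the matrix form of a uniform cubic B-spline (cf. Qin [22]). It assumes uniform knots with spacing `dt` starting at `t0` and control points that have already been fitted to the BA positions; the fitting step and the paper's actual knot placement are not shown, and the function names are hypothetical. Velocity and acceleration follow from differentiating the basis polynomials in the normalized parameter u and dividing by `dt` and `dt**2`.

```python
import math

# Uniform cubic B-spline basis matrix: p(u) = [1, u, u^2, u^3] M [P_i, P_i+1, P_i+2, P_i+3]^T
M = [[1 / 6, 4 / 6, 1 / 6, 0.0],
     [-3 / 6, 0.0, 3 / 6, 0.0],
     [3 / 6, -6 / 6, 3 / 6, 0.0],
     [-1 / 6, 3 / 6, -3 / 6, 1 / 6]]


def _weights(coeffs):
    """Multiply a row vector of monomial coefficients by M."""
    return [sum(coeffs[r] * M[r][c] for r in range(4)) for c in range(4)]


def eval_cubic_bspline(ctrl, t0, dt, t):
    """Return (position, velocity, acceleration) at time t.

    ctrl: list of 3D control points (at least 4). Segment i uses ctrl[i:i+4]
    and spans [t0 + i*dt, t0 + (i+1)*dt).
    """
    s = (t - t0) / dt
    i = min(max(math.floor(s), 0), len(ctrl) - 4)  # clamp to a valid segment
    u = s - i
    w = _weights([1.0, u, u * u, u ** 3])
    dw = [v / dt for v in _weights([0.0, 1.0, 2 * u, 3 * u * u])]
    ddw = [v / dt ** 2 for v in _weights([0.0, 0.0, 2.0, 6 * u])]
    seg = ctrl[i:i + 4]

    def combine(wts):
        return tuple(sum(wts[k] * seg[k][d] for k in range(4)) for d in range(3))

    return combine(w), combine(dw), combine(ddw)
```

Because uniform cubic B-splines reproduce quadratic polynomials, control points sampled from k² yield a constant second derivative, which is a convenient sanity check for an implementation like this one.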

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mohr, L.; Benauer, R.; Leitl, P.; Fraundorfer, F. Damage estimation of explosions in urban environments by simulation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. Arch. 2019, 42, 253–260.

- Gu, Q.-Y.; Ishii, I. Review of some advances and applications in real-time high-speed vision: Our views and experiences. Int. J. Autom. Comput. 2016, 13, 305–318.

- Li, J.; Liu, X.; Liu, F.; Xu, D.; Gu, Q.; Ishii, I. A hardware-oriented algorithm for ultra-high-speed object detection. IEEE Sens. J. 2019, 19, 3818–3831.

- Li, J.; Yin, Y.; Liu, X.; Xu, D.; Gu, Q. 12,000-fps multi-object detection using HOG descriptor and SVM classifier. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 14 December 2017; pp. 5928–5933.

- Gao, H.; Gu, Q.; Takaki, T.; Ishii, I. A self-projected light-section method for fast three-dimensional shape inspection. Int. J. Optomechatron. 2012, 6, 289–303.

- Van der Jeught, S.; Dirckx, J.J. Real-time structured light profilometry: A review. Opt. Lasers Eng. 2016, 87, 18–31.

- Sharma, A.; Raut, S.; Shimasaki, K.; Senoo, T.; Ishii, I. HFR projector camera based visible light communication system for real-time video streaming. Sensors 2020, 20, 5368.

- Landmann, M.; Heist, S.; Dietrich, P.; Lutzke, P.; Gebhart, I.; Templin, J.; Kühmstedt, P.; Tünnermann, A.; Notni, G. High-speed 3D thermography. Opt. Lasers Eng. 2019, 121, 448–455.

- Chen, R.; Li, Z.; Zhong, K.; Liu, X.; Chao, Y.J.; Shi, Y. Low-speed-camera-array-based high-speed three-dimensional deformation measurement method: Principle, validation, and application. Opt. Lasers Eng. 2018, 107, 21–27.

- Jiang, M.; Aoyama, T.; Takaki, T.; Ishii, I. Pixel-level and robust vibration source sensing in high-frame-rate video analysis. Sensors 2016, 16, 1842.

- Ishii, I.; Tatebe, T.; Gu, Q.; Moriue, Y.; Takaki, T.; Tajima, K. 2000 fps real-time vision system with high-frame-rate video recording. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 1536–1541.

- Raut, S.; Shimasaki, K.; Singh, S.; Takaki, T.; Ishii, I. Real-time high-resolution video stabilization using high-frame-rate jitter sensing. ROBOMECH J. 2019, 6, 16.

- Hu, S.; Matsumoto, Y.; Takaki, T.; Ishii, I. Monocular stereo measurement using high-speed catadioptric tracking. Sensors 2017, 17, 1839.

- Wang, S.; Xu, Y.; Zheng, Y.; Zhu, M.; Yao, H.; Xiao, Z. Tracking a golf ball with high-speed stereo vision system. IEEE Trans. Instrum. Meas. 2018, 68, 2742–2754.

- Hu, S.; Jiang, M.; Takaki, T.; Ishii, I. Real-time monocular three-dimensional motion tracking using a multithread active vision system. J. Robot. Mechatron. 2018, 30, 453–466.

- Shimasaki, K.; Jiang, M.; Takaki, T.; Ishii, I.; Yamamoto, K. HFR-video-based honeybee activity sensing. IEEE Sens. J. 2020, 20, 5575–5587.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Bustos, Á.P.; Chin, T.-J.; Eriksson, A.; Reid, I. Visual SLAM: Why bundle adjust? In Proceedings of the IEEE 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2385–2391.

- Agarwal, S.; Snavely, N.; Seitz, S.M.; Szeliski, R. Bundle adjustment in the large. In Proceedings of the European Conference on Computer Vision, Hersonissos, Greece, 5–11 September 2010; pp. 29–42.

- Chebrolu, N.; Läbe, T.; Vysotska, O.; Behley, J.; Stachniss, C. Adaptive robust kernels for non-linear least squares problems. arXiv 2020, arXiv:2004.14938.

- Gong, Y.; Meng, D.; Seibel, E.J. Bound constrained bundle adjustment for reliable 3D reconstruction. Opt. Express 2015, 23, 10771–10785.

- Qin, K. General matrix representations for B-splines. Vis. Comput. 2000, 16, 177–186.

- Mueggler, E.; Gallego, G.; Rebecq, H.; Scaramuzza, D. Continuous-time visual-inertial odometry for event cameras. IEEE Trans. Robot. 2018, 34, 1425–1440.

- Ovrén, H.; Forssén, P.-E. Trajectory representation and landmark projection for continuous-time structure from motion. Int. J. Robot. Res. 2019, 38, 686–701.

- Lovegrove, S.; Patron-Perez, A.; Sibley, G. Spline fusion: A continuous-time representation for visual-inertial fusion with application to rolling shutter cameras. BMVC 2013, 2, 8.

- Geneva, P.; Eckenhoff, K.; Lee, W.; Yang, Y.; Huang, G. OpenVINS: A research platform for visual-inertial estimation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–30 June 2020; pp. 4666–4672.

- Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Scandinavian Conference on Image Analysis, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

| Method | Stereo | SBA | BAM |
|---|---|---|---|
| Duration | 538 ms | 538 ms | 883 ms |
| MSP (mm) | 33.29 | 20.94 | 25.88 |
| MESP (mm) | 31.44 | 12.03 | 12.57 |
| MERE-C1 | 0.040 | 0.00012 | 0.00053 |
| MSERE-C1 | 0.39 | 0.00014 | 0.00072 |
| MERE-C2 | 0.041 | 0.00011 | 0.00079 |
| MSERE-C2 | 0.41 | 0.00014 | 0.0015 |

| ID | of PWx (mm) | of PWy (mm) | of PWz (mm) | Momentum (× 10⁻⁴ kg·m/s) | Force (N) |
|---|---|---|---|---|---|
| 1 | 63.2 | 22.5 | 29.1 | 8.04 | 0.0019 |
| 2 | 31.4 | 31.5 | 43.3 | 6.19 | 0.0015 |
| 3 | 59.5 | 43.2 | 24.3 | 10.2 | 0.0024 |
| 4 | 32.1 | 27.0 | 32.8 | 6.62 | 0.0016 |
| 5 | 48.0 | 19.2 | 51.2 | 7.39 | 0.0018 |

| ID | of PWx (mm) | of PWy (mm) | of PWz (mm) | Momentum (× 10⁻⁴ kg·m/s) | Force (N) |
|---|---|---|---|---|---|
| 1 | 79.8 | 33.6 | 42.8 | 8.04 | 0.0019 |
| 2 | 22.8 | 29.2 | 30.6 | 6.20 | 0.0015 |
| 3 | 69.8 | 43.2 | 18.0 | 10.1 | 0.0024 |
| 4 | 61.8 | 27.0 | 32.8 | 6.63 | 0.0016 |
| 5 | 13.9 | 69.2 | 34.2 | 7.38 | 0.0018 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ni, Y.; Liu, F.; Wu, Y.; Wang, X. Continuous-Time Fast Motion of Explosion Fragments Estimated by Bundle Adjustment and Spline Representation Using HFR Cameras. Appl. Sci. 2021, 11, 2676. https://doi.org/10.3390/app11062676
