Tool Health Monitoring Using Airborne Acoustic Emission and Convolutional Neural Networks: A Deep Learning Approach

Abstract

1. Introduction

2. Proposed Technique

3. Background Theory

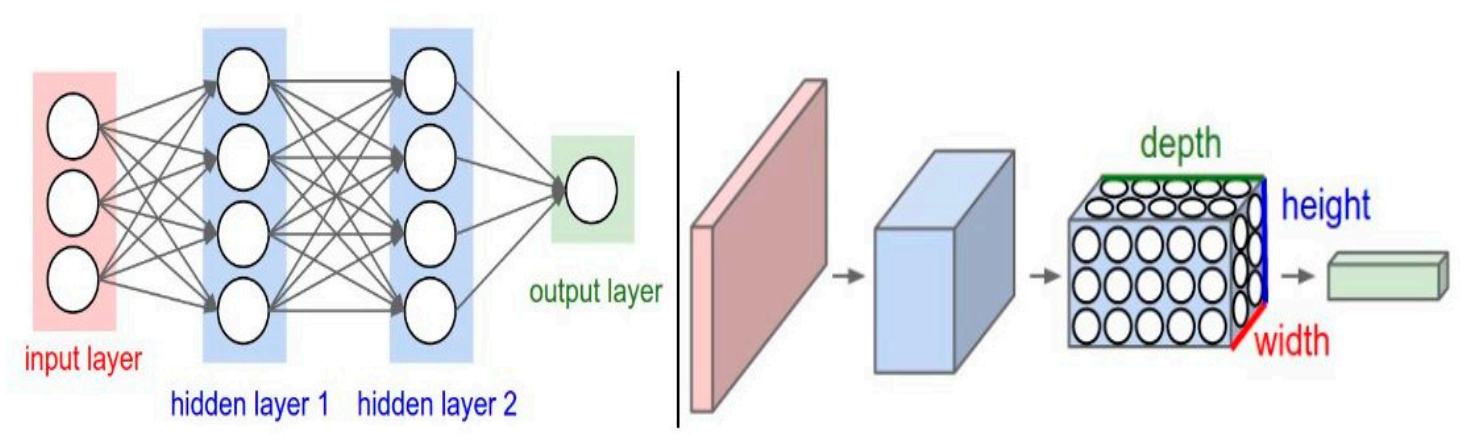

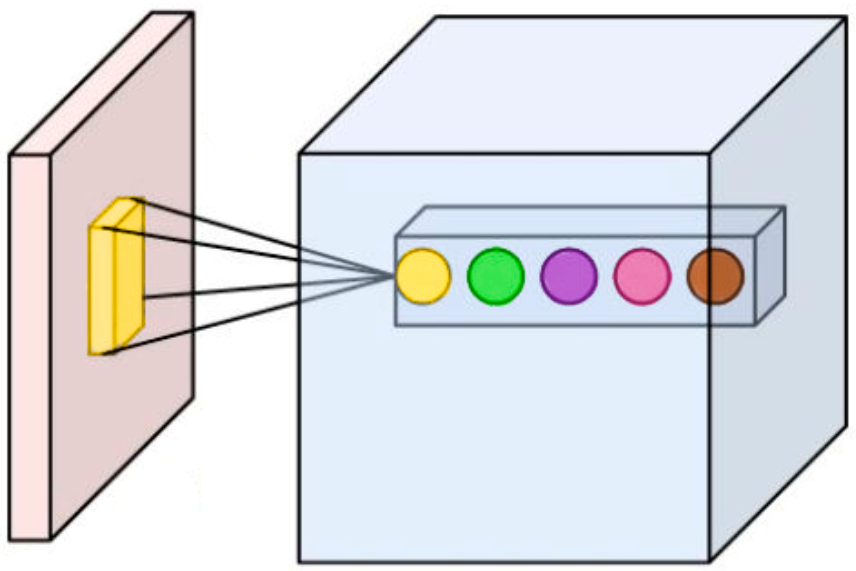

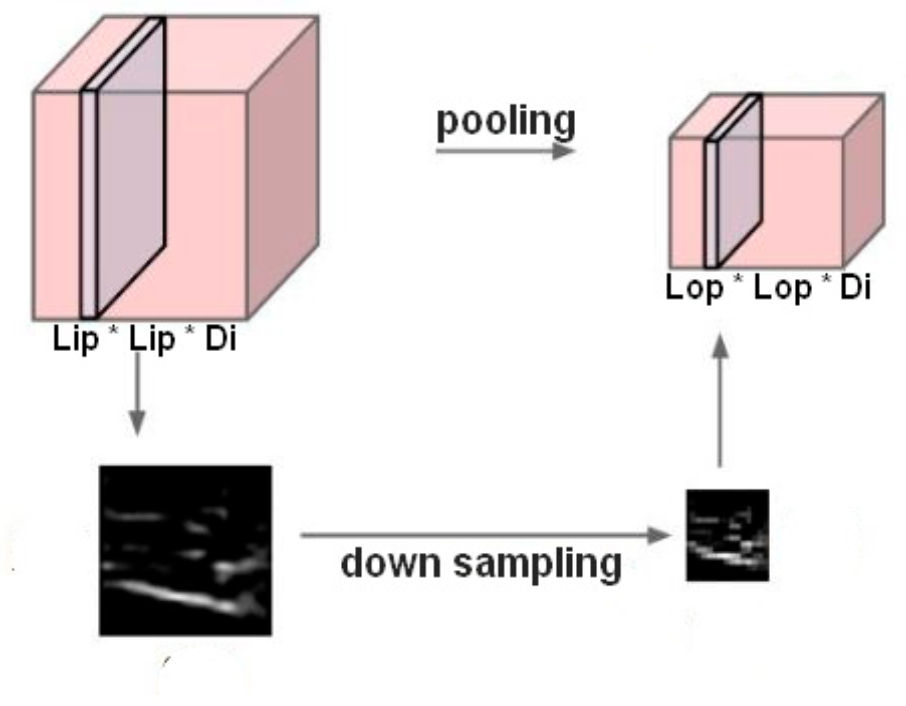

3.1. Convolutional Neural Network (CNN)

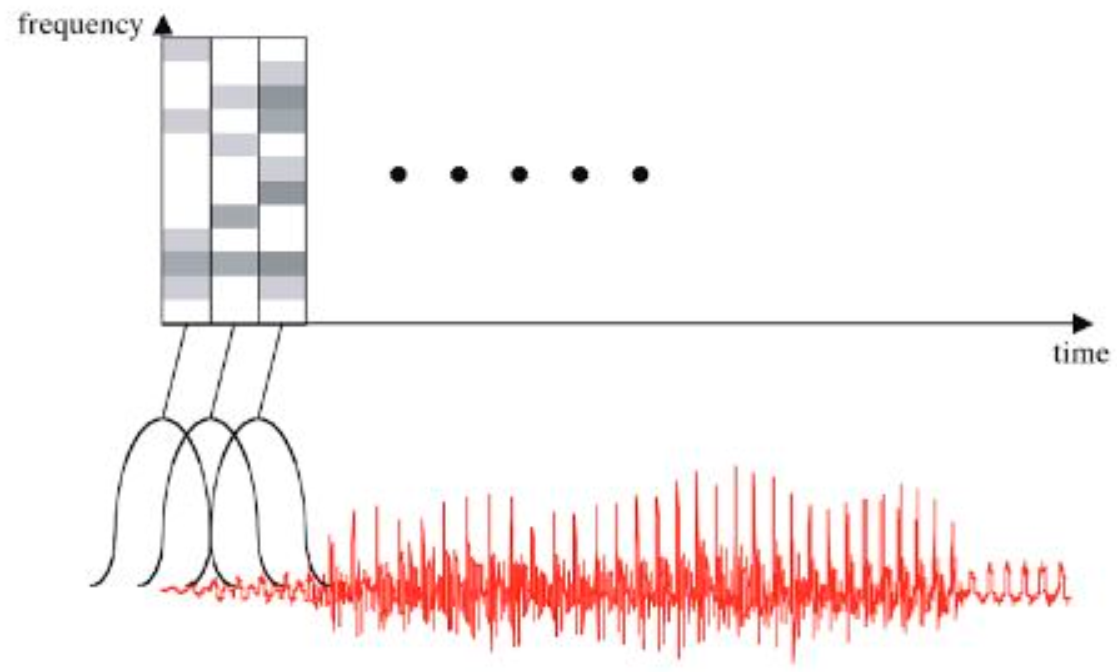

3.2. Spectrogram

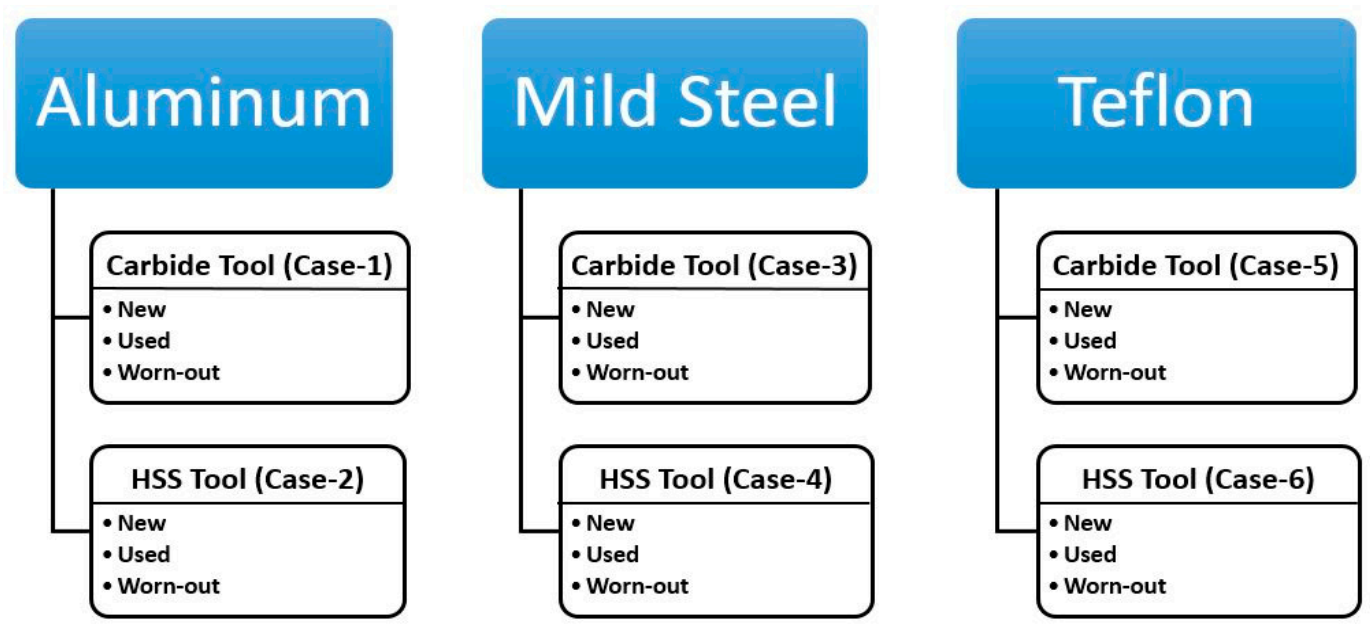

4. Experimentation

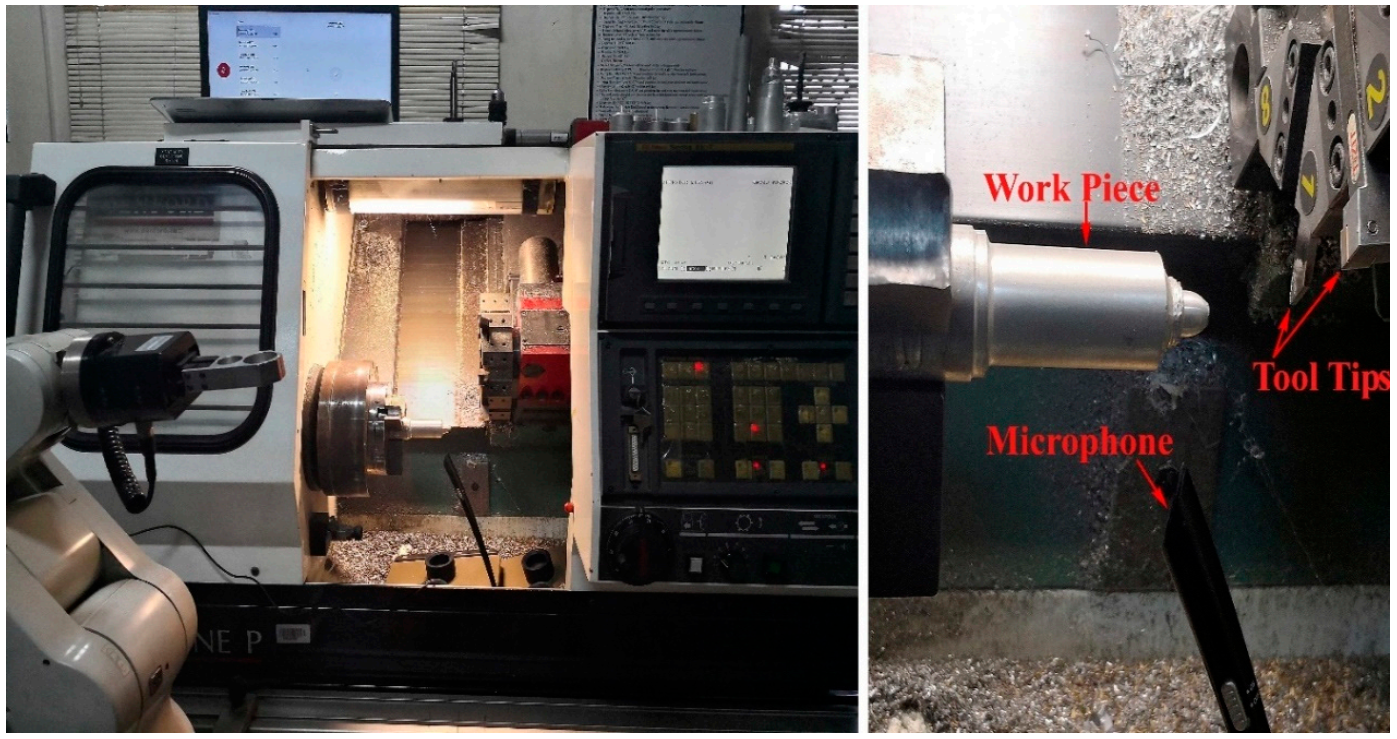

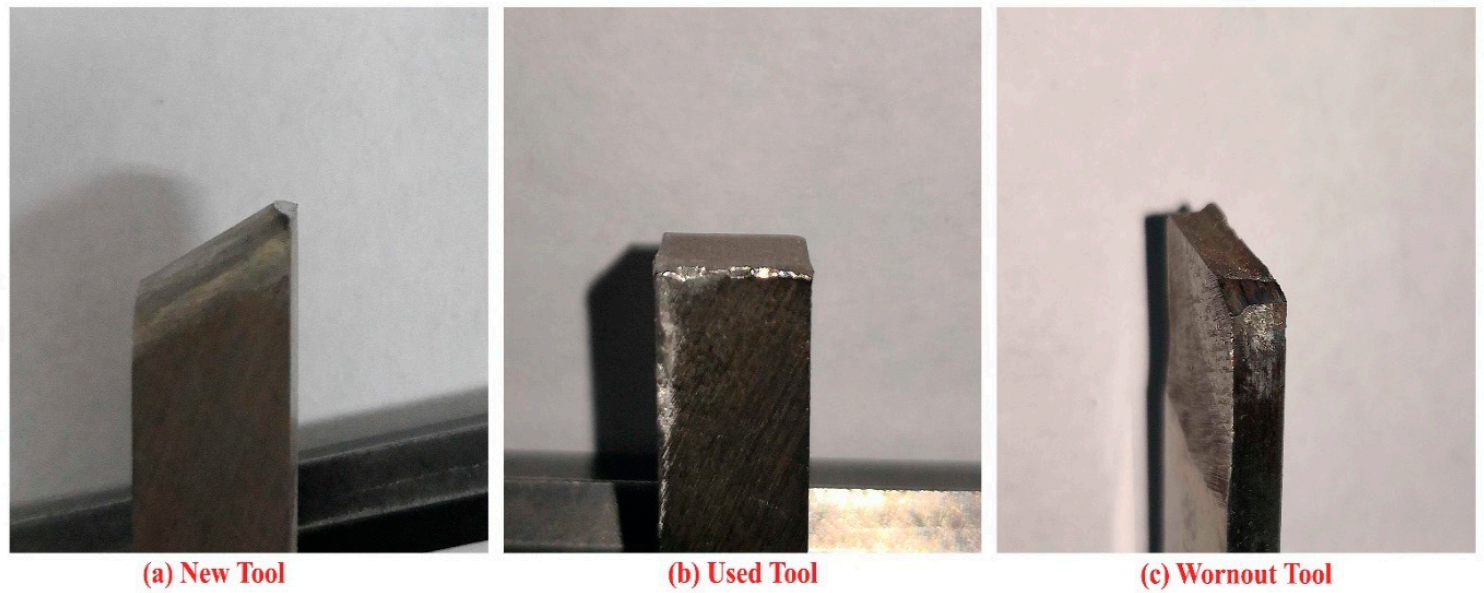

4.1. Experimental Setup and Data Acquisition

4.2. Acoustic Emission Signals Preprocessing

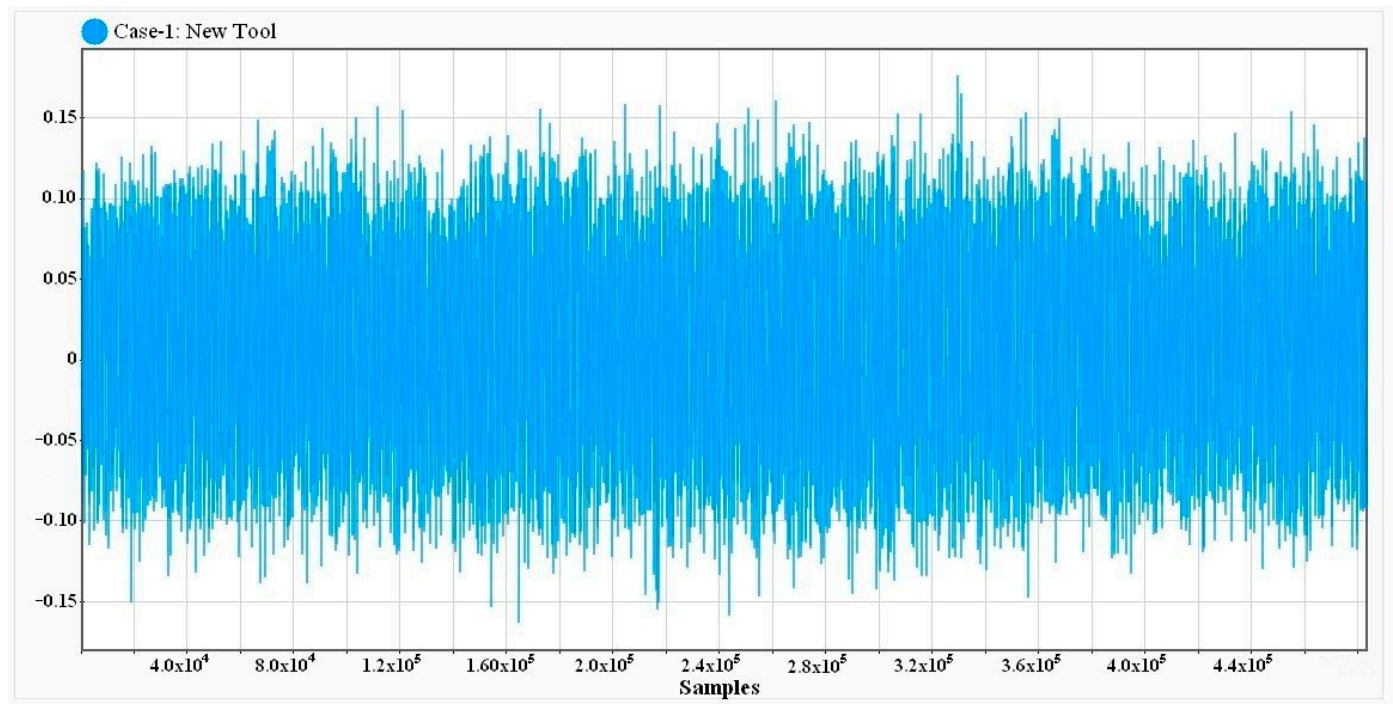

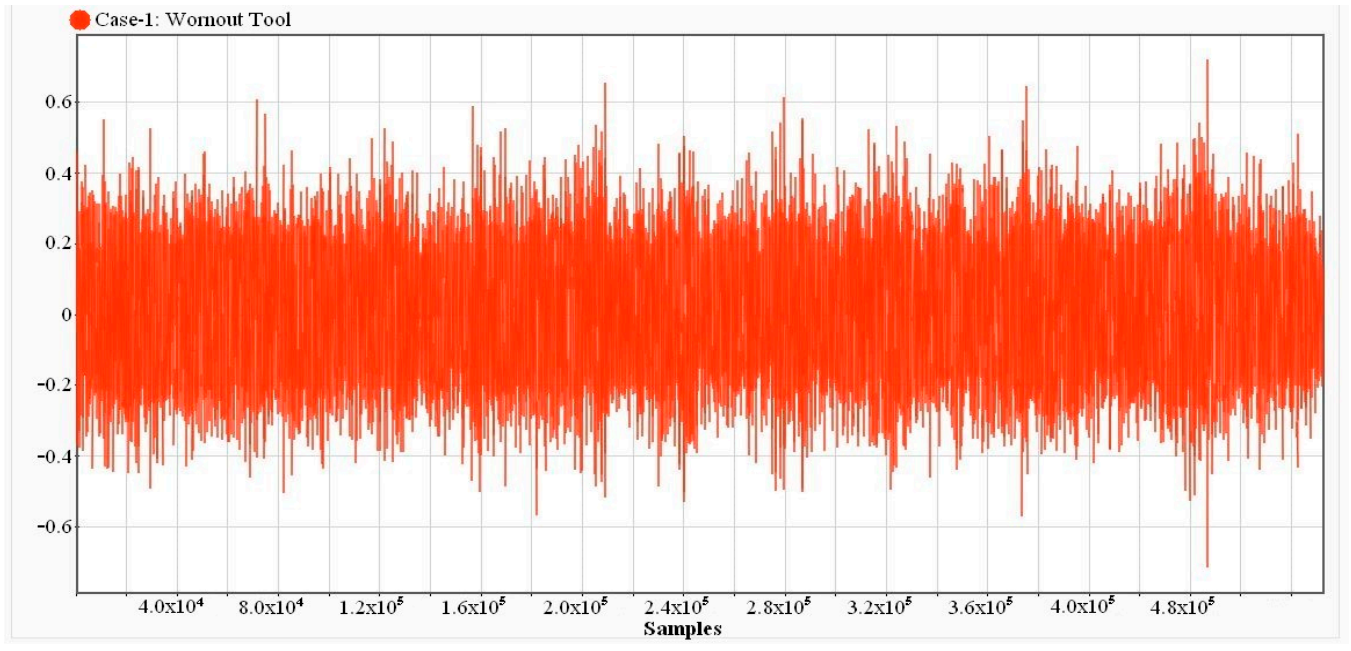

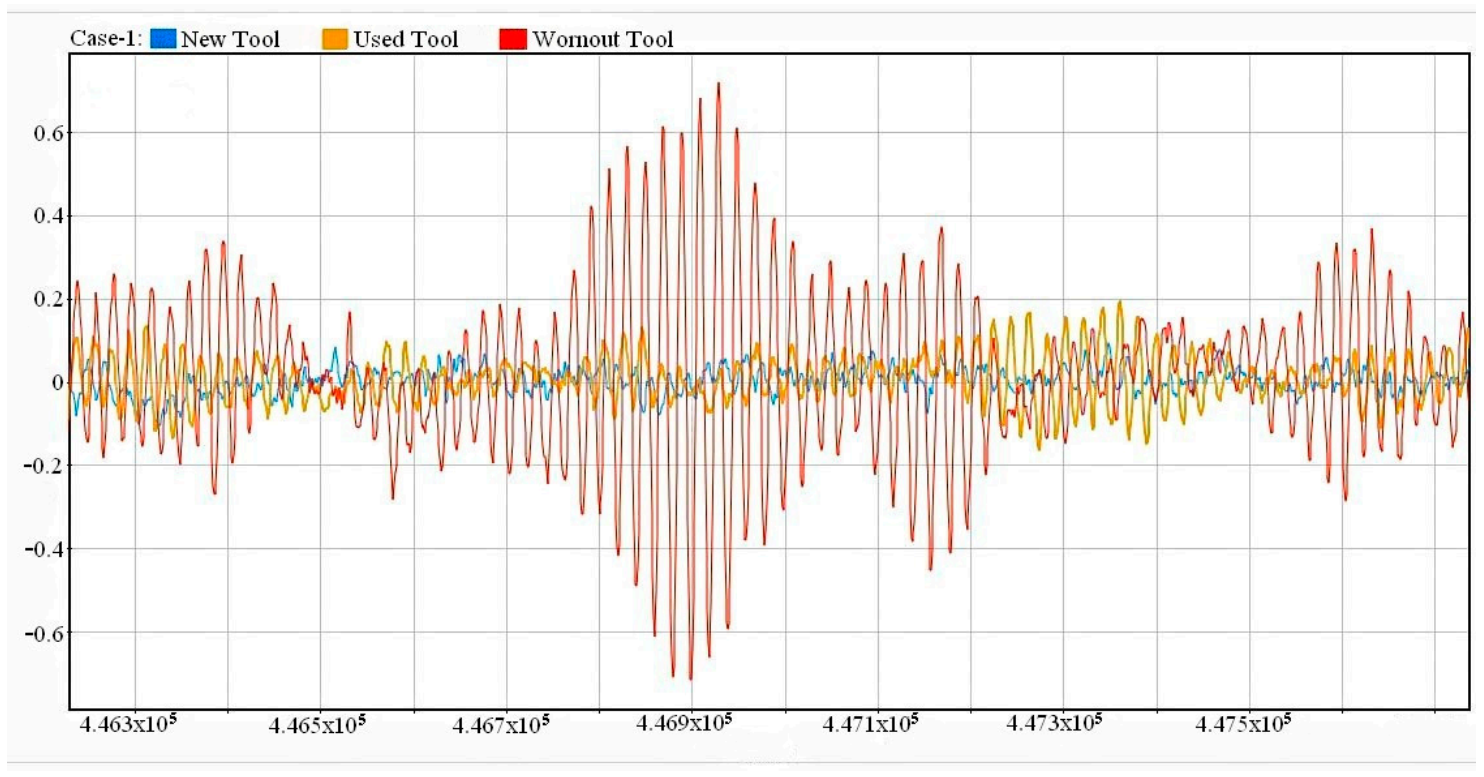

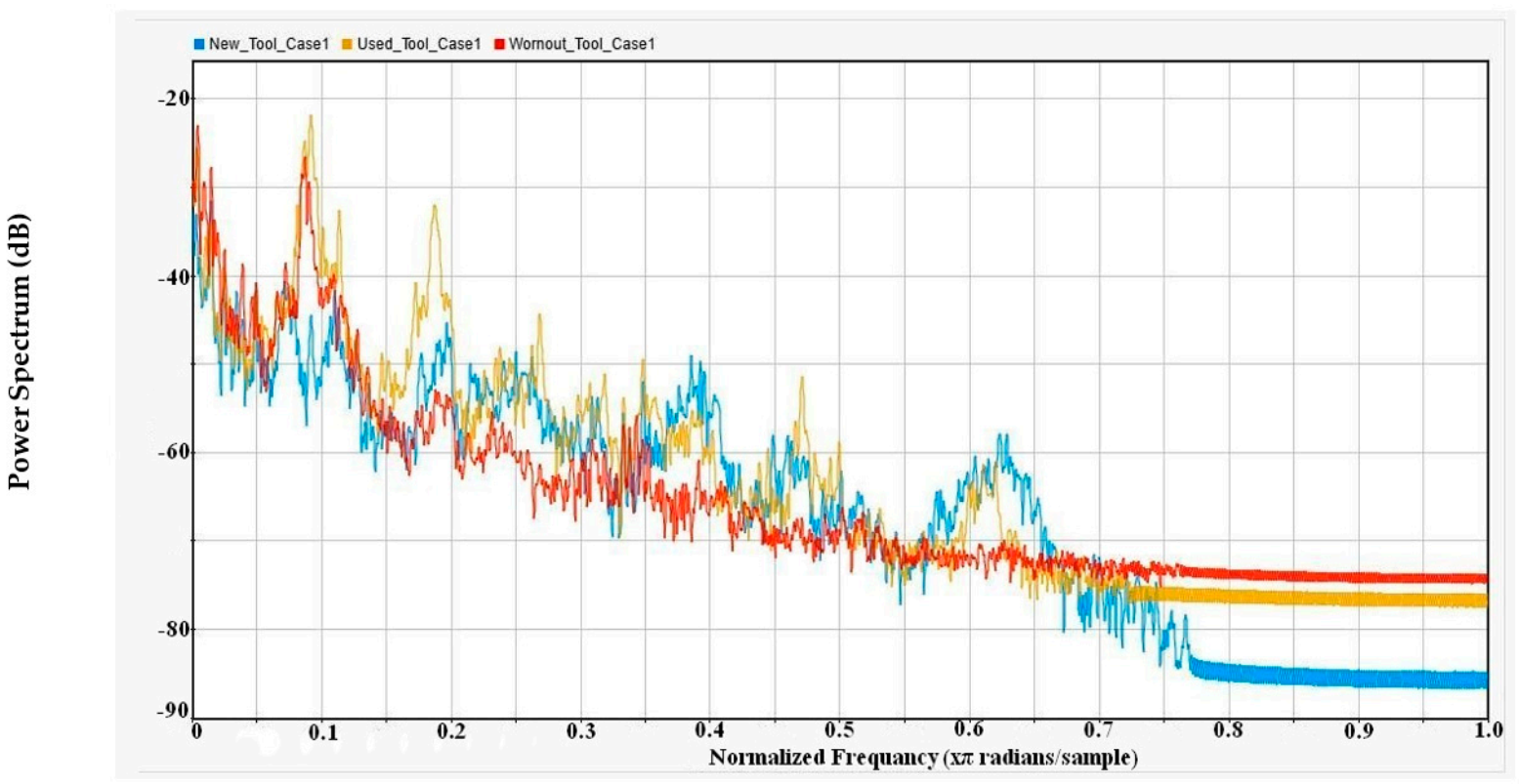

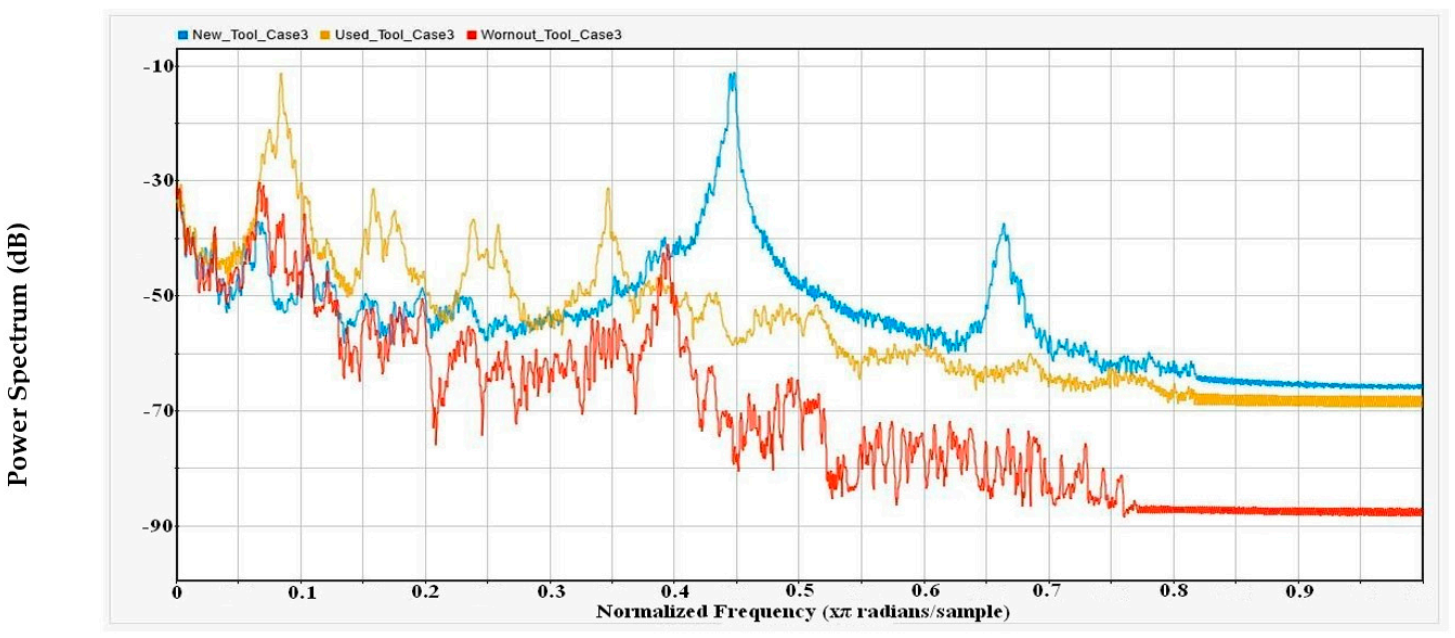

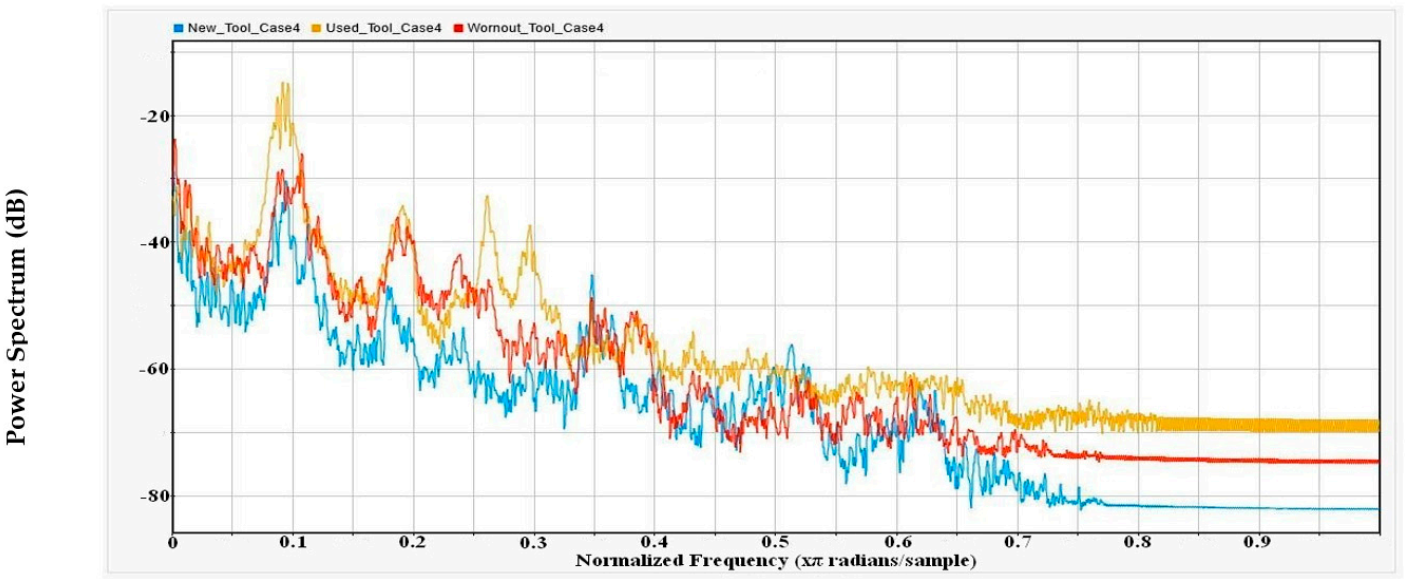

4.3. Raw AE Signals Characteristics

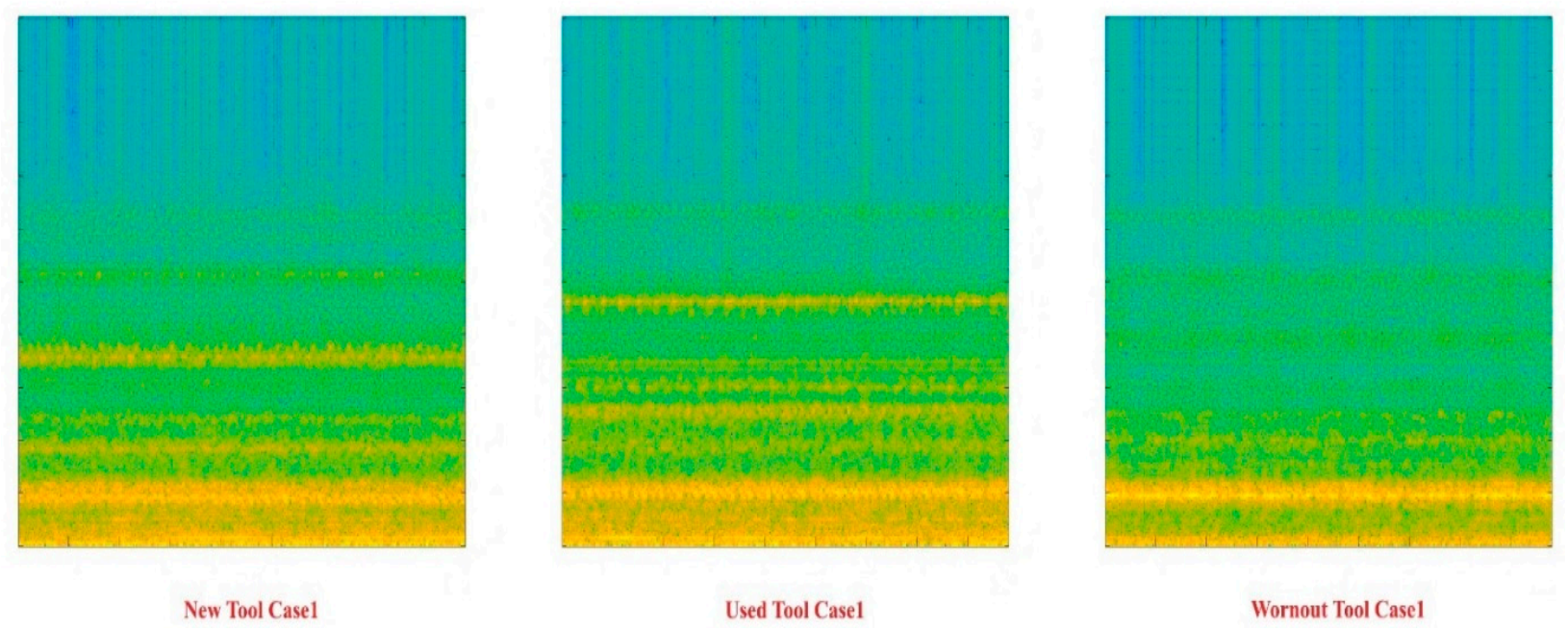

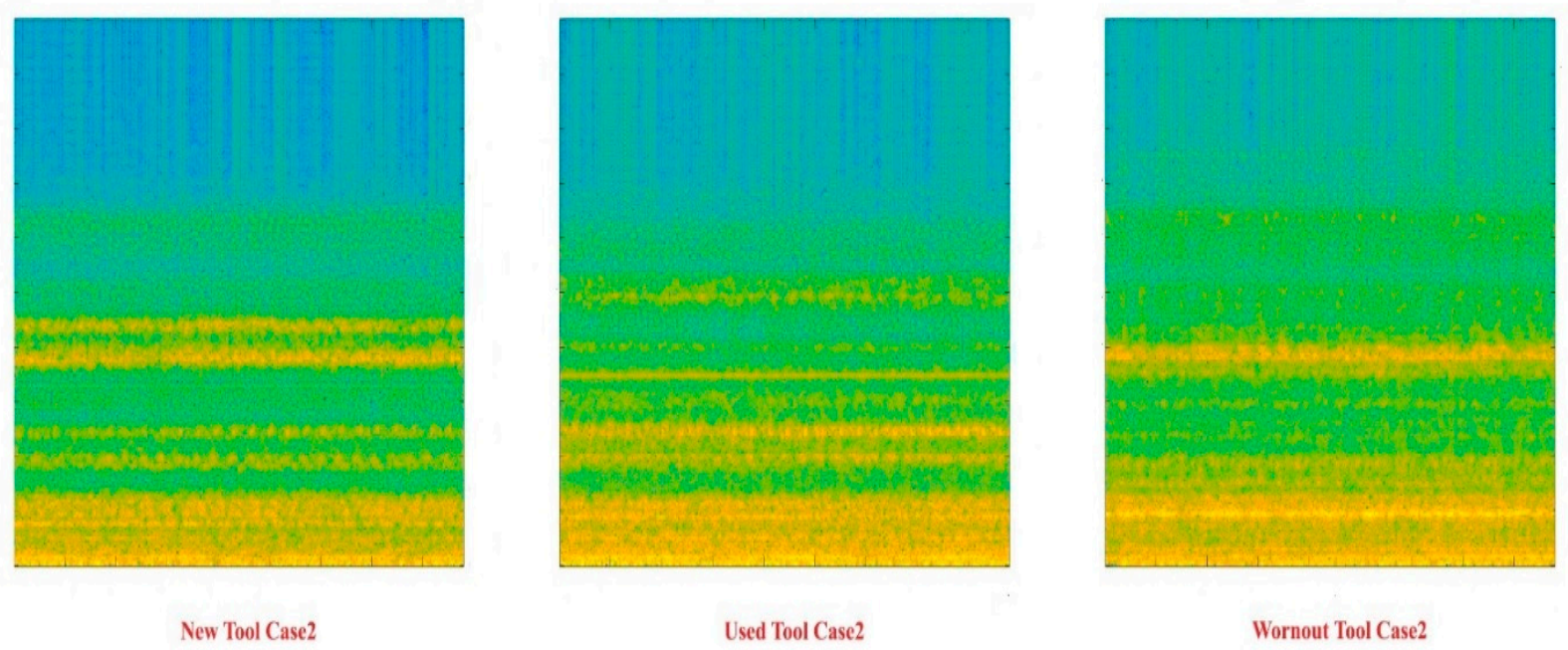

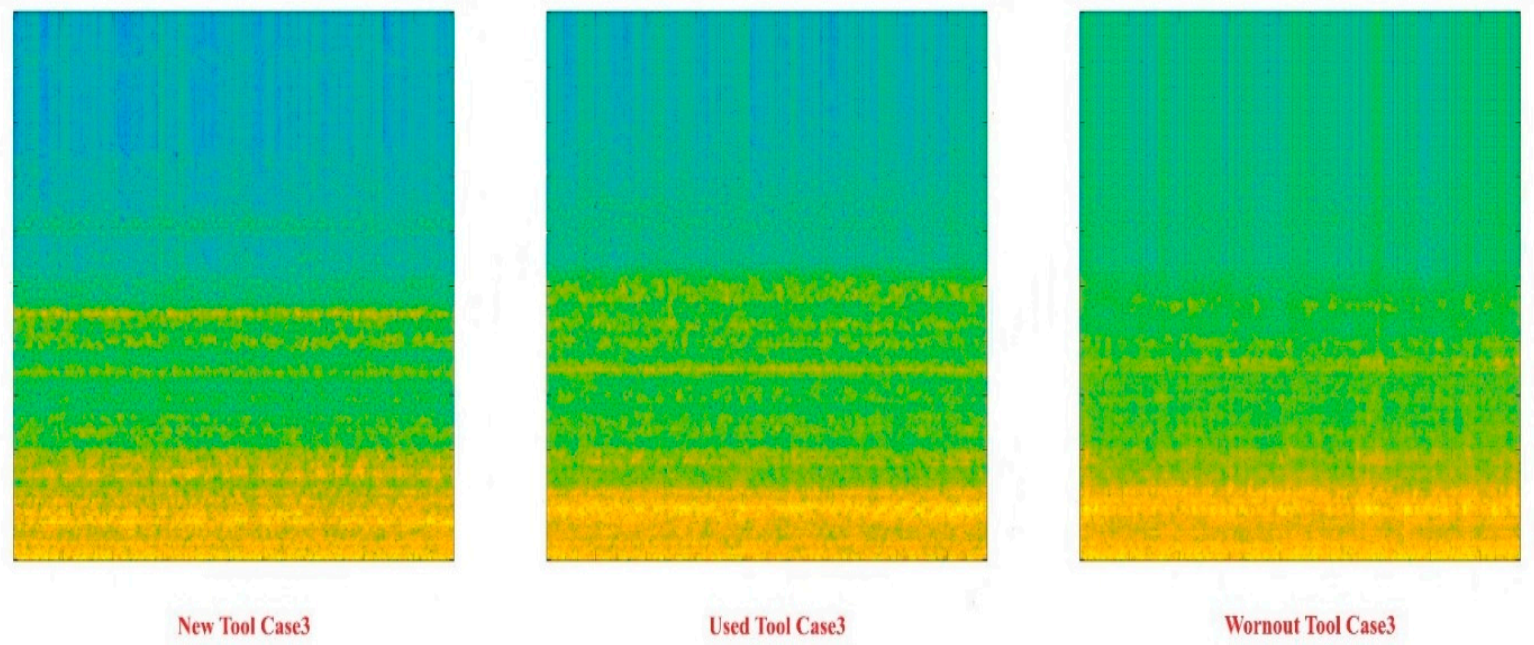

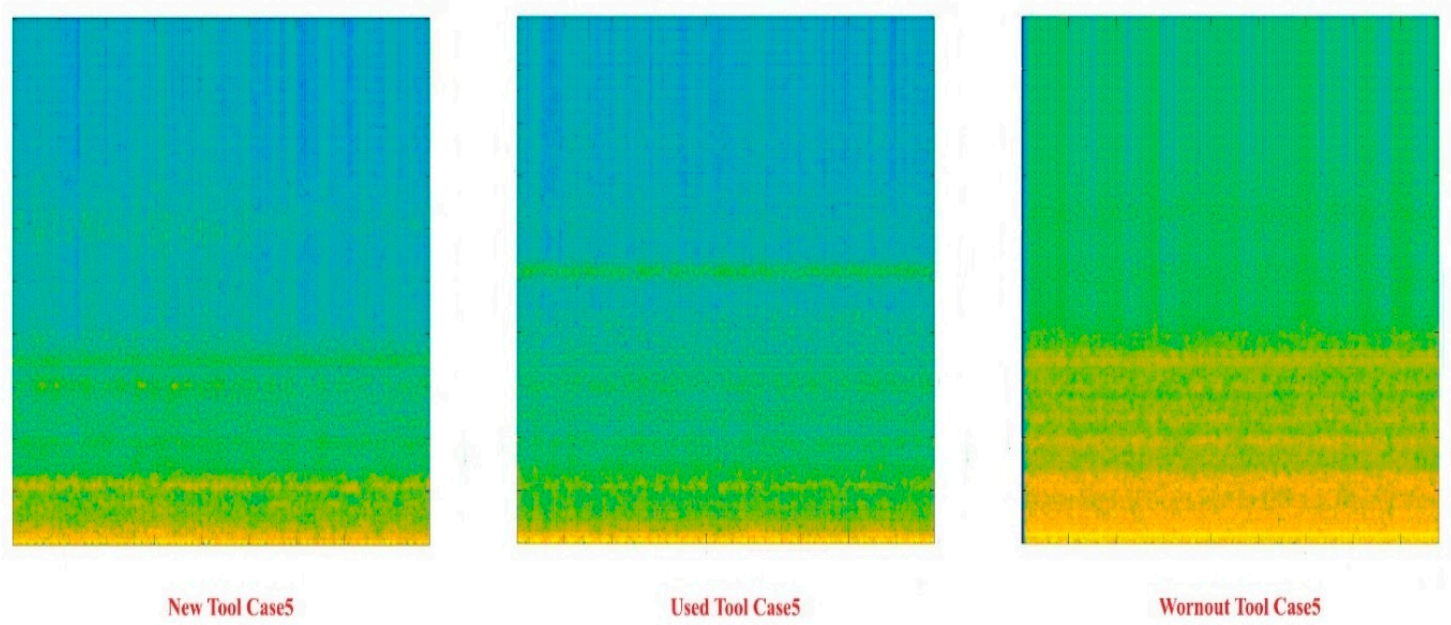

4.4. Visual Representation of Time–Frequency Domain: Spectrogram

5. Classification Results

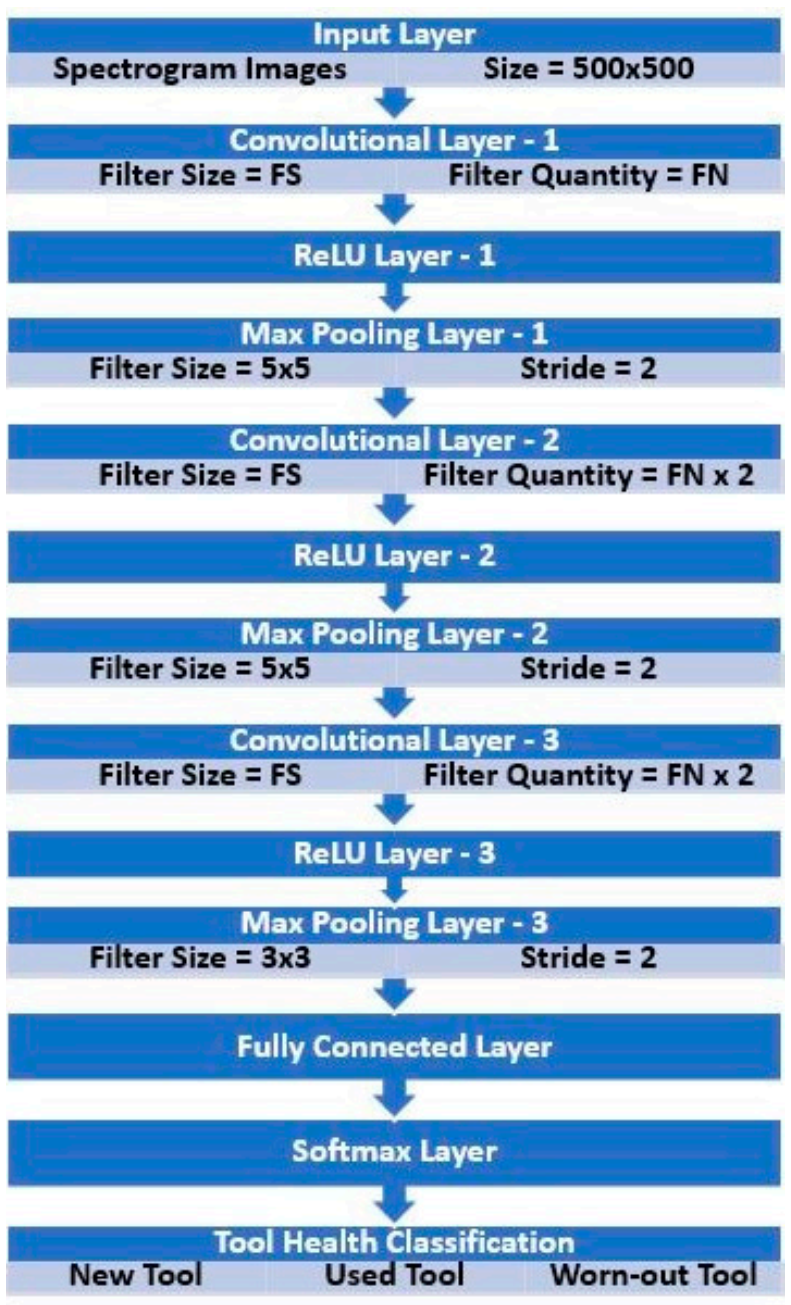

5.1. Convolutional Neural Network (CNN) Architecture

5.2. Multiclass Quandary: Tool Health Classification

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Feed Rate | RPM | Depth of Cut |
|---|---|---|
| 200 mm/min | 1500 rev/min | 1 mm |

| N = 900 | Actual: New Tool | Actual: Used Tool | Actual: Worn-Out Tool |
|---|---|---|---|
| Predicted: New Tool | 300 | 0 | 0 |
| Predicted: Used Tool | 0 | 300 | 0 |
| Predicted: Worn-Out Tool | 0 | 0 | 300 |
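The summary metrics reported for the classifier can be recomputed from a confusion matrix such as the one above. The following is a minimal sketch, not the authors' code: the function name `macro_metrics` is hypothetical, and it assumes that accuracy is the overall fraction of correct predictions and that precision, recall, and F1 score are unweighted (macro) averages over the three classes.

```python
def macro_metrics(cm):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    cm[i][j] is the number of samples predicted as class i whose
    actual class is j (rows: predicted, columns: actual).
    Assumption: macro (unweighted) averaging over classes.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n_classes))

    precisions, recalls, f1_scores = [], [], []
    for k in range(n_classes):
        tp = cm[k][k]
        predicted_k = sum(cm[k])                             # row sum
        actual_k = sum(cm[i][k] for i in range(n_classes))   # column sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n_classes,
        "recall": sum(recalls) / n_classes,
        "f1": sum(f1_scores) / n_classes,
    }


# Confusion matrix from the table: New Tool, Used Tool, Worn-Out Tool.
confusion = [
    [300, 0, 0],
    [0, 300, 0],
    [0, 0, 300],
]
metrics = macro_metrics(confusion)
print(metrics)
```

With every off-diagonal entry equal to zero, all four values evaluate to 1.0.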

| FS | FN | Metric | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Average |
|---|---|---|---|---|---|---|---|---|---|
| 5 × 5 | 4 | Accuracy | 1.000 | 0.980 | 0.998 | 0.988 | 0.988 | 0.998 | 0.992 |
| 5 × 5 | 4 | Precision | 1.000 | 0.971 | 0.996 | 0.982 | 0.982 | 0.996 | 0.988 |
| 5 × 5 | 4 | Recall | 1.000 | 0.970 | 0.996 | 0.981 | 0.981 | 0.996 | 0.987 |
| 5 × 5 | 4 | F1 Score | 1.000 | 0.970 | 0.996 | 0.982 | 0.981 | 0.996 | 0.988 |
| 3 × 3 | 4 | Accuracy | 0.744 | 0.583 | 0.970 | 0.570 | 0.973 | 0.985 | 0.804 |
| 3 × 3 | 4 | Precision | 0.818 | 0.464 | 0.959 | 0.319 | 0.962 | 0.979 | 0.750 |
| 3 × 3 | 4 | Recall | 0.616 | 0.374 | 0.956 | 0.356 | 0.959 | 0.978 | 0.707 |
| 3 × 3 | 4 | F1 Score | 0.527 | 0.277 | 0.955 | 0.221 | 0.959 | 0.978 | 0.653 |
| 10 × 10 | 4 | Accuracy | 0.556 | 0.852 | 0.556 | 0.575 | 0.840 | 0.556 | 0.656 |
| 10 × 10 | 4 | Precision | 0.111 | 0.777 | 0.111 | 0.395 | 0.807 | 0.111 | 0.385 |
| 10 × 10 | 4 | Recall | 0.333 | 0.778 | 0.333 | 0.363 | 0.759 | 0.333 | 0.483 |
| 10 × 10 | 4 | F1 Score | 0.167 | 0.765 | 0.167 | 0.359 | 0.749 | 0.167 | 0.396 |
| 5 × 5 | 2 | Accuracy | 0.990 | 0.652 | 0.657 | 0.728 | 0.852 | 0.960 | 0.807 |
| 5 × 5 | 2 | Precision | 0.986 | 0.480 | 0.741 | 0.792 | 0.790 | 0.949 | 0.790 |
| 5 × 5 | 2 | Recall | 0.986 | 0.478 | 0.485 | 0.593 | 0.778 | 0.941 | 0.710 |
| 5 × 5 | 2 | F1 Score | 0.986 | 0.431 | 0.428 | 0.584 | 0.776 | 0.940 | 0.691 |
| 5 × 5 | 6 | Accuracy | 1.000 | 0.988 | 0.837 | 0.686 | 0.556 | 0.960 | 0.838 |
| 5 × 5 | 6 | Precision | 1.000 | 0.983 | 0.771 | 0.524 | 0.111 | 0.950 | 0.723 |
| 5 × 5 | 6 | Recall | 1.000 | 0.983 | 0.756 | 0.530 | 0.333 | 0.941 | 0.757 |
| 5 × 5 | 6 | F1 Score | 1.000 | 0.983 | 0.756 | 0.510 | 0.167 | 0.941 | 0.726 |
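Several cases in the table share the same four values (accuracy 0.556, precision 0.111, recall 0.333, F1 score 0.167). The sketch below shows one way such values can arise; it is an illustration under stated assumptions, not a result taken from the source. It assumes a balanced test set of 300 samples per class, a hypothetical network that assigns every sample to a single class, accuracy computed one-vs-rest per class and then averaged, and precision set to zero for a class that is never predicted. The function name `one_vs_rest_scores` is hypothetical.

```python
def one_vs_rest_scores(cm):
    """Return (accuracy, precision, recall, f1), each averaged over classes.

    cm[i][j] is the number of samples predicted as class i whose
    actual class is j. Accuracy is computed per class as
    (TP + TN) / N and then averaged (an assumed convention).
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    acc, prec, rec, f1 = [], [], [], []
    for k in range(n_classes):
        tp = cm[k][k]
        fp = sum(cm[k]) - tp                                # predicted k, actually other
        fn = sum(cm[i][k] for i in range(n_classes)) - tp   # actually k, predicted other
        tn = total - tp - fp - fn
        p = tp / (tp + fp) if (tp + fp) else 0.0            # undefined case treated as 0
        r = tp / (tp + fn) if (tp + fn) else 0.0
        acc.append((tp + tn) / total)
        prec.append(p)
        rec.append(r)
        f1.append(2 * p * r / (p + r) if (p + r) else 0.0)
    return tuple(round(sum(v) / n_classes, 3) for v in (acc, prec, rec, f1))


# Hypothetical collapsed classifier: all 900 samples predicted as the first class.
collapsed = [
    [300, 300, 300],
    [0, 0, 0],
    [0, 0, 0],
]
scores = one_vs_rest_scores(collapsed)
print(scores)  # (0.556, 0.111, 0.333, 0.167)
```

Under these assumptions the output matches the repeated values in the table, which suggests (but does not prove) that in those cases the network predicted one class for every input.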

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arslan, M.; Kamal, K.; Sheikh, M.F.; Khan, M.A.; Ratlamwala, T.A.H.; Hussain, G.; Alkahtani, M. Tool Health Monitoring Using Airborne Acoustic Emission and Convolutional Neural Networks: A Deep Learning Approach. Appl. Sci. 2021, 11, 2734. https://doi.org/10.3390/app11062734
