One-Dimensional Convolutional Neural Network with Adaptive Moment Estimation for Modelling of the Sand Retention Test

Abstract

1. Introduction

1.1. Variable Identification in the Sand Retention Test

1.1.1. Sand Characteristics

1.1.2. Screen Characteristics

1.1.3. Fluid Characteristics

1.1.4. The Condition in Test Cell

1.1.5. Variable Summary

2. Materials and Methods

2.1. Data Collection

The Availability of Sand Retention Test Variables

2.2. Data Analysis

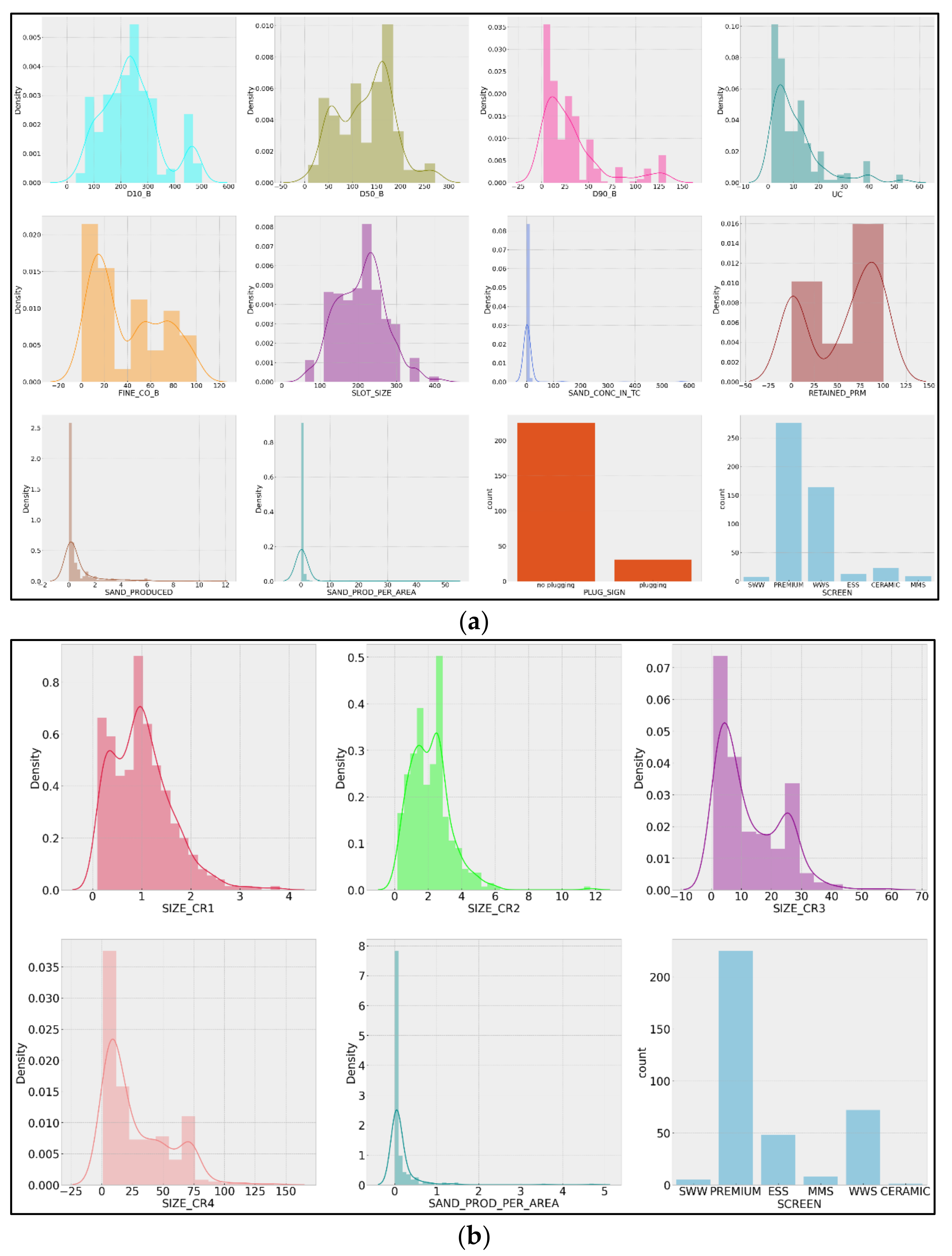

2.2.1. Univariate Analysis

2.2.2. Bivariate Analysis

2.3. Data Preparation

2.4. Convolutional Neural Network Experimental Setup

Algorithm 1. 1D-CNN modelling process

* Input:

2. Fit the model using training data
3. Calculate accuracy and loss function
4. Evaluate the trained model using performance metrics
5. Compare actual and predicted values of the trained model
6. Backpropagate error and adjust the model parameters
7. If better loss Do:
8. Predict the output of testing data using the trained network
9. Evaluate the model using performance metrics
10. Compare actual and predicted values of the model
11. Save network (model, weights)
12. End If
13. Tune hyperparameters and return to step 2
14. End For
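The control flow of Algorithm 1 (fit, score, keep the network only when the loss improves, then move on to the next hyperparameter setting) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: `select_best_config`, `fit_and_score`, and `toy_loss` are hypothetical names, and the toy loss stands in for a real training run.

```python
import math
from typing import Callable, Dict, Iterable, Optional, Tuple

Config = Dict[str, int]


def select_best_config(
    configs: Iterable[Config],
    fit_and_score: Callable[[Config], float],
) -> Tuple[Optional[Config], float]:
    """Return the configuration with the lowest loss.

    `fit_and_score` is a hypothetical callable that trains a model for one
    configuration and returns its loss (steps 2-6 of Algorithm 1).
    """
    best_config, best_loss = None, math.inf
    for config in configs:                # loop closed by "End For" (step 14)
        loss = fit_and_score(config)      # fit, evaluate, backpropagate
        if loss < best_loss:              # step 7: "If better loss"
            # Steps 8-11 would predict on the test data, evaluate, and save
            # the network; this sketch only remembers the configuration.
            best_config, best_loss = dict(config), loss
    return best_config, best_loss


def toy_loss(config: Config) -> float:
    # Toy stand-in, NOT a real training run: smallest at kernel_size == 5.
    return abs(config["kernel_size"] - 5) / 10.0


candidates = [{"kernel_size": k} for k in (2, 3, 5, 9, 11)]
best, loss = select_best_config(candidates, toy_loss)
print(best, loss)
```

In a real experiment, `fit_and_score` would wrap model training and validation, and the saved artefact would be the model and its weights rather than the configuration dictionary.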

Hyperparameters in the Convolutional Neural Network

2.5. Model Evaluation and Validation

3. Results and Discussion

3.1. Slurry Test Model Validation Result

3.2. Sand Pack Test Model Validation Result

3.3. Comparative Model Performance

3.4. Significance Analysis of Comparative Models

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, B.; Choi, S.; Feng, Y.; Denke, R.; Barton, T.; Wong, C.; Boulanger, J.; Yang, W.; Lim, S.; Zamberi, M. Evaluating sand screen performance using improved sand retention test and numerical modelling. In Proceedings of the Offshore Technology Conference Asia, Offshore Technology Conference, Kuala Lumpur, Malaysia, 22–25 March 2016. [Google Scholar]

- Chanpura, R.A.; Mondal, S.; Andrews, J.S.; Mathisen, A.M.; Ayoub, J.A.; Parlar, M.; Sharma, M.M. Modeling of square mesh screens in slurry test conditions for stand-alone screen applications. In Proceedings of the SPE International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 15–17 February 2012. [Google Scholar]

- Ballard, T.; Beare, S. Media sizing for premium sand screens: Dutch twill weaves. In Proceedings of the SPE European Formation Damage Conference, Society of Petroleum Engineers, The Hague, The Netherlands, 13–14 May 2003. [Google Scholar]

- Ballard, T.; Beare, S.P. Sand retention testing: The more you do, the worse it gets. In Proceedings of the SPE International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 15–17 February 2006. [Google Scholar]

- Markestad, P.; Christie, O.; Espedal, A.; Rørvik, O. Selection of screen slot width to prevent plugging and sand production. In Proceedings of the SPE Formation Damage Control Symposium, Society of Petroleum Engineers, Lafayette, LA, USA, 14–15 February 1996. [Google Scholar]

- Ballard, T.J.; Beare, S.P. An investigation of sand retention testing with a view to developing better guidelines for screen selection. In Proceedings of the SPE International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 15–17 February 2012. [Google Scholar]

- Ballard, T.; Beare, S.; Wigg, N. Sand Retention Testing: Reservoir Sand or Simulated Sand-Does it Matter? In Proceedings of the SPE International Conference and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 24–26 February 2016. [Google Scholar]

- Agunloye, E.; Utunedi, E. Optimizing sand control design using sand screen retention testing. In Proceedings of the SPE Nigeria Annual International Conference and Exhibition, Society of Petroleum Engineers, Victoria Island, Nigeria, 5–7 August 2014. [Google Scholar]

- Mathisen, A.M.; Aastveit, G.L.; Alteraas, E. Successful installation of stand alone sand screen in more than 200 wells-the importance of screen selection process and fluid qualification. In Proceedings of the European Formation Damage Conference, Society of Petroleum Engineers, The Hague, The Netherlands, 30 May–1 June 2007. [Google Scholar]

- Fischer, C.; Hamby, H. A Novel Approach to Constant Flow-Rate Sand Retention Testing. In Proceedings of the SPE International Conference and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 7–9 February 2018. [Google Scholar]

- Ma, C.; Deng, J.; Dong, X.; Sun, D.; Feng, Z.; Luo, C.; Xiao, Q.; Chen, J. A new laboratory protocol to study the plugging and sand control performance of sand control screens. J. Pet. Sci. Eng. 2020, 184, 106548. [Google Scholar]

- Torrisi, M.; Pollastri, G.; Le, Q. Deep learning methods in protein structure prediction. Comput. Struct. Biotechnol. J. 2020, 18, 1301–1310. [Google Scholar] [PubMed]

- Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2019, 151, 107398. [Google Scholar]

- Abdulkadir, S.J.; Alhussian, H.; Nazmi, M.; Elsheikh, A.A. Long Short Term Memory Recurrent Network for Standard and Poor’s 500 Index Modelling. Int. J. Eng. Technol. 2018, 7, 25–29. [Google Scholar] [CrossRef]

- Abdulkadir, S.J.; Yong, S.P.; Marimuthu, M.; Lai, F.W. Hybridization of ensemble Kalman filter and nonlinear auto-regressive neural network for financial forecasting. In Mining Intelligence and Knowledge Exploration; Springer: New York, NY, USA, 2014; pp. 72–81. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P. Empirical analysis of parallel-NARX recurrent network for long-term chaotic financial forecasting. In Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014; pp. 1–6. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P.; Zakaria, N. Hybrid neural network model for metocean data analysis. J. Inform. Math. Sci. 2016, 8, 245–251. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P. Scaled UKF–NARX hybrid model for multi-step-ahead forecasting of chaotic time series data. Soft Comput. 2015, 19, 3479–3496. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P.; Alhussian, H. An enhanced ELMAN-NARX hybrid model for FTSE Bursa Malaysia KLCI index forecasting. In Proceedings of the 2016 3rd International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 15–17 August 2016; pp. 304–309. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P. Lorenz time-series analysis using a scaled hybrid model. In Proceedings of the 2015 International Symposium on Mathematical Sciences and Computing Research (iSMSC), Ipoh, Malaysia, 19–20 May 2015; pp. 373–378. [Google Scholar]

- Yoo, Y. Hyperparameter optimization of deep neural network using univariate dynamic encoding algorithm for searches. Knowl. Based Syst. 2019, 178, 74–83. [Google Scholar]

- Mhiri, M.; Abuelwafa, S.; Desrosiers, C.; Cheriet, M. Footnote-based document image classification using 1D convolutional neural networks and histograms. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–5. [Google Scholar]

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251. [Google Scholar]

- Shin Yoon, J.; Rameau, F.; Kim, J.; Lee, S.; Shin, S.; So Kweon, I. Pixel-level matching for video object segmentation using convolutional neural networks. In Proceedings of the IEEE international conference on computer vision, Venice, Italy, 22–29 October 2017; pp. 2167–2176. [Google Scholar]

- Aszemi, N.M.; Dominic, P. Hyperparameter optimization in convolutional neural network using genetic algorithms. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 269–278. [Google Scholar]

- Souza, J.F.L.; Santana, G.L.; Batista, L.V.; Oliveira, G.P.; Roemers-Oliveira, E.; Santos, M.D. CNN Prediction Enhancement by Post-Processing for Hydrocarbon Detection in Seismic Images. IEEE Access 2020, 8, 120447–120455. [Google Scholar]

- Zhou, X.; Zhao, C.; Liu, X. Application of CNN Deep Learning to Well Pump Troubleshooting via Power Cards. In Proceedings of the Abu Dhabi International Petroleum Exhibition & Conference, Society of Petroleum Engineers, Abu Dhabi, UAE, 11–14 November 2019. [Google Scholar]

- Daolun, L.; Xuliang, L.; Wenshu, Z.; Jinghai, Y.; Detang, L. Automatic well test interpretation based on convolutional neural network for a radial composite reservoir. Pet. Explor. Dev. 2020, 47, 623–631. [Google Scholar]

- Kwon, S.; Park, G.; Jang, Y.; Cho, J.; Chu, M.-g.; Min, B. Determination of Oil Well Placement using Convolutional Neural Network Coupled with Robust Optimization under Geological Uncertainty. J. Pet. Sci. Eng. 2020, 108, 118. [Google Scholar]

- Li, J.; Hu, D.; Chen, W.; Li, Y.; Zhang, M.; Peng, L. CNN-Based Volume Flow Rate Prediction of Oil–Gas–Water Three-Phase Intermittent Flow from Multiple Sensors. Sensors 2021, 21, 1245. [Google Scholar]

- Chanpura, R.A.; Hodge, R.M.; Andrews, J.S.; Toffanin, E.P.; Moen, T.; Parlar, M. State of the art screen selection for stand-alone screen applications. In Proceedings of the SPE International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 10–12 February 2010. [Google Scholar]

- Hodge, R.M.; Burton, R.C.; Constien, V.; Skidmore, V. An evaluation method for screen-only and gravel-pack completions. In Proceedings of the International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 20–21 February 2002. [Google Scholar]

- Gillespie, G.; Deem, C.K.; Malbrel, C. Screen selection for sand control based on laboratory tests. In Proceedings of the SPE Asia Pacific Oil and Gas Conference and Exhibition, Society of Petroleum Engineers, Brisbane, Australia, 16–18 October 2000. [Google Scholar]

- Chanpura, R.A.; Fidan, S.; Mondal, S.; Andrews, J.S.; Martin, F.; Hodge, R.M.; Ayoub, J.A.; Parlar, M.; Sharma, M.M. New analytical and statistical approach for estimating and analyzing sand production through wire-wrap screens during a sand-retention test. SPE Drill. Completion 2012, 27, 417–426. [Google Scholar]

- Constien, V.G.; Skidmore, V. Standalone screen selection using performance mastercurves. In Proceedings of the SPE International Symposium and Exhibition on Formation Damage Control, Society of Petroleum Engineers, Lafayette, LA, USA, 15–17 February 2006. [Google Scholar]

- Mondal, S.; Sharma, M.M.; Hodge, R.M.; Chanpura, R.A.; Parlar, M.; Ayoub, J.A. A new method for the design and selection of premium/woven sand screens. In Proceedings of the SPE Annual Technical Conference and Exhibition, Society of Petroleum Engineers, Denver, CO, USA, 30 October–2 November 2011. [Google Scholar]

- Heumann, C.; Schomaker, M. Introduction to Statistics and Data Analysis; Springer: New York, NY, USA, 2016; p. 83. [Google Scholar]

- Suchmacher, M.; Geller, M. Correlation and Regression. In Practical Biostatistics: A Friendly Step-by-Step Approach For Evidence-based Medicine; Academic Press: Waltham, MA, USA, 2012; pp. 167–186. [Google Scholar]

- Forsyth, D. Probability and Statistics for Computer Science; Springer: New York, NY, USA, 2018. [Google Scholar]

- Bonamente, M. Statistics and Analysis of Scientific Data; Springer: New York, NY, USA, 2017; p. 187. [Google Scholar]

- Swinscow, T. Statistics at square one: XVIII-Correlation. Br. Med. J. 1976, 2, 680. [Google Scholar] [CrossRef]

- Chollet, F. Deep Learning with Python; Manning Publications Co.: New York, NY, USA, 2018; Volume 361. [Google Scholar]

- Abdulkadir, S.J.; Yong, S.P.; Foong, O.M. Variants of Particle Swarm Optimization in Enhancing Artificial Neural Networks. Aust. J. Basic Appl. Sci. 2013, 7, 388–400. [Google Scholar]

- Khan, S.; Rahmani, H.; Shah, S.A.A.; Bennamoun, M. A guide to convolutional neural networks for computer vision. In Synthesis Lectures on Computer Vision; Morgan & Claypool Publishers: San Rafael, CA, USA, 2018; Volume 8, pp. 1–207. [Google Scholar]

- Michelucci, U. Advanced Applied Deep Learning: Convolutional Neural Networks and Object Detection; Springer: New York, NY, USA, 2019. [Google Scholar]

- Pysal, D.; Abdulkadir, S.J.; Shukri, S.R.M.; Alhussian, H. Classification of children’s drawing strategies on touch-screen of seriation objects using a novel deep learning hybrid model. Alex. Eng. J. 2021, 60, 115–129. [Google Scholar]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar]

- Aggarwal, C.C. Neural Networks and Deep Learning; Springer: New York, NY, USA, 2018; Volume 10, pp. 978–983. [Google Scholar]

- Li, Y.; Zou, L.; Jiang, L.; Zhou, X. Fault diagnosis of rotating machinery based on combination of deep belief network and one-dimensional convolutional neural network. IEEE Access 2019, 7, 165710–165723. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. Available online: https://arxiv.org/abs/1412.6980 (accessed on 1 January 2021).

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE). Geosci. Model Dev. Discuss. 2014, 7, 1525–1534. [Google Scholar]

- Hyndman, R.J.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688. [Google Scholar]

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82. [Google Scholar]

- Onyutha, C. From R-squared to coefficient of model accuracy for assessing "goodness-of-fits". Geosci. Model. Dev. Discuss. 2020, 1–25. [Google Scholar] [CrossRef]

- He, Z.; Lin, D.; Lau, T.; Wu, M. Gradient Boosting Machine: A Survey. arXiv 2019, arXiv:1908.06951. Available online: https://arxiv.org/abs/1908.06951 (accessed on 19 March 2021).

- Barber, D. Bayesian Reasoning and Machine Learning; Cambridge University Press: Cambridge, UK, 2012. [Google Scholar]

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014. [Google Scholar]

- Kubat, M.A. Introduction to Machine Learning; Springer: New York, NY, USA, 2017. [Google Scholar]

| Variable Description | Variable Abbreviation | Unit | Type of Variable | Test |
|---|---|---|---|---|
| 10% PSD of formation sand | D10_B | micron (μm) | Input | Slurry |
| 50% PSD of formation sand | D50_B | micron (μm) | Input | Slurry |
| 90% PSD of formation sand | D90_B | micron (μm) | Input | Slurry |
| Uniformity coefficient | UC | - | Input | Slurry |
| Fines content | FINE_CO_B | % | Input | Slurry |
| Aperture size of the screen | SLOT_SIZE | micron (μm) | Input | Slurry |
| Type of screen: Single Wire Wrap (SWW), Premium (PREMIUM), Wire Wrap Screen (WWS), Expandable Sand Screen (ESS), Ceramic (CERAMIC), Metal Mesh Screen (MMS) | SCREEN | - | Input | Slurry and sand pack |
| Sand concentration in test cell | SAND_CONC_IN_TC | gram/liter (g/L) | Input | Slurry |
| Sizing criteria 1: | SIZE_CR1 | - | Input | Sand pack |
| Sizing criteria 2: | SIZE_CR2 | - | Input | Sand pack |
| Sizing criteria 3: | SIZE_CR3 | - | Input | Sand pack |
| Sizing criteria 4: | SIZE_CR4 | - | Input | Sand pack |
| Sign of screen plugging | PLUG_SIGN | - | Output | Slurry |
| Amount of sand produced in gram | SAND_PRODUCED | gram (g) | Output | Slurry |
| Retained permeability | RETAINED_PRM | % | Output | Slurry |
| Amount of sand produced per unit area | SAND_PROD_PER_AREA | pound per square foot (lb/ft²) | Output | Slurry and sand pack |

| Set | Test | Number of Observations | Number of Variables (Output) |
|---|---|---|---|
| 1 | Slurry | 162 | 8 inputs and 1 output (PLUG_SIGN) |
| 2 | Slurry | 367 | 8 inputs and 1 output (SAND_PRODUCED) |
| 3 | Slurry | 65 | 8 inputs and 1 output (RETAINED_PRM) |
| 4 | Slurry | 343 | 8 inputs and 1 output (SAND_PROD_PER_AREA) |
| 5 | Sand pack | 263 | 5 inputs and 1 output (SAND_PROD_PER_AREA) |

| Hyperparameter | Range |
|---|---|
| Number of CNN layers | (1) |
| Number of filters | (32, 64, 128, 256) |
| Kernel size | (2, 3, 5, 9, 11) |
| Stride in CNN layer | (1–3) |
| Activation function | (ReLU) |
| Pool size | (2) |
| Stride in pooling layer | (2) |
| Number of FC layers | (4) |
| Dropout rate | (0.1) |
| Epochs | (30, 50, 60) |
| Batch size | (32) |
| Optimizer | (Adaptive moment estimation) |
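To give a sense of the size of this search space, the sketch below enumerates every combination of the hyperparameters that have more than one candidate value. It assumes the stride range (1–3) means the integers 1, 2, and 3; the dictionary keys and the helper `enumerate_grid` are illustrative names, not identifiers from the study. The source does not state that the full grid was trained.

```python
from itertools import product

# Hyperparameters with several candidate values (taken from the table above).
search_space = {
    "filters": (32, 64, 128, 256),
    "kernel_size": (2, 3, 5, 9, 11),
    "conv_stride": (1, 2, 3),      # assumption: "(1-3)" read as 1, 2, 3
    "epochs": (30, 50, 60),
}

# Hyperparameters held at a single value in the table.
fixed = {
    "conv_layers": 1,
    "activation": "relu",
    "pool_size": 2,
    "pool_stride": 2,
    "fc_layers": 4,
    "dropout": 0.1,
    "batch_size": 32,
    "optimizer": "adam",
}


def enumerate_grid(space):
    """Return one dict per combination of the candidate values."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


grid = [{**fixed, **combo} for combo in enumerate_grid(search_space)]
print(len(grid))  # 4 * 5 * 3 * 3 = 180 combinations
```

An exhaustive grid of this size is small enough to train in full on datasets of a few hundred observations, although a hand-picked subset of configurations is an equally valid reading of the table.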

| Hyperparameter | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 | Model 6 |
|---|---|---|---|---|---|---|
| Number of CNN layers | 1 | 1 | 1 | 1 | 1 | 1 |
| Number of filters | 64 | 32 | 128 | 256 | 256 | 128 |
| Kernel size | 2 | 3 | 5 | 11 (for sets 1, 2, 4, 5) and 9 (only for set 3) | 5 | 3 |
| Stride in CNN layer | 3 (for sets 1, 2, 3, 4) and 1 (only for set 5) | 1 | 2 | 2 | 2 | 1 |
| Activation function | ReLU | ReLU | ReLU | ReLU | ReLU | ReLU |
| Pool size | 2 | 2 | 2 | 2 | 2 | 2 |
| Stride in pooling layer | 2 | 2 | 2 | 2 | 2 | 2 |
| Number of FC layers | 4 | 4 | 4 | 4 | 4 | 4 |
| Dropout rate | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |
| Epochs | 60 | 60 | 30 | 50 | 50 | 50 |
| Batch size | 32 | 32 | 32 | 32 | 32 | 32 |
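Kernel size and stride together determine how many positions a convolutional layer outputs. The sketch below computes that length under two common padding conventions. It assumes the eight slurry inputs are arranged as a sequence of length 8, and it uses the TensorFlow/Keras conventions for "valid" and "same" padding; the padding mode used in the study is not stated in this section, and `conv1d_output_length` is an illustrative helper.

```python
import math


def conv1d_output_length(n_in, kernel_size, stride, padding="valid"):
    """Number of output positions of a 1D convolution.

    "valid": no padding, floor((n_in - kernel_size) / stride) + 1
    "same":  zero padding, ceil(n_in / stride)
    """
    if padding == "same":
        return math.ceil(n_in / stride)
    if padding == "valid":
        if kernel_size > n_in:
            raise ValueError("kernel is longer than the unpadded input")
        return (n_in - kernel_size) // stride + 1
    raise ValueError(f"unknown padding mode: {padding!r}")


# Assumed input length of 8 (the eight slurry-test inputs).
print(conv1d_output_length(8, kernel_size=2, stride=3))                   # 3
print(conv1d_output_length(8, kernel_size=3, stride=1))                   # 6
print(conv1d_output_length(8, kernel_size=5, stride=2))                   # 2
print(conv1d_output_length(8, kernel_size=11, stride=2, padding="same"))  # 4
```

Under these assumptions, a kernel of size 11 or 9 cannot be applied to an unpadded input of length 8, so a configuration like Model 4 would need some form of padding or a longer input representation.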

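| Hyperparameter | Value |
|---|---|
| Exponential decay rate for the first moment estimates, β1 | 0.9 |
| Exponential decay rate for the second moment estimates, β2 | 0.999 |
| Learning rate | 0.001 |
| Epsilon, ε | 1 × 10⁻⁷ |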
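The four values in the table above (0.9, 0.999, 0.001, and 1 × 10⁻⁷) match widely used defaults for Adam's two decay rates, learning rate, and numerical-stability constant. Assuming that mapping, a single Adam update for one scalar parameter, following Kingma and Ba, can be sketched as below. The function `adam_step` and the quadratic toy objective are illustrative, not taken from the study.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Apply one Adam update to a scalar parameter.

    m and v are the running first and second moment estimates;
    t is the 1-based step counter used for bias correction.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first moment (mean of gradients)
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second moment (mean of squared gradients)
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Toy objective f(theta) = theta**2, whose gradient is 2 * theta.
theta, m, v = 1.0, 0.0, 0.0
theta, m, v = adam_step(theta, grad=2.0 * theta, m=m, v=v, t=1)
print(theta)  # close to 0.999: the first step has magnitude of about lr
```

Because of the bias correction, the first update has a magnitude close to the learning rate regardless of the gradient's scale, which is one reason Adam is relatively insensitive to the scaling of the loss.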

| Model | TN | FP | FN | TP | ACC |
|---|---|---|---|---|---|
| 1 | 42 | 3 | 1 | 3 | 0.918 |
| 2 | 41 | 1 | 1 | 6 | 0.959 |
| 3 | 42 | 2 | 2 | 3 | 0.918 |
| 4 | 43 | 2 | 2 | 2 | 0.918 |
| 5 | 46 | 0 | 2 | 1 | 0.959 |
| 6 | 44 | 0 | 2 | 3 | 0.959 |
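The ACC column can be reproduced from the four confusion-matrix counts as (TN + TP) divided by the total number of test observations. The short check below recomputes it for each row of the table above; the function name `accuracy` is illustrative.

```python
def accuracy(tn, fp, fn, tp):
    """Fraction of correct predictions: (TN + TP) / (TN + FP + FN + TP)."""
    total = tn + fp + fn + tp
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (tn + tp) / total


# (TN, FP, FN, TP) per model, copied from the table above.
confusion = {
    1: (42, 3, 1, 3),
    2: (41, 1, 1, 6),
    3: (42, 2, 2, 3),
    4: (43, 2, 2, 2),
    5: (46, 0, 2, 1),
    6: (44, 0, 2, 3),
}

recomputed = {model: round(accuracy(*counts), 3) for model, counts in confusion.items()}
print(recomputed)
```

Every row sums to 49 test observations, so the reported accuracies of 0.918 and 0.959 correspond to 45 and 47 correct predictions respectively.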

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1 | 0.508 | 0.713 | 0.456 | 0.625 |
| 2 | 0.704 | 0.839 | 0.482 | 0.649 |
| 3 | 0.669 | 0.818 | 0.435 | 0.621 |
| 4 | 0.470 | 0.686 | 0.367 | 0.712 |
| 5 | 0.330 | 0.575 | 0.375 | 0.766 |
| 6 | 0.374 | 0.612 | 0.325 | 0.747 |
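The four regression metrics reported in these tables are related: RMSE is the square root of MSE, MAE averages absolute rather than squared errors, and R² compares the residual sum of squares with the variance of the observed values. The sketch below computes all four, assuming R² is the coefficient of determination 1 − SS_res/SS_tot; the sample values are made up for illustration and are not data from the study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R^2 for two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    mse = ss_res / n
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }


# Illustrative values only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
metrics = regression_metrics(y_true, y_pred)
print(metrics)
```

Since RMSE squares the errors before averaging, it penalises a few large misses more heavily than MAE does, which is why a model can rank differently on the two columns.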

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1 | 117.217 | 10.827 | 6.485 | 0.957 |
| 2 | 70.616 | 8.403 | 5.438 | 0.978 |
| 3 | 30.121 | 5.488 | 4.616 | 0.990 |
| 4 | 79.436 | 8.913 | 5.415 | 0.966 |
| 5 | 18.240 | 4.271 | 2.411 | 0.993 |
| 6 | 21.980 | 4.688 | 3.889 | 0.990 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1 | 0.065 | 0.255 | 0.158 | 0.553 |
| 2 | 0.041 | 0.203 | 0.121 | 0.613 |
| 3 | 0.089 | 0.299 | 0.162 | 0.579 |
| 4 | 0.077 | 0.277 | 0.150 | 0.585 |
| 5 | 0.032 | 0.179 | 0.096 | 0.772 |
| 6 | 0.032 | 0.179 | 0.109 | 0.761 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1 | 0.007 | 0.083 | 0.060 | 0.837 |
| 2 | 0.009 | 0.096 | 0.052 | 0.821 |
| 3 | 0.011 | 0.104 | 0.063 | 0.724 |
| 4 | 0.019 | 0.136 | 0.068 | 0.664 |
| 5 | 0.012 | 0.111 | 0.056 | 0.686 |
| 6 | 0.027 | 0.166 | 0.088 | 0.530 |

| Model | Description | Hyperparameter (value) | Formula |
|---|---|---|---|
| GB | GB builds its prediction with an ensemble method that uses gradient descent to minimize the loss function [55]. | Loss function (classification: binary_crossentropy; regression: least_squares); learning rate (0.1); maximum number of trees (100); maximum number of leaves for each tree (31); minimum number of samples per leaf (20) | Terms: the loss function; the argument that minimizes the expected value of the loss function. |
| KNN | KNN forms its prediction as a weighted average of the targets of the closest neighbors [56]. | Number of neighbors (3); weight function used for prediction (uniform); algorithm used to compute the nearest neighbors (auto); leaf size (30); power parameter (2); distance metric used for the tree (minkowski) | Terms: a test example; one of the k nearest neighbors in the training set; an indicator that the training example belongs to a given class; the argument that gives the maximum predicted probability. |
| RF | RF is a bagging method consisting of a collection of decision trees, where the prediction is obtained by a majority vote over the individual trees' predictions [57]. | Number of trees (1000); function to measure the quality of a split (classification: Gini; regression: MSE); minimum number of samples required to split (2); minimum number of samples required to be at a leaf node (1); minimum weighted fraction of total weights required to be at a leaf node (0); number of features to consider when looking for the best split (auto); random state (42) | Terms: the total number of trees; the prediction of the individual tree at a node. |
| SVM | The prediction of SVM depends on the hyperplane and the decision boundary in multidimensional space, where the algorithm finds the best-fit line containing the maximum number of points [58]. | Regularization parameter (1); kernel (classification: linear; regression: RBF); stopping criteria tolerance (1 × 10⁻³); hard limit on iterations within solver (−1); kernel coefficient for RBF (regression only: scale); epsilon (regression only: 0.1) | Terms: the regularization parameter; the loss function; the hyperplane with a maximum margin. |

| Model | TN | FP | FN | TP | Accuracy |
|---|---|---|---|---|---|
| 1D-CNN | 41 | 1 | 1 | 6 | 0.959 |
| GB | 35 | 1 | 2 | 3 | 0.927 |
| KNN | 34 | 1 | 2 | 4 | 0.927 |
| RF | 33 | 2 | 3 | 3 | 0.878 |
| SVM | 31 | 2 | 4 | 4 | 0.854 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1D-CNN | 0.330 | 0.575 | 0.375 | 0.766 |
| GB | 0.339 | 0.594 | 0.395 | 0.714 |
| KNN | 0.437 | 0.661 | 0.396 | 0.677 |
| RF | 0.901 | 0.949 | 0.422 | 0.573 |
| SVM | 0.551 | 0.742 | 0.514 | 0.578 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1D-CNN | 18.240 | 4.271 | 2.411 | 0.993 |
| GB | 288.692 | 16.990 | 13.900 | 0.845 |
| KNN | 18.570 | 4.309 | 2.535 | 0.990 |
| RF | 77.758 | 8.818 | 5.854 | 0.954 |
| SVM | 83.300 | 9.126 | 8.335 | 0.904 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1D-CNN | 0.032 | 0.179 | 0.096 | 0.772 |
| GB | 288.692 | 16.990 | 13.900 | 0.548 |
| KNN | 18.570 | 4.309 | 2.535 | 0.564 |
| RF | 77.758 | 8.818 | 5.854 | 0.735 |
| SVM | 83.300 | 9.126 | 8.335 | 0.624 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| 1D-CNN | 0.007 | 0.083 | 0.060 | 0.837 |
| GB | 0.023 | 0.153 | 0.086 | 0.578 |
| KNN | 0.014 | 0.118 | 0.079 | 0.603 |
| RF | 0.014 | 0.119 | 0.092 | 0.292 |
| SVM | 0.026 | 0.162 | 0.122 | 0.570 |

| Output | Test | Test Statistic | p-Value | Critical Value |
|---|---|---|---|---|
| Slurry–PLUG_SIGN | Kruskal-Wallis | 12.2000 | 0.0159 | 9.4877 |
| Slurry–SAND_PRODUCED | ANOVA | 11.0518 | 1.254 × 10⁻⁸ | 2.3881 |
| Slurry–RETAINED_PRM | ANOVA | 35.4468 | 4.254 × 10⁻¹⁸ | 2.4675 |
| Slurry–SAND_PROD_PER_AREA | ANOVA | 23.9964 | 3.307 × 10⁻¹⁸ | 2.3894 |
| Sand pack–SAND_PROD_PER_AREA | ANOVA | 35.3620 | 3.283 × 10⁻²⁵ | 2.3948 |
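The Kruskal-Wallis row can be checked by hand. Assuming five groups are compared (1D-CNN, GB, KNN, RF, and SVM), the statistic is referred to a chi-square distribution with 5 − 1 = 4 degrees of freedom, whose upper-tail probability has a simple closed form. The sketch below recomputes the p-value for the reported statistic of 12.2 and applies the usual 5% decision rule; the function name `chi2_sf_df4` and the 0.05 significance level are assumptions made for illustration.

```python
import math


def chi2_sf_df4(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 4 d.o.f.

    For an even number of degrees of freedom 2k the survival function is
    exp(-x/2) * sum_{j<k} (x/2)**j / j!, which for k = 2 is exp(-x/2) * (1 + x/2).
    """
    if x < 0:
        return 1.0
    return math.exp(-x / 2.0) * (1.0 + x / 2.0)


statistic = 12.2          # Kruskal-Wallis statistic reported for PLUG_SIGN
alpha = 0.05              # assumed significance level
p_value = chi2_sf_df4(statistic)
reject_null = p_value < alpha

print(round(p_value, 4), reject_null)
```

The recomputed p-value agrees with the reported 0.0159, and the 5% critical value for 4 degrees of freedom is about 9.488, so the statistic of 12.2 exceeds it and the null hypothesis of equal model performance is rejected under these assumptions.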

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Razak, N.N.A.; Abdulkadir, S.J.; Maoinser, M.A.; Shaffee, S.N.A.; Ragab, M.G. One-Dimensional Convolutional Neural Network with Adaptive Moment Estimation for Modelling of the Sand Retention Test. Appl. Sci. 2021, 11, 3802. https://doi.org/10.3390/app11093802
