A Novel Deep Learning Based Model for Tropical Intensity Estimation and Post-Disaster Management of Hurricanes

Abstract

1. Introduction

2. Literature Review

Research Gaps and Motivation

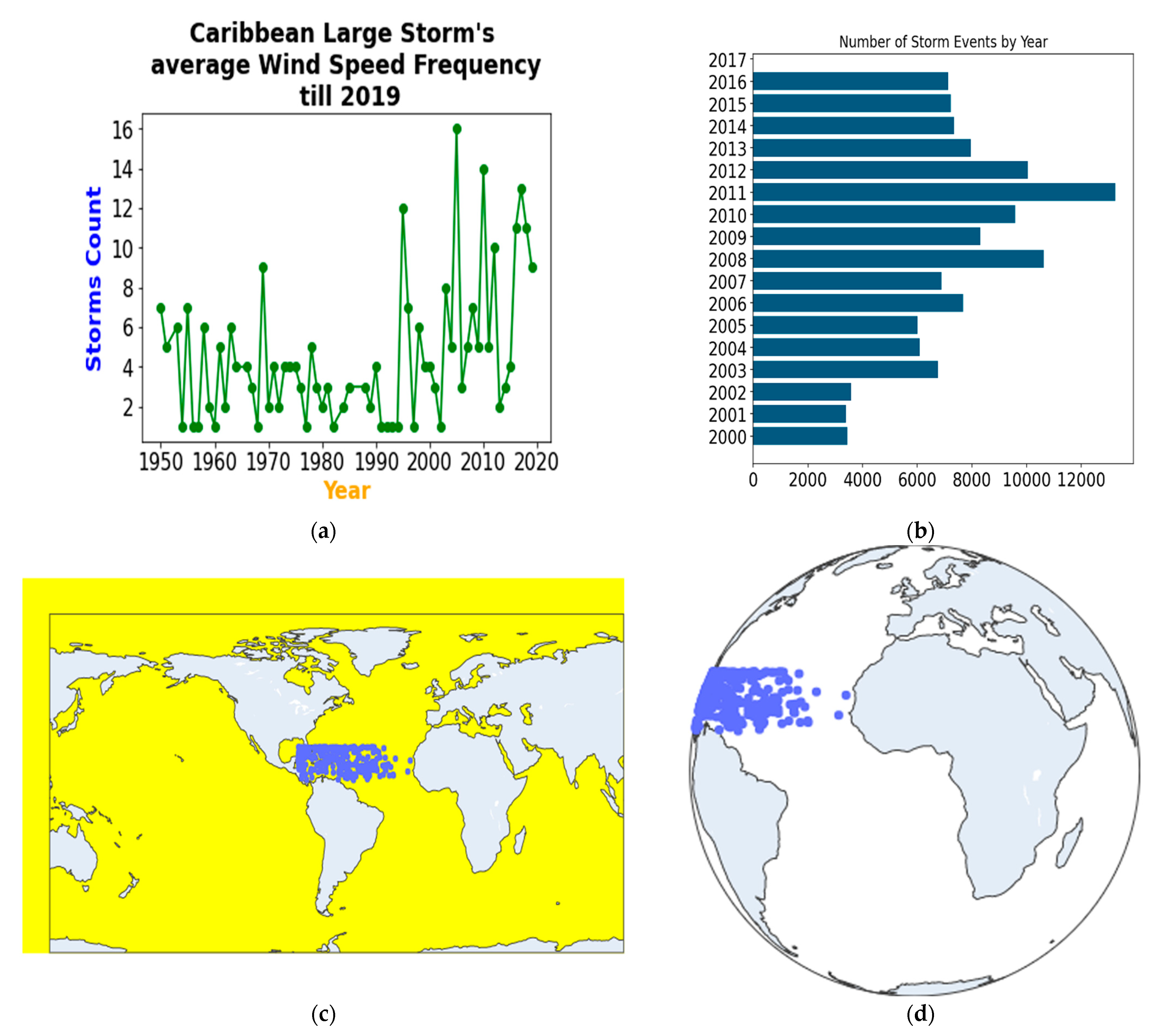

- Exploratory data analysis and visualization of hurricane data are carried out.

- An improved deep CNN (I-DCNN) model is developed to estimate hurricane intensity from an infrared satellite imagery dataset.

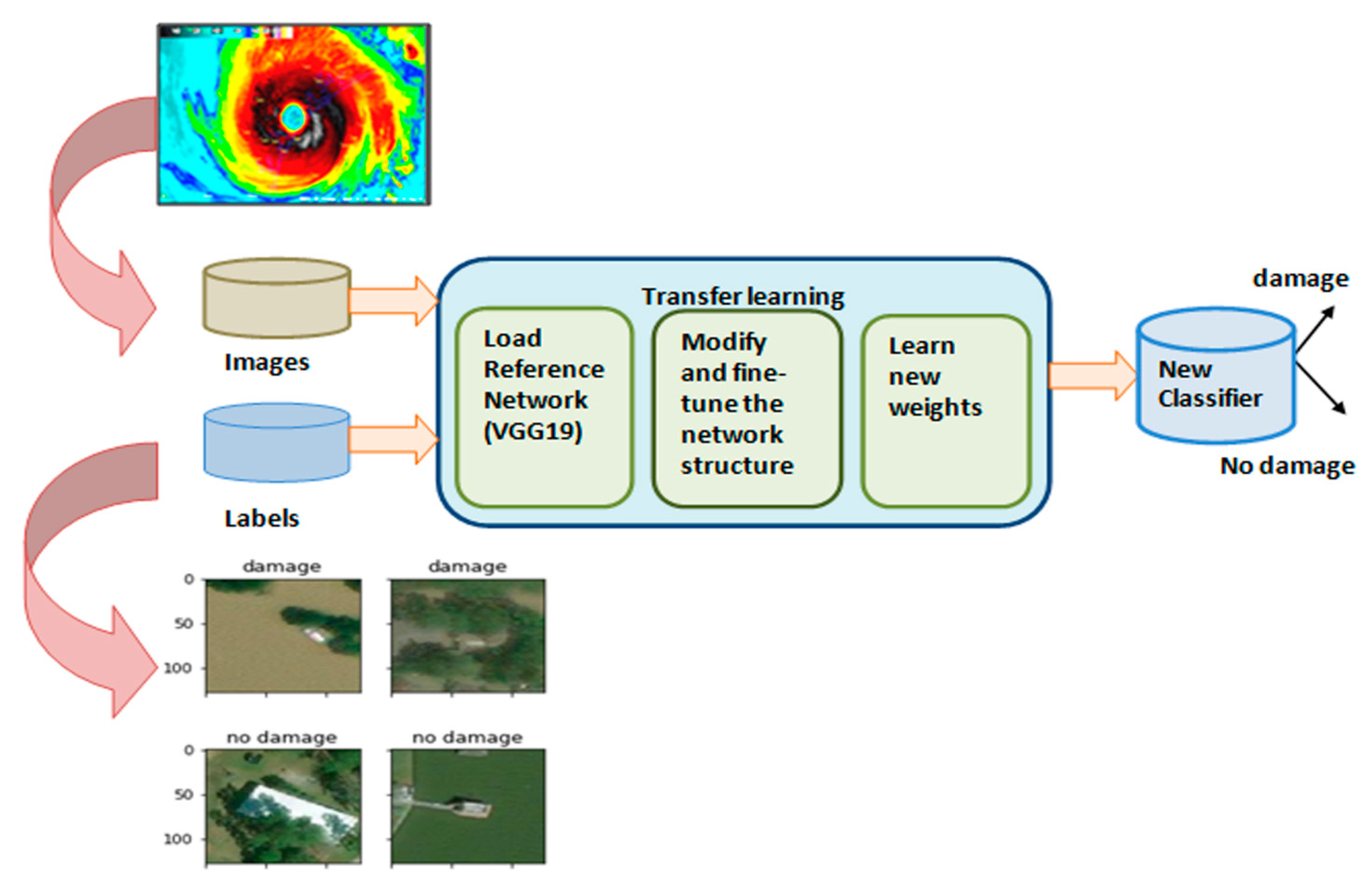

- Hurricane damage is assessed and various extreme weather events are categorized using a VGG 19 CNN with data augmentation and transfer learning.

- Mitigation steps for reducing hurricane risk are explored.

3. Methodology

3.1. Theoretical Background

3.2. Dataset Description

3.2.1. Data for Hurricane Intensity Estimation

3.2.2. Data for Hurricane Damage Prediction

3.2.3. Data for Extreme Weather Event Classification

3.3. Methodology

3.3.1. Hurricane Intensity Estimation

3.3.2. Dropout and Batch Normalization

3.3.3. Hurricane Damage Prediction

3.3.4. Classification of Severe Weather Events

3.4. VGG 19 Architecture

4. Performance Metrics and Evaluation

5. Results and Discussions

5.1. Prediction of Hurricane Intensity

5.2. Hurricane Damage Prediction

5.3. Detection and Annotation of Severe Weather Events

5.4. Hurricane Risk Mitigation

6. Conclusions and Future Work

- For hurricane intensity estimation, an improved deep CNN model is trained on hurricane satellite images together with wind speed data. After removing the max-pooling layers and adding batch normalization after the first convolution layer, the proposed model achieves a lower RMSE of 7.6 knots and an MAE of 6.68 knots.

- For building damage assessment in post-disaster management, transfer learning using feature extraction with the VGG 19 CNN achieves an accuracy of 98%, higher than that of the VGG 16 model, with most predictions being true positives.

- For classifying severe weather events, fine-tuning VGG 19 on the video datasets achieves an accuracy of 97%.

- The importance of mitigation measures against hurricanes is addressed.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Smith, G. Hurricane names: A bunch of hot air? Weather Clim. Extrem. 2016, 12, 80–84.

- Schwartz, S.B. Sea of Storms: A History of Hurricanes in the Greater Caribbean from Columbus to Katrina; Princeton University Press: Princeton, NJ, USA, 2015.

- Mori, N.; Takemi, T. Impact assessment of coastal hazards due to future changes of tropical cyclones in the North Pacific Ocean. Weather Clim. Extrem. 2016, 11, 53–69.

- Karpatne, A.; Ebert-Uphoff, I.; Ravela, S.; Babaie, H.; Kumar, V. Machine learning for the geosciences: Challenges and opportunities. IEEE Trans. Knowl. Data Eng. 2019, 31, 1544–1554.

- Zipser, E.; Liu, C.; Cecil, D.; Nesbitt, S.; Yorty, S. Where are the most intense thunderstorms on Earth? Bull. Am. Meteorol. Soc. 2006, 87, 1057–1071.

- Gagne, D.J., II; Williams, J.K.; Brown, R.A.; Basara, J.B. Enhancing understanding and improving prediction of severe weather through spatiotemporal relational learning. Mach. Learn. 2014, 95, 27–50.

- McGovern, A.; Elmore, K.L.; Gagne, D.J., II; Haupt, S.E.; Karstens, C.D.; Lagerquist, R.; Smith, T.; Williams, J.K. Using Artificial Intelligence to improve real-time decision making. Bull. Am. Meteorol. Soc. 2017, 98, 2073–2090.

- Olander, T.; Velden, C. The current status of the UW-CIMSS Advanced Dvorak Technique (ADT). In Proceedings of the 30th Conference Hurricanes Tropical Meteorology, Madison, WI, USA, 17 April 2012; American Meteorological Society: Boston, MA, USA, 2012.

- Olander, T.; Velden, C. The advanced Dvorak technique: Continued development of an objective scheme to estimate tropical cyclone intensity using geostationary infrared satellite imagery. Weather Forecast. 2007, 22, 287–298.

- Olander, T.; Velden, C. The advanced Dvorak technique (ADT) for estimating tropical cyclone intensity: Update and new capabilities. Weather Forecast. 2019, 34, 905–922.

- Pineros, M.; Ritchie, E.; Tyo, J. Estimating tropical cyclone intensity from infrared image data. Weather Forecast. 2011, 26, 690–698.

- Pineros, M.; Ritchie, E.; Tyo, J. Objective measures of tropical cyclone structure and intensity change from remotely sensed infrared image data. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3574–3580.

- Ritchie, E.; Valliere-Kelley, G.; Piñeros, M.; Tyo, J. Tropical cyclone intensity estimation in the North Atlantic basin using an improved deviation angle variance technique. Weather Forecast. 2012, 27, 1264–1277.

- Ritchie, E.; Wood, K.; Rodríguez-Herrera, O.; Pineros, M.; Tyo, J. Satellite-derived tropical cyclone intensity in the North Pacific Ocean using the deviation-angle variance technique. Weather Forecast. 2014, 29, 505–516.

- Li, L.; Zhou, Y.; Wang, H.; Zhou, H.; He, X.; Wu, T. An Analytical Framework for the Investigation of Tropical Cyclone Wind Characteristics over Different Measurement Conditions. Appl. Sci. 2019, 9, 5385.

- Hay, J.; Mimura, N. The changing nature of extreme weather and climate events: Risks to sustainable development. Geomat. Nat. Hazards Risk 2010, 1, 3–18.

- Devaraj, J.; Elavarasan, R.M.; Pugazhend, R.; Shafiullah, G.M.; Ganesan, S.; Jeysree, A.K.; Khan, I.A.; Hossain, E. Forecasting of COVID-19 cases using deep learning models: Is it reliable and practically significant? Results Phys. 2021, 21, 103817.

- Raz, T.; Liwag, C.R.E.U.; Valentine, A.; Andres, L.; Castro, L.T.; Cuña, A.C.; Vinarao, C.; Raza, T.K.S.; Mchael, K.; Marsian, E.; et al. Extreme weather disasters challenges for sustainable development: Innovating a science and policy framework for disaster-resilient and sustainable, Quezon City, Philippines. Prog. Disaster Sci. 2020, 5, 100066.

- Bao, X.; Jiang, D.; Yang, X.; Wang, H. An improved deep belief network for traffic prediction considering weather factors. Alex. Eng. J. 2021, 60, 413–420.

- Devaraj, J.; Elavarasan, R.M.; Shafiullah, G.M.; Jamal, T.; Khan, I. A holistic review on energy forecasting using big data and deep learning models. Int. J. Energy Res. 2021.

- Anbarasana, M.; Muthu, B.A.; Sivaparthipan, C.B.; Sundarasekar, R.; Dine, S. Detection of flood disaster system based on IoT, big data and convolutional deep neural network. Comput. Commun. 2020, 150, 150–157.

- Rysman, J.L.L.; Claud, C.; Dafis, S. Global monitoring of deep convection using passive microwave observations. Atmos. Res. 2021, 247, 105244.

- Tien, D.; Nhat-DucHoang, D.; Martínez-Álvarez, F.; Thi Ngo, P.; Viet Hoa, P.; Dat Pham, T.; Samui, P.; Costacheij, R. A novel deep learning neural network approach for predicting flash flood susceptibility: A case study at a high frequency tropical storm area. Sci. Total Environ. 2020, 701, 134413.

- Kordmahalleh, M.M.; Sefidmazgi, M.G.; Homaifar, A.A. A Sparse Recurrent Neural Network for Trajectory Prediction of Atlantic Hurricanes. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO '16), Denver, CO, USA, 20–24 July 2016; pp. 957–964.

- Mangalathu, S.; Burton, H.V. Deep learning-based classification of earthquake-impacted buildings using textual damage descriptions. Int. J. Disaster Risk Reduct. 2019, 36, 101111.

- Alemany, S.; Beltran, J.; Perez, A.; Ganzfried, S. Predicting Hurricane Trajectories Using a Recurrent Neural Network. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019.

- Chen, R.; Wang, X.; Zhang, W.; Zhu, X.; Li, A.; Yang, C. A hybrid CNN-LSTM model for typhoon formation forecasting. Geoinformatica 2019, 23, 375–396.

- Mohammadi, M.E.; Watson, D.P.; Wood, R.L. Deep Learning-Based Damage Detection from Aerial SfM Point Clouds. Drones 2019, 3, 68.

- Zhou, K.H.; Zheng, Y.G.; Li, B. Forecasting different types of convective weather: A deep learning approach. J. Meteorol. Res. 2019, 33, 797–809.

- Snaiki, R.; Wu, T. Knowledge-enhanced deep learning for simulation of tropical cyclone boundary-layer winds. J. Wind Eng. Ind. Aerodyn. 2019, 194, 103983.

- Chen, B.F.; Chen, B.; Elsberry, R.L. Estimating Tropical Cyclone Intensity by Satellite Imagery Utilizing Convolutional Neural Networks. Weather Forecast. 2019, 34, 447–465.

- Castro, R.; Souto, Y.M.; Ogasawara, E.; Porto, F.; Bezerra, E. STConvS2S: Spatiotemporal Convolutional Sequence to Sequence Network for weather forecasting. Neurocomputing 2020, 426, 285–298.

- Kim, S.; Kim, H.; Lee, J.; Yoon, S.W.; Kahou, S.E.; Kashinath, K.; Prabhat, M. Deep-Hurricane-Tracker: Tracking and Forecasting Extreme Climate Events. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019.

- Li, Y.; Hu, W.; Dong, H.; Zhang, X. Building Damage Detection from Post-Event Aerial Imagery Using Single Shot Multibox Detector. Appl. Sci. 2019, 9, 1128.

- Haghroosta, T. Comparative study on typhoon’s wind speed prediction by a neural networks model and a hydrodynamical model. MethodsX 2019, 6, 633–640.

- Chen, Y.; Zhang, S.; Zhang, W.; Peng, J.; Cai, Y. Multifactor spatio-temporal correlation model based on a combination of convolutional neural network and long short-term memory neural network for wind speed forecasting. Energy Convers. Manag. 2019, 185, 783–799.

- Neshat, M.; Nezhad, M.M.; Abbasnejad, E.; Mirjalili, S.; Tjernberg, B.L.; Garcia, A.D.; Alexander, B.; Wagner, M. A deep learning-based evolutionary model for short-term wind speed forecasting: A case study of the Lillgrund offshore wind farm. Energy Convers. Manag. 2021, 236, 114002.

- Meka, R.; Alaeddini, A.; Bhaganagar, K. A robust deep learning framework for short-term wind power forecast of a full-scale wind farm using atmospheric variables. Energy 2021, 221, 119759.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. In Proceedings of the NIPS’12 Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 1, pp. 1097–1105.

- Bengio, Y.; Courville, A. Deep learning of representations. In Handbook on Neural Information Processing; Springer: Berlin, Germany, 2013; Volume 49, pp. 1–28.

- Pradhan, R.; Aygun, R.; Maskey, M.; Ramachandran, R.; Cecil, D. Tropical cyclone intensity estimation using a deep convolutional neural network. IEEE Trans. Image Process. 2018, 27, 692–702.

- Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Computer Vision – ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Zurich, Switzerland, 2014; pp. 818–833.

- Dataset for Hurricane Is Accessed from NHC. Available online: https://www.nhc.noaa.gov/ (accessed on 20 November 2020).

- Satellite Imagery Data Are Accessed from HURSAT2. Available online: https://www.ncdc.noaa.gov/hursat/ (accessed on 20 November 2020).

- Satellite Dataset for Hurricane Damage Prediction Is Accessed from IEEE DataPort. Available online: https://ieee-dataport.org/keywords/hurricane (accessed on 20 November 2020).

- Classification of Extreme Weather Events Dataset from PyImageSearch Google Images. Available online: https://www.pyimagesearch.com (accessed on 30 December 2020).

- Bertinelli, L.; Mohan, P.; Strobl, E. Hurricane damage risk assessment in the Caribbean: An analysis using synthetic hurricane events and nightlight imagery. Ecol. Econ. 2016, 124, 135–144.

- Beurs, K.M.; McThompson, N.S.; Owsley, B.C.; Henebry, G.M. Hurricane damage detection on four major Caribbean islands. Remote Sens. Environ. 2019, 229, 1–13.

- Sealya, K.; Strobl, E. A hurricane loss risk assessment of coastal properties in the Caribbean: Evidence from the Bahamas. Ocean Coast. Manag. 2017, 149, 42–51.

- Medina, N.; Abebe, Y.A.; Sanchez, A.; Vojinovic, Z. Assessing Socio economic Vulnerability after a Hurricane: A Combined Use of an Index-Based approach and Principal Components Analysis. Sustainability 2020, 12, 1452.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Hinz, T.; Navarro-Guerrero, N.; Magg, S.; Wermter, S. Speeding up the Hyperparameter Optimization of Deep Convolutional Neural Networks. Int. J. Comput. Intell. Appl. 2018, 17, 1850008.

- Wang, X.; Gao, L.; Wang, P.; Sun, X.; Liu, X. Two-stream 3-D convnet fusion for action recognition in videos with arbitrary size and length. IEEE Trans. Multimed. 2018, 20, 634–644.

- Bai, Y.; Mas, E.; Koshimura, S. Towards operational satellite-based damage-mapping using u-net convolutional network: A case study of 2011 Tohoku earthquake-tsunami. Remote Sens. 2018, 10, 1626.

- Duarte, D.; Nex, F.; Kerle, N.; Vosselman, G. Satellite Image Classification Of Building Damages Using Airborne And Satellite Image Samples In A Deep Learning Approach. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 89–96.

- Ning, H.; Li, Z.; Hodgson, M.E. Prototyping a Social Media Flooding Photo Screening System Based on Deep Learning. ISPRS Int. J. Geo-Inf. 2020, 9, 104.

- Nguyen, D.T.; Ofli, F.; Imran, M.; Mitra, P. Damage assessment from social media imagery data during disasters. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2017, Sydney, Australia, 31 July–3 August 2017; pp. 569–576.

- Chen, S.A.; Escay, A.; Haberland, C.; Schneider, T.; Staneva, V.; Choe, Y. Benchmark dataset for automatic damaged building detection from post-hurricane remotely sensed imagery. IEEE Dataport 2019.

| Region | Development | Sustained Winds (km/h) |
|---|---|---|
| Tropical | Tropical Storm | 64 km/h to 118 km/h (about 40 to 73 miles/h) |
| Tropical | Hurricane | ≥ 119 km/h (74 miles/h) |
| Tropical | Tropical Depression | ≤ 63 km/h (39 miles/h) |
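The thresholds in the table above can be read as a simple lookup. The following is a minimal sketch, assuming sustained wind speed is given in km/h; the function name `classify_development` is hypothetical and not taken from the paper, and fractional speeds between 63 and 64 km/h (which the table does not cover) are treated here as a tropical depression.

```python
def classify_development(wind_kmh: float) -> str:
    """Map a sustained wind speed (km/h) to the development stage in the table.

    Thresholds follow the table: <= 63 km/h is a tropical depression,
    64 to 118 km/h a tropical storm, and >= 119 km/h a hurricane.
    """
    if wind_kmh < 0:
        raise ValueError("wind speed must be non-negative")
    if wind_kmh >= 119:
        return "Hurricane"
    if wind_kmh >= 64:
        return "Tropical Storm"
    # Assumption: anything below 64 km/h counts as a tropical depression.
    return "Tropical Depression"


if __name__ == "__main__":
    for speed in (40, 63, 64, 118, 119, 200):
        print(speed, "km/h ->", classify_development(speed))
```

Checking the boundary values (63, 64, 118, 119) is the quickest way to confirm that the comparison operators match the table.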

| Ref. | Forecasting Model | Severe Weather Type | Data Source, Data Set, Sample Size and Location | Accuracy and Evaluation | Purpose of Prediction | Limitations |

|---|---|---|---|---|---|---|

| [21] | Convolutional deep neural network (CDNN) | Flood disaster. | Big data of flood disaster for a period of 10 years. | CDNN outperforms Artificial neural networks (ANN) and DNN with higher accuracy of 91%. | Detection of flood using IoT, Big Data, and CDNN models along with Hadoop Distributed File System (HDFS) MapReduce tasks. | Difficult to acquire good prediction results for the spatio-temporal variables. |

| [22] | DEEPSTORM | Ice water path detection (IWP) in tropics, mid-latitudes, and hurricane prediction. | Microwave radiometer observations, Cloud profiling radar (CPR) measurements embedded in each radiometer pixel for the period spanning between 2006 and 2017. Hurricane data between Sep 2016 to Dec 2016 are considered. | Average root mean square error: 0.27 kg/m²; correlation index: 0.87; false alarm rate: 24%. | Deep moist atmospheric convection is accurate in the tropics, and IWP works well in the mid-latitudes. | Prone to over-fit, difficult to capture non-linear relationship between input and output data. Does not produce good results for long-term prediction. |

| [23] | Deep learning neural network (DLNN) | Flood susceptibility prediction. | The high frequency tropical storm area in Vietnam with attributes such as slope, curvature, stream density, and rainfall are considered. | DLNN outperforms multilayer perceptron neural network and support vector machine. Accuracy rate—92.05% Positive predictive value— 94.55%. | To predict the flash flood susceptibility levels using an inference model. | Feature selection is carried out using the information gain ratio. Automated feature selection at higher level using hybrid models can enhance the prediction accuracy. |

| [24] | Recurrent neural network (RNN) and Genetic algorithm (GA) | Hurricane prediction. | Atlantic hurricane data. | Proposed model provides nearly 85% greater accuracy than the other traditional models. | Dynamic time warping (DTW) that measures the distance between the target hurricanes improves the prediction accuracy. | RNN lacks in capturing the long-term dependencies in the data and fails to produce good results for the changing and dynamic nature of weather variables. |

| [25] | Long short term memory (LSTM) | Earthquake impacted building damage using textual descriptions. | California earthquake recorded building damage data with 3423 buildings (1552 green tagged, 1674 yellow tagged, 197 red tagged). | Accuracy of 86% is achieved to identify the ATC-20 tags for the test data. | Building damage assessment after the occurrence of an earthquake to help the emergency responders and recovery planners. | Model was trained for a single earthquake with a smaller number of textual information and components. Training the model to classify the multiple events with higher level components. |

| [26] | Grid-based Recurrent neural network (RNN) | Hurricane prediction. | Hurricanes and tropical storms from 1920 to 2012 from National Hurricane Center (NHC). | Mean squared error DEAN—0.0842; SANDY—0.0800; ISAAC—0.0592. | Grid-based RNN outperforms sparse based RNN average by considering latitude and longitude data. | When the size of the dataset increases, the conversion rate from locations of grid to latitude and longitude coordinates increases. |

| [27] | CNN-LSTM with 3DCNN and 2DCNN | Typhoon forecasting. | International Best Track Archive for Climate Stewardship (IBTrACS) tropical cyclone dataset of the World Meteorological Organization (WMO). | Hybrid CNN-LSTM: 0.852; AUC: 0.897. | Spatio-temporal sequence prediction and analysis of atmospheric variables are done in 3D space. | High-resolution satellite image data are not considered for typhoon prediction. |

| [28] | 3D Fully connected convolutional neural networks (FCNN) | Post-disaster assessment of hurricane damage. | Point cloud datasets at the south of Texas after Hurricane Harvey. | Salt Lake (Model-64): 97% accuracy. Port Aransas (Model-100): 97.4% accuracy. | To classify the objects of different types such as damages, undamaged structures, and neutral and terrain classes. | Achieved lower precision and recall values for the classes with similar geometric and color features. |

| [29] | Deep convolutional neural networks (DCNN) | Heavy rain (HR), hail, convective gusts (CG), and thunderstorms. | Severe convective weather (SCW) observations and National Centers for Environmental Prediction (NCEP) final (FNL) operational global analysis data during the period of March–October 2010–2014. | Threat score of thunderstorm: 16.1%, HR: 33.2%, hail: 178%, CG: 55.7%. | Deep CNN extracts nonlinear features of weather variables automatically and considers terrain features. | Analysis is done for different types of weather events by considering only a few datasets. The hybrid model can enhance the prediction accuracy for a large volume of data. |

| [30] | Knowledge-enhanced deep learning model | Tropical cyclone boundary layer winds. | Storm parameters such as spatial coordinates, storm size, and intensity values. | L2 norm for the noise cases with respect to noise free simulation are 0.0055, 0.0071, and 0.0093. | To predict the boundary layer winds associated with different tropical cyclones. Early warning can be given to prevent the tropical cyclone hazard. | To improve the performance of the knowledge enhanced deep learning model, the parameters such as pressure, wind shear, and friction force can be considered. |

| [31] | Convolutional neural network (CNN) | Tropical cyclone (TC). | Satellite infrared brightness temperature and microwave rain-rate data from 1097 global TCs between 2003–14 and optimized with data from 188 TCs between 2015–16. Testing data of 94 global TCs during 2017. | Root-mean-square intensity difference of 8.39 knots. | CNN estimates the TC intensity as a regression task and the RMSE is reduced to 8.74 kts because of post analysis smoothing. | Operational latency is higher and rain rate observation data in the short time window are not considered. |

| [32] | Spatio-temporal convolutional sequence to sequence network (STConvS2S) | Rainfall Prediction. | South America rainfall data and air temperature data. | Good accuracy is achieved with 23% better performance than the RNN-based models. | During learning, temporal order can be random, and the length of input and output sequences need not be equal. Spatio-temporal relationships are captured using only CNN. | Long-term dependencies in the temporal data cannot be efficiently handled and difficult to predict severe weather events with the more atmospheric variables. |

| [33] | ConvLSTM | Hurricane prediction. | 20 years of hurricane data from the National Hurricane Center (NHC). | ConvLSTM outperforms other models by gaining a good prediction accuracy of 87%. | Hurricane trajectories are predicted using density map sequences. | High computational costs and memory consumption for training. |

| [34] | Single shot multi box detector (SSD) algorithm. | Hurricane Sandy’s post-disaster damage prediction. | Hurricane Irma dataset from NHC. | Detection accuracy of mF1 and mAP increased by approximately 20% and 72% and the false alarm rate is reduced. | Data augmentation and pre-training improved the prediction accuracy. Gaussian noise deals with adaptability of complex images. | Real-time detection is complex to implement, and the model should be pre-trained on a large volume of datasets. |

| [35] | Weather Research and Forecasting (WRF) model and Adaptive Neuro-Fuzzy Inference System (ANFIS). | Typhoon wind speed prediction. | Six-hourly NCEP reanalysis of the 16 selected tropical cyclones from 1985 to 2011 in the South China Sea. Typhoon characteristics from the National Oceanic and Atmospheric Administration (NOAA). | ANN RMSE: 6.11; CC: 0.95; ANFIS RMSE: 3.78; CC: 0.98. | Intelligent neural networks outperform hydro-dynamic model because of the repetitive characteristics of typhoons. | The model mainly depends on the data chosen. For varying attributes, it is difficult to attain better performance. |

| [36] | Convolutional neural network and long short term memory (CNN-LSTM) and Multi-factor spatio-temporal correlation- CNN-LSTM (MFSTC-CNN-LSTM) | Wind speed forecasting. | Forty-six sites of data from the National Wind Institute in Texas from January 1 to June 29, 2018. The dataset contains attributes such as wind speed, wind direction, temperature, dew point, humidity, etc., with a 5-min interval. | Site name: ASPE Season: spring sum of squared error SSE—10809.8; MAE—1.0652; RMSE—1.4445; standard deviation error SDE—1.4444; index of agreement(IA)—0.9977; direction accuracy (DA)—46.9; Pearson correlation coefficient PCC—0.9400. | To improve the accuracy of wind-speed forecasting for enhancing the operational efficiency, power quality, and the economic benefit. | A data preprocessing technique is not employed to reduce the data noise. A hybrid model using a deep neural network (DNN) can improve the accuracy. |

| [37] | Evolutionary decomposition–hierarchical generalized normal distribution optimization-BiLSTM (ED-HGNDO-BiLSTM) | Short-term wind speed forecasting. | Swedish wind farm located in the Baltic Sea with the forecasting horizon of 10-min ahead and 1-h ahead. | Ten minutes ahead for B8 wind turbine at Lillgrund. ED-HGNDO-BiLSTM Model Summer Season: RMSE: 7.41 × 10⁻¹; MAE: 5.32 × 10⁻¹; MAPE: 1.24 × 10¹; R: 9.77 × 10⁻¹; Root Mean Square Logarithmic Error RMSLE: 1.41 × 10⁻¹; Theil’s Inequality Co-efficient TIC: 6.22 × 10⁻². | To improve the accuracy of short-term wind-speed forecasting using a hybrid model by classifying the wind-speed time-series data and analyzing the performance on four different seasons. | Advanced feature extraction techniques, meta-heuristics algorithm, and other hybrid deep learning models can improve the accuracy. |

| [38] | Temporal convolutional network model (TCN). | Short-term wind power forecast. | Twelve months of data of 86 wind turbines from a 130 MW utility scale wind farm. Multi-step prediction is done 0, 10, 20, 30, 40 and 50-min ahead. | Optimal power curves are obtained using TCN. TCN outperforms CNN and the hybrid model CNN+LSTM. | Total wind power is predicted using a TCN model with an orthogonal array tuning method (OATM) to optimize the hyper parameters of the proposed model. | Only the temporal variables along with the meteorological variables are considered. Identifying the spatio-temporal correlation using hybrid deep learning models can improve the performance of the wind power forecast. |

| Hurricane Category | Sustained Wind Speed Range (miles/h) |

|---|---|

| Tropical Depression | <=33 |

| Tropical Storm | 34–64 |

| Category 1 | 74–95 |

| Category 2 | 96–110 |

| Category 3 | 111–130 |

| Category 4 | 131–155 |

| Category 5 | >155 |

| Validation Fold | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 |

|---|---|---|---|---|---|

| RMSE | 17.8 knots | 17.4 knots | 8.2 knots | 8.1 knots | 7.6 knots |

| Optimizer/Technique Used | Mean Absolute Error (MAE) Knots | Root Mean Square Error (RMSE) Knots | Relative RMSE |

|---|---|---|---|

| RMSProp (no max-pooling, no batch normalization, and only dropout) | 7.21 kts | 9.47 kts | 0.19 |

| RMSProp (no max-pooling, with batch normalization and dropout) | 6.68 kts | 7.6 kts | 0.17 |

| Adam optimizer (no max-pooling, no batch normalization, and only dropout) | 8.68 kts | 10.18 kts | 0.22 |

| Adam optimizer (no max-pooling, with batch normalization and dropout) | 8.52 kts | 10.04 kts | 0.20 |
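The error measures reported in the table above can be computed as in the following minimal sketch. MAE and RMSE follow their standard definitions. The paper does not define "Relative RMSE" in this excerpt, so the version below (RMSE divided by the mean observed wind speed) is an assumption, and the sample wind speeds are made-up illustration values, not data from the paper.

```python
import math


def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def relative_rmse(actual, predicted):
    """RMSE normalized by the mean observed value (assumed definition)."""
    return rmse(actual, predicted) / (sum(actual) / len(actual))


if __name__ == "__main__":
    # Hypothetical wind speeds in knots, for illustration only.
    observed = [50.0, 80.0, 100.0]
    estimated = [55.0, 75.0, 110.0]
    print("MAE:", round(mae(observed, estimated), 2), "kts")
    print("RMSE:", round(rmse(observed, estimated), 2), "kts")
    print("Relative RMSE:", round(relative_rmse(observed, estimated), 3))
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same data, which matches the pattern in every row of the table.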

| Layer (Type) | Output Shape | Param # |

|---|---|---|

| input_1 (InputLayer) | [(None, 128, 128, 3)] | 0 |

| block1_conv1 (Conv2D) | (None, 128, 128, 64) | 1792 |

| block1_conv2 (Conv2D) | (None, 128, 128, 64) | 36,928 |

| block1_pool (MaxPooling2D) | (None, 64, 64, 64) | 0 |

| block2_conv1 (Conv2D) | (None, 64, 64, 128) | 73,856 |

| block2_conv2 (Conv2D) | (None, 64, 64, 128) | 147,584 |

| block2_pool (MaxPooling2D) | (None, 32, 32, 128) | 0 |

| block3_conv1 (Conv2D) | (None, 32, 32, 256) | 295,168 |

| block3_conv2 (Conv2D) | (None, 32, 32, 256) | 590,080 |

| block3_conv3 (Conv2D) | (None, 32, 32, 256) | 590,080 |

| block3_conv4 (Conv2D) | (None, 32, 32, 256) | 590,080 |

| block3_pool (MaxPooling2D) | (None, 16, 16, 256) | 0 |

| block4_conv1 (Conv2D) | (None, 16, 16, 512) | 1,180,160 |

| block4_conv2 (Conv2D) | (None, 16, 16, 512) | 2,359,808 |

| block4_conv3 (Conv2D) | (None, 16, 16, 512) | 2,359,808 |

| block4_conv4 (Conv2D) | (None, 16, 16, 512) | 2,359,808 |

| block4_pool (MaxPooling2D) | (None, 8, 8, 512) | 0 |

| block5_conv1 (Conv2D) | (None, 8, 8, 512) | 2,359,808 |

| block5_conv2 (Conv2D) | (None, 8, 8, 512) | 2,359,808 |

| block5_conv3 (Conv2D) | (None, 8, 8, 512) | 2,359,808 |

| block5_conv4 (Conv2D) | (None, 8, 8, 512) | 2,359,808 |

| block5_pool (MaxPooling2D) | (None, 4, 4, 512) | 0 |

| Total params: 20,024,384 | | |

| Trainable params: 0 | | |

| Non-trainable params: 20,024,384 | | |
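The parameter counts in the layer summary above can be reproduced by hand. The sketch below assumes the standard VGG 19 convolutional base (3 × 3 kernels with a bias per filter, and 2, 2, 4, 4, 4 convolutions per block), which matches the layer names in the table; the helper names are hypothetical.

```python
# (output channels, number of conv layers) for the five VGG 19 blocks.
VGG19_BLOCKS = [(64, 2), (128, 2), (256, 4), (512, 4), (512, 4)]


def conv_params(in_channels: int, out_channels: int, kernel: int = 3) -> int:
    """Weights plus biases of one Conv2D layer with a square kernel."""
    return (kernel * kernel * in_channels + 1) * out_channels


def vgg19_base_params(input_channels: int = 3) -> int:
    """Total parameters of the VGG 19 convolutional base (no dense head)."""
    total = 0
    in_channels = input_channels
    for out_channels, n_layers in VGG19_BLOCKS:
        for _ in range(n_layers):
            total += conv_params(in_channels, out_channels)
            in_channels = out_channels
    return total


if __name__ == "__main__":
    print("block1_conv1:", conv_params(3, 64))
    print("block1_conv2:", conv_params(64, 64))
    print("VGG 19 base total:", vgg19_base_params())
```

Pooling layers contribute no parameters, so the total depends only on the convolutions and is the same for 128 × 128 and 224 × 224 inputs.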

Model: “Sequential”

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg19 (Model) | (None, 4, 4, 512) | 20,024,384 |
| global_average_pooling2d (GlobalAveragePooling2D) | (None, 512) | 0 |
| dense (Dense) | (None, 1) | 513 |
| Total params: 20,024,897 | | |
| Trainable params: 513 | | |
| Non-trainable params: 20,024,384 | | |

Model: “Sequential”

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg19 (Model) | (None, 4, 4, 512) | 20,024,384 |
| global_average_pooling2d (GlobalAveragePooling2D) | (None, 512) | 0 |
| dense (Dense) | (None, 1) | 513 |
| Total params: 20,024,897 | | |
| Trainable params: 7,079,937 | | |
| Non-trainable params: 12,944,960 | | |
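The difference between the two model summaries (feature extraction versus fine-tuning) comes down to how many layers are left trainable. The sketch below is plain arithmetic on the counts listed in the summaries. That the fine-tuned variant unfreezes the last three block5 convolutions is an inference from the numbers, not something stated in this excerpt, and `split_params` is a hypothetical helper.

```python
BASE_PARAMS = 20_024_384          # VGG 19 convolutional base
BLOCK5_CONV_PARAMS = 2_359_808    # each block5 Conv2D layer
HEAD_PARAMS = 512 * 1 + 1         # Dense(1) on 512 pooled features: weights + bias


def split_params(unfrozen_block5_layers: int):
    """Return (trainable, non_trainable) counts for a given unfreezing depth."""
    if not 0 <= unfrozen_block5_layers <= 4:
        raise ValueError("block5 has four convolution layers")
    total = BASE_PARAMS + HEAD_PARAMS
    trainable = unfrozen_block5_layers * BLOCK5_CONV_PARAMS + HEAD_PARAMS
    return trainable, total - trainable


if __name__ == "__main__":
    print("total:", BASE_PARAMS + HEAD_PARAMS)
    print("feature extraction:", split_params(0))
    print("fine-tuning (3 block5 convs unfrozen):", split_params(3))
```

With no layers unfrozen, only the 513 head parameters are trainable. Unfreezing three block5 convolutions gives 7,079,937 trainable and 12,944,960 non-trainable parameters, which together sum to 20,024,897.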

| Ref | Disaster Type | Dataset | Model Used | Accuracy |

|---|---|---|---|---|

| [54] | Hurricane | Hurricane satellite images | Deep CNN | 80.66% |

| [55] | Flood | Flood video dataset | Base CNN | 70% |

| [56] | Hurricane | AIDR dataset | VGG-16 | 74% |

| [57] | Hurricane | Hurricane Sandy dataset | Convolutional Auto-encoders | 88.4% |

| [58] | Hurricane | Hurricane Harvey | VGG 16 CNN | 89.5% |

| Proposed | Hurricane | Hurricane Harvey | VGG 19 CNN (with fine-tuning) | 98% |

| Layer (Type) | Output Shape | Param # |

|---|---|---|

| input_1 (Input Layer) | (None, 224, 224, 3) | 0 |

| block1_conv1 (Conv2D) | (None, 224, 224, 64) | 1792 |

| block1_conv2 (Conv2D) | (None, 224, 224, 64) | 36,928 |

| block1_pool (MaxPooling2D) | (None, 112, 112, 64) | 0 |

| block2_conv1 (Conv2D) | (None, 112, 112, 128) | 73,856 |

| block2_conv2 (Conv2D) | (None, 112, 112, 128) | 147,584 |

| block2_pool (MaxPooling2D) | (None, 56, 56, 128) | 0 |

| block3_conv1 (Conv2D) | (None, 56, 56, 256) | 295,168 |

| block3_conv2 (Conv2D) | (None, 56, 56, 256) | 590,080 |

| block3_conv3 (Conv2D) | (None, 56, 56, 256) | 590,080 |

| block3_conv4 (Conv2D) | (None, 56, 56, 256) | 590,080 |

| block3_pool (MaxPooling2D) | (None, 28, 28, 256) | 0 |

| block4_conv1 (Conv2D) | (None, 28, 28, 512) | 1,180,160 |

| block4_conv2 (Conv2D) | (None, 28, 28, 512) | 2,359,808 |

| block4_conv3 (Conv2D) | (None, 28, 28, 512) | 2,359,808 |

| block4_conv4 (Conv2D) | (None, 28, 28, 512) | 2,359,808 |

| block4_pool (MaxPooling2D) | (None, 14, 14, 512) | 0 |

| block5_conv1 (Conv2D) | (None, 14, 14, 512) | 2,359,808 |

| block5_conv2 (Conv2D) | (None, 14, 14, 512) | 2,359,808 |

| block5_conv3 (Conv2D) | (None, 14, 14, 512) | 2,359,808 |

| block5_conv4 (Conv2D) | (None, 14, 14, 512) | 2,359,808 |

| block5_pool (MaxPooling2D) | (None, 7, 7, 512) | 0 |

| flatten (Flatten) | (None, 25,088) | 0 |

| dense (Dense) | (None, 512) | 12,845,568 |

| dropout (Dropout) | (None, 512) | 0 |

| dense_1 (Dense) | (None, 4) | 2052 |

| Total params: 32,872,004 | ||

| Trainable params: 12,847,620 | ||

| Non-trainable params: 20,024,384 | ||
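The output shapes and head parameter counts in the summary above follow from two rules: each of the five max-pooling layers halves the spatial size, and a dense layer has one weight per input-output pair plus one bias per output. The following is a minimal sketch under those assumptions; `vgg19_head_summary` is a hypothetical helper, and its defaults (224 × 224 input, 512 hidden units, 4 classes) are read off the table.

```python
def vgg19_head_summary(input_size: int = 224, hidden_units: int = 512, n_classes: int = 4):
    """Trace the feature-map size through five 2x2 poolings and count head parameters."""
    side = input_size
    for _ in range(5):              # block1_pool ... block5_pool
        side //= 2
    flatten = side * side * 512     # block5 outputs 512 channels
    dense = flatten * hidden_units + hidden_units
    dense_1 = hidden_units * n_classes + n_classes
    return {
        "feature_map": (side, side, 512),
        "flatten": flatten,
        "dense": dense,
        "dense_1": dense_1,
        "trainable": dense + dense_1,
    }


if __name__ == "__main__":
    for key, value in vgg19_head_summary().items():
        print(key, value)
```

Adding the frozen base (20,024,384 parameters) to the trainable head gives the total of 32,872,004 listed in the summary.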

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Hurricane | 0.98 | 0.96 | 0.97 | 207 |
| Earthquake | 0.96 | 0.93 | 0.95 | 364 |
| Flood | 0.91 | 0.94 | 0.93 | 265 |
| Wildfire | 0.97 | 0.98 | 0.97 | 249 |
| Accuracy | | | 0.96 | 1107 |
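The F1-score column in the report above is the harmonic mean of precision and recall. The sketch below recomputes it from the rounded precision and recall values in the table, so the results can differ from the reported column by about 0.01; the dictionary layout and the macro average are illustrative additions, not part of the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) per class, copied from the classification report.
REPORT = {
    "Hurricane": (0.98, 0.96),
    "Earthquake": (0.96, 0.93),
    "Flood": (0.91, 0.94),
    "Wildfire": (0.97, 0.98),
}


def macro_f1(report) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores = [f1_score(p, r) for p, r in report.values()]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    for name, (p, r) in REPORT.items():
        print(name, round(f1_score(p, r), 3))
    print("macro F1:", round(macro_f1(REPORT), 3))
```

The harmonic mean penalizes imbalance: a class with high precision but low recall scores lower than its arithmetic mean would suggest.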

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Devaraj, Jayanthi, Sumathi Ganesan, Rajvikram Madurai Elavarasan, and Umashankar Subramaniam. 2021. "A Novel Deep Learning Based Model for Tropical Intensity Estimation and Post-Disaster Management of Hurricanes" Applied Sciences 11, no. 9: 4129. https://doi.org/10.3390/app11094129