Abstract

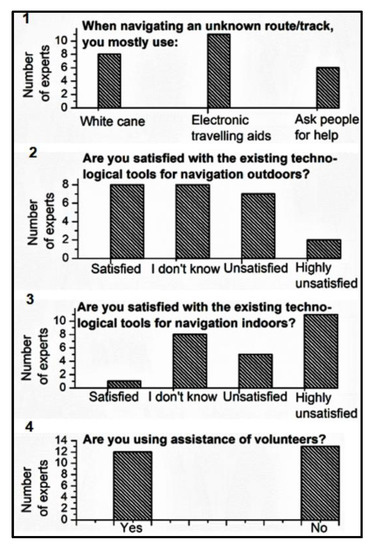

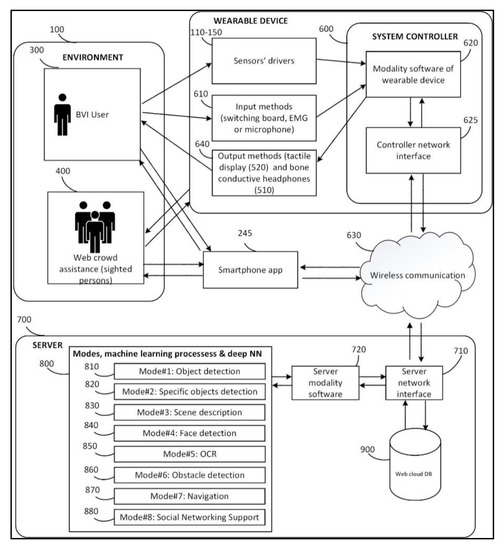

This paper presents an approach to enhance electronic traveling aids (ETAs) for people who are blind and severely visually impaired (BSVI) using indoor orientation and guided navigation by employing social outsourcing of indoor route mapping and assistance processes. This type of approach is necessary because GPS does not work well indoors, and infrastructural investments for indoor navigation are absent or too costly to install. Our approach proposes the prior outsourcing of vision-based recordings of indoor routes to an online network of sighted volunteers, who gather and constantly update a web cloud database of indoor routes using specialized sensory equipment and web services. Computational intelligence-based algorithms process sensory data and prepare them for BSVI usage. In this way, people who are BSVI can obtain ready-to-use access to the indoor routes database. This type of service has not previously been offered in such a setting. Specialized wearable sensory ETA equipment, depth cameras, smartphones, computer vision algorithms, tactile and audio interfaces, and computational intelligence algorithms are employed for this purpose. The integration of semantic data of points of interest (such as stairs, doors, WC, entrances/exits) and evacuation schemes could make the proposed approach even more attractive to BSVI users. The presented approach crowdsources volunteers’ real-time online help for complex navigational situations using a mobile app, a live video stream from BSVI wearable cameras, and digitalized maps of buildings’ evacuation schemes.

1. Introduction

The growing number of people who are BSVI (blind and severely visually impaired) with smartphones, which are multifunctional, multisensory GSM networking devices, has provided the impetus for the development of considerably cheaper electronic traveling assistive (ETA) devices that can employ hardware and software gadgets (defined by the 3GPP standards collaboration) integrated into smartphones. Smartphones are equipped with a CPU, an operating system, various sensors (GPS, accelerometer, gyroscope, magnetometer, pedometer, and compass) and can run mobile apps for data processing. Mobile computing platforms offer standard APIs for general-purpose computing, providing both application developers and users a level of flexibility that is very conducive to the development and distribution of novel solutions [1,2]. In addition, smartphones possess real-time GSM connections with the mobile phone network and the internet and facilitate continuous wireless data transfer to external servers and cloud platforms for web-services-based data processing. This considerably enlarges smartphones’ general usability and could also be employed for BSVI ETA solutions using social outsourcing, such as remote real-time visual assistance, route mapping, etc.

A few of the technologies involved are reviewed in this paper. First, it is important to note that BSVI individuals do not differ remarkably from the visually able population with regard to smartphone use. In fact, due to their condition, BSVI individuals are even more inclined to use handheld smartphones for social communication and mobility (making calls, chatting, using social media and many other apps, including GPS navigation, and so on). The screen reader interface integrated into modern mobile operating systems is accessible enough for people who are BSVI. The number of mobile apps tailored for blind users is also increasing, boosting the use of mobile devices and apps among people who are BSVI, and this usage is expected to continue to grow [3].

Relatively few studies have been conducted on mobile app use among people who are BSVI. In some preliminary studies, participants rated apps as useful (95.4%) and accessible (91.1%) tools for individuals with visual impairment. More than 90% of middle-aged adults strongly agreed that specifically tailored apps were practical. This shows that BSVI individuals frequently use apps that are specifically designed to help them accomplish daily tasks. Furthermore, it was found that the BSVI population is generally satisfied with mobile apps and is ready for improvements and new apps [3]. Thus, among the BSVI community, the multifunctional usage of smartphones for general and specialized tasks is widespread, and there is potential for them to be readily adapted for crowdsourced ETA solutions indoors.

Recent advances in computer vision, smartphone devices, and social networking opportunities have motivated the academic community and developers to find novel solutions that combine these evolving technologies to enhance the mobility and general quality of life of people who are BSVI. Unfortunately, this prospective research niche is not well covered in existing research papers. The only reviews we could identify were several that focused on existing mobile applications for the blind [1,2,3]. These findings suggest that electronic travel aids, navigation assistance modules, and text-to-speech applications, as well as virtual audio displays, which combine audio with haptic channels, are becoming integrated into standard mobile devices. Increasingly user-friendly interfaces and new modes of interaction have opened a variety of novel possibilities for the BSVI [4,5].

It is important to note that multifunctional mobile devices are increasingly being employed as interim embedded sensory processing units (exploiting IMU sensors, cameras, etc.) and BSVI user control and interface devices (sound and tactile feedback). In the embedded settings, they work in the context of larger ETA systems, where other often specialized and more powerful sensory controlling devices and local mini PC or remote processing units (such as web cloud servers) are used [1,6]. In this way, a mobile device becomes an embedded part of a larger networking system, where Web 2.0 services can be employed.

Admittedly, a wide range of general-purpose social networks, Web 2.0 media apps, and other innovative ICT (information and communication technology) tools have been developed to improve navigation and orientation. Although they are not designed to meet the specialized requirements of people who are BSVI, some mobile add-ons make them useful. For instance, text (and image) to voice, tactile feedback, and other enabling navigation software and hardware solutions are helpful in this respect. However, the complexity and abundance of general-purpose features pose a significant challenge for people who are BSVI. According to Raufi et al. [7], the increasing volume of visual information and other data from social networks confuses BSVI users. In this way, the expansion of general-purpose vision-based Web 2.0 social networks leaves behind specialized digital (audio and tactile) content accessibility for BSVI users [8]. Approaches that are more focused on the needs of BSVI users are required.

In general, BSVI users are actively involved in social networks [9,10,11]. More than 90% of people who are BSVI actively use one or more general-purpose social networking means, such as Facebook, Twitter, LinkedIn, Instagram, and Snapchat [9,10,12,13,14]. However, only a few social networking platforms have additional features oriented for BSVI users. For instance, BSVI surveys have revealed that social networking apps are among the five most popular mobile apps [3]. The majority of people who are BSVI who use social media use the Facebook social network [10,15,16]. The use of Twitter is also unusually high, and it is assumed that its simple, text-based interface is more accessible to screen readers [10].

Next to the general-purpose social networks, people who are BSVI frequently use apps that are specifically designed to allow them to accomplish daily activities. However, N. Griffin-Shirley et al. emphasize that persons with visual impairments would like to see improvements in existing apps as well as the development of new apps [3]. There are a number of examples of some of the most popular navigation apps used for path planning, navigation, and obstacle avoidance [1,2,6,9]. Unfortunately, navigation apps are mostly based on predeveloped navigational information and do not provide real-life support, user experience-centric approaches, or participatory Web 2.0 social networking. On the contrary, there are other real-life social apps, such as Be My Eyes, which give access to a network of sighted volunteers and company representatives who are ready to provide real-time visual assistance for orientation, navigation, and other tasks [17]. There are also many other R&D applications that can be applied for the outsourcing of navigational information [18,19,20,21,22].

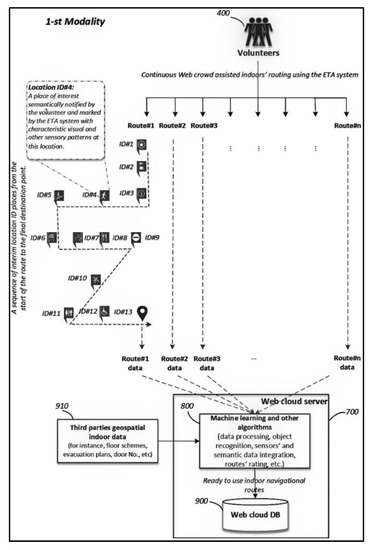

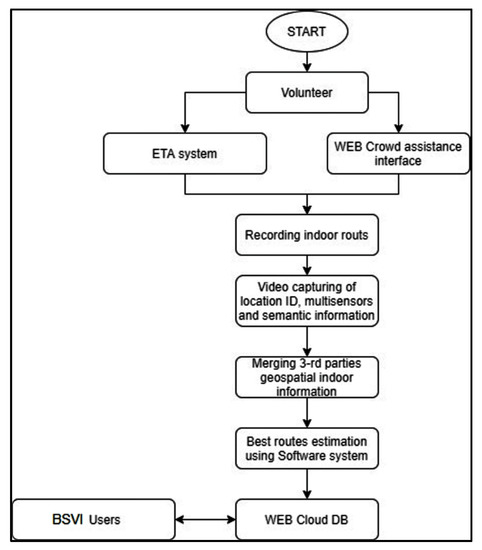

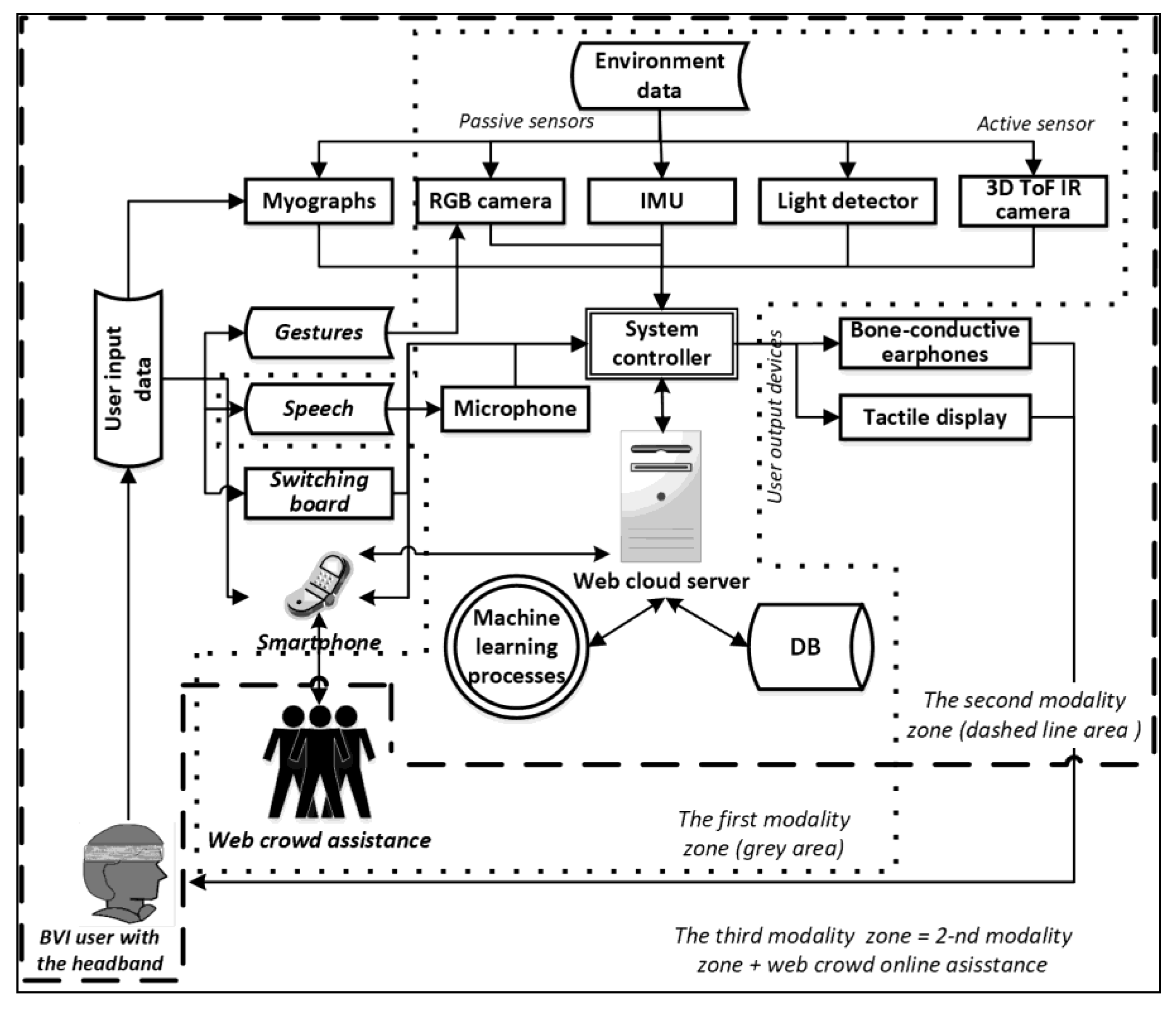

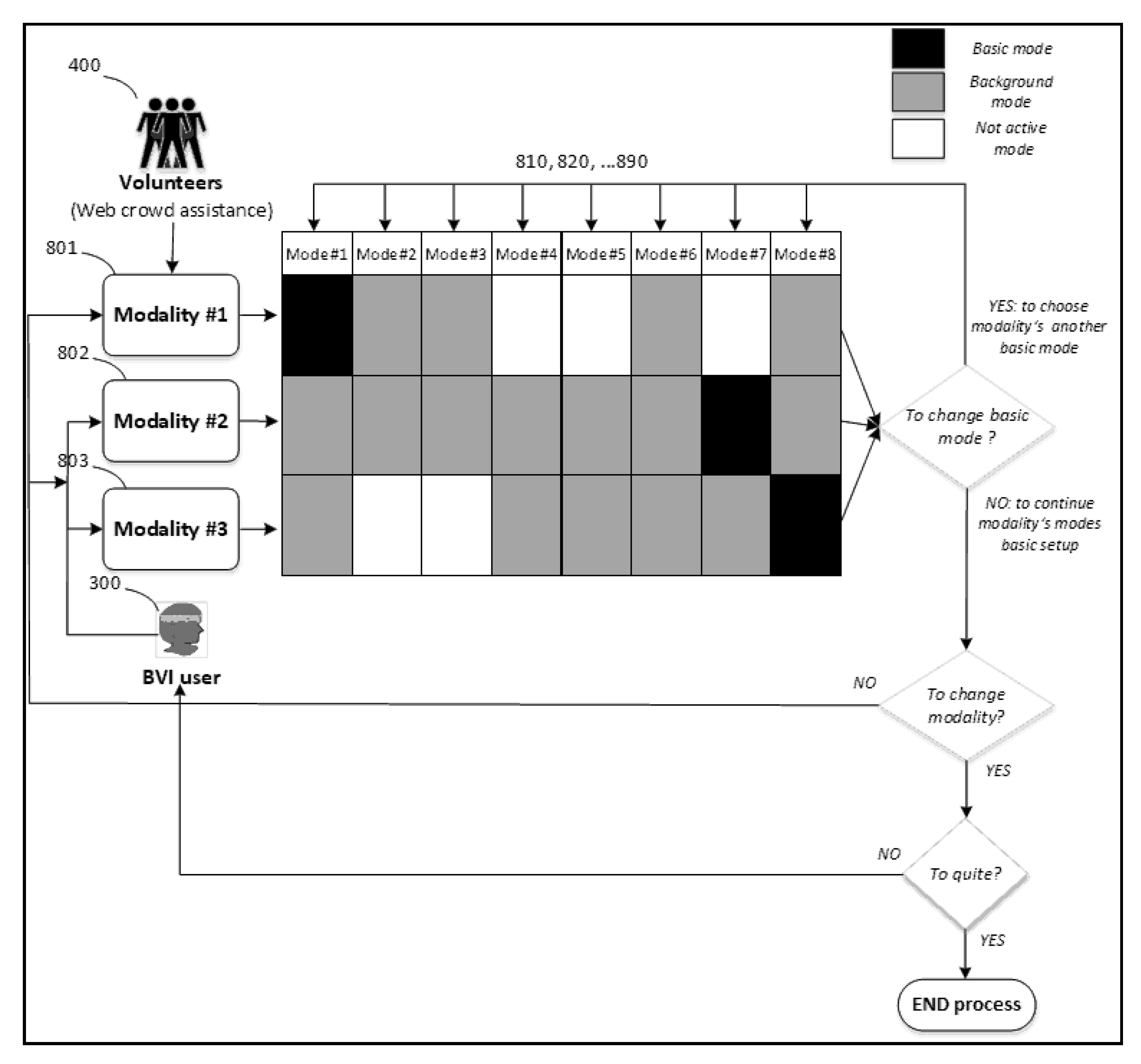

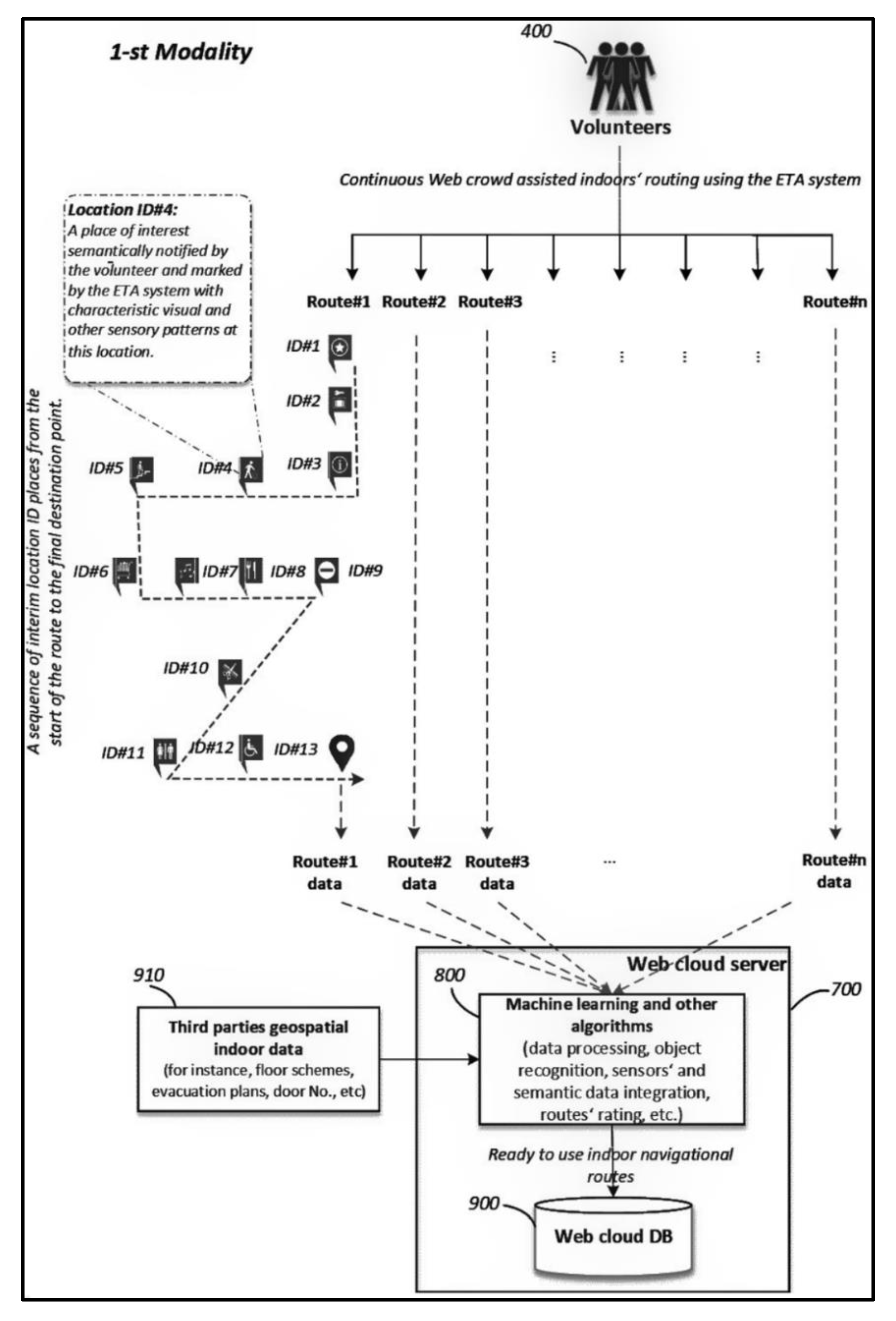

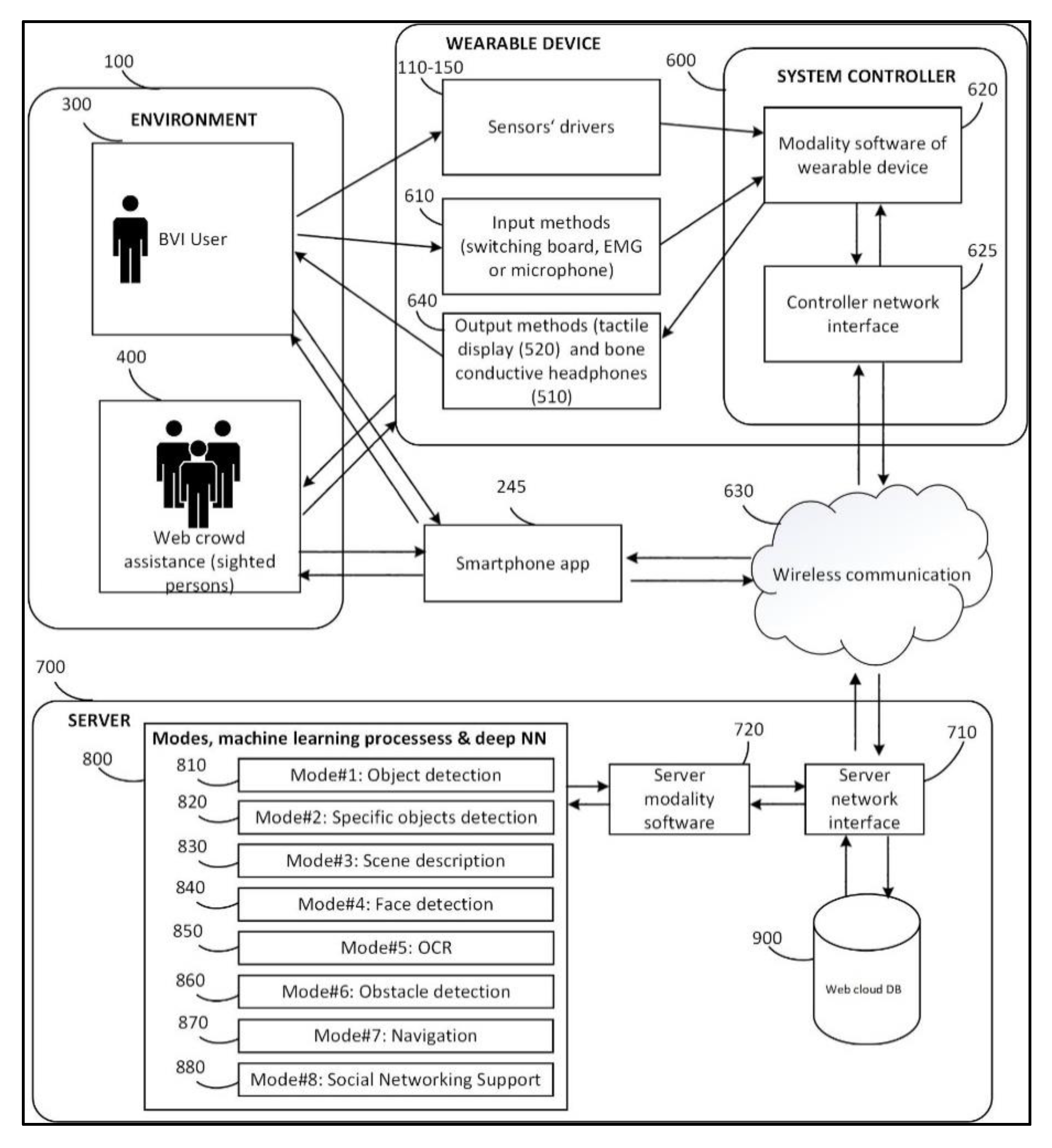

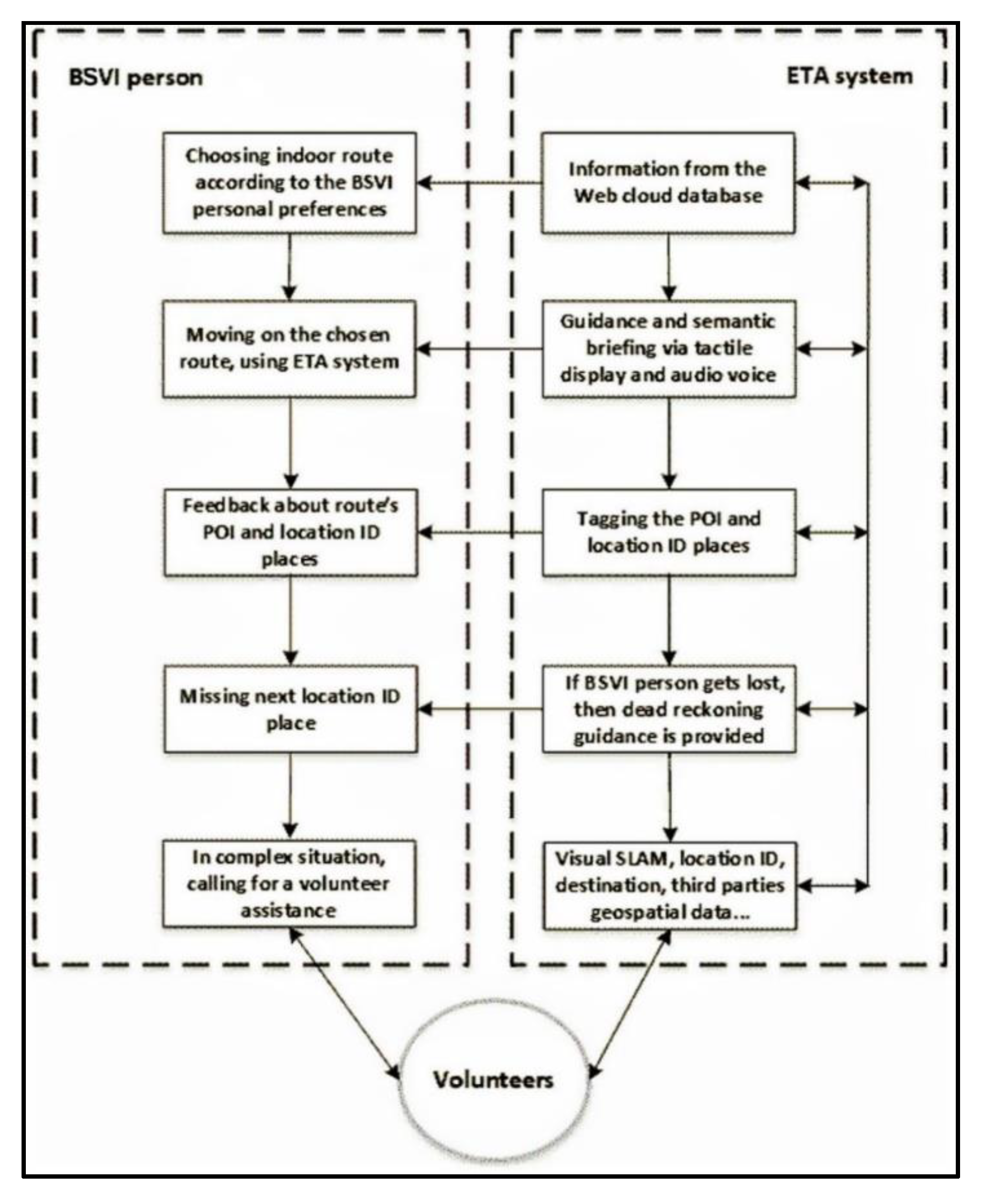

The above overview of the related literature reveals technological and socially guided indoor navigation advancements, implications, and drawbacks. In this regard, our approach proposes a novel guided indoor navigation solution, which is user-centric, crowdsourced, and does not require costly prior infrastructural indoor investments, such as the earlier-mentioned installation of Wi-Fi, RFID tags, beamers, etc. However, it does demand the involvement of some social networks, where volunteers walk in the chosen buildings, mark indoor routes, and carry out semantic tagging (using a mobile app with a voice recording or command line) of points of interest (POI) such as doors, exits, lifts, stairs, etc. For that matter, they use wearable ETA equipment with IMU sensors, stereo, and IR (depth) video cameras that record a visual stream and send it through Wi-Fi or GSM to the web cloud server where adapted SLAM algorithms produce clouds of characteristic points for each sequential video frame and use this information to form 3D routes. In our setting, we call this set of procedures the first operational modality.

In the proposed setting, the wearable ETA device undergoes some initial real-time data stream processing using Raspberry Pi4 and local mini PCUs such as NUC, but the main computational vision-based algorithms do the rest of the work offline in the web cloud server (video stream data from the wearable ETA device have to be transferred in advance). The server side analyzes video streams, recognizes objects, classifies them, measures distance and direction, and relates semantic POI tags with route coordinates.

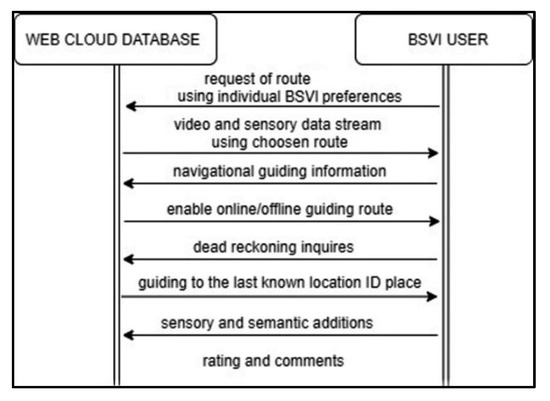

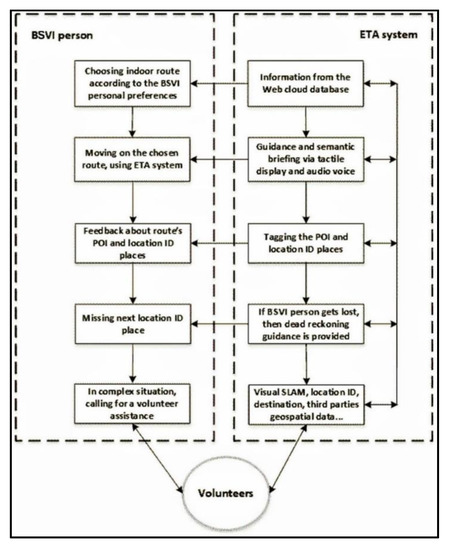

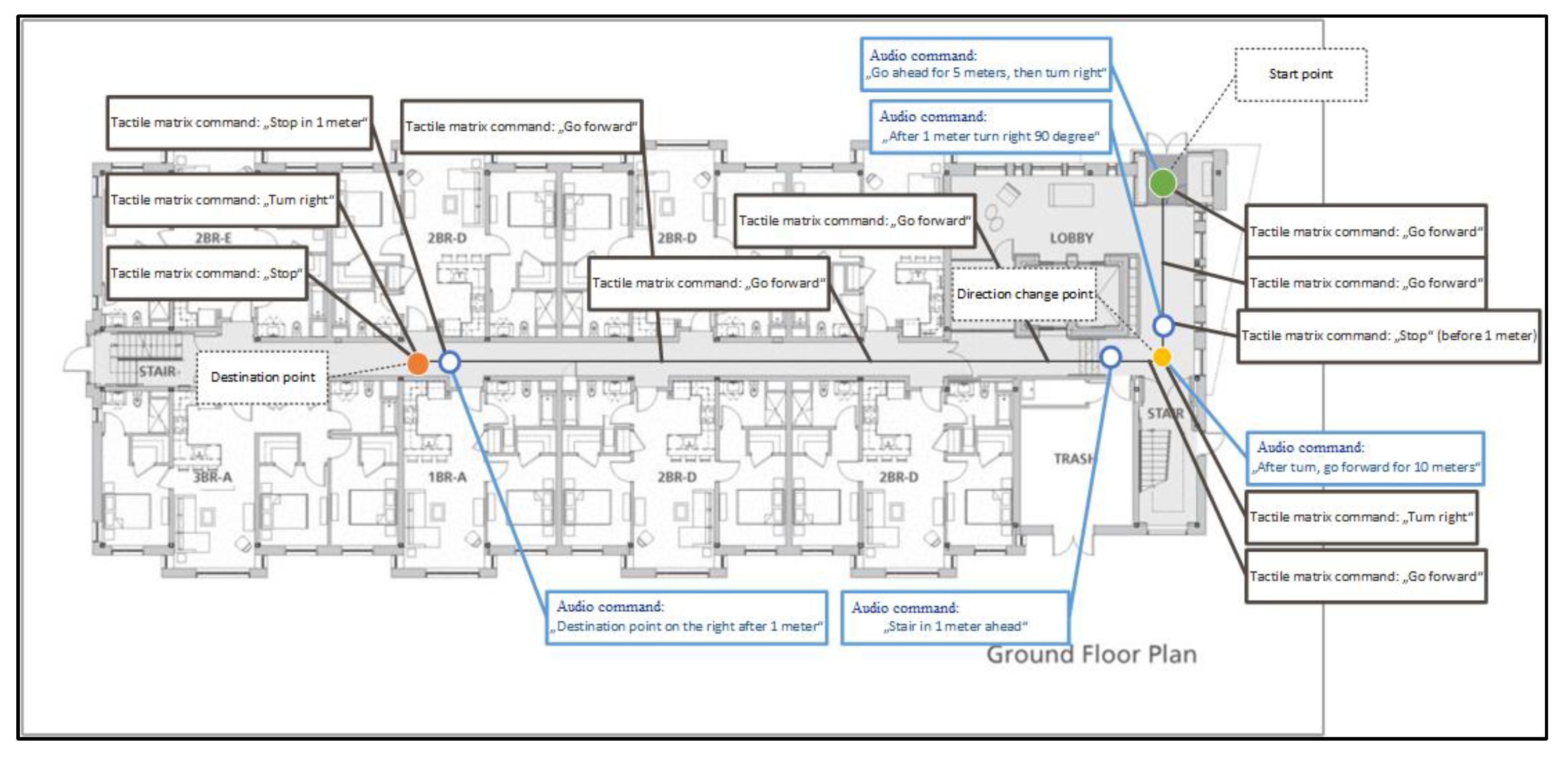

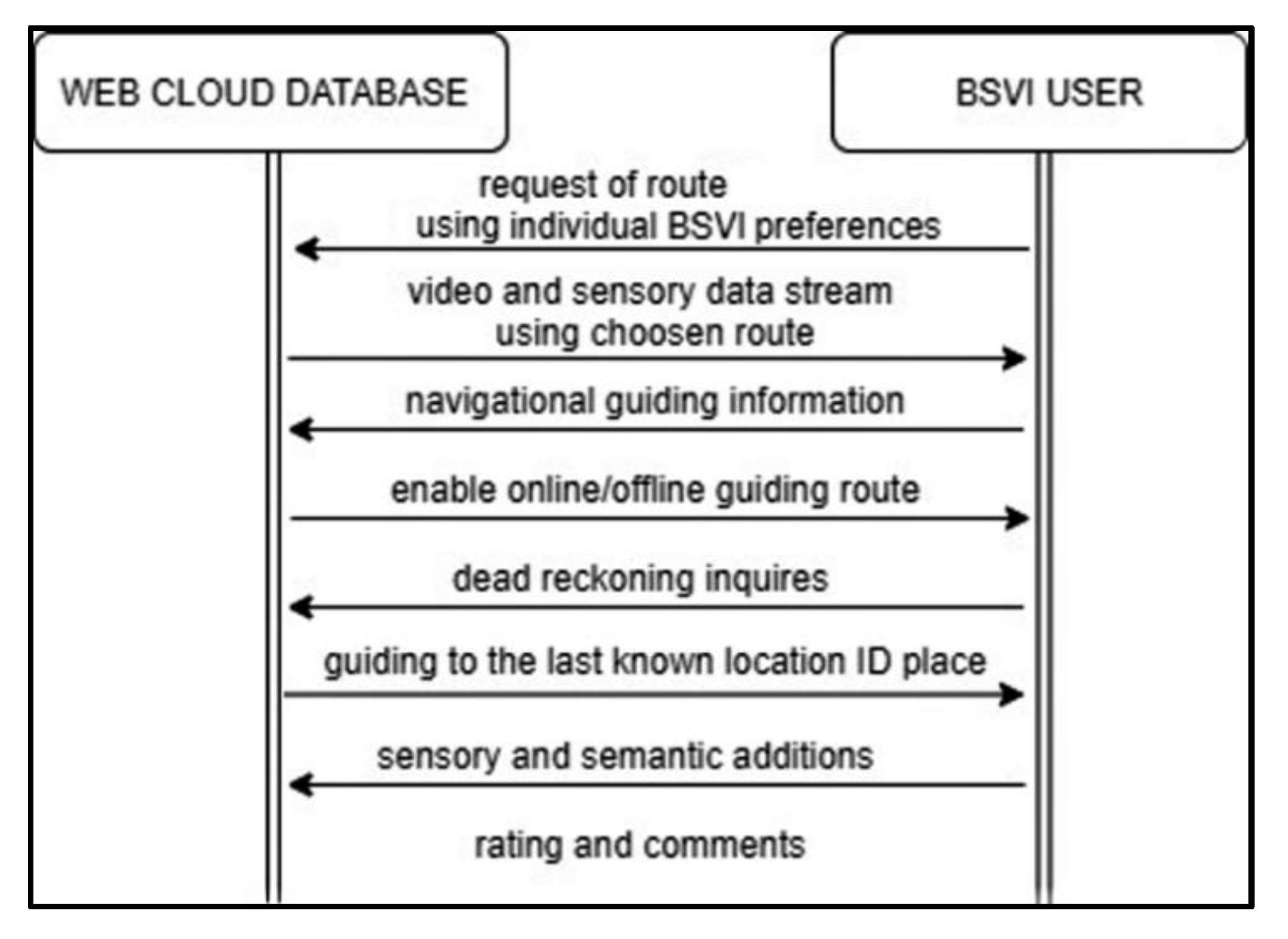

In the second operational modality, the database of prearranged routes for people who are BSVI is maintained in the web cloud server and is available online for their use. People who are BSVI use the same wearable ETA equipment with a local mini PCU, such as the NUC. They can choose a building and its indoor routes from the online web cloud database using the mobile app. The wearable ETA equipment uses computational vision-based algorithms such as SLAM to recognize clouds of points on the BSVI route and guides the BSVI user through bone-conductive headphones and tactile signals. The latter are displayed using a unique headband with a tactile interface display. In this way, people who are BSVI can arrive at their desired indoor destinations through vision-based guided navigation.

In the proposed approach, the third operational modality deals with extraordinary situations such as dead reckoning and unrecognized paths or objects. In such cases, BSVI users can use the mobile app to call volunteers who are familiar with that building or who were involved with the production of its routing data in the first modality. The web cloud server provides information about the current BSVI position on the chosen route (or last known location) and on that building’s digitalized evacuation scheme. A volunteer can help to recognize obstacles, read texts, and find a route in dead reckoning situations.

Compared with other earlier-reviewed approaches, this novel indoor navigation setting for people who are BSVI requires relatively more input from (i) social networking using the help of volunteers, (ii) AI-based computational intelligence algorithms, (iii) server and client-side processing power units, and (iv) a Wi-Fi or GSM Internet connection.

However, its main advantages are (1) the indoor route database is available 24/7 (a kind of Visiopedia), (2) it has an offline working mode, (3) there is no need for indoor infrastructural investments, (4) it has an autonomous and flexible, wearable ETA device with a tactile display and bone-conductive headphones, (5) real-time online volunteer help is available in complex situations using a mobile app, (6) it employs a user-centric approach, and (7) routes can be rated after each guided navigation to allow consequent improvement of the route database via BSVI feedback.

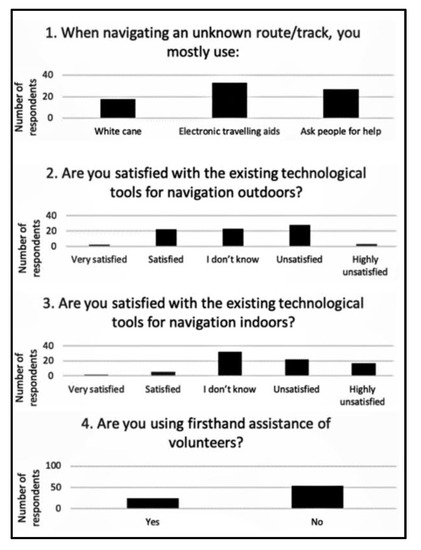

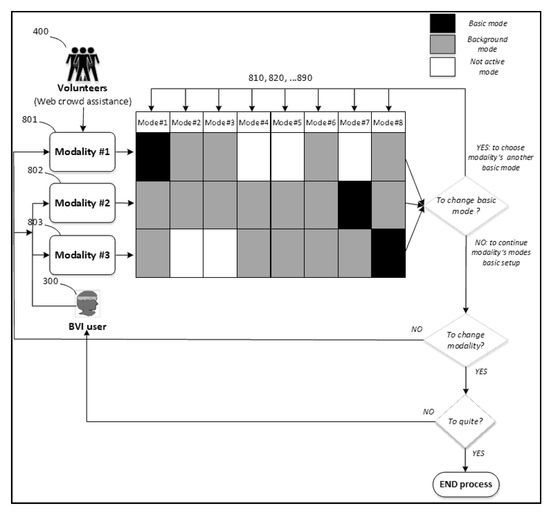

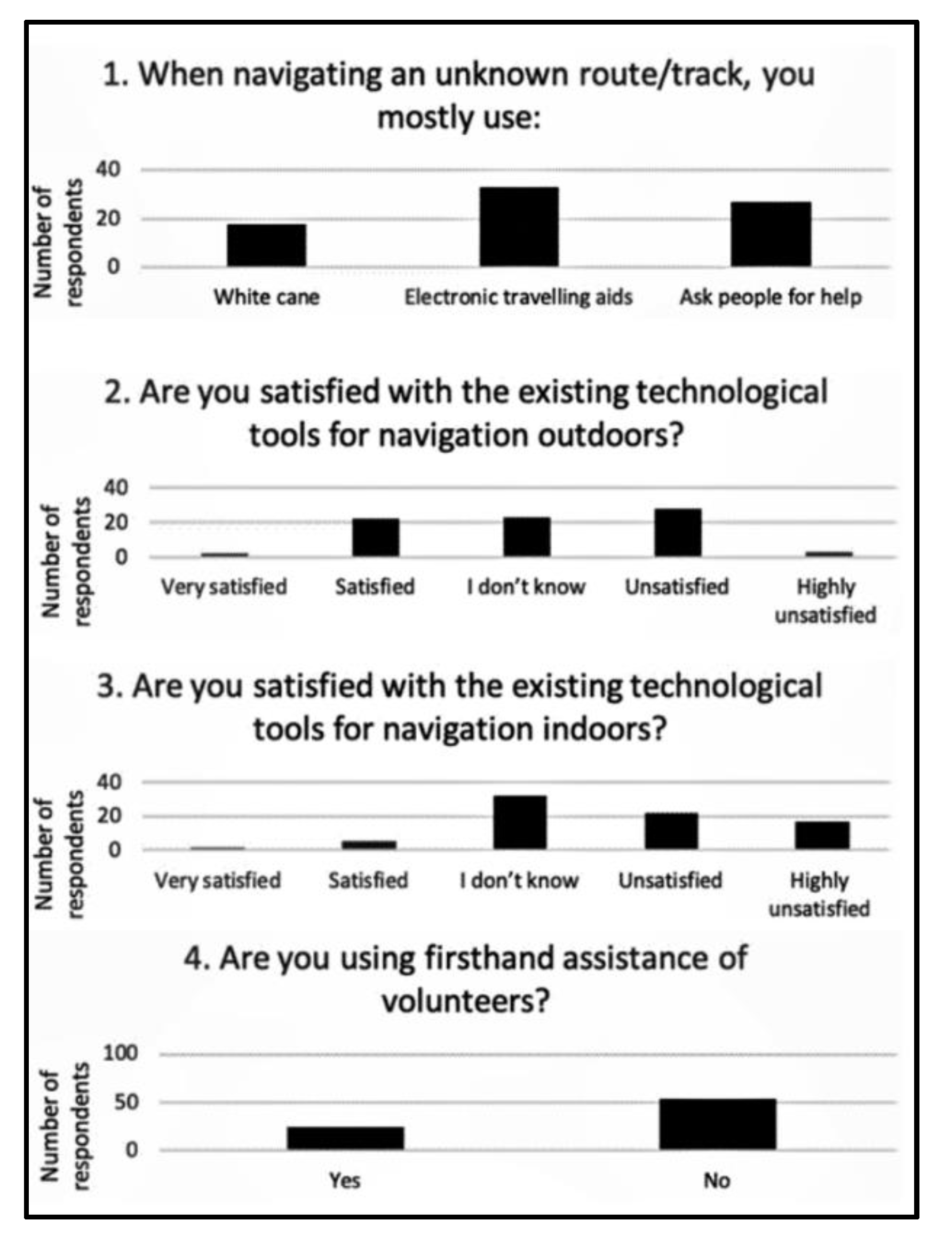

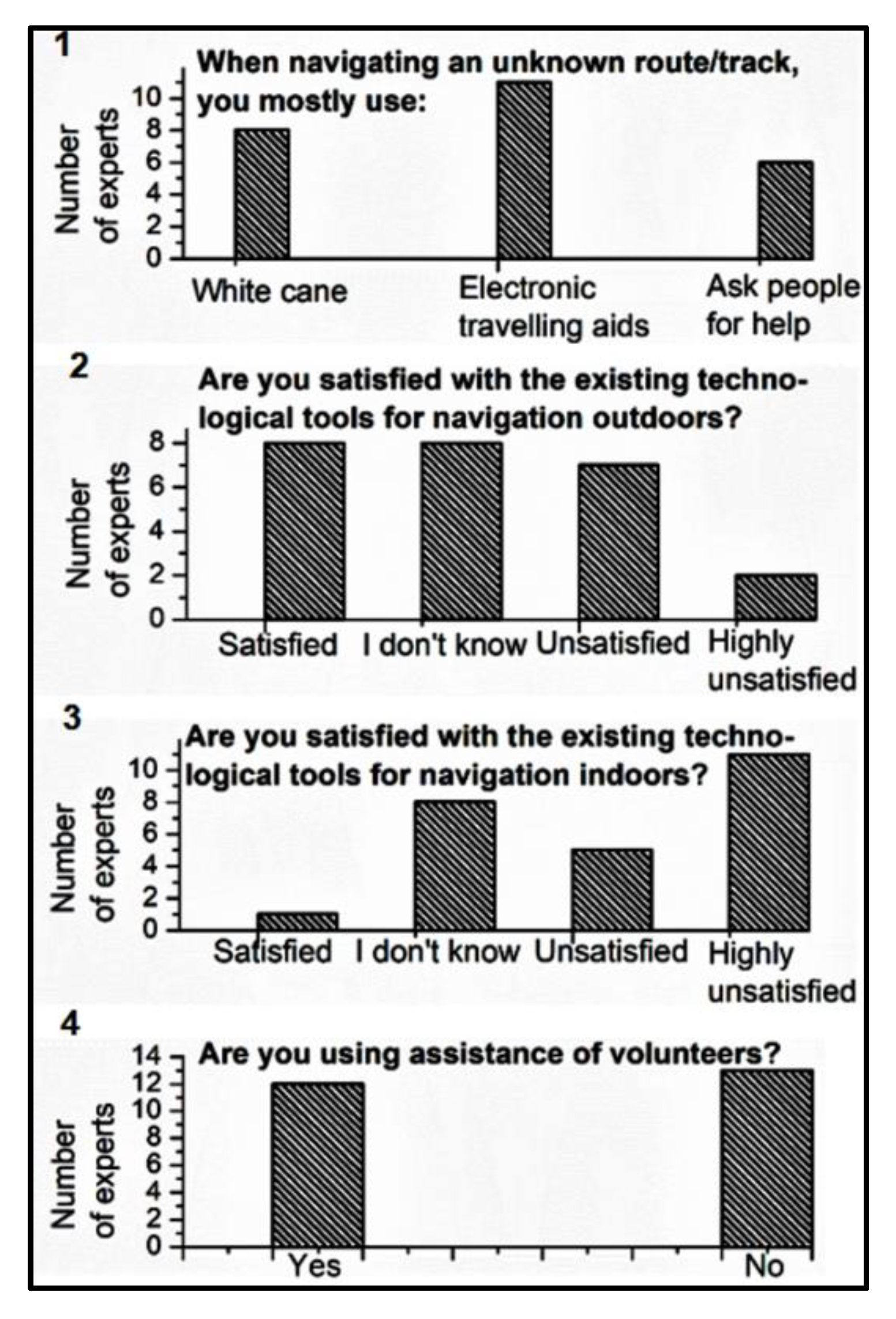

The remainder of the paper is organized as follows: Section 2 briefly describes the results of a survey conducted on people who are BSVI concerning their navigation and social networking needs and expectations; Section 3 lays out some insights on ETA enhancements of navigation and orientation using the advantages of participatory Web 2.0; Section 4 brings forth the wearable prototype R&D challenges; Section 5 provides web-based crowd-assisted social networking implications for navigation indoors; Section 6 presents the conclusions and discussion.

4. Bringing Forth Current R&D Challenges

The presented prototype is still in the development stage, and we can only provide initial evaluations of stand-alone modes and associated technologies. There is still some R&D left to go for field testing outside the lab with people who are BSVI.

4.1. Vision-Based Modes

Here is a brief summary of technologies and their evaluations used for the vision-based modes (see Section 3):

[Mode#1] Our object detection subsystem is based on the Faster RCNN algorithm (https://arxiv.org/pdf/1506.01497.pdf, accessed on 23 October 2021). Object detectors are usually evaluated in terms of mean average precision (mAP). Faster RCNN with a Resnet-101 backbone achieves 48.4% mAP on the MSCOCO validation set [28] and is able to process VGA-resolution images in near-real-time (~10 Hz on an Nvidia 2080 Ti GPU). Although these are not SOTA characteristics at the moment, we selected Faster RCNN due to its satisfactory empirical performance when tested on our prototype and its efficient, publicly available implementations.

[Mode#2] The specific (or few-shot) object detection subsystem is based on Neurotechnology’s SentiBotics Navigation SDK 2.0 software (Neurotechnology, Vilnius, Lithuania). In our setup, we use the BRIEF descriptor, which, according to the tested data set, provides an accuracy of 84.93% [29]. After tuning the thresholds, the SentiBotics algorithm allowed us to practically eliminate false positive recognitions while providing fast matching and rapid learning of user-specified objects.

[Mode#3] Scene captioning subsystem relies on the [28] algorithm. In terms of the BLEU-4 score, its accuracy is 27.7–32.1 when tested on the MSCOCO data set.

[Mode#4] The face recognition subsystem is based on Neurotechnology’s Verilook 12.2 SDK (Neurotechnology, Vilnius, Lithuania). It was evaluated using three publicly available data sets (MEDS-II, LFW, NIR-VIS 2.0). According to the obtained estimates, the corresponding equal error rate (EER) values are:

MEDS-II: 0.0455% 0.4149%

LFW: 0.0080% 0.0147%

NIR-VIS 2.0: 0.0367% 0.0662%

These values show that the algorithm is capable of high-accuracy face recognition.
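For readers unfamiliar with the metric, the equal error rate is the operating point at which the false acceptance rate (impostor pairs accepted) equals the false rejection rate (genuine pairs rejected). The following is a minimal sketch of how an EER can be estimated from two lists of match scores. The function name and the toy scores are assumptions made for illustration only; they are not the Verilook evaluation procedure or its data.

```python
from typing import Sequence, Tuple


def equal_error_rate(genuine: Sequence[float],
                     impostor: Sequence[float]) -> Tuple[float, float]:
    """Return (eer, threshold) at the threshold where FAR and FRR are closest.

    Hypothetical helper: higher scores are assumed to mean a better match.
    """
    best = None
    for threshold in sorted(set(genuine) | set(impostor)):
        # False rejection rate: genuine pairs scoring below the threshold.
        frr = sum(s < threshold for s in genuine) / len(genuine)
        # False acceptance rate: impostor pairs scoring at or above it.
        far = sum(s >= threshold for s in impostor) / len(impostor)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, threshold)
    return best[1], best[2]


# Toy scores (invented for this example, not measured data).
genuine_scores = [0.9, 0.8, 0.75, 0.6, 0.4]
impostor_scores = [0.5, 0.3, 0.2, 0.1, 0.05]
eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
print(f"EER = {eer:.1%} at threshold {threshold}")
```

On real evaluation sets the score lists are far larger, so the gap between the two error rates at the chosen threshold becomes much smaller than in this toy case.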

[Mode#5] OCR subsystem relies on Google Cloud Platform. According to [30] experiments, the average accuracy on the tested data set of 1227 images from 15 categories (after preprocessing) was 81%.

[Mode#6] For obstacle recognition, we employed the Point Cloud Library (https://pointclouds.org/, accessed on 23 October 2021), specifically its cluster extraction method (https://pcl.readthedocs.io/en/latest/cluster_extraction.html, accessed on 23 October 2021), applied to 3D depth camera images. We used Faster RCNN resnet101/resnet 50 or ssd mobilenet neural network architectures. It is worth noting that the light backbone is much faster.
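The idea behind Euclidean cluster extraction can be illustrated without the library: points that lie within a distance tolerance of each other are grown into one cluster, and clusters that are too small are discarded as noise. The sketch below is a brute-force Python illustration of that idea under assumed parameters (`tolerance`, `min_size`) and invented sample points. It is not the Point Cloud Library API, which is written in C++ and uses a k-d tree for the neighbor search.

```python
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point],
                       tolerance: float,
                       min_size: int = 1) -> List[List[int]]:
    """Group point indices whose chained neighbor distance is <= tolerance."""
    unvisited = set(range(len(points)))
    clusters: List[List[int]] = []
    while unvisited:
        seed = min(unvisited)
        unvisited.remove(seed)
        queue = [seed]
        cluster = []
        while queue:
            i = queue.pop()
            cluster.append(i)
            # Brute-force neighbor search; a k-d tree would replace this step.
            near = [j for j in unvisited
                    if dist(points[i], points[j]) <= tolerance]
            for j in near:
                unvisited.remove(j)
            queue.extend(near)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


# Invented depth points (metres): two compact obstacles and one stray point.
cloud = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.2, 0.0, 1.0),
         (2.0, 0.0, 1.0), (2.1, 0.0, 1.0), (5.0, 5.0, 5.0)]
obstacles = euclidean_clusters(cloud, tolerance=0.15, min_size=2)
print(obstacles)
```

The stray point forms a cluster of size one and is dropped by the `min_size` filter, which is how isolated depth noise would be kept from being reported as an obstacle in this sketch.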

4.2. Data Structure

At the current R&D stage, the buildings’ 2D floor plans and SLAM trajectories are stored in a local server for online working mode and in the mini PC (such as Intel Nuc) for offline working mode. The points of interest and information about them are stored there as well. Web connection is needed for synchronization. However, at the later stages of the prototype R&D, we plan to store information in the web cloud server, using an enterprise platform for internal and external users with an application, core, and service layers. The structure of information storage will employ relational, file, and object databases.

The main projected data flows and storage are depicted in Figure 6. However, in the current R&D stage, we are testing stand-alone modes and modalities using a simple Wi-Fi connection with a portable mini PC (Intel Nuc) and a server.

Figure 6.

Main communication, data flows and storage.

For the functional prototype development, we used software packages with their databases or libraries such as:

- the facial recognition mode’s database (Verilook, https://www.neurotechnology.com/verilook.html, accessed on 20 October 2021) is stored in the server;

- the SLAM database of points of interest, location, routes, and maps is stored in the server;

- an accurate open-source Visual SLAM library (https://arxiv.org/abs/2007.11898; https://github.com/UZ-SLAMLab/ORB_SLAM3, accessed on 20 October 2021);

- scene description: an image caption model based on Google Tensorflow “im2txt” models;

- the specific object detection database is stored in the server;

- for classification and recognition of object classes (such as doors, lifts, stairs, etc.), we used Faster RCNN resnet101, resnet 50, and ssd mobilenet with training and validation sets based on our own and open-source databases;

- for obstacle recognition, we used the Point Cloud Library (https://pointclouds.org/, https://pcl.readthedocs.io/en/latest/cluster_extraction.html, accessed on 20 October 2021);

- for OCR, we employed the Google OCR API (https://cloud.google.com/vision/docs/ocr, accessed on 20 October 2021);

- for social networking, we are employing audio-video streaming to a web browser (Android apps integrated with the web cloud DB).

The communication protocol is handled by ROS topics (see “ROS nodes, topics, and messages” in ROS Robotics By Example, Second Edition, packtpub.com, accessed on 10 October 2021, and “Practical Example” in the ROS Tutorials 0.5.2 documentation, clearpathrobotics.com, accessed on 10 October 2021), which organize the data flow and ROS service interaction.

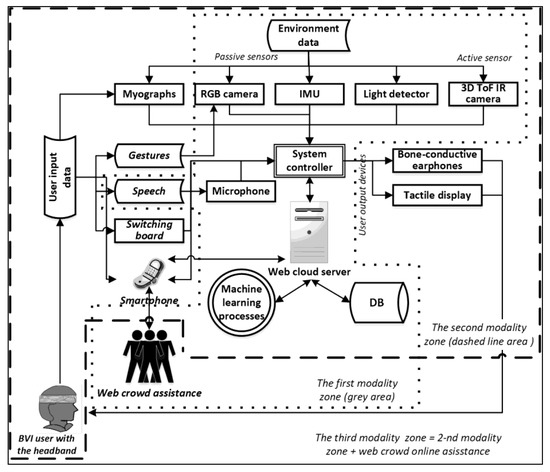

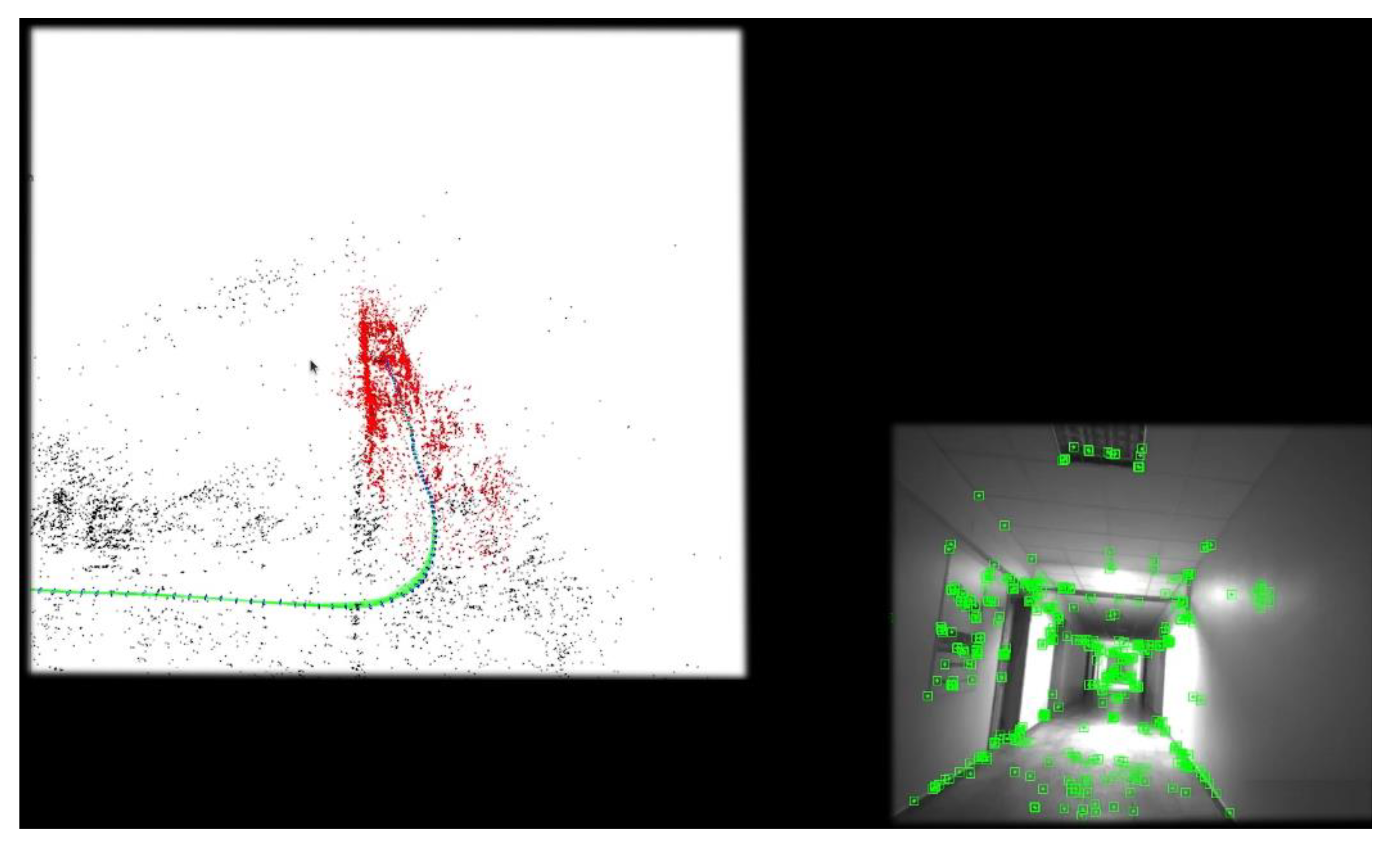

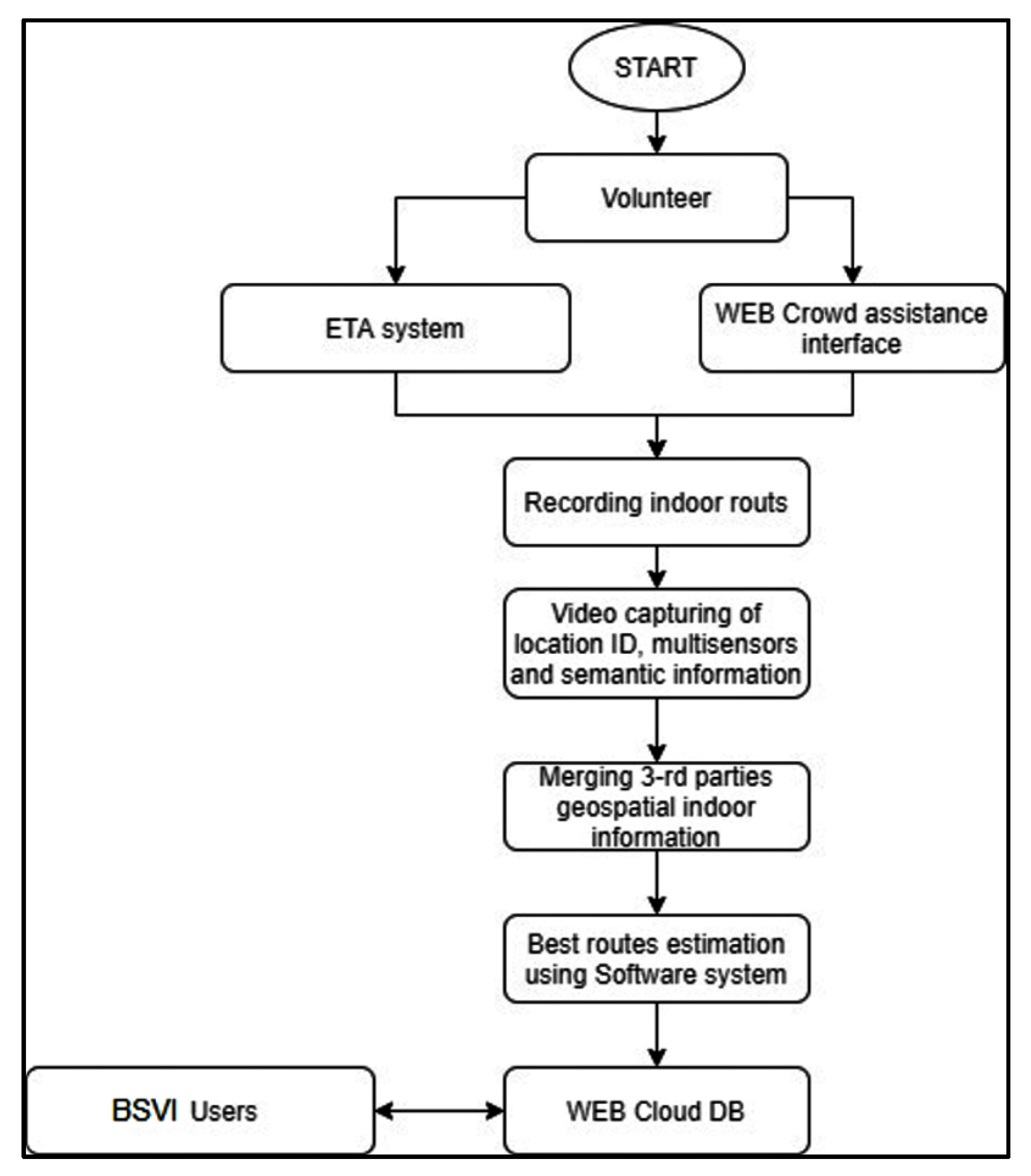

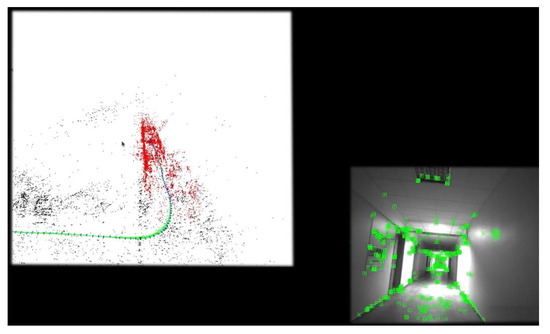

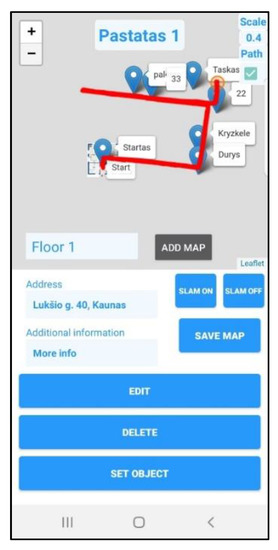

4.3. Crowdsourcing for Route Mapping

We use the state-of-the-art Visual SLAM algorithm ORB-SLAM3 [31] to create the trajectories that are later used to guide visually impaired users. We use the camera poses returned by the SLAM algorithm as the points along the trajectory (see Figure 7). Loop closing implemented within the SLAM algorithm ensures that trajectory drifts are corrected once the volunteers revisit places where they have been before. The recorded trajectories are overlaid on the 2D building floor plans, and navigational instructions are built (see Figure 8).

Figure 7.

Point clouds (on the right) and the route through such clouds (on the left) generated with the SLAM algorithm, as captured from the screen.

Figure 8.

Navigation instructions (voice and tactile output) and current location projected on the 2D evacuation scheme, using stored route data.
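The paper does not specify how navigational instructions are derived from the stored route, so the following is only a sketch of one plausible approach: compare the heading of consecutive trajectory segments and emit a turn cue when the change exceeds a threshold. The function name, the 30 degree threshold, and the sample poses are all assumptions. Poses are treated as 2D floor-plan coordinates with the x axis pointing right and the y axis pointing up.

```python
from math import atan2, degrees
from typing import List, Sequence, Tuple

Pose2D = Tuple[float, float]


def turn_instructions(poses: Sequence[Pose2D],
                      threshold_deg: float = 30.0) -> List[str]:
    """Return one cue per interior pose based on the change of heading."""
    cues = []
    for a, b, c in zip(poses, poses[1:], poses[2:]):
        heading_in = atan2(b[1] - a[1], b[0] - a[0])
        heading_out = atan2(c[1] - b[1], c[0] - b[0])
        # Wrap the heading change into the range [-180, 180) degrees.
        delta = (degrees(heading_out - heading_in) + 180.0) % 360.0 - 180.0
        if delta > threshold_deg:
            cues.append("turn left")    # counterclockwise change of heading
        elif delta < -threshold_deg:
            cues.append("turn right")   # clockwise change of heading
        else:
            cues.append("go straight")
    return cues


# Invented route: along a corridor, then two left turns.
route_poses = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0), (1.0, 1.0)]
cues = turn_instructions(route_poses)
print(cues)
```

In a guided-navigation setting such cues would be rendered as voice or tactile output; the mapping from a cue to a concrete output signal is outside the scope of this sketch.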

Seeing the recorded trajectories on the 2D plan, the volunteers can verify that all of the places in the building have been visited. When a volunteer returns to a place that has been visited before, redundant trajectories that lie close to each other are generated. Such revisits are very important for loop closing and are part of a reliable workflow. To handle the redundancy, the generated trajectories are converted to a pose graph, and we use post-processing to merge graph nodes and edges that are spatially close to each other. The processed graph is then used for path planning. At the moment, we do not have any mechanism to remove faulty parts of the trajectory graph. If the volunteer sees that the created graph has some faulty trajectories, they have to rerecord the floor map to obtain the correct pose graph. In the future, we plan to add a GUI tool that would allow the volunteer to modify the trajectories after they have been recorded.
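The merging and planning steps can be sketched as follows. The merging rule used here, snapping poses to a regular grid so that all poses in one cell become one node, is a simple stand-in chosen for illustration; the paper does not state which merging criterion the post-processing uses. The function names, the 0.5 m cell size, and the sample trajectories are likewise assumptions. Path planning is shown with Dijkstra's algorithm over the merged graph.

```python
import heapq
from collections import defaultdict
from math import dist, floor


def merge_trajectories(trajectories, cell=0.5):
    """Merge poses that fall into the same grid cell into one graph node."""
    members = defaultdict(list)   # cell key -> poses merged into that node
    edges = defaultdict(set)      # cell key -> neighboring cell keys
    for trajectory in trajectories:
        previous = None
        for x, y in trajectory:
            key = (floor(x / cell), floor(y / cell))
            members[key].append((x, y))
            if previous is not None and previous != key:
                edges[previous].add(key)
                edges[key].add(previous)
            previous = key
    # Each node is placed at the mean position of the poses merged into it.
    nodes = {key: (sum(p[0] for p in poses) / len(poses),
                   sum(p[1] for p in poses) / len(poses))
             for key, poses in members.items()}
    return nodes, edges


def shortest_path(nodes, edges, start, goal):
    """Dijkstra search; edge cost is the distance between node centers."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in settled:
            continue
        settled.add(node)
        for neighbor in edges[node]:
            if neighbor not in settled:
                step = dist(nodes[node], nodes[neighbor])
                heapq.heappush(queue, (cost + step, neighbor, path + [neighbor]))
    return None  # goal not reachable from start


# Invented recordings: a corridor walked twice plus a side branch.
outbound = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
return_pass = [(2.1, 0.1), (1.1, 0.1), (0.1, 0.1)]
side_branch = [(1.05, 0.05), (1.0, 1.0)]
nodes, edges = merge_trajectories([outbound, return_pass, side_branch])
route = shortest_path(nodes, edges, (0, 0), (2, 2))
print(len(nodes), route)
```

The eight recorded poses collapse into four nodes because the return pass lands in the same cells as the outbound pass, which is the effect the redundancy-removal step is meant to achieve.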

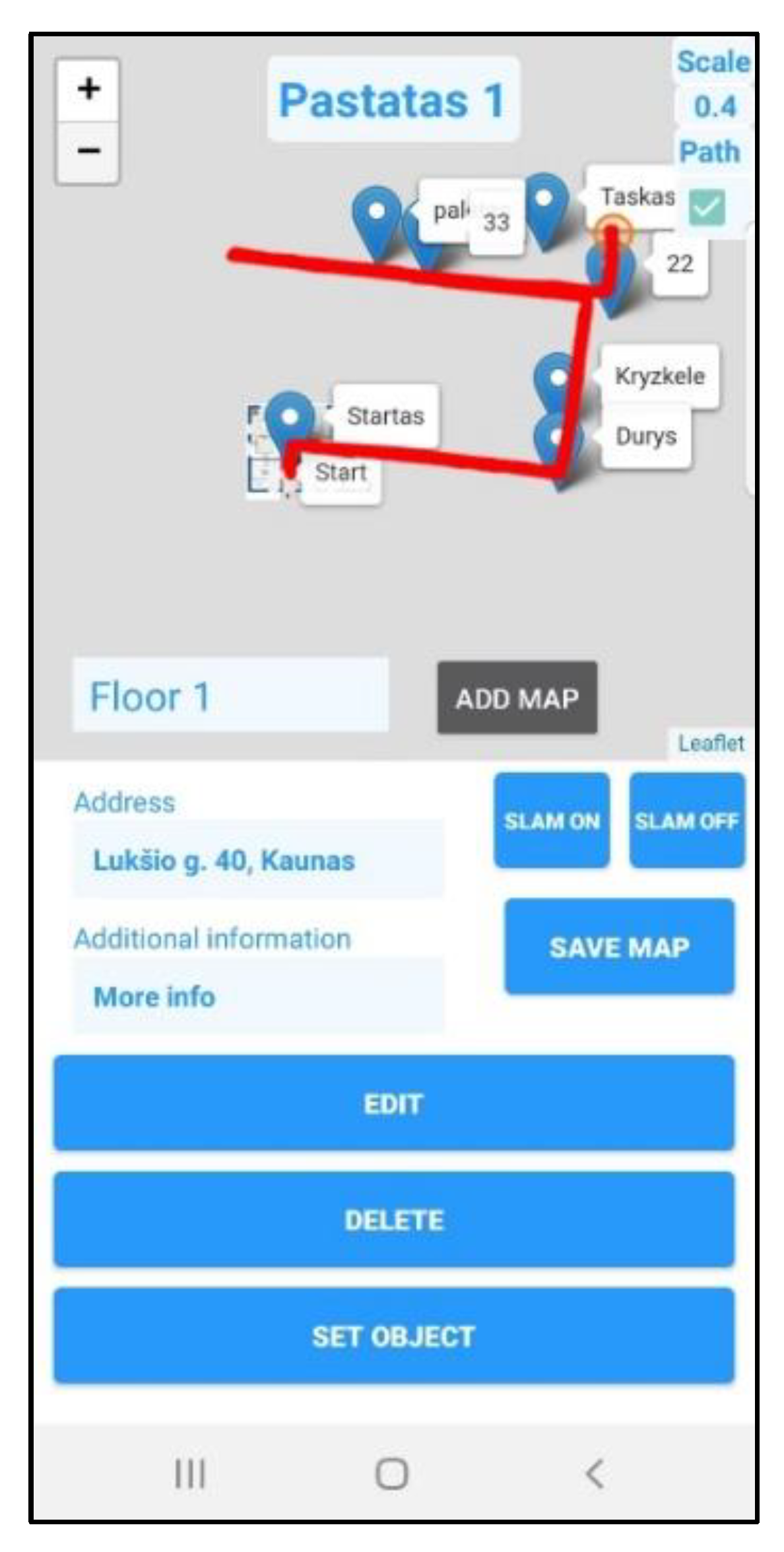

4.4. Verification and Mobile App

At the moment, the collected data are verified only by the volunteers. They have to visually inspect the collected data to make sure that they are correct (see Figure 9). We created a specialized Android app for the volunteers, which is used during the data collection stage. The app displays a 2D plan of each building floor, and the trajectories collected by the Visual SLAM algorithm are overlaid on this map. It is the volunteer’s responsibility to verify that the collected trajectories cover the whole building floor. Each volunteer would also be trained before performing the building mapping to make sure that they are able to verify the data. At the moment, we do not use any automatic trajectory validation algorithms. In the future, this could be done using covered area validation algorithms.
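As an illustration of what a covered area validation check might look like, the sketch below rasterizes the floor into grid cells and reports the fraction of walkable cells that lie near a recorded trajectory point. This is a hypothetical design, not an algorithm from the prototype: the function name, the cell size, the `reach` parameter, and the corridor data are all assumptions.

```python
from math import floor
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]


def coverage_ratio(walkable: Set[Cell],
                   trajectory: Iterable[Tuple[float, float]],
                   cell: float = 1.0,
                   reach: int = 1) -> float:
    """Fraction of walkable cells within `reach` cells of a trajectory point."""
    visited = set()
    for x, y in trajectory:
        cx, cy = floor(x / cell), floor(y / cell)
        # Mark the cell under the pose and its neighbors as seen.
        for dx in range(-reach, reach + 1):
            for dy in range(-reach, reach + 1):
                visited.add((cx + dx, cy + dy))
    return len(walkable & visited) / len(walkable)


# Invented floor: a straight corridor of ten 1 m cells.
corridor = {(x, 0) for x in range(10)}
recorded = [(0.5, 0.5), (3.5, 0.5), (6.5, 0.5)]
ratio = coverage_ratio(corridor, recorded)
print(f"{ratio:.0%} of the corridor is covered")
```

A check of this kind could flag floors whose ratio falls below a chosen limit so that the volunteer is prompted to record the missing area.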

Figure 9.

Mobile app view used by volunteers to record/delete routes and mark points of interest (Modality#1).

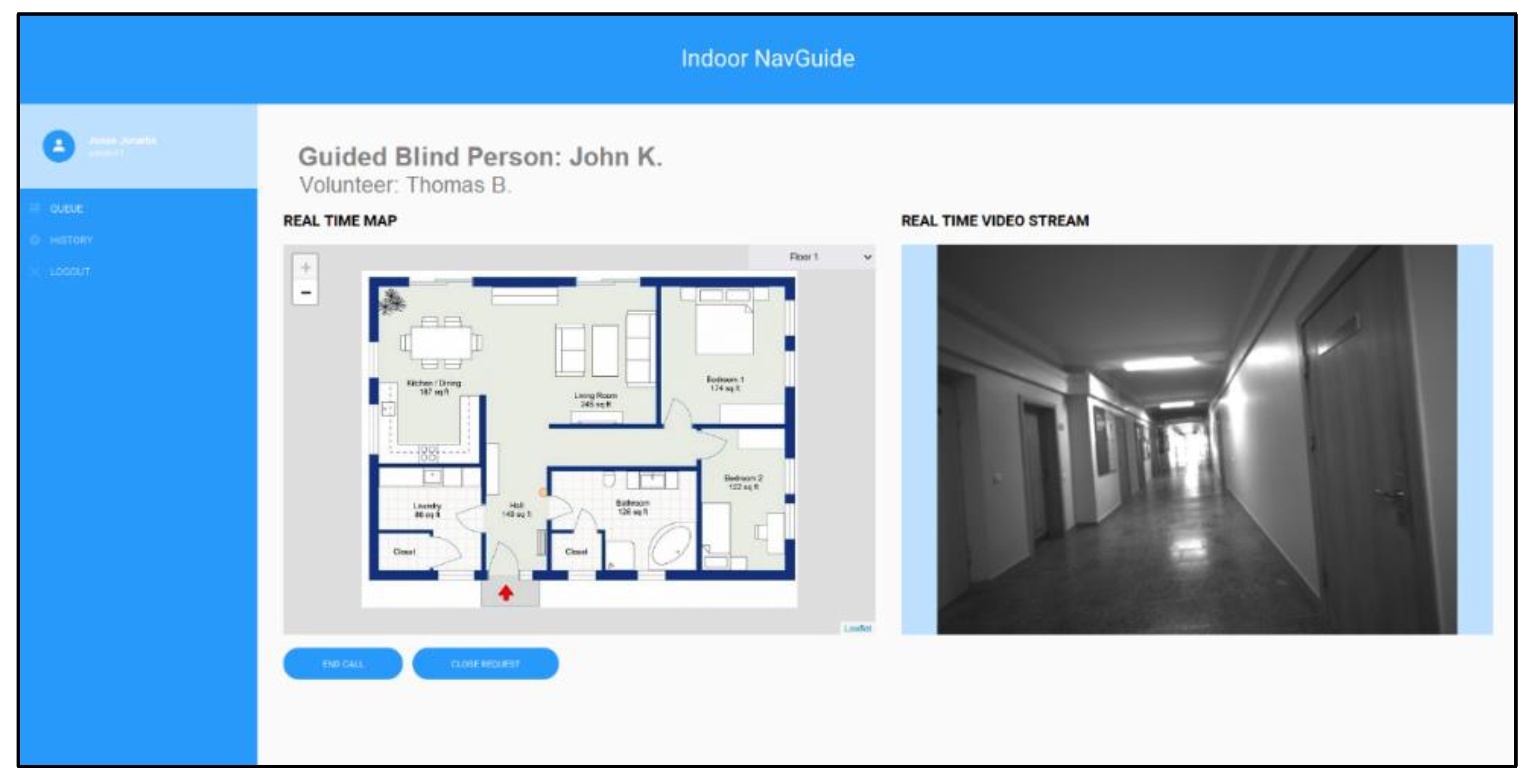

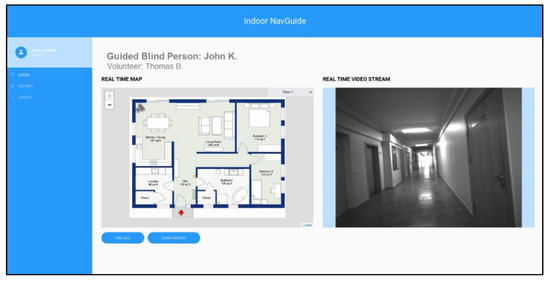

In the third operational modality, a BSVI person can use the mobile app to call volunteers who are familiar with that building or who were involved with the production of its routing data in the first modality. The web cloud server provides information about the current BSVI position on the chosen route (or last known location) and on that building’s digitalized evacuation scheme, see Figure 10. A volunteer can help to recognize obstacles, read texts, and find a route in dead reckoning situations.

Figure 10.

Volunteers’ mobile app view (Modality#3: while assisting BSVI person in real-time).

At the current stage, we are working to achieve integrity of the overall system (modalities and modes) and to improve stability (failures occur in 36% of cases) and the accuracy of the SLAM algorithm (scaling issues sometimes occur) while building routes. We also have to resolve other technical issues such as more reliable detection of potential obstacles, a stable web connection for data transfer, an intuitive and user-friendly tactile-voice interface, and minimizing time lags for vision-based real-time processing (currently about a 2 s time lag for vision-based recognition of objects).

Thus, our prototype is still in the development stage. Besides, as we stated in the title of the paper, this manuscript is mainly dedicated to shedding light on the innovative web-crowd outsourcing method for indoor routes’ mapping and assistance.

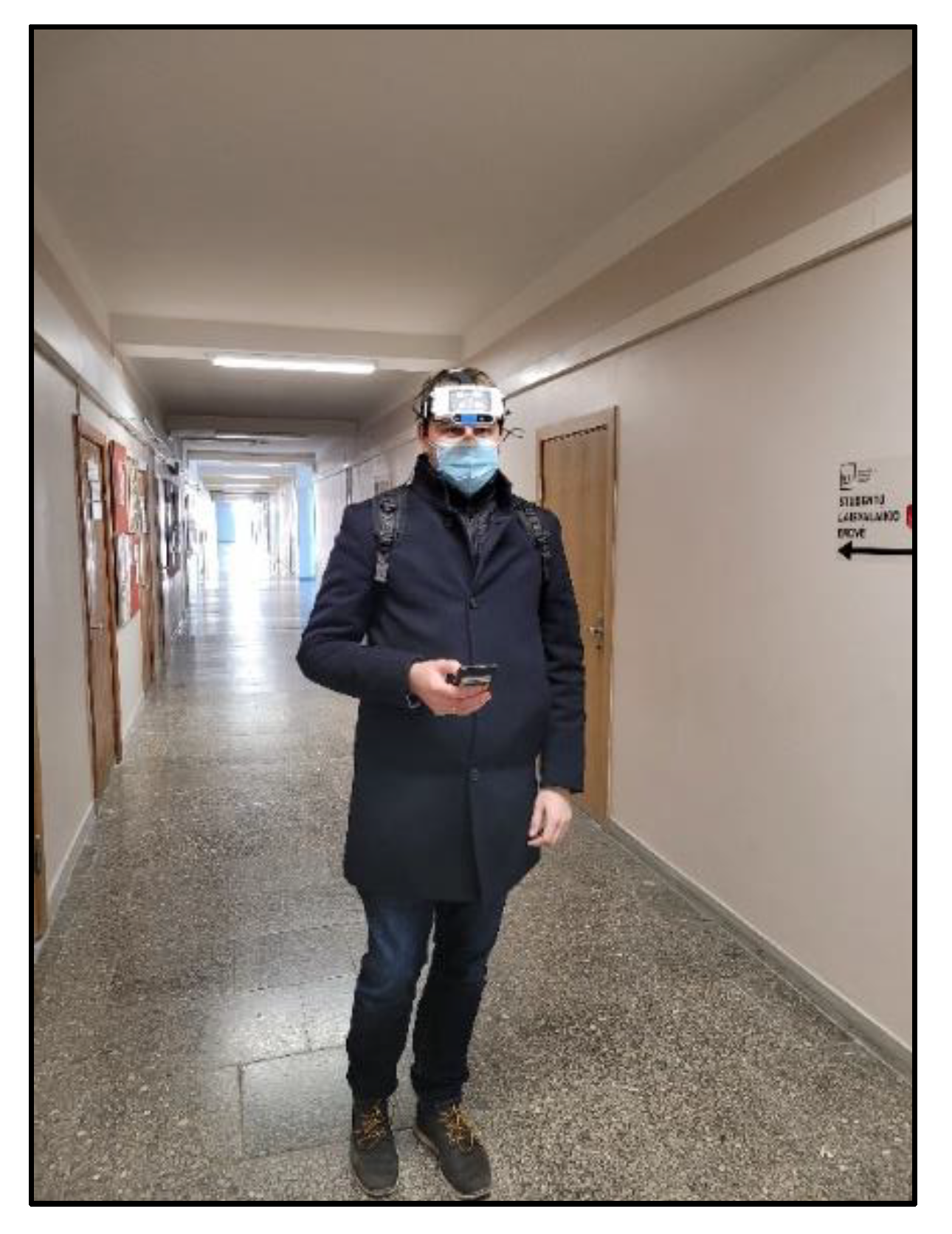

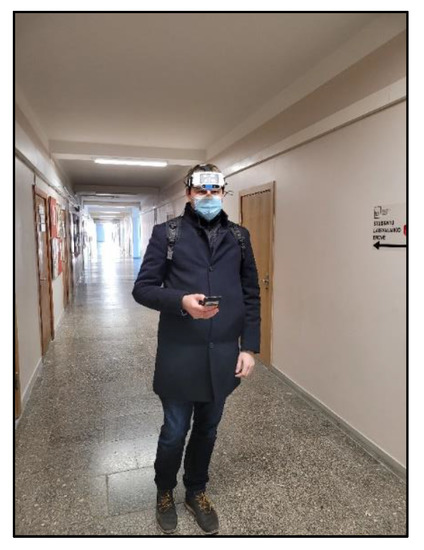

The literature review and patent DB analysis (see Section 1) indicate that the proposed web-crowd-assisted indoor navigation setup is unique and hardly comparable to other indoor navigation approaches that use infrastructural installations such as Wi-Fi routers, RFID tags, beamers, etc. Some live photos of the prototype development are presented in Figure 11 and Figure 12.

Figure 11.

A live prototype (Indoor NavGuide) development photo (headband with tactile display, controller, RealSense stereo camera, power bank, and mini PC).

Figure 12.

Modality#2: lab testing of guided navigation indoors, using ETA prototype.

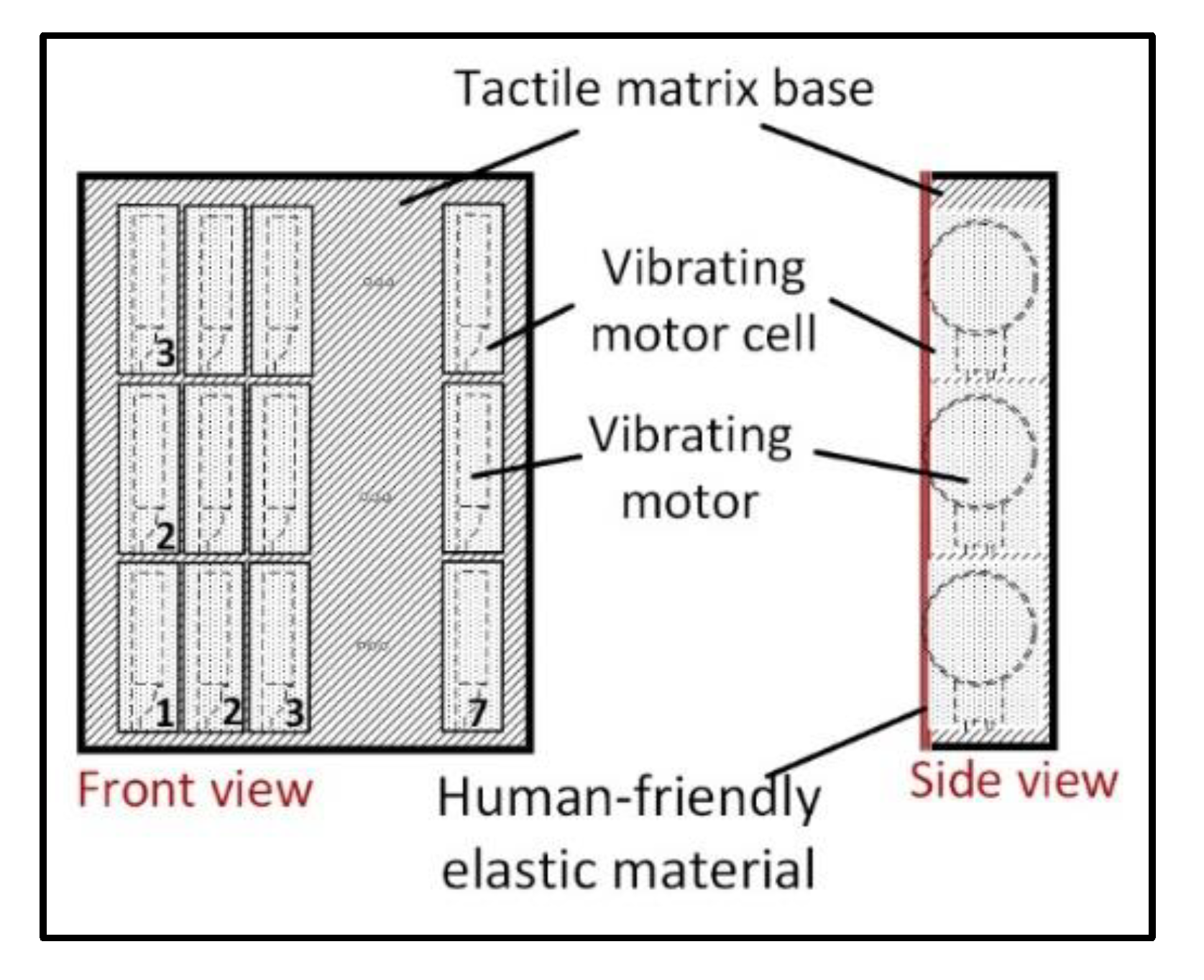

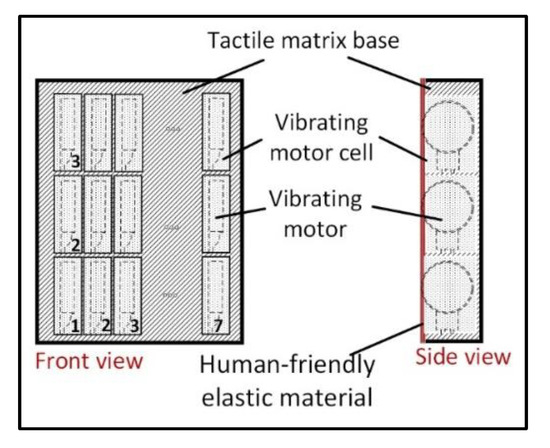

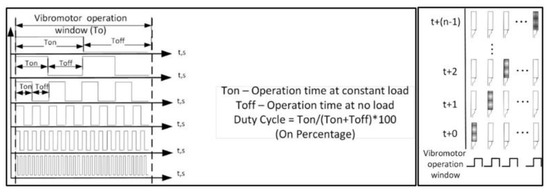

4.5. Tactile Display

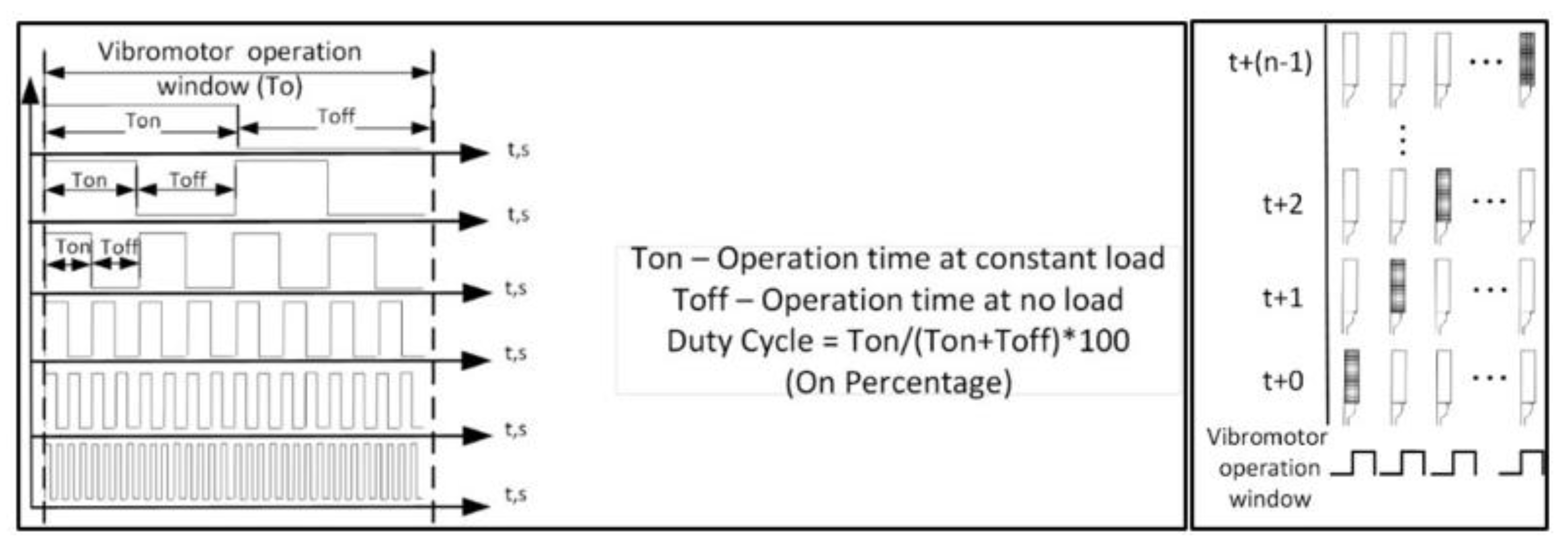

The tactile display (Figure 13) consists of at least 27 vibrating motors (a matrix of 3 rows and 9 columns). The base of the tactile display is 3D printed out of silicone (or elastomer). Each vibrating motor is immersed in a silicone cell whose stiffness differs from that of the base. In this way, the amplitude of the vibration is maximized, and the vibration energy is not transmitted to the other cells. The system controller outputs a pulse-width modulated signal (Figure 14) to drive the vibration motors. The vibrating motor in the cell moves orthogonally to the forehead skin surface. The matrix is covered with a human-friendly elastic material.

Figure 13.

Tactile display.

Figure 14.

The system controller output to drive vibration motors.
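To make the role of the 3 by 9 motor matrix and the pulse-width modulated drive signal more concrete, the following sketch maps a target bearing to a column of the matrix and a target distance to a PWM duty cycle. The encoding itself is an assumption made for illustration: the field of view, the maximum range, the choice of the middle row, and the function name are hypothetical and are not taken from the prototype's controller.

```python
from typing import List

ROWS, COLS = 3, 9  # matrix size stated for the tactile display


def tactile_frame(bearing_deg: float,
                  distance_m: float,
                  fov_deg: float = 90.0,
                  max_range_m: float = 4.0,
                  row: int = 1) -> List[List[float]]:
    """Return a ROWS x COLS grid of PWM duty cycles in the range 0.0 to 1.0.

    Negative bearings are to the user's left, positive to the right.
    """
    half = fov_deg / 2.0
    clamped = max(-half, min(half, bearing_deg))
    # Leftmost bearing maps to column 0, rightmost to column COLS - 1.
    col = min(COLS - 1, int((clamped + half) / fov_deg * COLS))
    # Closer targets vibrate more strongly (higher duty cycle).
    closeness = 1.0 - min(distance_m, max_range_m) / max_range_m
    frame = [[0.0] * COLS for _ in range(ROWS)]
    frame[row][col] = round(closeness, 2)
    return frame


# A target straight ahead at 1 m drives the center motor of the middle row.
frame = tactile_frame(bearing_deg=0.0, distance_m=1.0)
for line in frame:
    print(line)
```

Each nonzero entry would be converted by the controller into a pulse train whose on-time fraction equals the duty cycle; motors with a zero entry stay idle.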

6. Conclusions and Discussion

This paper is dedicated to the R&D implications of the ETA wearable system (prototype) for navigation indoors for people who are BSVI. It emphasizes the novel outsourcing method of mapping and assistance for indoor routes.

The logic and structure of the presented research follows certain steps, which can be narrated in the following way:

- After the overview of related research papers and patents, we found an evident lack of operationalization of the Web 2.0 social networking advantages for guided navigation indoors (see Introduction). That is, our investigation revealed that the indoor routing process could be crowdsourced to volunteers, avoiding costly infrastructural investment in RFID tags, Wi-Fi, beamers, etc.

- To define the real social networking abilities and expectations concerning indoor navigation ETA assistance of people who are BSVI, we conducted a semi-structured survey of people who are BSVI and interviews of experts in the field (see Section 2). It clearly shaped and targeted our R&D efforts towards the development of a wearable ETA prototype with some key crowdsourced functions such as an indoor routing process and assistance from volunteers in complex situations.

- Following the insights mentioned above and the needs of real BSVI users, we are in the process of constructing a unique computational, vision-based, wearable ETA prototype with crowdsourced functions. The presented wearable ETA prototype can be used in three consistent operational modalities: (i) sighted volunteers mark indoor routes using our wearable computational vision-based ETA prototype and help to maintain the web cloud DB of indoor routes; (ii) people who are BSVI can employ our wearable ETA prototype for guided navigation indoors using a chosen route from the route DB; and (iii) people who are BSVI can receive real-time web-crowd assistance (online volunteers’ help via the mobile app) in complex situations (such as being lost and encountering unexpected obstacles and situations) using the wearable ETA prototype (see Section 3, Section 4 and Section 5).

The applied research structure is user-centric and oriented toward actual BSVI needs. In this way, we avoided academic biases towards specific technology-centric approaches. Thus, the presented ETA indoor guided navigational system uses crowdsourcing when volunteers (sighted users) go through buildings and gather step-by-step visual and other sensory information on indoor routes that is processed using machine learning algorithms in the web cloud server and stored for BSVI usage in the web cloud DB.

The proposed novel adaptation of crowdsourcing helps indoor routing services to be obtained from a large group of sighted participants (neighbors, friends, parents, etc.), who voluntarily map indoor routes and place them in the online web server database. Here, indoor routes are processed using computational intelligence methods, enriched with semantic data, rated, and later exploited by people who are BSVI for indoor navigational guidance.

We believe that this integration of crowdsourcing methods using social networking will drive a new R&D frontier that can make more efficient ETA indoor navigational applications for people who are BSVI. Social networking and outsourcing can facilitate the sharing and exchange of experiences with points of interest (POI) (such as stairs, doors, WC, entrances/exits), routes of interest (ROI), and areas of interest (AOI) indoors. This form of social networking could initiate the formation of a self-organized community of people who are BSVI and volunteers.

In this way, participatory Web 2.0 social networking systems can integrate intelligent algorithms with the best experiences of people who are BSVI and sighted people while traveling, navigating, and orientating in indoor environments. This will help to build and continuously update real-time metrics of reachable POI, ROI, and AOI. This will allow routes to be averaged, erroneous routes eliminated, and optimal user-experience-based solutions found using various optimization approaches.

It is important to note that even indoor situations that change daily, such as renovations, furniture movements, closed doors, etc., can be recorded and updated continuously by sighted volunteers using social networking and the web cloud DB. In unrecognized environments, a trained ETA guiding system can either guide the user around the obstacle or suggest another route to continue on next.

Thus, the presented innovative web-crowd-assisted method enables BSVI users to obtain the latest information about the suitability of indoor routes. After experiencing a route, BSVI users (and, correspondingly, the ETA system) can rate the route’s validity; the individual ratings are averaged for each route and are also ascribed to the volunteer who recorded it. This allows other BSVI users to choose the best-rated routes and obtain offline guidance from the best-rated volunteers (sighted users).
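The rating scheme described above can be sketched as a two-level average: ratings are first averaged per route, and the route averages are then averaged per volunteer who recorded them. The data layout, the identifiers, and the 1 to 5 scale in the example are assumptions for illustration; the paper does not define the rating scale or the aggregation formula.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def aggregate_ratings(ratings: Iterable[Tuple[str, float]],
                      route_owner: Dict[str, str]):
    """Return (average per route, average of route averages per volunteer)."""
    by_route = defaultdict(list)
    for route_id, score in ratings:
        by_route[route_id].append(score)
    route_avg = {route: mean(scores) for route, scores in by_route.items()}

    by_volunteer = defaultdict(list)
    for route, average in route_avg.items():
        by_volunteer[route_owner[route]].append(average)
    volunteer_avg = {v: mean(avgs) for v, avgs in by_volunteer.items()}
    return route_avg, volunteer_avg


# Invented ratings on an assumed 1 to 5 scale.
ratings = [("lobby-to-lift", 5), ("lobby-to-lift", 4),
           ("lift-to-room-12", 2), ("lobby-to-wc", 4)]
route_owner = {"lobby-to-lift": "volunteer_a",
               "lift-to-room-12": "volunteer_a",
               "lobby-to-wc": "volunteer_b"}
route_avg, volunteer_avg = aggregate_ratings(ratings, route_owner)
best_route = max(route_avg, key=route_avg.get)
print(route_avg, volunteer_avg, best_route)
```

Averaging route averages, rather than pooling all raw ratings per volunteer, keeps a single heavily used route from dominating a volunteer's score; either choice would be compatible with the description in the text.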

In short, from the point of view of the end-user, the presented wearable prototype is distinguished from other related wearable indoor navigational ETA approaches in the sense that (a) it has an intuitive hands-free control interface that uses EMG (or mobile app and panel) and forehead tactile display; (b) it has a comfortable, user-orientated headband design; (c) it provides machine-learning-based real-time guided navigation and recognizes objects, scenes, and faces as well as providing OCR (optical character recognition); and (d) it provides web-crowd assistance while mapping indoor navigational routes and solving complex situations on the way using volunteers’ help.

It is important to note that each modality is composed of the same set of eight modes (object detector, specific object detector, scene description, face recognition, OCR, obstacle recognition, navigation, social networking) that are active or work in the background. The inclusion of all of these modes in three functional modalities is a unique feature of the proposed guided ETA system.

Thus, the specific advantages of the proposed experience-centric indoor guided navigation in the sense of user interface and social networking are as follows:

- People who are BSVI acquire more confidence through human-based wayfinding experiences (through interactions with trusted volunteers or other people who are BSVI with similar needs and preferences) than by using computer-generated models and algorithms.

- Floor plans or evacuation schemes provided by third parties or volunteers are digitalized, scaled, and matched with the computational vision (SLAM)-generated routes. This helps people who are BSVI when they become involved in complex situations (such as being lost, encountering unexpected obstacles, etc.) and need real-time assistance from volunteers.

- Indoor situations that change daily can be recorded and uploaded continuously by volunteers through social networking in the web cloud DB.

- Wayfinding experiences can be effectively rated and shared using social networking.
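The floor-plan matching mentioned in the list above can be illustrated with a small sketch. The paper does not specify how scaling and matching are performed; the version below assumes that a few landmarks (for example, doors) have been identified in both the SLAM route and the digitalized plan, and fits a 2D similarity transform (scale, rotation, translation) by least squares. The function names `fit_similarity` and `to_plan` and all coordinates are hypothetical.

```python
import cmath
import math


def fit_similarity(slam_pts, plan_pts):
    """Least-squares 2D similarity transform from SLAM to floor-plan coordinates.

    Points are (x, y) tuples; pairs at the same index are assumed to be the
    same physical landmark. Treating each point as a complex number z, the
    model is w = a * z + b, where abs(a) is the scale, the phase of a is the
    rotation, and b is the translation.
    """
    if len(slam_pts) != len(plan_pts) or len(slam_pts) < 2:
        raise ValueError("need at least two matched landmark pairs")
    z = [complex(x, y) for x, y in slam_pts]
    w = [complex(x, y) for x, y in plan_pts]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Closed-form solution of the linear least-squares problem in a and b.
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("landmarks must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def to_plan(route, a, b):
    """Map a SLAM route (list of (x, y) tuples) into floor-plan coordinates."""
    mapped = [a * complex(x, y) + b for x, y in route]
    return [(p.real, p.imag) for p in mapped]


# Made-up landmarks: the plan is the SLAM frame scaled by 2, rotated by
# 90 degrees, and shifted by (10, 5).
slam_landmarks = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
plan_landmarks = [(10.0, 5.0), (10.0, 7.0), (8.0, 5.0)]

a, b = fit_similarity(slam_landmarks, plan_landmarks)
scale = abs(a)                                # about 2.0
rotation_deg = math.degrees(cmath.phase(a))   # about 90.0
route_on_plan = to_plan([(2.0, 0.0)], a, b)   # about [(10.0, 9.0)]
```

A similarity transform cannot represent a mirrored plan or non-uniform scaling, so a real matching step would likely need additional checks; the sketch only shows the basic alignment idea.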

In time, a participatory Web 2.0 social networking platform could emerge for people who are BSVI, something similar to a worldwide “Visiopedia” with a rated, crowdsourced, and publicly available indoor guided navigation web cloud database that is updated in near real time. This would considerably expand the set of available indoor routes and enable much more efficient and reliable rating.

In summary, this paper presented a unique approach to improving real-time guided indoor navigation for people who are BSVI, together with the underlying technological and operational know-how, that does not require prior expensive investment in Wi-Fi, RFID, beacons, or other indoor infrastructure. The insights provided could help researchers and developers exploit social Web 2.0 and crowdsourcing opportunities in developing computer-vision-based ETA navigation for people who are BSVI.

7. Patents

Patent application ‘Hands-Free Crowd Sourced Indoor Navigation System and Method for Guiding Blind and Visually Impaired Persons’. Application Number: 17/401,348; Date: 8 August 2021; Number of priority application: US 17/401,348.

Author Contributions

Conceptualization, D.P., A.I. and A.L.; methodology, D.P. and A.I.; software, D.P. and A.L.; validation, L.S.; formal analysis, D.P., A.I. and L.S.; investigation, D.P. and A.I.; resources, A.L.; data curation, D.P. and A.I.; writing – original draft preparation, D.P. and A.I.; writing – review and editing, D.P., A.I. and A.L.; visualization, D.P.; supervision, D.P.; project administration, D.P.; funding acquisition, D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Regional Development Fund (project No. 01.2.2-LMT-K-718-01-0060) under a grant agreement with the Research Council of Lithuania (LMTLT).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Plikynas, D.; Žvironas, A.; Budrionis, A.; Gudauskis, M. Indoor navigation systems for visually impaired persons: Mapping the features of existing technologies to user needs. Sensors 2020, 20, 636.

- Csapó, Á.; Wersényi, G.; Nagy, H.; Stockman, T. A survey of assistive technologies and applications for blind users on mobile platforms: A review and foundation for research. J. Multimodal User Interfaces 2015, 9, 275–286.

- Griffin-Shirley, N.; Banda, D.R.; Ajuwon, P.M.; Cheon, J.; Lee, J.; Park, H.R.; Lyngdoh, S.N. A survey on the use of mobile applications for people who are visually impaired. J. Vis. Impair. Blind. 2017, 111, 307–323.

- Chessa, M.; Noceti, N.; Odone, F.; Solari, F.; Sosa-García, J.; Zini, L. An integrated artificial vision framework for assisting visually impaired users. Comput. Vis. Image Underst. 2016, 149, 209–228.

- Tapu, R.; Mocanu, B.; Bursuc, A.; Zaharia, T. A smartphone-based obstacle detection and classification system for assisting visually impaired people. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 444–451.

- Plikynas, D.; Žvironas, A.; Gudauskis, M.; Budrionis, A.; Daniušis, P.; Sliesoraitytė, I. Research advances of indoor navigation for blind people: A brief review of technological instrumentation. IEEE Instrum. Meas. Mag. 2020, 23, 22–32.

- Raufi, B.; Ferati, M.; Zenuni, X.; Ajdari, J.; Ismaili, F. Methods and techniques of adaptive web accessibility for the blind and visually impaired. Procedia Soc. Behav. Sci. 2015, 195, 1999–2007.

- Ferati, M.; Raufi, B.; Kurti, A.; Vogel, B. Accessibility requirements for blind and visually impaired in a regional context: An exploratory study. In Proceedings of the 2014 IEEE 2nd International Workshop on Usability and Accessibility Focused Requirements Engineering (UsARE), Karlskrona, Sweden, 25 August 2014; pp. 13–16.

- Elbes, M.; Al-Fuqaha, A. Design of a social collaboration and precise localization services for the blind and visually impaired. Procedia Comput. Sci. 2013, 21, 282–291.

- Brady, E.L.; Zhong, Y.; Morris, M.R.; Bigham, J.P. Investigating the appropriateness of social network question asking as a resource for blind users. In Proceedings of the Conference on Computer Supported Cooperative Work, San Antonio, TX, USA, 23 February 2013; pp. 1225–1236.

- Brinkley, J.; Tabrizi, N. A desktop usability evaluation of the Facebook mobile interface using the JAWS screen reader with blind users. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 23 September 2017; SAGE Publications: Los Angeles, CA, USA, 2017; Volume 61, pp. 828–832.

- Wu, S.; Adamic, L.A. Visually impaired users on an online social network. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 3133–3142.

- Voykinska, V.; Azenkot, S.; Wu, S.; Leshed, G. How blind people interact with visual content on social networking services. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing, San Francisco, CA, USA, 27 February–2 March 2016; pp. 1584–1595.

- Qiu, S.; Hu, J.; Rauterberg, M. Mobile social media for the blind: Preliminary observations. In Proceedings of the International Conference on Enabling Access for Persons with Visual Impairment, Athens, Greece, 12–14 February 2015; pp. 152–156.

- Della Líbera, B.; Jurberg, C. Teenagers with visual impairment and new media: A world without barriers. Br. J. Vis. Impair. 2017, 35, 247–256.

- Tjostheim, I.; Solheim, I.; Fuglerud, K.S. The importance of peers for visually impaired users of social media. In (746) Internet and Multimedia Systems and Applications/747: Human-Computer Interaction; Norwegian Computing: Oslo, Norway, 2011; p. 21.

- Avila, M.; Wolf, K.; Brock, A.; Henze, N. Remote assistance for blind users in daily life: A survey about Be My Eyes. In Proceedings of the 9th ACM International Conference on Pervasive Technologies Related to Assistive Environments, Corfu Island, Greece, 29 June–1 July 2016; pp. 1–2.

- Watanabe, T.; Matsutani, K.; Adachi, M.; Oki, T.; Miyamoto, R. Feasibility Study of Intersection Detection and Recognition Using a Single Shot Image for Robot Navigation. J. Image Graph. 2021, 9, 39–44.

- Hasegawa, R.; Iwamoto, Y.; Chen, Y.W. Robust Japanese Road Sign Detection and Recognition in Complex Scenes Using Convolutional Neural Networks. J. Image Graph. 2020, 8, 59–66.

- Alkowatly, M.T.; Becerra, V.M.; Holderbaum, W. Estimation of visual motion parameters used for bio-inspired navigation. J. Image Graph. 2013, 1, 120–124.

- Lo, E. Target Detection Algorithms in Hyperspectral Imaging Based on Discriminant Analysis. J. Image Graph. 2019, 7, 140–144.

- Utaminingrum, F.; Prasetya, R.P.; Rizdania. Combining Multiple Feature for Robust Traffic Sign Detection. J. Image Graph. 2020, 8, 53–58.

- Likert, R. A Technique for the Measurement of Attitudes. Arch. Psychol. 1932, 22, 1–55.

- Sheepy, E.; Salenikovich, S. Technological support for mobility and orientation training: Development of a smartphone navigation aid. In Proceedings of E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education, Las Vegas, NV, USA, 21 September 2013; Association for the Advancement of Computing in Education (AACE): San Diego, CA, USA; pp. 975–980.

- Karlsson, N.; Di Bernardo, E.; Ostrowski, J.; Goncalves, L.; Pirjanian, P.; Munich, M.E. The vSLAM algorithm for robust localization and mapping. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 24–29.

- Karimi, H.A.; Zimmerman, B.; Ozcelik, A.; Roongpiboonsopit, D. SoNavNet: A framework for social navigation networks. In Proceedings of the 2009 International Workshop on Location Based Social Networks, Seattle, WA, USA, 3 November 2009; pp. 81–87.

- Vítek, S.; Klíma, M.; Husník, L.; Spirk, D. New Possibilities for Blind People Navigation. In Proceedings of the 2011 International Conference on Applied Electronics, Pilsen, Czech Republic, 7–8 September 2011; pp. 1–4.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: Lessons learned from the 2015 MSCOCO image captioning challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 652–663.

- Laganiere, R.; Akhoury, S. Training Binary Descriptors for Improved Robustness and Efficiency in Real-Time Matching. In Proceedings of the International Conference on Image Analysis and Processing, Naples, Italy, 9–13 September 2013; Springer: Berlin/Heidelberg, Germany; pp. 288–298.

- Tafti, A.P.; Baghaie, A.; Assefi, M.; Arabnia, H.R.; Yu, Z.; Peissig, P. OCR as a service: An experimental evaluation of Google Docs OCR, Tesseract, ABBYY FineReader, and Transym. In International Symposium on Visual Computing; Springer International Publishing: Cham, Switzerland, 2016; pp. 735–746.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).