Design of an Eye-in-Hand Smart Gripper for Visual and Mechanical Adaptation in Grasping

Abstract

Featured Application

1. Introduction

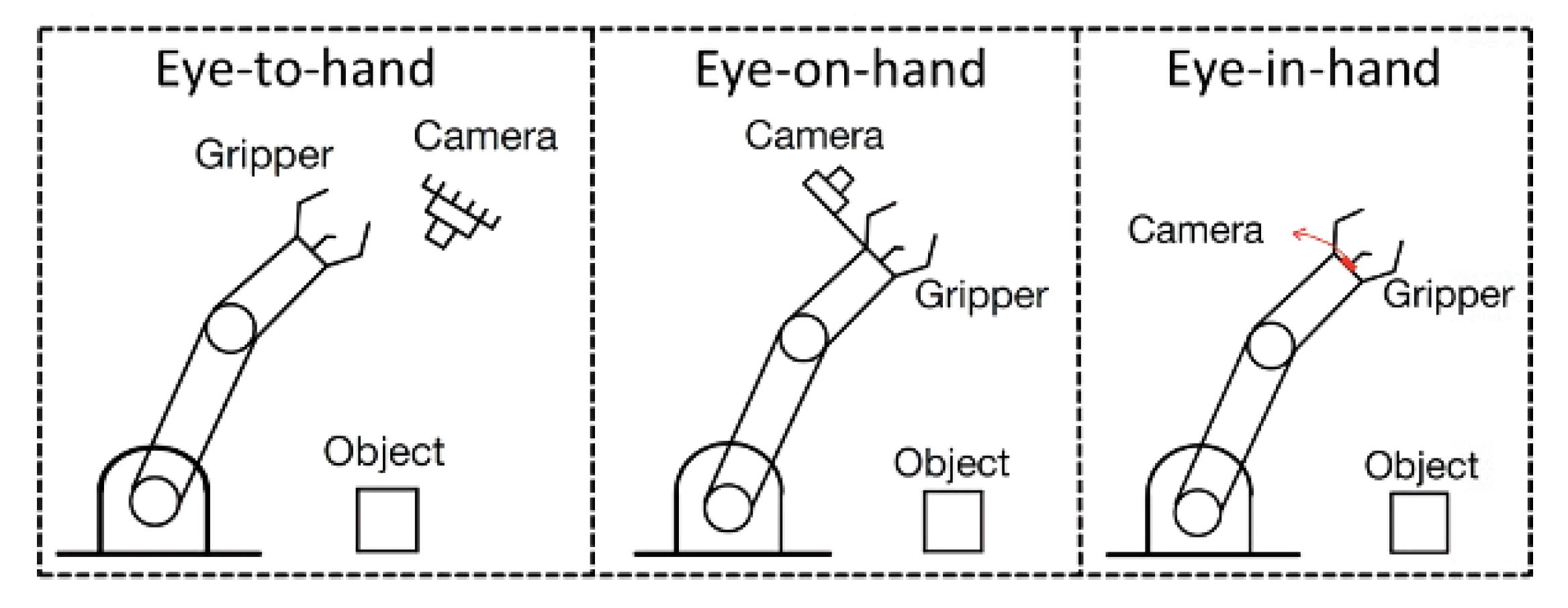

2. Linkage Structure Calculation

3. Materials and Methods

3.1. Angle and Torque Magnification of a Single Four-Bar Linkage Structure

3.2. Range of Motion for Double-Section Structures

3.3. Range of Motion for Triple-Section Structures

4. Mechanical Adaptation Results

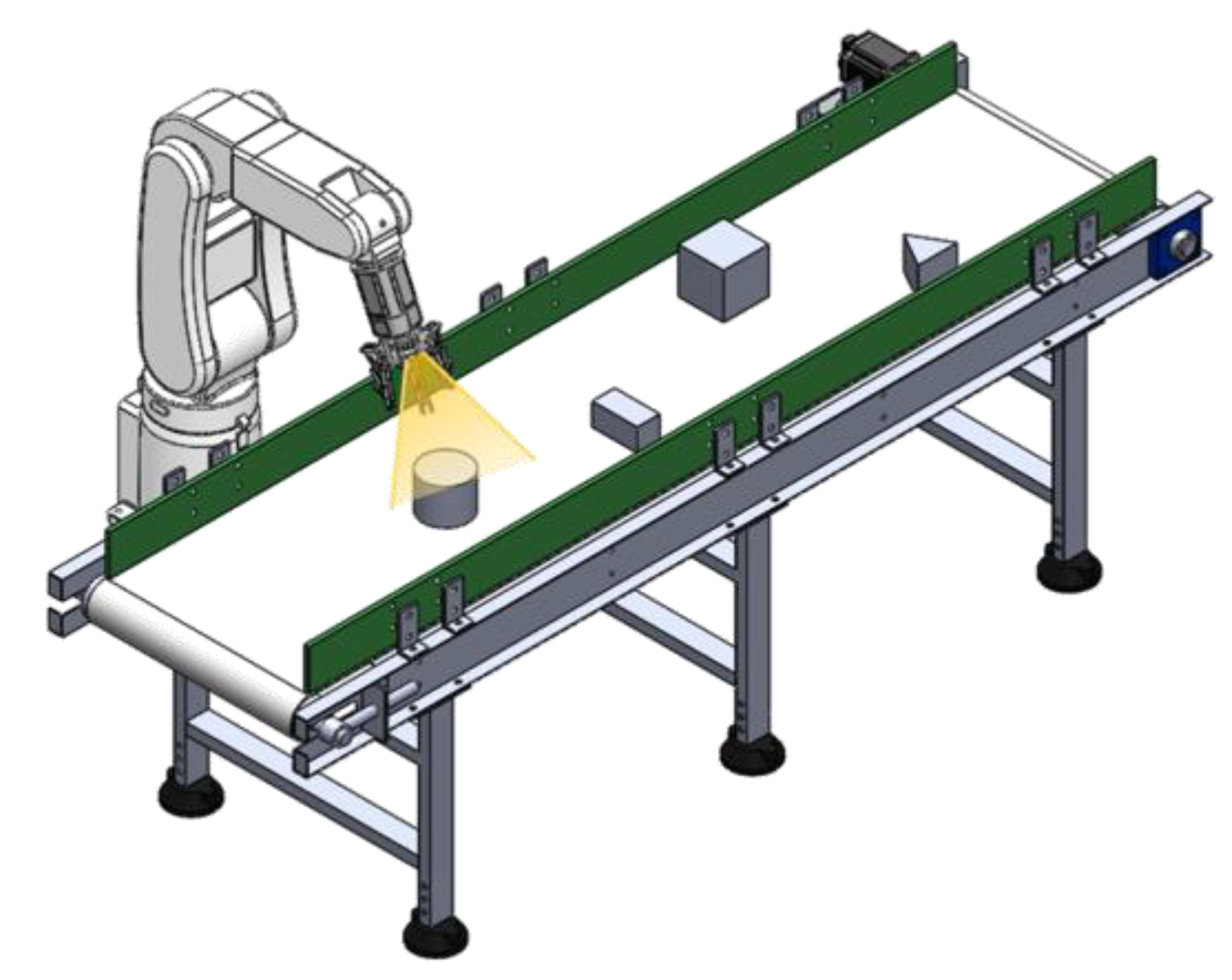

5. Visual Adaptation by Eye-in-Hand System

5.1. Color Model for Object Image Processing

5.2. Finding Object’s Location, Orientation and Shape

5.3. Motion Planning

- Manipulator base coordinate frame;

- Tool (end-effector) coordinate frame;

- Eye-in-hand camera coordinate frame;

- Object coordinate frame, which lies on the surface of the object.
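The four frames listed above are typically related by chaining homogeneous transforms: base to tool, tool to camera, and camera to object. The following is a minimal sketch of that chain, not the authors' implementation. The helper names (`transform`, `compose`, `apply`), the restriction to rotations about the z-axis, and every numerical pose are assumptions made purely for illustration.

```python
import math


def transform(yaw_deg, tx, ty, tz):
    """Return a 4x4 homogeneous transform: rotation about z, then translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Matrix product a @ b for two 4x4 transforms."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def apply(t, point):
    """Map a 3D point through the homogeneous transform t."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical poses in metres and degrees; none of these values come from the paper.
T_base_tool = transform(90.0, 0.40, 0.00, 0.30)  # tool frame expressed in the base frame
T_tool_cam = transform(0.0, 0.00, 0.05, 0.02)    # camera frame expressed in the tool frame
T_cam_obj = transform(0.0, 0.01, -0.02, 0.25)    # object frame expressed in the camera frame

# Chain the transforms to express the object frame in the base frame.
T_base_obj = compose(compose(T_base_tool, T_tool_cam), T_cam_obj)

# Origin of the object frame, expressed in the manipulator base frame.
origin_in_base = apply(T_base_obj, (0.0, 0.0, 0.0))
print(origin_in_base)
```

In a real eye-in-hand setup the tool-to-camera transform would come from hand-eye calibration and would generally involve a full 3D rotation; the z-only rotation here just keeps the sketch short.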

5.3.1. Conversion of Image Coordinates

5.3.2. Determining the Height of the Target Object

5.3.3. Image-Based Visual Servo Control

6. Visual Adaptation Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Range of Motion at End Point (mm²) | | Double-Section Structures | | | Triple-Section Structures | | |
|---|---|---|---|---|---|---|---|
| R | φ | h1 = 0.5 | h1 = 1.0 | h1 = 1.5 | h1 = 0.5 | h1 = 1.0 | h1 = 1.5 |
| 0.5 | 45° | 249.0 | 234.2 | 252.7 | 652.8 | 661.0 | 667.9 |
| 0.5 | 90° | 238.5 | 233.2 | 248.2 | 535.7 | 630.3 | 663.3 |
| 0.5 | 135° | 75.1 | 229.6 | 198.2 | 161.6 | 538.8 | 484.5 |
| 1.0 | 45° | 212.1 | 215.3 | 214.0 | 199.7 | 195.9 | 195.0 |
| 1.0 | 90° | 105.3 | 95.1 | 219.8 | 160.6 | 159.2 | 158.9 |
| 1.0 | 135° | 19.6 | 14.7 | 19.4 | 28.4 | 26.0 | 26.2 |
| 1.2 | 45° | 54.1 | 37.2 | 33.5 | 64.0 | 51.4 | 40.5 |
| 1.2 | 90° | 20.2 | 10.3 | 11.5 | 17.5 | 14.0 | 12.6 |
| 1.2 | 135° | 4.2 | 2.9 | 2.0 | 3.4 | 2.2 | 1.9 |

| Operation Range | Speed | Force | Load | Weight |
|---|---|---|---|---|
| 160 mm | 100 mm/s | 50 N | 5 kg | 1.5 kg |

| Object | Average Calculated Height (m) | Average Measured Height (m) | Standard Deviation (m) | Error Rate (%) |
|---|---|---|---|---|
| Square | 0.049 | 0.050 | 0.004 | 1.71 |
| Circle | 0.053 | 0.051 | 0.001 | 5.16 |
| Rectangle | 0.024 | 0.024 | 0.002 | 1.08 |

| Object | Metric | Conveyor Speed 20 mm/s | Conveyor Speed 40 mm/s | Conveyor Speed 60 mm/s | Conveyor Speed 70 mm/s |
|---|---|---|---|---|---|
| Square | Moving Distance (m) | 0.133 | 0.245 | 0.326 | 0.430 |
| Square | Standard Deviation (m) | 0.008 | 0.020 | 0.020 | 0.034 |
| Square | Success Rate (%) | 100 | 100 | 100 | 70 |
| Circle | Moving Distance (m) | 0.132 | 0.247 | 0.315 | 0.429 |
| Circle | Standard Deviation (m) | 0.007 | 0.013 | 0.025 | 0.022 |
| Circle | Success Rate (%) | 100 | 100 | 100 | 85 |
| Rectangle | Moving Distance (m) | 0.131 | 0.244 | 0.315 | 0.411 |
| Rectangle | Standard Deviation (m) | 0.008 | 0.017 | 0.021 | 0.021 |
| Rectangle | Success Rate (%) | 100 | 100 | 100 | 100 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cheng, L.-W.; Liu, S.-W.; Chang, J.-Y. Design of an Eye-in-Hand Smart Gripper for Visual and Mechanical Adaptation in Grasping. Appl. Sci. 2022, 12, 5024. https://doi.org/10.3390/app12105024
