A Graph-Based Approach to Recognizing Complex Human Object Interactions in Sequential Data

Featured Application

Abstract

1. Introduction

- We used a graph-based image skeletonization technique, the image foresting transform (IFT), to detect 12 human body parts.

- We proposed a multi-feature approach involving three different types of features: full-body features, point-based features, and scene features. We also provided two types of feature descriptors for each category.

- We optimized the resulting large feature vector using isometric feature mapping (ISOMAP).

- We implemented a graph convolution network (GCN) and tuned its parameters for the final classification of human locomotion activities.

2. Related Work

2.1. Multi-Feature HOIR

2.2. Graph-Based HOIR

3. Materials and Methods

3.1. Pre-Processing

3.1.1. Sigmoid Stretching
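As a minimal sketch of this pre-processing step, the function below applies a logistic contrast stretch to intensities scaled to [0, 1]. It assumes the common form $g(x) = 1 / (1 + e^{k(c - x)})$; the name `sigmoid_stretch` and the parameters `gain` ($k$) and `cutoff` ($c$) are illustrative choices and are not taken from the paper.

```python
import math


def sigmoid_stretch(pixels, gain=10.0, cutoff=0.5):
    """Logistic contrast stretch for a 2D list of intensities in [0, 1].

    gain and cutoff are assumed, illustrative parameters: values below
    cutoff are pushed toward 0 and values above it toward 1.
    """
    return [
        [1.0 / (1.0 + math.exp(gain * (cutoff - p))) for p in row]
        for row in pixels
    ]
```

A larger `gain` gives a steeper transition around `cutoff`, which increases the contrast between dark and bright regions.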

3.1.2. Gaussian Filtering
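Gaussian filtering can be sketched as a separable convolution: one pass along the rows, then one along the columns. The code below is a plain-Python illustration under assumed settings (`sigma`, `radius`, and border replication are hypothetical choices, not values reported in the paper).

```python
import math


def gaussian_kernel(sigma, radius):
    """Return a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_rows(image, kernel):
    """Convolve every row with the kernel, replicating border pixels."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        new_row = []
        for x in range(n):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), n - 1)  # clamp to the border
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def gaussian_filter(image, sigma=1.0, radius=2):
    """Separable 2D Gaussian smoothing: rows first, then columns."""
    kernel = gaussian_kernel(sigma, radius)
    horizontal = smooth_rows(image, kernel)
    transposed = [list(col) for col in zip(*horizontal)]
    vertical = smooth_rows(transposed, kernel)
    return [list(col) for col in zip(*vertical)]
```

Because the kernel is normalized, a constant image is left unchanged, while isolated noisy pixels are spread over their neighbors.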

3.2. Image Segmentation

3.3. Key Point Detection

3.4. Feature Extraction

| Algorithm 1: Feature Extraction |
|---|
| Input: N full images, full-body silhouettes, and 12 key body points |
| Output: combined feature vector (f1, f2, f3, …, fn) |
| % initialize the feature vector for HOIR % |
| FeatureVector ← [] |
| F_vectorsize ← GetVectorsize() |
| % loop over all images % |
| For i = 1:N |
| % extract scene features % |
| SPM ← GetSPM(i) |
| Gist ← GetGist(i) |
| FeatureVector.append(SPM, Gist) |
| % loop over the extracted human silhouettes % |
| J ← len(silhouettes) |
| For j = 1:J |
| % extract full-body features % |
| Trajectory ← GetTrajectory(silhouette[j]) |
| LIOP ← GetLIOP(silhouette[j]) |
| FeatureVector.append(Trajectory, LIOP) |
| % loop over the 12 key points of each silhouette % |
| For k = 1:12 |
| % extract key-point features % |
| KinematicPosture ← GetKinematicPosture(k) |
| LOP ← GetLOP(k) |
| FeatureVector.append(LOP, KinematicPosture) |
| End |
| End |
| End |
| FeatureVector ← Normalize(FeatureVector) |
| return FeatureVector (f1, f2, f3, …, fn) |
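The loop structure of Algorithm 1 can be sketched in Python as follows. This is an illustration only: the six extractors are passed in as hypothetical callables (the descriptors themselves are not implemented here), the argument layout is an assumption, and since the algorithm does not specify `Normalize`, unit-length (L2) scaling is assumed.

```python
import math
from typing import Callable, Dict, List, Sequence

# Hypothetical extractor type: maps one input (image, silhouette, or key point)
# to a list of floats. The real descriptors are not implemented here.
Extractor = Callable[[object], List[float]]


def extract_features(
    images: Sequence[object],
    silhouettes: Sequence[Sequence[object]],
    key_points: Sequence[Sequence[Sequence[object]]],
    extractors: Dict[str, Extractor],
) -> List[float]:
    """Concatenate scene, full-body, and key-point features, then normalize."""
    feature_vector: List[float] = []
    for image, image_silhouettes, image_key_points in zip(
        images, silhouettes, key_points
    ):
        # Scene features, computed on the full image.
        feature_vector += extractors["spm"](image)
        feature_vector += extractors["gist"](image)
        for silhouette, points in zip(image_silhouettes, image_key_points):
            # Full-body features, computed on each silhouette.
            feature_vector += extractors["trajectory"](silhouette)
            feature_vector += extractors["liop"](silhouette)
            for point in points:
                # Point-based features, computed on each of the key points.
                feature_vector += extractors["lop"](point)
                feature_vector += extractors["kinematic_posture"](point)
    # Assumed normalization: scale the vector to unit Euclidean length.
    norm = math.sqrt(sum(v * v for v in feature_vector))
    if norm == 0.0:
        return feature_vector
    return [v / norm for v in feature_vector]
```

With one image, one silhouette, and 12 key points, each extractor is called once per item, so the vector holds two scene blocks, two full-body blocks, and 24 key-point blocks before normalization.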

3.4.1. Full Body Feature: Dense Trajectory

3.4.2. Full Body Feature: LIOP

3.4.3. Point-Based Feature: Kinematic Posture

3.4.4. Point-Based Feature: LOP

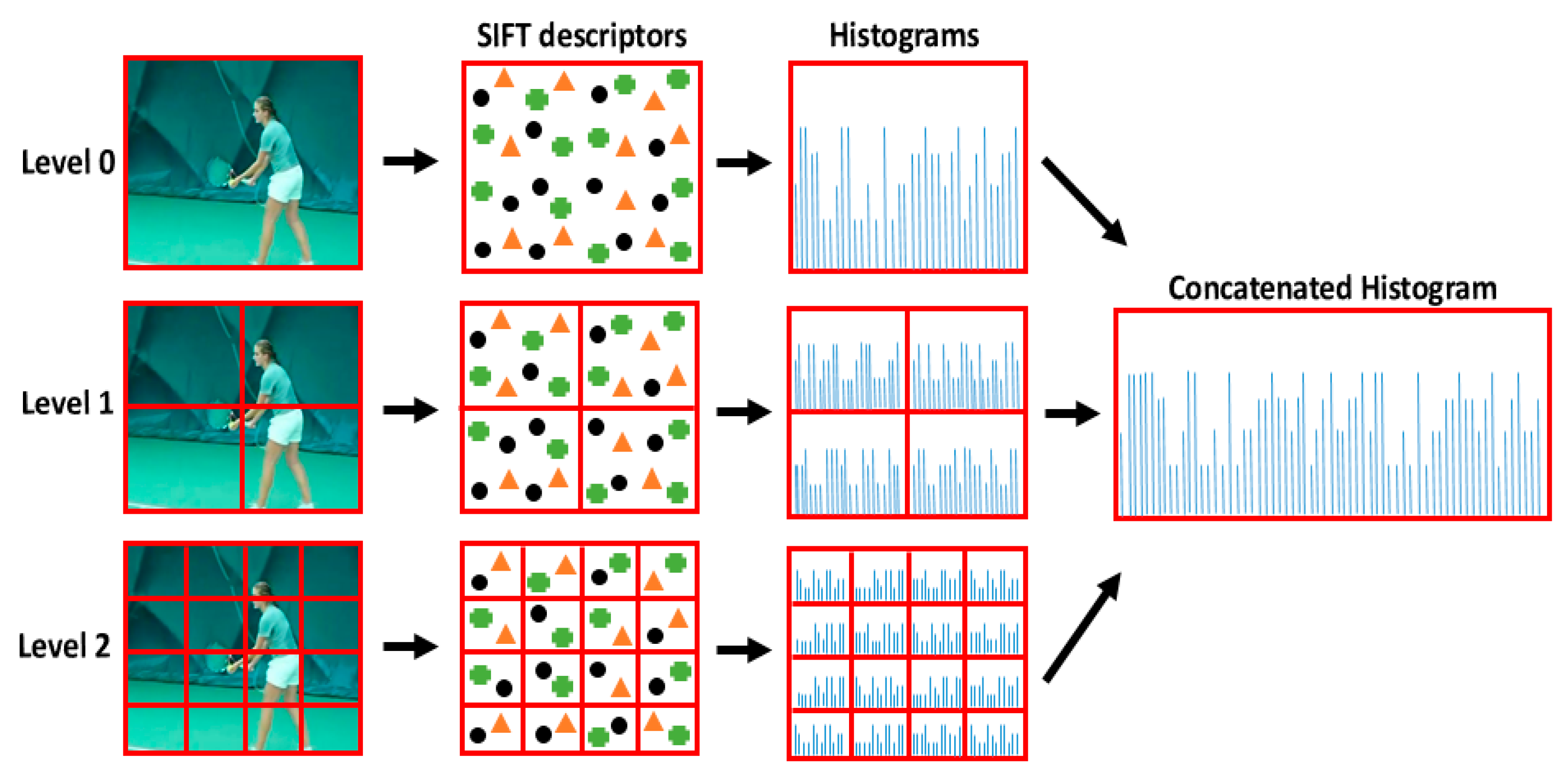

3.4.5. Scene Feature: SPM

3.4.6. Scene Feature: GIST

3.5. Feature Optimization

3.6. Human-Object Interaction Recognition (HOIR)
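The contributions in Section 1 name a graph convolution network (GCN) as the final classifier, and the reference list cites Kipf and Welling for it. As a minimal sketch of a single layer under their propagation rule, $H' = \mathrm{ReLU}(\hat{D}^{-1/2}(A + I)\hat{D}^{-1/2} H W)$, the plain-Python code below operates on small nested lists. The helper names, the toy graph, and the weights are illustrative assumptions; this is not the authors' architecture or their tuned parameters.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2, where D is the degree matrix of A + I."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj, features, weights):
    """One graph convolution: aggregate neighbors, project, apply ReLU."""
    propagated = matmul(matmul(normalized_adjacency(adj), features), weights)
    return [[max(0.0, v) for v in row] for row in propagated]


# Toy usage: two connected nodes with one feature each (illustrative values).
adjacency = [[0.0, 1.0], [1.0, 0.0]]
node_features = [[1.0], [3.0]]
layer_weights = [[1.0]]
hidden = gcn_layer(adjacency, node_features, layer_weights)
```

Each node's output mixes its own features with those of its neighbors, so stacking layers lets information travel along longer paths in the graph.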

4. Experimental Results

4.1. Datasets Description

4.2. Experiment I: HOI Classification Accuracies

4.3. Experiment II: Computational Complexity

4.4. Experiment III: Body Part Detection Rate

4.5. Experiment IV: Comparison with Other Well-Known Classifiers

4.6. Experiment V: Comparison with Other State-of-the-Art Methods

5. Discussion

6. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jalal, A.; Sarif, N.; Kim, J.T.; Kim, T.-S. Human Activity Recognition via Recognized Body Parts of Human Depth Silhouettes for Residents Monitoring Services at Smart Home. Indoor Built Environ. 2012, 22, 271–279.

- Jalal, A.; Uddin, Z.; Kim, T.-S. Depth video-based human activity recognition system using translation and scaling invariant features for life logging at smart home. IEEE Trans. Consum. Electron. 2012, 58, 863–871.

- Jalal, A.; Lee, S.; Kim, J.T.; Kim, T.-S. Human Activity Recognition via the Features of Labeled Depth Body Parts. In Proceedings of the Smart Homes Health Telematics, Artimino, Italy, 12–15 June 2012; pp. 246–249.

- Jalal, A.; Kim, J.T.; Kim, T.-S. Development of a life logging system via depth imaging-based human activity recognition for smart homes. In Proceedings of the International Symposium on Sustainable Healthy Buildings, Brisbane, Australia, 8–12 July 2012; pp. 91–95.

- Tahir, S.B.U.D.; Jalal, A.; Batool, M. Wearable Sensors for Activity Analysis using SMO-based Random Forest over Smart home and Sports Datasets. In Proceedings of the 2020 3rd International Conference on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, 17–19 February 2020; pp. 1–6.

- Jalal, A.; Nadeem, A.; Bobasu, S. Human Body Parts Estimation and Detection for Physical Sports Movements. In Proceedings of the IEEE International Conference on Communication, Computing and Digital Systems, Islamabad, Pakistan, 6–7 March 2019.

- Javeed, M.; Gochoo, M.; Jalal, A.; Kim, K. HF-SPHR: Hybrid Features for Sustainable Physical Healthcare Pattern Recognition Using Deep Belief Networks. Sustainability 2021, 13, 1699.

- Ansar, H.; Jalal, A.; Gochoo, M.; Kim, K. Hand Gesture Recognition Based on Auto-Landmark Localization and Reweighted Genetic Algorithm for Healthcare Muscle Activities. Sustainability 2021, 13, 2961.

- Khalid, N.; Gochoo, M.; Jalal, A.; Kim, K. Modeling Two-Person Segmentation and Locomotion for Stereoscopic Action Identification: A Sustainable Video Surveillance System. Sustainability 2021, 13, 970.

- Mahmood, M.; Jalal, A.; Kim, K. WHITE STAG model: Wise human interaction tracking and estimation (WHITE) using spatio-temporal and angular-geometric (STAG) descriptors. Multimed. Tools Appl. 2020, 79, 6919–6950.

- Kamal, S.; Jalal, A.; Kim, D. Depth Images-based Human Detection, Tracking and Activity Recognition Using Spatiotemporal Features and Modified HMM. J. Electr. Eng. Technol. 2016, 11, 1857–1862.

- Jalal, A.; Mahmood, M.; Hasan, A.S. Multi-features descriptors for Human Activity Tracking and Recognition in Indoor-Outdoor Environments. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 371–376.

- Nadeem, A.; Jalal, A.; Kim, K. Human Actions Tracking and Recognition Based on Body Parts Detection via Artificial Neural Network. In Proceedings of the 3rd International Conference on Advancements in Computational Sciences (ICACS 2020), Lahore, Pakistan, 17–19 February 2020; pp. 1–6.

- Jalal, A.; Mahmood, M. Students’ behavior mining in e-learning environment using cognitive processes with information technologies. Educ. Inf. Technol. 2019, 24, 2797–2821.

- Gochoo, M.; Tahir, S.B.U.D.; Jalal, A.; Kim, K. Monitoring Real-Time Personal Locomotion Behaviors over Smart Indoor-Outdoor Environments via Body-Worn Sensors. IEEE Access 2021, 9, 70556–70570.

- Jalal, A.; Kamal, S.; Kim, D. A Depth Video-based Human Detection and Activity Recognition using Multi-features and Embedded Hidden Markov Models for Health Care Monitoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 54–62.

- Nadeem, A.; Jalal, A.; Kim, K. Automatic human posture estimation for sport activity recognition with robust body parts detection and entropy markov model. Multimed. Tools Appl. 2021, 80, 21465–21498.

- Gochoo, M.; Akhter, I.; Jalal, A.; Kim, K. Stochastic Remote Sensing Event Classification over Adaptive Posture Estimation via Multifused Data and Deep Belief Network. Remote Sens. 2021, 13, 912.

- Jalal, A.; Khalid, N.; Kim, K. Automatic Recognition of Human Interaction via Hybrid Descriptors and Maximum Entropy Markov Model Using Depth Sensors. Entropy 2020, 22, 817.

- Kamal, S.; Jalal, A. A Hybrid Feature Extraction Approach for Human Detection, Tracking and Activity Recognition Using Depth Sensors. Arab. J. Sci. Eng. 2015, 41, 1043–1051.

- Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable Sensor-Based Human Behavior Understanding and Recognition in Daily Life for Smart Environments. In Proceedings of the International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

- Quaid, M.A.K.; Jalal, A. Wearable sensors based human behavioral pattern recognition using statistical features and reweighted genetic algorithm. Multimed. Tools Appl. 2020, 79, 6061–6083.

- Azmat, U.; Jalal, A. Smartphone Inertial Sensors for Human Locomotion Activity Recognition based on Template Matching and Codebook Generation. In Proceedings of the IEEE International Conference on Communication Technologies, Rawalpindi, Pakistan, 21–22 September 2021; pp. 1–6.

- Jalal, A.; Kim, Y. Dense Depth Maps-based Human Pose Tracking and Recognition in Dynamic Scenes Using Ridge Data. In Proceedings of the IEEE International Conference on Advanced Video and Signal-Based Surveillance, Seoul, Korea, 26–29 August 2014; pp. 119–124.

- Jalal, A.; Kamal, S. Real-Time Life Logging via a Depth Silhouette-based Human Activity Recognition System for Smart Home Services. In Proceedings of the IEEE International Conference on Advanced Video and Signal-Based Surveillance, Seoul, Korea, 26–29 August 2014; pp. 74–80.

- Jalal, A.; Kim, Y.; Kim, D. Ridge body parts features for human pose estimation and recognition from RGB-D video data. In Proceedings of the Fifth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Hefei, China, 11–14 July 2014; pp. 1–6.

- Jalal, A.; Kim, Y.; Kamal, S.; Farooq, A.; Kim, D. Human daily activity recognition with joints plus body features representation using Kinect sensor. In Proceedings of the IEEE International Conference on Informatics, Electronics and Vision, Fukuoka, Japan, 15–18 June 2015; pp. 1–6.

- Jalal, A.; Kim, J.T.; Kim, T.S. Human activity recognition using the labeled depth body parts information of depth silhouettes. In Proceedings of the 6th International Symposium on Sustainable Healthy Buildings, Seoul, Korea, 27 February 2012; pp. 1–8.

- Jalal, A.; Kamal, S.; Farooq, A.; Kim, D. A spatiotemporal motion variation features extraction approach for human tracking and pose-based action recognition. In Proceedings of the IEEE International Conference on Informatics, Electronics and Vision, Fukuoka, Japan, 15–18 June 2015; pp. 1–6.

- Pervaiz, M.; Jalal, A.; Kim, K. Hybrid Algorithm for Multi People Counting and Tracking for Smart Surveillance. In Proceedings of the 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, 12–16 January 2021; pp. 530–535.

- Fang, H.-S.; Cao, J.; Tai, Y.-W.; Lu, C. Pairwise Body-Part Attention for Recognizing Human-Object Interactions. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 51–67.

- Mallya, A.; Lazebnik, S. Learning Models for Actions and Person-Object Interactions with Transfer to Question Answering. In Proceedings of the CVPR, New Orleans, LA, USA, 14–19 June 2020; pp. 414–428.

- Yan, W.; Gao, Y.; Liu, Q. Human-object Interaction Recognition Using Multitask Neural Network. In Proceedings of the 2019 3rd International Symposium on Autonomous Systems (ISAS), Shanghai, China, 29–31 May 2019; pp. 323–328.

- Gkioxari, G.; Girshick, R.; Dollár, P.; He, K. Detecting and recognizing human-object interactions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8359–8367.

- Li, J.; Xiong, C.; Hoi, S.C. Comatch: Semi-supervised learning with contrastive graph regularization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9475–9484.

- Li, Y.-L.; Liu, X.; Lu, H.; Wang, S.; Liu, J.; Li, J.; Lu, C. Detailed 2D-3D Joint Representation for Human-Object Interaction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10163–10172.

- Xia, L.-M.; Wu, W. Graph-based method for human-object interactions detection. J. Cent. South Univ. 2021, 28, 205–218.

- Yang, D.; Zou, Y. A graph-based interactive reasoning for human-object interaction detection. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20), Yokohama, Japan, 11–17 July 2020; pp. 1111–1117.

- Sunkesula, S.P.R.; Dabral, R.; Ramakrishnan, G. Lighten: Learning interactions with graph and hierarchical temporal networks for hoi in videos. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 691–699.

- Qi, S.; Wang, W.; Jia, B.; Shen, J.; Zhu, S.-C. Learning Human-Object Interactions by Graph Parsing Neural Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 401–417.

- Liu, X.; Ji, Z.; Pang, Y.; Han, J.; Li, X. DGIG-Net: Dynamic Graph-in-Graph Networks for Few-Shot Human-Object Interaction. IEEE Trans. Cybern. 2021, 1–13.

- Vedaldi, A.; Soatto, S. Quick Shift and Kernel Methods for Mode Seeking. In Proceedings of the ECCV, Marseille, France, 12–18 October 2008; pp. 705–718.

- Xu, X.; Li, G.; Xie, G.; Ren, J.; Xie, X. Weakly supervised deep semantic segmentation using CNN and ELM with semantic candidate regions. Complexity 2019, 2019, 9180391.

- Falcao, A.X.; Stolfi, J.; de Alencar Lotufo, R. The image foresting transform: Theory, algorithms, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 19–29.

- Wang, H.; Schmid, C. Action recognition with improved trajectories. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 2–8 December 2013; pp. 3551–3558.

- Wang, Z.; Fan, B.; Wu, F. Local intensity order pattern for feature description. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 603–610.

- Ahad, A.R.; Ahmed, M.; Das Antar, A.; Makihara, Y.; Yagi, Y. Action recognition using kinematics posture feature on 3D skeleton joint locations. Pattern Recognit. Lett. 2021, 145, 216–224.

- Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Learning actionlet ensemble for 3D human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 914–927.

- Lazebnik, S.; Schmid, C.; Ponce, J. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In Proceedings of the IEEE Computer Society on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 2169–2178.

- Oliva, A.; Torralba, A. Modeling the Shape of the Scene: A Holistic Representation of the Spatial Envelope. Int. J. Comput. Vis. 2001, 42, 145–175.

- Tenenbaum, J.B.; de Silva, V.; Langford, J.C. A Global Geometric Framework for Nonlinear Dimensionality Reduction. Science 2000, 290, 2319–2323.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the ICLR 2017, Toulon, France, 24–26 April 2017; pp. 1–17.

- Niebles, J.C.; Chen, C.-W.; Fei-Fei, L. Modeling Temporal Structure of Decomposable Motion Segments for Activity Classification. In Proceedings of the 11th European Conference on Computer Vision (ECCV), Heraklion Crete, Greece, 5–11 September 2010; pp. 392–405.

- Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Mining actionlet ensemble for action recognition with depth cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1290–1297.

- Xu, X.; Joo, H.; Mori, G.; Savva, M. D3D-HOI: Dynamic 3D Human-Object Interactions from Videos. arXiv 2021, arXiv:2108.08420.

- Waheed, M.; Javeed, M.; Jalal, A. A Novel Deep Learning Model for Understanding Two-Person Interactions Using Depth Sensors. In Proceedings of the ICIC, Lahore, Pakistan, 9–10 November 2021; pp. 1–8.

- Andresini, G.; Appice, A.; Malerba, D. Autoencoder-based deep metric learning for network intrusion detection. Inf. Sci. 2021, 569, 706–727.

- Mavroudi, E.; Tao, L.; Vidal, R. Deep moving poselets for video based action recognition. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 111–120.

- Jain, M.; Jégou, H.; Bouthemy, P. Improved Motion Description for Action Classification. Front. ICT 2016, 2, 28.

- Waheed, M.; Jalal, A.; Alarfaj, M.; Ghadi, Y.Y.; Al Shloul, T.; Kamal, S.; Kim, D.-S. An LSTM-Based Approach for Understanding Human Interactions Using Hybrid Feature Descriptors over Depth Sensors. IEEE Access 2021, 9, 167434–167446.

- Tomas, A.; Biswas, K.K. Human activity recognition using combined deep architectures. In Proceedings of the IEEE International Conference on Signal and Image Processing (ICSIP), Singapore, 4–6 August 2017; pp. 41–45.

- Liang, M.; Jiao, L.; Yang, S.; Liu, F.; Hou, B.; Chen, H. Deep Multiscale Spectral-Spatial Feature Fusion for Hyperspectral Images Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2911–2924.

| Serial No. | Olympic Sports | MSR Daily Activity 3D | D3D-HOI |
|---|---|---|---|
| 1 | basketball | drink | refrigerator |
| 2 | bowling | eat | storage furniture |
| 3 | tennis-serve | read book | trashcan |
| 4 | platform | write on a paper | washing machine |
| 5 | discus | use laptop | microwave |
| 6 | hammer | play game | dishwasher |
| 7 | javelin | call cellphone | laptop |
| 8 | shot put | use vacuum cleaner | oven |
| 9 | spring board | play guitar | - |
| 10 | clean jerk | lay on a sofa | - |

| Layer Type | Equation | Complexity | Dataset | Feature Map | Time (s) |
|---|---|---|---|---|---|
| Convolutional | (18) | | Olympic Sports | 1 × 3,316,800 | 29,851,200 |
| | | | MSR Daily Activity | 1 × 1,382,000 | 12,438,000 |
| | | | D3D-HOI | 1 × 1,768,960 | 15,920,640 |
| Max Pooling | (19) | | Olympic Sports | 1 × 3,316,800 | 7,462,800 |
| | | | MSR Daily Activity | 1 × 1,382,000 | 3,109,500 |
| | | | D3D-HOI | 1 × 1,768,960 | 3,980,160 |
| Fully Connected | (20) | | Olympic Sports | 3,316,800 | 31,095,000 |
| | | | MSR Daily Activity | 1,382,000 | 39,801,600 |
| | | | D3D-HOI | 1,768,960 | 31,841,280 |

| Part | BB | BL | TS | PT | DS | HR | JV | SP | SB | CJ | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HD | 92.23 | 90.34 | 90.03 | 90.12 | 92.24 | 93.4 | 94.32 | 90.45 | 88.02 | 90.12 | 91.13 |
| RE | 95.67 | 93.03 | 92.12 | 89.45 | 93.56 | 96.05 | 92.35 | 94.32 | 89.45 | 87.61 | 92.36 |
| LE | 87.61 | 95.67 | 91.78 | 94.38 | 87.61 | 89.45 | 93.62 | 87.61 | 93.56 | 89.45 | 91.07 |
| RH | 91.45 | 90.51 | 89.45 | 95.67 | 92.23 | 94.38 | 90.56 | 93.27 | 95.67 | 93.27 | 92.65 |
| LH | 89.45 | 90.12 | 88.02 | 91.45 | 92.35 | 88.02 | 92.03 | 93.56 | 96.05 | 92.23 | 91.33 |
| NK | 94.38 | 96.05 | 94.38 | 95.67 | 93.35 | 93.35 | 91.14 | 92.23 | 87.61 | 95.67 | 93.38 |
| TRS | 93.62 | 92.72 | 95.67 | 87.24 | 87.61 | 87.61 | 93.35 | 95.67 | 92.23 | 87.61 | 91.33 |
| BTR | 92.23 | 95.67 | 89.45 | 92.06 | 94.38 | 92.06 | 87.61 | 89.45 | 93.56 | 88.02 | 91.45 |
| RK | 93.35 | 91.39 | 94.38 | 92.23 | 88.02 | 92.23 | 93.24 | 93.56 | 94.38 | 92.23 | 92.50 |
| LK | 93.56 | 88.02 | 93.56 | 95.67 | 94.32 | 93.56 | 90.76 | 94.32 | 92.23 | 92.06 | 92.81 |
| RF | 89.45 | 91.45 | 89.45 | 91.45 | 94.38 | 92.23 | 89.45 | 94.38 | 88.02 | 94.32 | 91.46 |
| LF | 87.61 | 89.45 | 95.67 | 94.12 | 92.06 | 93.27 | 93.56 | 89.45 | 89.72 | 88.02 | 91.29 |
| Average part detection rate = 91.89% | | | | | | | | | | | |

| Part | DR | ET | RB | WP | UL | PG | CC | UV | PR | LS | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HD | 92.23 | 90.34 | 90.03 | 90.12 | 92.24 | 93.4 | 94.32 | 90.45 | 94.35 | 90.12 | 91.76 |
| RE | 95.67 | 93.03 | 92.12 | 90.11 | 93.56 | 96.05 | 92.35 | 94.32 | 93.27 | 96.05 | 93.65 |
| LE | 93.35 | 95.67 | 91.78 | 94.38 | 96.05 | 94.38 | 93.62 | 92.23 | 93.56 | 94.32 | 93.93 |
| RH | 91.45 | 90.51 | 91.63 | 95.67 | 92.23 | 94.38 | 90.56 | 93.27 | 95.67 | 93.27 | 92.86 |
| LH | 97.59 | 90.12 | 95.67 | 91.45 | 92.35 | 97.59 | 92.03 | 93.56 | 96.05 | 92.23 | 93.86 |
| NK | 94.38 | 96.05 | 94.38 | 95.67 | 93.35 | 93.35 | 91.14 | 92.23 | 97.59 | 95.67 | 94.38 |
| TRS | 93.62 | 92.72 | 95.67 | 87.24 | 94.32 | 95.67 | 93.35 | 95.67 | 92.23 | 94.32 | 93.48 |
| BTR | 92.23 | 95.67 | 97.59 | 93.56 | 94.38 | 94.38 | 90.42 | 92.23 | 93.56 | 96.05 | 94.01 |
| RK | 93.35 | 91.39 | 94.38 | 92.23 | 97.59 | 92.23 | 93.24 | 93.56 | 94.38 | 92.23 | 93.46 |
| LK | 93.56 | 97.59 | 93.56 | 95.67 | 94.32 | 93.56 | 90.76 | 94.32 | 92.23 | 94.38 | 94.00 |
| RF | 93.27 | 91.45 | 92.23 | 91.45 | 94.38 | 92.23 | 91.09 | 94.38 | 97.59 | 94.32 | 93.24 |
| LF | 95.67 | 92.35 | 95.67 | 94.38 | 93.27 | 93.27 | 93.56 | 92.35 | 94.38 | 93.27 | 93.82 |
| Average part detection rate = 93.53% | | | | | | | | | | | |

| Part | BB | BL | TS | PT | JV | SP | SB | CJ | AVG |
|---|---|---|---|---|---|---|---|---|---|
| HD | 93.21 | 90.34 | 90.03 | 90.12 | 92.24 | 93.4 | 94.32 | 90.45 | 91.764 |
| RE | 92.23 | 93.03 | 92.12 | 90.11 | 92.35 | 96.05 | 92.35 | 94.32 | 92.820 |
| LE | 86.29 | 95.67 | 91.78 | 94.38 | 96.05 | 84.12 | 93.62 | 92.23 | 91.768 |
| RH | 91.45 | 90.51 | 91.63 | 95.67 | 86.45 | 94.38 | 90.56 | 93.27 | 91.740 |
| LH | 97.59 | 90.12 | 95.67 | 91.45 | 92.35 | 97.59 | 92.03 | 82.32 | 92.390 |
| NK | 94.38 | 96.05 | 94.38 | 95.67 | 85.23 | 82.75 | 91.14 | 92.23 | 91.479 |
| TRS | 93.62 | 92.72 | 95.67 | 87.24 | 94.32 | 95.67 | 93.35 | 95.67 | 93.533 |
| BTR | 92.23 | 95.67 | 97.59 | 92.23 | 94.38 | 83.03 | 90.42 | 92.23 | 92.223 |
| RK | 90.09 | 91.39 | 94.32 | 92.23 | 97.59 | 92.23 | 93.24 | 95.67 | 93.345 |
| LK | 88.43 | 97.59 | 92.23 | 95.67 | 94.32 | 82.45 | 90.76 | 94.32 | 91.971 |
| RF | 93.27 | 91.45 | 92.23 | 91.45 | 84.77 | 92.23 | 91.09 | 94.38 | 91.359 |
| LF | 94.38 | 92.35 | 95.67 | 94.38 | 93.27 | 93.27 | 93.03 | 92.35 | 93.588 |
| Average part detection rate = 92.33% | | | | | | | | | |

| HOI Class | CNN Precision | CNN Recall | CNN F1-Score | FCN Precision | FCN Recall | FCN F1-Score | GCN Precision | GCN Recall | GCN F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| BB | 0.87 | 0.88 | 0.87 | 0.93 | 0.93 | 0.93 | 0.95 | 0.97 | 0.96 |
| BL | 0.91 | 0.91 | 0.91 | 0.91 | 0.91 | 0.91 | 0.94 | 0.95 | 0.94 |
| TS | 0.92 | 0.92 | 0.92 | 0.93 | 0.94 | 0.93 | 0.95 | 0.96 | 0.95 |
| PT | 0.92 | 0.91 | 0.91 | 0.92 | 0.93 | 0.92 | 0.95 | 0.95 | 0.95 |
| DS | 0.89 | 0.90 | 0.89 | 0.89 | 0.90 | 0.89 | 0.93 | 0.94 | 0.93 |
| HR | 0.88 | 0.89 | 0.88 | 0.88 | 0.89 | 0.88 | 0.93 | 0.93 | 0.93 |
| JV | 0.89 | 0.89 | 0.89 | 0.91 | 0.91 | 0.91 | 0.92 | 0.94 | 0.93 |
| SP | 0.87 | 0.88 | 0.87 | 0.87 | 0.88 | 0.87 | 0.92 | 0.92 | 0.92 |
| SB | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.91 | 0.92 | 0.91 |
| CJ | 0.88 | 0.88 | 0.88 | 0.89 | 0.89 | 0.89 | 0.92 | 0.93 | 0.92 |
| Mean | 0.89 | 0.89 | 0.89 | 0.90 | 0.91 | 0.90 | 0.93 | 0.94 | 0.94 |

| HOI Class | CNN Precision | CNN Recall | CNN F1-Score | FCN Precision | FCN Recall | FCN F1-Score | GCN Precision | GCN Recall | GCN F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| DR | 0.88 | 0.87 | 0.87 | 0.91 | 0.91 | 0.91 | 0.92 | 0.93 | 0.92 |
| ET | 0.88 | 0.88 | 0.88 | 0.91 | 0.91 | 0.91 | 0.92 | 0.93 | 0.92 |
| RB | 0.85 | 0.86 | 0.85 | 0.88 | 0.89 | 0.88 | 0.91 | 0.92 | 0.91 |
| WP | 0.87 | 0.88 | 0.87 | 0.89 | 0.91 | 0.90 | 0.93 | 0.93 | 0.93 |
| UL | 0.89 | 0.89 | 0.89 | 0.89 | 0.91 | 0.90 | 0.93 | 0.93 | 0.93 |
| PG | 0.85 | 0.86 | 0.85 | 0.87 | 0.88 | 0.87 | 0.92 | 0.92 | 0.92 |
| CC | 0.84 | 0.85 | 0.84 | 0.89 | 0.89 | 0.89 | 0.90 | 0.91 | 0.90 |
| UV | 0.91 | 0.91 | 0.91 | 0.91 | 0.93 | 0.92 | 0.94 | 0.96 | 0.95 |
| PR | 0.85 | 0.85 | 0.85 | 0.89 | 0.91 | 0.90 | 0.92 | 0.93 | 0.92 |
| LS | 0.91 | 0.91 | 0.91 | 0.92 | 0.93 | 0.92 | 0.95 | 0.96 | 0.95 |
| Mean | 0.87 | 0.88 | 0.87 | 0.90 | 0.91 | 0.90 | 0.92 | 0.93 | 0.93 |

| HOI Class | CNN Precision | CNN Recall | CNN F1-Score | FCN Precision | FCN Recall | FCN F1-Score | GCN Precision | GCN Recall | GCN F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| RG | 0.87 | 0.89 | 0.88 | 0.91 | 0.91 | 0.91 | 0.91 | 0.92 | 0.91 |
| SF | 0.87 | 0.88 | 0.87 | 0.87 | 0.88 | 0.87 | 0.88 | 0.90 | 0.89 |
| TC | 0.83 | 0.83 | 0.83 | 0.85 | 0.85 | 0.85 | 0.87 | 0.87 | 0.87 |
| WM | 0.82 | 0.83 | 0.82 | 0.85 | 0.86 | 0.85 | 0.91 | 0.91 | 0.91 |
| MW | 0.86 | 0.85 | 0.85 | 0.88 | 0.89 | 0.88 | 0.91 | 0.92 | 0.91 |
| DW | 0.87 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.88 | 0.88 | 0.88 |
| LP | 0.82 | 0.82 | 0.82 | 0.85 | 0.87 | 0.86 | 0.89 | 0.89 | 0.89 |
| OV | 0.84 | 0.84 | 0.84 | 0.88 | 0.89 | 0.88 | 0.88 | 0.88 | 0.88 |
| Mean | 0.85 | 0.85 | 0.85 | 0.87 | 0.88 | 0.87 | 0.89 | 0.90 | 0.89 |
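The classifier comparison tables report precision, recall, and F1-score per class. The F1-score is the harmonic mean of precision and recall, $F_1 = 2PR / (P + R)$; the short helper below (the function name is an illustrative choice) makes that relation explicit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)
```

For instance, a precision of 0.95 and a recall of 0.97, as listed for the GCN on class BB, give an F1-score that rounds to the reported 0.96.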

| Method | Olympic Sports (mean accuracy %) | MSR Daily Activity 3D (mean accuracy %) | D3D-HOI (mean accuracy %) |
|---|---|---|---|
| Metric learning autoencoder [57] | - | 67.1 | - |
| Deep moving poselets [58] | - | 84.4 | - |
| CNN [56] | - | - | 85.1 |
| DCS motion descriptors [59] | 85.2 | - | - |
| Actionlet ensemble [48] | - | 86.0 | - |
| CNN-LSTM [60] | - | - | 87.2 |
| Improved trajectories [45] | 90.2 | - | - |
| Combined deep architectures [61] | - | 91.3 | - |
| Spatial feature fusion [62] | - | 92.9 | - |
| Proposed | 94.1 | 93.2 | 89.6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ghadi, Y.Y.; Waheed, M.; Gochoo, M.; Alsuhibany, S.A.; Chelloug, S.A.; Jalal, A.; Park, J. A Graph-Based Approach to Recognizing Complex Human Object Interactions in Sequential Data. Appl. Sci. 2022, 12, 5196. https://doi.org/10.3390/app12105196
