1. Introduction

Emotion plays a significant role in daily life and in intelligent dialogue systems. Emotion recognition in conversation (ERC), an important task in Natural Language Processing, has attracted increasing attention in recent years. The goal of ERC is to predict the emotion of each utterance in a conversation. ERC is particularly challenging because a model must account for the sequential information of the conversation as well as self-speaker and inter-speaker dependencies [1].

In the literature, many neural network models have been applied to model dialogue and its dependencies, such as recurrent neural networks [2,3], graph-based convolutional neural networks [4,5], and attention mechanisms [6,7,8]. However, some problems remain. A vital issue for ERC is the lack of labeled data, which is hard to collect and annotate. The emotion of the same statement is determined by its context and can therefore differ across dialogue scenarios [9], and it is difficult for annotators to work out this contextual information. As a result, relatively few datasets are available, and they are small: IEMOCAP [10], one of the more commonly used datasets, contains only 153 dialogues (IEMOCAP and MELD are two common datasets for ERC, which are described in detail in Section 4.1). This makes it challenging to carry out the supervised training required by large-scale neural networks.

In addition, some datasets contain only two participants in each conversation, while others have multiple participants. Figure 1 shows a conversation from MELD [11], which comprises five participants. The IEMOCAP dataset has five sessions, and the last session is used as test data, which means that the speakers in the training data differ from those in the test data. These factors make it more challenging to model speaker and emotion dependencies.

Cross-domain sentiment classification, which aims to transfer knowledge from the source domain to the target domain, is one of the effective ways to alleviate the lack of datasets in the target domain. Many excellent results have been achieved in cross-domain sentiment classification [12,13,14]. Cross-domain sentiment classification generally requires the source domain data to be labeled. Unlike sentiment classification, ERC lacks large-scale datasets and aims to identify the emotion of each utterance in a conversation, rather than of a single sentence. A speaker’s utterance is affected by internal and external factors, such as topic, the speaker’s personality, argumentation logic, viewpoint and intent [9]. In turn, the utterance reflects these factors to a certain extent, and modeling them may improve conversation understanding, including emotion recognition [9]. For these reasons, to address the lack of labeled data, Hazarika et al. [15] pre-trained a hierarchical generative model on whole conversations and transferred the knowledge to the target domain.

In summary, the generation task can be used to assist the ERC task, since the dialogue generation task and ERC have some similarities. However, the spatial distribution of feature vectors in the source and target domains is inconsistent after introducing external generation datasets.

Inspired by cross-domain sentiment classification, and to alleviate the inconsistency between the feature spaces of the source domain dataset and the target domain dataset, we propose a Domain Adversarial Network for Cross-Domain Emotion Recognition in Conversation (DAN-CDERC) model. Instead of directly modeling historical information and speaker information, DAN-CDERC transfers knowledge from a domain adversarial model to an emotion recognition model. The domain adversarial model consists of the encoders, a generator and a domain discriminator. The encoders learn sequence knowledge from the large generative dataset. The generator generates an utterance in the source domain. The discriminator performs domain adaptation by discriminating the domain, which plays an essential role in reducing the inconsistency between domains. Since the generative conversational task and the ERC task both need to model the dialogue context, DAN-CDERC can use the dialogue sequence knowledge from the large-scale dialogue generation dataset to assist ERC in modeling the dialogue context. Thanks to the domain adversarial network, DAN-CDERC mitigates the dissimilarity between the domains and their vector spaces during transfer. In this paper, we aim to achieve the same effect as models that explicitly model speakers, without modeling speakers explicitly.

For sentence-level classification, the domain discriminator is used to discriminate sentences. Our discriminator instead decides, for each utterance in the conversation, whether it belongs to the source domain or the target domain, rather than classifying the whole conversation. We believe this is important for two reasons. On the one hand, our model is relatively simple, with only two encoder layers, and it is challenging to represent a whole conversation effectively with a single vector. On the other hand, using sequential utterances is more conducive to learning and transferring sequence knowledge.

In summary, our contributions are as follows:

To alleviate the problem of the small scale of ERC datasets, we propose a Domain Adversarial Network for Cross-Domain Emotion Recognition in Conversation, which not only learns knowledge from large-scale generative conversational datasets, but also utilizes adversarial networks to reduce the difference between the source and target domains;

We use two large-scale generative conversational datasets and three emotion recognition datasets to verify model performance. The empirical studies illustrate the effectiveness of the proposed model, even without modeling information dependencies such as speakers.

The rest of the paper is organized as follows: Section 2 discusses related work; Section 3 provides details of our model; Section 4 shows and interprets the experimental results; Section 5 analyses and discusses the experimental results; and finally, Section 6 concludes the paper.

2. Related Work

Inspired by sentence-level cross-domain sentiment analysis, this paper utilizes large-scale dialogue generative datasets, adversarial networks and transfer learning for Emotion Recognition in Conversation. The related work includes dialogue generation, Emotion Recognition in Conversation, Cross-Domain Sentiment Analysis, Adversarial Network and Transfer Learning.

2.1. Dialogue Generation

The Hierarchical Recurrent encoder–decoder (HRED) [16] is a classic generative hierarchical neural network. It has three key components: the utterance encoder, the context encoder and the decoder. The latent variable hierarchical recurrent encoder–decoder (VHRED) [17] extended HRED by adding a latent variable at the decoder, which is trained by maximizing a variational lower bound on the log-likelihood. The Variational Hierarchical Conversation RNN (VHCR) [18] added a global conversational latent variable alongside local utterance latent variables to build a hierarchical latent structure, together with a new regularization technique called utterance drop.

Moreover, ERC is a vital step towards endowing dialogue systems with emotional perception, and several researchers have studied how to achieve this. Zhou et al. [19] proposed three mechanisms to make responses more emotional: embedding emotion categories, capturing the change of implicit internal emotion states, and using explicit emotion expressions from an external emotion vocabulary. Deeksha et al. [20] employed a multi-task learning framework to predict emotion labels, and used the emotion labels to guide the modeling of empathetic conversations. Li et al. [21] proposed a multi-resolution interactive empathetic dialogue model combining coarse-grained dialogue-level and fine-grained token-level emotions, which contains an interactive adversarial learning framework to judge emotional feedback. Xie et al. [22] combined 32 emotions and eight additional emotion regulation intentions to complete the task of empathetic response generation. Ide et al. [23] made the generated responses more emotional by adding emotion recognition tasks.

2.2. Emotion Recognition in Conversation

Unlike document-level sentiment and emotion classification, sentiment and emotion classification in conversation requires neural network models not only to learn representations of words and documents, but also to capture self- and inter-speaker dependencies. Most models for ERC are hierarchical network structures, including at least one utterance encoder layer to encode utterances and one context encoder layer to encode contextual content.

A dialogue, generally composed of multi-turn utterances, unfolds in a natural sequence, which makes it suitable for modeling with an RNN. RNNs have therefore become a fundamental component of emotion detection in conversation. Poria et al. [2] employed an LSTM-based model to capture dependencies and relations among the utterances. Majumder et al. [3] used three GRUs to model the speaker, the context and the emotion of the preceding utterances. In addition, the attention mechanism is also an important component. Wei et al. [24] employed GRUs and hierarchical attention to model the self- and inter-speaker influences of utterances. Jiang et al. [25] proposed a hierarchical model and introduced a convolutional self-attention network as the utterance encoder layer.

With the rise of graph neural network models, and because of the context propagation problem in current RNN-based methods, some works have replaced RNN-based networks with graph networks. Ghosal et al. [4] proposed the Dialogue Graph Convolutional Network (DialogueGCN) to model self- and inter-speaker dependencies. Zhang et al. [5] addressed context-sensitive and speaker-sensitive dependencies in multi-speaker conversation using a conversational graph-based convolutional neural network. Sheng et al. [26] introduced a two-stage Summarization and Aggregation Graph Inference Network, which models inference for topic-related emotional phrases and local dependency reasoning over neighboring utterances. Zhang et al. [27] proposed a dual-level graph attention mechanism that augments the semantic information of the utterance, together with multi-task learning to alleviate the confusion between the few non-neutral utterances and the much more numerous neutral ones. Ma et al. [28] used a multi-view network to explore the emotion representation of a query from word-level and utterance-level views. TODKAT [29] used a topic-augmented language model (LM) with an additional layer specialized for topic detection, and combined the LM with commonsense statements derived from the knowledge base ATOMIC. SKAIG [30] used commonsense knowledge to enrich the edges of the graph with knowledge representations from the COMET model.

2.3. Cross-Domain Sentiment Analysis

In cross-domain sentiment analysis, a classifier is trained on a source domain and applied to a target domain. Because emotions are expressed differently across domains, many pivot-based methods [14,31,32] have been proposed to address domain adaptation by learning non-pivot words and pivot words. The selection of non-pivot words and pivot words directly affects performance on the target domain. Another effective approach is adversarial training [13,33,34,35], which obtains domain-invariant features by deceiving the discriminator.

2.4. Adversarial Network and Transfer Learning

Multi-source transfer learning can also lay a foundation for modeling various aspects of different emotions (e.g., mood, anxiety), where only a limited number of datasets with a small number of data samples are available.

Liang et al. [36] treated emotion recognition and culture recognition as two adversarial tasks for cross-culture emotion recognition to address the problem of generalization across different cultures. Lian et al. [37] and Li et al. [38] treated speaker characteristics and emotion recognition as two adversarial tasks to reduce the speaker’s influence on emotion recognition. Parthasarathy et al. [39] proposed an Adversarial Autoencoder (AAE) to perform variational inference over the latent factors, including age, gender, emotional state, and content of speech.

Furthermore, some researchers utilize transfer learning for emotion recognition. Gideon et al. [40] investigated how emotion recognition can benefit from representations originally learned for different paralinguistic tasks and different domains. Felbo et al. [41] used 1246 million tweets to pre-train a model on emoji recognition. Li et al. [42] utilized a low-level transformer as the utterance encoder layer and a high-level transformer as the context encoder layer. EmotionX-IDEA [43] and PT-Code [44] learn emotional knowledge from BERT. Hazarika et al. [15] pre-trained a hierarchical generative model on whole conversations and transferred it to the target domain.

Our work addresses the scarcity of ERC datasets. To this end, we use a large amount of publicly available generative conversational data to model conversation, and introduce a domain discrimination task to enhance domain adaptability.

3. Domain Adversarial Network for Emotion Recognition in Conversation

In this paper, there are two domains: a source domain $D_s$ and a target domain $D_t$. Because the source domain dataset is used to train the generative task, it has no emotion labels. For the source domain, given a dialogue $d^s = \{u^s_1, u^s_2, \ldots, u^s_m\}$ containing $m$ utterances, where $m$ is the length of the dialogue, we can leverage $\{u^s_1, u^s_2, \ldots, u^s_{m-1}\}$ and $\{u^s_2, u^s_3, \ldots, u^s_m\}$ to train a generative conversational task. For the target domain, we are given a dialogue $d^t = \{u^t_1, u^t_2, \ldots, u^t_n\}$ containing $n$ utterances and $n$ labels $Y = \{y_1, y_2, \ldots, y_n\}$, where $n$ is the length of the dialogue. Our goal is to predict the emotion labels of the utterances in $d^t$.
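As an illustration of how an unlabeled source dialogue can supervise a generative task, the following minimal sketch pairs each dialogue prefix with the utterance that follows it. It assumes the usual next-utterance setup of hierarchical encoder-decoder models; the function name `make_response_pairs` and the example dialogue are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def make_response_pairs(dialogue: List[str]) -> List[Tuple[List[str], str]]:
    """Pair each dialogue prefix with the utterance that follows it.

    Assumption: the generative task predicts utterance i+1 from
    utterances 1..i, so no emotion labels are needed.
    """
    pairs = []
    for i in range(1, len(dialogue)):
        context = dialogue[:i]   # utterances observed so far
        response = dialogue[i]   # next utterance, used as the generation target
        pairs.append((context, response))
    return pairs


# Hypothetical three-utterance source dialogue.
dialogue = ["Hi, how are you?", "Fine, thanks.", "Glad to hear it."]
pairs = make_response_pairs(dialogue)
```

A dialogue of length m yields m − 1 training pairs under this assumption.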

This study proposes a Domain Adversarial Network for Cross-Domain Emotion Recognition in Conversation (DAN-CDERC) model to address emotion recognition with generative conversation. DAN-CDERC contains two key components: the Domain Adversarial model and the Emotion Recognition model. Figure 2 shows the architecture of the Domain Adversarial model, where the input is $\{u^s_1, u^s_2, \ldots, u^s_m\}$ for the source domain and $\{u^t_1, u^t_2, \ldots, u^t_n\}$ for the target domain. The output of the generator is the generated response sequence $\{\hat{u}^s_2, \hat{u}^s_3, \ldots, \hat{u}^s_m\}$, and the output of the discriminator is the domain labels. Figure 3 shows the architecture of the Emotion Recognition model, where the input is $\{u^t_1, u^t_2, \ldots, u^t_n\}$ and the output is the emotion labels $Y = \{y_1, y_2, \ldots, y_n\}$.

The Domain Adversarial model contains the Encoder Layers, the Generator and the Discriminator. The Encoder Layers and the Generator, taken from the Hierarchical Recurrent encoder–decoder (HRED) [16], are used to learn sequence knowledge from the large generative dataset. The Discriminator performs domain adaptation by discriminating the domain, which plays an essential role in reducing the inconsistency between domains. For the emotion recognition model, we leverage BERT [45] to encode utterances and an LSTM (the context encoder) to encode context; the context encoder takes its weights from the generative conversational model. Training proceeds in two stages. First, we leverage the source dialogue $d^s = \{u^s_1, u^s_2, \ldots, u^s_m\}$ and its responses $\{u^s_2, u^s_3, \ldots, u^s_m\}$ to train a generative conversational model, and leverage $d^s$ and $d^t$ to train the domain discrimination task. Then, a part of the parameters of the generative model is transferred to the emotion recognition model (the target task). We leverage the target dialogue $d^t = \{u^t_1, u^t_2, \ldots, u^t_n\}$ and its labels $Y = \{y_1, y_2, \ldots, y_n\}$ to train the emotion recognition model.
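The parameter transfer step can be pictured with a small sketch. It assumes that model parameters are stored in a dictionary keyed by name and that the context encoder's entries share the prefix `context_encoder.`; both the storage layout and the names are hypothetical and only illustrate the idea of copying part of the generative model into the emotion recognition model.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def transfer_context_encoder(source: Params, target: Params,
                             prefix: str = "context_encoder.") -> Params:
    """Return a copy of `target` whose context-encoder entries come from `source`.

    Only names that start with `prefix` and exist in both models are copied;
    the decoder and the classifier are left untouched.
    """
    merged = {name: list(values) for name, values in target.items()}
    for name, values in source.items():
        if name.startswith(prefix) and name in merged:
            merged[name] = list(values)  # copy, so fine-tuning leaves the source intact
    return merged


# Hypothetical toy parameter sets.
generative_model = {
    "context_encoder.weight": [0.5, -0.2],
    "decoder.weight": [0.9, 0.1],
}
emotion_model = {
    "context_encoder.weight": [0.0, 0.0],
    "classifier.weight": [0.3, 0.3],
}
emotion_model = transfer_context_encoder(generative_model, emotion_model)
```

After the call, the emotion recognition model starts from the context encoder learned on the generative task, while its classifier keeps its own initialization.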

3.1. Domain Adversarial Model

3.1.1. Encoder Layers

The encoder layers include an utterance encoder and a context encoder. A bidirectional LSTM is used as the utterance encoder, and a unidirectional LSTM is used as the context encoder. Given a dialogue $d = \{u_1, u_2, \ldots, u_m\}$, the utterance encoder uses Equation (1) to represent each utterance $u_i$ as a high-dimensional vector $h_i$. Then, the context encoder uses Equation (2) to learn the sequence knowledge of the context and represent $d$ as $\{c_1, c_2, \ldots, c_m\}$.

$$h_i = \mathrm{BiLSTM}(u_i) \tag{1}$$

$$c_i = \mathrm{LSTM}(h_i, c_{i-1}) \tag{2}$$

3.1.2. Conversational Generator

The generator decodes and generates one response at a time. At the decoding stage, the generator produces a new utterance $\hat{u}$ by computing a distribution over the vocabulary $V$ for each target token $r_t$, projecting the decoder output $s_t$ through a linear layer with weights $W_o$ and bias $b_o$:

$$p(r_t \mid r_{<t}, x) = \mathrm{softmax}(W_o s_t + b_o)$$
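The projection from decoder output to a vocabulary distribution can be sketched in a few lines of plain Python. This is an illustrative sketch only: the names `vocab_distribution`, `W_o` and `b_o`, the two-dimensional decoder state and the three-word vocabulary are all assumptions chosen for readability.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def vocab_distribution(state: List[float],
                       weights: List[List[float]],
                       bias: List[float]) -> List[float]:
    """Project a decoder state through a linear layer, then normalize.

    `weights` has one row per vocabulary entry (hypothetical layout).
    """
    logits = [sum(w * s for w, s in zip(row, state)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Hypothetical decoder state and a three-word vocabulary.
decoder_state = [1.0, 0.0]
W_o = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]
b_o = [0.0, 0.0, 0.0]
probs = vocab_distribution(decoder_state, W_o, b_o)
```

Here the logits are 2, 0 and −1, so the first vocabulary entry receives the highest probability.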

3.1.3. Domain Discriminator

The role of the domain discriminator is to predict the domain label of each utterance $u_i$, that is, whether it comes from the target domain or the source domain. The generator and the discriminator are trained in parallel. For each $u_i$, the encoding stage of Section 3.1.1 yields a representation $c_i$. Before $c_i$ is fed to the domain classifier, it goes through the gradient reversal layer (GRL) [33].

In the forward pass the GRL leaves its input unchanged; during backpropagation, its role is to reverse the gradient. The following equations are the forward propagation and backpropagation when $c_i$ goes through the GRL, respectively:

$$\mathrm{GRL}(c_i) = c_i$$

$$\frac{\partial\, \mathrm{GRL}(c_i)}{\partial c_i} = -\lambda I$$

where $\lambda$ is the scaling coefficient of the GRL, following [33]. We denote the hidden state after the GRL as $\hat{c}_i$.
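The behaviour of a gradient reversal layer can be shown with a framework-free sketch: the forward pass is the identity, and the backward pass multiplies the incoming gradient by a negative factor. The class name `GradientReversal` and the scaling factor `lam` are illustrative assumptions; in practice this would be implemented as a custom autograd operation in a deep learning framework.

```python
from typing import List


class GradientReversal:
    """Identity in the forward pass, negated (scaled) gradient in the backward pass."""

    def __init__(self, lam: float = 1.0):
        # lam is a hypothetical scaling coefficient; lam = 1 is plain reversal.
        self.lam = lam

    def forward(self, x: List[float]) -> List[float]:
        # The hidden state passes through unchanged.
        return list(x)

    def backward(self, grad_output: List[float]) -> List[float]:
        # The gradient flowing back to the encoder is reversed and scaled.
        return [-self.lam * g for g in grad_output]


grl = GradientReversal(lam=0.5)
hidden = [0.2, -1.0, 3.0]
out = grl.forward(hidden)
grad_in = grl.backward([1.0, -2.0, 0.0])
```

Because the encoder receives the reversed gradient, it is pushed towards features that make the domain classifier's task harder, which is what encourages domain-invariant representations.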

3.2. Emotion Recognition with Transfer Learning

Given a dialogue $d = \{u_1, u_2, \ldots, u_n\}$ containing $n$ utterances, where $n$ is the length of the dialogue, our goal is to predict their labels $\{y_1, y_2, \ldots, y_n\}$. The model has two components: an utterance encoder and a context encoder.

3.2.1. Utterance Encoder

BERT is a classic pre-trained model and has achieved good results on many NLP tasks. Consequently, BERT [45] is used to encode utterances. We choose the BERT-base uncased pre-trained model as our utterance encoder. Through BERT, we obtain the representation of each utterance:

$$h_i = \mathrm{BERT}(u_i)$$

3.2.2. Context Encoder and Transfer Learning

The context encoder of the classification model is the same as the context encoder of the generative conversational model, and the parameters of the generative model's context encoder are used to initialize it. The input of the context encoder is $h_i$, and the output $c_i$ is obtained by:

$$c_i = \mathrm{LSTM}(h_i, c_{i-1};\, \theta_{ctx})$$

We transfer $\theta_{ctx}$ from the adversarial generative model to the context encoder of the classification model. Then $c_i$ is used as input to a softmax output layer:

$$\hat{y}_i = \mathrm{softmax}(W_c c_i + b_c)$$

Here, $W_c$ and $b_c$ are model parameters, and $\hat{y}_i$ is used to predict the emotion.

3.3. Model Training

3.3.1. Conversational Generator

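The goal is to maximize the probability of the output $r$ given the original input $x$. Therefore, we optimize the negative log-likelihood loss function:

$$L_{gen}(\theta) = -\sum_{(x, r) \in S} \log p(r \mid x;\, \theta)$$

where $\theta$ denotes the model parameters and $(x, r)$ is an (original utterance, new utterance) pair in the training set $S$; then:

$$p(r \mid x;\, \theta) = \prod_{t=1}^{T} p(r_t \mid r_{<t}, x;\, \theta)$$

where $p(r_t \mid r_{<t}, x;\, \theta)$ is calculated by the decoder.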

3.3.2. Domain Discriminator and Joint Learning

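We feed $c_i$ through the GRL to the domain discriminator as:

$$\hat{c}_i = \mathrm{GRL}(c_i), \qquad \hat{z}_i = \mathrm{softmax}(W_d \hat{c}_i + b_d)$$

where $W_d$ and $b_d$ are the parameters of the domain classifier and $\hat{z}_i$ is the predicted domain distribution. Our training objective is to minimize the cross-entropy loss over a set of training examples:

$$L_{dis} = -\sum_{i} \sum_{k} z_{i,k} \log \hat{z}_{i,k}$$

where $z_{i,k}$ is 1 if utterance $u_i$ belongs to domain $k$ and 0 otherwise. We jointly train the conversational generator and the domain discriminator, and the final loss is the sum of the losses of the two tasks:

$$L = L_{gen} + L_{dis}$$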
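A small numeric sketch of the joint objective: the generation loss and the domain discrimination loss are each computed as a negative log-likelihood and then added. The function names and the toy probabilities are hypothetical, and summing over examples (rather than averaging) is an assumption made for simplicity.

```python
import math
from typing import List


def nll(prob_rows: List[List[float]], gold: List[int]) -> float:
    """Summed negative log-likelihood of the gold index in each row."""
    return -sum(math.log(row[g]) for row, g in zip(prob_rows, gold))


def joint_loss(gen_probs: List[List[float]], gen_gold: List[int],
               dom_probs: List[List[float]], dom_gold: List[int]) -> float:
    """Final loss = generation loss + domain discrimination loss."""
    loss_gen = nll(gen_probs, gen_gold)   # token-level generation loss
    loss_dis = nll(dom_probs, dom_gold)   # utterance-level domain loss
    return loss_gen + loss_dis


# Hypothetical predictions: two generated tokens and one domain decision.
gen_probs = [[0.5, 0.5], [0.25, 0.75]]
gen_gold = [0, 1]
dom_probs = [[0.5, 0.5]]   # 0 = source domain, 1 = target domain (assumed coding)
dom_gold = [1]
total = joint_loss(gen_probs, gen_gold, dom_probs, dom_gold)
```

With a gradient reversal layer in front of the domain classifier, minimizing this single sum trains the classifier to separate the domains while pushing the encoder in the opposite direction.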

3.3.3. Emotion Recognition in Conversation

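Given a dialogue $d$ including $n$ utterances and the pre-defined emotion $y_i$ of each utterance $u_i$, our training objective is to minimize the cross-entropy loss over a set of training examples, with an $L_2$-regularization term:

$$J(\theta) = -\sum_{i=1}^{n} \sum_{k=1}^{K} y_{i,k} \log \hat{y}_{i,k} + \mu \lVert \theta \rVert_2^2$$

where $\hat{y}_{i,k}$ is the predicted probability of emotion $k$ for utterance $u_i$ (the predicted label), $y_{i,k}$ is 1 if the gold emotion of $u_i$ is $k$ and 0 otherwise, $K$ is the number of emotion classes, $\mu$ is the regularization weight, and $\theta$ is the set of model parameters.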
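The regularized objective can be made concrete with a short sketch that adds an L2 penalty to the cross-entropy over the utterances of one dialogue. The function name `erc_loss`, the flat parameter list and the regularization weight `l2_weight` are assumptions for illustration; the paper's actual hyperparameters are not restated here.

```python
import math
from typing import List


def erc_loss(pred_probs: List[List[float]], gold_labels: List[int],
             params: List[float], l2_weight: float) -> float:
    """Cross-entropy over the utterances of a dialogue plus an L2 penalty."""
    # With one-hot gold labels, cross-entropy reduces to -log p(gold class).
    cross_entropy = -sum(math.log(p[y]) for p, y in zip(pred_probs, gold_labels))
    # L2 penalty on a flattened view of the model parameters (hypothetical).
    l2_penalty = l2_weight * sum(w * w for w in params)
    return cross_entropy + l2_penalty


# Hypothetical dialogue with two utterances and three emotion classes.
pred_probs = [[0.5, 0.25, 0.25], [0.1, 0.8, 0.1]]
gold_labels = [0, 1]
params = [1.0, -2.0]
loss = erc_loss(pred_probs, gold_labels, params, l2_weight=0.01)
```

In this toy case the penalty term contributes 0.01 × (1 + 4) = 0.05 to the loss.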

6. Conclusions

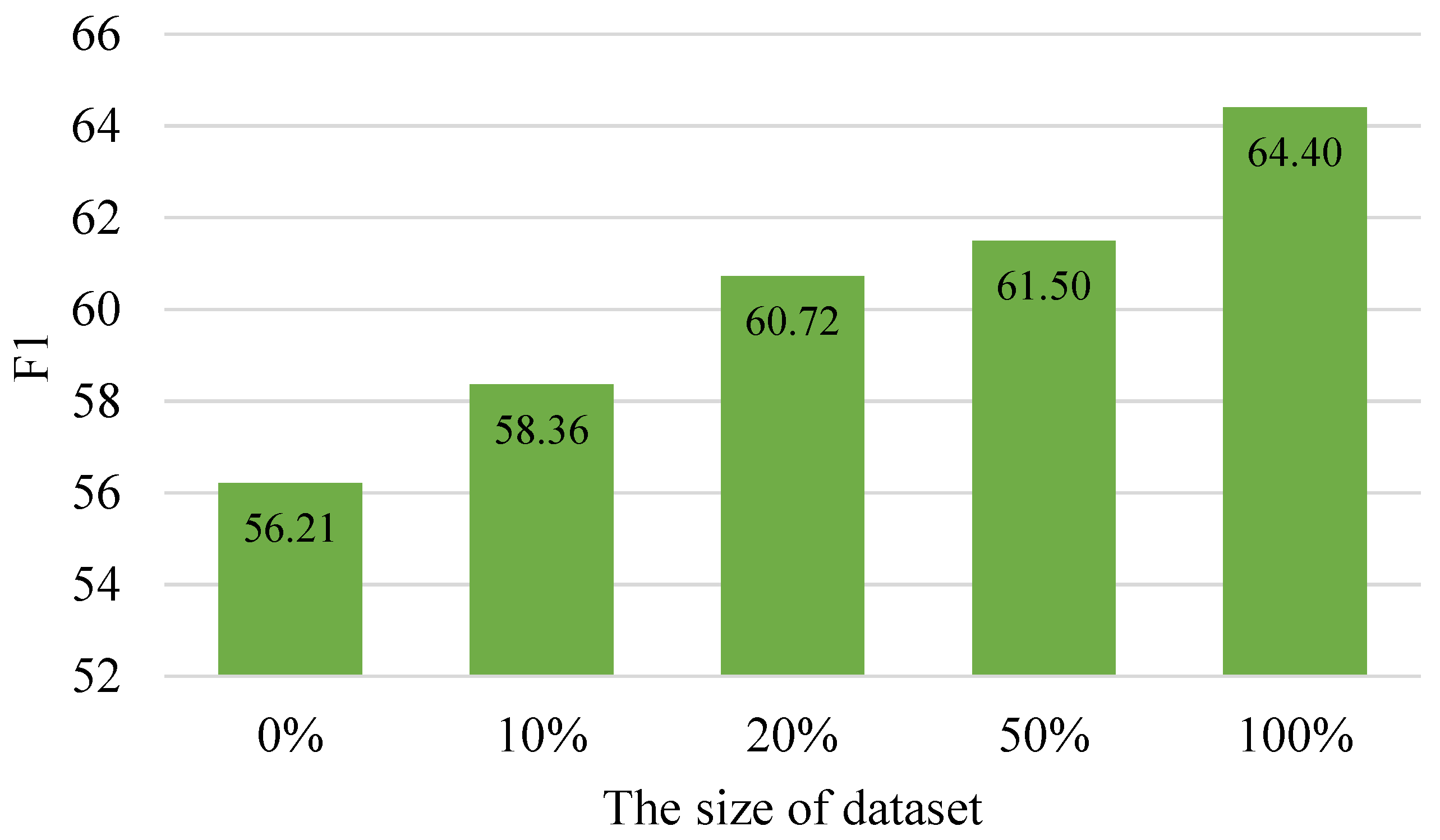

Given the lack of large-scale publicly available datasets for ERC, transfer learning is an effective way to alleviate this problem. We present a Domain Adversarial Network for Cross-Domain Emotion Recognition in Conversation (DAN-CDERC) model, consisting of two parts: the domain adversarial model and the emotion recognition model. The domain adversarial model trains the generative task on a conversational dataset and, simultaneously, trains the domain discriminator on the source domain and target domain datasets for domain adaptation. The emotion recognition model receives the transferred sequence knowledge and recognizes the emotions. When Cornell is the source dataset, DAN-CDERC achieves an F1 of 64.40%, 59.44% and 55.20% on three datasets, all outperforming the baselines, without resorting to complex modeling of inter- and self-party dependencies. In addition, the data scale of the source domain has an impact on emotion recognition, but across different source domain datasets, domain similarity is more important than data scale. Since DAN-CDERC does not model speakers’ information, it is effective not only for dyadic conversations, but also for multi-party conversations. Our method demonstrates the feasibility of using conversational datasets and domain adaptation for ERC.

Although this paper attempts to solve the problem of domain adaptation for ERC, the inconsistency in the domain space between different tasks and different datasets has not been fully considered. In addition, because this paper introduces large-scale data and uses adversarial networks, the training time of the model on the source task is long, which is also one of the shortcomings of adversarial transfer networks. In the future, applying more and faster adaptation strategies to ERC is worthy of continued and in-depth research.