Abstract

The anchor-free-based object detection method is a crucial part in an autonomous driving system because of its low computing cost. However, the under-fitting of positive samples and over-fitting of negative samples affect the detection performance. An aspect-aware anchor-free detector is proposed in this paper to address this problem. Specifically, it adds an aspect prediction head at the end of the detector, which can learn different distributions of aspect ratios between other objects. The sample definition method is improved to alleviate the problem of positive and negative sample imbalance. A loss function is designed to strengthen the learning weight of the center point of the network. The validation results show that the AP50 and AP75 of the proposed method are 97.3% and 93.4% on BCTSDB, and the average accuracies of the car, pedestrian, and cyclist are 92.7%, 77.4%, and 78.2% on KITTI, respectively. The comparison results demonstrate that the proposed algorithm is better than existing anchor-free methods.

1. Introduction

Vision-based object detection in traffic scenes plays a crucial role in an autonomous driving system. With the rapid development of deep learning in recent years, the performance of deep learning-based object detection has improved significantly. Two-dimensional object detection in an autonomous driving system can automatically recognize and locate the spatial position and category of the traffic object by learning the features of the traffic object and perceiving the traffic scenario to ensure the safety of the vehicle. It can be divided into anchor-based and anchor-free detection with the development of the convolutional neural network (CNN). In anchor-based detection, a set of preset size anchors are placed, and the positions and sizes of the anchors are regressed based on the ground truth. By contrast, anchor-free detectors directly detect objects without the anchors being set manually, which has a performance advantage over anchor-based detectors.

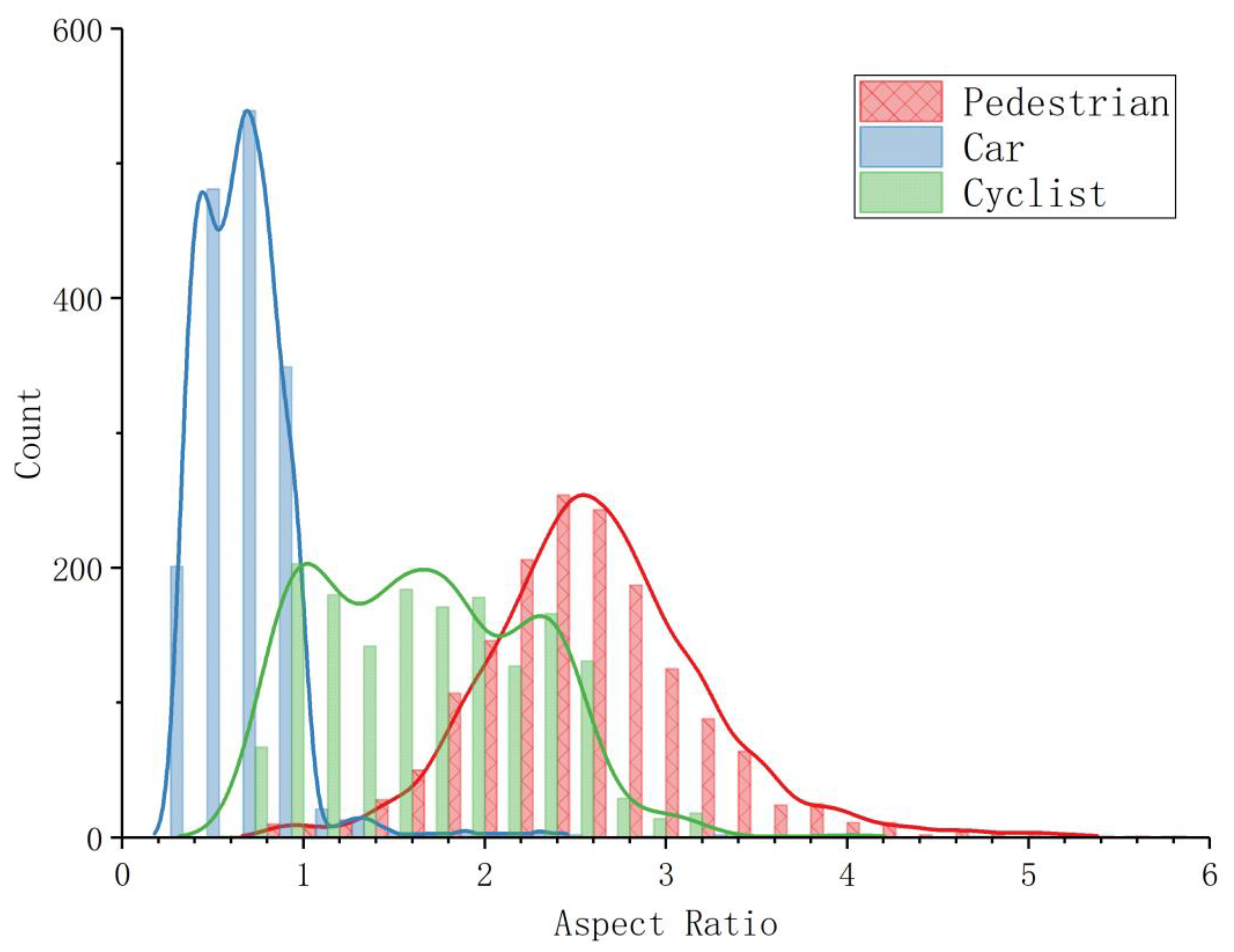

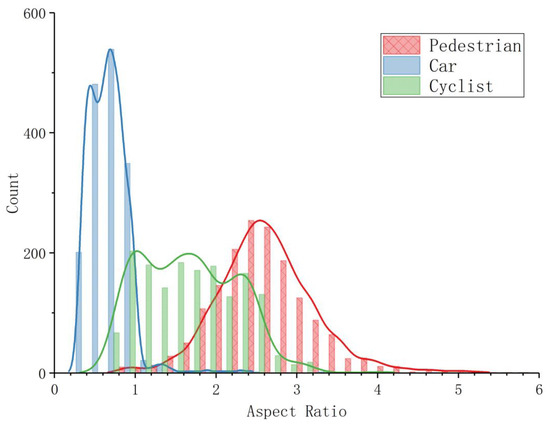

However, single-stage (dense) detectors that include anchor-based and anchor-free detectors still have many problems that affect performance, such as the definition of positive and negative samples, and the design of the loss function, which lead to sample imbalance problems. Additionally, the authors found that, in autonomous driving, the aspect ratios of different objects have different distributions, as shown in Figure 1. The inaccurate localization of objects can lead to the incorrect estimation of distance by the monocular ranging module, thus affecting the safe operation of autonomous driving. Anchor-based detectors (Faster R-CNN [1], and RetinaNet [2]) regress the object size using a large number of preset anchor boxes to make the network robust to aspect ratios. This mechanism has some drawbacks: (1) the anchor-based method performance is highly dependent on the selection of the number, size, and aspect ratio of the anchor. Anchor-based detectors need to fine-tune these hyperparameters. (2) In anchor-based detection, a set of anchor boxes needs to be densely placed. Most anchors are redundant and have little effect on model training. (3) Different detection tasks have different detection objects with large scale and shape variations, corresponding to the distribution of different anchor aspect ratios. It reduces the generalization ability of anchor-based detection method, for different anchor boxes need to be designed for new detection tasks. (4) In anchor-based detection, the computational cost increases due to the calculation of the intersection-over-union (IoU). Anchor-free detectors (CornerNet [3], CenterNet [4], and FCOS [5]) directly predict objects using key point or center point regression without preset anchor boxes. This mechanism can eliminate the hyperparameters related to the anchor, achieve similar performance to anchor-based detectors with less computational cost, and achieve better generalization ability. However, in regression using key points or the center point, prior knowledge of the aspect ratio is lacking, which increases the model training time required to fit the distribution.

Figure 1.

Aspect ratio distribution of different objects in KITTI. Different objects have different aspect ratio ground truths in traffic scenes. For example, cars have wide boundary boxes, and pedestrians have thin boundary boxes, relatively.

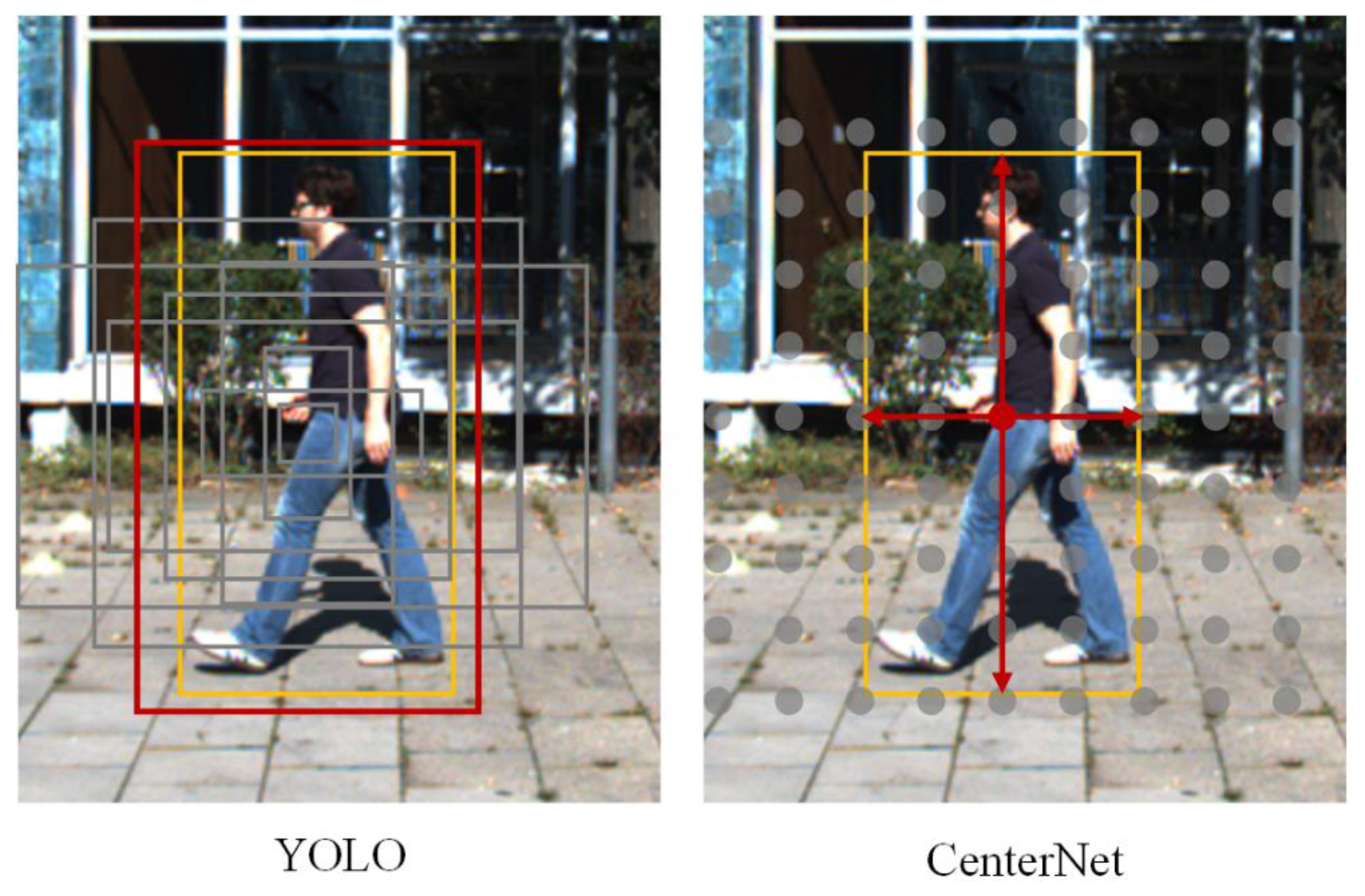

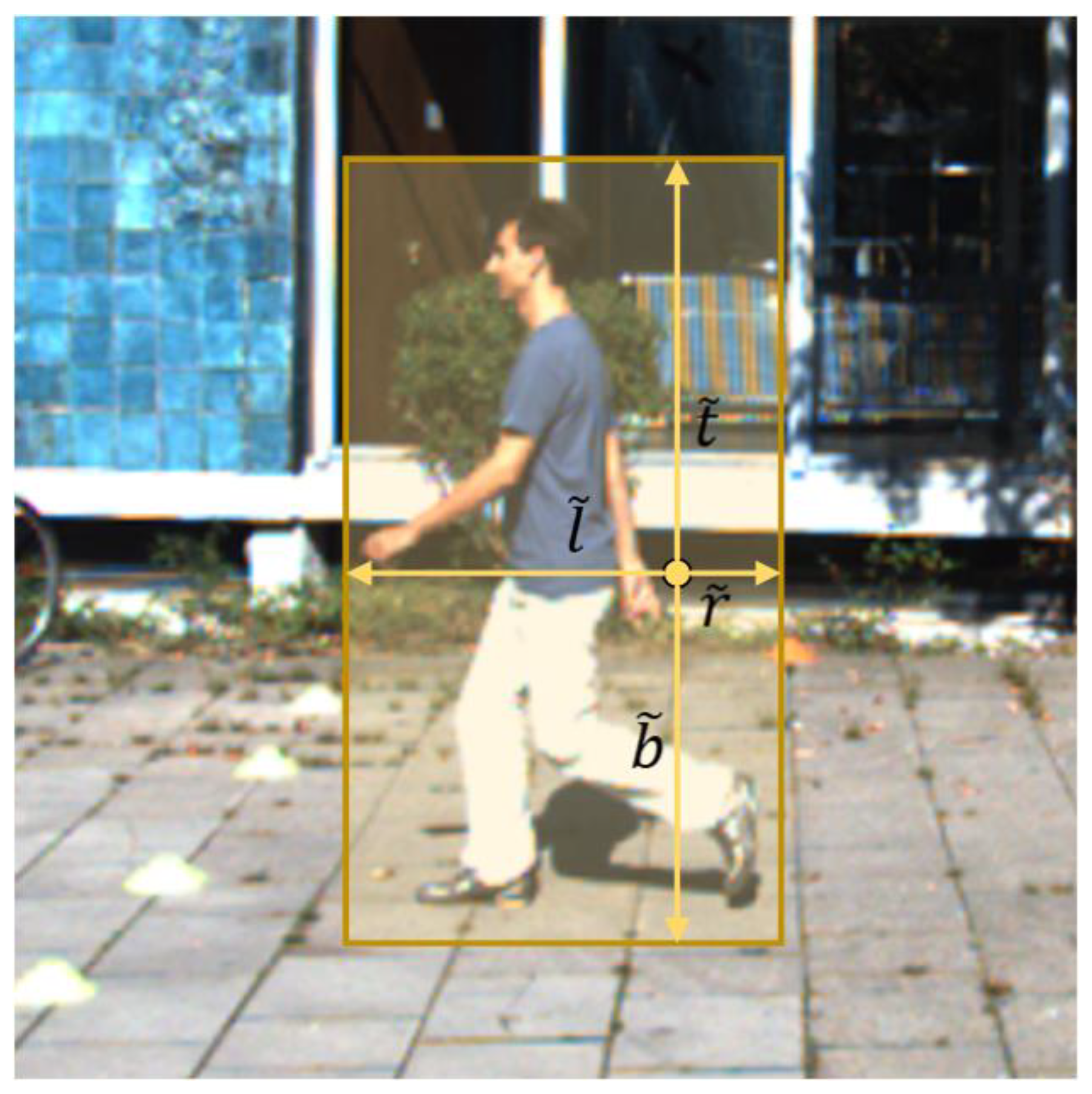

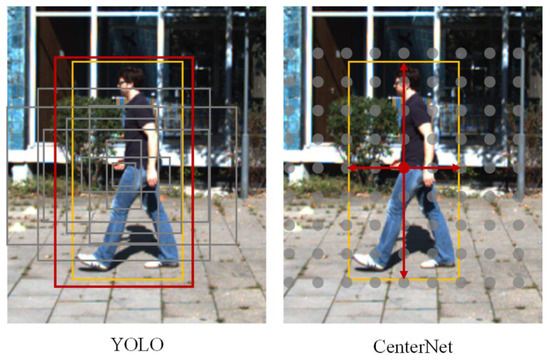

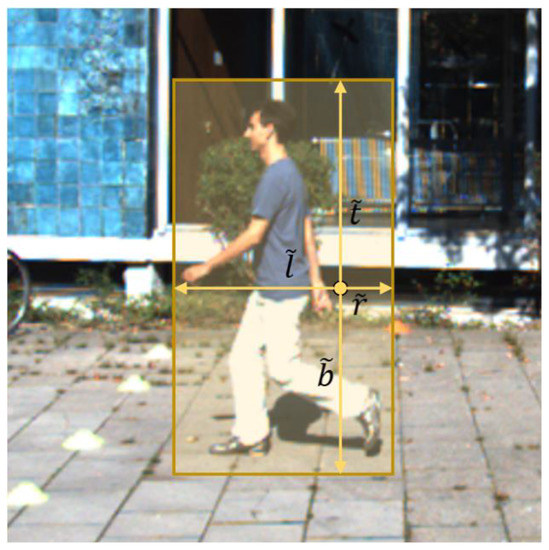

Additionally, ATSS [6] indicated that the performance gap between anchor-based and anchor-free detectors is mainly affected by the definition of positive and negative samples. Inappropriate sample definition and sampling methods aggravate the imbalance of positive and negative samples. In Figure 2, the yellow box is the ground truth, the red box is the positive sample, and the remaining gray boxes are negative samples. Each point on the feature map generates multiple anchor boxes in anchor-based methods, such as YOLO v3 [7]. YOLO v3 only uses the anchor box with the maximum IoU value for the ground truth as the positive sample, and the remainder of the anchor boxes as negative samples. By contrast, anchor-free methods, such as CenterNet, only use the center point of the object as the positive sample and the remaining points as negative samples. The under-fitting of positive samples and over-fitting of negative samples make the model unable to thoroughly learn the features of objects, which affects the detection performance.

Figure 2.

Sample imbalance problem for different detectors. Anchor-based detectors use many preset anchors to regress one object bounding box, and most of the anchors are negative samples. By contrast, anchor-free detectors use key points to regress the bounding box, and most of the key points are negative samples. Positive samples make up a small proportion of the total sample size.

In this paper, an aspect-aware anchor-free detector called AspectNet is proposed to solve the above problems. It supervises the object width and height by adding an aspect prediction head at the end of the detector, which can learn different distributions of aspect ratios between different objects. Simultaneously, a new sample definition method and a loss function are proposed to alleviate the problem of sample imbalance.

The contributions of this paper are as follows:

- (1)

- A novel aspect-aware anchor-free detector is proposed to fit the distribution of the aspect ratios of different objects to alleviate the influence of variable scales on model robustness.

- (2)

- The sample definition method is improved to alleviate the problem of positive and negative sample imbalance.

- (3)

- A loss function is designed to strengthen the learning weight of the center point of the network, which solves the imbalance problem.

The remainder of this paper is structured as follows: In Section 2, recent advances in object detection methods are discussed. Section 3 contains information, including specific details and improvement methods. In Section 4, the implementation of the proposed method and its comparison with existing approaches are discussed. In Section 5, the proposed method is summarized, and the future research direction is presented.

2. Related Work

Traditional object detection uses HOG [8] or DPM [9] to extract the image features, then feeds the features into a classifier, such as SVM [10]. Because of its low performance, it was replaced by deep learning convolutional networks in the deep learning era. Generally, deep CNNs can be roughly categorized into two types: anchor-based approaches and anchor-free approaches.

2.1. Anchor-Based Approaches

Anchor-based approaches can be divided into two main types of pipelines: multi-stage detection and single-stage detection. Multi-stage detection methods filter regions of interest in the image and extract the foreground area from preset dense candidates, using region proposal algorithms [11,12] in the first stage. The bounding boxes of objects are constantly regressed in the subsequent stages, such as the R-CNN series [1,11,12,13], which is time consuming. Complex multi-stage models cannot meet the requirements of real-time detection. Different from multi-stage methods that use traditional sliding windows and proposals, single-stage detection methods inherit the ideas of anchor boxes but directly detect the object and regress the bounding boxes in a single-shot manner without any region proposals, which avoids the repeated calculation of the feature map and makes the anchor boxes directly on the feature map, which speeds up the detection process dramatically, for example, YOLOv3, RetinaNet, and SSD [14].

These methods need to preset a large number of anchors manually, which increases the calculation in the network, and the excessive number of samples aggravates the imbalance of positive and negative samples, as described above. Additionally, the misaligned problem between anchors and features affects the network’s performance. In previous studies, researchers often used IoU to determine the samples. For example, if the IoU between the anchor boxes and ground truth boxes of a sample is in [0.5, 1], then the sample is positive, whereas if the IoU is in [0, 0.5), then the sample is negative. The anchor with the maximum ground truth IoU is selected for matching. These hyperparameters need to be manually fine-tuned for different detection tasks, and detection tasks are susceptible to hyperparameter selection. The matching definition of positive and negative samples has a significant impact on the model’s performance.

2.2. Anchor-Free Approaches

Anchor-free approaches can directly detect objects without preset anchors and can be divided into two directions: key point-based methods and center-based methods. Most are single stage because both methods are dense detectors. Key point-based methods use multi-key point joint expression to predict objects. For example, ExtremeNet [15] uses the four extreme points and the center point to make predictions. RepPoints [16] adaptively learns a set of points to represent boxes. FoveaBox [17] uses the center point, and upper left and lower right corners. PLN uses the center point and four corner points. CornerNet uses a pair of corner points to regress the bounding anchor based on key point-based methods. However, it requires an extra distance metric to store the pair of corner points that belong to the same object, which requires complicated post-processing. Compared with key point-based methods, structure center-based methods are more concise and can achieve better performance [18]. Therefore, the proposed model AspectNet uses the center-based approach to detect objects. Center-based methods use the center point for fitting to predict objects. YOLOv1 [19] uses points near the object’s center to regress the bounding box instead of using anchor boxes. However, YOLOv1 has the characteristics of high precision and low recall because it only uses a small number of positive samples to learn features, and was quickly replaced by the anchor-based detector YOLOv2 [20]. CenterNet models the bounding box regression problem as the problem of learning the center point and distance between the center point and the corresponding bounding box, and directly uses the center point regression method to predict objects without NMS for post-processing. The structure is simple and effective. However, CenterNet’s positive samples are only defined as the center point of the ground truth, and the remaining points are negative samples. This simple approach to defining positive and negative samples causes CenterNet to have a severe imbalance of positive and negative samples, which also causes the overfitting of regression branches. FCOS is a pixel-wise object detection algorithm based on FCN [21], which alleviates the problem of positive and negative sample imbalance by adding a centeredness branch. It uses the feature pyramid network (FPN) for multi-scale prediction. FCOS defines the points in the bounding boxes of the object as positive samples, and the remaining points are negative samples. Compared with CenterNet, FCOS has a larger number of positive samples. However, FCOS does not define the ignored area of the sample, and the focal loss makes FCOS pay more attention to the noisy points around the bounding box. Additionally, the position of the bounding box edge is used as a positive sample for which it is difficult to obtain accurate results for prediction.

The excessive attention paid to difficult negative samples makes the loss unstable and difficult to decrease, hence not robust. The proposed method alleviates this problem by redefining sample selection and redesigning the loss function. Additionally, the aspect ratio branch of the proposed method can make up for the lack of prior knowledge of the aspect ratio in the anchor-free method.

3. Proposed Method

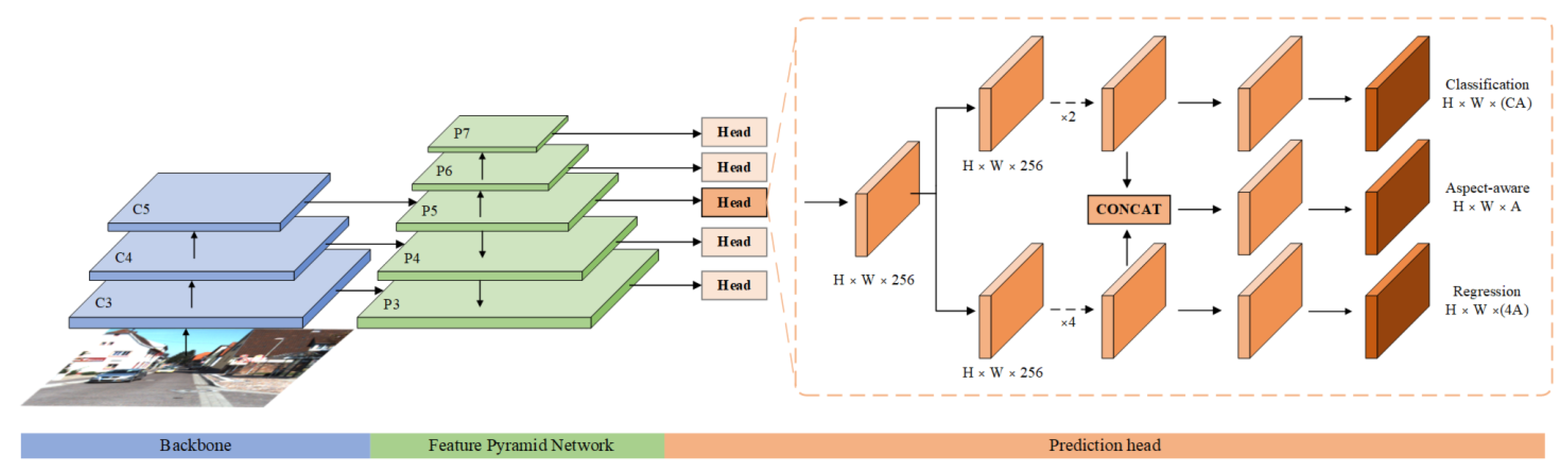

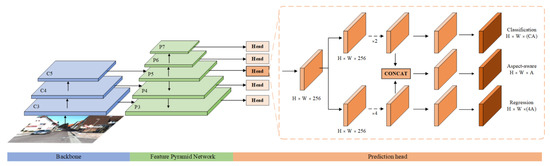

The proposed structure of AspectNet is shown in Figure 3. Different from other anchor-free models, an aspect-aware prediction head is designed and added to the end of the detector connecting the regression branch and classification branch to predict the aspect ratio of bounding boxes. The sample definition method and loss function of AspectNet are proposed to alleviate the sample imbalance problem.

Figure 3.

The overall structure of AspectNet. The supervised model learns the categories, positions, and aspect ratios of objects. The feature map of the aspect-aware head consists of the classification and regression branches.

3.1. Aspect Prediction for AspectNet

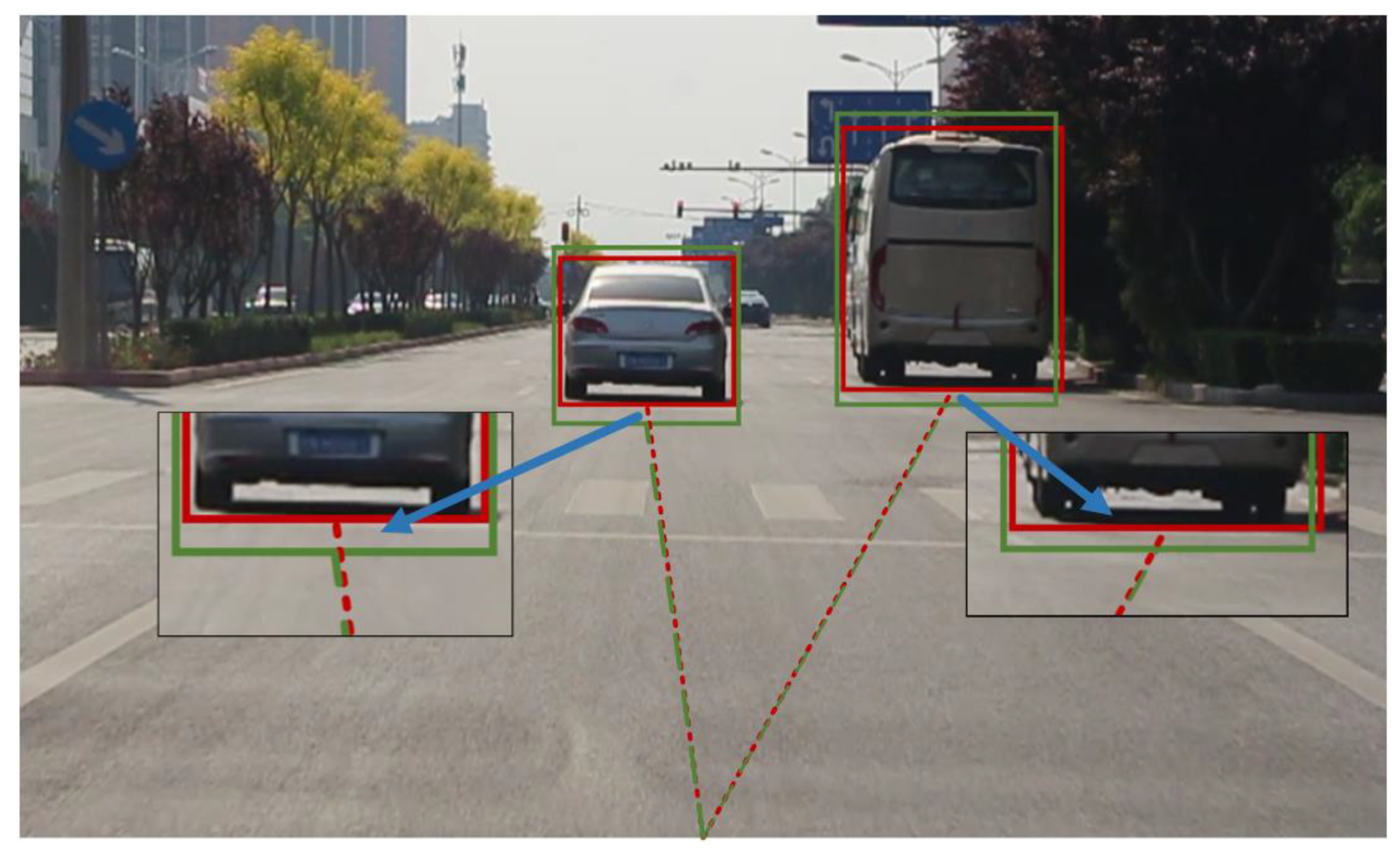

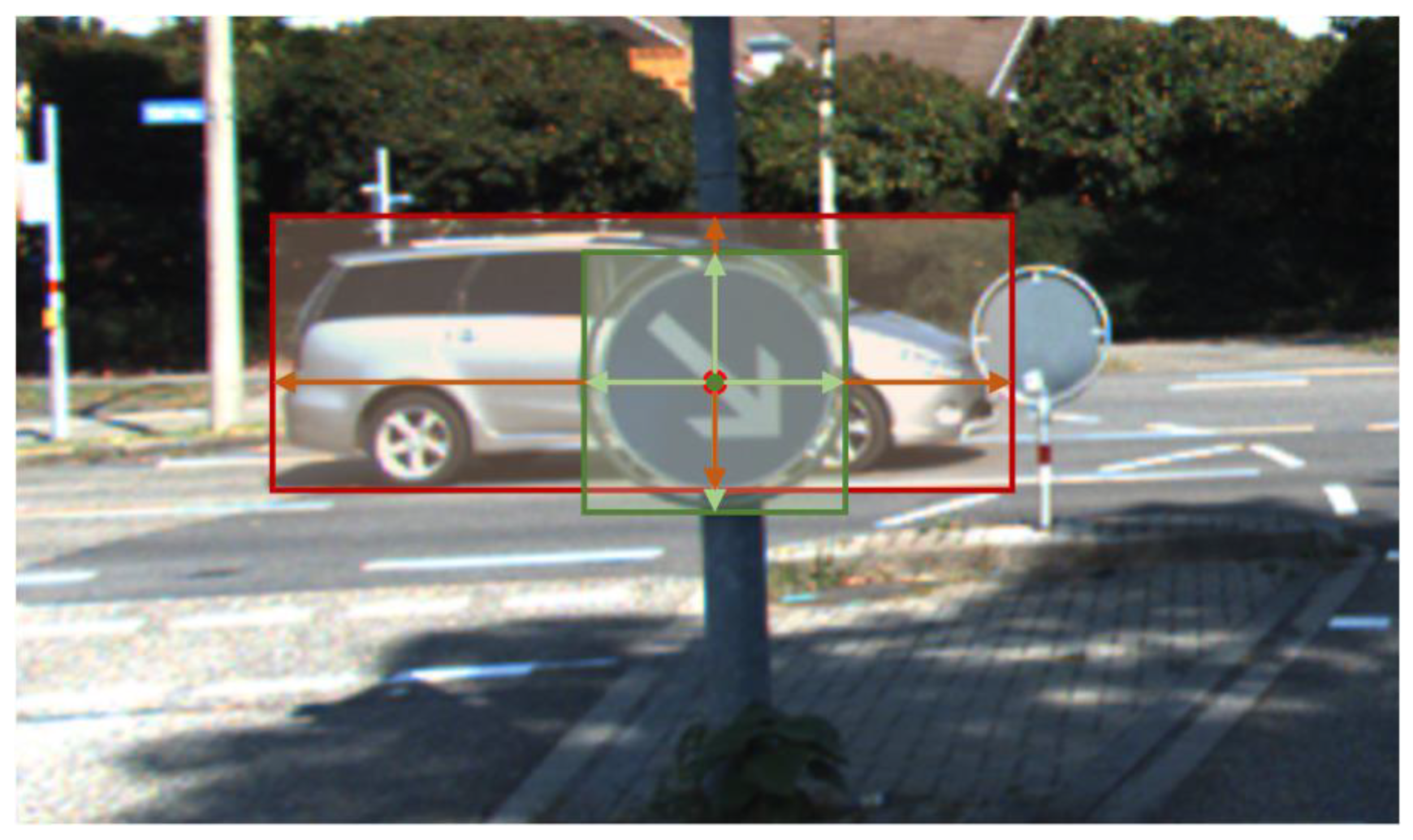

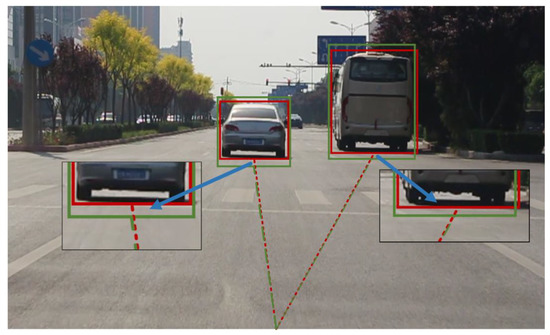

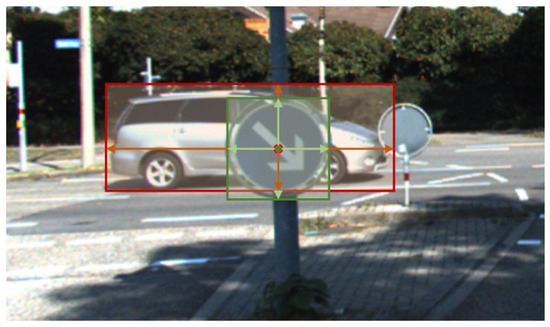

The unstable prediction of the aspect ratio of the bounding box can lead to wrong judgments by the autonomous driving decision-making module, as shown in Figure 4. Different objects have different aspect ratio distributions. For example, the expected value of the aspect ratio of the bounding box of the pedestrian is larger than that of the bounding box at the rear of the vehicle in front. The under-fitting of the model to the aspect ratio distribution of different objects makes model prediction unstable.

Figure 4.

Unstable traffic object detection. Bounding boxes with different aspect ratios can make the decision module make wrong judgments. For example, the monocular ranging algorithm obtains different results and affects the decision of automatic emergency braking.

An aspect-aware head is added at the end of the detector to predict the aspect ratio of objects that concatenates the classification and regression branches. This can solve the robustness problem mentioned above, as shown in Figure 3. The aspect-aware head improves the localization ability of the network. By contrast, learning the distribution of different aspect ratios can improve the model’s classification ability.

Specifically, by labeling the aspect ratio of objects, the model can learn the aspect ratio distribution of each specific category. Pedestrians have different weights, and the mean distribution is higher. Cyclists have a large distribution variance due to their different riding postures. Although vehicles have different viewing angles (e.g., aspect ratios of frontal and side views of vehicles are different), the overall aspect ratio distribution is lower than pedestrians and cyclists. By distinguishing the aspect ratio distribution of different categories, the model can locate the position of objects and classify them more accurately. During training, the aspect prediction head is trained synchronously with the other two branches and indirectly improves model performance by backpropagating the weight updates to these two branches so that the model can extract the aspect ratio features of the objects. During inference, the confident detection score of the predicted objects adds to the disturbance to the aspect ratio based on the existing predicted classification probability. The detection confident score is defined as

where is the predicted aspect ratio of the bounding box , is the aspect ratio calculated from predicted position information from the regression branch, is the predicted classification probability. The larger the aspect ratio difference between the aspect-aware head and the regression branch, the smaller the confidence of the predicted bounding box.

The aspect ratio is defined as

where are the coordinates of the lower right corner point of the bounding box, and are the coordinates of the upper left point. The feature maps are concatenated from the classification branch and regression branch. The entire aspect prediction head only consists of two convolution layers to keep the efficiency achieved by the RELU [22] activation layer.

3.2. Sample Definition Method

The single-stage detector has always been plagued by the imbalance of positive and negative samples. The reason that the accuracy of the two-stage detector is higher than that of the single-stage detector is because the two-stage detector uses RPN or other selection methods in the early stage to alleviate the imbalance of positive and negative samples. The performance gap between anchor-free and anchor-based detectors is caused by the difference in the sample definition method of positive and negative samples. Therefore, selecting an appropriate method for determining positive and negative samples is the key to designing object detectors. Most center-based anchor-free methods select one ground truth center point as a positive sample or select all points in the ground truth box as positive samples. The former causes serious imbalance problems, whereas the latter causes the model to focus too much on complex samples, which results in the loss being unstable and difficult to decrease. In this paper, a sample definition method is proposed based on a center-based anchor-free detector for object detection tasks in autonomous driving.

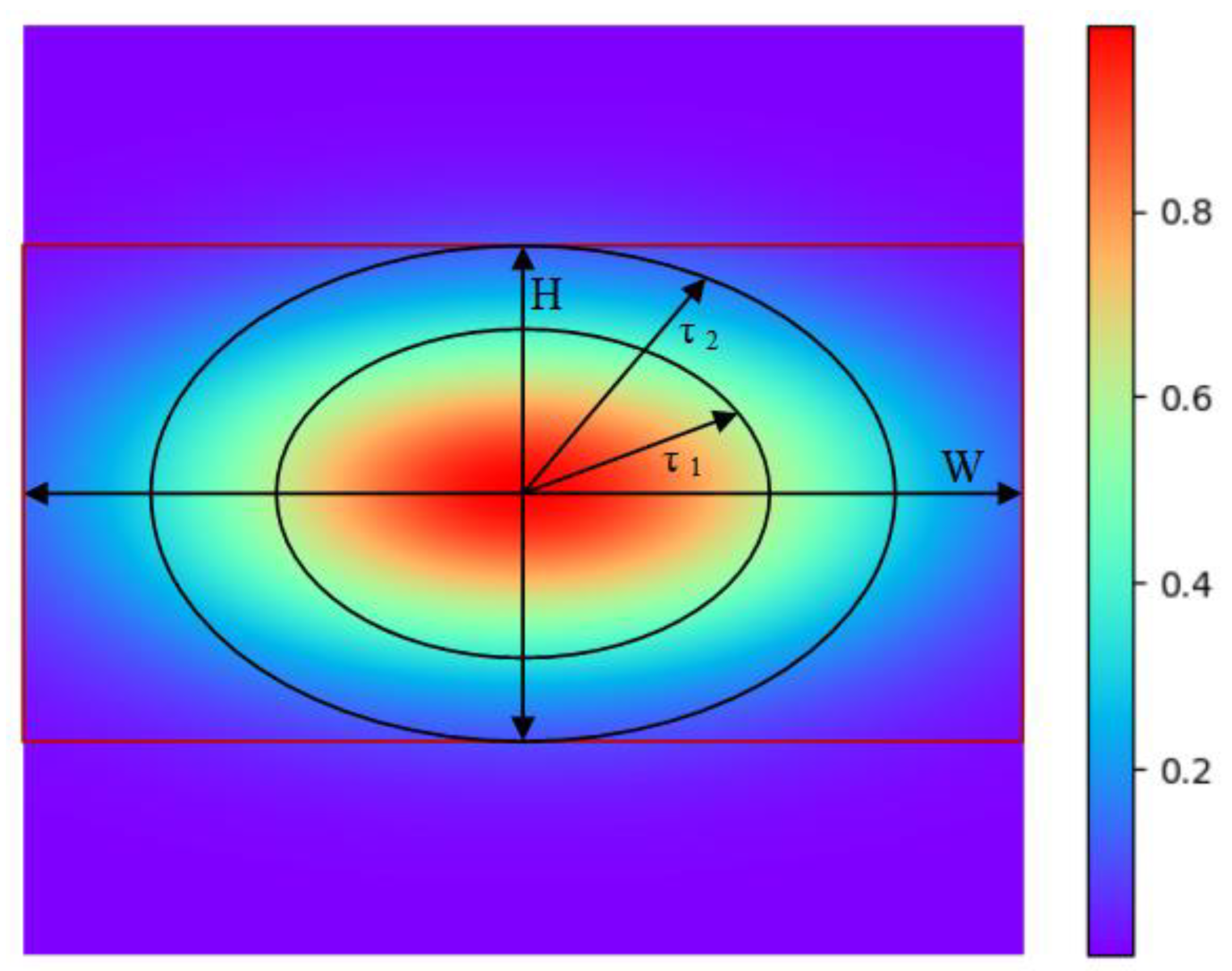

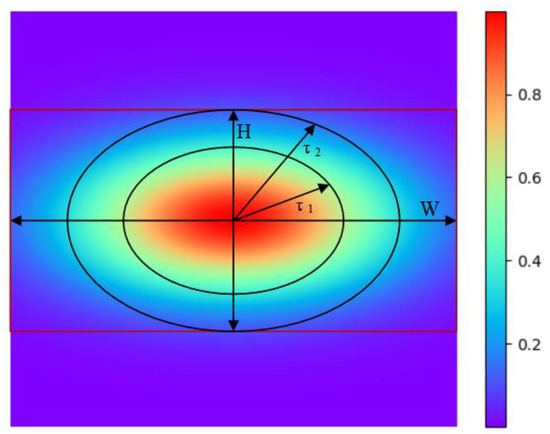

All ground truth center points are mapped to a heatmap , where W and H are the width and height of the input image , respectively, R is a down-sampling factor, and C is the number of categories that use an aspect-aware Gaussian kernel given as follows and demonstrated in Figure 5:

where is an object size-adaptive standard deviation, w and h are the width and height of the object bounding box, respectively, and is a low-resolution equivalent of each ground truth center point . represents the degree of influence of this point on the center point of the object. corresponds to the center point of the object, whereas is the background. Positive samples are defined as the points and negative samples as the points . Points are ignored sample points, where and are hyperparameters that control the number of positive samples. In this paper, are chosen. By proposing the positive sample area, the ignored sample area, and the negative sample area, our model can effectively pay attention to the relationship between the features of the object itself and the background without paying too much attention to the edges of the object, which can also avoid the overfitting of object bounding box edges. The definition of positive samples is also related to the aspect ratio of objects, making the sample definition method more efficient and concentrated. Thus, the problem of the positive and negative sample imbalance can be alleviated.

Figure 5.

Aspect-aware Gaussian kernel. Different distances from the center point have different influence on the definition and selection of positive samples. The Gaussian kernel is sensitive to the width and height of the bounding box.

3.3. Loss Function

The loss function of the proposed method can be divided into three parts that correspond to three heads: the classification part, regression part, and aspect-aware part. The classification loss function is aspect-aware logistic regression based on the focal loss. As shown in the proposed sample definition method, is an aspect-aware Gaussian kernel, indicating the aspect ratio of the bounding box. The heatmap is a pixel-wise decrease from the center point of the bounding box to the surrounding region, and the decreasing speed is related to the aspect ratio.

The classification loss function is defined as

where and are the hyperparameters of the focal loss, and N is the sum of the number of positive and negative samples. As shown in Figure 6, the value of the center point of the image is equal to the maximum value 1. The positive samples are set according to the focal loss when . The ignored area is defined as. The model does not calculate the loss for this area and does not perform backpropagation to make the model not pay too much attention to the edge of the bounding box. For negative samples, controllable variable is added. For the negative sample point near the center point, the penalty is relatively large, and the weight of loss is relatively small, which indicates that the distinction between positive and negative at this position is more blurred.

Figure 6.

Aspect-aware Gaussian kernel in the traffic object. The value of the center point of the image is equal to the maximum value of 1.

The bounding boxes use CIoU [23] to regress the spatial coordinates. As illustrated in Figure 7, the regression objectives , , , and are computed for each location on all feature levels. Therefore, the predicted coordinates of the upper left and lower right corner points are represented as , where . The CIoU resolves the issue of no overlap between the bounding box and ground truth, which results in more stable bounding box regression because it considers the bounding box distance, overlap rate, scale, and penalty terms. Additionally, this may help to avoid divergence throughout the training phase.

Figure 7.

Labeling method for the bounding box. Each location has four parameters for the definition.

The CIoU loss function includes an impact term based on the DIoU [23], which takes the length-to-width ratio of the predicted and ground truth boxes into account:

where β is a trade-off parameter and v is a parameter used to determine the aspect ratio consistency. Additionally, ρ() denotes the distance between the center points of the bounding box and ground truth, and c denotes the diagonal length of the smallest enclosing box that encompasses both boxes.

The binary cross-entropy loss is adopted for the aspect-aware head loss. represents the predicted aspect ratio for each detected bounding box, and is the aspect ratio of the corresponding ground truth box. During training, the gradients from the aspect-aware head loss function can update the weights of regression and the classification branch using backpropagation, which promotes the detection performance of the proposed model. The loss function of the aspect-aware head is defined as

where is the binary cross-entropy loss, is the sigmoid function, and is the number of positive samples. Additionally,

is the total loss function obtained by adding three sub-loss functions (: classification head, : aspect-aware head, and : regression head). and are calculated exclusively for positive samples.

3.4. Multi-Scale Detection

As shown in Figure 8, overlapping ground truths may produce uncertainty, where the same point represents the position of different objects, which is difficult to resolve during training. In this paper, a multi-scale prediction approach is demonstrated to solve the problem of semantic diversity. Following the FPN [24] and the pyramid attention network [25], a method for detecting objects of various sizes using different levels of feature layers is proposed. A pyramid is created using a five-scale feature map denoted by the subscripts . and are extracted from and top-down convolution is performed to mitigate the deterioration caused by increasing the depth of the convolutional layers. are each processed using 3 × 3 convolutions with two strides from . Objects of different scales are mapped on different feature layers for prediction to avoid object occlusion and overlap. Multi-level detection distributes information throughout the many feature layers, which may increase the efficiency of the feature maps and hence detection performance.

Figure 8.

Overlap between different scales of objects. A feature pyramid is used to solve the problem of a point representing multiple semantics, and boundary boxes of different sizes are predicted on various feature layers.

4. Experience and Results

4.1. Dataset

Detection performance in traffic scenes was evaluated using the BCTSDB [26] and KITTI [27] datasets. BCTSDB is a traffic sign collection that comprises 15,690 images and 25,243 annotations, with labels classified as prohibitory, required, or warning. The KITTI dataset contains 7481 training images and 7518 test images, with 80,256 labeled objects for three categories (i.e., vehicle, pedestrian, and cyclist).

4.2. Implementation Details

The network structure was constructed using PyTorch, and the default hyperparameters used were the same as those for MMDetection [28] unless otherwise stated. Two NVIDIA TITAN V graphics cards with 24 GB combined video random access memory were used for the configuration. To train the model, stochastic gradient descent was used, with an initial learning rate of and a warm-up ratio of 0.1. The weight decay and momentum were set to 0.0001 and 0.9, respectively. The backbone network was established using pre-trained weights from ImageNet [29], and other layers used Xavier [30] for parameter initialization. The input images were scaled to a full scale of 640 × 640, maintaining the aspect ratio.

4.3. Validation Experiment

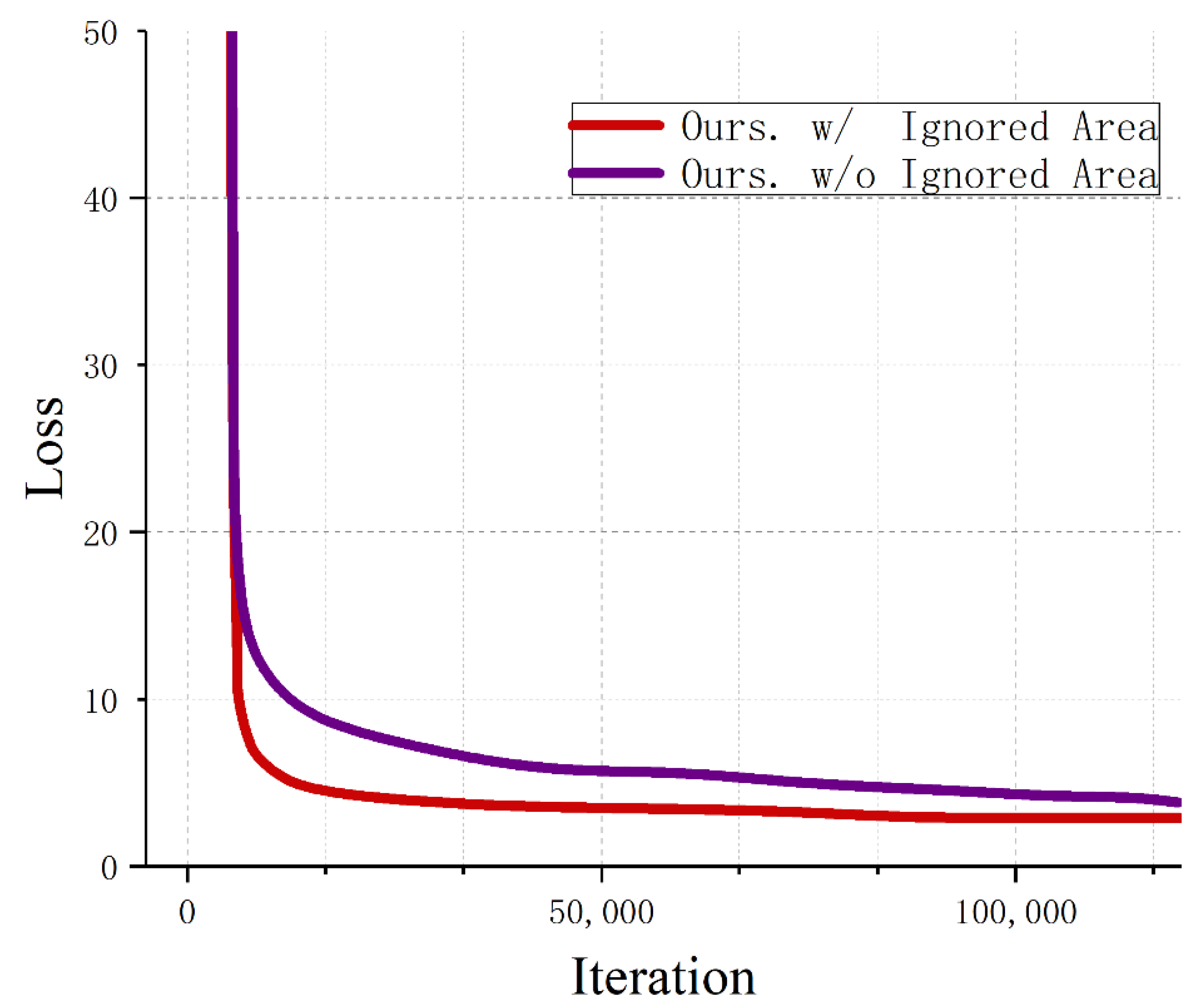

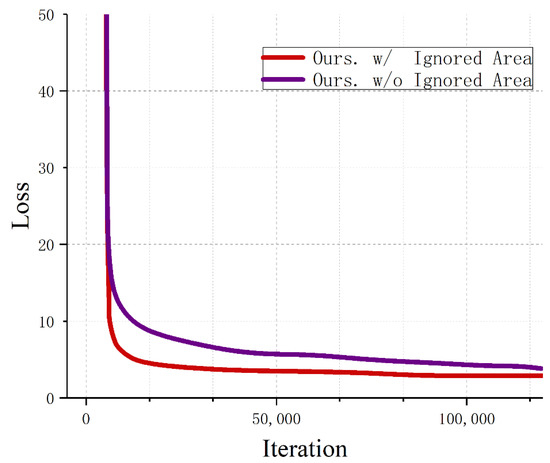

First, the importance of the ignored area is discussed. Experiments verified that the ignored area not only reduced the loss value and increased its stability, but also improved the convergence speed significantly, as illustrated in Figure 9.

Figure 9.

Loss curves of the proposed method with or without the ignored area. The definition of the ignored region in the anchor-free method effectively accelerated the convergence degree of the model.

The anchor-based methods set too many redundant anchor boxes, which resulted in the number of positive samples being much smaller than the number of negative samples: approximately 1:1000. The anchor-free methods discarded preset anchors and used key points for regression, but the imbalanced problem still existed. Table 1 indicates that the proportion of positive and negative samples affected the detection performance of the model. Unbalanced ratios led to a sharp decrease in the detection performance of the model. As shown in Table 2, by replacing the original sample definition methods of different models with the proposed method, the proposed sample definition method alleviated the imbalance problem, thereby improving model detection performance.

Table 1.

Detection results with different sample ratios on BCTSDB.

Table 2.

Detection results of different models with different sample definition methods on BCTSDB.

The loss function in RetinaNet, CenterNet, and FCOS was replaced with the proposed loss function to test the effectiveness of the proposed loss function. Then the results were compared to those predicted by the original models. As shown in Table 3, the experimental results proved that the proposed loss function provided significant improvements, which indicates that the aspect ratio of the bounding box played a positive role in updating the model weights.

Table 3.

Detection results of different models with different loss functions on BCTSDB.

As shown in Table 4, an aspect-aware head was added to different detection models. The experiments demonstrated that it helped the model to learn the aspect ratio distribution of different objects, thus improving the detection accuracy effectively.

Table 4.

Effectiveness experiment for the aspect-aware head for different models on BCTSDB.

A comparison of the performance of the different approaches is summarized in Table 5, which includes the detection results for the anchor-based and anchor-free methods (CenterNet and FCOS). The anchor-based detectors included multi-stage algorithms and single-stage algorithms. AspectNet achieved high detection accuracy, thereby resulting in more competitive outcomes, with AP AP50 AP75 values of 76.2%, 97.3%, and 93.4%, respectively.

Table 5.

Comparison of results with other methods on the BCTSDB val dataset.

The proposed method was also tested on KITTI. Compared with other existing detectors, AspectNet had much better detection accuracy, as shown in Table 6.

Table 6.

Comparison results for detection methods on the KITTI val dataset.

The detection results are shown in Figure 10 and Figure 11 on the KITTI and BCTSDB datasets, respectively. The results demonstrate the proposed method’s effectiveness in traffic scenarios.

Figure 10.

Detection results on the KITTI dataset. AspectNet accurately detected objects of different scales in traffic scenes, notably when multiple objects overlapped, as shown in the second result.

Figure 11.

Detection results for the BCTSDB dataset.

5. Conclusions

In this paper, an anchor-free-based object detection method in autonomous driving was proposed. The main contribution is that an aspect-aware prediction head is added at the end of the detector. The accuracy of the detection on BCTSDB can be improved by adding aspect-aware prediction head in RetinaNet and FCOS. The improved sample definition method was used to alleviate the problem of sample imbalance; the comparison results on BCTSDB datasets show that the detection accuracy can be improved by replacing the original sample definition method in YOLO V3, FCOS and Retinanet models. The proposed loss function was added to strengthen the learning weight of the center point, and the validation experiment shows that this can improve the detection accuracy. An overall validation on public datasets demonstrated that the proposed method can achieve a significant improvement in detection accuracy. The AP50 and AP75 of the proposed method are 97.3% and 93.4% on BCTSDB, and the average accuracies of car, pedestrian, and cyclist are 92.7%, 77.4% and 78.2% on KITTI, respectively, which indicates that the proposed method achieves better results compared to other methods. The proposed method improved detection accuracy, but it still encountered many challenges when applied to real traffic scenarios. In autonomous driving scenarios, higher accuracy is required, and this can be further improved in future work. The experiment in this paper is trained on public datasets and real traffic scenes facing challenging with complex lighting and weather factors. This issue can be addressed by considering Transformers and domain adaptation in future work.

Author Contributions

Conceptualization, T.L. and W.P.; methodology, H.B.; software, T.L. and W.P.; validation, X.F. and H.L.; writing—original draft preparation, T.L.; writing—review and editing, T.L. and W.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 61802019, 61932012, 61871039) and the Beijing Municipal Education Commission Science and Technology Program (Nos. KM201911417003, KM201911417009, KM201911417001). Beijing Union University Research and Innovation Projects for Postgraduates (No. YZ2020K001). By the Premium Funding Project for Academic Human Resources Development in Beijing Union University under Grant BPHR2020DZ02.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviations | Full Name |

| AP | Averaged AP at IoUs from 0.5 to 0.95 with an interval of 0.05 |

| AP50 | AP at IoU threshold 0.5 |

| AP75 | AP at IoU threshold 0.75 |

| APL | AP for objects of large scales (area > 962) |

| APM | AP for objects of medium scales (322 < area < 962) |

| APS | AP for objects of small scales (area < 322) |

| BCE | Binary cross-entropy |

| BCTSDB | BUU Chinese traffic sign detection benchmark |

| CIoU | Complete IoU |

| CNN | Convolutional neural network |

| DIoU | Distance IoU |

| FPN | Feature pyramid network |

| HOG | Histogram of oriented gradients |

| IoU | Intersection over union |

| NMS | Non-maximum suppression |

| RELU | Linear rectification function |

| RPN | Region proposal network |

| SOTA | State-of-the-art |

| SSD | Single-shot multibox detector |

| SVM | Support vector machine |

| VRAM | Video random access memory |

| w | With |

| w/o | Without |

| YOLO | You only look once |

References

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327. [Google Scholar] [CrossRef] [PubMed]

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. Int. J. Comput. Vis. 2020, 128, 642–656. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: A Simple and Strong Anchor-Free Object Detector. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 4, 1922–1933. [Google Scholar] [CrossRef] [PubMed]

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the Gap Between Anchor-Based and Anchor-Free Detection via Adaptive Training Sample Selection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Seattle, WA, USA, 2020; pp. 9756–9765. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: San Diego, CA, USA, 2005; Volume 1, pp. 886–893. [Google Scholar]

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645. [Google Scholar] [CrossRef] [PubMed]

- Corinna Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE: Columbus, OH, USA, 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: Santiago, Chile, 2015; pp. 1440–1448. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2014; IEEE: Salt Lake City, UT, USA, 2018; pp. 6154–6162. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. ISBN 978-3-319-46447-3. [Google Scholar]

- Zhou, X.; Zhuo, J.; Krahenbuhl, P. Bottom-Up Object Detection by Grouping Extreme and Center Points. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Long Beach, CA, USA, 2019; pp. 850–859. [Google Scholar]

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; IEEE: Seoul, Korea, 2019; pp. 9656–9665. [Google Scholar]

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. FoveaBox: Beyound Anchor-Based Object Detection. IEEE Trans. Image Process. 2020, 29, 7389–7398. [Google Scholar] [CrossRef]

- Liang, T.; Bao, H.; Pan, W.; Pan, F. ALODAD: An Anchor-Free Lightweight Object Detector for Autonomous Driving. IEEE Access 2022, 10, 40701–40714. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Honolulu, HI, USA, 2017; pp. 6517–6525. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; IEEE: Boston, MA, USA, 2015; pp. 3431–3440. [Google Scholar]

- Glorot, X.; Bordes, A.; Bengio, Y. Deep Sparse Rectifier Neural Networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 11–13 April 2011; Gordon, G., Dunson, D., Dudík, M., Eds.; PMLR: Fort Lauderdale, FL, USA, 2011; Volume 15, pp. 315–323. [Google Scholar]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. AAAI 2020, 34, 12993–13000. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Honolulu, HI, USA, 2017; pp. 936–944. [Google Scholar]

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. arXiv 2018, arXiv:1805.10180. [Google Scholar]

- Liang, T.; Bao, H.; Pan, W.; Pan, F. Traffic Sign Detection via Improved Sparse R-CNN for Autonomous Vehicles. J. Adv. Transp. 2022, 2022, 3825532. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 770–778. [Google Scholar]

- Wang, X.; Yang, M.; Zhu, S.; Lin, Y. Regionlets for Generic Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2071–2084. [Google Scholar] [CrossRef] [PubMed]

- Yang, B.; Yan, J.; Lei, Z.; Li, S.Z. CRAFT Objects from Images. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 6043–6051. [Google Scholar]

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3D Object Detection for Autonomous Driving. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 2147–2156. [Google Scholar]

- Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A Unified Multi-Scale Deep Convolutional Neural Network for Fast Object Detection. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9908, pp. 354–370. ISBN 978-3-319-46492-3. [Google Scholar]

- Yi, J.; Wu, P.; Metaxas, D.N. ASSD: Attentive Single Shot Multibox Detector. Comput. Vis. Image Underst. 2019, 189, 102827. [Google Scholar] [CrossRef]

- Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. In Computer Vision—ECCV 2018; Lecture Notes in Computer Science; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11215, pp. 404–419. ISBN 978-3-030-01251-9. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).