Dynamic Hand Gesture Recognition for Smart Lifecare Routines via K-Ary Tree Hashing Classifier

Abstract

Featured Application


1. Introduction

- For hand motion and position analysis, we propose a method for extracting hand skeletons;

- For the recognition of image-based hand gestures, we have extracted novel features based on point-based trajectories, frame differencing, orientation histogram, and 3D point clouds.

2. Related Work

3. Material and Methods

3.1. Preprocessing of the Input Videos

3.2. Hand Detection Using Single Shot MultiBox Detector

| Algorithm 1: Hand detection using a single shot multibox detector. |

| Input: Optimized feature vectors. Output: Hand gesture classification. |
| Step 1: Check the length n of the hash table, i.e., the number of entries it holds, against a fixed threshold T = 40. |
| If n > T, find the correlation match, i.e., the minimum distance between the vectors, and then compute the sum of the vectors. |
| Step 2: /* Check the correlation of the new entry */ If the correlation condition is satisfied, a match exists; else, no match exists. |
| End |
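The matching test in Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the hash table is modelled as a plain array of stored feature vectors with aligned labels, "minimum distance" is taken to be Euclidean distance, and the match `tolerance` is a hypothetical parameter that the source does not specify. Only the entry threshold T = 40 comes from the algorithm.

```python
import numpy as np


def match_entry(table, labels, query, threshold=40, tolerance=0.5):
    """Return the label of the closest stored vector, or None if no match exists.

    table:     (n, d) array of stored feature vectors (the hash table entries)
    labels:    n gesture labels aligned with `table`
    threshold: fixed entry count T from Algorithm 1 (T = 40)
    tolerance: hypothetical maximum distance for a match (not given in the source)
    """
    table = np.asarray(table, dtype=float)
    query = np.asarray(query, dtype=float)
    n = len(table)  # Step 1: number of entries in the hash table
    if n <= threshold:
        return None  # too few entries to attempt matching
    # Euclidean distance from the new entry to every stored vector
    dists = np.linalg.norm(table - query, axis=1)
    best = int(np.argmin(dists))
    # Step 2: a match exists only if the closest entry is close enough
    return labels[best] if dists[best] <= tolerance else None


# Usage: 50 stored vectors on the diagonal with 13 hypothetical class labels
stored = [[i, i] for i in range(50)]
names = [f"class_{i % 13}" for i in range(50)]
print(match_entry(stored, names, [10.1, 9.9]))  # class_10
print(match_entry(stored, names, [100, 100]))   # None
```

Returning `None` both for "too few entries" and for "no match" keeps the sketch small; a fuller version would distinguish the two cases.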

3.3. Hand Landmarks Localization Using Skeleton Method

3.4. Features Extraction

3.4.1. Bezier Curves

3.4.2. Frame Differencing

- Initialize the keyframe counter with n = 1, so that the first frame is marked as the first keyframe. Then, for the current frame k, compute the difference frame between frame k and the n-th keyframe, and count the valid (changed) pixels in this difference frame.

- If the valid pixels satisfy the keyframe thresholds, set n = n + 1 and mark frame k as the new keyframe.

- If k is less than the number of frames N, set k = k + 1 and repeat the steps above; otherwise, end the procedure.

- After the frame differencing, the distance between each key point L in the first keyframe and the corresponding point L′ in each of the other keyframes is calculated using the distance formula defined in Equation (12).
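A minimal Python sketch of the keyframe selection steps above follows. The pixel-change threshold `pixel_thresh` and the valid-pixel fraction `count_thresh` are hypothetical parameters, since the exact threshold conditions are not reproduced here, and Euclidean distance is assumed as a stand-in for the distance formula of Equation (12).

```python
import numpy as np


def select_keyframes(frames, pixel_thresh=30, count_thresh=0.05):
    """Select keyframe indices by frame differencing.

    frames:       sequence of N grayscale frames (H x W arrays)
    pixel_thresh: hypothetical intensity change for a pixel to count as valid
    count_thresh: hypothetical fraction of valid pixels that triggers a new keyframe
    """
    frames = [np.asarray(f, dtype=np.int32) for f in frames]  # int32 avoids uint8 wrap-around
    keyframes = [0]  # n = 1: the first frame is the first keyframe
    k = 1
    while k < len(frames):
        diff = np.abs(frames[k] - frames[keyframes[-1]])  # difference frame
        valid = np.count_nonzero(diff > pixel_thresh)     # valid (changed) pixels
        if valid > count_thresh * diff.size:
            keyframes.append(k)  # n = n + 1: frame k becomes the new keyframe
        k += 1
    return keyframes


def keypoint_distances(ref_points, other_points):
    """Euclidean distance (assumed form of Eq. (12)) between each L and its L'."""
    ref = np.asarray(ref_points, dtype=float)
    other = np.asarray(other_points, dtype=float)
    return np.linalg.norm(other - ref, axis=1)


frames = [np.zeros((4, 4)), np.zeros((4, 4)), np.full((4, 4), 255),
          np.full((4, 4), 255), np.zeros((4, 4))]
print(select_keyframes(frames))  # [0, 2, 4]
print(keypoint_distances([[0, 0], [1, 1]], [[3, 4], [1, 1]]))  # [5. 0.]
```

Each frame is compared with the most recent keyframe rather than with its immediate predecessor, so slow drifts still accumulate into a new keyframe.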

3.4.3. 3D Point Clouds

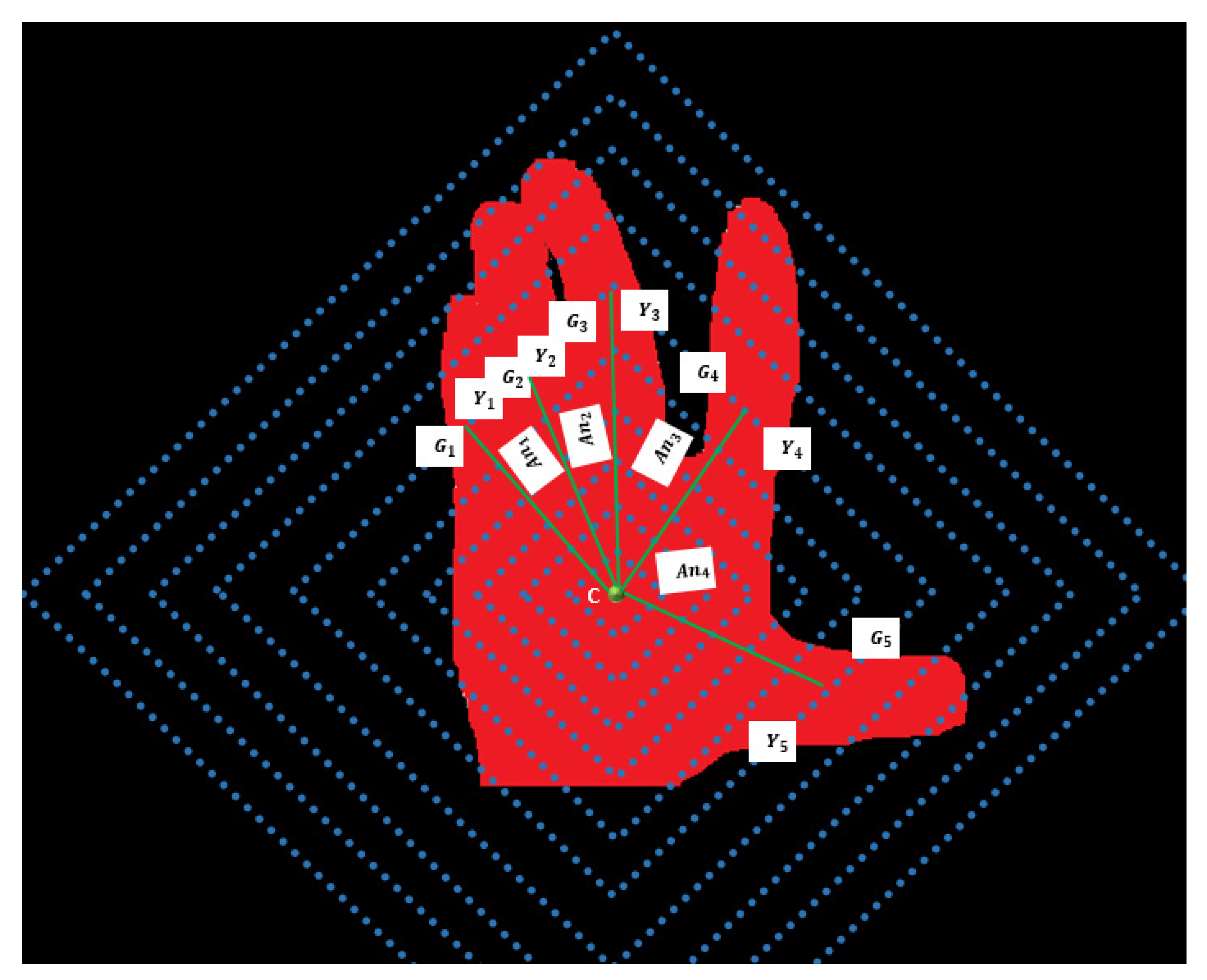

- First, a central point C on the palm is taken, and the maximum distance d between C and the edge points Ei of the gesture region is calculated. Next, ten radius lengths rn (n = 1, 2, …, 10) are defined from d, and ten rhombuses centered at C with radii rn are drawn (the innermost is the first rhombus and the outermost is the tenth), as shown in Figure 7. To highlight how the hand gesture changes, the color of each rhombus changes where it passes over the hand region.

- As Figure 7 shows, each rhombus intersects the hand gesture region a different number of times. To find the number of stretched fingers S, we use the sixth rhombus (chosen as a rule of thumb). On the sixth rhombus, we extract the points where the color changes from green to yellow and from yellow to green: Gi denotes a point where the color changes from green to yellow, and Yi a point where it changes from yellow to green.

- Next, Mi denotes the midpoint of Gi and Yi. Each midpoint Mi is connected to the central point C by a line, and the angles between adjacent lines are calculated; these angles are denoted Anj (j = 1, 2, …, I − 1), where I is the number of midpoints.

- Again as a rule of thumb, the fifth rhombus is used as a boundary that divides the hand gesture region into two parts: P1, the area inside the rhombus, and P2, the area outside it. The ratio R = P1/P2 is then calculated; R is the gesture region area distribution feature (see Algorithm 2). Figure 7 shows the appearance features extracted from the 3D point clouds.
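The rhombus-based appearance features can be sketched on a binary hand mask as below. Several choices are assumptions rather than the authors' method: the radii are taken as rn = n * d / 10, a rhombus of radius r is the set of points with |x − cx| + |y − cy| = r, hand pixels play the role of the yellow segments, and the palm center defaults to the region centroid. `rhombus_points` and `rhombus_features` are hypothetical helper names.

```python
import numpy as np


def rhombus_points(center, r, samples=720):
    """Points on the rhombus |x - cx| + |y - cy| = r, in angular order."""
    t = np.linspace(0.0, 2.0 * np.pi, samples, endpoint=False)
    c, s = np.cos(t), np.sin(t)
    scale = r / (np.abs(c) + np.abs(s))  # pull each ray back onto the rhombus
    return np.stack([center[0] + scale * c, center[1] + scale * s], axis=1)


def rhombus_features(mask, center=None, ring=6, split=5, rings=10):
    """Finger count S, adjacent angles An_j and area ratio R from a binary hand mask."""
    mask = np.asarray(mask, dtype=bool)
    ys, xs = np.nonzero(mask)
    if center is None:
        center = (xs.mean(), ys.mean())  # assumption: palm centre = region centroid
    cx, cy = center
    d = np.hypot(xs - cx, ys - cy).max()  # the farthest region point lies on the edge
    radii = [n * d / rings for n in range(1, rings + 1)]  # assumed r_n = n * d / 10

    # Sample the chosen (sixth) rhombus and flag the samples lying on the hand
    pts = rhombus_points((cx, cy), radii[ring - 1])
    h, w = mask.shape
    cols = np.round(pts[:, 0]).astype(int)
    rows = np.round(pts[:, 1]).astype(int)
    valid = (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    inside = np.zeros(len(pts), dtype=bool)
    inside[valid] = mask[rows[valid], cols[valid]]

    fingers, angles = 0, []
    if inside.any() and not inside.all():
        start = int(np.argmin(inside))  # begin the walk on a background sample
        inside = np.roll(inside, -start)
        pts = np.roll(pts, -start, axis=0)
        ext = np.append(inside, False)  # close the loop on background
        enter = np.flatnonzero(~ext[:-1] & ext[1:]) + 1  # G_i: background -> hand
        leave = np.flatnonzero(ext[:-1] & ~ext[1:])      # Y_i: last hand sample
        fingers = len(enter)
        mids = (pts[enter] + pts[leave]) / 2.0  # M_i: midpoint of G_i and Y_i
        theta = np.degrees(np.arctan2(mids[:, 1] - cy, mids[:, 0] - cx))
        angles = np.diff(np.sort(theta)).tolist()  # angles between adjacent lines C-M_i

    # Area distribution: hand pixels inside (P1) vs outside (P2) the fifth rhombus
    l1 = np.abs(xs - cx) + np.abs(ys - cy)
    p1 = int(np.count_nonzero(l1 <= radii[split - 1]))
    p2 = len(xs) - p1
    ratio = p1 / p2 if p2 else float("inf")
    return {"fingers": fingers, "angles": angles, "area_ratio": ratio}


# Synthetic hand: a palm disc with one upward and two sideways "fingers"
yy, xx = np.mgrid[0:101, 0:101]
hand = (xx - 50) ** 2 + (yy - 50) ** 2 <= 100
hand |= (np.abs(xx - 50) <= 2) & (yy >= 10) & (yy <= 50)
hand |= (np.abs(yy - 50) <= 2) & (xx >= 10) & (xx <= 90)
print(rhombus_features(hand, center=(50, 50))["fingers"])  # 3
```

Rolling the samples so the walk starts on background guarantees that every hand segment has both an entry point Gi and an exit point Yi.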

| Algorithm 2: Feature extraction. |

| Input: Hand point-based and texture-based data (x, y, z). Output: Feature vectors (v1, v2, …, vn). |
| featureVectors ← [ ]; set window_size |
| For HandComponent in [x, y, z] do |
| Hand_window ← window of HandComponent of length window_size |
| /* Extracting features */ |
| BezierCurves ← ExtractBezierCurvesFeatures(Hand_window) |
| FrameDifferencing ← ExtractFrameDifferencingFeatures(Hand_window) |
| 3DPointClouds ← Extract3DPointCloudsFeatures(Hand_window) |
| featureVector ← GetFeatureVectors[BezierCurves, FrameDifferencing, 3DPointClouds] |
| featureVectors.append(featureVector) |
| End for |
| featureVectors ← Normalize(featureVectors) |
| return featureVectors |
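Algorithm 2 can be sketched in Python as follows. The three feature extractors are passed in as functions because their definitions belong to Section 3.4 rather than to the algorithm itself; taking the first `window_size` samples of each component and using min-max normalization are assumptions, since the algorithm names only a window and `Normalize`. The toy extractors in the usage lines are hypothetical stand-ins.

```python
import numpy as np


def extract_features(data, window_size, extractors):
    """Sketch of Algorithm 2: one feature vector per hand component, then normalize.

    data:        dict mapping each hand component "x", "y", "z" to a 1-D signal
    window_size: number of samples per window (assumed: the first window_size samples)
    extractors:  functions mapping a window to features; hypothetical stand-ins for
                 the Bezier-curve, frame-differencing and 3D point-cloud extractors
    """
    feature_vectors = []
    for component in ("x", "y", "z"):
        hand_window = np.asarray(data[component][:window_size], dtype=float)
        parts = [np.ravel(extract(hand_window)) for extract in extractors]
        feature_vectors.append(np.concatenate(parts))  # GetFeatureVectors + append
    fv = np.vstack(feature_vectors)
    # Normalize: min-max scaling per feature column (assumed choice of normalization)
    lo, hi = fv.min(axis=0), fv.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero on constant columns
    return (fv - lo) / span


# Usage with two toy extractors standing in for the real ones
signals = {"x": [0, 1, 2, 3], "y": [0, 0, 0, 0], "z": [3, 2, 1, 0]}
toy = [lambda w: w.mean(), lambda w: np.abs(np.diff(w)).sum()]
print(extract_features(signals, 4, toy))
```

Naming the per-window result `featureVector` and the accumulator `featureVectors` avoids appending the list to itself.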

3.5. Feature Optimization Using Fuzzy Logic

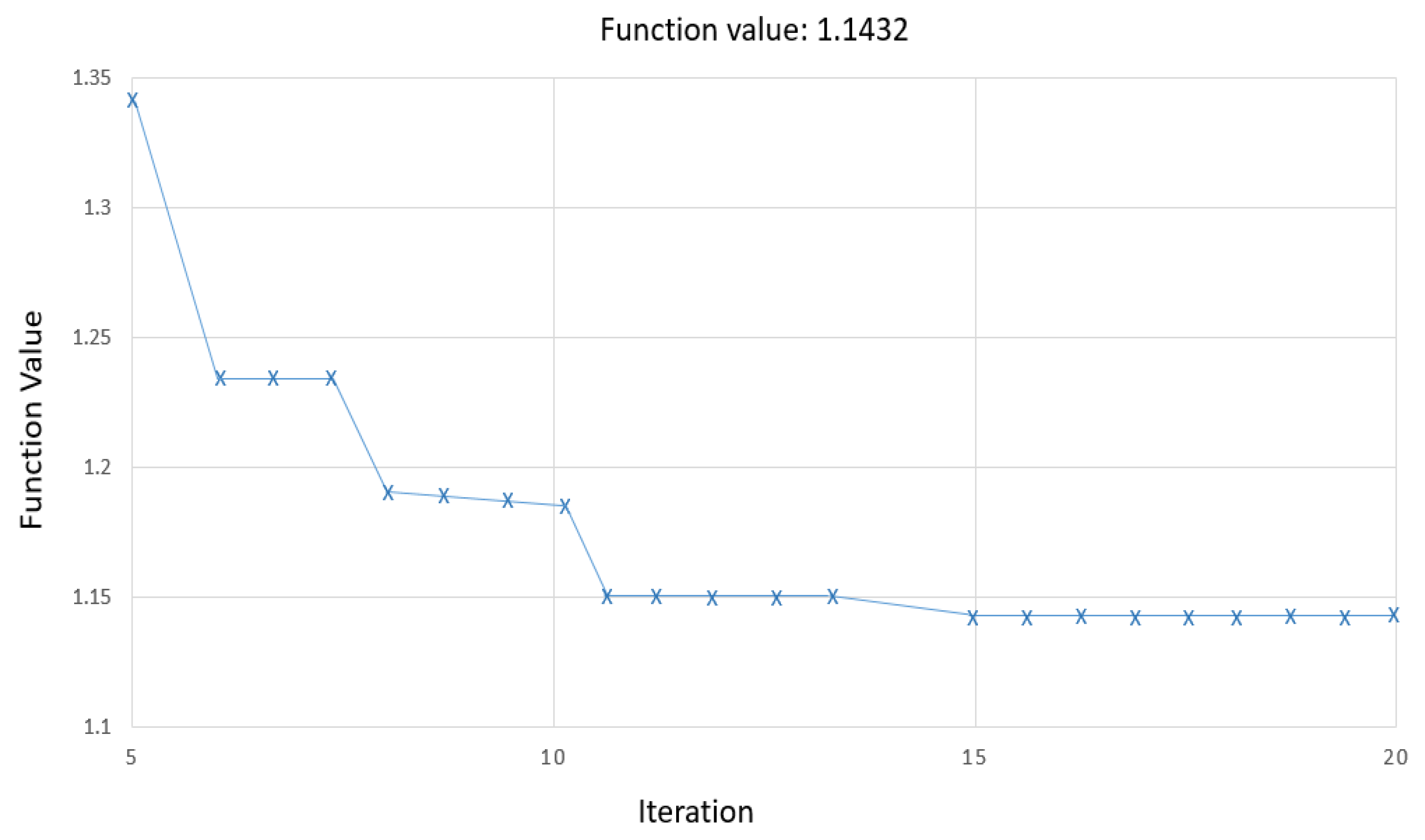

3.6. Hand Gestures Recognition

4. Experimental Setting and Results

4.1. Datasets Descriptions

4.2. Performance Parameters and Evaluations

4.2.1. Experiment I: The Hand Detection Accuracies

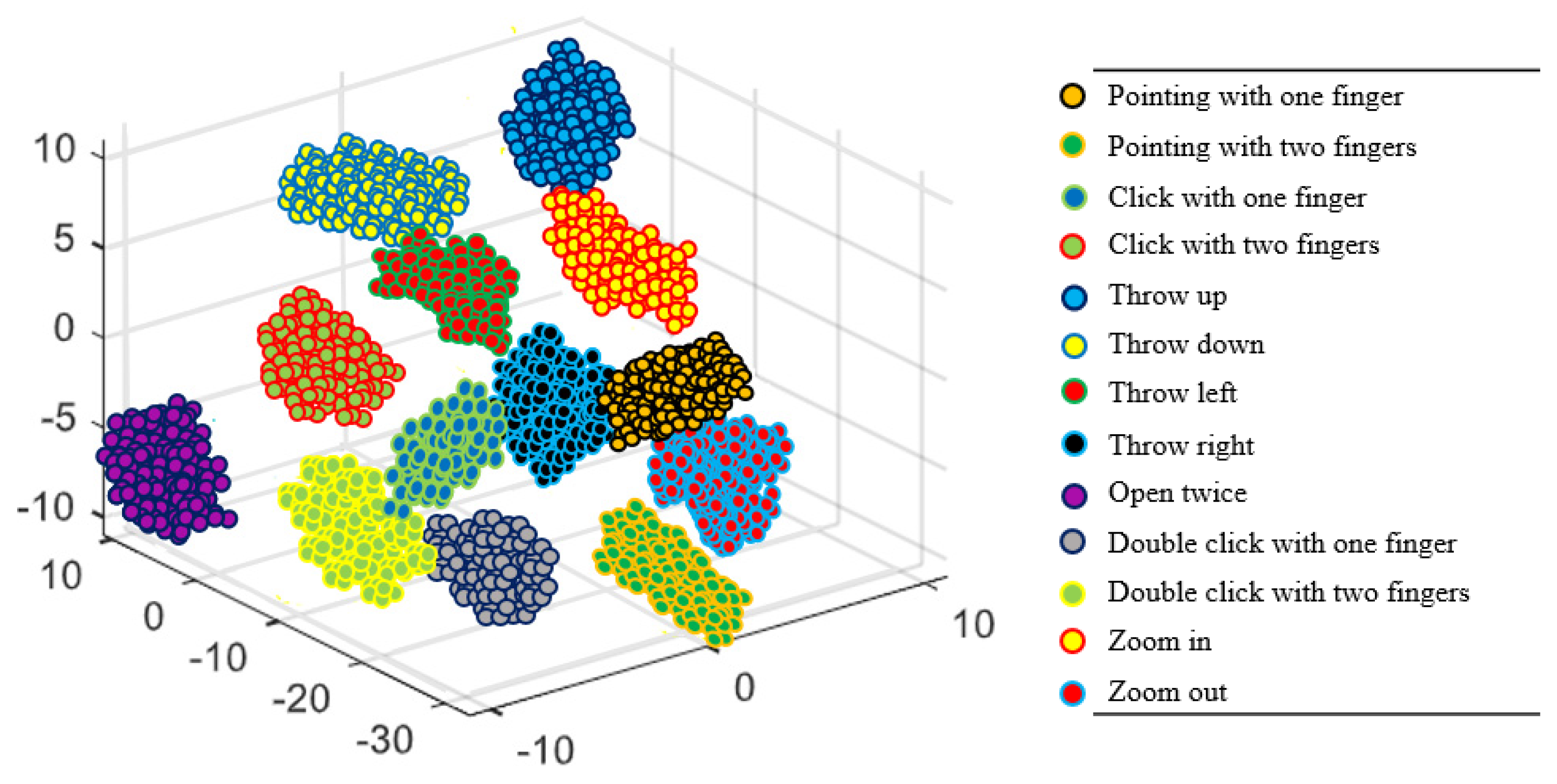

4.2.2. Experiment II: Hand Gestures Classification Accuracies

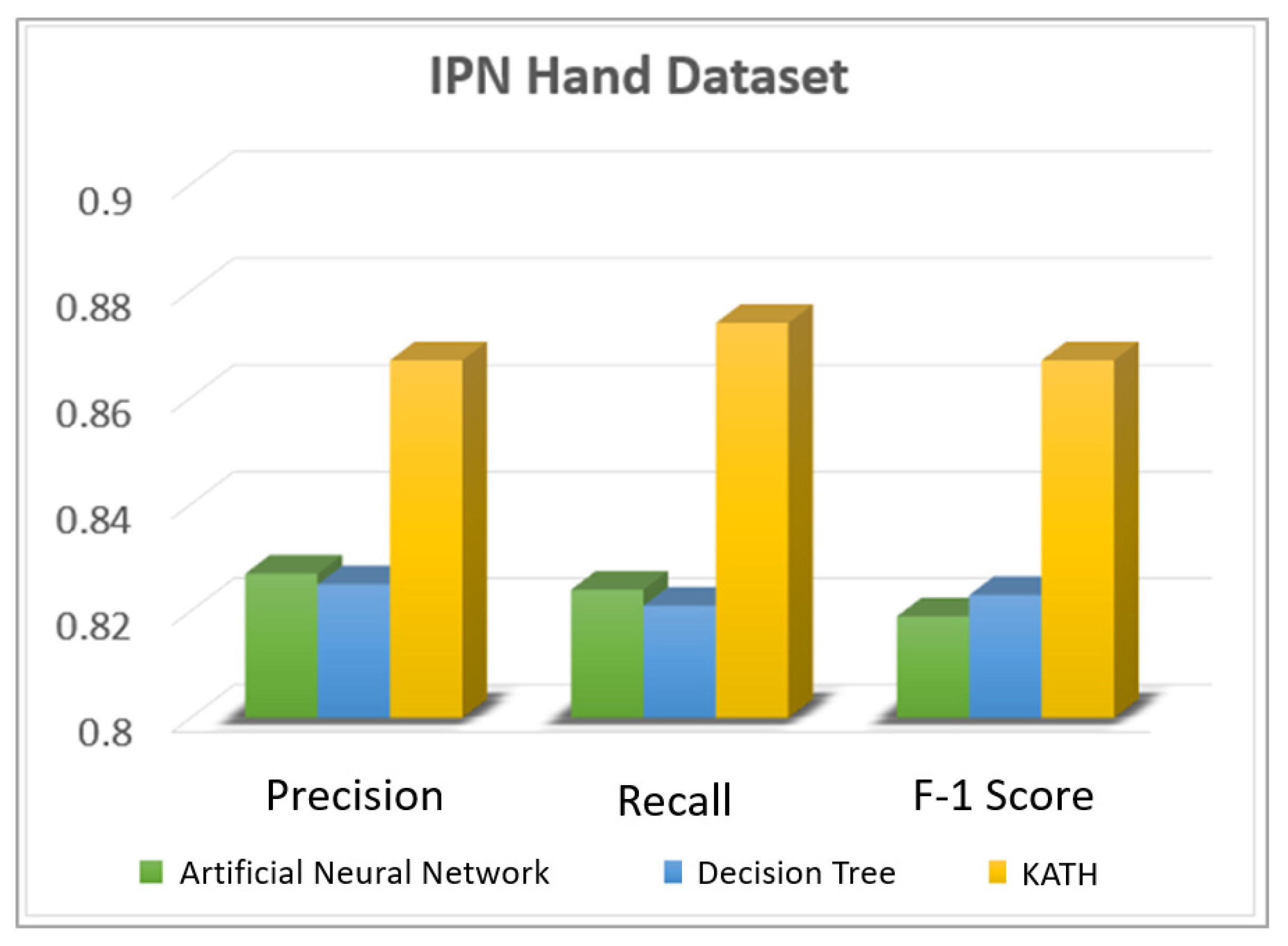

4.2.3. Experiment III: Comparison with Other Classification Algorithms

4.2.4. Experiment IV: Comparison of Our Proposed System with State-of-the-Art Techniques

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Khan, M.A.; Javed, K.; Khan, S.A.; Saba, T.; Habib, U.; Khan, J.A.; Abbasi, A.A. Human action recognition using fusion of multiview and deep features: An application to video surveillance. Multimed. Tools Appl. 2020, 19, 1–27.

- Zou, Y.; Shi, Y.; Shi, D.; Wang, Y.; Liang, Y.; Tian, Y. Adaptation-Oriented Feature Projection for One-shot Action Recognition. IEEE Trans. Multimed. 2020, 99, 10.

- Ghadi, Y.; Akhter, I.; Alarfaj, M.; Jalal, A.; Kim, K. Syntactic model-based human body 3D reconstruction and event classification via association based features mining and deep learning. PeerJ Comput. Sci. 2021, 7, e764.

- Van der Kruk, E.; Reijne, M.M. Accuracy of human motion capture systems for sport applications; state-of-the-art review. Eur. J. Sport Sci. 2018, 18, 6.

- Wang, Y.; Mori, G. Multiple tree models for occlusion and spatial constraints in human pose estimation. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Springer: Berlin/Heidelberg, Germany, 2008.

- Amft, O.; Tröster, G. Recognition of dietary activity events using on-body sensors. Artif. Intell. Med. 2008, 42, 121–136.

- Sun, S.; Kuang, Z.; Sheng, L.; Ouyang, W.; Zhang, W. Optical Flow Guided Feature: A Fast and Robust Motion Representation for Video Action Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Zhu, Y.; Zhou, K.; Wang, M.; Zhao, Y.; Zhao, Z. A comprehensive solution for detecting events in complex surveillance videos. Multimed. Tools Appl. 2019, 78, 1.

- Akhter, I.; Jalal, A.; Kim, K. Adaptive Pose Estimation for Gait Event Detection Using Context-Aware Model and Hierarchical Optimization. J. Electr. Eng. Technol. 2021, 16, 2721–2729.

- Jalal, A.; Lee, S.; Kim, J.T.; Kim, T.S. Human activity recognition via the features of labeled depth body parts. In Proceedings of the International Conference on Smart Homes and Health Telematics, Artiminio, Italy, 12–15 June 2012; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Springer: Berlin/Heidelberg, Germany, 2012.

- Ghadi, Y.; Manahil, W.; Tamara, S.; Suliman, A.; Jalal, A.; Park, J. Automated parts-based model for recognizing human-object interactions from aerial imagery with fully convolutional network. Remote Sens. 2022, 14, 1492.

- Jalal, A.; Sarif, N.; Kim, J.T.; Kim, T.S. Human activity recognition via recognized body parts of human depth silhouettes for residents monitoring services at smart home. Indoor Built Environ. 2013, 22, 271–279.

- Jalal, A.; Kim, Y.; Kim, D. Ridge body parts features for human pose estimation and recognition from RGB-D video data. In Proceedings of the Fifth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Hefei, China, 11–14 July 2014; pp. 1–6.

- Akhter, I.; Jalal, A.; Kim, K. Pose estimation and detection for event recognition using Sense-Aware features and Adaboost classifier. In Proceedings of the 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, 12–16 January 2021.

- Jalal, A.; Kamal, S.; Kim, D. Depth Map-based Human Activity Tracking and Recognition Using Body Joints Features and Self-Organized Map. In Proceedings of the 5th International Conference on Computing, Communications and Networking Technologies (ICCCNT), Hefei, China, 11–13 July 2014.

- Ghadi, Y.; Akhter, I.; Suliman, A.; Tamara, S.; Jalal, A.; Park, J. Multiple events detection using context-intelligence features. IASC 2022, 34, 3.

- Jalal, A.; Kamal, S. Real-time life logging via a depth silhouette-based human activity recognition system for smart home services. In Proceedings of the 2014 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Seoul, Korea, 26–29 August 2014; pp. 74–80.

- Jalal, A.; Kamal, S.; Kim, D. A depth video sensor-based life-logging human activity recognition system for elderly care in smart indoor environments. Sensors 2014, 14, 11735–11759.

- Ghadi, Y.Y.; Akhter, I.; Aljuaid, H.; Gochoo, M.; Alsuhibany, S.A.; Jalal, A.; Park, J. Extrinsic Behavior Prediction of Pedestrians via Maximum Entropy Markov Model and Graph-Based Features Mining. Appl. Sci. 2022, 12, 5985.

- Gochoo, M.; Tahir, S.B.U.D.; Jalal, A.; Kim, K. Monitoring Real-Time Personal Locomotion Behaviors Over Smart Indoor-Outdoor Environments Via Body-Worn Sensors. IEEE Access 2021, 9, 70556–70570.

- Pervaiz, M.; Ghadi, Y.Y.; Gochoo, M.; Jalal, A.; Kamal, S.; Kim, D.-S. A Smart Surveillance System for People Counting and Tracking Using Particle Flow and Modified SOM. Sustainability 2021, 13, 5367.

- Jalal, A.; Akhtar, I.; Kim, K. Human Posture Estimation and Sustainable Events Classification via Pseudo-2D Stick Model and K-ary Tree Hashing. Sustainability 2020, 12, 9814.

- Khalid, N.; Ghadi, Y.Y.; Gochoo, M.; Jalal, A.; Kim, K. Semantic Recognition of Human-Object Interactions via Gaussian-Based Elliptical Modeling and Pixel-Level Labeling. IEEE Access 2021, 9, 111249–111266.

- Trong, K.N.; Bui, H.; Pham, C. Recognizing hand gestures for controlling home appliances with mobile sensors. In Proceedings of the 2019 11th International Conference on Knowledge and Systems Engineering (KSE), Da Nang, Vietnam, 24–26 October 2019; pp. 1–7.

- Senanayake, R.; Kumarawadu, S. A robust vision-based hand gesture recognition system for appliance control in smart homes. In Proceedings of the 2012 IEEE International Conference on Signal Processing, Communication and Computing (ICSPCC 2012), Hong Kong, China, 12–15 August 2012; pp. 760–763.

- Chong, Y.; Huang, J.; Pan, S. Hand Gesture recognition using appearance features based on 3D point cloud. J. Softw. Eng. Appl. 2016, 9, 103–111.

- Solanki, U.V.; Desai, N.H. Hand gesture based remote control for home appliances: Handmote. In Proceedings of the 2011 World Congress on Information and Communication Technologies, Mumbai, India, 11–14 December 2011; pp. 419–423.

- Jamaludin, N.A.N.; Fang, O.H. Dynamic Hand Gesture to Text using Leap Motion. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 199–204.

- Chellaswamy, C.; Durgadevi, J.J.; Srinivasan, S. An intelligent hand gesture recognition system using fuzzy logic. In Proceedings of the IET Chennai Fourth International Conference on Sustainable Energy and Intelligent Systems (SEISCON 2013), Chennai, India, 12–14 December 2013; pp. 326–332.

- Yang, P.-Y.; Ho, K.-H.; Chen, H.-C.; Chien, M.-Y. Exercise training improves sleep quality in middle-aged and older adults with sleep problems: A systematic review. J. Physiother. 2012, 58, 157–163.

- Farooq, A.; Jalal, A.; Kamal, S. Dense RGB-D Map-Based Human Tracking and Activity Recognition using Skin Joints Features and Self-Organizing Map. KSII Trans. Internet Inf. Syst. 2015, 9, 1856–1869.

- Jalal, A.; Kamal, S.; Kim, D. Depth silhouettes context: A new robust feature for human tracking and activity recognition based on embedded HMMs. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI 2015), Goyang City, Korea, 28 October 2015.

- Jalal, A.; Kamal, S.; Kim, D. Individual detection-tracking-recognition using depth activity images. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyangi, Korea, 28–30 October 2015; pp. 450–455.

- Kamal, S.; Jalal, A. A hybrid feature extraction approach for human detection, tracking and activity recognition using depth sensors. Arab. J. Sci. Eng. 2016, 41, 1043–1051.

- Jalal, A.; Kim, Y.-H.; Kim, Y.-J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2017, 61, 295–308.

- Kamal, S.; Jalal, A.; Kim, D. Depth images-based human detection, tracking and activity recognition using spatiotemporal features and modified HMM. J. Electr. Eng. Technol. 2016, 11, 1857–1862.

- Gochoo, M.; Akhter, I.; Jalal, A.; Kim, K. Stochastic Remote Sensing Event Classification over Adaptive Posture Estimation via Multifused Data and Deep Belief Network. Remote Sens. 2021, 13, 912.

- Jalal, A.; Kamal, S.; Kim, D. Facial Expression recognition using 1D transform features and Hidden Markov Model. J. Electr. Eng. Technol. 2017, 12, 1657–1662.

- Jalal, A.; Kamal, S.; Kim, D. A Depth Video-based Human Detection and Activity Recognition using Multi-features and Embedded Hidden Markov Models for Health Care Monitoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, -62–62.

- Jalal, A.; Kamal, S.; Kim, D.-S. Detecting complex 3D human motions with body model low-rank representation for real-time smart activity monitoring system. KSII Trans. Internet Inf. Syst. 2018, 12, 1189–1204.

- Jalal, A.; Kamal, S. Improved Behavior Monitoring and Classification Using Cues Parameters Extraction from Camera Array Images. Int. J. Interact. Multimed. Artif. Intell. 2019, 5, 71.

- Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable sensor-based human behavior understanding and recognition in daily life for smart environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

- Mahmood, M.; Jalal, A.; Sidduqi, M.A. Robust spatio-Temporal features for human interaction recognition via artificial neural network. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT 2018), Islamabad, Pakistan, 17–19 December 2018.

- Jalal, A.; Quaid, M.A.K.; Sidduqi, M.A. A Triaxial acceleration-based human motion detection for ambient smart home system. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 353–358.

- Jalal, A.; Mahmood, M.; Hasan, A.S. Multi-features descriptors for human activity tracking and recognition in Indoor-outdoor environments. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 371–376.

- Jalal, A.; Mahmood, M. Students’ behavior mining in e-learning environment using cognitive processes with information technologies. Educ. Inf. Technol. 2019, 24, 2797–2821.

- Jalal, A.; Nadeem, A.; Bobasu, S. Human Body Parts Estimation and Detection for Physical Sports Movements. In Proceedings of the 2019 2nd International Conference on Communication, Computing and Digital Systems (C-CODE 2019), Islamabad, Pakistan, 6–7 March 2019.

- Jalal, A.; Quaid, M.A.K.; Kim, K. A wrist worn acceleration based human motion analysis and classification for ambient smart home system. J. Electr. Eng. Technol. 2019, 14, 1733–1739.

- Ahmed, A.; Jalal, A.; Kim, K. Region and decision tree-based segmentations for multi-objects detection and classification in outdoor scenes. In Proceedings of the 2019 International Conference on Frontiers of Information Technology (FIT 2019), Islamabad, Pakistan, 16–18 December 2019.

- Rafique, A.A.; Jalal, A.; Kim, K. Statistical multi-objects segmentation for indoor/outdoor scene detection and classification via depth images. In Proceedings of the 2020 17th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 14–18 January 2020; pp. 271–276.

- Ahmed, A.; Jalal, A.; Kim, K. RGB-D images for object segmentation, localization and recognition in indoor scenes using feature descriptor and Hough voting. In Proceedings of the 2020 17th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 14–18 January 2020; pp. 290–295.

- Tamara, S.; Akhter, I.; Suliman, A.; Ghadi, Y.; Jalal, A.; Park, J. Pedestrian Physical Education Training over Visualization Tool. CMC 2022, 73, 2389–2405.

- Quaid, M.A.K.; Jalal, A. Wearable sensors based human behavioral pattern recognition using statistical features and reweighted genetic algorithm. Multimed. Tools Appl. 2020, 79, 6061–6083.

- Nadeem, A.; Jalal, A.; Kim, K. Human Actions Tracking and Recognition Based on Body Parts Detection via Artificial Neural Network. In Proceedings of the 3rd International Conference on Advancements in Computational Sciences (ICACS 2020), Lahore, Pakistan, 17–19 February 2020.

- Badar Ud Din Tahir, S.; Jalal, A.; Batool, M. Wearable Sensors for Activity Analysis using SMO-based Random Forest over Smart home and Sports Datasets. In Proceedings of the 3rd International Conference on Advancements in Computational Sciences (ICACS 2020), Lahore, Pakistan, 17–19 February 2020.

- Rizwan, S.A.; Jalal, A.; Kim, K. An Accurate Facial Expression Detector using Multi-Landmarks Selection and Local Transform Features. In Proceedings of the 2020 3rd International Conference on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, 17–19 February 2020; pp. 1–6.

- Ud din Tahir, S.B.; Jalal, A.; Kim, K. Wearable inertial sensors for daily activity analysis based on adam optimization and the maximum entropy Markov model. Entropy 2020, 22, 579.

- Alam; Abduallah, S.; Akhter, I.; Suliman, A.; Ghadi, Y.; Tamara, S.; Jalal, A. Object detection learning for intelligent self automated vehicles. In Proceedings of the 2019 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Cairo, Egypt, 4–6 September 2019.

- Jalal, A.; Khalid, N.; Kim, K. Automatic recognition of human interaction via hybrid descriptors and maximum entropy markov model using depth sensors. Entropy 2020, 22, 817.

- Batool, M.; Jalal, A.; Kim, K. Telemonitoring of Daily Activity Using Accelerometer and Gyroscope in Smart Home Environments. J. Electr. Eng. Technol. 2020, 15, 2801–2809.

- Jalal, A.; Batool, M.; Kim, K. Stochastic Recognition of Physical Activity and Healthcare Using Tri-Axial Inertial Wearable Sensors. Appl. Sci. 2020, 10, 7122.

- Jalal, A.; Quaid, M.A.K.; Kim, K.; Tahir, S.B.U.D. A Study of Accelerometer and Gyroscope Measurements in Physical Life-Log Activities Detection Systems. Sensors 2020, 20, 6670.

- Rafique, A.A.; Jalal, A.; Kim, K. Automated Sustainable Multi-Object Segmentation and Recognition via Modified Sampling Consensus and Kernel Sliding Perceptron. Symmetry 2020, 12, 1928.

- Ansar, H.; Jalal, A.; Gochoo, M.; Kim, K. Hand Gesture Recognition Based on Auto-Landmark Localization and Reweighted Genetic Algorithm for Healthcare Muscle Activities. Sustainability 2021, 13, 2961.

- Nadeem, A.; Jalal, A.; Kim, K. Automatic human posture estimation for sport activity recognition with robust body parts detection and entropy markov model. Multimed. Tools Appl. 2021, 80, 21465–21498.

- Akhter, I. Automated Posture Analysis of Gait Event Detection via a Hierarchical Optimization Algorithm and Pseudo 2D Stick-Model. Master’s Thesis, Air University, Islamabad, Pakistan, December 2020.

- Ud din Tahir, S.B. A Triaxial Inertial Devices for Stochastic Life-Log Monitoring via Augmented-Signal and a Hierarchical Recognizer. Master’s Thesis, Air University, Islamabad, Pakistan, December 2020.

- Benitez-Garcia, G.; Olivares-Mercado, J.; Sanchez-Perez, G.; Yanai, K. IPN hand: A video dataset and benchmark for real-time continuous hand gesture recognition. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 4340–4347.

- Materzynska, J.; Berger, G.; Bax, I.; Memisevic, R. The jester dataset: A large-scale video dataset of human gestures. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Yamaguchi, O.; Fukui, K. Image-set based Classification using Multiple Pseudo-whitened Mutual Subspace Method. In Proceedings of the 11th International Conference on Pattern Recognition Applications and Methods, Vienna, Austria, 3–5 February 2022.

- Zhou, B.; Andonian, A.; Oliva, A.; Torralba, A. Temporal relational reasoning in videos. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 803–818.

- Gammulle, H.; Denman, S.; Sridharan, S.; Fookes, C. TMMF: Temporal Multi-Modal Fusion for Single-Stage Continuous Gesture Recognition. IEEE Trans. Image Process. 2021, 30, 7689–7701.

- Shi, L.; Zhang, Y.; Hu, J.; Cheng, J.; Lu, H. Gesture recognition using spatiotemporal deformable convolutional representation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1900–1904.

- Kopuklu, O.; Kose, N.; Rigoll, G. Motion fused frames: Data level fusion strategy for hand gesture recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2103–2111.

- Benitez-Garcia, G.; Prudente-Tixteco, L.; Castro-Madrid, L.C.; Toscano-Medina, R.; Olivares-Mercado, J.; Sanchez-Perez, G.; Villalba, L.J.G. Improving real-time hand gesture recognition with semantic segmentation. Sensors 2021, 21, 356.

- Jalal, A.; Batool, M.; Kim, K. Sustainable wearable system: Human behavior modeling for life-logging activities using K-Ary tree hashing classifier. Sustainability 2020, 12, 10324.

Hand Gestures Recognition for Controlling Smart Home Appliances

| Methods | Main Contributions |
|---|---|
| H. Khanh et al. (2019) [24] | The system was developed to control smart home appliances by recognizing hand gestures with two deep learning models fused with mobile sensors. The mobile sensors were embedded in smartwatches, smartphones, and smart appliances, and the deep learning models learned representations of the mobile sensor data. |
| Ransalu et al. (2012) [25] | The HGR model was developed to automate home appliances using hand gestures. First, the hand was detected using the Viola-Jones object detection algorithm. The hand was then segmented from the image using YCbCr skin color segmentation, and the segmented hand was refined using dilation and erosion. Finally, a multilayer perceptron was used to classify four hand gestures: ready, swing, on/speedup, and off. |
| P. N. et al. (2017) [26] | The model consists of a few steps. The hand gestures were captured using a web camera and preprocessed to detect the hands. Corner point detection was used to de-noise the images in a MATLAB simulation. Based on the gestures, different threshold values were used to control the home appliances; these threshold values were generated using the fast Fourier transform algorithm, and the appliances were controlled by microcontrollers. |
| V. Utpal et al. (2011) [27] | To control home appliances and electronic gadgets using hand gestures, the authors detected hands using the YCbCr skin color segmentation model and traced edges. For gesture recognition, the number of fingers was counted and their orientation was analyzed. A reference background was stored from each frame and compared with the next frame for reliable hand gesture recognition. |
| Santhana et al. (2020) [28] | The authors developed a hand gesture recognition system using Leap Motion sensors. The system was customized to recognize multiple motion-based hand gestures for controlling smart home appliances, and a deep neural network was trained on a custom dataset of hand gestures for controlling everyday household devices. |
| Qi et al. (2013) [29] | The authors developed a hand gesture recognition system for controlling a television, divided into three parts. (1) For static hand gesture recognition, hand features were extracted using the histogram of oriented gradients (HOG), and AdaBoost training was used for recognition. (2) For dynamic hand gesture recognition, the hand trajectory was first obtained and then passed to an HMM for recognition. (3) For finger click recognition, a fixed depth threshold was used to detect the fingers, the distance between the palm and each fingertip was calculated, and the accumulated variance of each fingertip was used to recognize the finger click gesture. |
| Yueh et al. (2018) [30] | The authors developed a system to control a TV using hand gestures. First, the hand was detected through skin segmentation and the hand contour was extracted. The system was then trained using a CNN to recognize hand gestures in five categories: (1) menu, (2) direction, (3) go back, (4) mute/unmute, and (5) nothing. The CNN was also used to track the hand joints to detect commands: (1) increase/decrease the speed, (2) clicking, and (3) cursor movement. |

| Hand Gestures | Number of Samples | Plain Background (Correct Samples) | Accuracy (%) | Cluttered Background (Correct Samples) | Accuracy (%) |
|---|---|---|---|---|---|
| POF | 30 | 30 | 100 | 25 | 83.3 |
| PTF | 30 | 30 | 100 | 26 | 86.6 |
| COF | 30 | 30 | 100 | 26 | 86.6 |
| CTF | 30 | 28 | 93.3 | 26 | 86.6 |
| TU | 30 | 29 | 96.6 | 29 | 96.6 |
| TD | 30 | 29 | 96.6 | 30 | 100 |
| TL | 30 | 27 | 90 | 30 | 100 |
| TR | 30 | 28 | 93.3 | 30 | 100 |
| OT | 30 | 29 | 96.6 | 30 | 100 |
| DCOF | 30 | 30 | 100 | 29 | 96.6 |
| DCTF | 30 | 30 | 100 | 29 | 96.6 |
| ZI | 30 | 30 | 100 | 28 | 93.3 |
| ZO | 30 | 29 | 96.6 | 30 | 100 |
| Mean Accuracy Rate | | | 97.1 | | 94.3 |

| Hand Gestures | Number of Samples | Plain Background (Correct Samples) | Accuracy (%) | Cluttered Background (Correct Samples) | Accuracy (%) |
|---|---|---|---|---|---|
| SD | 30 | 30 | 100 | 25 | 83.3 |
| SL | 30 | 29 | 96.6 | 27 | 90 |
| SR | 30 | 28 | 93.3 | 24 | 80 |
| SU | 30 | 28 | 93.3 | 26 | 86.6 |
| TD | 30 | 29 | 96.6 | 24 | 80 |
| TU | 30 | 28 | 93.3 | 30 | 100 |
| ZIF | 30 | 29 | 96.6 | 25 | 83.3 |
| ZOF | 30 | 27 | 90 | 30 | 100 |
| S | 30 | 29 | 96.6 | 25 | 83.3 |
| RF | 30 | 30 | 100 | 29 | 96.6 |
| RB | 30 | 30 | 100 | 23 | 76.6 |
| PI | 30 | 28 | 93.3 | 28 | 93.3 |
| SH | 30 | 29 | 96.6 | 30 | 100 |
| Mean Accuracy Rate | | | 95.6 | | 88.6 |

| Class | POF | PTF | COF | CTF | TU | TD | TL | TR | OT | DCOF | DCTF | ZI | ZO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| POF | 8 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| PTF | 0 | 9 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| COF | 0 | 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| CTF | 0 | 1 | 0 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| TU | 1 | 0 | 0 | 0 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| TD | 0 | 0 | 1 | 0 | 0 | 8 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| TL | 0 | 2 | 0 | 0 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 1 | 0 |
| TR | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 1 | 0 |
| OT | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 1 | 0 | 0 |
| DCOF | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 |
| DCTF | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 1 |
| ZI | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 |
| ZO | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 9 |

Hand gestures classification mean accuracy = 88.46%

| Gestures | SD | SL | SR | SU | TD | TU | ZIF | ZOF | S | RF | RB | PI | SH |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SD | 9 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| SL | 0 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| SR | 0 | 0 | 9 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| SU | 0 | 0 | 1 | 8 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| TD | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| TU | 0 | 0 | 0 | 0 | 1 | 8 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| ZIF | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 0 | 1 | 0 | 0 | 0 |
| ZOF | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 9 | 0 | 0 | 0 | 0 | 0 |
| S | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 1 | 0 |
| RF | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 9 | 0 | 0 | 0 |
| RB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 |
| PI | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 9 | 0 |
| SH | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 7 |

Hand gestures classification mean accuracy = 87.69%
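The mean accuracies reported under the two confusion matrices can be checked directly: overall accuracy is the sum of the diagonal (correct predictions) divided by the total number of test samples. The short Python check below recomputes both figures from the matrix entries; labelling the first matrix as IPN Hand and the second as Jester is an assumption based on where 88.46% and 87.69% appear in the comparison with state-of-the-art methods.

```python
import numpy as np

# Confusion matrices copied from the tables (rows: true class, columns: predicted)
IPN_CM = [
    [8, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0],   # POF
    [0, 9, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],   # PTF
    [0, 0, 10, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],  # COF
    [0, 1, 0, 9, 0, 0, 0, 0, 0, 0, 0, 0, 0],   # CTF
    [1, 0, 0, 0, 9, 0, 0, 0, 0, 0, 0, 0, 0],   # TU
    [0, 0, 1, 0, 0, 8, 0, 0, 0, 1, 0, 0, 0],   # TD
    [0, 2, 0, 0, 0, 0, 7, 0, 0, 0, 0, 1, 0],   # TL
    [1, 0, 0, 0, 0, 0, 0, 8, 0, 0, 0, 1, 0],   # TR
    [0, 0, 0, 0, 0, 0, 0, 0, 9, 0, 1, 0, 0],   # OT
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 0, 0, 0],  # DCOF
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 9, 0, 1],   # DCTF
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 0],  # ZI
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 9],   # ZO
]
JESTER_CM = [
    [9, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0],   # SD
    [0, 9, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0],   # SL
    [0, 0, 9, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0],   # SR
    [0, 0, 1, 8, 1, 0, 0, 0, 0, 0, 0, 0, 0],   # SU
    [0, 0, 0, 0, 10, 0, 0, 0, 0, 0, 0, 0, 0],  # TD
    [0, 0, 0, 0, 1, 8, 0, 0, 1, 0, 0, 0, 0],   # TU
    [0, 0, 0, 0, 0, 0, 9, 0, 0, 1, 0, 0, 0],   # ZIF
    [0, 0, 0, 0, 1, 0, 0, 9, 0, 0, 0, 0, 0],   # ZOF
    [0, 1, 0, 0, 0, 0, 0, 0, 8, 0, 0, 1, 0],   # S
    [0, 0, 0, 0, 1, 0, 0, 0, 0, 9, 0, 0, 0],   # RF
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 0, 0],  # RB
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 9, 0],   # PI
    [0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 7],   # SH
]


def mean_accuracy(confusion):
    """Overall accuracy in percent: correct predictions / all test samples."""
    cm = np.asarray(confusion)
    return 100.0 * np.trace(cm) / cm.sum()


print(f"{mean_accuracy(IPN_CM):.2f}")     # 88.46
print(f"{mean_accuracy(JESTER_CM):.2f}")  # 87.69
```

Because every row holds 10 test samples, the mean of the per-class recalls (diagonal entry divided by row sum) equals the overall accuracy here: 115/130 and 114/130.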

| Authors | IPN Hand Dataset (%) | Authors | Jester Dataset (%) |
|---|---|---|---|
| Yamaguchi et al. (2022) [70] | 60.00 | Zhou et al. (2018) [71] | 82.02 |
| Gammulle et al. (2021) [72] | 80.03 | Shi et al. (2019) [73] | 82.34 |
| Benitez-Garcia et al. (2020) [68] | 82.36 | Kopuklu et al. (2018) [74] | 84.70 |
| TSN [75] | 68.01 | MFFs [76] | 84.70 |
| Proposed method | 88.46 | Proposed method | 87.69 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ansar, H.; Ksibi, A.; Jalal, A.; Shorfuzzaman, M.; Alsufyani, A.; Alsuhibany, S.A.; Park, J. Dynamic Hand Gesture Recognition for Smart Lifecare Routines via K-Ary Tree Hashing Classifier. Appl. Sci. 2022, 12, 6481. https://doi.org/10.3390/app12136481

Ansar H, Ksibi A, Jalal A, Shorfuzzaman M, Alsufyani A, Alsuhibany SA, Park J. Dynamic Hand Gesture Recognition for Smart Lifecare Routines via K-Ary Tree Hashing Classifier. Applied Sciences. 2022; 12(13):6481. https://doi.org/10.3390/app12136481

Chicago/Turabian Style: Ansar, Hira, Amel Ksibi, Ahmad Jalal, Mohammad Shorfuzzaman, Abdulmajeed Alsufyani, Suliman A. Alsuhibany, and Jeongmin Park. 2022. "Dynamic Hand Gesture Recognition for Smart Lifecare Routines via K-Ary Tree Hashing Classifier." Applied Sciences 12, no. 13: 6481. https://doi.org/10.3390/app12136481

APA Style: Ansar, H., Ksibi, A., Jalal, A., Shorfuzzaman, M., Alsufyani, A., Alsuhibany, S. A., & Park, J. (2022). Dynamic Hand Gesture Recognition for Smart Lifecare Routines via K-Ary Tree Hashing Classifier. Applied Sciences, 12(13), 6481. https://doi.org/10.3390/app12136481