Multimodal Natural Human–Computer Interfaces for Computer-Aided Design: A Review Paper

Abstract

1. Introduction

2. Methodology

3. Natural HCI for CAD

3.1. The Reasons Why Natural HCI Is Required for CAD

3.2. The Effects of Using Natural HCI in CAD

1. They can shorten the learning cycle and reduce the difficulty of learning for novice users.

2. They enable a more intuitive and natural interaction process.

3. They can enhance the innovative design ability of designers.

4. Ways to Implement Natural HCI for CAD

4.1. Eye Tracking-Based Interaction for CAD

4.2. Gesture Recognition-Based Interaction for CAD

4.3. Speech Recognition-Based Interaction for CAD

4.4. BCI-Based Interaction for CAD

4.5. Multimodal HCI for CAD

- Different modalities can be used to execute different CAD commands. For example, in [69], eye tracking is used to select CAD models and gestures are used to activate different types of manipulation commands.

- Different modalities can be used to execute the same CAD commands. In other words, the sets of CAD commands that can be implemented through different modalities overlap. For example, models can be created with either BCI-enabled commands or speech commands [3]. In this case, users can choose an interface according to their preferences. Moreover, this overlap improves the robustness and flexibility of the interfaces to a certain extent, because a command remains available through another modality if one is unreliable or unsuitable in a given situation.

- Different modalities can be combined to execute a CAD command synergistically. In this way, the strengths of different interfaces can be leveraged together in a single model manipulation task. For example, in most CAD prototype systems that combine speech and gesture [8,12,64], speech is used to activate specific model manipulation commands (translation, rotation, zooming, etc.), while gestures control the magnitude and direction of the manipulation.
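To make the synergistic pattern concrete, the following Python sketch shows one way a speech channel and a gesture channel could be fused: a spoken keyword selects the manipulation command, and the tracked hand displacement supplies its direction and magnitude. It also includes a trivial resolver for the redundant case, in which either modality may issue the same command. The keyword vocabulary, the `Gesture` displacement format, the axis mapping, and the gain `deg_per_unit` are hypothetical choices made for illustration; they are not taken from the systems cited in [3] or [8,12,64].

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical spoken vocabulary mapping keywords to manipulation commands.
SPEECH_VOCAB = {
    "move": "translate", "translate": "translate",
    "rotate": "rotate", "turn": "rotate",
    "zoom": "zoom", "scale": "zoom",
}


@dataclass
class Gesture:
    """Hand displacement between the start and end of a gesture.

    Units and axes are assumptions (e.g. metres in tracker coordinates,
    x to the right, y up, z towards the user).
    """
    dx: float
    dy: float
    dz: float


@dataclass
class Manipulation:
    command: str               # "translate", "rotate" or "zoom"
    params: Tuple[float, ...]  # command-specific magnitude/direction


def command_from_speech(utterance: str) -> Optional[str]:
    """Return the command for the first recognised keyword, or None."""
    for word in utterance.lower().split():
        if word in SPEECH_VOCAB:
            return SPEECH_VOCAB[word]
    return None


def resolve(speech_cmd: Optional[str], gesture_cmd: Optional[str]) -> Optional[str]:
    """Redundant modalities: either channel may issue the same command.

    If both are present, this sketch simply prefers speech.
    """
    return speech_cmd if speech_cmd is not None else gesture_cmd


def fuse(utterance: str, gesture: Gesture,
         deg_per_unit: float = 180.0) -> Optional[Manipulation]:
    """Synergistic fusion: speech selects the command, the gesture
    supplies its direction and magnitude."""
    command = command_from_speech(utterance)
    if command is None:
        return None  # no command recognised, so the gesture is ignored
    if command == "translate":
        # Move the model by the hand displacement.
        return Manipulation(command, (gesture.dx, gesture.dy, gesture.dz))
    if command == "rotate":
        # Angles in degrees about (x, y, z): vertical hand motion rotates
        # about x, horizontal hand motion rotates about y.
        return Manipulation(command, (gesture.dy * deg_per_unit,
                                      gesture.dx * deg_per_unit, 0.0))
    # "zoom": moving the hand away from the user (negative dz) enlarges;
    # the scale factor is clamped so the model never collapses.
    return Manipulation(command, (max(0.1, 1.0 - gesture.dz),))


if __name__ == "__main__":
    print(fuse("please rotate it", Gesture(dx=0.25, dy=0.0, dz=0.0)))
    print(resolve(None, "rotate"))
```

Running the module prints `Manipulation(command='rotate', params=(0.0, 45.0, 0.0))` followed by `rotate`. A real system would also have to align speech and gesture events in time before fusing them; this sketch assumes they arrive already paired.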

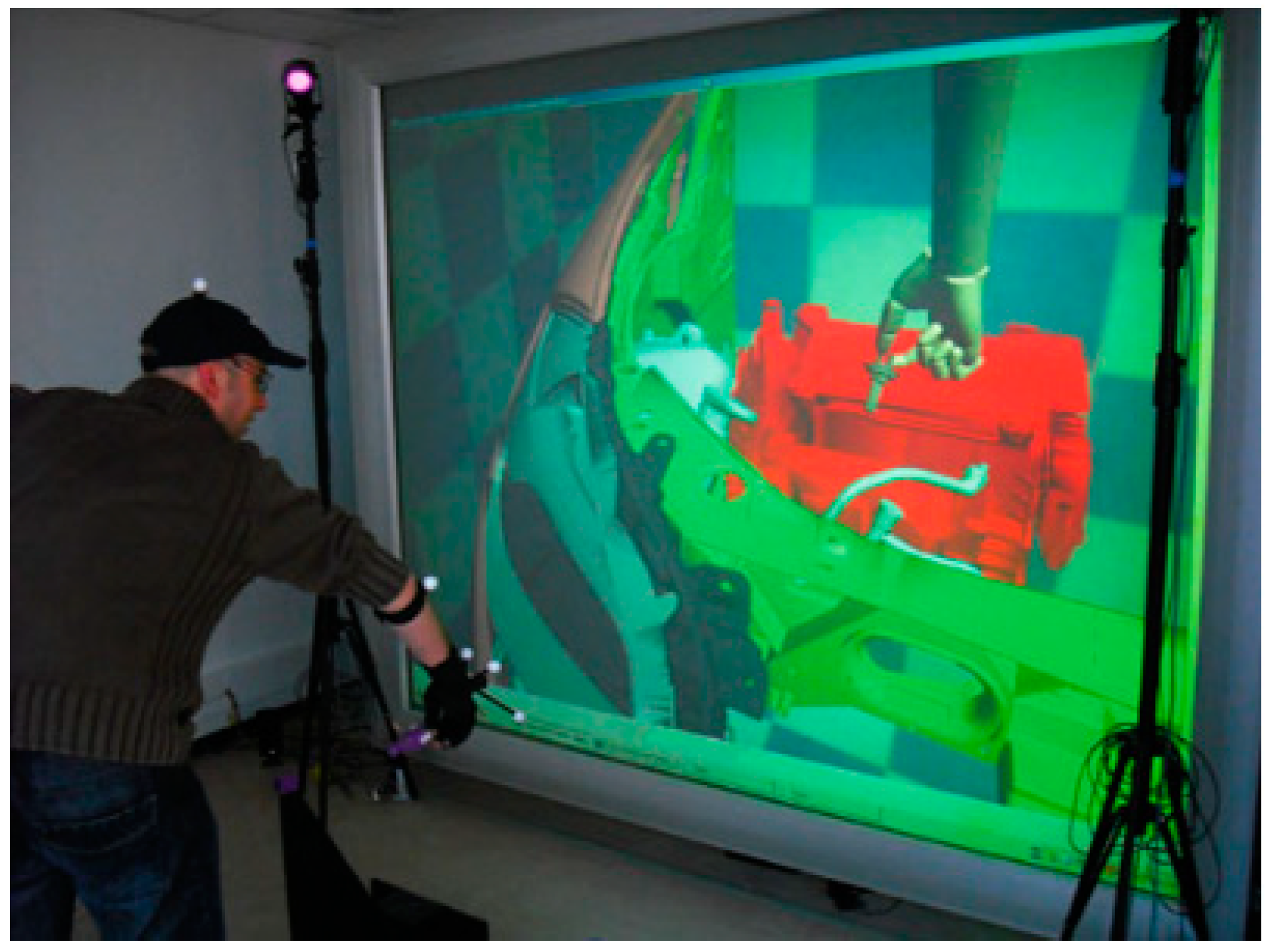

4.6. Other Interfaces for CAD

5. Evaluation of Natural HCI for CAD

- Effectiveness: This metric refers to the recognition accuracy (or error rate) and the completeness of modeling tasks performed with the novel CAD interaction system. To measure accuracy, Song et al. [34] carried out a manipulation test of their multimodal interface and recorded the errors in translation, rotation, and zooming. The results showed that users performed the zooming task with higher accuracy than the translation task.

- Efficiency: This metric is mainly expressed in terms of task completion time, which includes the system response time. According to [117], the time a user spends planning how to perform a creation or modification task through the user interface of a CAD system should be counted as part of the task completion time, in addition to the operation execution time. From their timing tests, Chu et al. [117] found that the novel multimodal interface was 1.5 to 2.0 times faster than the traditional interface (mouse and keyboard) for building simple models, such as blocks, cylinders, and spheres. For more complex models, modeling with the traditional interface took three to four times longer than with the novel interface [117]. The main reason for these differences is that, as model complexity increases, the number of operations required by the traditional interface grows much faster than the number required by the novel interface, and the total task completion time is proportional to the total number of modeling operations. It is worth noting that Chu et al. [117] only considered users with the same level of CAD experience. Future research should compare system performance across users with different experience levels.

- Learnability: This metric describes how easily novice users can interact with a CAD system and accomplish modeling tasks. For multimodal HCIs, learnability is the quality that allows novice users to quickly become familiar with an interface and make good use of all its features and capabilities. User questionnaires are a common method for evaluating the learnability of interaction systems. In [11,64], after completing the tasks, users rated each task on a five-point Likert scale in terms of the ease of performing it and their satisfaction with the modeling results. For a multimodal interface-based CAD system, users rated the ease of performing tasks highly for all tasks except the rotation and modeling tasks [64]. Quantitative parameters can also reflect the learnability of a system. For example, [113] evaluated learnability through the improvement in user performance over task repetitions, measured by task completion time and number of collisions. Chu et al. [117] focused instead on the number of design steps and on the idle time, that is, the period in which the user knows exactly what to do and is planning how to carry it out with the interaction approach. Compared with traditional interfaces, their VR-based multimodal system required fewer design steps and less idle time, which indicates that users could quickly understand which operations were needed and complete the modeling tasks [117].

- User preference: This metric refers to the user's subjective evaluation of how pleasant the novel interface is to use, which depends on user characteristics, both static (e.g., age, gender, and language) and dynamic (e.g., motivation, emotional state, experience, and domain knowledge). It is also an important reflection of user-centered natural interaction design. A multimodal system can offer a set of input and output modalities depending on the interaction environment and functional requirements [115]. User experience and preferences should therefore be fully taken into account when choosing among modalities and when designing each unimodal interface. In [3,118,119], participants were asked about their preferred interaction interface, and most of them favored an immersive multimodal interface for CAD modeling, which offers a more intuitive interactive environment than traditional interfaces because the user does not have to navigate through a series of windows and menus to achieve a desired action. Likewise, unimodal interfaces, such as gesture-based [7] and speech-based [24] interfaces, should be designed around user preferences rather than expert opinion alone.

- Cognitive load: This metric refers to the amount of mental activity imposed on working memory during modeling tasks, including the required information processing capacity and resources. From the perspective of cognitive psychology [120], the product designer can be regarded as a cognitive system that uses CAD systems to model efficiently [35], and the cognitive interaction between designer and system takes place through various types of interfaces. In general, multimodal interfaces can make better use of human cognitive resources and thus impose a lower cognitive load on the user during modeling [115,121]. To verify this, Sharma et al. [71] collected subjective task load data with the NASA TLX method after each task and evaluated users' cognitive load when modeling with multitouch and multimodal systems. Subsequently, qualitative self-reported data and EEG signals were used to analyze users' cognitive load while modeling in a multimodal interface-based system [26]. Compared with expert users, novice users showed increased alpha band activity when using the multimodal interface, which indicates a decrease in their cognitive load [26]; the questionnaire responses confirmed this result. Consequently, novice users found it easier than expert users to adopt a new set of multimodal inputs for modeling. Performance features (e.g., reaction time and error rate) and psycho-physiological parameters (e.g., blink rate and pupil diameter) can also be used to evaluate users' cognitive load [122].

- Physical fatigue: This metric refers to the physical effort required of users to perform modeling tasks with the CAD system. Even if a multimodal interface places users in an intuitive and immersive interactive environment, repeating body movements (e.g., of the arm, hand, or eye) for a long time can cause fatigue and stress [35]. It is therefore necessary to evaluate multimodal interface-based CAD systems by assessing body motion during modeling tasks, so as to reduce fatigue and increase user satisfaction in the design process. Questionnaires are the mainstream method for evaluating the physical fatigue caused by multimodal interfaces [6,64]. Overall body posture, movement patterns, and hand movement distance can also serve as indicators of physical fatigue. Toma et al. [35] used the distance covered by the hand during a task to compare a VR-CAD system with a traditional desktop workspace, and found that the multimodal interface led to higher physical fatigue: on average, the hand movement distance during modeling was 1.6 times that of the desktop interface.
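The quantitative parts of the effectiveness, efficiency, and learnability metrics reduce to simple arithmetic. The Python sketch below shows one way to compute an error rate (effectiveness), a task completion time that counts planning time alongside execution time, as recommended in [117], and the resulting speedup (efficiency), plus a mean Likert rating and a first-to-last-repetition improvement (learnability). The function names and all numbers in the usage example are hypothetical illustrations, not data from the cited studies.

```python
from typing import Sequence


def error_rate(errors: int, trials: int) -> float:
    """Effectiveness: fraction of trials in which an error occurred."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    return errors / trials


def completion_time(planning_s: Sequence[float],
                    execution_s: Sequence[float]) -> float:
    """Efficiency: total task time in seconds, counting the planning
    (idle) time before each operation as well as its execution time."""
    if len(planning_s) != len(execution_s):
        raise ValueError("need one planning and one execution time per operation")
    return sum(planning_s) + sum(execution_s)


def speedup(t_traditional: float, t_novel: float) -> float:
    """How many times faster the novel interface is than the traditional one."""
    return t_traditional / t_novel


def likert_mean(ratings: Sequence[int], points: int = 5) -> float:
    """Learnability (subjective): mean rating on a 1..points Likert scale."""
    if not ratings or any(r < 1 or r > points for r in ratings):
        raise ValueError(f"ratings must be integers in 1..{points}")
    return sum(ratings) / len(ratings)


def repetition_improvement(times_s: Sequence[float]) -> float:
    """Learnability (objective): relative reduction in completion time
    from the first to the last repetition of the same task."""
    first, last = times_s[0], times_s[-1]
    return (first - last) / first


if __name__ == "__main__":
    # Hypothetical model built with 4 operations on a mouse/keyboard
    # interface and 2 operations on a multimodal interface.
    t_trad = completion_time([4.0, 3.0, 5.0, 4.0], [2.0, 2.0, 3.0, 1.0])
    t_multi = completion_time([2.0, 3.0], [4.0, 3.0])
    print(t_trad, t_multi, speedup(t_trad, t_multi))   # 24.0 12.0 2.0
    print(error_rate(3, 20))                           # 0.15
    print(likert_mean([4, 5, 3, 4, 4]))                # 4.0
    print(repetition_improvement([60.0, 48.0, 45.0]))  # 0.25
```

Because `completion_time` sums one planning term and one execution term per operation, the total grows roughly in proportion to the number of operations when per-operation times are similar, which is consistent with the explanation given above for the widening gap on complex models.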

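The cognitive-load and physical-fatigue measures can likewise be sketched in a few lines of Python. The code below computes an unweighted ("raw") NASA-TLX score as the mean of the six subscale ratings, the total path length of a tracked hand trajectory as a fatigue proxy, and the mean spectral power of one EEG channel in the alpha band using a naive discrete Fourier transform. The raw (rather than pairwise-weighted) TLX variant, the 8 to 12 Hz alpha band limits, and the plain periodogram are simplifying assumptions; the studies cited in [26], [35], and [71] may have used different procedures, and practical EEG pipelines normally add filtering, artifact rejection, and a windowed estimator such as Welch's method.

```python
import math
from typing import Dict, Sequence, Tuple

TLX_SUBSCALES = ("mental", "physical", "temporal",
                 "performance", "effort", "frustration")


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Unweighted NASA-TLX: mean of the six 0-100 subscale ratings."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in TLX_SUBSCALES) / len(TLX_SUBSCALES)


def path_length(points: Sequence[Tuple[float, float, float]]) -> float:
    """Fatigue proxy: total distance travelled by a tracked hand,
    summed over consecutive 3D samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def band_power(signal: Sequence[float], fs: float,
               lo: float = 8.0, hi: float = 12.0) -> float:
    """Mean periodogram power of `signal` (sampled at `fs` Hz) over the
    DFT bins whose frequency lies in [lo, hi] Hz; alpha band by default."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    powers = []
    for k in range(1, n // 2 + 1):
        if lo <= k * fs / n <= hi:
            re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
            im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0


if __name__ == "__main__":
    print(raw_tlx({"mental": 60, "physical": 30, "temporal": 40,
                   "performance": 20, "effort": 50, "frustration": 40}))  # 40.0
    # Hypothetical hand traces (in cm) for the same task in two workspaces.
    desktop = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
    vr = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
    print(path_length(vr) / path_length(desktop))  # 3.4
    # Synthetic 2 s test signal: a 10 Hz sine sampled at 128 Hz.
    fs = 128.0
    sig = [math.sin(2 * math.pi * 10 * i / fs) for i in range(256)]
    print(band_power(sig, fs) > band_power(sig, fs, 13.0, 30.0))  # True
```

Following [26], a higher alpha band power would be read as lower cognitive load; the sketch only computes the power and leaves that interpretation, and any comparison between user groups, to the analyst.
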
6. Devices for Natural HCI

6.1. Devices for Eye Tracking

6.2. Devices for Gesture Recognition

6.3. Devices for Speech Recognition

6.4. Devices for EEG Acquisition

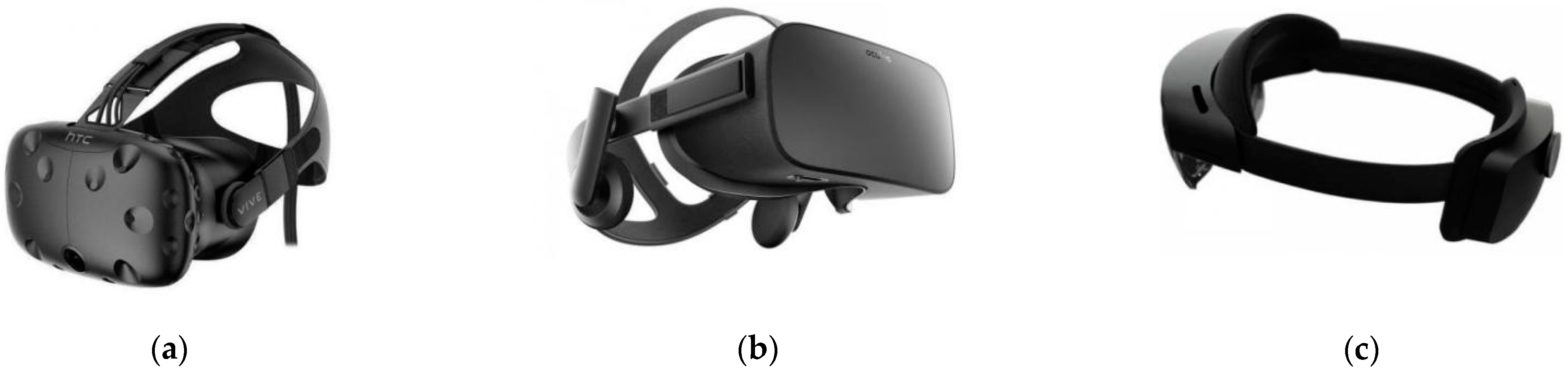

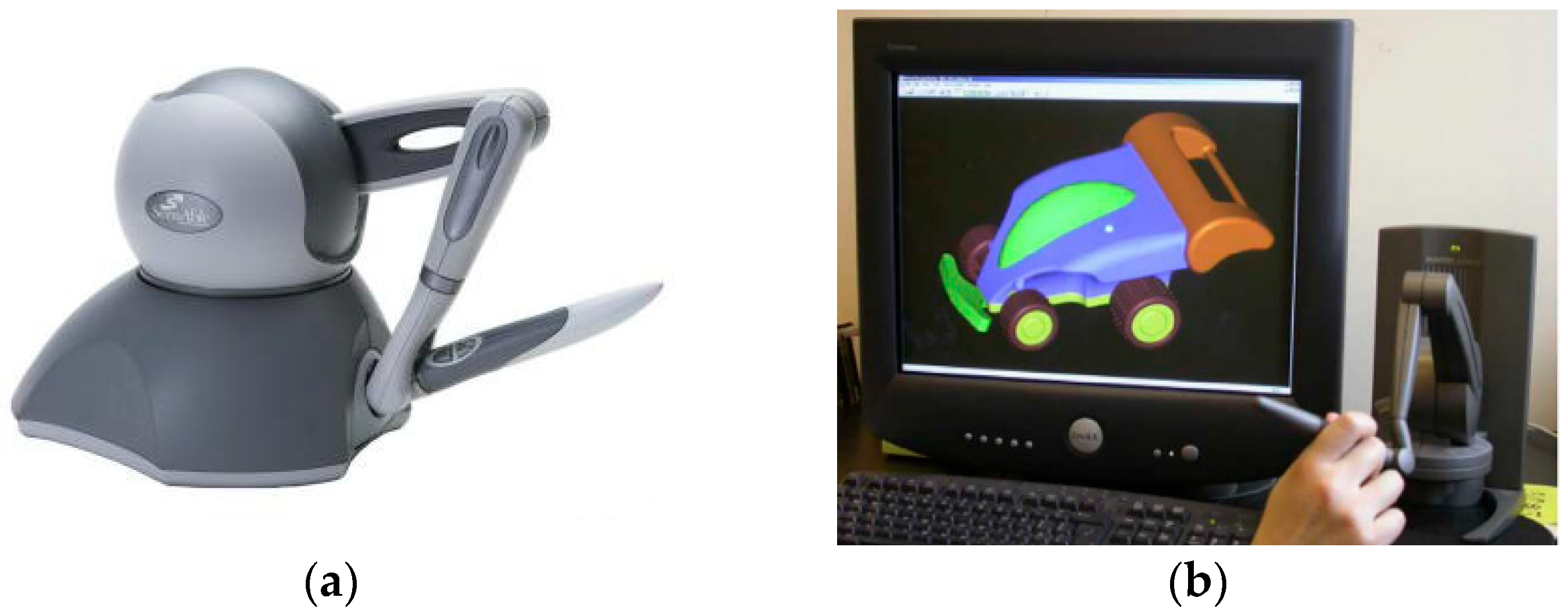

6.5. Other Devices for Natural HCI

7. The Future of Natural HCI for CAD

7.1. Changing from Large-Scale, Standalone Devices to Lightweight, Miniaturized, and Integrated Interaction Devices

7.2. Changing from System-Centered to User-Centered Interaction Patterns

7.3. Changing from Single Discriminant to Multimodal Fusion Analysis for Interaction Algorithms

7.4. Changing from Single-Person Design to Multiuser Collaborative Design for Interaction

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Allen, J.; Kouppas, P. Computer Aided Design: Past, Present, Future. In Design and Designing: A Critical Introduction; BERG: London, UK, 2012; pp. 97–111.

2. Bilgin, M.S.; Baytaroğlu, E.N.; Erdem, A.; Dilber, E. A Review of Computer-Aided Design/Computer-Aided Manufacture Techniques for Removable Denture Fabrication. Eur. J. Dent. 2016, 10, 286–291.

3. Nanjundaswamy, V.G.; Kulkarni, A.; Chen, Z.; Jaiswal, P.; Verma, A.; Rai, R. Intuitive 3D Computer-Aided Design (CAD) System with Multimodal Interfaces. In Proceedings of the 33rd Computers and Information in Engineering Conference, Portland, OR, USA, 4–7 August 2013; ASME: New York, NY, USA, 2013; Volume 2A, p. V02AT02A037.

4. Wu, K.C.; Fernando, T. Novel Interface for Future CAD Environments. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics, The Hague, The Netherlands, 10–13 October 2004; Volume 7, pp. 6286–6290.

5. Huang, J.; Rai, R. Conceptual Three-Dimensional Modeling Using Intuitive Gesture-Based Midair Three-Dimensional Sketching Technique. J. Comput. Inf. Sci. Eng. 2018, 18, 041014.

6. Ryu, K.; Lee, J.J.; Park, J.M. GG Interaction: A Gaze–Grasp Pose Interaction for 3D Virtual Object Selection. J. Multimodal User Interfaces 2019, 13, 383–393.

7. Vuletic, T.; Duffy, A.; McTeague, C.; Hay, L.; Brisco, R.; Campbell, G.; Grealy, M. A Novel User-Based Gesture Vocabulary for Conceptual Design. Int. J. Hum. Comput. Stud. 2021, 150, 102609.

8. Friedrich, M.; Langer, S.; Frey, F. Combining Gesture and Voice Control for Mid-Air Manipulation of CAD Models in VR Environments. In Proceedings of the VISIGRAPP 2021—16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Online, 8–10 February 2021; Volume 2, pp. 119–127.

9. Huang, Y.-C.; Chen, K.-L. Brain-Computer Interfaces (BCI) Based 3D Computer-Aided Design (CAD): To Improve the Efficiency of 3D Modeling for New Users. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Schmorrow, D.D., Fidopiastis, C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10285, pp. 333–344.

10. Toma, M.-I.; Postelnicu, C.; Antonya, C. Multi-Modal Interaction for 3D Modeling. Bull. Transilv. Univ. Brasov. Eng. Sci. 2010, 3, 137–144.

11. Khan, S.; Tunçer, B.; Subramanian, R.; Blessing, L. 3D CAD Modeling Using Gestures and Speech: Investigating CAD Legacy and Non-Legacy Procedures. In Proceedings of the “Hello, Culture”—18th International Conference on Computer-Aided Architectural Design Future (CAAD Future 2019), Daejeon, Korea, 26–28 June 2019; pp. 624–643.

12. Khan, S.; Tunçer, B. Gesture and Speech Elicitation for 3D CAD Modeling in Conceptual Design. Autom. Constr. 2019, 106, 102847.

13. Câmara, A. Natural User Interfaces. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2011; Volume 6946, p. 1.

14. Shao, L.; Shan, C.; Luo, J.; Etoh, M. Multimedia Interaction and Intelligent User Interfaces: Principles, Methods and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

15. Chu, M.; Begole, B. Natural and Implicit Information-Seeking Cues in Responsive Technology. In Human-Centric Interfaces for Ambient Intelligence; Elsevier: Amsterdam, The Netherlands, 2010; pp. 415–452.

16. Karray, F.; Alemzadeh, M.; Abou Saleh, J.; Nours Arab, M. Human-Computer Interaction: Overview on State of the Art. Int. J. Smart Sens. Intell. Syst. 2008, 1, 137–159.

17. Beeby, W. The Future of Integrated CAD/CAM Systems: The Boeing Perspective. IEEE Comput. Graph. Appl. 1982, 2, 51–56.

18. Fetter, W. A Computer Graphic Human Figure System Applicable to Kineseology. In Proceedings of the 15th Design Automation Conference, Las Vegas, NV, USA, 19–21 June 1978; p. 297.

19. Tay, F.E.H.; Roy, A. CyberCAD: A Collaborative Approach in 3D-CAD Technology in a Multimedia-Supported Environment. Comput. Ind. 2003, 52, 127–145.

20. Tornincasa, S.; Di Monaco, F. The Future and the Evolution of CAD. In Proceedings of the 14th International Research/Expert Conference: Trends in the Development of Machinery and Associated Technology, Mediterranean Cruise, 11–18 September 2010; Volume 1, pp. 11–18.

21. Miyazaki, T.; Hotta, Y.; Kunii, J.; Kuriyama, S.; Tamaki, Y. A Review of Dental CAD/CAM: Current Status and Future Perspectives from 20 Years of Experience. Dent. Mater. J. 2009, 28, 44–56.

22. Matta, A.K.; Raju, D.R.; Suman, K.N.S. The Integration of CAD/CAM and Rapid Prototyping in Product Development: A Review. Mater. Today Proc. 2015, 2, 3438–3445.

23. Lichten, L. The Emerging Technology of CAD/CAM. In Proceedings of the 1984 Annual Conference of the ACM on The Fifth Generation Challenge, ACM ’84, San Francisco, CA, USA, 8–14 October 1984; Association for Computing Machinery: New York, NY, USA, 1984; pp. 236–241.

24. Kou, X.Y.; Xue, S.K.; Tan, S.T. Knowledge-Guided Inference for Voice-Enabled CAD. Comput.-Aided Des. 2010, 42, 545–557.

25. Thakur, A.; Rai, R. User Study of Hand Gestures for Gesture Based 3D CAD Modeling. In Proceedings of the ASME Design Engineering Technical Conference, Boston, MA, USA, 2–5 August 2015; Volume 1B-2015, pp. 1–14.

26. Baig, M.Z.; Kavakli, M. Analyzing Novice and Expert User’s Cognitive Load in Using a Multi-Modal Interface System. In Proceedings of the 2018 26th International Conference on Systems Engineering (ICSEng), Sydney, Australia, 18–20 December 2018; pp. 1–7.

27. Esquivel, J.C.R.; Viveros, A.M.; Perry, N. Gestures for Interaction between the Software CATIA and the Human via Microsoft Kinect. In International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2014; pp. 457–462.

28. Sree Shankar, S.; Rai, R. Human Factors Study on the Usage of BCI Headset for 3D CAD Modeling. Comput.-Aided Des. 2014, 54, 51–55.

29. Turk, M. Multimodal Interaction: A Review. Pattern Recognit. Lett. 2014, 36, 189–195.

30. Bhat, R.; Deshpande, A.; Rai, R.; Esfahani, E.T. BCI-Touch Based System: A Multimodal CAD Interface for Object Manipulation. In Volume 12: Systems and Design, Proceedings of the ASME 2013 International Mechanical Engineering Congress and Exposition, San Diego, CA, USA, 15–21 November 2013; American Society of Mechanical Engineers: New York, NY, USA, 2013; p. V012T13A015.

31. Oviatt, S. User-Centered Modeling and Evaluation of Multimodal Interfaces. Proc. IEEE 2003, 91, 1457–1468.

32. Lee, H.; Lim, S.Y.; Lee, I.; Cha, J.; Cho, D.-C.; Cho, S. Multi-Modal User Interaction Method Based on Gaze Tracking and Gesture Recognition. Signal Process. Image Commun. 2013, 28, 114–126.

33. Jaimes, A.; Sebe, N. Multimodal Human Computer Interaction: A Survey. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2005; Volume 3766, pp. 1–15.

34. Song, J.; Cho, S.; Baek, S.Y.; Lee, K.; Bang, H. GaFinC: Gaze and Finger Control Interface for 3D Model Manipulation in CAD Application. Comput.-Aided Des. 2014, 46, 239–245.

35. Toma, M.I.; Gîrbacia, F.; Antonya, C. A Comparative Evaluation of Human Interaction for Design and Assembly of 3D CAD Models in Desktop and Immersive Environments. Int. J. Interact. Des. Manuf. 2012, 6, 179–193.

36. Lawson, B.; Loke, S.M. Computers, Words and Pictures. Des. Stud. 1997, 18, 171–183.

37. Goel, A.K.; Vattam, S.; Wiltgen, B.; Helms, M. Cognitive, Collaborative, Conceptual and Creative—Four Characteristics of the next Generation of Knowledge-Based CAD Systems: A Study in Biologically Inspired Design. Comput.-Aided Des. 2012, 44, 879–900.

38. Robertson, B.F.; Radcliffe, D.F. Impact of CAD Tools on Creative Problem Solving in Engineering Design. Comput.-Aided Des. 2009, 41, 136–146.

39. Jowers, I.; Prats, M.; McKay, A.; Garner, S. Evaluating an Eye Tracking Interface for a Two-Dimensional Sketch Editor. Comput.-Aided Des. 2013, 45, 923–936.

40. Yoon, S.M.; Graf, H. Eye Tracking Based Interaction with 3D Reconstructed Objects. In Proceedings of the 16th ACM International Conference on Multimedia—MM ’08, Vancouver, BC, Canada, 27–31 October 2008; ACM Press: New York, NY, USA, 2008; p. 841.

41. Argelaguet, F.; Andujar, C. A Survey of 3D Object Selection Techniques for Virtual Environments. Comput. Graph. 2013, 37, 121–136.

42. Dave, D.; Chowriappa, A.; Kesavadas, T. Gesture Interface for 3D CAD Modeling Using Kinect. Comput.-Aided Des. Appl. 2013, 10, 663–669.

43. Zhong, K.; Kang, J.; Qin, S.; Wang, H. Rapid 3D Conceptual Design Based on Hand Gesture. In Proceedings of the 2011 3rd International Conference on Advanced Computer Control, Harbin, China, 18–20 January 2011; pp. 192–197.

44. Xiao, Y.; Peng, Q. A Hand Gesture-Based Interface for Design Review Using Leap Motion Controller. In Proceedings of the 21st International Conference on Engineering Design (ICED 17), Vancouver, BC, Canada, 21–25 August 2017; Volume 8, pp. 239–248.

45. Vinayak; Murugappan, S.; Liu, H.; Ramani, K. Shape-It-Up: Hand Gesture Based Creative Expression of 3D Shapes Using Intelligent Generalized Cylinders. Comput.-Aided Des. 2013, 45, 277–287.

46. Vinayak; Ramani, K. A Gesture-Free Geometric Approach for Mid-Air Expression of Design Intent in 3D Virtual Pottery. Comput.-Aided Des. 2015, 69, 11–24.

47. Huang, J.; Rai, R. Hand Gesture Based Intuitive CAD Interface. In Proceedings of the ASME 2014 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Buffalo, NY, USA, 17–20 August 2014.

48. Kou, X.Y.; Liu, X.C.; Tan, S.T. Quadtree Based Mouse Trajectory Analysis for Efficacy Evaluation of Voice-Enabled CAD. In Proceedings of the 2009 IEEE International Conference on Virtual Environments, Human-Computer Interfaces and Measurements Systems, Hong Kong, China, 11–13 May 2009; pp. 196–201.

49. Samad, T.; Director, S.W. Towards a Natural Language Interface for CAD. In Proceedings of the 22nd ACM/IEEE Design Automation Conference, Las Vegas, NV, USA, 23–26 June 1985; pp. 2–8.

50. Salisbury, M.W.; Hendrickson, J.H.; Lammers, T.L.; Fu, C.; Moody, S.A. Talk and Draw: Bundling Speech and Graphics. Computer 1990, 23, 59–65.

51. Kou, X.Y.; Tan, S.T. Design by Talking with Computers. Comput.-Aided Des. Appl. 2008, 5, 266–277.

52. Menegotto, J.L. A Framework for Speech-Oriented CAD and BIM Systems. In Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2015; Volume 527, pp. 329–347.

53. Behera, A.K.; McKay, A. Designs That Talk and Listen: Integrating Functional Information Using Voice-Enabled CAD Systems. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017.

54. Xue, S.; Kou, X.Y.; Tan, S.T. Natural Voice-Enabled CAD: Modeling via Natural Discourse. Comput.-Aided Des. Appl. 2009, 6, 125–136.

55. Plumed, R.; González-Lluch, C.; Pérez-López, D.; Contero, M.; Camba, J.D. A Voice-Based Annotation System for Collaborative Computer-Aided Design. J. Comput. Des. Eng. 2021, 8, 536–546.

56. Trejo, L.J.; Rosipal, R.; Matthews, B. Brain-Computer Interfaces for 1-D and 2-D Cursor Control: Designs Using Volitional Control of the EEG Spectrum or Steady-State Visual Evoked Potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 225–229.

57. Esfahani, E.T.; Sundararajan, V. Using Brain Computer Interfaces for Geometry Selection in CAD Systems: P300 Detection Approach. In Proceedings of the 31st Computers and Information in Engineering Conference, Parts A and B, Washington, DC, USA, 28–31 August 2011; Volume 2, pp. 1575–1580.

58. Esfahani, E.T.; Sundararajan, V. Classification of Primitive Shapes Using Brain-Computer Interfaces. Comput.-Aided Des. 2012, 44, 1011–1019.

59. Huang, Y.C.; Chen, K.L.; Wu, M.Y.; Tu, Y.W.; Huang, S.C.C. Brain-Computer Interface Approach to Computer-Aided Design: Rotate and Zoom in/out in 3ds Max via Imagination. In Proceedings of the International Conferences Interfaces and Human Computer Interaction 2015 (IHCI 2015), Los Angeles, CA, USA, 2–7 August 2015; Game and Entertainment Technologies 2015 (GET 2015), Las Palmas de Gran Canaria, Spain, 22–24 July 2015, Computer Graphics, Visualization, Computer Vision and Image Processing 2015 (CGVCVIP 2015), Las Palmas de Gran Canaria, Spain, 22–24 July 2015; pp. 319–322.

60. Postelnicu, C.; Duguleana, M.; Garbacia, F.; Talaba, D. Towards P300 Based Brain Computer Interface for Computer Aided Design. In Proceedings of the 11th EuroVR 2014, Bremen, Germany, 8–10 December 2014; pp. 2–6.

61. Verma, A.; Rai, R. Creating by Imagining: Use of Natural and Intuitive BCI in 3D CAD Modeling. In Proceedings of the 33rd Computers and Information in Engineering Conference, Portland, OR, USA, 4–7 August 2013; American Society of Mechanical Engineers: New York, NY, USA, 2013; Volume 2A.

62. Moustakas, K.; Tzovaras, D. MASTER-PIECE: A Multimodal (Gesture + Speech) Interface for 3D Model Search and Retrieval Integrated in a Virtual Assembly Application. In Proceedings of the eNTERFACE’05 Summer Workshop on Multimodal Interfaces, Mons, Belgium, 12–18 August 2005; pp. 1–14.

63. Bolt, R.A. Put-That-There. In Proceedings of the 7th Annual Conference on Computer Graphics and Interactive Techniques—SIGGRAPH ’80, Seattle, WA, USA, 14–18 July 1980; ACM Press: New York, NY, USA, 1980; pp. 262–270.

64. Khan, S.; Rajapakse, H.; Zhang, H.; Nanayakkara, S.; Tuncer, B.; Blessing, L. GesCAD: An Intuitive Interface for Conceptual Architectural Design. In Proceedings of the 29th Australian Conference on Computer-Human Interaction, Brisbane, Australia, 28 November–1 December 2017; ACM: New York, NY, USA, 2017; pp. 402–406.

65. Hauptmann, A.G. Speech and Gestures for Graphic Image Manipulation. ACM SIGCHI Bull. 1989, 20, 241–245.

66. Arangarasan, R.; Gadh, R. Geometric Modeling and Collaborative Design in a Multi-Modal Multi-Sensory Virtual Environment. In Proceedings of the ASME 2000 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Baltimore, MD, USA, 10–13 September 2000; pp. 10–13.

67. Chu, C.-C.P.; Dani, T.H.; Gadh, R. Multi-Sensory User Interface for a Virtual-Reality-Based Computer-Aided Design System. Comput.-Aided Des. 1997, 29, 709–725.

68. Chu, C.-C.P.; Dani, T.H.; Gadh, R. Multimodal Interface for a Virtual Reality Based Computer Aided Design System. In Proceedings of the International Conference on Robotics and Automation, Albuquerque, NM, USA, 20–25 April 1997; Volume 2, pp. 1329–1334.

69. Pouke, M.; Karhu, A.; Hickey, S.; Arhippainen, L. Gaze Tracking and Non-Touch Gesture Based Interaction Method for Mobile 3D Virtual Spaces. In Proceedings of the 24th Australian Computer-Human Interaction Conference on OzCHI ’12, Melbourne, Australia, 26–30 November 2012; ACM Press: New York, NY, USA, 2012; pp. 505–512.

70. Shafiei, S.B.; Esfahani, E.T. Aligning Brain Activity and Sketch in Multi-Modal CAD Interface. In Proceedings of the ASME 2014 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Buffalo, NY, USA, 17–20 August 2014; Volume 1A, pp. 1–7.

71. Sharma, A.; Madhvanath, S. MozArt: A Multimodal Interface for Conceptual 3D Modeling. In Proceedings of the 13th International Conference on Multimodal Interfaces, Alicante, Spain, 14–18 November 2011; pp. 3–6.

72. Bourdot, P.; Convard, T.; Picon, F.; Ammi, M.; Touraine, D.; Vézien, J.-M. VR–CAD Integration: Multimodal Immersive Interaction and Advanced Haptic Paradigms for Implicit Edition of CAD Models. Comput.-Aided Des. 2010, 42, 445–461.

73. Mogan, G.; Talaba, D.; Girbacia, F.; Butnaru, T.; Sisca, S.; Aron, C. A Generic Multimodal Interface for Design and Manufacturing Applications. In Proceedings of the 2nd International Workshop Virtual Manufacturing (VirMan08), Torino, Italy, 6–8 October 2008.

74. Stark, R.; Israel, J.H.; Wöhler, T. Towards Hybrid Modelling Environments—Merging Desktop-CAD and Virtual Reality-Technologies. CIRP Ann.-Manuf. Technol. 2010, 59, 179–182.

75. Ren, X.; Zhang, G.; Dai, G. An Experimental Study of Input Modes for Multimodal Human-Computer Interaction. In Proceedings of the International Conference on Multimodal Interfaces, Beijing, China, 14–16 October 2000; Volume 1, pp. 49–56.

76. Jaimes, A.; Sebe, N. Multimodal Human–Computer Interaction: A Survey. Comput. Vis. Image Underst. 2007, 108, 116–134.

77. Hutchinson, T.E. Eye-Gaze Computer Interfaces: Computers That Sense Eye Position on the Display. Computer 1993, 26, 65–66.

78. Sharma, C.; Dubey, S.K. Analysis of Eye Tracking Techniques in Usability and HCI Perspective. In Proceedings of the 2014 International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 5–7 March 2014; pp. 607–612.

79. Brooks, R.; Meltzoff, A.N. The Development of Gaze Following and Its Relation to Language. Dev. Sci. 2005, 8, 535–543.

80. Duchowski, A.T. A Breadth-First Survey of Eye-Tracking Applications. Behav. Res. Methods Instrum. Comput. 2002, 34, 455–470.

- Kumar, M.; Paepcke, A.; Winograd, T. Eyepoint: Practical Pointing and Selection Using Gaze and Keyboard. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 421–430. [Google Scholar]

- Majaranta, P.; Räihä, K.-J. Twenty Years of Eye Typing: Systems and Design Issues. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, New Orleans, LA, USA, 25–27 March 2002; pp. 15–22. [Google Scholar]

- Wobbrock, J.O.; Rubinstein, J.; Sawyer, M.W.; Duchowski, A.T. Longitudinal Evaluation of Discrete Consecutive Gaze Gestures for Text Entry. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; pp. 11–18. [Google Scholar]

- Hornof, A.J.; Cavender, A. EyeDraw: Enabling Children with Severe Motor Impairments to Draw with Their Eyes. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; pp. 161–170. [Google Scholar]

- Wu, C.-I. HCI and Eye Tracking Technology for Learning Effect. Procedia-Soc. Behav. Sci. 2012, 64, 626–632. [Google Scholar] [CrossRef] [Green Version]

- Hekele, F.; Spilski, J.; Bender, S.; Lachmann, T. Remote Vocational Learning Opportunities—A Comparative Eye-Tracking Investigation of Educational 2D Videos versus 360° Videos for Car Mechanics. Br. J. Educ. Technol. 2022, 53, 248–268. [Google Scholar] [CrossRef]

- Dickie, C.; Vertegaal, R.; Sohn, C.; Cheng, D. EyeLook: Using Attention to Facilitate Mobile Media Consumption. In Proceedings of the 18th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 23–26 October 2005; pp. 103–106. [Google Scholar]

- Nagamatsu, T.; Yamamoto, M.; Sato, H. MobiGaze: Development of a Gaze Interface for Handheld Mobile Devices. In Proceedings of the Human Factors in Computing Systems, New York, NY, USA, 10–15 April 2010; pp. 3349–3354. [Google Scholar] [CrossRef]

- Miluzzo, E.; Wang, T.; Campbell, A.T. Eyephone: Activating Mobile Phones with Your Eyes. In Proceedings of the Second ACM SIGCOMM Workshop on Networking, Systems, and Applications on Mobile Handhelds, New Delhi, India, 30 August 2010; pp. 15–20. [Google Scholar]

- Franslin, N.M.F.; Ng, G.W. Vision-Based Dynamic Hand Gesture Recognition Techniques and Applications: A Review. In Proceedings of the 8th International Conference on Computational Science and Technology, Labuan, Malaysia, 28–29 August 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 125–138.

- Tumkor, S.; Esche, S.K.; Chassapis, C. Hand Gestures in CAD Systems. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, San Diego, CA, USA, 15–21 November 2013; American Society of Mechanical Engineers: New York, NY, USA, 2013; Volume 56413, p. V012T13A008.

- Florin, G.; Butnariu, S. Design Review of CAD Models Using a NUI Leap Motion Sensor. J. Ind. Des. Eng. Graph. 2015, 10, 21–24.

- Kaur, H.; Rani, J. A Review: Study of Various Techniques of Hand Gesture Recognition. In Proceedings of the 1st IEEE International Conference on Power Electronics, Intelligent Control and Energy Systems, ICPEICES 2016, Delhi, India, 4–6 July 2016; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5.

- Fuge, M.; Yumer, M.E.; Orbay, G.; Kara, L.B. Conceptual Design and Modification of Freeform Surfaces Using Dual Shape Representations in Augmented Reality Environments. CAD Comput. Aided Des. 2012, 44, 1020–1032.

- Pareek, S.; Sharma, V.; Esfahani, E.T. Human Factor Study in Gesture Based CAD Environment. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Boston, MA, USA, 2–5 August 2015; Volume 1B-2015, pp. 1–6.

- Huang, J.; Jaiswal, P.; Rai, R. Gesture-Based System for Next Generation Natural and Intuitive Interfaces. In Artificial Intelligence for Engineering Design, Analysis and Manufacturing-AIEDAM; Cambridge University Press: Cambridge, UK, 2019; Volume 33, pp. 54–68.

- Clark, L.; Doyle, P.; Garaialde, D.; Gilmartin, E.; Schlögl, S.; Edlund, J.; Aylett, M.; Cabral, J.; Munteanu, C.; Edwards, J.; et al. The State of Speech in HCI: Trends, Themes and Challenges. Interact. Comput. 2019, 31, 349–371.

- Gao, S.; Wan, H.; Peng, Q. Approach to Solid Modeling in a Semi-Immersive Virtual Environment. Comput. Graph. 2000, 24, 191–202.

- Rezeika, A.; Benda, M.; Stawicki, P.; Gembler, F.; Saboor, A.; Volosyak, I. Brain–Computer Interface Spellers: A Review. Brain Sci. 2018, 8, 57.

- Amiri, S.; Fazel-Rezai, R.; Asadpour, V. A Review of Hybrid Brain-Computer Interface Systems. Adv. Hum.-Comput. Interact. 2013, 2013, 187024.

- Pfurtscheller, G.; Leeb, R.; Keinrath, C.; Friedman, D.; Neuper, C.; Guger, C.; Slater, M. Walking from Thought. Brain Res. 2006, 1071, 145–152.

- Lécuyer, A.; Lotte, F.; Reilly, R.B.; Leeb, R.; Hirose, M.; Slater, M. Brain-Computer Interfaces, Virtual Reality, and Videogames. Computer 2008, 41, 66–72.

- Zhao, Q.; Zhang, L.; Cichocki, A. EEG-Based Asynchronous BCI Control of a Car in 3D Virtual Reality Environments. Chin. Sci. Bull. 2009, 54, 78–87.

- Li, Y.; Wang, C.; Zhang, H.; Guan, C. An EEG-Based BCI System for 2D Cursor Control. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008; pp. 2214–2219.

- Chun, J.; Bae, B.; Jo, S. BCI Based Hybrid Interface for 3D Object Control in Virtual Reality. In Proceedings of the 4th International Winter Conference on Brain-Computer Interface—BCI 2016, Gangwon, Korea, 22–24 February 2016; pp. 19–22.

- Esfahani, E.T.; Sundararajan, V. Using Brain–Computer Interfaces to Detect Human Satisfaction in Human–Robot Interaction. Int. J. Hum. Robot. 2011, 8, 87–101.

- Sebe, N. Multimodal Interfaces: Challenges and Perspectives. J. Ambient Intell. Smart Environ. 2009, 1, 23–30.

- Dumas, B.; Lalanne, D.; Oviatt, S. Multimodal Interfaces: A Survey of Principles, Models and Frameworks; Springer: Berlin/Heidelberg, Germany, 2009; pp. 3–26.

- Ye, J.; Campbell, R.I.; Page, T.; Badni, K.S. An Investigation into the Implementation of Virtual Reality Technologies in Support of Conceptual Design. Des. Stud. 2006, 27, 77–97.

- Liu, X.; Dodds, G.; McCartney, J.; Hinds, B.K. Manipulation of CAD Surface Models with Haptics Based on Shape Control Functions. CAD Comput. Aided Des. 2005, 37, 1447–1458.

- Zhu, W. A Methodology for Building up an Infrastructure of Haptically Enhanced Computer-Aided Design Systems. J. Comput. Inf. Sci. Eng. 2008, 8, 041004-1–041004-11.

- Picon, F.; Ammi, M.; Bourdot, P. Case Study of Haptic Methods for Selection on CAD Models. In Proceedings of the 2008 IEEE Virtual Reality Conference, Reno, NV, USA, 8–12 March 2008; pp. 209–212.

- Chamaret, D.; Ullah, S.; Richard, P.; Naud, M. Integration and Evaluation of Haptic Feedbacks: From CAD Models to Virtual Prototyping. Int. J. Interact. Des. Manuf. 2010, 4, 87–94.

- Kind, S.; Geiger, A.; Kießling, N.; Schmitz, M.; Stark, R. Haptic Interaction in Virtual Reality Environments for Manual Assembly Validation. Procedia CIRP 2020, 91, 802–807.

- Wechsung, I.; Engelbrecht, K.-P.; Kühnel, C.; Möller, S.; Weiss, B. Measuring the Quality of Service and Quality of Experience of Multimodal Human–Machine Interaction. J. Multimodal User Interfaces 2012, 6, 73–85.

- Zeeshan Baig, M.; Kavakli, M. A Survey on Psycho-Physiological Analysis & Measurement Methods in Multimodal Systems. Multimodal Technol. Interact. 2019, 3, 37.

- Chu, C.C.; Mo, J.; Gadh, R. A Quantitative Analysis on Virtual Reality-Based Computer Aided Design System Interfaces. J. Comput. Inf. Sci. Eng. 2002, 2, 216–223.

- Gîrbacia, F. Evaluation of CAD Model Manipulation in Desktop and Multimodal Immersive Interface. Appl. Mech. Mater. 2013, 327, 289–293.

- Feeman, S.M.; Wright, L.B.; Salmon, J.L. Exploration and Evaluation of CAD Modeling in Virtual Reality. Comput.-Aided Des. Appl. 2018, 15, 892–904.

- Community, I.; Davide, F. 5 Perception and Cognition in Immersive Virtual Reality. 2003, pp. 71–86. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.433.9220&rep=rep1&type=pdf (accessed on 10 May 2020).

- Wickens, C.D. Multiple Resources and Mental Workload. Hum. Factors 2008, 50, 449–455.

- Zhang, L.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Multimodal Fusion for Cognitive Load Measurement in an Adaptive Virtual Reality Driving Task for Autism Intervention. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2015; Volume 9177, pp. 709–720.

- Wickens, C.D.; Gordon, S.E.; Liu, Y.; Lee, J. An Introduction to Human Factors Engineering; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2004; Volume 2.

- Goodman, E.; Kuniavsky, M.; Moed, A. Observing the User Experience: A Practitioner’s Guide to User Research. IEEE Trans. Prof. Commun. 2013, 56, 260–261.

- Cutugno, F.; Leano, V.A.; Rinaldi, R.; Mignini, G. Multimodal Framework for Mobile Interaction. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Capri Island, Italy, 21–25 May 2012; pp. 197–203.

- Ergoneers Dikablis Glasses 3 Eye Tracker. Available online: https://www.jalimedical.com/dikablis-glasses-eye-tracker.php (accessed on 10 May 2020).

- Tobii Pro Glasses 2—Discontinued. Available online: https://www.tobiipro.com/product-listing/tobii-pro-glasses-2/ (accessed on 10 May 2020).

- Pupil Labs Core Hardware Specifications. Available online: https://imotions.com/hardware/pupil-labs-glasses/ (accessed on 10 May 2020).

- GP3 Eye Tracker. Available online: https://www.gazept.com/product/gazepoint-gp3-eye-tracker/ (accessed on 10 May 2020).

- Tobii Pro Spectrum. Available online: https://www.tobiipro.com/product-listing/tobii-pro-spectrum/ (accessed on 10 May 2020).

- EyeLink 1000 Plus. Available online: https://www.sr-research.com/eyelink-1000-plus/ (accessed on 10 May 2020).

- MoCap Pro Gloves. Available online: https://stretchsense.com/solution/gloves/ (accessed on 10 May 2020).

- CyberGlove Systems. Available online: http://www.cyberglovesystems.com/ (accessed on 10 May 2020).

- VRTRIX. Available online: http://www.vrtrix.com/ (accessed on 10 May 2020).

- Kinect from Wikipedia. Available online: https://en.wikipedia.org/wiki/Kinect (accessed on 10 May 2020).

- Leap Motion Controller. Available online: https://www.ultraleap.com/product/leap-motion-controller/ (accessed on 10 May 2020).

- Satori, H.; Harti, M.; Chenfour, N. Introduction to Arabic Speech Recognition Using CMUSphinx System. arXiv 2007, arXiv:0704.2083.

- NeuroSky. Available online: https://store.neurosky.com/ (accessed on 10 May 2020).

- Muse (Headband) from Wikipedia. Available online: https://en.wikipedia.org/wiki/Muse_(headband) (accessed on 10 May 2020).

- VIVE Pro 2. Available online: https://www.vive.com/us/product/vive-pro2-full-kit/overview/ (accessed on 10 May 2020).

- Meta Quest. Available online: https://www.oculus.com/rift-s/ (accessed on 10 May 2020).

- Microsoft HoloLens 2. Available online: https://www.microsoft.com/en-us/hololens (accessed on 10 May 2020).

- Sigut, J.; Sidha, S.-A. Iris Center Corneal Reflection Method for Gaze Tracking Using Visible Light. IEEE Trans. Biomed. Eng. 2010, 58, 411–419.

- Chennamma, H.R.; Yuan, X. A Survey on Eye-Gaze Tracking Techniques. Indian J. Comput. Sci. Eng. 2013, 4, 388–393.

- Ma, C.; Baek, S.-J.; Choi, K.-A.; Ko, S.-J. Improved Remote Gaze Estimation Using Corneal Reflection-Adaptive Geometric Transforms. Opt. Eng. 2014, 53, 53112.

- Dodge, R.; Cline, T.S. The Angle Velocity of Eye Movements. Psychol. Rev. 1901, 8, 145.

- Stuart, S.; Hickey, A.; Vitorio, R.; Welman, K.; Foo, S.; Keen, D.; Godfrey, A. Eye-Tracker Algorithms to Detect Saccades during Static and Dynamic Tasks: A Structured Review. Physiol. Meas. 2019, 40, 02TR01.

- Chen, C.H.; Monroy, C.; Houston, D.M.; Yu, C. Using Head-Mounted Eye-Trackers to Study Sensory-Motor Dynamics of Coordinated Attention, 1st ed.; Elsevier: Amsterdam, The Netherlands, 2020; Volume 254.

- Lake, S.; Bailey, M.; Grant, A. Method and Apparatus for Analyzing Capacitive EMG and IMU Sensor Signals for Gesture Control. U.S. Patent 9,299,248, 29 March 2016.

- Wang, Z.; Cao, J.; Liu, J.; Zhao, Z. Design of Human-Computer Interaction Control System Based on Hand-Gesture Recognition. In Proceedings of the 2017 32nd Youth Academic Annual Conference of Chinese Association of Automation (YAC), Hefei, China, 19–21 May 2017; pp. 143–147.

- Grosshauser, T. Low Force Pressure Measurement: Pressure Sensor Matrices for Gesture Analysis, Stiffness Recognition and Augmented Instruments. In Proceedings of the NIME 2008, Genova, Italy, 5–7 June 2008; pp. 97–102.

- Sawada, H.; Hashimoto, S. Gesture Recognition Using an Acceleration Sensor and Its Application to Musical Performance Control. Electron. Commun. Jpn. 1997, 80, 9–17.

- Suarez, J.; Murphy, R.R. Hand Gesture Recognition with Depth Images: A Review. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; Volume 2012, pp. 411–417.

- Abu Shariah, M.A.M.; Ainon, R.N.; Zainuddin, R.; Khalifa, O.O. Human Computer Interaction Using Isolated-Words Speech Recognition Technology. In Proceedings of the ICIAS 2007: International Conference on Intelligent and Advanced Systems, Kuala Lumpur, Malaysia, 25–28 November 2007; pp. 1173–1178.

- Zhang, J.; Zhang, M. A Speech Recognition System Based Improved Algorithm of Dual-Template HMM. Procedia Eng. 2011, 15, 2286–2290.

- Chang, S.-Y.; Morgan, N. Robust CNN-Based Speech Recognition with Gabor Filter Kernels. In Proceedings of the Fifteenth Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014.

- Soltau, H.; Liao, H.; Sak, H. Neural Speech Recognizer: Acoustic-to-Word LSTM Model for Large Vocabulary Speech Recognition. arXiv 2016, arXiv:1610.09975.

- Tyagi, A. A Review of EEG Sensors Used for Data Acquisition. Electron. Instrum. 2012, 13–18. Available online: https://www.researchgate.net/profile/Sunil-Semwal/publication/308259085_A_Review_of_Eeg_Sensors_used_for_Data_Acquisition/links/57df321408ae72d72eac238e/A-Review-of-Eeg-Sensors-used-for-Data-Acquisition.pdf (accessed on 10 May 2020).

- Pinegger, A.; Wriessnegger, S.C.; Faller, J.; Müller-Putz, G.R. Evaluation of Different EEG Acquisition Systems Concerning Their Suitability for Building a Brain–Computer Interface: Case Studies. Front. Neurosci. 2016, 10, 441.

- Zerafa, R.; Camilleri, T.; Falzon, O.; Camilleri, K.P. A Comparison of a Broad Range of EEG Acquisition Devices–Is There Any Difference for SSVEP BCIs? Brain-Comput. Interfaces 2018, 5, 121–131.

- Liu, Y.; Jiang, X.; Cao, T.; Wan, F.; Mak, P.U.; Mak, P.-I.; Vai, M.I. Implementation of SSVEP Based BCI with Emotiv EPOC. In Proceedings of the 2012 IEEE International Conference on Virtual Environments Human-Computer Interfaces and Measurement Systems (VECIMS), Tianjin, China, 2–4 July 2012; pp. 34–37.

- Guger, C.; Krausz, G.; Allison, B.Z.; Edlinger, G. Comparison of Dry and Gel Based Electrodes for P300 Brain–Computer Interfaces. Front. Neurosci. 2012, 6, 60.

- Hirose, S. A VR Three-Dimensional Pottery Design System Using PHANTOM Haptic Devices. In Proceedings of the 4th PHANToM Users Group Workshop, Dedham, MA, USA, 9–12 October 1999.

- Nikolakis, G.; Tzovaras, D.; Moustakidis, S.; Strintzis, M.G. CyberGrasp and PHANTOM Integration: Enhanced Haptic Access for Visually Impaired Users. In Proceedings of the SPECOM’2004 9th Conference Speech and Computer, Saint-Petersburg, Russia, 20–22 September 2004; pp. x1–x7.

- Wee, C.; Yap, K.M.; Lim, W.N. Haptic Interfaces for Virtual Reality: Challenges and Research Directions. IEEE Access 2021, 9, 112145–112162.

- Kumari, P.; Mathew, L.; Syal, P. Increasing Trend of Wearables and Multimodal Interface for Human Activity Monitoring: A Review. Biosens. Bioelectron. 2017, 90, 298–307.

- Oviatt, S.; Cohen, P.; Wu, L.; Duncan, L.; Suhm, B.; Bers, J.; Holzman, T.; Winograd, T.; Landay, J.; Larson, J.; et al. Designing the User Interface for Multimodal Speech and Pen-Based Gesture Applications: State-of-the-Art Systems and Future Research Directions. Hum.-Comput. Interact. 2000, 15, 263–322.

- Sharma, R.; Pavlovic, V.I.; Huang, T.S. Toward Multimodal Human-Computer Interface. Proc. IEEE 1998, 86, 853–869.

- Sunar, A.W.I.M.S. Multimodal Fusion: Gesture and Speech Input in Augmented Reality Environment. Adv. Intell. Syst. Comput. 2015, 331, 255–264.

- Poh, N.; Kittler, J. Multimodal Information Fusion. In Multimodal Signal Processing; Elsevier: Amsterdam, The Netherlands, 2010; pp. 153–169.

- Lalanne, D.; Nigay, L.; Palanque, P.; Robinson, P.; Vanderdonckt, J.; Ladry, J.-F. Fusion Engines for Multimodal Input. In Proceedings of the 2009 International Conference on Multimodal Interfaces, Cambridge, MA, USA, 2–4 November 2009; p. 153.

- Zissis, D.; Lekkas, D.; Azariadis, P.; Papanikos, P.; Xidias, E. Collaborative CAD/CAE as a Cloud Service. Int. J. Syst. Sci. Oper. Logist. 2017, 4, 339–355.

- Nam, T.J.; Wright, D. The Development and Evaluation of Syco3D: A Real-Time Collaborative 3D CAD System. Des. Stud. 2001, 22, 557–582.

- Kim, M.J.; Maher, M.L. The Impact of Tangible User Interfaces on Spatial Cognition during Collaborative Design. Des. Stud. 2008, 29, 222–253.

- Shen, Y.; Ong, S.K.; Nee, A.Y.C. Augmented Reality for Collaborative Product Design and Development. Des. Stud. 2010, 31, 118–145.

| Category of Interfaces | Modalities | References |
|---|---|---|
| Unimodal | Eye Tracking | [39,40,41] |
| | Gesture | [5,7,25,27,42,43,44,45,46,47] |
| | Speech | [24,48,49,50,51,52,53,54,55] |
| | BCI | [9,28,56,57,58,59,60,61] |
| Multimodal | Gesture + Speech | [8,11,12,62,63,64,65,66,67,68] |
| | Gesture + Eye Tracking | [6,34,69] |
| | Gesture + BCI | [70] |
| | Gesture + Speech + BCI | [3] |
| Others | | [30,71,72,73,74,75] |

| Signal Modalities | Categories | Devices |
|---|---|---|
| Eye tracking | Head-mounted | Dikablis Glass 3.0 [126], Tobii Pro Glasses 2 [127], Pupil Labs Glasses [128] |
| | Tabletop | Gazepoint GP3 [129], Tobii Pro Spectrum 150 [130], EyeLink 1000 Plus [131] |
| Gesture | Sensor-based | MoCap Pro Glove [132], CyberGlove [133], VRTRIX Glove [134] |
| | Vision-based | Kinect [135], Leap Motion [44,136] |
| Speech | | DP-100 Connected Speech Recognition System (CSRS) [63], Microsoft Speech API (SAPI) [54], CMUSphinx [137] |
| EEG | Saline electrode | Emotiv EPOC+ [3,58] |
| | Wet electrode | Neuroscan [56] |
| | Dry electrode | NeuroSky MindWave [138], InteraXon Muse [139] |
| Others | Haptic | SensAble PHANToM [110], SPIDAR [113] |
| | VR/AR | HTC Vive [140], Oculus Rift [141], HoloLens 2 [142] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Niu, H.; Van Leeuwen, C.; Hao, J.; Wang, G.; Lachmann, T. Multimodal Natural Human–Computer Interfaces for Computer-Aided Design: A Review Paper. Appl. Sci. 2022, 12, 6510. https://doi.org/10.3390/app12136510

Niu H, Van Leeuwen C, Hao J, Wang G, Lachmann T. Multimodal Natural Human–Computer Interfaces for Computer-Aided Design: A Review Paper. Applied Sciences. 2022; 12(13):6510. https://doi.org/10.3390/app12136510

Chicago/Turabian Style: Niu, Hongwei, Cees Van Leeuwen, Jia Hao, Guoxin Wang, and Thomas Lachmann. 2022. "Multimodal Natural Human–Computer Interfaces for Computer-Aided Design: A Review Paper" Applied Sciences 12, no. 13: 6510. https://doi.org/10.3390/app12136510

APA Style: Niu, H., Van Leeuwen, C., Hao, J., Wang, G., & Lachmann, T. (2022). Multimodal Natural Human–Computer Interfaces for Computer-Aided Design: A Review Paper. Applied Sciences, 12(13), 6510. https://doi.org/10.3390/app12136510