1. Introduction

The detection of surface defects of automotive engine parts is an important step in manufacturing quality assurance. If the automobile has defects that may cause personal injury and property damage, the automobile manufacturer will recall the products. The State Administration for Market Regulation (SAMR) of China statistics indicate that 3.71 million automobiles were recalled because of engine defects in 2021 alone, accounting for 42.5% of all recalls that year [

1]. The detection of surface defects is often performed manually with the aid of special equipment by workers trained to recognize surface defects. However, this type of inspection can be inefficient and lacks stability, which directly affects the manufacturing quality.

Although the classical machine vision methods can solve these problems to a certain extent [

2,

3], with the emergence of Industry 4.0, conventional machine vision methods have become unable to meet flexibility requirements. In the classical machine vision methods, features are processed manually to adapt to a specific field, but their performances depend on the operator’s experience. For this reason, deep learning-based methods with an automatic feature selection have attracted great attention recently.

A convolutional neural network (CNN) is one of the basic deep learning models, and it was proposed in 1995 by LeCun [

4]. In CNN, a number of convolution layers and pooling layers are used to process the input data. The CNN training is typically performed using the backpropagation (BP) algorithm, and the classification task is completed by a fully connected (FC) layer. In 2006, Hinton et al. [

5] formally proposed the concept of deep learning and paved a path to the research on deep learning in the detection field. In 2012, the Alex Net proposed by Krizhevsky et al. [

6] excelled in the Image Net LSVRC-2012 competition with a top-five error rate of 15.3%. However, a large size of the convolution kernel of this network causes high computation complexity and further restricts an increase in the network layer number. To address this problem, Simonyan et al. [

7] further investigated network depth and proposed a deeper VGG Net model. This model uses multiple small convolution kernels instead of one large convolution kernel and ensures the feature extraction performance of the network by deepening the network layers. This model performed well in the 2014 Image Net competition, but a further increase in the network depth was limited by a gradient phenomenon. To further increase the network depth, He et al. [

8] proposed the Res Net model, where the output of the previous network layer is mapped to the next layer by identity mapping, forming a shortcut connection; this model won in the classification task of the ILSVRC-2015 competition and achieved good results in detection and segmentation tasks.

To apply the various models to different applications of flaw detection and defect classification, many in-depth studies of different network structures have been conducted, and promising research results have been achieved. Girshick et al. [

9] published their seminal work on deep learning and R-CNN-based target detection. They used a selective search algorithm to generate candidate regions, and using the CNN, they achieved a mean average precision (MAP) of 53.3% on the VOC2012 dataset. They also proposed a Fast R-CNN containing a region of interest (RoI) pooling layer [

10]. This method has further improved the detection precision, but the detection framework of this method could not achieve the end-to-end training, which limited further improvement in the detection rate. To that end, Redmon [

11,

12] and Farhadi et al. [

13] proposed the YOLO (You Only Look Once) series network, which uses a grid to divide an image into regions where the target is detected independently. Although this allows inverse propagation of the loss function through the network and increases the training and detection speeds, it results in poor adaptability to intensive and small object detection. To solve this problem, Ren et al. [

14] added a region proposal network (RPN) to the Fast R-CNN and achieved the end-to-end training. This has simultaneously increased the detection precision and training speed, but at the time, there was no framework to implement target detection and case segmentation at the same time. In view of this, He et al. [

15] expanded the Fast R-CNN model by adding a parallel branch of target prediction mask to the boundary framework recognition branch and obtained a good performance.

In the production process of the factory, defect detection based on machine vision uses a computer to process the acquired images, which need the support of special image processing analysis and classification software. The images are usually acquired by one or more cameras at the inspection site. The position of the camera is usually fixed. In general, industrial automation systems are designed to inspect known objects only at fixed locations. By lighting and arranging the scene properly, it is convenient to receive the image features for processing and classification. These features are also known in advance. When processing is highly time-constrained or computation-intensive and exceeds the processing power of the main processor, more powerful hardware devices (e.g., DSPs or FPGAs) are used to accelerate processing [

16]. Based on this defect framework, Czimmermann et al. [

17] reviewed the vision-based defect detection methods, including traditional methods and the latest deep learning-based defect detection methods. To promote intelligent development in factories further, Huang et al. [

18] proposed a smart factory architecture to introduce deep learning technology and the Internet of Things into automated defect detection applications in factories. Lian et al. [

19] proposed a defect detection method that combines the generative adversarial network and CNN to ensure the detection accuracy of minor surface defects by generating enlarged defect image samples. Tabernik et al. [

20] proposed a segmentation deep learning-based method to detect surface cracks. The experiments have shown that this method can achieve a high detection accuracy by using approximately 25–30 defective samples. Recently, there have been few studies on the surface defect detection of automobile engine parts. Since automotive engine parts are small in size (approximately 2.8 cm in length and 1 cm in diameter), their surface defect lengths are of the millimeter order typically, which makes them difficult to recognize visually under normal lighting without using specialized auxiliary equipment. In addition, there are no open-source or reference datasets for the analysis of these types of defects. Furthermore, experimental surface defect detections conducted with the acquired data of automotive engine parts have shown that the two commonly used frameworks in the detection field, the Faster R-CNN and Mask R-CNN, perform poorly in surface defect detection, especially for small defects, due to the lack of targeted analysis of defect features.

Considering all that is mentioned, this paper constructs a surface defect dataset of automobile engine parts using surface defect images with the resolution of three million real pixels acquired on engine parts using a 1080P HDMI high-definition digital microscope and labels the acquired data to optimize the network. Then, a suitable anchor size for the detection segmentation scale of the Mask R-CNN is determined using the labeled data to improve the small defect detection capability. Namely, the appropriate anchor scales for surface defect detection of automotive engine parts are selected by labeled data analysis. Finally, the selected anchor scales are added to the Mask R-CNN structure, proposing an improved anchor Mask R-CNN (IA-Mask R-CNN). Comparative experiments have proven that the proposed model is effective in detecting surface defects of automotive engine parts.

The rest of the paper is organized as follows. The basic structure of the Mask R-CNN and the anchor design of the IA-Mask R-CNN are introduced in detail in

Section 2. The acquisition and production of data, the expansion of the dataset, and the comparison experiment results are presented in

Section 3. The discussion is given in

Section 4. Finally, we conclude our paper in

Section 5.

2. Proposed Methods

In this section, the basic structure of the Mask R-CNN is first described in detail. Then, the limitations of the Mask R-CNN model in defect detection of automotive engine parts are analyzed. After that, the optimal anchor scales are obtained by labeled data analysis and the network performances under different anchor scales combinations are compared to verify the effectiveness of the optimal anchor scale. Finally, an improved anchor network (IA-Mask R-CNN) is proposed to improve the defect detection accuracy of automotive engine parts.

2.1. Mask R-CNN

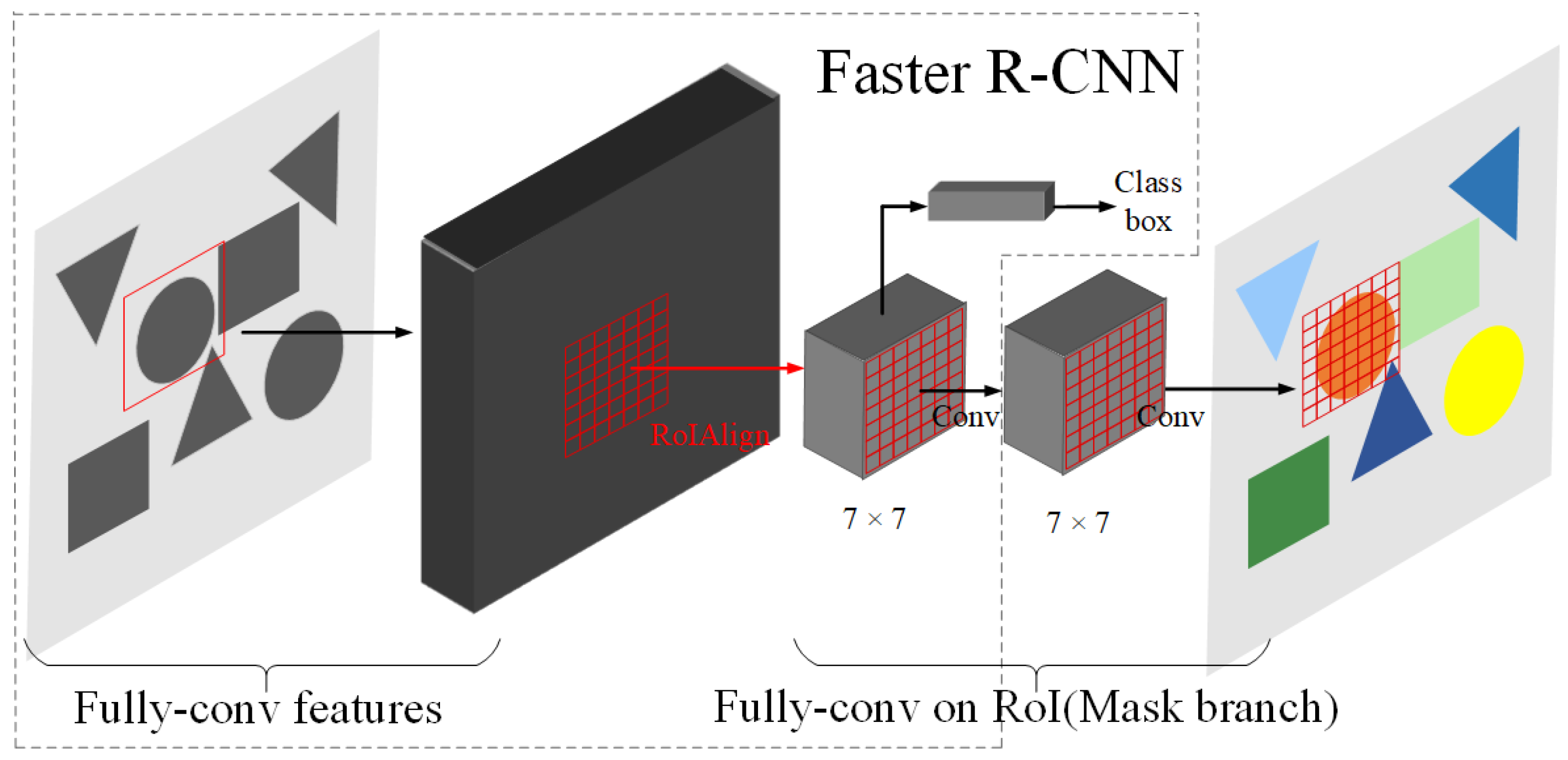

On the basis of the Faster R-CNN, the Mask R-CNN adds a parallel target prediction mask branch to the edge recognition branch. This allows the network to effectively detect a target in an image and also generates a high-quality segmentation mask for each case. In addition, it provides a conceptually simple but versatile segmentation framework of targets, as shown in

Figure 1. This includes a convolution backbone network for feature extraction over the entire image, a bounding box used in the classification and regression of RoI, and the network head of the forecast mask.

The mask branch added to the Mask R-CNN is applied to the small-size fully convolutional network (FCN) of each region proposals. This branch predicts segmentation masks in a pixel-to-pixel manner. Meantime, faced with the problem that the RoI pooling cannot achieve the pixel-to-pixel alignment between the input and output of the Faster R-CNN, this paper proposes a simple quantization-free layer, the RoIAlign layer, to ensure the spatial position accuracy. On this basis, the Mask R-CNN performs segmentation forecast on the mask and classification and independently predicts a binary mask for each classification forecast and also performs the classification using the RoI classification branch of the network.

The Mask R-CNN performs two processes: (1) extracts region proposals using the RPN, and (2) outputs a binary mask for each RoI using the mask branch. These processes are conducted in parallel with the prediction of the bounding-boxes offset. During training, the multi-task loss of each sampled RoI is calculated by:

where

Lmask denotes a mask loss,

Lcls is the classification loss, which represents a logarithmic number between the target and non-target, and

Lbox is the regression loss, which is calculated by:

where

R is the robust loss function

smoothL1, which is expressed as:

For the regression loss, the following four parameterized coordinates are used:

where

x,

y,

w, and

h represent the two coordinates at the center, width, and height of the box, respectively;

x,

xa, and

x* correspond to the predicted, anchor, and ground-truth boxes (similarly for

y,

w, and

h), respectively. Therefore, it may be regarded as the bounding box regression from an anchor box to a nearby ground-truth box.

The mask branch has a Km2-dimensional output for each RoI, with each output encoding K binary masks with a resolution of m × m, where K is the number of categories. Using the per-pixel sigmoid, Lmask is defined as a mean cross-entropy loss. For the RoI related to the ground-truth category k, Lmask is defined only for the kth mask while the other masks’ outputs do not contribute to the loss. The definition of Lmask allows the network to generate masks for each category without competition. The segmentation is decomposed into two branches, the classification branch and mask branch. The mask branch corresponding to the output is obtained using the class label predicted by the classification branch.

The RoIAlign layer eliminates the quantization of the RoIPooling layer and accurately aligns the extracted features with the input, i.e., any quantization of the RoI boundaries or bins is avoided; for instance,

x/16 is used instead of [

x/16] when VGG16 is used as a backbone network. The exact values of the input features of the four uniformly sampled points in each RoI bin are calculated by bilinear interpolation, and the results are compiled by the max-pooling. The RoIPooling and RoIAlign are presented in

Figure 2.

2.2. Surface Defect Detection of Automobile Engine Parts Based on Mask R-CNN

The surface defect detection framework of automotive engine parts based on the Mask R-CNN is designed using the testing procedure shown in

Figure 3. First, local images of automotive engine parts are collected by a 1080P high-definition digital microscope. The image size is 1280 × 720 pixels. Then, the convolutional characteristics of certain features of representative images are extracted by the backbone network. The features are then input into the RPN and branches with masks. This is followed by the acquisition of high-confidence detection frames in the RPN layer and using the Faster R-CNN to obtain the region information of multiple masks on the mask branch. Finally, the input image, the corresponding detection frame, and the mask information are displayed at the same time.

For a backbone network, this study selects the deeper ResNet101 network to ensure the feature extraction capability. This network can achieve residual mapping while providing a shortcut connection to achieve identity mapping, which solves the problem of accuracy decrease with the network depth. The structure of the ResNet101 is displayed in

Figure 4.

In the Mask R-CNN, the number of anchor scales is increased to five to accommodate targets of more scales and thus increase the detection accuracy. The anchor scales of (32, 64, 128, 256, 512) provided by the feature pyramid network (FPN) are used [

21]. Tests conducted on the constructed dataset have shown that this design of the anchor scales still had poor detection performance for small targets. As shown in

Figure 5, surface defects in the yellow circle were not detected. To solve this problem, the anchor design is improved by using the suitable anchor scales for minor defect detection. The most suitable anchor size is obtained by labeled data analysis. In addition, using the appropriate anchor scales, the performance of the Mask R-CNN in detecting small defects is improved.

2.3. IA-Mask R-CNN

The distribution of labeled data plays an important role in the design of the network. Optimizing the network design according to the statistics and analysis of labeled data can accelerate the convergence and improve the target detection ability of the network. Therefore, we have made statistics on the width and height of the labeled data in the collected dataset, as shown in

Figure 6a,b. Moreover, the statistical results are further analyzed on several common anchor scales, as shown in

Table 1.

The anchor scales combination adopted by Mask R-CNN is (32, 64, 128, 256, 512). Combined with

Figure 6 and

Table 1, it can be found that the combination method of Mask R-CNN can cover 94.80% of the samples in height, but only 56.41% in width. The mismatch between the anchor design and the size of the labeled data directly leads to the poor performance of Mask R-CNN in engine surface defect detection. In order to solve this problem, this paper improves the anchor design, the anchor scales are set as (8, 16, 32, 64, 128, 256). This combination can cover 100% in height and 99.87% in width. In addition, the statistical analysis of the anchor ratios is shown in

Figure 6c. The anchor ratios adopted by Mask R-CNN is (0.5, 1, 2), which can cover 95.91% of the samples. Therefore, these ratios are also adopted in our method. In order to verify the effectiveness of designing anchor scales based on labeled data distribution, we consider different anchor scales and compare the convergence performance, as shown in

Figure 7.

By comparing the convergence performances of the five networks with different anchor scales, 20 epochs of training were conducted, and the results are presented in

Table 2, where A represents the baseline, B represents one half of the baseline, C represents a quarter of the baseline, D stands for one-eighth of the baseline, and E stands for one-sixteenth of the baseline. By comparing the results of a total of six statistics, including the total loss and the loss of each part, it has been found that under the anchor scales of (8, 16, 32, 64, 128), the network has a lower convergence value regarding the five aspects, but the convergence of the baseline is poor from all aspects. This result verifies the influence of the anchor scales on the network convergence and shows that labeled data analysis is useful for network design. Therefore, the anchor scales of (8, 16, 32, 64, 128) are added to the Mask R-CNN framework. The Mask R-CNN with the improved anchor design is named IA-Mask R-CNN.

3. Experimental Results and Analysis

The equipment used in constructing the dataset was a 1080P HDMI high-definition digital microscope. All comparative experiments were conducted in the Pycharm2018 development environment using the Python 3.6 programming language. With the exception of the scripts of the JSON files for batch processing (.bash files) and labelme generation, which were written in Notepad++, all other scripts for data preprocessing (.py files) were written using Python3.6 in Pycharm2018. The dataset in the PASCAL VOC format was preprocessed by the labelImg labeling tool before being used in the Faster R-CNN, whereas the dataset in the COCO format was preprocessed by the labelImg labeling tool before being used in the Mask R-CNN. The deep learning environments included: CUDA 9.0.176, cuDNN 7.0.5, Tensorflow_GPU-1.7.0, Tensorboard-1.7.0, and Keras-2.2.4. The loss curve and the network structure diagram were monitored in Tensor board. Due to the limitation in laboratory equipment, the GPU used in the experiment was an NVIDIA GeForce GTX 1050Ti with a video memory of 4 GB.

3.1. Automotive Part Defect Data

The automotive engine parts produced by the Tianjin engine part factory had a length of only 2.8 cm and a diameter of 1 cm, as shown in

Figure 8. The surface defects of the parts included bruise damages and machine marks. In practice, to remove unqualified parts from production, workers on the production line must visually identify millimeter-length surface defects of produced parts using a magnifying glass. However, due to a large number of produced parts on a daily basis, the efficiency of this method of inspection is very low.

To improve the efficiency of defect detection and enable the factory to realize intelligent detection, first, a camera sensor capable of capturing high-definition images of the produced parts was considered. A 1080P HDMI digital microscope, shown in

Figure 9, was selected for acquiring high-definition images of the parts. The image sensor was capable of producing three million pixels with a static camera resolution of 1280 × 720 and a magnification of 10–220 times. The manual focusing range was from zero to 150 mm.

To improve the recognition of a defect area, an independently developed light source is used together with the camera sensor to obtain an image acquisition dataset. We design the independently developed light source based on the light emitting diode (LED) powered by a lithium battery. The volume of this light source is 305 mm × 49 mm × 28 mm, weighing only 200 g, which can be conveniently fixed on the side of the digital microscope. The entire equipment set is shown in

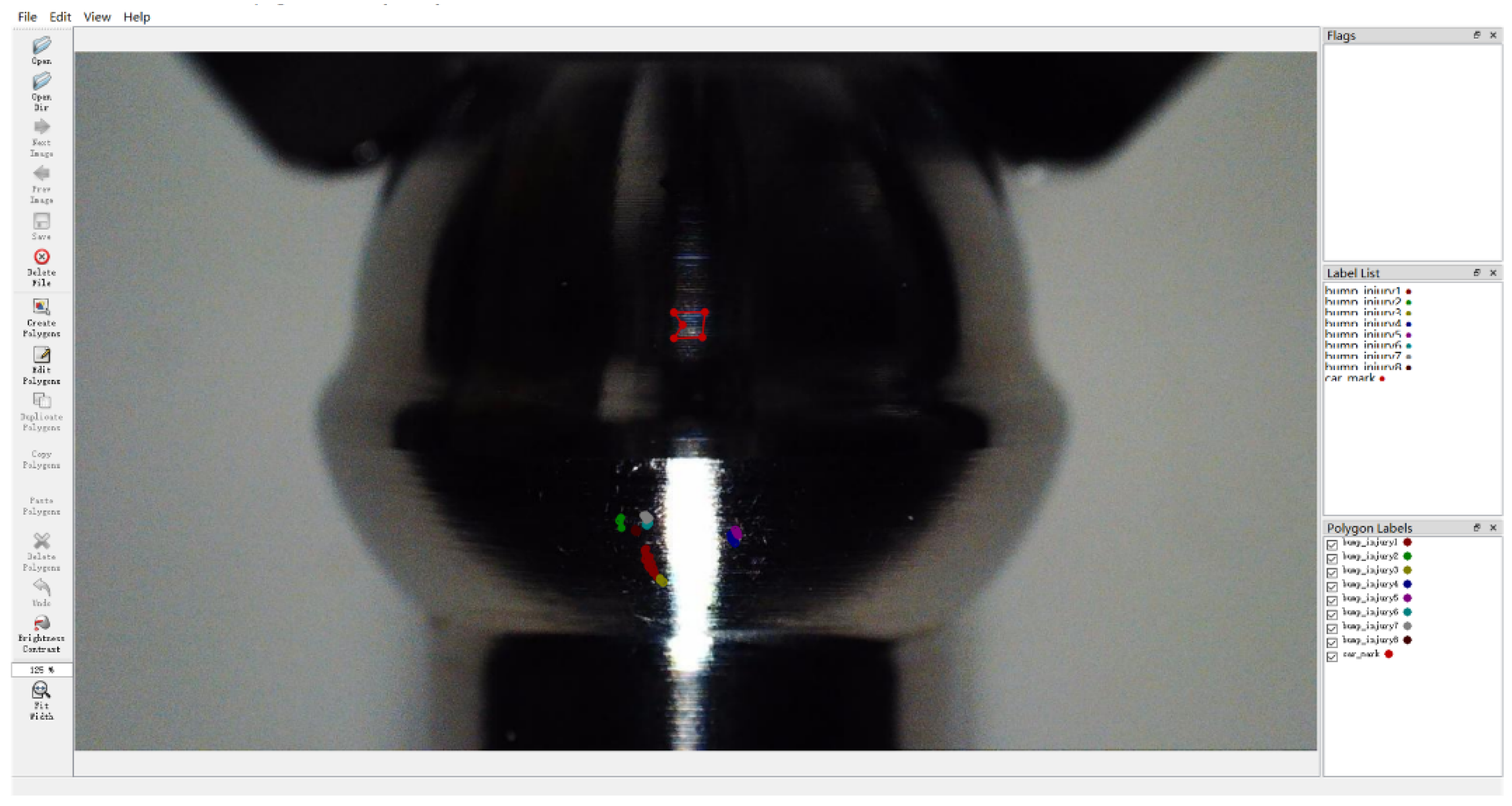

Figure 10. Local image acquisition of bruise damage and machine marks was performed on 560 engine parts, and 560 valid images were obtained. The two categories of defects, namely the bruise damage and machine mark, were manually labeled to validate the effectiveness of the proposed method experimentally. The labeling diagram of automotive engine parts data using the labelImg tool is presented in

Figure 11.

3.2. Quantitative Analysis

To verify the effectiveness of the improved anchor points, after the dataset was constructed, 500 randomly selected labeled images were used for training, and the remaining 60 labeled images were used for testing. The surface defect detection accuracies of automotive engine parts of the Faster R-CNN, Mask R-CNN, and proposed IA-Mask R-CNN models were tested. Due to the limitation of the GPU memory, the batch size in the experiment was set to one. The VGG16 and ResNet101 were first pre-trained on the COCO dataset that contained 80 image categories [

22]. The networks were then fine-tuned using the pre-training weights and, finally, the Faster R-CNN was iterated 80,000 times and the Mask R-CNN and IA-Mask R-CNN were iterated 10,000 times. The quantitative results are shown in

Table 3.

By quantitatively comparing the results of the three detection models, it was observed that, after adding the mask branch, the Mask R-CNN and IA-Mask R-CNN performed better in detecting surface defects of automotive engine parts than the Faster R-CNN and required a shorter training time. In addition, the number of iterations had decreased, and a higher precision was achieved with the support of the ResNet101. After improving the anchor scales, the IA-Mask R-CNN did not require additional training parameters compared to the Mask R-CNN. Under the same pre-training model, training time, and iteration number, the improved network achieved the best accuracy among all the detection models, thus validating the effectiveness of the improved anchor scales.

3.3. Qualitative Analysis

For the purpose of a more intuitive presentation of the comparison results of the three models, part of the test results on the test set are presented and compared qualitatively, as shown in

Figure 12. Since no mask branch was added to the Faster R-CNN, the detected defect area could give only an approximate range, and the detection results were not detailed. Although the Mask R-CNN could achieve detailed defect area detection, it did not consider small targets and also ignored the effect of anchor scales, resulting in missed detections. In contrast, the proposed IA-Mask R-CNN, with the improved anchor design, could detect all defects, even small ones, such as those in the red circle in the bottom row of

Figure 12. In addition, even for relatively large defect areas, the IA-Mask R-CNN had a better detection performance than the Mask R-CNN, as shown in the blue circle in the bottom row of

Figure 12, which further validated the effectiveness of the proposed detection model.