Abstract

In this work, an improved dynamic convolutional neural network (DCNN) model for accurately identifying the risk of a forest fire was established based on the traditional DCNN model. First, the DCNN model was trained in combination with transfer learning, and multiple pre-trained DCNN models were used to extract features from forest fire images. Second, principal component analysis (PCA) reconstruction technology was used in the appropriate subspace. The constructed 15-layer forest fire risk identification DCNN model, named “DCN_Fire”, could accurately identify core fire risk areas. Moreover, the original and enhanced image data sets were used to evaluate the impact of data enhancement on the model’s accuracy. The recognition speed and accuracy of the improved model were compared with those of three other DCNN models with different architectures. The difficulty of using a DCNN to monitor forest fire risk was addressed, and the model’s detection accuracy was further improved. The true positive rate was 7.41% and the false positive rate was 4.8%. When the impact of different batch sizes and loss rates on verification accuracy was examined, a loss rate of 0.5 and a batch size of 50 gave the DCN_Fire model its highest verification accuracy (0.983). The analysis results showed that the improved DCNN model had excellent recognition speed and accuracy and could accurately recognize and classify the risk of a forest fire under natural light conditions, thereby providing a technical reference for preventing and tackling forest fires.

1. Introduction

Forests are an indispensable link in the ecological chain and an important ecosystem in nature, and damaging them can seriously affect the Earth’s ecological environment. Forest protection plays an important role in sustainable human development, and preventing forest fires is of the utmost importance [1].

Research shows that forest fire risk monitoring plays a key role in preventing forest fires [2,3]. The monitoring steps are (1) identifying hot spots, (2) assessing fire risks, (3) identifying areas vulnerable to forest fires, (4) recognizing manmade infrastructure, and (5) examining the meteorological conditions. These are considered influential parameters when establishing a model to assess the risk of a forest fire [4,5,6,7]. Generally speaking, current methods for identifying forest fire risk are traditional and rely on a single approach. At present, geographic information systems (GIS), artificial intelligence (AI), and machine learning (ML) can be used to predict the risk of a forest fire [8,9].

Stula M et al. [10] proposed a forest fire recognition algorithm based on a convolutional neural network (CNN) that can extract and recognize image features, thereby providing excellent recognition accuracy. It was implemented in a forest fire video monitoring system that achieved good recognition results. Ciprián-Sánchez et al. [11] extracted and combined information from various imaging modes for detecting and extracting features and for predicting the spread of wildfires via a state-of-the-art (SOTA) model based on deep learning (DL); the architecture, loss function, and different types of image combinations of the SOTA model were evaluated to identify the parameters related to improving the segmentation results, and the results were compared with traditional fire segmentation technology, thereby improving the ability to identify the risk of a forest fire. Moayedi H et al. [12] used a hybrid evolutionary algorithm to build a forest fire risk prediction model; a forest fire sensitivity map of fire-prone areas in Iran was generated with reliable accuracy by combining an adaptive neuro-fuzzy inference system with a genetic algorithm, particle swarm optimization, and a differential evolution algorithm. Vikram R et al. [13] used a support vector machine (SVM) in a semi-supervised classification model to divide the forest area into different subareas: high activity (HA), medium activity (MA), and low activity (LA). Due to energy limitations, each sensor node monitored only one parameter, yet the fire risk in different areas could be predicted with 90% accuracy. Moreover, greedy forwarding technology was used to send data packets to the base station continuously from HA areas and periodically from MA areas, while no packets were sent from LA areas. This data forwarding technology improved the network life and reduced congestion during data transmission from forest areas to the base station.

Achu A L et al. [14] proposed an automatic fire monitoring system to identify forest fires in their early stages that could predict the size of the flame areas. A deep CNN was used for forest fire risk image classification to extract descriptors from images, which were then passed to a logistic regression classifier. After using 882 images to form an ordered data set and related image metadata (such as flame, smoke, fog, cloud, and human elements) during testing, the classification accuracy of 695 daytime and 187 night-time scene images was 94.1% and 94.8%, respectively, with good accuracy in estimating the flame area. Pham B T et al. [15] compared image frames via a segmentation method based on distance measurements in a color channel histogram and a fast frame comparison and extraction algorithm based on time. The module uses key advances in video frame technology and processes the frames through a deep CNN that was trained via normalization prior to segmentation to assist in detecting fire and smoke. The fire risk recognition accuracy was 90%. Michael Y et al. [16] used two pre-trained DL CNN models (VGG16 and ResNet50) for fire detection and enhanced the depth of network learning by fine-tuning the network on the basis of a fully connected layer. Although this improved the detection accuracy, it also increased the network training time. However, the deep CNN performed well on complex data sets.

At present, research on forest fire risk detection based on ML mainly involves flame detection and smoke detection [17,18,19,20,21]. The forest fire risk monitoring methods proposed by most scholars are for specific scenes, with several problems that need to be solved. How to recognize the color, form, and texture of fire in different chaotic backgrounds is the key to forest fire risk recognition [22,23,24,25,26]. The purpose of this study was to effectively extract the complex upper layer features of fire risk images and improve the robustness of input conversion by constructing an unmanned aerial vehicle (UAV) image-based forest fire risk prediction model based on a deep learning back-propagation neural network. The problems to be solved in the process of fire risk identification are feature extraction from training image data sets and training with a large number of fire risk image data sets [27,28].

In this study, we attempted to solve the above problems as follows. The dynamic CNN (DCNN) was trained in combination with transfer learning, and multiple pre-trained DCNNs were used to extract features from forest fire images. The traditional DCNN model was improved, and its recognition speed and accuracy were compared with those of three other DCNN models with different architectures. In this way, the difficulty of using a DCNN to monitor forest fire risk was addressed and the model’s detection accuracy was further improved. As a result, the forest fire risk model based on the improved DCNN could accurately identify and classify the risk of a forest fire under natural light conditions. The main research contents of this paper were as follows:

- (1) The DCNN was trained in combination with transfer learning, and multiple pre-trained DCNN models were used to extract the features of forest fire images.

- (2) Principal component analysis (PCA) reconstruction technology was used to enhance image category differentiation.

- (3) A 15-layer DCNN model called “DCN_Fire”, as an improvement on the traditional DCNN model, was constructed and analyzed.

- (4) The recognition speed and accuracy of the improved DCNN model were compared with those of three other DCNN models with different architectures.

- (5) The DCNN model could accurately recognize the risk of a forest fire in natural light; thus, it was suitable as an early warning system for forest fires.

In Section 2, we discuss the extraction and enhancement of pertinent image features using the DCNN. Section 3 presents the improved DCNN model and its construction process. Section 4 provides an analysis of the model verification and experimental results. Finally, conclusions based on the study findings are presented in Section 5.

2. Model Construction and Analysis

2.1. Model Construction

Here, we comprehensively evaluate the self-learning ability of a DL CNN. By evaluating the influence of different model structural parameters, a DCNN model can be used to determine whether forest images collected by a UAV show a fire risk. The proposed improved DCNN model was used to describe the DL CNN model accurately and in detail.

2.1.1. Overall Architecture

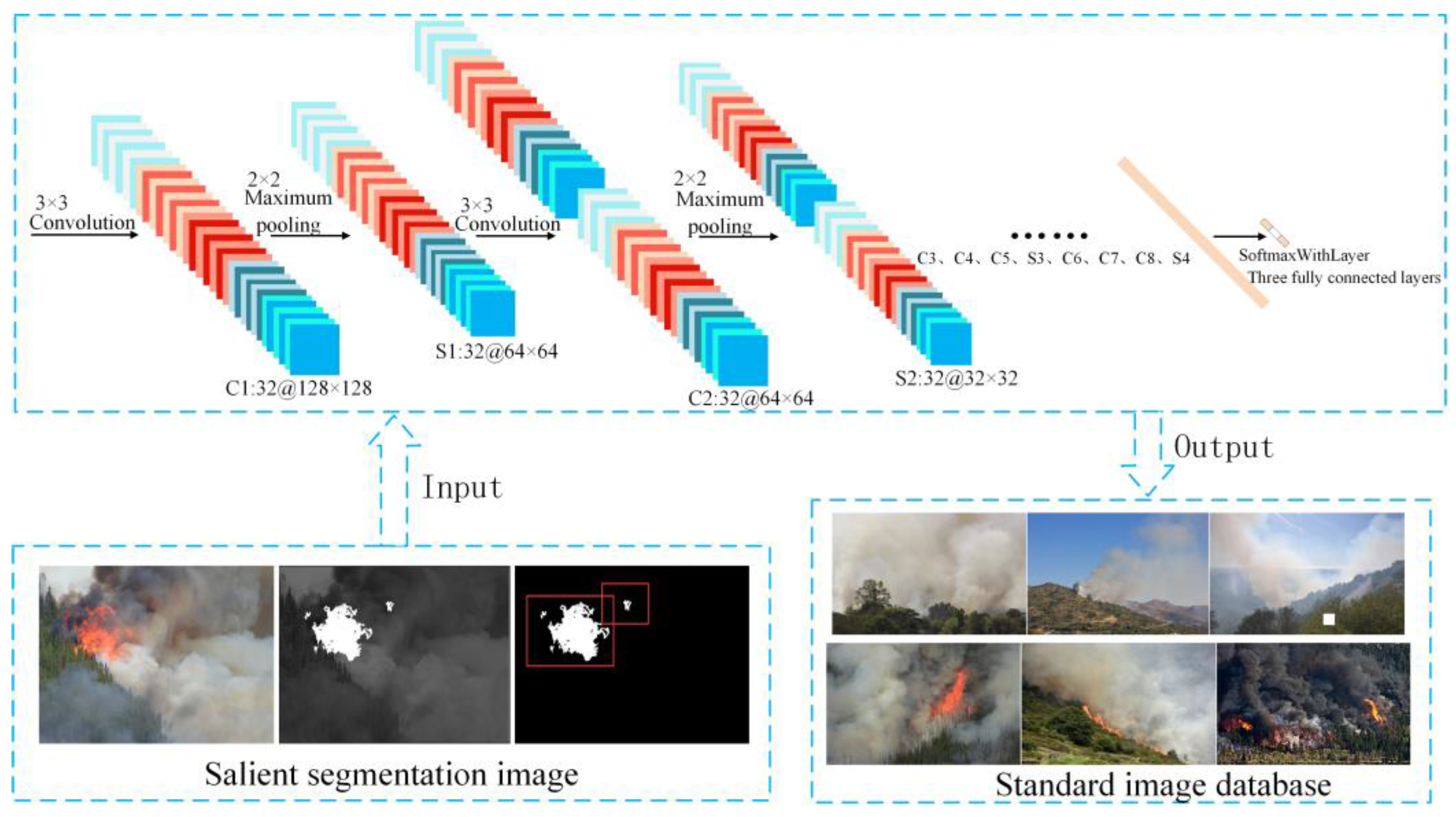

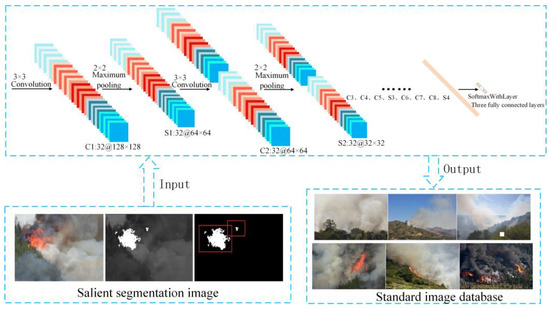

Figure 1 shows the architecture of the improved DCNN model. The model consisted of 15 layers, collectively called “DCN_Fire”. The structural parameters are described below. The first 12 layers were composed of eight convolutional layers (Conv 1–8) and four maximum pooling layers (Pool 1–4); these were followed by two fully connected layers (FC1 and FC2) and a final classification layer. Forest fire features extracted by a lower convolutional layer were transferred to a higher one. The maximum pooling layers could extract the changeable part of the image and reduce the output size of the convolutional layer. The final classification layer recognized images of fires according to the previously extracted high-level features. The model structure was suitable for extracting different forest flame features from the training data set.

Figure 1.

The architecture of the DCN_Fire model.

2.1.2. Details of the DCN_Fire Model

Input layer: the input data comprised a database of saliency-segmented images with a size of 128 × 128 pixels, stored in Lightning Memory-Mapped Database (LMDB) format.

Convolutional layers: eight convolutional layers (Conv 1–8) were defined. The convolutional kernel size was 3 × 3, the sliding step was one, and Xavier initialization was used for the parameter weights. A rectified linear unit (ReLU) was used as the activation function on these layers, and the output was sent to the next convolutional or pooling layer.

Pooling layers: these reduced the data dimensions to avoid over-fitting problems. Maximum pooling was used with a kernel size of 2 × 2 and a step size of two.

Fully connected layers: each fully connected layer (FC1 and FC2) had 256 neurons, and Xavier initialization was used for the weights. In the hidden layer, the dropout technique was used to reduce the dependence between neurons, and the activation function in the fully connected layers was a ReLU.
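To make the layer parameters above concrete, the following minimal sketch traces the spatial size of the feature maps for a 128 × 128 input. The kernel sizes and step sizes come from the text; the zero padding of one pixel and the ordering of two convolutional layers before each pooling layer are assumptions made only for illustration, since the text does not state them.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output size of a convolution along one spatial dimension.

    kernel=3 and stride=1 follow the text; padding=1 is an assumption.
    """
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of 2 x 2 maximum pooling with a step size of two."""
    return (size - kernel) // stride + 1


def trace_sizes(input_size=128, blocks=4, convs_per_block=2):
    """Return (layer name, output size) pairs for the assumed layer order."""
    sizes = []
    size = input_size
    conv_index = 1
    for block in range(1, blocks + 1):
        for _ in range(convs_per_block):
            size = conv_out(size)
            sizes.append((f"Conv {conv_index}", size))
            conv_index += 1
        size = pool_out(size)
        sizes.append((f"Pool {block}", size))
    return sizes


if __name__ == "__main__":
    for name, size in trace_sizes():
        print(f"{name}: {size} x {size}")
```

Under these assumptions, each pooling layer halves the feature map, so the 128 × 128 input is reduced to 8 × 8 after Pool 4 before it reaches the fully connected layers.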

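Classification layer: the Softmax function is calculated, in its standard form, as

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \ldots, K,$$

where $z_i$ is the $i$-th input to the classification layer and $K$ is the number of classes.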
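As an illustration of the classification layer, the sketch below implements the standard Softmax function in plain Python. Subtracting the maximum score before exponentiation is a common numerical-stability measure and is not taken from the paper; the two-class scores in the usage lines are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to one."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(score - shift) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


if __name__ == "__main__":
    # Hypothetical scores for the classes "fire risk" and "no fire risk".
    print(softmax([2.0, 0.5]))
```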

Images of 128 × 128 pixels were input into the DCNN, after which the error between the actual output value and the required value was calculated. The stochastic gradient descent method was used to train the deep CNN. After the error had been back-propagated to the previous layer, the derivatives with respect to the optimization coefficients and weights were calculated. The model was iteratively optimized until the training error was small enough to be ignored.
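The iterative update described above can be sketched on a toy problem. The example below applies gradient descent with momentum to a one-dimensional quadratic loss; the loss function, the starting point, and the learning rate of 0.1 are hypothetical choices for illustration only (the momentum of 0.9 matches the value reported in Section 3.3). In real training, the gradient would be estimated from a mini-batch of images rather than computed exactly.

```python
def train_with_momentum(grad, w0, learning_rate, momentum, steps):
    """Minimize a loss by gradient descent with classical momentum."""
    w = w0
    velocity = 0.0
    for _ in range(steps):
        # The velocity accumulates past gradients; the weight follows it.
        velocity = momentum * velocity - learning_rate * grad(w)
        w += velocity
    return w


def toy_grad(w):
    """Gradient of the hypothetical loss (w - 3)^2."""
    return 2.0 * (w - 3.0)


w_final = train_with_momentum(
    toy_grad, w0=0.0, learning_rate=0.1, momentum=0.9, steps=500
)

if __name__ == "__main__":
    print(w_final)
```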

2.2. Transfer Learning

Training deep neural networks requires a large amount of labeled data and specialized hardware, such as high-powered graphics processors. Training a forest fire risk identification model therefore takes a long time, and using transfer learning can solve this problem [29,30,31].

As the latest technology for visual classification, a DCNN pre-trained on large data sets can be reused for new tasks. A DCNN with learned weights can be directly applied to various computer vision situations [32,33]. In this study, pre-trained DCNN models were used to extract features from forest fire images, which supported multi-feature fusion and thus provided robust class identification.

In the three pre-trained architectures (AlexNet, VGG-16, and Inception-v3), the class prediction layer of each DCNN was used as a feature extractor. Each of these layers provided an output of 1000 neurons; it is the top layer of the network, which is usually neglected.

We used a computer with an Intel® Core™ i7-3770 CPU and Inception-v3 to obtain bottleneck features for the published benchmark CIFAR-10 data set [34]. Table 1 summarizes the various architectures of the models.

Table 1.

The architectures of the models.

2.3. Feature Extraction and Classification

For the classification and recognition of forest fire risk images, single multimodal features cannot deal with large changes in the statistical data of images [35,36,37]. We combined classification technology with various features [38] to improve the accuracy of recognizing forest fire risk from images. These features could be expressed as

for and . Multi-feature fusion is mainly used to determine a generalized subspace for distribution , , or . A single feature is normalized according to its energy level as follows:

where

and is the original feature of . The value of the series is calculated by using

The quantity product of the characteristics is calculated as follows:

The summation formula for the characteristics can be written as

Calculating the average pooling value can be achieved by using

The maximum pooling value is calculated by using

The influence of the features is eliminated and the richness of the fusion feature subspace is enhanced in the above feature fusion methods [38]. We used reconstruction technology based on PCA to enhance the distinction between specific categories. The variables x and y are combined as , where , is the real class label of estimated according to its density .

where when x is positive (the greater the value, the higher the confidence level), where λ is the coefficient term, C represents the penalty function, and b is the deviation parameter.

We adopted a linear SVM based on scikit-learn in Python. The bottleneck feature of DCNN is usually linearly separable, and so the features of the fused DCNN model could be processed well by using a linear SVM.
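The fusion operations named in this section (energy normalization, product, summation, average pooling, and maximum pooling) can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that "energy" normalization means scaling a feature vector to unit L2 norm and that fusion acts element by element on equally sized vectors. The function names and the toy vectors are hypothetical.

```python
import math


def energy_normalize(feature):
    """Scale a feature vector to unit L2 norm (assumed meaning of 'energy')."""
    norm = math.sqrt(sum(value * value for value in feature))
    if norm == 0.0:
        return [0.0 for _ in feature]
    return [value / norm for value in feature]


def fuse(features, mode):
    """Fuse equally sized feature vectors element by element."""
    columns = list(zip(*features))
    if mode == "product":
        return [math.prod(column) for column in columns]
    if mode == "sum":
        return [sum(column) for column in columns]
    if mode == "average":
        return [sum(column) / len(column) for column in columns]
    if mode == "max":
        return [max(column) for column in columns]
    raise ValueError(f"unknown fusion mode: {mode}")


if __name__ == "__main__":
    # Two toy feature vectors standing in for the outputs of two DCNNs.
    normalized = [energy_normalize([3.0, 4.0]), energy_normalize([0.0, 5.0])]
    for mode in ("product", "sum", "average", "max"):
        print(mode, fuse(normalized, mode))
```

The fused vector would then be passed to the PCA step and the linear SVM described above.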

3. Comparative Analysis of the Algorithm Implementation Performance

3.1. Data Source

We used more than 4000 forest fire risk images taken in the Guangdong Longshan and Jiangmen Sihui forest farms as the original data; they ranged in size from 200 × 200 to 4000 × 4000 pixels. The input images were normalized to produce a standard data set. UAV images usually contain cluttered information, and so some important features can become submerged when their size is directly adjusted.

We could quickly locate the core fire area in a forest fire risk image despite a complex background by dividing the detection area into a recommended area and a selection area using saliency detection and a logistic regression classifier. The specific steps were as follows.

- Step 1: Region of interest (ROI) proposal. Bayesian-based saliency detection was applied to an original image I; the saliency was mapped to the planarization in the image mask IM; and the original image was masked to areas R1–Rn.

- Step 2: The color components, image energy, and entropy (vectors X1–Xn) of each ROI were determined to decide whether the feature vector in the ROI corresponded to flame or smoke, after which the minimum bounding rectangle (MBR) and a fixed-size boundary were used to divide the fire area.
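The final part of Step 2 can be illustrated with a small sketch that finds the bounding rectangle of a binary ROI mask and crops the image to it. It assumes that MBR refers to the axis-aligned minimum bounding rectangle and that the mask is given as nested lists of 0 and 1; both the function names and the toy data are hypothetical.

```python
def minimum_bounding_rectangle(mask):
    """Return (top, left, bottom, right) of the nonzero mask pixels, or None."""
    if not mask or not mask[0]:
        return None
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # the mask contains no ROI pixels
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def crop(image, box):
    """Cut the rectangle (inclusive bounds) out of a nested-list image."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


if __name__ == "__main__":
    toy_mask = [
        [0, 0, 0, 0, 0],
        [0, 0, 1, 0, 0],
        [0, 1, 0, 1, 0],
        [0, 0, 0, 0, 0],
    ]
    box = minimum_bounding_rectangle(toy_mask)
    print(box, crop(toy_mask, box))
```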

We used the color moment values as the color feature descriptor and the energy (angular second moment) and entropy of an image as the texture descriptors.
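The texture descriptors can be sketched as follows, assuming that energy and entropy are computed from a normalized gray-level co-occurrence matrix, which is a common choice but is not confirmed by the text. Only horizontally adjacent pixel pairs are counted here to keep the example short, and the toy images are hypothetical.

```python
import math
from collections import Counter


def cooccurrence_probabilities(image):
    """Normalized counts of horizontally adjacent gray-level pairs."""
    counts = Counter()
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[(left, right)] += 1
    total = sum(counts.values())
    return {pair: count / total for pair, count in counts.items()}


def texture_energy(probabilities):
    """Angular second moment: the sum of squared pair probabilities."""
    return sum(p * p for p in probabilities.values())


def texture_entropy(probabilities):
    """Shannon entropy (in bits) of the pair probabilities."""
    return -sum(p * math.log2(p) for p in probabilities.values() if p > 0)


if __name__ == "__main__":
    flat = [[1, 1, 1], [1, 1, 1]]        # uniform texture
    checker = [[0, 1, 0], [1, 0, 1]]     # alternating texture
    for name, image in (("flat", flat), ("checker", checker)):
        probs = cooccurrence_probabilities(image)
        print(name, texture_energy(probs), texture_entropy(probs))
```

A uniform region gives high energy and zero entropy, whereas a region with more varied gray-level pairs gives lower energy and higher entropy, which is what makes the two values useful for separating flame or smoke texture from a smooth background.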

3.2. Significance Segmentation and Data Enhancement

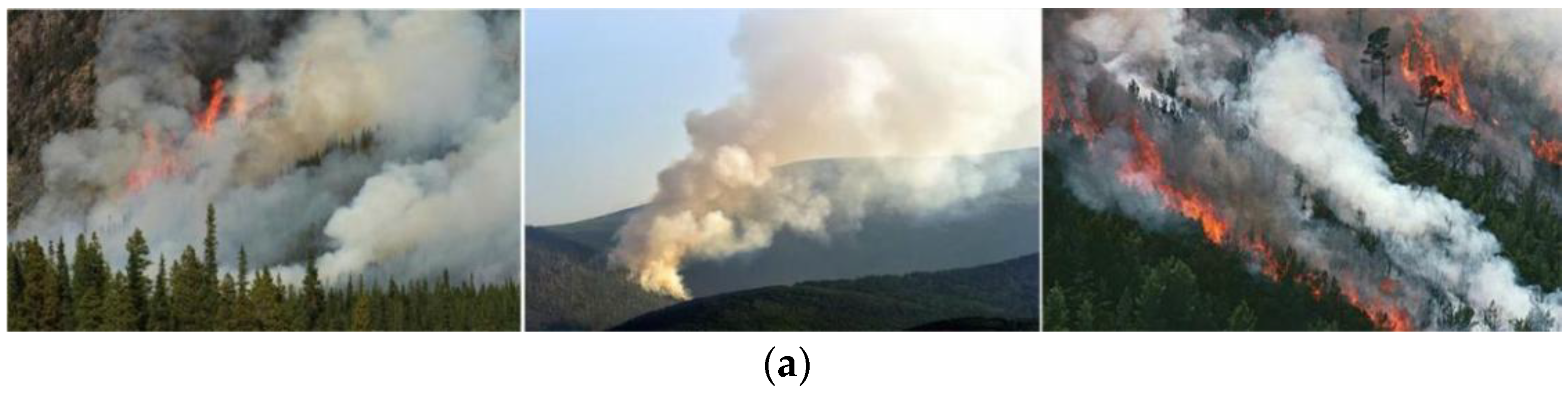

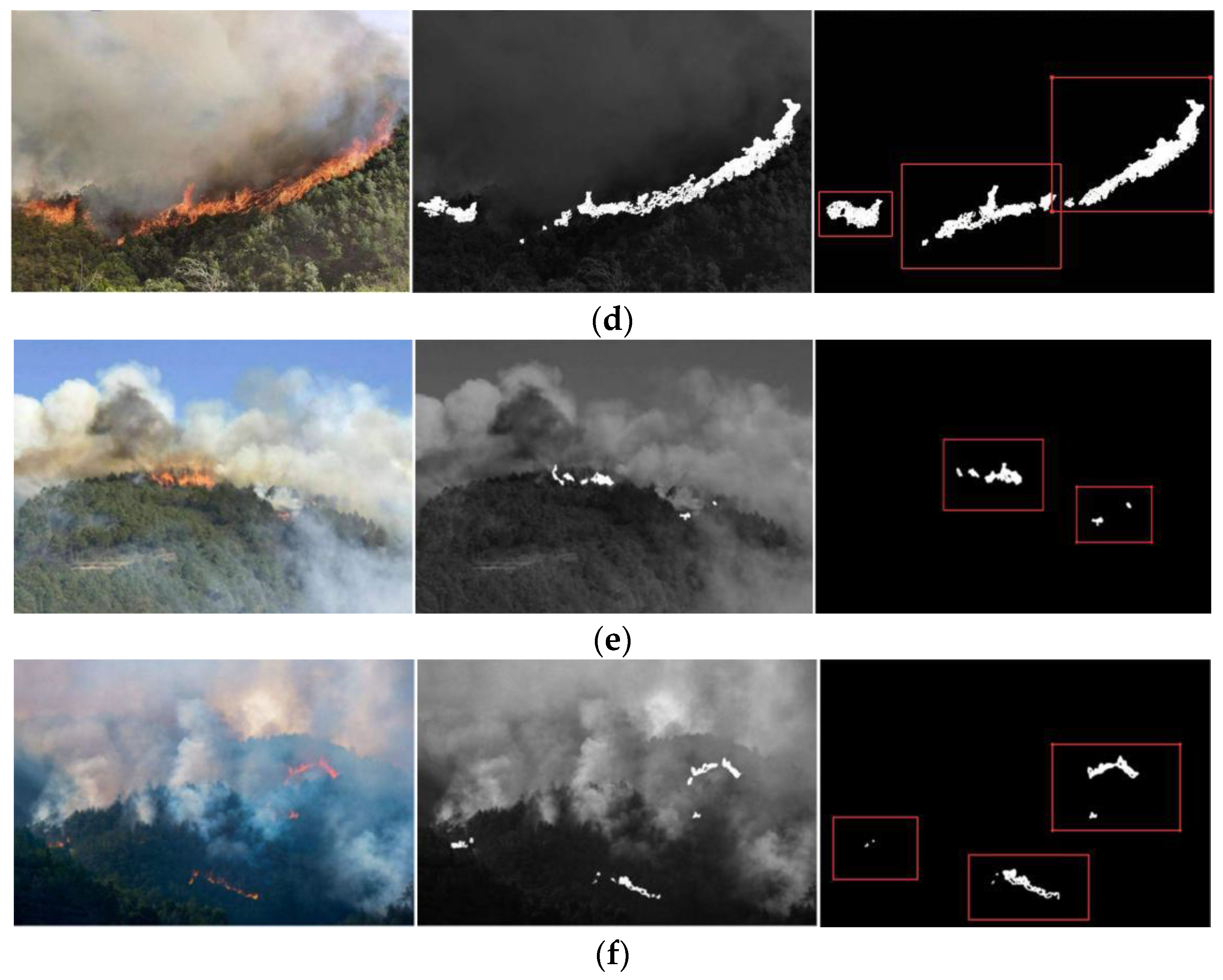

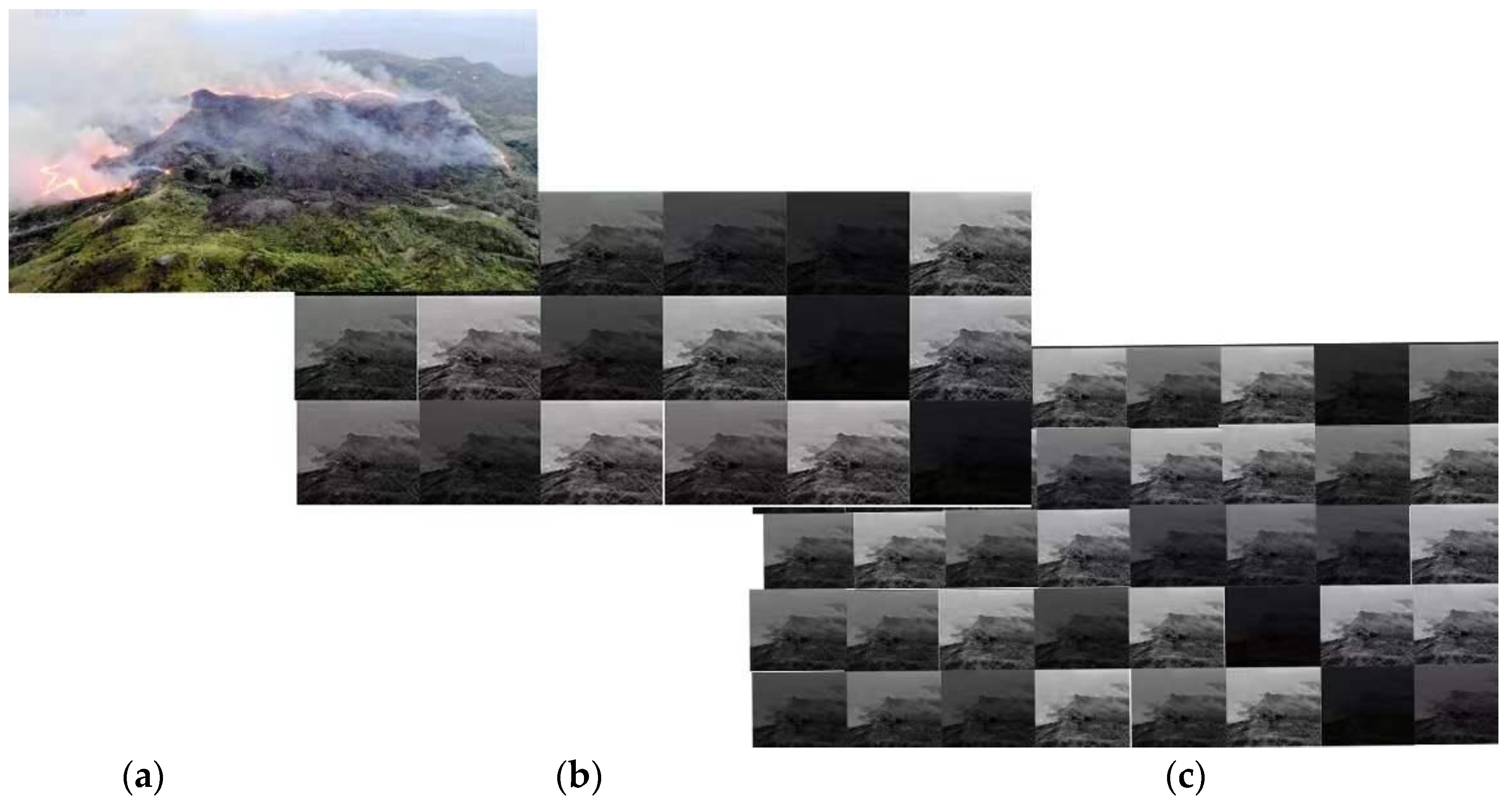

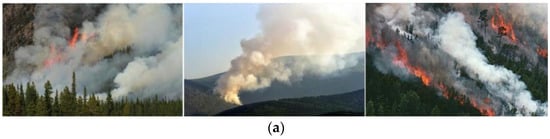

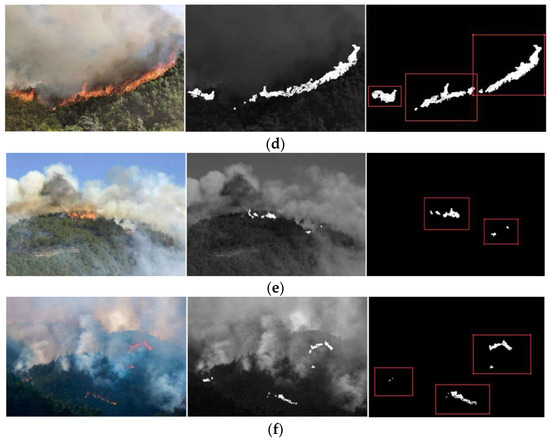

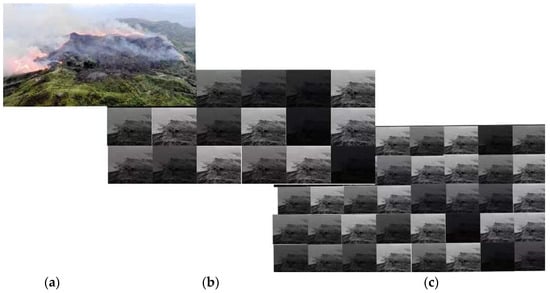

We used saliency detection to segment the flame images to a standard size and train the model. The model was used to process images with flames, smoke, or flames and smoke. Figure 2 shows the processing results.

Figure 2.

Image segmentation based on saliency detection. (a) Original images; (b) saliency detection results; (c) ROI selection results; and (d) standard segmentation results.

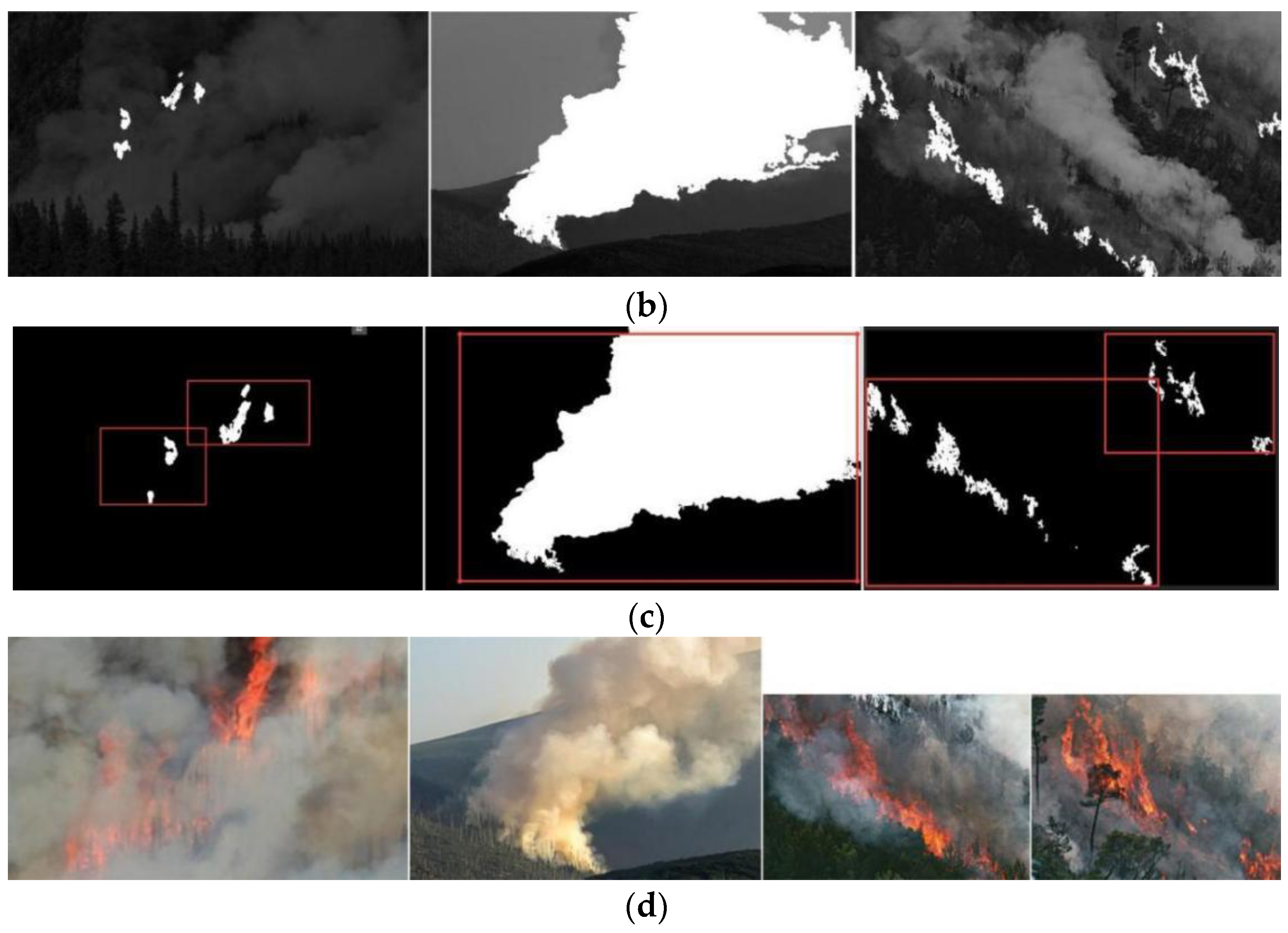

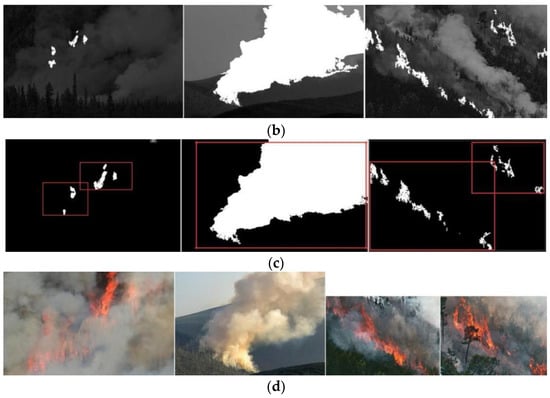

Figure 2a shows the original forest fire images, while Figure 2b shows the results of saliency detection. As shown in Figure 2c, the proposed saliency method effectively located the core flame area in the aerial images, even for very small ignition areas. In addition, Figure 2d shows that the proposed method segmented the original image into independent flame images containing complete flame features. Data enhancement could effectively solve the over-fitting problem caused by using a small training data set. Figure 3 shows examples of the test results.

Figure 3.

Examples of fire location and segmentation scenes. (a–f) Examples 1–6.

We tested the performance of the fire risk location and segmentation algorithm with 550 images of flames selected from the original data set. The test results are reported in Table 2. The true positive rate was 7.41% and the false positive rate was 4.8%. Where the images were small, the characteristics of flames and smoke covered most of the image area; in such cases, segmentation was not necessary. To improve the recognition of fire risk images, the data set was enhanced. The new enhanced image database, named “DB_Fire”, contained 3845 images. Table 3 shows the parameters of the original image database and enhanced image database.

Table 2.

The performances of the location and segmentation methods.
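For reference, the rates reported in this section follow the usual definitions based on confusion-matrix counts, which can be sketched as below. The counts in the usage lines are hypothetical and are not the values from Table 2.

```python
def true_positive_rate(tp, fn):
    """Share of actual positives that were detected: TP / (TP + FN)."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """Share of actual negatives that were flagged: FP / (FP + TN)."""
    return fp / (fp + tn)


def false_negative_rate(tp, fn):
    """Share of actual positives that were missed: FN / (TP + FN)."""
    return fn / (tp + fn)


if __name__ == "__main__":
    # Hypothetical counts, used only to show how the functions are called.
    tp, fn, fp, tn = 90, 10, 5, 95
    print(true_positive_rate(tp, fn))
    print(false_positive_rate(fp, tn))
    print(false_negative_rate(tp, fn))
```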

Table 3.

The image databases.
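The text does not state which transformations were used to build the enhanced database. As a minimal sketch, assuming simple geometric transformations (flips and 90-degree rotations, which are common choices), one image can be expanded into several training samples as follows; the function names and the toy image are hypothetical.

```python
def flip_horizontal(image):
    """Mirror each row of a nested-list image."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Reverse the order of the rows."""
    return [row[:] for row in image[::-1]]


def rotate_90(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus five transformed copies."""
    rot90 = rotate_90(image)
    rot180 = rotate_90(rot90)
    rot270 = rotate_90(rot180)
    return [image, flip_horizontal(image), flip_vertical(image),
            rot90, rot180, rot270]


if __name__ == "__main__":
    toy_image = [[1, 2], [3, 4]]
    for variant in augment(toy_image):
        print(variant)
```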

3.3. Optimization of the DCN_Fire Parameters

The DCN_Fire model parameters included the convolutional kernel size, number of layers, and loss (dropout) rate. Some empirical values were determined after the full-parameter tuning test (the input image size was 128 × 128 × 128). Good verification accuracy was obtained with a learning rate of 0.0001, a momentum of 0.9, and 20,000 iterations (Table 4).

Table 4.

The influence of batch size and loss rate on the verification accuracy of DCN_Fire.

4. Model Validation

4.1. Model Performance Verification

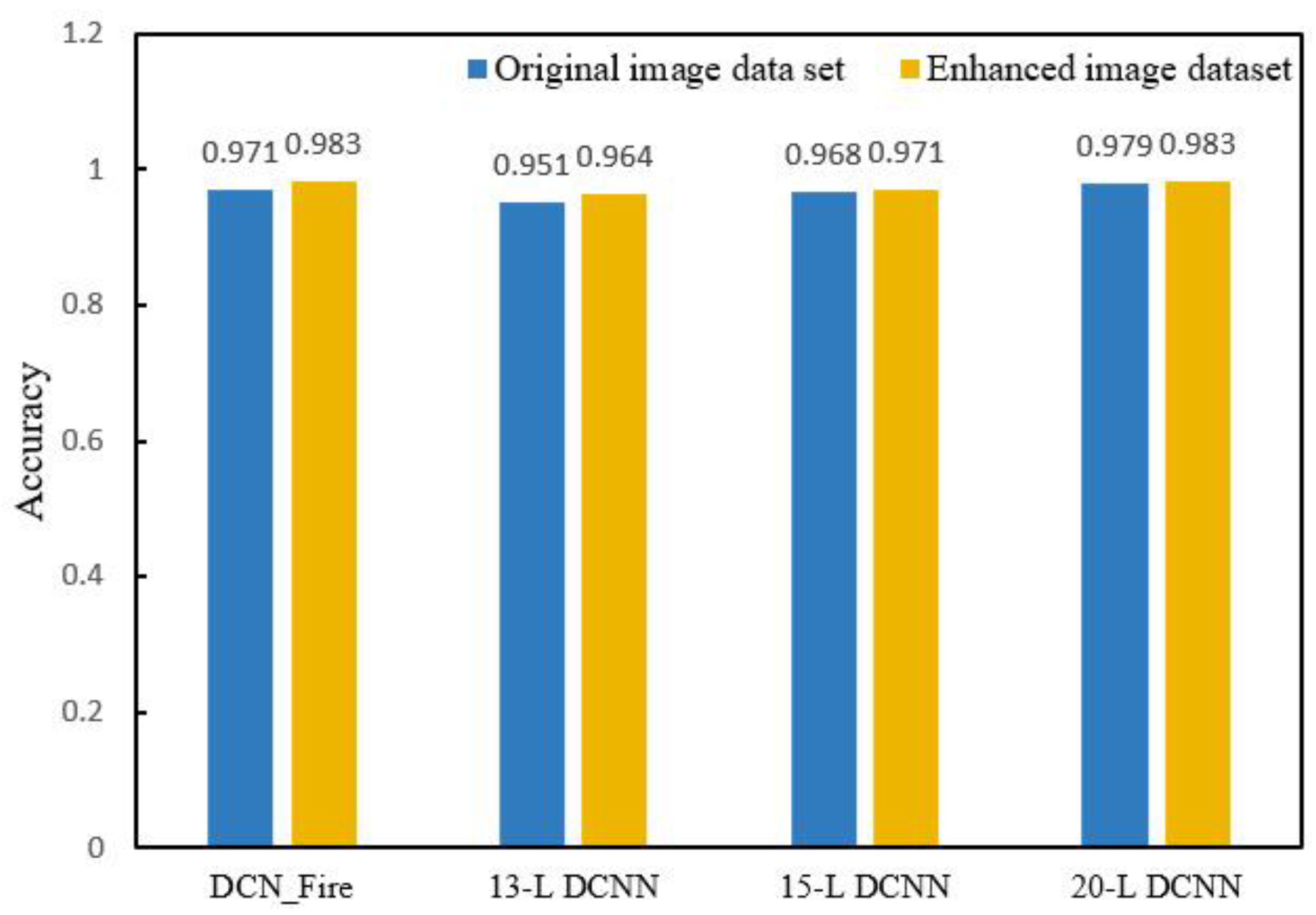

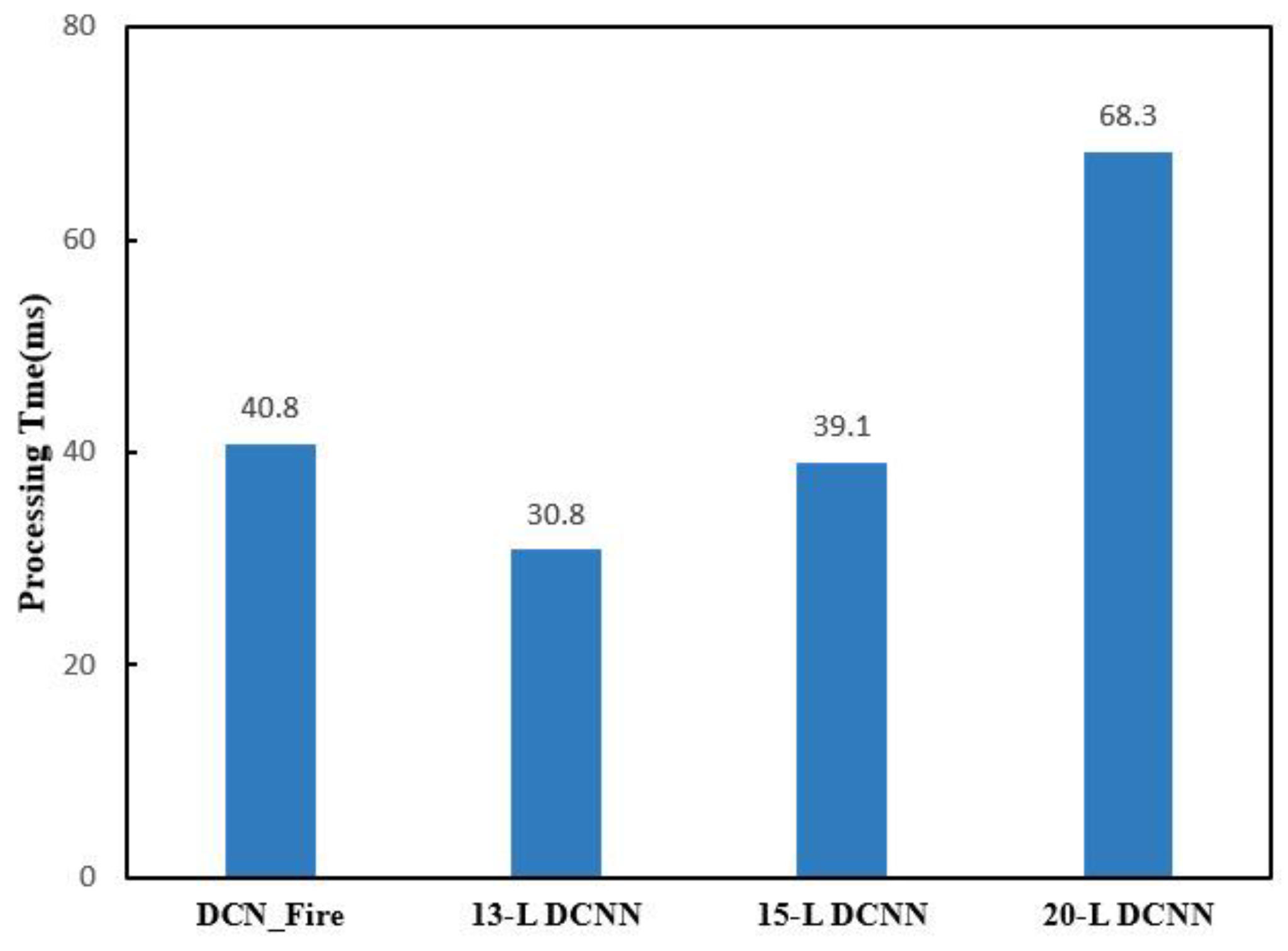

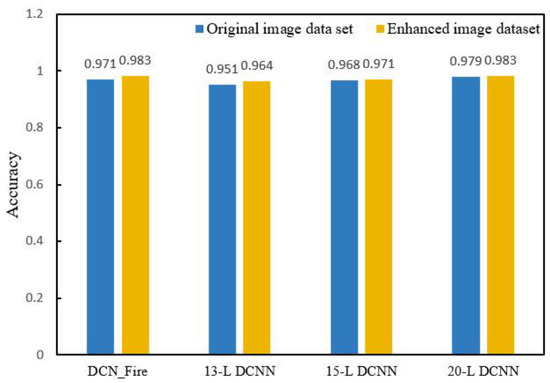

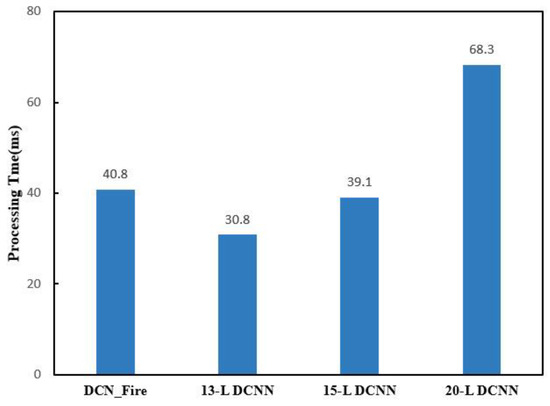

To verify the model’s accuracy, we explored several forest fire risk monitoring DCNN models with various hierarchical structures and calculated the image processing time and verification accuracy of each one using the original and enhanced image data sets. The models involved in the comparison included the DCN_Fire model and 13-layer, 15-layer, and 20-layer DCNN models. Figure 4 shows the comparison of flame image verification accuracy. Figure 5 shows the comparison of flame image processing times by the models.

Figure 4.

Flame image verification accuracy comparison of the DCN_Fire model with 13-layer, 15-layer, and 20-layer DCNN models.

Figure 5.

Flame image processing time comparison of the DCN_Fire model and 13-layer, 15-layer, and 20-layer DCNN models.

Although the 20-layer DCNN model achieved a verification accuracy similar to that of the DCN_Fire model (0.983), its processing time was 1.67 times longer because the larger number of layers increased the calculation time and cost. Therefore, the DCN_Fire model was superior in terms of processing time and verification accuracy. Meanwhile, the original and enhanced image data sets were used to evaluate the impact of data enhancement on model accuracy. The data revealed that the verification accuracy of the same model using the enhanced image data set was better than that using the original image data set.

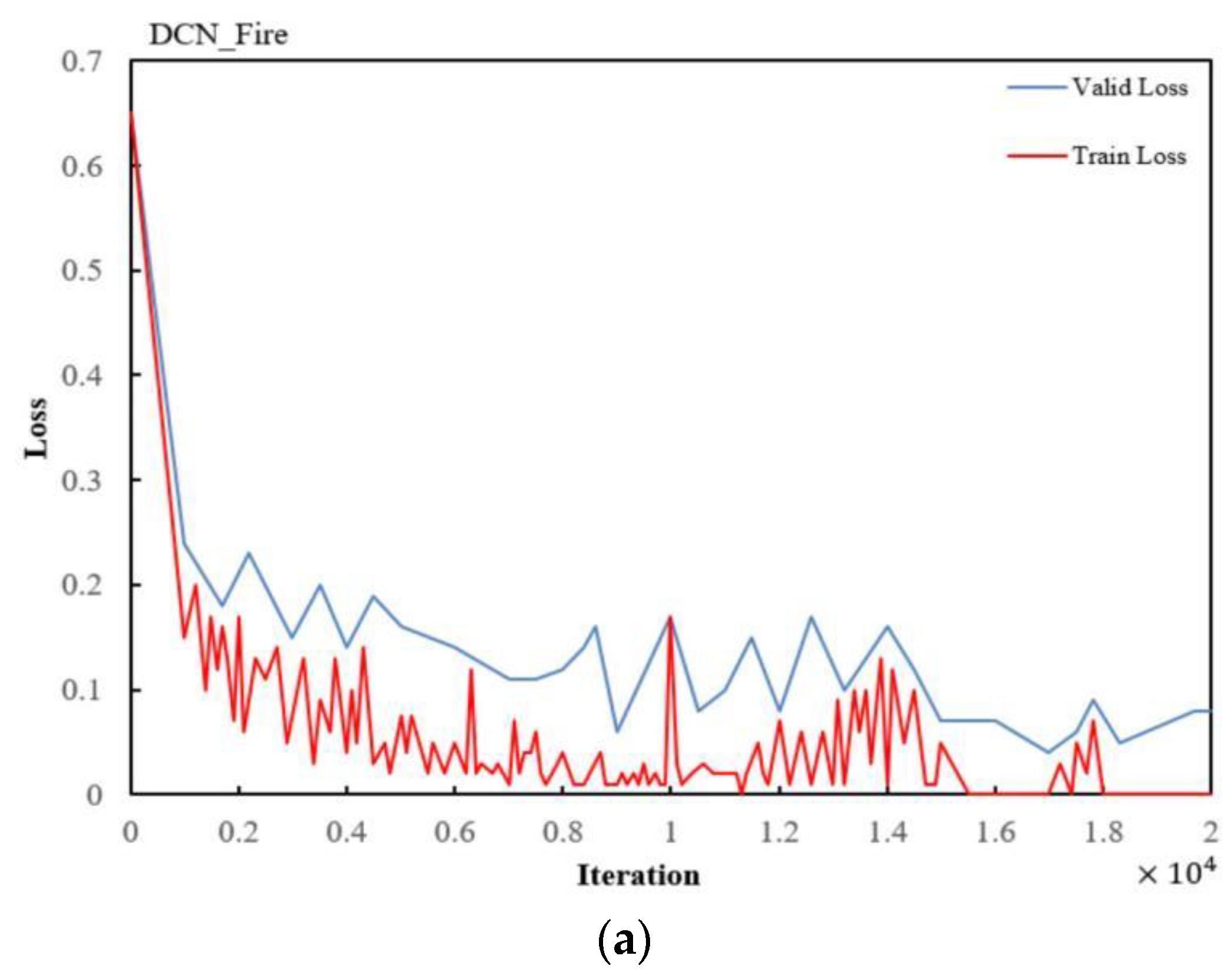

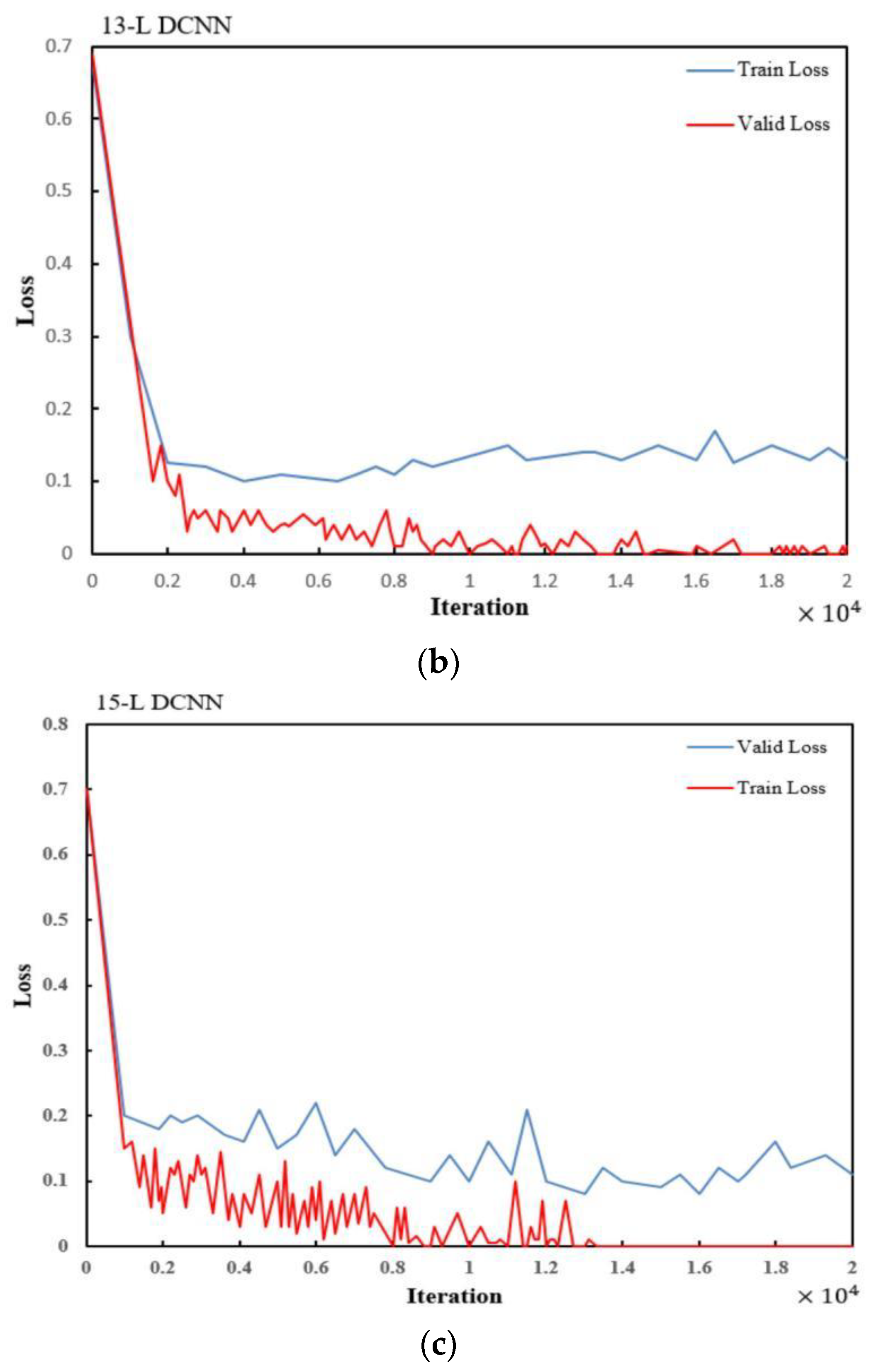

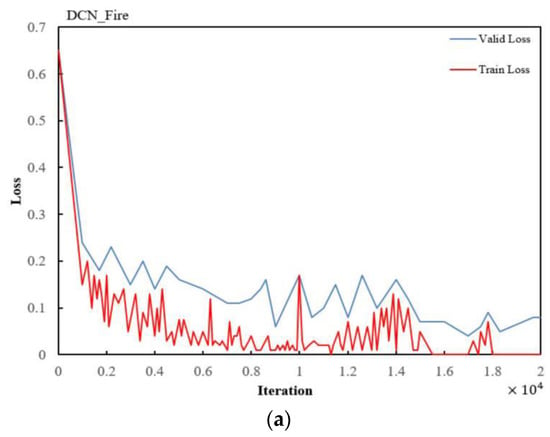

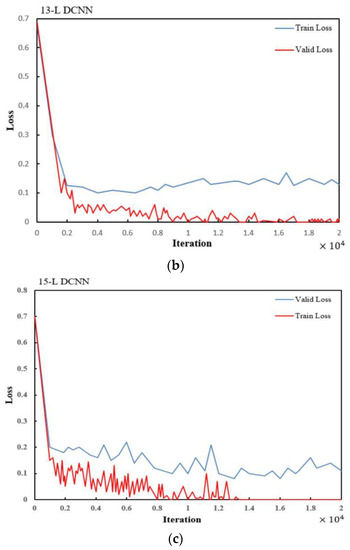

After dividing the image samples into training and verification sets, the DCN_Fire model and the 13-layer and 15-layer DCNN models were trained and their loss values were calculated. Figure 6 shows the training and verification loss curves of these models.

Figure 6.

Training and validation loss curves of (a) the DCN_Fire model, (b) the 13-layer DCNN model, and (c) the 15-layer DCNN model.

The loss curves in Figure 6 mark the training loss once every 100 iterations and the validation loss once every 500 iterations.
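The logging cadence can be made concrete with a short sketch. Assuming the 20,000 training iterations reported in Section 3.3 and assuming that the first point is recorded at the end of the first interval rather than at iteration zero, the number of points on each curve follows directly; the function name is hypothetical.

```python
def logging_iterations(total_iterations, interval):
    """Iterations at which a loss value is recorded."""
    return list(range(interval, total_iterations + 1, interval))


training_points = logging_iterations(20000, 100)
validation_points = logging_iterations(20000, 500)

if __name__ == "__main__":
    print(len(training_points), len(validation_points))
```

Under these assumptions, each training curve has 200 points and each validation curve has 40 points, which explains why the validation curves appear coarser.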

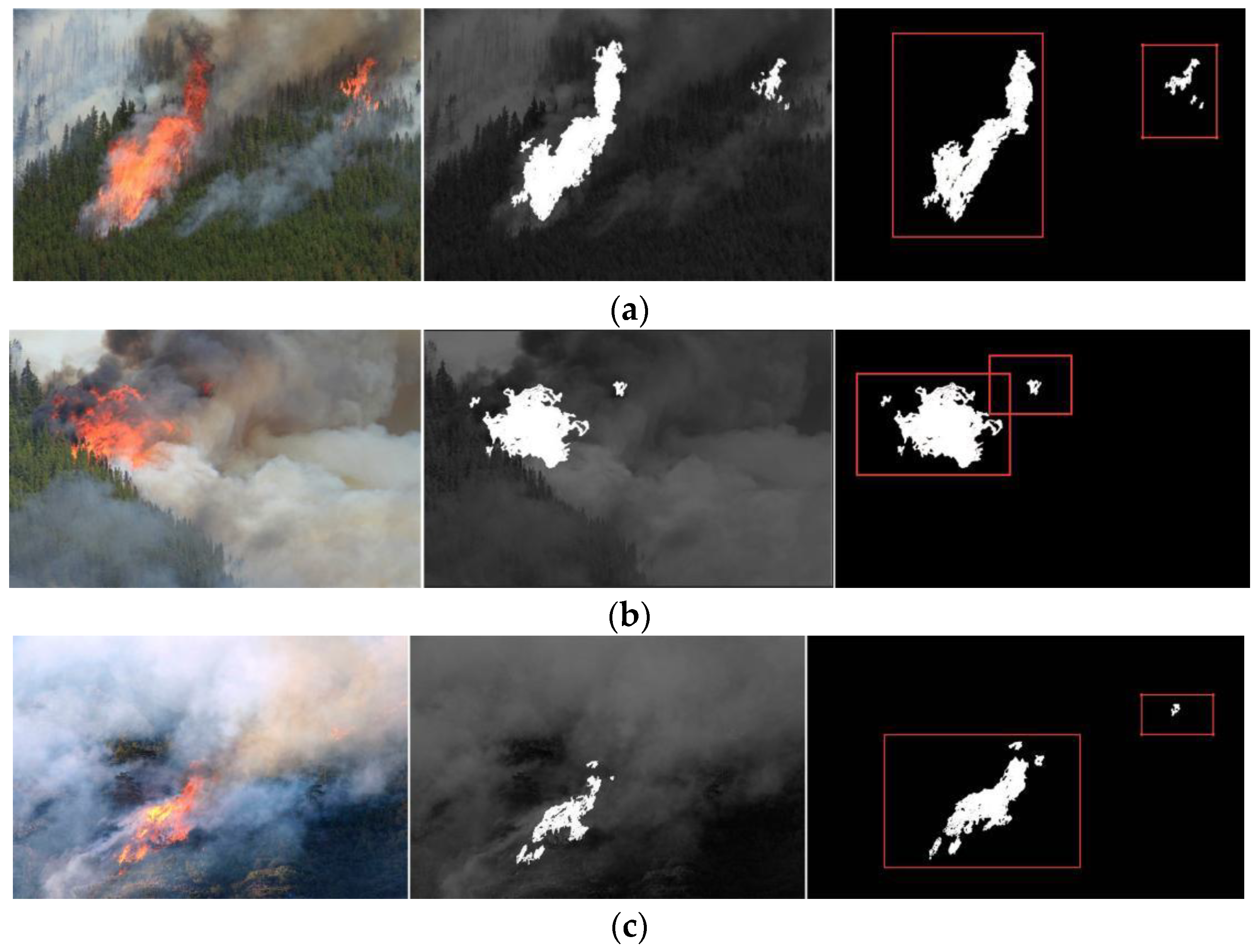

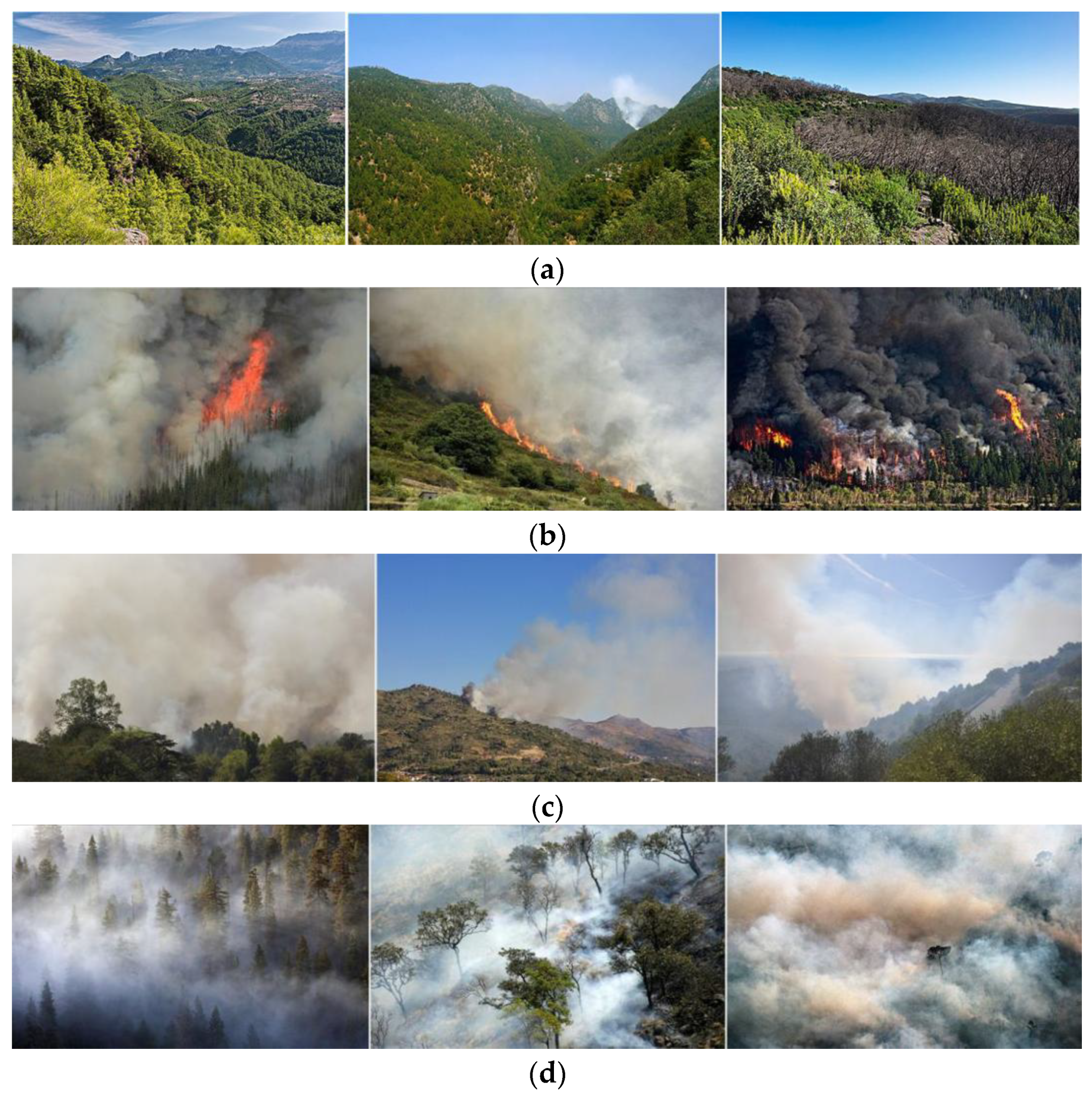

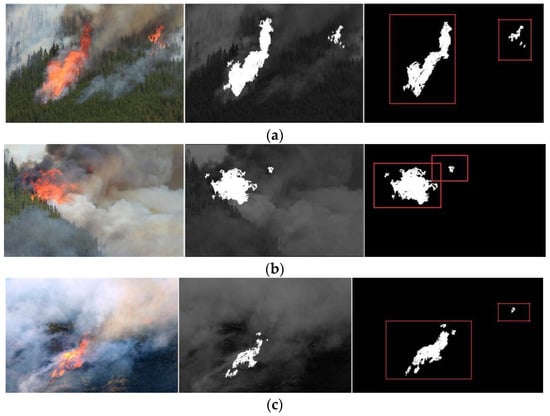

4.2. Analysis of Fire Recognition by the DCN_Fire Model

Figure 7 shows the visual characteristics after the input images had passed through the Conv 2 and Conv 3 layers. It could be observed that the lower layer captured low-level texture, edges, and color features while the higher layer captured sparse higher-level features by eliminating irrelevant content.

Figure 7.

The feature mapping process after the Conv 2 and Conv 3 layers in the DCN_Fire model. (a) The original input image, (b) the Conv 2 feature map, and (c) the Conv 3 feature map.

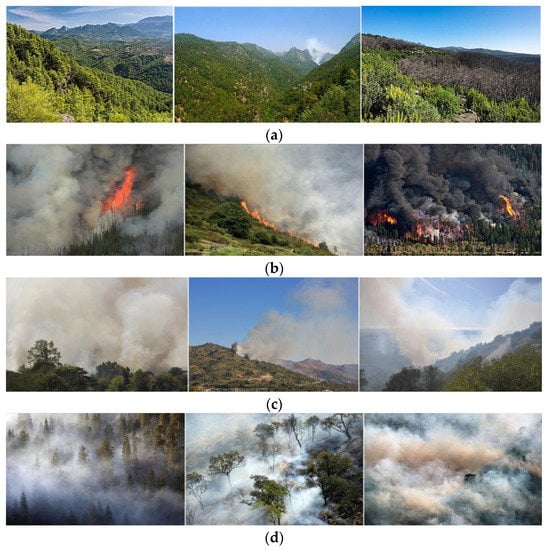

The DCN_Fire model was used to identify forest fire images and verify the effectiveness and rationality of the classification of the forest fire risk from aerial images (Figure 8). Since the smoke features in the smoke (Figure 8c) and mist (Figure 8d) images were highly similar, DCN_Fire mistakenly classified forest mist images as fire risk images. This was not surprising as humans can also mistakenly identify mist as smoke.

Figure 8.

Fire risk image classification using the DCN_Fire model. (a) Images without fire characteristics; (b) images with flames; (c) images with smoke; and (d) misclassified images with mist.

The test set consisted of 1200 random images with and without flames from the database. From the results in Table 5, it can be seen that the false negative rate of the DCN_Fire model (0.13%) was very low.

Table 5.

The performance of the DCN_Fire model on the test data set.

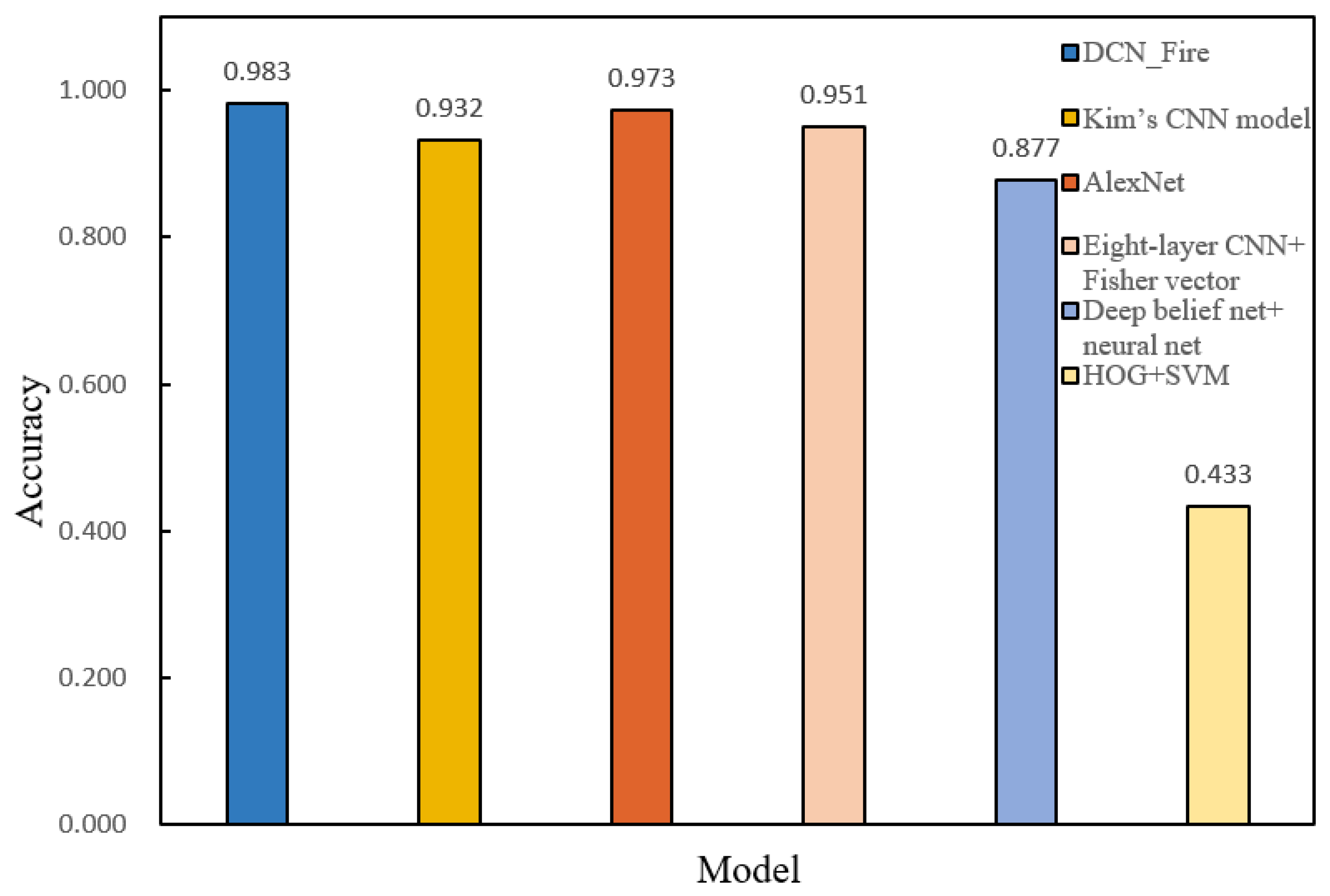

The same training and test data sets were used to verify and compare the recognition accuracy of the DCN_Fire model and other mainstream ML models (Kim’s CNN, AlexNet, 8-layer CNN + Fisher vector, HOG + SVM, and deep belief net + neural net) for fire risk recognition. The training results showed that DCN_Fire was more accurate than the other models and performed well (Figure 9, Table 6).

Figure 9.

Accuracy comparison of the DCN_Fire model and other mainstream ML models.

Table 6.

The accuracy of DCN_Fire compared with that of other models.

5. Conclusions

The detection and recognition of forest fire risk images is of great significance for the detection and prevention of forest fires. Combined with transfer learning, this work trained a DCNN, used multiple pre-trained DCNN models to extract the features of forest fire images, used PCA reconstruction technology to convert features into a shared feature subspace, and established a forest fire risk recognition model. According to the experimental results, the main research conclusions of this paper were as follows:

- (1) A DCNN combined with transfer learning was constructed and trained; multiple pre-trained DCNN models were used to extract features from forest fire images, and PCA reconstruction technology was used to convert the features into a shared feature subspace to establish a forest fire risk recognition model. We used 550 flame images to test the performance of the fire risk location and segmentation algorithm. The true positive rate was 7.41% and the false positive rate was 4.8%. When the impact of different batch sizes and loss rates on verification accuracy was examined, a loss rate of 0.5 and a batch size of 50 gave the DCN_Fire model its highest verification accuracy (0.983).

- (2) Comparing the test results showed that the performance of the improved DCNN model was comparable with that of other models. In terms of processing time and verification accuracy, the DCN_Fire model was considered a better DCNN architecture for fire risk identification. When the training and verification losses of several models were calculated, those of the DCN_Fire model were the lowest.

Although the improved DCNN model achieved good results in the detection of forest fire risk, the detection accuracy still warrants improvement. In future research, we will further optimize the network model structure and improve the network performance of the forest fire risk identification model.

Author Contributions

Conceptualization, S.Z. and W.W.; methodology, S.Z., P.G., W.W. and X.Z.; software, S.Z. and X.Z.; validation, S.Z., W.W. and X.Z.; formal analysis, S.Z., P.G. and X.Z.; investigation, S.Z., W.W. and X.Z.; resources, S.Z., W.W. and X.Z.; data curation, X.Z. and P.G.; writing—original draft preparation, S.Z.; writing—review and editing, W.W. and X.Z.; visualization, S.Z. and X.Z.; supervision, W.W., X.Z. and S.Z.; project administration, W.W. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Field R&D Program Project of Guangdong Province, China (Grant No. 2019B020223003), Guangzhou Science and Technology Plan Project Innovation Platform Construction and Sharing (Grant No. 201605030013), Guangdong Laboratory of Lingnan Modern Agriculture Project under Grant NT2021009 and the No. 03 Special Project and the 5G Project of Jiangxi Province under Grant 20212ABC03A27.

Acknowledgments

We would like to thank our anonymous reviewers for their critical comments and suggestions for how to improve our manuscript.

Conflicts of Interest

The authors declare that there is no conflict of interest.

References

- Tariq, A.; Shu, H.; Siddiqui, S.; Mousa, B.G.; Munir, I.; Nasri, A.; Waqas, H.; Lu, L.; Baqa, M.F. Forest fire monitoring using spatial-statistical and Geo-spatial analysis of factors determining forest fire in Margalla Hills, Islamabad, Pakistan. Geomat. Nat. Hazards Risk 2021, 12, 1212–1233.

- Vigna, I.; Besana, A.; Comino, E.; Pezzoli, A. Application of the socio-ecological system framework to forest fire risk management: A systematic literature review. Sustainability 2021, 13, 2121.

- Naderpour, M.; Rizeei, H.M.; Khakzad, N.; Pezzoli, A. Forest fire induced Natech risk assessment: A survey of geospatial technologies. Reliab. Eng. Syst. Saf. 2019, 191, 106558.

- Çolak, E.; Sunar, F. Evaluation of forest fire risk in the Mediterranean Turkish forests: A case study of Menderes region, Izmir. Int. J. Disaster Risk Reduct. 2020, 45, 101479.

- Van Hoang, T.; Chou, T.Y.; Fang, Y.M.; Nguyen, N.T.; Nguyen, Q.H.; Xuan Canh, P.; Ngo Bao Toan, D.; Nguyen, X.L.; Meadows, M.E. Mapping forest fire risk and development of early warning system for NW Vietnam using AHP and MCA/GIS methods. Appl. Sci. 2020, 10, 4348.

- Janiec, P.; Gadal, S. A comparison of two machine learning classification methods for remote sensing predictive modeling of the forest fire in the North-Eastern Siberia. Remote Sens. 2020, 12, 4157.

- Gigovic, L.; Jakovljevic, G.; Sekulović, D.; Regodić, M. GIS multi-criteria analysis for identifying and mapping forest fire hazard: Nevesinje, Bosnia and Herzegovina. Teh. Vjesn. 2018, 25, 891–897.

- Mohajane, M.; Costache, R.; Karimi, F.; Pham, Q.B.; Essahlaoui, A.; Nguyen, H.; Laneve, G.; Oudija, F. Application of remote sensing and machine learning algorithms for forest fire mapping in a Mediterranean area. Ecol. Indic. 2021, 129, 107869.

- Kalantar, B.; Ueda, N.; Idrees, M.; Janizadeh, S.; Ahmadi, K.; Shabani, F. Forest fire susceptibility prediction based on machine learning models with resampling algorithms on remote sensing data. Remote Sens. 2020, 12, 3682.

- Stula, M.; Krstinic, D.; Seric, L. Intelligent forest fire monitoring system. Inf. Syst. Front. 2012, 14, 725–739.

- Ciprián-Sánchez, J.F.; Ochoa-Ruiz, G.; Rossi, L.; Morandini, F. Assessing the impact of the loss function, architecture and image type for Deep Learning-based wildfire segmentation. Appl. Sci. 2021, 11, 7046.

- Moayedi, H.; Mehrabi, M.; Bui, D.T.; Pradhan, B.; Foong, L.K. Fuzzy-metaheuristic ensembles for spatial assessment of forest fire susceptibility. J. Environ. Manag. 2020, 260, 109867.

- Vikram, R.; Sinha, D.; De, D.; Das, A.K. EEFFL: Energy efficient data forwarding for forest fire detection using localization technique in wireless sensor network. Wirel. Netw. 2020, 26, 5177–5205.

- Achu, A.L.; Thomas, J.; Aju, C.D.; Gopinath, G.; Kumar, S.; Reghunath, R. Machine-learning modelling of fire susceptibility in a forest-agriculture mosaic landscape of southern India. Ecol. Inform. 2021, 64, 101348.

- Pham, B.T.; Jaafari, A.; Avand, M.; Al-Ansari, N.; Dinh Du, T.; Yen, H.P.H.; Phong, T.V.; Nguyen, D.H.; Le, H.V.; Mafi-Gholami, D.; et al. Performance evaluation of machine learning methods for forest fire modeling and prediction. Symmetry 2020, 12, 1022.

- Michael, Y.; Helman, D.; Glickman, O.; Gabay, D.; Brenner, S.; Lensky, I.M. Forecasting fire risk with machine learning and dynamic information derived from satellite vegetation index time-series. Sci. Total Environ. 2021, 764, 142844.

- Naderpour, M.; Rizeei, H.M.; Ramezani, F. Forest fire risk prediction: A spatial deep neural network-based framework. Remote Sens. 2021, 13, 2513.

- Wang, C.; Luo, T.; Zhao, L.; Tang, Y.; Zou, X. Window zooming–based localization algorithm of fruit and vegetable for harvesting robot. IEEE Access 2019, 7, 103639–103649.

- Resco de Dios, V.; Nolan, R.H. Some challenges for forest fire risk predictions in the 21st century. Forests 2021, 12, 469.

- Bowman, D.M.; Williamson, G.J. River flows are a reliable index of forest fire risk in the temperate Tasmanian Wilderness World Heritage Area, Australia. Fire 2021, 4, 22.

- Razavi-Termeh, S.V.; Sadeghi-Niaraki, A.; Choi, S.M. Ubiquitous GIS-based forest fire susceptibility mapping using artificial intelligence methods. Remote Sens. 2020, 12, 1689.

- Salazar, L.G.F.; Romão, X.; Paupério, E. Review of vulnerability indicators for fire risk assessment in cultural heritage. Int. J. Disaster Risk Reduct. 2021, 60, 102286.

- Son, B.H.; Kang, K.H.; Ryu, J.R.; Roh, S.J. Analysis of Spatial Characteristics of Old Building Districts to Evaluate Fire Risk Factors. J. Korea Inst. Build. Constr. 2022, 22, 69–80.

- Wang, H.; Dong, L.; Zhou, H.; Luo, L.; Lin, G.; Wu, J.; Tang, Y. YOLOv3-Litchi detection method of densely distributed litchi in large vision scenes. Math. Probl. Eng. 2021, 2021, 8883015.

- Anderson-Bell, J.; Schillaci, C.; Lipani, A. Predicting non-residential building fire risk using geospatial information and convolutional neural networks. Remote Sens. Appl. Soc. Environ. 2021, 21, 100470.

- Maffei, C.; Lindenbergh, R.; Menenti, M. Combining multi-spectral and thermal remote sensing to predict forest fire characteristics. ISPRS J. Photogramm. Remote Sens. 2021, 181, 400–412.

- Hansen, R. The Flame Characteristics of a Tyre Fire on a Mining Vehicle. Min. Metall. Explor. 2022, 39, 317–334.

- Tomar, J.S.; Kranjčić, N.; Đurin, B.; Kanga, S.; Singh, S.K. Forest fire hazards vulnerability and risk assessment in Sirmaur district forest of Himachal Pradesh (India): A geospatial approach. ISPRS Int. J. Geo-Inf. 2021, 10, 447.

- Ozenen Kavlak, M.; Cabuk, S.N.; Cetin, M. Development of forest fire risk map using geographical information systems and remote sensing capabilities: Ören case. Environ. Sci. Pollut. Res. 2021, 28, 33265–33291.

- Lin, G.; Zhu, L.; Li, J.; Zou, X.; Tang, Y. Collision-free path planning for a guava-harvesting robot based on recurrent deep reinforcement learning. Comput. Electron. Agric. 2021, 188, 106350.

- Park, M.; Tran, D.Q.; Lee, S.; Park, S. Multilabel Image Classification with Deep Transfer Learning for Decision Support on Wildfire Response. Remote Sens. 2021, 13, 3985.

- Šerić, L.; Pinjušić, T.; Topić, K.; Blažević, T. Lost person search area prediction based on regression and transfer learning models. ISPRS Int. J. Geo-Inf. 2021, 10, 80.

- Lawal, M.O. Tomato detection based on modified YOLOv3 framework. Sci. Rep. 2021, 11, 1447.

- Tang, Y.; Zhu, M.; Chen, Z.; Wu, C.; Chen, B.; Li, C.; Li, L. Seismic performance evaluation of recycled aggregate concrete-filled steel tubular columns with field strain detected via a novel mark-free vision method. In Structures; Elsevier: Amsterdam, The Netherlands, 2022; Volume 37, pp. 426–441.

- Arif, M.; Alghamdi, K.K.; Sahel, S.A.; Alosaimi, S.O.; Alsahaft, M.E.; Alharthi, M.A.; Arif, M. Role of machine learning algorithms in forest fire management: A literature review. J. Robot. Autom. 2021, 5, 212–226.

- Quintero, N.; Viedma, O.; Urbieta, I.R.; Moreno, J.M. Assessing landscape fire hazard by multitemporal automatic classification of landsat time series using the Google Earth Engine in West-Central Spain. Forests 2019, 10, 518.

- Akilan, T.; Wu, Q.J.; Zhang, H. Effect of fusing features from multiple DCNN architectures in image classification. IET Image Processing 2018, 12, 1102–1110.

- Roy, A.M.; Bhaduri, J. Real-time growth stage detection model for high degree of occultation using DenseNet-fused YOLOv4. Comput. Electron. Agric. 2022, 193, 106694.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).