1. Introduction

Digitalization of the contemporary agriculture industry refers to the broad application of artificial intelligence (AI) and related technologies, including robotics, big data, and machine learning [1]. According to World Bank statistics, the average age of agricultural workers worldwide is over 50 years, and current employment trends reflect the urbanization of the population: the younger generation is largely not interested in farming and agriculture [2]. Despite this labor shortage, food consumption is projected to increase over the next decades [3]. Therefore, to overcome the labor shortage, robots and smart farming technology should compensate for human labor in the agriculture industry [4].

The tomato is a fruit traded on the worldwide market, and its consumption increases gradually year by year. Manual tomato harvesting is typical labor-intensive work, which makes human labor inefficient for this task. Moreover, tomatoes are very soft and prone to bruising, which makes it difficult to introduce an automatic harvesting system [5]. Another challenging issue in tomato harvesting is separating the tomato from the stem gently; this separation step is mostly neglected in the design of gripper tools for grasping tomatoes [6]. Additionally, the proposed design should be safe during harvesting, because the surrounding workspace is fragile and easily damaged by rigid bodies.

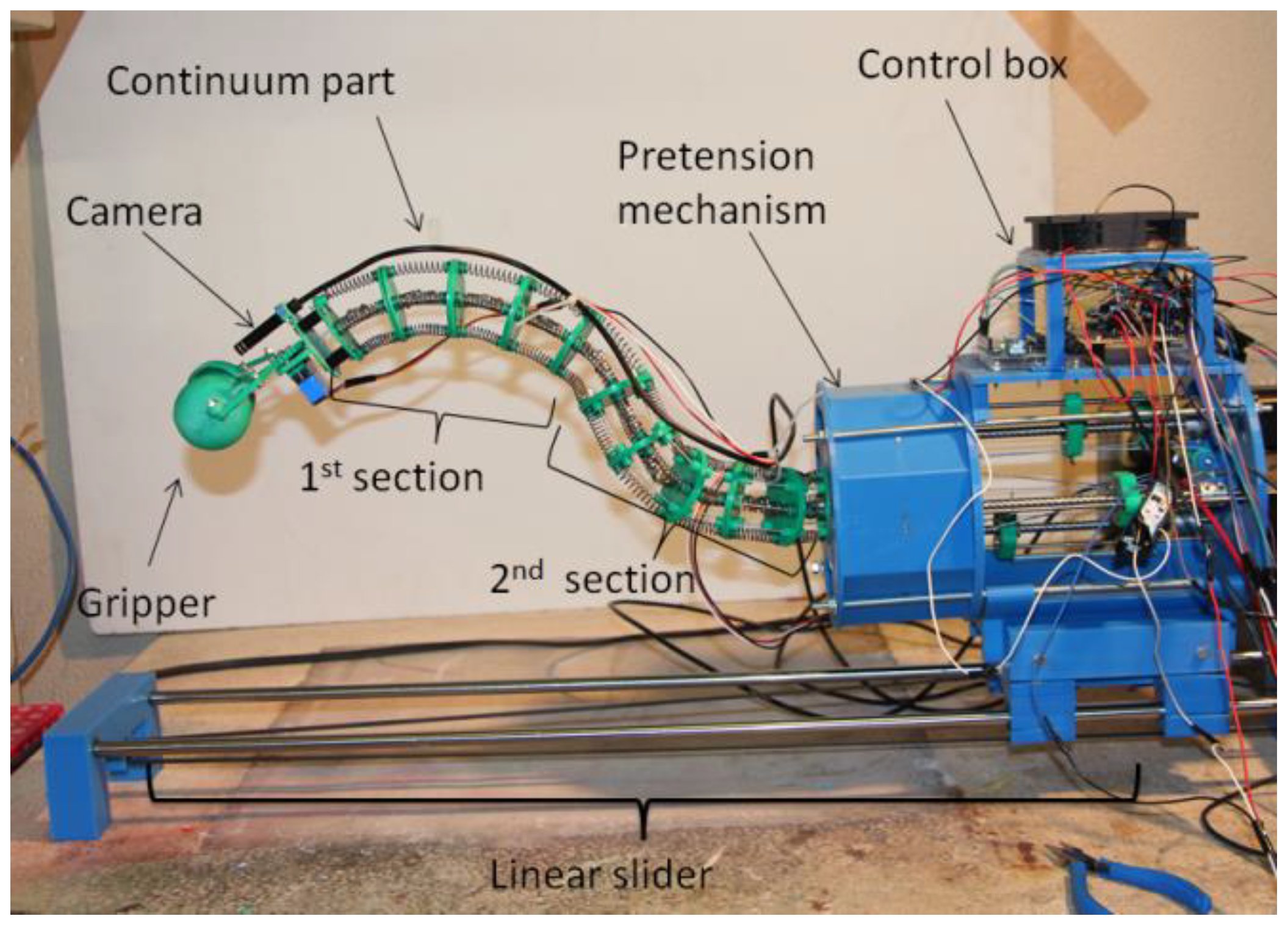

The proposed tomato harvesting robotic system consists of three components: a moving platform that carries the manipulator, a manipulator based on a continuum robot structure, and a grasping tool. The software part includes the tomato recognition algorithm and the control process.

In the 2010s, researchers developed various types of robots for tomato harvesting; many of them mounted KUKA, Universal Robots, and SCARA manipulators on mobile platforms to collect tomatoes in greenhouses [7,8,9]. However, commercially available manipulators are designed for structured environments, such as factories and manufacturing areas, where the workspace is constant. In agriculture, the environment changes as the plants grow, and such a variable working environment demands new technical solutions for harvesting robots [10]. Dr. Tokunawa proposed a continuum manipulator with a flexible structure, which proved to be safe and to have wide reachability but has a low payload capacity [11]. A related harvesting robot arm was developed by Henten et al., who designed a cucumber harvesting robot arm with a thermal cutter and reported a harvesting success rate of 74% [12,13].

A competing solution by Zhao et al. is a dual-arm SCARA robot, in which one arm holds a tomato and the other arm cuts the stem. This approach requires additional training for tomato stem detection, and the two arms must work synchronously with high precision [7]. A similar solution was proposed by Kounalakis et al., who used a UR robot manipulator with an RGB-D camera for detection and a cutting tool for tomato separation [14]. Detaching the tomato is also challenging. For instance, Wang et al. developed a gripper with a clamp mechanism that cuts the stem after grasping the tomato [15], similar to Zhao et al. Hiroaki et al. proposed a plucking gripper with an infinite rotational joint to detach tomatoes automatically [16], but its detachment success rate in real applications was only 60%. A similar gripper design was proposed by Root AI, whose Virgo robot has a SCARA-type arm that detaches tomatoes by twisting them after grasping [17]. Panasonic Co., Ltd. also presented a commercially available tomato-picking robot that needs only 2–3 s per picking cycle, which would make it the fastest such machine [18]. However, none of the above-mentioned prototypes can detach tomatoes together with their sepals, which may reduce tomato durability during transportation.

The last crucial part of the harvesting process is a tomato detection system based on a trained machine learning model. Many solutions have been proposed to recognize tomatoes and to discriminate between mature and immature ones [19,20,21,22,23,24,25]. A popular approach detects tomatoes from image data obtained with an RGB stereo camera [26,27,28,29]. The most popular detection model is YOLO, whose main advantage is that it can run on low-computation devices, such as microprocessor-based boards like the Raspberry Pi [30].

This research presents a novel tomato harvesting robot arm based on a continuum robot structure, together with a new grasping tool with a passive stem-cutting mechanism. It also provides a machine learning model for tomato recognition and a control algorithm for the tomato harvesting process. The proposed robotic arm is named TakoBot [31,32] (tako means octopus in Japanese, and Bot comes from robot). The paper is organized as follows: design concept, kinematic/kinetic formulation, development of the recognition system, and experimental results, followed by concluding remarks.

3. Tomato Recognition System

Recognition of mature tomatoes is another critical issue for tomato harvesting robots. In this research, we employed machine learning based on neural networks to distinguish mature tomatoes from immature ones as well as from other similar fruits. As the tomato classifier, we used the YOLO (You Only Look Once) neural network to recognize different types of tomatoes. The network architecture of YOLOv5 consists of three consecutive parts: Backbone, Neck, and Head (see Figure 7). Input images are first passed to CSPDarknet, which extracts features (Backbone). The features are then passed to PANet, which highlights them (Neck). Finally, the Head produces the results, such as the class, confidence score, position, and dimensions of each object [32].

In the Backbone stage, CSPDarknet uses a modified convolutional neural network whose layer connections alleviate the vanishing gradient problem. It applies the CSPNet strategy: the feature map of the base layer is split into two parts, which are then merged through a cross-stage hierarchy. This splitting and merging increases the gradient flow through the network [33].
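The split-and-merge idea can be illustrated with a minimal sketch. It assumes a feature map represented as a plain list of channels and uses a hypothetical `toy_block` (a residual ReLU update) in place of the real convolutional block; the transition convolutions that CSPDarknet applies around the merge are omitted, so this shows only the partial-stage routing, not the YOLOv5 layer itself.

```python
from typing import Callable, List

FeatureMap = List[List[float]]  # one list of activations per channel


def csp_stage(x: FeatureMap, block: Callable[[FeatureMap], FeatureMap]) -> FeatureMap:
    """Cross-stage partial routing: only half of the channels pass through `block`."""
    half = len(x) // 2
    part_a, part_b = x[:half], x[half:]  # split the base feature map by channels
    part_b = block(part_b)               # heavy computation on one part only
    return part_a + part_b               # merge by channel concatenation


def toy_block(x: FeatureMap) -> FeatureMap:
    """Hypothetical stand-in for a convolutional block: residual update x + relu(x)."""
    return [[v + max(v, 0.0) for v in channel] for channel in x]


features = [[1.0, -2.0], [0.5, 0.5], [3.0, -1.0], [-0.5, 2.0]]
out = csp_stage(features, toy_block)  # first two channels bypass the block unchanged
```

Because the bypassed half reaches the merge without passing through the block, gradients have a short path back to earlier layers, which is the intuition behind the improved gradient flow.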

In the Neck stage, PANet is used to improve the instance segmentation process by preserving the spatial information of objects. PANet is a feature extractor that generates feature maps at multiple levels. It effectively segments and preserves spatial information, which helps localize pixels to form a mask in the next stage [34].
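The multi-level aggregation can be pictured with a toy one-dimensional sketch, assuming three feature levels of lengths 4, 2, and 1 (finest to coarsest). It swaps the concatenation and convolutions used in practice for plain element-wise addition, and all function names are hypothetical; it only shows the order of the top-down path and the extra bottom-up path that PANet adds.

```python
from typing import List, Tuple

Level = List[float]


def upsample(x: Level) -> Level:
    """Nearest-neighbour upsampling by a factor of 2."""
    return [v for v in x for _ in range(2)]


def downsample(x: Level) -> Level:
    """Average pooling with window 2 and stride 2."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def add(a: Level, b: Level) -> Level:
    return [u + v for u, v in zip(a, b)]


def pan_fuse(p3: Level, p4: Level, p5: Level) -> Tuple[Level, Level, Level]:
    # Top-down path: carry coarse, semantic information to finer levels.
    t5 = p5
    t4 = add(p4, upsample(t5))
    t3 = add(p3, upsample(t4))
    # Bottom-up path (PANet's addition): carry fine localization back to coarse levels.
    n3 = t3
    n4 = add(t4, downsample(n3))
    n5 = add(t5, downsample(n4))
    return n3, n4, n5


n3, n4, n5 = pan_fuse([1.0] * 4, [2.0] * 2, [4.0])
```

After the bottom-up pass, even the coarsest level contains information that originated at the finest level, which is how spatial detail is preserved for localization.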

In the Head stage, the final evaluation is performed. The head applies anchor boxes to the extracted features and generates the final output vectors, which contain the predicted bounding box coordinates (center, height, and width), the confidence score, and the class probabilities.
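As an illustration of how raw head outputs become boxes, the sketch below decodes one prediction for one anchor. It follows the decoding used, to our knowledge, in the Ultralytics YOLOv5 detection layer (sigmoid outputs, center offset of 2σ − 0.5 within the grid cell, size of (2σ)² times the anchor), and the final score as objectness times class probability; the function names and argument layout are hypothetical and not part of the YOLOv5 API.

```python
import math
from typing import List, Tuple


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def decode_box(tx: float, ty: float, tw: float, th: float,
               cx: int, cy: int, anchor_w: float, anchor_h: float,
               stride: int) -> Tuple[float, float, float, float]:
    """Raw outputs for grid cell (cx, cy) -> (center x, center y, width, height) in pixels."""
    x = (2.0 * sigmoid(tx) - 0.5 + cx) * stride
    y = (2.0 * sigmoid(ty) - 0.5 + cy) * stride
    w = (2.0 * sigmoid(tw)) ** 2 * anchor_w  # anchor sizes given in pixels
    h = (2.0 * sigmoid(th)) ** 2 * anchor_h
    return x, y, w, h


def final_score(obj_logit: float, class_logits: List[float]) -> Tuple[int, float]:
    """Best class index and its confidence = objectness * class probability."""
    probs = [sigmoid(c) for c in class_logits]
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, sigmoid(obj_logit) * probs[best]


box = decode_box(0.0, 0.0, 0.0, 0.0, cx=3, cy=5, anchor_w=10.0, anchor_h=13.0, stride=8)
cls_id, score = final_score(0.0, [2.0, -1.0, 0.0])
```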

In this research, tomatoes are classified into three classes labeled by digits: “0” stands for a red (mature) tomato, “1” for a green (immature) tomato, and “2” for a yellow tomato that is turning red, i.e., ripening. When recognizing tomatoes in images captured by the camera, the neural network marks each tomato with an enclosing rectangle and labels it with the corresponding digit in the left corner of the rectangle.
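A harvesting controller only needs the mature class. The sketch below shows one way the three labels could be used to pick grasp targets; the `Detection` record, the 0.5 confidence cut-off, and the most-confident-first ordering are all hypothetical choices for illustration, not the selection rule used in this work.

```python
from typing import List, NamedTuple, Tuple

CLASS_NAMES = {0: "red (mature)", 1: "green (immature)", 2: "yellow (turning)"}


class Detection(NamedTuple):
    cls: int                                 # 0, 1 or 2 as defined above
    conf: float                              # confidence score from the detector
    box: Tuple[float, float, float, float]   # (x_center, y_center, width, height) in pixels


def harvest_targets(dets: List[Detection], min_conf: float = 0.5) -> List[Detection]:
    """Keep mature tomatoes (class 0) above a hypothetical threshold, most confident first."""
    ripe = [d for d in dets if d.cls == 0 and d.conf >= min_conf]
    return sorted(ripe, key=lambda d: d.conf, reverse=True)


def label(d: Detection) -> str:
    """Text drawn in the corner of the rectangle: class digit and confidence."""
    return f"{d.cls} {d.conf:.2f}"


dets = [
    Detection(1, 0.90, (10, 10, 20, 20)),
    Detection(0, 0.60, (50, 40, 22, 22)),
    Detection(0, 0.95, (90, 60, 25, 24)),
    Detection(2, 0.80, (130, 70, 21, 21)),
    Detection(0, 0.30, (170, 80, 20, 20)),
]
targets = harvest_targets(dets)
```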

Many metrics have been developed to assess the performance of machine learning algorithms. We employ metrics based on a confusion matrix (see Figure 8), which contains four combinations: True Positive (TP), the number of objects that the classifier evaluates as positive and that are actually positive; True Negative (TN), the number of objects classified as negative that are actually negative; False Positive (FP), the number of objects classified as positive that are actually negative; and False Negative (FN), the number of objects that the classifier evaluates as negative but that are actually positive.
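For a multiclass problem, the four combinations are counted one class at a time, treating every other class as negative. The sketch below assumes each object has already been paired with a single predicted label; an object detector additionally matches predicted and ground-truth boxes (for example, by overlap) before counting, which is omitted here. The function name and example labels are hypothetical.

```python
from typing import Dict, Sequence


def one_vs_rest_counts(y_true: Sequence[int], y_pred: Sequence[int], cls: int) -> Dict[str, int]:
    """Count TP, FP, FN and TN for one class, treating all other classes as negative."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for t, p in zip(y_true, y_pred):
        if p == cls and t == cls:
            counts["TP"] += 1  # predicted positive, actually positive
        elif p == cls:
            counts["FP"] += 1  # predicted positive, actually negative
        elif t == cls:
            counts["FN"] += 1  # predicted negative, actually positive
        else:
            counts["TN"] += 1  # predicted negative, actually negative
    return counts


y_true = [0, 0, 1, 2, 0, 1]
y_pred = [0, 1, 1, 2, 0, 0]
class0 = one_vs_rest_counts(y_true, y_pred, cls=0)
class1 = one_vs_rest_counts(y_true, y_pred, cls=1)
```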

Figure 8 shows a normalized confusion matrix for multiclass (three-class) classification. The diagonal gives the proportion of true positives for each class: 94% of the objects in class 0, 96% of those in class 1, and 92% of those in class 2 were classified correctly. For class 0, 1% of the objects are erroneously predicted as class 1 and another 1% as class 2; the remaining 4% were not assigned to any class and are therefore false negatives. For class 1, the remaining 4% beyond the true positives are also false negatives. For class 2, 1% of the objects are erroneously classified as class 0 and another 1% as class 1, and the proportion of false negatives is 6%.
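The percentages above come from normalizing the raw counts per true class. The sketch below uses hypothetical counts (the actual counts are not given in the text) chosen so that the normalized rows match the reported percentages; the extra "background" column stands for objects that were not assigned to any class. Whether true classes are drawn as rows or columns is only a plotting convention.

```python
from typing import List

Matrix = List[List[float]]


def normalize_rows(counts: List[List[int]]) -> Matrix:
    """Divide each row (one true class) by its total so that every row sums to 1."""
    normalized = []
    for row in counts:
        total = sum(row)
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


# Hypothetical counts. Rows: true class 0, 1, 2.
# Columns: predicted class 0, 1, 2, and background (not detected, i.e., FN).
counts = [
    [188, 2, 2, 8],
    [0, 48, 0, 2],
    [1, 1, 92, 6],
]
matrix = normalize_rows(counts)
recall_per_class = [matrix[i][i] for i in range(3)]  # the diagonal
```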

Based on these combinations, the main metrics of the algorithm's classification ability, such as precision, recall, and accuracy, are calculated. The precision metric is the ratio between the true positives (TP) and all objects classified as positive (TP and FP); it represents the ability to distinguish a given class from all other classes. As shown in Figure 9, precision increases rapidly as the training iterations progress, which indicates that high recognition precision was achieved at an early stage of training.

The recall metric is also determined from the TP results, but instead of false positives (FP), it takes into account the objects classified as negative that are actually positive (FN). This metric evaluates the ability to detect a certain class and shows how many positive examples are lost in classification: the higher the recall, the fewer correct predictions are lost. Recall also depends on the confidence threshold applied to the predictions of the trained network.

Figure 10 shows that the initial recall value is below 0.5, which indicates poor initial performance, but it rapidly increases to almost 1.
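Written out with the confusion-matrix counts defined above, the two metrics are:

```latex
\mathrm{Precision} = \frac{TP}{TP + FP},
\qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
```

Both share the numerator TP; precision is penalized by false positives and recall by false negatives.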

In YOLO, one of the significant metrics is the confidence metric, which indicates how reliable the classifier's predictions are. As the confidence threshold increases, precision increases and recall decreases.

Figure 11 shows a confidence threshold of 0.966 (the mean over classes 0, 1, and 2), which means that almost all classes achieve ideal accuracy.
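The precision/recall trade-off can be reproduced with a small sweep over confidence thresholds. The detections below are hypothetical (confidence, is-true-positive) pairs assumed to be already matched to five ground-truth tomatoes, and reporting precision as 1 when nothing passes the threshold is a convention chosen for this sketch.

```python
from typing import Dict, List, Tuple


def precision_recall_at(dets: List[Tuple[float, bool]], n_ground_truth: int,
                        threshold: float) -> Tuple[float, float]:
    """Precision and recall when only detections with confidence >= threshold are kept."""
    kept = [is_tp for conf, is_tp in dets if conf >= threshold]
    tp = sum(kept)  # True counts as 1
    precision = tp / len(kept) if kept else 1.0  # convention: no detections -> precision 1
    recall = tp / n_ground_truth
    return precision, recall


detections = [(0.95, True), (0.90, True), (0.80, True), (0.70, False),
              (0.60, True), (0.40, False), (0.35, False)]
results: Dict[float, Tuple[float, float]] = {
    thr: precision_recall_at(detections, n_ground_truth=5, threshold=thr)
    for thr in (0.3, 0.5, 0.85)
}
```

With these values, raising the threshold from 0.3 to 0.85 raises precision from 4/7 to 1.0 while recall drops from 0.8 to 0.4; with real data the curves are usually, but not always, monotonic.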

These results confirm that the YOLOv5 algorithm is accurate enough to recognize ripe tomatoes.

We also conducted an experiment on the confidence metric. We prepared real cherry tomatoes bought at a grocery store and fake cherry tomatoes printed with dimensions and shapes similar to the real ones. When only the fake tomatoes were presented, the neural network recognized them as real cherry tomatoes, but with a confidence of only 70–75%. When we then placed a real tomato next to the fake ones, the neural network immediately changed its decision and recognized the real tomato with a confidence of 96% (Figure 12).

Figure 13 shows experimental results of tomato recognition with three classes: “0” for red (ripe), “1” for green (unripe), and “2” for yellow tomatoes (expected to ripen soon). The experiment was conducted in an agricultural greenhouse near Almaty, Kazakhstan. To build the dataset, we collected more than 1500 photos of tomato plants as reference data for training the neural network, using a borescope camera with a 3-megapixel resolution.

The main difference between YOLO and other convolutional neural network (CNN) algorithms used for object detection is that YOLO recognizes objects very quickly, in real time. YOLO takes the entire image as input at once, and the image passes through the convolutional neural network only once.

The main technical difference of YOLOv5 is that it is implemented in the PyTorch framework, whereas the original implementations of earlier YOLO versions were built on the Darknet framework. PyTorch is used directly from Python and does not require a special API to work with the language. PyTorch also builds its computation graphs dynamically, which makes it easier for machine learning practitioners to write code.

When detecting objects, YOLOv5 recognizes small objects relatively well compared with Faster R-CNN [35].

Additionally, a Mask R-CNN model was trained on the same dataset.

Table 1 shows the resulting mAP (mean average precision), mAR (mean average recall), and F1 score metrics.

Based on this table, the YOLOv5 metrics are very good compared with those of Mask R-CNN. Mask R-CNN performed poorly in training, as shown by its low values of mAP = 0.13 and F1 score = 0.23.
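The F1 score combines precision and recall into one number as their harmonic mean, so it is low whenever either component is low. The sketch below uses hypothetical inputs (the Table 1 values are not reproduced here); implementations may also compute F1 per class and then average, so this is the basic definition rather than the exact procedure behind Table 1.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (defined as 0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


balanced = f1_score(0.9, 0.9)
unbalanced = f1_score(1.0, 0.1)  # one weak component drags F1 down
```

The harmonic mean is used instead of the arithmetic mean precisely because it penalizes imbalance: perfect precision cannot compensate for very low recall.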

To measure the distance between a tomato and the camera, we used a monocular (single-camera) measurement method (Figure 14).

This allows the position of the gripper tool relative to the tomato to be calibrated so that the tomato is grasped without damage (Figure 15).

The camera establishes a one-to-one relationship between the object and its image. Using this principle and similar triangles, the distance d from the camera to the object can be deduced from known parameters: the focal length f, the radius of the tomato in the image plane r, and the radius of the tomato in the object plane R:

$$d = \frac{fR}{r}.$$

However, this method is limited by object size: the recognition program needs information about the real size of the object and compares it with the predefined size. In this research, we applied the method only to cherry tomatoes about 30 mm in diameter, so the distance measurement is applicable only to tomatoes of about that diameter [35].
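The range estimate d = fR/r is a single division once the units are consistent: if f and r are both in pixels, d comes out in the same unit as R. A minimal sketch with hypothetical camera values (an 800-pixel focal length and a 40-pixel image radius) and the 30 mm cherry tomato assumed above:

```python
def distance_from_radius(f_px: float, R_mm: float, r_px: float) -> float:
    """Pinhole-camera range estimate d = f * R / r.

    f_px: focal length in pixels; r_px: tomato radius in the image in pixels;
    R_mm: real tomato radius in mm. The result is in mm.
    """
    if r_px <= 0:
        raise ValueError("image radius must be positive")
    return f_px * R_mm / r_px


d = distance_from_radius(f_px=800.0, R_mm=15.0, r_px=40.0)  # 30 mm tomato -> R = 15 mm
```

The sketch also makes the size limitation explicit: if a 40 mm tomato were passed in with the assumed R = 15 mm, the estimate would be proportionally wrong.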

4. Kinematic and Kinetic Formulations

4.1. Forward Kinematic Formulation

A coordinate system is attached to every universal joint. The homogeneous coordinate transformation matrices are built from the following quantities: the initial position of the base; the two rotation matrices of the ith universal joint, one for each of its two rotation angles; the rotation matrix of the ith disk, which rotates by a given angle about the axial axis; and L, the length between neighboring universal joints (Figure 16).

Multiplying the H-matrices successively yields the unit vectors and the position vector of the ith coordinate system, which is the position of the ith universal joint $U_i$. From these, the position vector of the end-point and the positions of the sliding plates of the manipulator are obtained, using the fixed length between the nth universal joint and the most distal plate.
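The successive multiplication can be sketched numerically. This is a minimal illustration, assuming that each unit applies a rotation about x and a rotation about y (the universal joint) followed by a rotation about z (the disk), that consecutive joints are separated by L along the current axial (z) direction, and that the end-point lies a fixed length beyond the nth joint. The rotation order and all names are hypothetical and may differ from the matrices used for TakoBot.

```python
import math
from typing import List, Sequence, Tuple

Mat = List[List[float]]
Vec3 = Tuple[float, float, float]


def matmul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rot_x(t: float) -> Mat:
    c, s = math.cos(t), math.sin(t)
    return [[1, 0, 0, 0], [0, c, -s, 0], [0, s, c, 0], [0, 0, 0, 1]]


def rot_y(t: float) -> Mat:
    c, s = math.cos(t), math.sin(t)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def rot_z(t: float) -> Mat:
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def trans_z(d: float) -> Mat:
    return [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, d], [0, 0, 0, 1]]


def forward_kinematics(angles: Sequence[Tuple[float, float, float]], L: float,
                       l_end: float) -> Tuple[List[Vec3], Vec3]:
    """angles[i] = (x-rotation, y-rotation, disk z-rotation) of unit i (hypothetical order)."""
    H = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # base frame
    joints: List[Vec3] = []
    for i, (a, b, c) in enumerate(angles):
        if i > 0:
            H = matmul(H, trans_z(L))  # move along the previous axis to the next joint
        H = matmul(H, matmul(rot_x(a), matmul(rot_y(b), rot_z(c))))
        joints.append((H[0][3], H[1][3], H[2][3]))  # position of joint U_i
    z_axis = (H[0][2], H[1][2], H[2][2])  # axial unit vector of the last frame
    end = tuple(p + l_end * z for p, z in zip(joints[-1], z_axis))
    return joints, end


joints, end = forward_kinematics([(0.0, 0.0, 0.0)] * 3, L=0.1, l_end=0.05)
joints_bent, end_bent = forward_kinematics(
    [(0.0, math.pi / 2, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)], L=0.1, l_end=0.05)
```

In the straight configuration the end-point lies at 2L + l_end along z; bending the first joint by 90° about y swings the whole chain onto the x axis.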

The position vectors of the eight holes in the first section and in the second section at the base plate are then determined. Here, the axial length between the ith universal joint and the ith plate varies as the plate slides along the rods, except for the most distal plate, whose distance from the nth universal joint is fixed.
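Once hole positions are known, a wire's routed length can be estimated. The sketch below is a hypothetical illustration, not the formulation used here: it assumes each hole lies on a plate at an axial offset from its joint frame and at a fixed radius and angle around the axis, and that the wire runs in straight segments between consecutive holes.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def hole_position(origin: Vec3, x_axis: Vec3, y_axis: Vec3, z_axis: Vec3,
                  axial_offset: float, radius: float, angle: float) -> Vec3:
    """origin + axial_offset * z + radius * (cos(angle) * x + sin(angle) * y)."""
    return tuple(
        o + axial_offset * z + radius * (math.cos(angle) * x + math.sin(angle) * y)
        for o, x, y, z in zip(origin, x_axis, y_axis, z_axis)
    )


def wire_path_length(holes: Sequence[Vec3]) -> float:
    """Sum of straight segments between consecutive holes threaded by the same wire."""
    return sum(math.dist(a, b) for a, b in zip(holes, holes[1:]))


# Straight configuration with hypothetical dimensions: joints every 0.1 m along z,
# plates 0.02 m above each joint, holes on a 0.03 m radius at angle 0.
X, Y, Z = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
holes = [hole_position((0.0, 0.0, 0.1 * i), X, Y, Z, 0.02, 0.03, 0.0) for i in range(3)]
length = wire_path_length(holes)
```

In the real manipulator, the frames would come from the forward kinematics and the axial offsets would be the sliding-plate lengths, so bending the arm changes the path length seen by each motor.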

4.2. Kinetic Formulation

TakoBot has two actuated sections: the first section (distal part) and the second section (proximal part). Each section is operated by four actuating wires driven by two motors, so eight cables are actuated in total. The kinetic formulation describes the motion in terms of forces, in combination with the springs and the motor angles. It must also include the pretension mechanism in order to calculate the wire tensions.

The second segment has m units and the first segment has n−m units.

The eight wires are labeled as four pairs.

The moment equilibrium at $U_n$, which belongs to the first segment, includes the payload applied at the end-point and the gravity acceleration vector.

The moment equilibrium at each remaining universal joint $U_i$ of the first segment is written in terms of the wire tensions, the spring tensions of the ith unit, and the mass of one unit, which includes the plate, the rod, and the universal joint (Figure 17). Here, “×” denotes the cross product and “|*|” the norm of a vector.

The spring tensions are obtained with the spring coefficient k. Equations (7) and (8) contain 3(n−m) equations involving 4(n−m)−1 variables: the three rotation angles of each of the n−m units (the two universal joint angles and the disk angle) and the slide lengths of the plates.

The force equilibrium at the ith plate is expressed by Equation (10), which provides n−m−1 equations. Combined with (7) and (8), we obtain 4(n−m)−1 equations, which is exactly the number needed to solve for the 4(n−m)−1 variables for a given set of wire tensions.

The moment equilibrium at $U_m$, the most distal universal joint of the second segment, is given by Equation (11). For the second segment, equations similar to (8)–(10) are derived by replacing the quantities of the first segment in (8), (9), and (10) with the corresponding quantities of the second segment. As a result, we obtain 4m equations, including (11), which is exactly the number needed to solve for the 4m variables of the second segment for a given set of wire tensions (Figure 17).
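The bookkeeping of equations and unknowns described above can be summarized as follows, using the equation numbers cited in the text:

```latex
\underbrace{3(n-m)}_{\text{moments, (7) and (8)}}
+ \underbrace{(n-m-1)}_{\text{plate forces, (10)}}
= 4(n-m)-1,
\qquad
\bigl[4(n-m)-1\bigr] + \underbrace{4m}_{\text{second segment}} = 4n-1 .
```

The total of 4n−1 equilibrium equations matches the 4n−1 unknowns, which is why the coefficient matrix used in the inverse kinematic solution is square.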

4.3. Pretension Mechanism Formulation

Each pretension spring receives a force of 2f, so the wire tension is determined by the compression length of the pretension spring and its spring constant. The compression lengths are determined by the motor rotation angle and the wire length (Figure 18).

Here, the initial compression lengths of the pretension springs are preset. Substituting Equation (12) into Equation (13), the wire tensions are determined by the four motor angles (Figure 19), in terms of the motor rotation angle that generates the pretension, the lead of the screw rod, and the spring constant of the pretension spring.
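How a motor angle turns into a wire tension can be sketched under a simplified, hypothetical model: one motor revolution advances the screw by one lead, the spring compression is the preset compression plus the screw advance minus any wire take-up (the sign convention is an assumption), and, as stated above, the spring carries 2f. The parameter values are illustrative only.

```python
import math


def pretension_wire_tension(k_p: float, x0: float, lead: float, motor_angle: float,
                            wire_length_change: float = 0.0) -> float:
    """Wire tension f under a simplified lead-screw pretension model (hypothetical).

    compression = x0 + lead * motor_angle / (2*pi) - wire_length_change
    the pretension spring carries 2f, so f = k_p * compression / 2
    """
    advance = lead * motor_angle / (2.0 * math.pi)  # screw advance in metres
    compression = x0 + advance - wire_length_change
    if compression < 0.0:
        raise ValueError("spring not compressed: the wire would go slack")
    return k_p * compression / 2.0


# Hypothetical values: 2000 N/m spring, 5 mm preset, 2 mm lead, 2.5 motor revolutions
f = pretension_wire_tension(k_p=2000.0, x0=0.005, lead=0.002,
                            motor_angle=2.5 * 2.0 * math.pi)
```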

4.4. Inverse Kinematic Solution

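For a given set of variables, the end-point position is calculated by Equation (6). Taking the total differential of the end-point position with respect to these variables and the motor angles gives a linear relation between their variations, whose coefficients are the corresponding Jacobian matrices.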

Similarly, the 4n−1 equations provided by Equations (7), (8), (10), and (11) involve the same variables together with the motor angles. Taking their total differential as well gives a second linear relation. Since its coefficient matrix with respect to the variables is square, this relation can be solved for the variation of the variables in terms of the variation of the motor angles.

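Substituting (17) into (15) gives a linear relation between the variation of the end-point and the variation of the motor angles, which can be solved for the motor-angle variation by using a generalized inverse of the Jacobian. The solution consists of the generalized inverse applied to the end-point variation, plus a null projection operator of the Jacobian applied to a correction of the motor-angle variation; this correction minimizes a positive scalar potential by making use of the redundant actuation.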
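The structure of this solution is the standard redundancy-resolution formula Δq = J⁺Δx + (I − J⁺J)ξ. The sketch below assumes a full-row-rank Jacobian, for which J⁺ = Jᵀ(JJᵀ)⁻¹, and leaves the correction ξ as an input (for example, ξ = −α∇V for some gain α); these choices and all names are assumptions for illustration, not necessarily the generalized inverse and correction used for TakoBot.

```python
from typing import List

Matrix = List[List[float]]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(a: Matrix) -> Matrix:
    """Gauss-Jordan inverse with partial pivoting."""
    n = len(a)
    m = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        if abs(m[p][c]) < 1e-12:
            raise ValueError("singular matrix")
        m[c], m[p] = m[p], m[c]
        pivot = m[c][c]
        m[c] = [v / pivot for v in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[c])]
    return [row[n:] for row in m]


def pinv_full_row_rank(J: Matrix) -> Matrix:
    """Generalized inverse J^T (J J^T)^-1 for a full-row-rank J."""
    Jt = transpose(J)
    return matmul(Jt, inverse(matmul(J, Jt)))


def redundant_step(J: Matrix, dx: List[float], xi: List[float]) -> List[float]:
    """dq = J+ dx + (I - J+ J) xi: track dx, use leftover freedom to move along xi."""
    Jp = pinv_full_row_rank(J)
    n = len(J[0])
    JpJ = matmul(Jp, J)
    null_proj = [[(1.0 if i == j else 0.0) - JpJ[i][j] for j in range(n)] for i in range(n)]
    task = [sum(Jp[i][k] * dx[k] for k in range(len(dx))) for i in range(n)]
    null = [sum(null_proj[i][k] * xi[k] for k in range(n)) for i in range(n)]
    return [t + s for t, s in zip(task, null)]


J = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]  # hypothetical 2x3 Jacobian
dq = redundant_step(J, dx=[1.0, 0.0], xi=[1.0, 2.0, 3.0])
achieved = [sum(J[i][k] * dq[k] for k in range(3)) for i in range(2)]
```

Whatever ξ is chosen, the null-space term does not change the achieved end-point variation (`achieved` equals `dx`), which is what allows the extra actuation to be spent on minimizing the potential.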

Equation (19) therefore provides a family of motor-angle solutions for a given variation of position and direction.

Applying the Euler method gives a variational equation, which is solved step by step. As a candidate for the potential, we take a function of the z component of the axial unit vector of the end-point. Minimizing it keeps the axial direction of the end-point in the horizontal plane as far as possible while maintaining the designated position (Figure 20).
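The Euler-stepped scheme can be illustrated on a much simpler redundant mechanism. The sketch below is a planar stand-in, not TakoBot: a hypothetical three-link arm with unit links tracks a 2D target (one redundant degree of freedom), and the potential V = φ²/2 on the orientation φ of the last link plays the role of the paper's potential on the z component, driving the final link toward "horizontal" (φ = 0) through the null space. Gains, step count, and names are assumptions.

```python
import math
from typing import List, Tuple

LINKS = (1.0, 1.0, 1.0)  # hypothetical planar 3-link arm


def forward(q: List[float]) -> Tuple[float, float, float]:
    """End-point (x, y) and orientation phi of the last link."""
    x = y = phi = 0.0
    for length, qi in zip(LINKS, q):
        phi += qi
        x += length * math.cos(phi)
        y += length * math.sin(phi)
    return x, y, phi


def jacobian(q: List[float]) -> List[List[float]]:
    """2x3 position Jacobian d(x, y)/dq."""
    cumulative, phi = [], 0.0
    for qi in q:
        phi += qi
        cumulative.append(phi)
    J = [[0.0] * 3, [0.0] * 3]
    for j in range(3):
        for k in range(j, 3):
            J[0][j] -= LINKS[k] * math.sin(cumulative[k])
            J[1][j] += LINKS[k] * math.cos(cumulative[k])
    return J


def pinv(J: List[List[float]]) -> List[List[float]]:
    """J^T (J J^T)^-1 for a full-row-rank 2x3 matrix (closed-form 2x2 inverse)."""
    a = sum(v * v for v in J[0])
    b = sum(u * v for u, v in zip(J[0], J[1]))
    d = sum(v * v for v in J[1])
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[J[0][i] * inv[0][c] + J[1][i] * inv[1][c] for c in range(2)] for i in range(3)]


def track(q: List[float], target: Tuple[float, float], gain: float = 0.05,
          alpha: float = 0.05, steps: int = 4000) -> List[float]:
    q = list(q)
    for _ in range(steps):
        x, y, phi = forward(q)
        err = (target[0] - x, target[1] - y)
        J = jacobian(q)
        Jp = pinv(J)
        xi = [-alpha * phi] * 3  # -alpha * dV/dq with V = phi**2 / 2
        N = [[(1.0 if i == j else 0.0) - sum(Jp[i][c] * J[c][j] for c in range(2))
              for j in range(3)] for i in range(3)]
        dq = [gain * (Jp[i][0] * err[0] + Jp[i][1] * err[1])
              + sum(N[i][j] * xi[j] for j in range(3)) for i in range(3)]
        q = [qi + dqi for qi, dqi in zip(q, dq)]  # explicit Euler step
    return q


q_final = track([0.3, 0.3, 0.3], target=(2.0, 0.5))
x, y, phi = forward(q_final)
```

The position error is driven to zero by the first term, while the null-space term slowly rotates the last link toward φ = 0 without disturbing the end-point, mirroring the "horizontal as far as possible while keeping a designated position" behavior described above.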

6. Experiment

As the controller, we used an Arduino Uno board with TMC2208 drivers for the stepper motors. For continuous operation, we also installed a fan to cool the electronic components.
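Because the motors are stepper motors, a commanded motor angle must be converted into a number of step pulses sent to the driver. A minimal sketch of that conversion, assuming a common 200-step-per-revolution (1.8°) motor and a 16-microstep driver setting; both values are assumptions, not the configuration reported here, and the sketch is written in Python for illustration rather than as Arduino firmware.

```python
def angle_to_steps(angle_deg: float, full_steps_per_rev: int = 200, microsteps: int = 16) -> int:
    """Number of STEP pulses for a rotation; the sign selects the direction."""
    steps_per_rev = full_steps_per_rev * microsteps
    return round(angle_deg * steps_per_rev / 360.0)


quarter_turn = angle_to_steps(90.0)
reverse = angle_to_steps(-45.0)
```

With these assumed settings, one pulse corresponds to 360/3200 = 0.1125°, which bounds the resolution of the commanded motor angles.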

For the experiment, we made artificial cherry tomatoes and hung them in front of the manipulator. The task was to reach and grasp a tomato, detach it from the stem, and put it into the basket. During the experiment, we also tested the robot's manipulability, such as reaching the object from various angles (Figure 23).

The total length of the robot arm is 800 mm: the slender (mobile) part of the robot is 400 mm long, and the other 400 mm is taken up by the control box and actuators. The slider of the platform is 1000 mm long, but only 600 mm is available because the stationary part of the manipulator occupies 400 mm.

In the experiments, TakoBot demonstrated that it can work in a confined workspace and has high reachability. A single arm was sufficient to perform the given task. In a real-world experiment, additional obstacles were placed in the workspace to test the reachability and obstacle avoidance capability of the robot arm; stiff white paper was used to imitate a confined workspace.

During the experiment, we found that the harvesting time increased when obstacles were present, because the slender part of the robot spent more time adapting to the newly constrained environment and reaching the object (Figure 24). The tomato grasping process also took more time, accounting for almost half of the whole harvesting process. Nevertheless, the harvesting success rate was sufficiently high: the manipulator grasped all detected tomatoes, although it needed more time to complete the task.

7. Conclusions

This paper described a new continuum robot design and its state-of-the-art application in the agriculture industry. Continuum robots generally have a limited payload capacity; therefore, extensive research and design iterations were conducted to improve the payload capacity of the robot by using a pretension mechanism. Such a pick-and-place capability could open new horizons for continuum robot applications. Moreover, the experimental results indicate that this robot can work safely in collaboration with humans. The proposed kinematic and kinetic formulations are also simplified for broad application. In addition, the proposed gripper tool for tomato separation proved to be a simple and reliable technical solution for the harvesting process.

The paper also explained the tomato separation process, the tomato grasping tool design, the AI-based tomato recognition system, and the control principle (the kinematic formulation of the hardware and the control device).

However, the tomato harvesting process took 56 s on average (Figure 25), which is slower than human work. This cycle time could be reduced by optimizing the robot size and the control algorithm.

In the future, we plan to adapt the robot to real-world environments and to improve the control algorithm by implementing nonlinear model predictive control and by collecting data to optimize the robot trajectory. These improvements could reduce the total harvesting time and increase the success rate of tomato recognition.