A Comprehensive Study of Mobile Robot: History, Developments, Applications, and Future Research Perspectives

Abstract

1. Introduction

Isaac Asimov’s Three Laws of Robotics are:

- First law: A robot should not injure a human being or, through inaction, allow a human being to come to harm.

- Second law: A robot should obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- Third law: A robot should protect its own existence, as long as such protection does not conflict with the First or Second Law.

2. Literature Survey

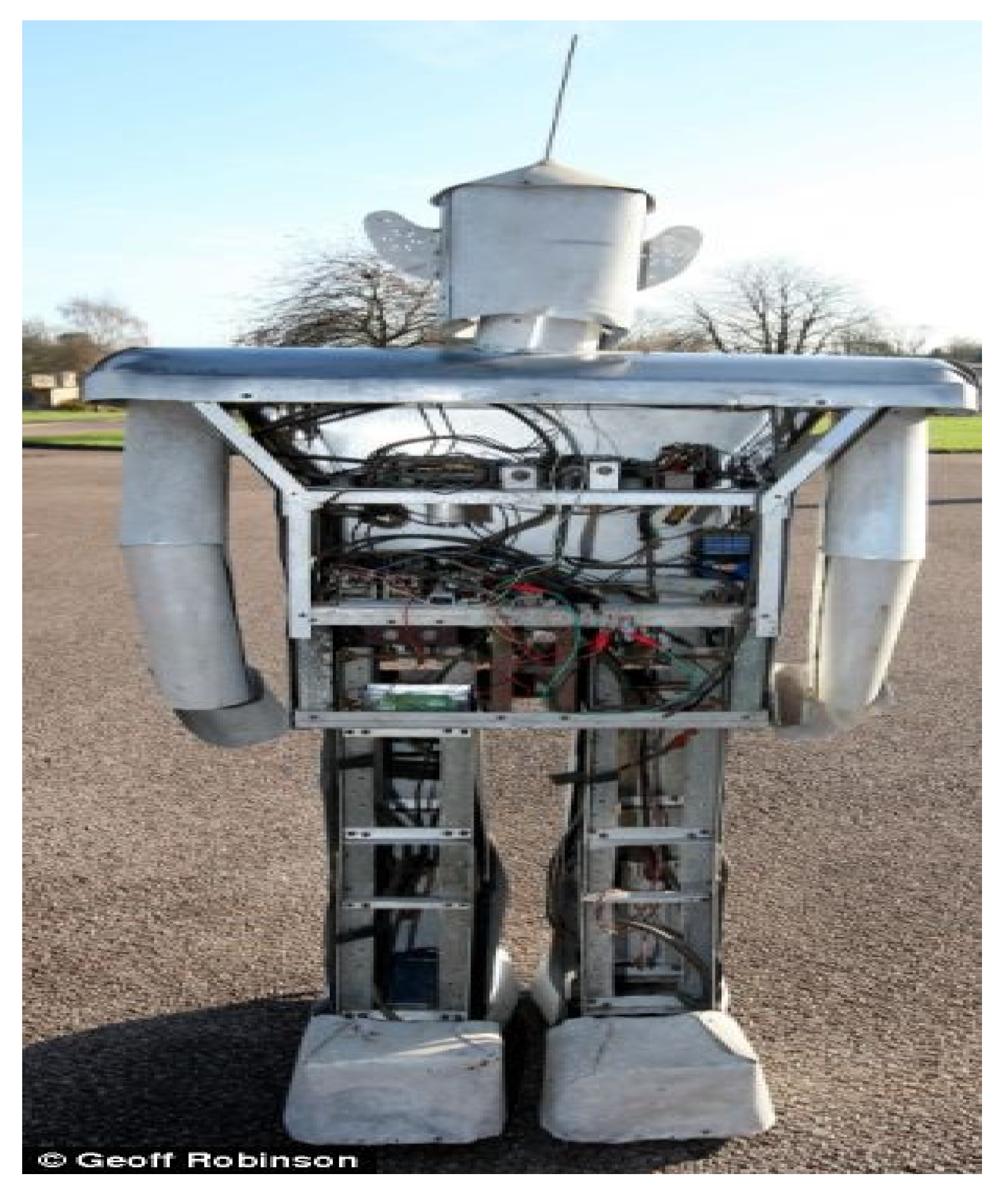

2.1. From 1970 to 1980

2.2. From 1981 to 1990

2.3. From 1991 to 2000

2.4. From 2001 to 2010

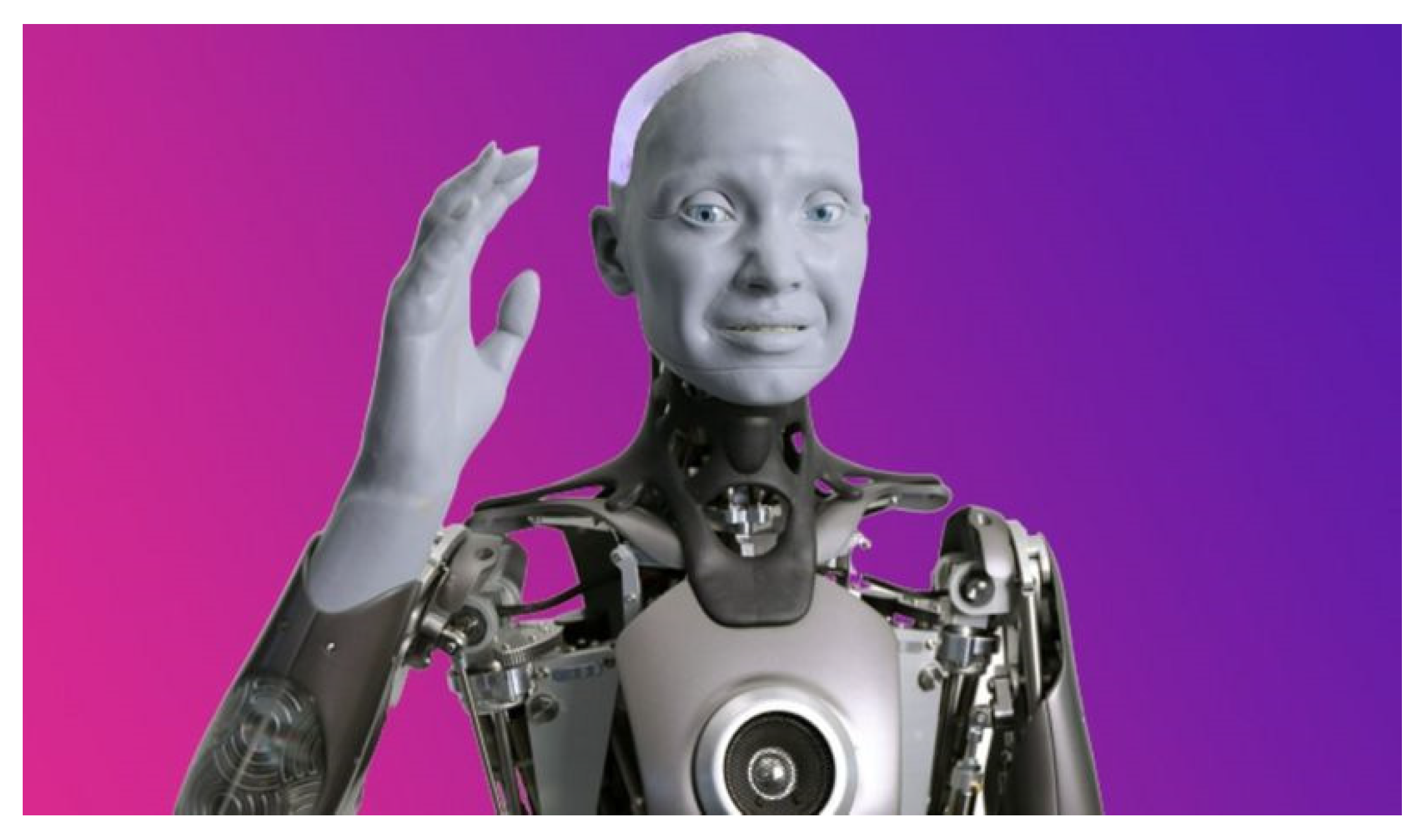

2.5. From 2011 to 2021

3. Autonomous Navigation of a Mobile Robot

3.1. Mapping
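
A common way to store a map built from range sensors is an occupancy grid, in which each cell holds the log-odds that it is occupied. The following is a minimal sketch of that update, not the method of any particular surveyed system. It assumes a single range beam traced with Bresenham's line algorithm and a fixed inverse sensor model whose probabilities (0.7 for the cell where the beam ends, 0.3 for cells it passes through) are illustrative assumptions; the function names are hypothetical.

```python
import math

# Illustrative inverse-sensor-model values (assumptions, not from the paper).
L_OCC = math.log(0.7 / 0.3)   # evidence added when a beam ends in a cell
L_FREE = math.log(0.3 / 0.7)  # evidence added when a beam passes through a cell
L_PRIOR = 0.0                 # log-odds of p = 0.5, i.e. "unknown"


def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the segment from (x0, y0) to (x1, y1)."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def update_grid(grid, robot, hit):
    """Log-odds update for one beam from the `robot` cell to the `hit` cell."""
    ray = bresenham(*robot, *hit)
    for cell in ray[:-1]:  # cells the beam passed through are evidence of free space
        grid[cell] = grid.get(cell, L_PRIOR) + L_FREE - L_PRIOR
    grid[hit] = grid.get(hit, L_PRIOR) + L_OCC - L_PRIOR  # beam endpoint: occupied


def probability(grid, cell):
    """Convert a cell's log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(grid.get(cell, L_PRIOR)))


if __name__ == "__main__":
    grid = {}  # sparse grid: unseen cells stay at the prior
    for _ in range(3):
        update_grid(grid, (0, 0), (4, 0))
    print(round(probability(grid, (4, 0)), 3))  # 0.927
    print(round(probability(grid, (2, 0)), 3))  # 0.073
```

Working in log-odds turns the Bayesian update into an addition per cell, which is why this representation is popular for incremental mapping.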

3.2. Localization
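
Localization is usually posed as recursive state estimation: predict the pose from odometry, then correct it with a sensor reading. As a minimal sketch, assuming a robot that moves along a single axis and Gaussian noise with illustrative variances, a one-dimensional Kalman filter performs that cycle as follows (the `Gaussian1D`, `predict`, and `correct` names are hypothetical).

```python
from dataclasses import dataclass


@dataclass
class Gaussian1D:
    mean: float  # estimated position (m)
    var: float   # variance of the estimate (m^2)


def predict(belief, motion, motion_var):
    """Motion update: shift the estimate by the odometry reading and grow its uncertainty."""
    return Gaussian1D(belief.mean + motion, belief.var + motion_var)


def correct(belief, z, meas_var):
    """Measurement update: blend in a position fix z, weighted by the Kalman gain."""
    k = belief.var / (belief.var + meas_var)  # gain near 1 trusts the sensor, near 0 the prior
    return Gaussian1D(belief.mean + k * (z - belief.mean), (1.0 - k) * belief.var)


if __name__ == "__main__":
    b = Gaussian1D(0.0, 1.0)
    b = predict(b, motion=1.0, motion_var=0.5)  # mean 1.0, var 1.5
    b = correct(b, z=1.2, meas_var=0.5)         # mean approx. 1.15, var 0.375
    print(b)
```

Each prediction increases the variance and each correction reduces it, which is the basic trade-off that extended and unscented Kalman filters generalize to nonlinear, multi-dimensional poses.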

3.3. SLAM (Simultaneous Localization and Mapping)

3.4. Path Planning

3.5. SPLAM (Simultaneous Planning, Localization, and Mapping)

4. Some Major Applications of Mobile Robots

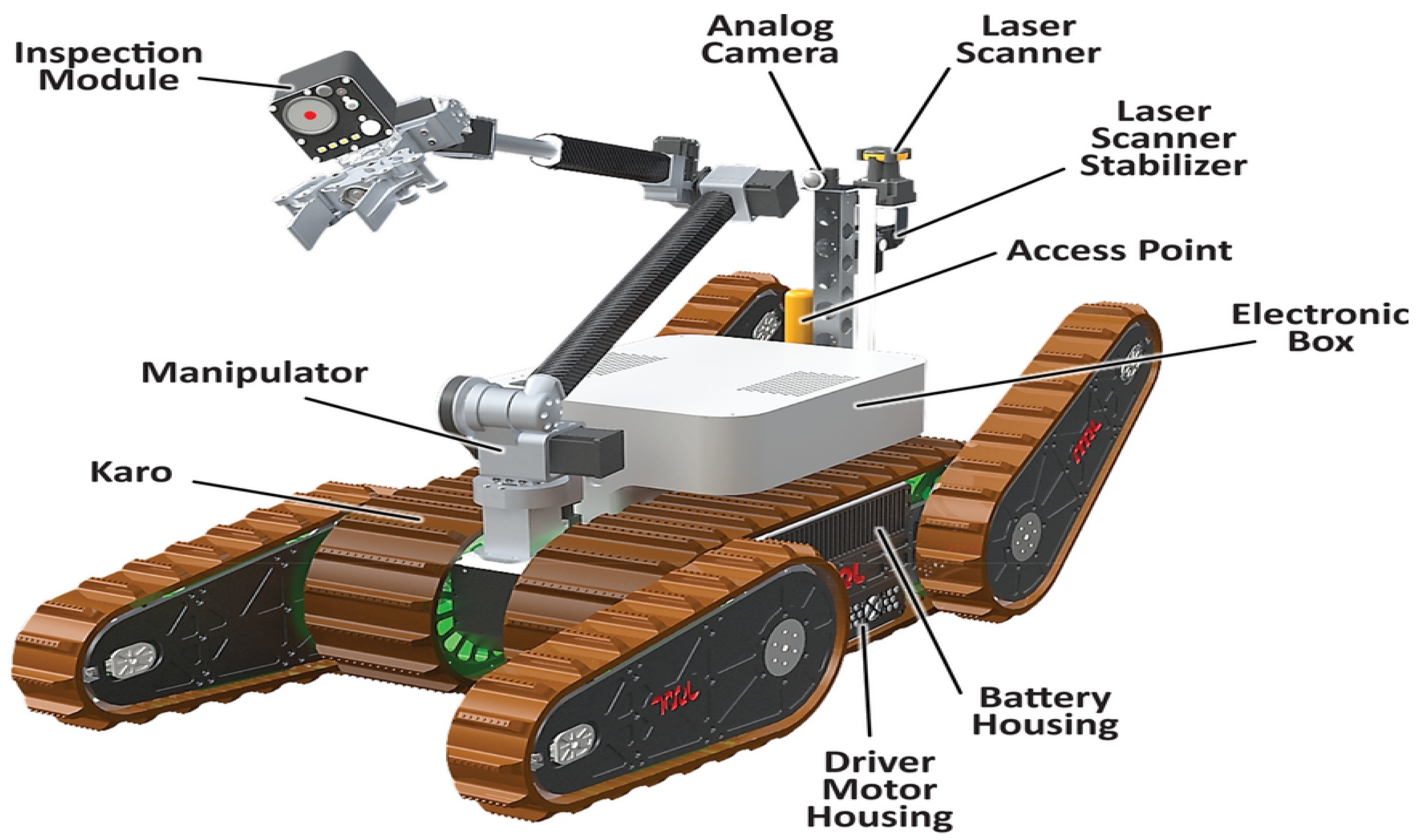

5. Architecture and Components of a Typical Modern Autonomous Mobile Robot

6. The Mechanism of Mobile Robots
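
Many wheeled mobile robots use a differential drive: two independently driven wheels on a common axle, steered by varying their relative speeds. The following is a minimal sketch, assuming ideal rolling without slip and constant wheel speeds over the time step, of how the two wheel speeds map to a new pose (x, y, θ); the function name and parameters are illustrative.

```python
import math


def diff_drive_step(x, y, theta, v_left, v_right, wheel_base, dt):
    """Integrate differential-drive kinematics exactly over one time step.

    v_left, v_right: wheel ground speeds (m/s); wheel_base: distance between wheels (m).
    """
    v = (v_right + v_left) / 2.0             # forward speed of the axle midpoint
    omega = (v_right - v_left) / wheel_base  # yaw rate (rad/s)
    if abs(omega) < 1e-12:
        # Straight-line motion: avoid dividing by a zero yaw rate.
        return x + v * dt * math.cos(theta), y + v * dt * math.sin(theta), theta
    r = v / omega                            # signed turning radius
    new_theta = theta + omega * dt
    new_x = x + r * (math.sin(new_theta) - math.sin(theta))
    new_y = y - r * (math.cos(new_theta) - math.cos(theta))
    return new_x, new_y, new_theta
```

Equal wheel speeds give straight motion, opposite speeds give rotation in place (v = 0), and anything in between drives the robot along a circular arc.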

7. Intelligent Control System of Mobile Robots

7.1. The A* Algorithms
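
A* searches a graph by expanding the node with the smallest f = g + h, where g is the cost already paid from the start and h is a heuristic estimate of the remaining cost. The sketch below assumes a 4-connected occupancy grid with unit step costs and a Manhattan-distance heuristic, which never overestimates on such a grid, so the returned path is a shortest one. It is an illustration, not code from the surveyed works.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

With h = 0 the same code degenerates to Dijkstra's algorithm; a good heuristic simply lets A* expand fewer cells before reaching the goal.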

7.2. Probabilistic Algorithms
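
Probabilistic algorithms represent the robot's pose as a probability distribution rather than a single estimate. One of the simplest instances is the discrete Bayes (histogram) filter; Monte Carlo localization follows the same sense/move cycle but replaces the grid of cells with weighted samples. The sketch below assumes a cyclic one-dimensional corridor of labelled cells, a sensor model that weights cells matching the reading by 0.8 and all others by 0.2, and a motion model that may under- or overshoot by one cell; all names and probabilities are illustrative.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world, measurement, p_hit=0.8, p_miss=0.2):
    """Measurement update: weight each cell by how well it explains the reading."""
    weighted = [
        b * (p_hit if world[i] == measurement else p_miss)
        for i, b in enumerate(belief)
    ]
    return normalize(weighted)


def move(belief, step, p_exact=0.8, p_under=0.1, p_over=0.1):
    """Motion update on a cyclic world: the robot may move step-1, step, or step+1 cells."""
    n = len(belief)
    return [
        p_exact * belief[(i - step) % n]
        + p_under * belief[(i - step + 1) % n]
        + p_over * belief[(i - step - 1) % n]
        for i in range(n)
    ]


if __name__ == "__main__":
    world = ["door", "wall", "wall", "door", "wall"]
    belief = [1 / len(world)] * len(world)  # no idea where the robot starts
    belief = sense(belief, world, "door")   # mass concentrates on cells 0 and 3
    belief = move(belief, 1)                # shift right by one, blurring slightly
    belief = sense(belief, world, "wall")
    print([round(b, 3) for b in belief])
```

Sensing sharpens the distribution and moving blurs it; repeating the cycle lets the robot resolve ambiguous starting positions such as the two doors.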

7.3. The RRT Algorithms
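
A rapidly exploring random tree (RRT) grows a tree from the start by repeatedly sampling a random point, finding the nearest tree node, and extending a short step toward the sample if that step is collision-free. The sketch below is the basic single-tree variant with goal biasing, not RRT-Connect or RRT*. It assumes a 2-D workspace with disc-shaped obstacles, a segment collision check by dense sampling, and illustrative values for the step size, goal tolerance, and 10% goal bias.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5, max_iters=5000, seed=0):
    """Basic RRT in the plane.

    obstacles: list of (cx, cy, radius) discs; bounds: (xmin, xmax, ymin, ymax).
    Returns a list of points from start to a node within goal_tol of goal, or None.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collision_free(p, q):
        # Check evenly spaced samples along the segment p -> q against every disc.
        n = max(2, int(math.dist(p, q) / 0.05))
        for i in range(n + 1):
            t = i / n
            x, y = p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])
            if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
                return False
        return True

    for _ in range(max_iters):
        # Goal biasing: sample the goal itself 10% of the time.
        sample = goal if rng.random() < 0.1 else (
            rng.uniform(bounds[0], bounds[1]), rng.uniform(bounds[2], bounds[3]))
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        scale = min(1.0, step / d)
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not collision_free(near, new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:  # follow parent links back to the root
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

RRT finds a feasible path quickly but not an optimal one; variants such as RRT* add rewiring to improve path cost, and RRT-Connect grows trees from both ends to speed up single queries.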

8. Some Major Impacts of Mobile Robots and Artificial Intelligence

8.1. Impacts on the Workplace

8.2. Impacts on the Industries

8.3. Impacts on Human Lives

8.4. Impacts on the Human-Computer Interactions

9. Future Research Perspectives

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Raj, R.; Kos, A. A Comprehensive Study of Mobile Robot: History, Developments, Applications, and Future Research Perspectives. Appl. Sci. 2022, 12, 6951. https://doi.org/10.3390/app12146951
