Progressive Multi-Scale Fusion Network for Light Field Super-Resolution

Abstract

1. Introduction

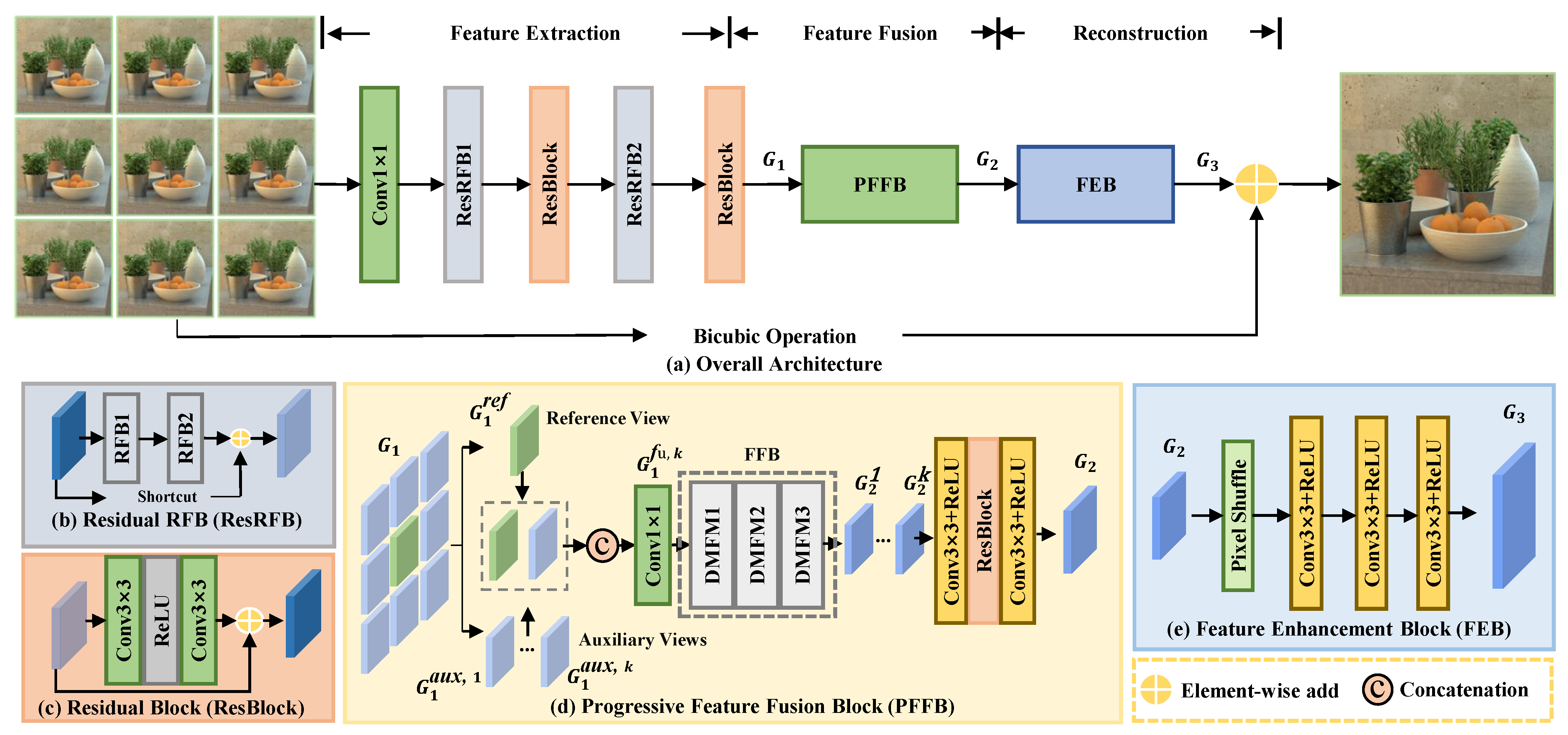

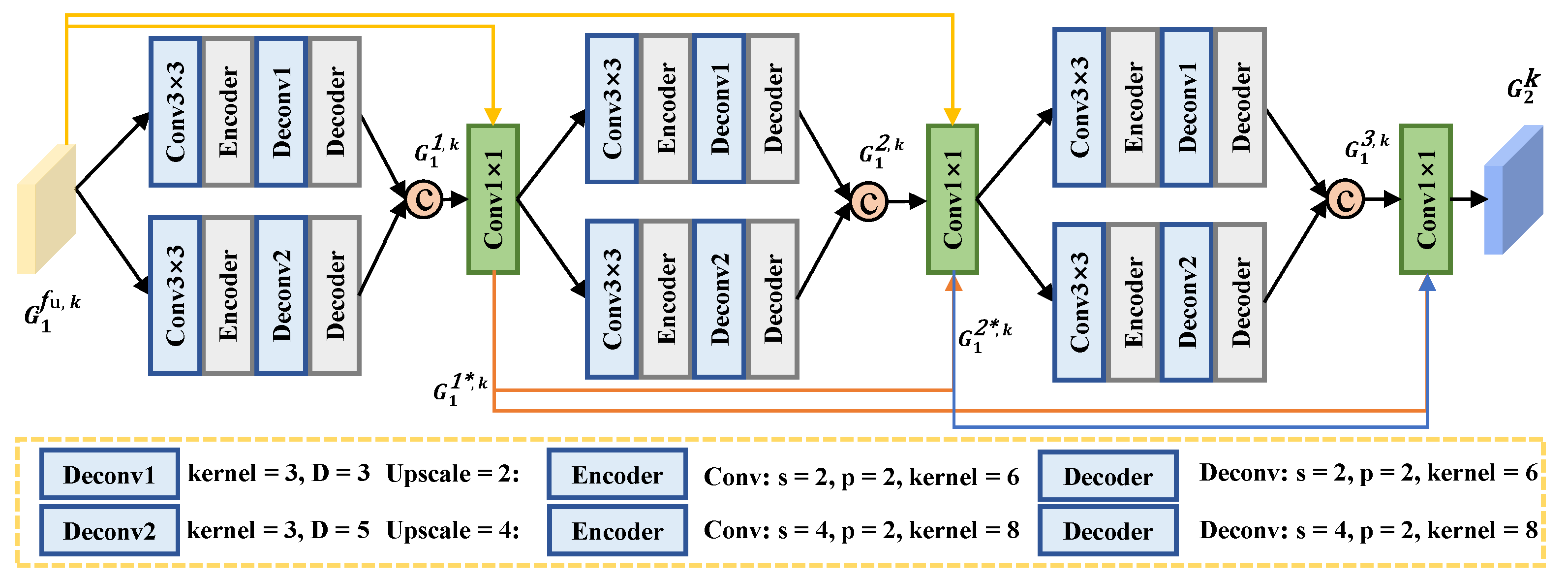

- We design the DMFM with a dual-branch structure that implicitly accounts for the influence of disparities and incorporates complementary information from auxiliary views.

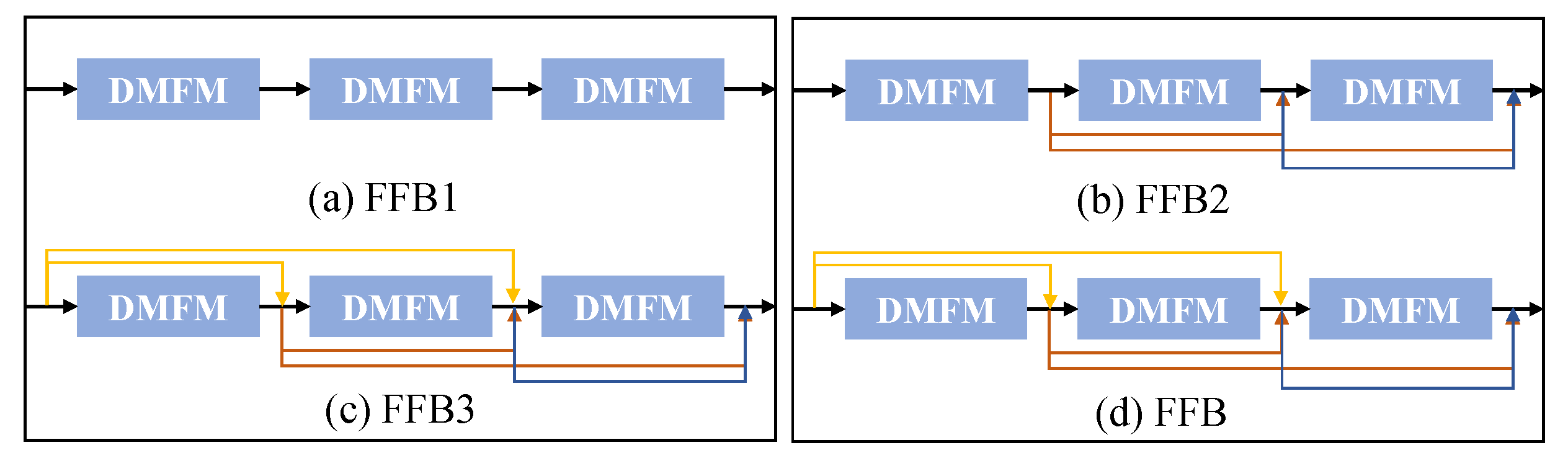

- The core of our network, the Progressive Feature Fusion Block (PFFB), consists mainly of three densely connected DMFMs. This block exploits multi-level features and preserves multi-scale fusion information across complementary views, and our experiments indicate that it fuses complementary features effectively to improve SR performance.

- Our PMFN achieves improved average performance compared with recent state-of-the-art methods.
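The densely connected design described in the contributions above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the internals of the DMFM are not given in this section, so each module here is a hypothetical dual-branch block (two 3×3 convolutions with dilation 1 and 2, a ReLU, and a 1×1 fusion), and the channel growth, the final 1×1 fusion and the residual connection are assumptions. How auxiliary-view features enter each module is also omitted; the sketch operates on a single (C, H, W) feature map.

```python
import numpy as np


def conv3x3(x, w, dilation=1):
    """Zero-padded ('same') 3x3 convolution of a (C_in, H, W) array.

    w has shape (C_out, C_in, 3, 3). Padding equals the dilation, so the
    spatial size is preserved. As in deep-learning libraries, this is a
    cross-correlation (the kernel is not flipped).
    """
    _, h, wd = x.shape
    d = dilation
    xp = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros((w.shape[0], h, wd))
    for ki in range(3):
        for kj in range(3):
            patch = xp[:, ki * d:ki * d + h, kj * d:kj * d + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, ki, kj], patch)
    return out


def dual_branch_module(x, p):
    """Hypothetical stand-in for a DMFM: two parallel 3x3 branches with
    different dilation rates (two receptive-field sizes), a ReLU, then a
    1x1 convolution that fuses both branches."""
    small = conv3x3(x, p["w_small"], dilation=1)
    large = conv3x3(x, p["w_large"], dilation=2)
    fused = np.maximum(np.concatenate([small, large], axis=0), 0.0)
    return np.einsum("oc,chw->ohw", p["w_fuse"], fused)


def densely_connected_block(x, modules, w_out):
    """Each module sees the concatenation of the block input and all
    earlier module outputs; a 1x1 conv fuses every level, and an assumed
    residual connection adds the block input back."""
    features = [x]
    for p in modules:
        features.append(dual_branch_module(np.concatenate(features, axis=0), p))
    return x + np.einsum("oc,chw->ohw", w_out, np.concatenate(features, axis=0))


def init_params(channels, growth, n_modules=3, seed=0):
    """Random weights with the channel counts the dense wiring requires."""
    rng = np.random.default_rng(seed)
    modules = []
    for k in range(n_modules):
        c_in = channels + k * growth  # input grows by `growth` per module
        modules.append({
            "w_small": rng.normal(0.0, 0.1, (growth, c_in, 3, 3)),
            "w_large": rng.normal(0.0, 0.1, (growth, c_in, 3, 3)),
            "w_fuse": rng.normal(0.0, 0.1, (growth, 2 * growth)),
        })
    w_out = rng.normal(0.0, 0.1, (channels, channels + n_modules * growth))
    return modules, w_out


x = np.random.default_rng(1).normal(size=(8, 16, 16))  # (C, H, W) feature map
modules, w_out = init_params(channels=8, growth=4)
y = densely_connected_block(x, modules, w_out)
print(y.shape)  # (8, 16, 16)
```

The dense wiring is what lets the last fusion step see features from every level (input, first, second and third module) at once, which is one way to read "fully exploit multi-level features".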

2. Related Work

2.1. Single Image Super-Resolution

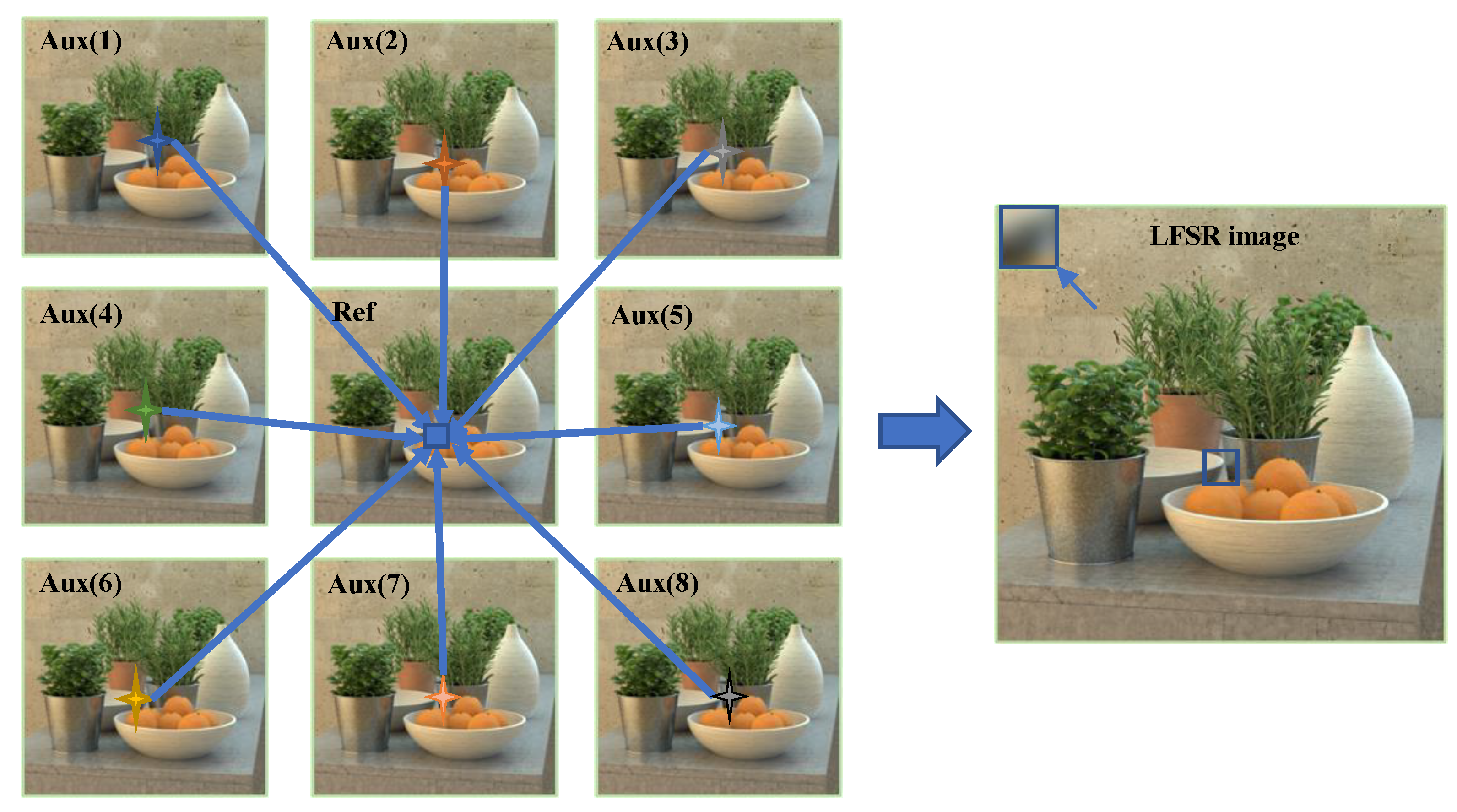

2.2. Light Field Super-Resolution

3. Progressive Multi-Scale Fusion Network

3.1. Overview

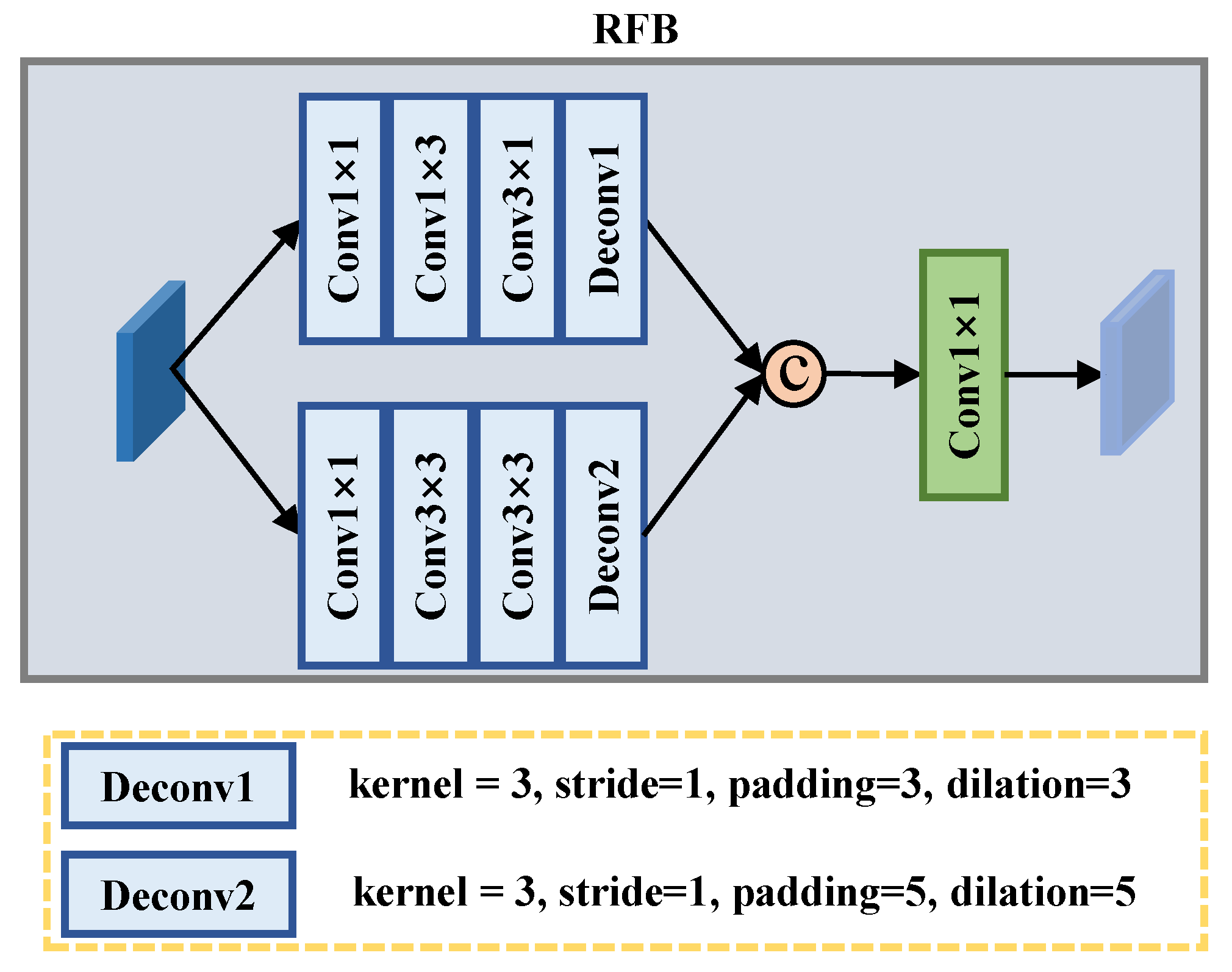

3.2. Residual Receptive Field Block (ResRFB)

3.3. Progressive Feature Fusion Block (PFFB)

3.4. Feature Enhancement Block (FEB)

4. Experiments

4.1. Datasets

4.2. Settings and Implementation Details

4.3. Comparison to the State of the Art

4.3.1. Quantitative Results

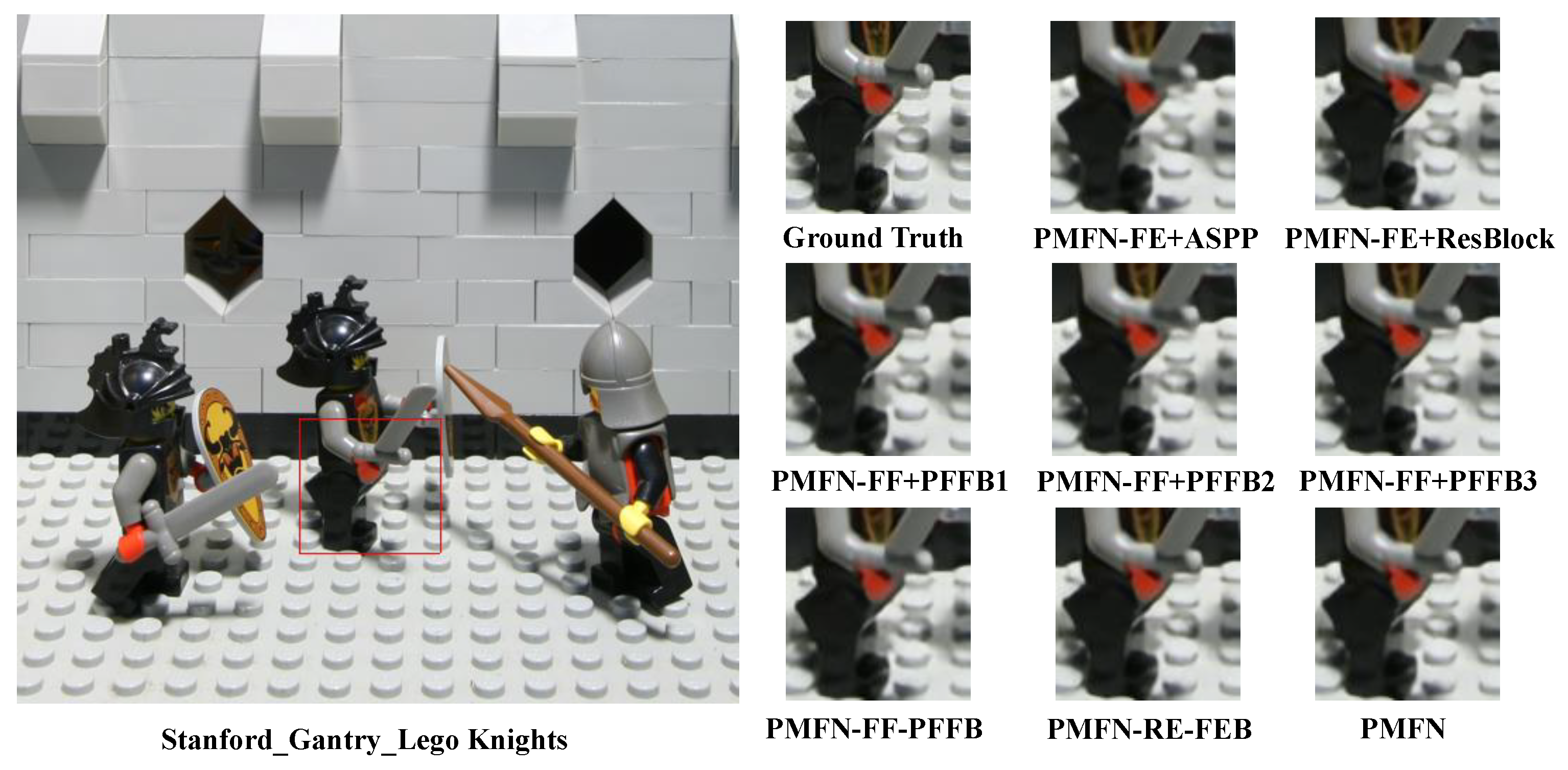

4.3.2. Qualitative Results

4.3.3. Parameters and FLOPs

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Huang, F.; Chen, K.; Wetzstein, G. The light field stereoscope. ACM Trans. Graph. 2015, 34, 1–12.

2. Yu, J. A light-field journey to virtual reality. IEEE MultiMedia 2017, 24, 104–112.

3. Kim, C.; Zimmer, H.; Pritch, Y.; Sorkine-Hornung, A.; Gross, M.H. Scene reconstruction from high spatio-angular resolution light fields. ACM Trans. Graph. 2013, 32, 73.

4. Zhu, H.; Wang, Q.; Yu, J. Occlusion-model guided antiocclusion depth estimation in light field. IEEE J. Sel. Top. Signal Process. 2017, 11, 965–978.

5. Piao, Y.; Li, X.; Zhang, M.; Yu, J.; Lu, H. Saliency detection via depth-induced cellular automata on light field. IEEE Trans. Image Process. 2019, 29, 1879–1889.

6. Zhang, M.; Li, J.; Wei, J.; Piao, Y.; Lu, H. Memory-oriented Decoder for Light Field Salient Object Detection. In Proceedings of NeurIPS, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Eds.; pp. 896–906.

7. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

8. Kim, J.; Kwon Lee, J.; Mu Lee, K. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

9. Wanner, S.; Goldluecke, B. Variational light field analysis for disparity estimation and super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 606–619.

10. Farrugia, R.A.; Galea, C.; Guillemot, C. Super resolution of light field images using linear subspace projection of patch-volumes. IEEE J. Sel. Top. Signal Process. 2017, 11, 1058–1071.

11. Wang, Y.; Liu, F.; Zhang, K.; Hou, G.; Sun, Z.; Tan, T. LFNet: A novel bidirectional recurrent convolutional neural network for light-field image super-resolution. IEEE Trans. Image Process. 2018, 27, 4274–4286.

12. Zhang, S.; Lin, Y.; Sheng, H. Residual networks for light field image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11046–11055.

13. Jin, J.; Hou, J.; Chen, J.; Kwong, S. Light field spatial super-resolution via deep combinatorial geometry embedding and structural consistency regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2260–2269.

14. Wang, Y.; Wang, L.; Yang, J.; An, W.; Yu, J.; Guo, Y. Spatial-angular interaction for light field image super-resolution. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 290–308.

15. Mo, Y.; Wang, Y.; Xiao, C.; Yang, J.; An, W. Dense Dual-Attention Network for Light Field Image Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4431–4443.

16. Wang, Y.; Yang, J.; Wang, L.; Ying, X.; Wu, T.; An, W.; Guo, Y. Light field image super-resolution using deformable convolution. IEEE Trans. Image Process. 2020, 30, 1057–1071.

17. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 184–199.

18. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

19. Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

20. Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11065–11074.

21. Wang, S.; Zhou, T.; Lu, Y.; Di, H. Contextual Transformation Network for Lightweight Remote-Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

22. Yoon, Y.; Jeon, H.G.; Yoo, D.; Lee, J.Y.; So Kweon, I. Learning a deep convolutional network for light-field image super-resolution. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 24–32.

23. Zhang, S.; Chang, S.; Lin, Y. End-to-end light field spatial super-resolution network using multiple epipolar geometry. IEEE Trans. Image Process. 2021, 30, 5956–5968.

24. Wang, S.; Zhou, T.; Lu, Y.; Di, H. Detail preserving transformer for light field image super-resolution. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022.

25. Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400.

26. Honauer, K.; Johannsen, O.; Kondermann, D.; Goldluecke, B. A dataset and evaluation methodology for depth estimation on 4d light fields. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 19–34.

27. Wanner, S.; Meister, S.; Goldluecke, B. Datasets and benchmarks for densely sampled 4D light fields. In Proceedings of the Vision, Modeling and Visualization (VMV 2013), Lugano, Switzerland, 11–13 September 2013; Volume 13, pp. 225–226.

28. Rerabek, M.; Ebrahimi, T. New light field image dataset. In Proceedings of the 8th International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016.

29. Le Pendu, M.; Jiang, X.; Guillemot, C. Light field inpainting propagation via low rank matrix completion. IEEE Trans. Image Process. 2018, 27, 1981–1993.

30. Vaish, V.; Adams, A. The (New) Stanford Light Field Archive; Computer Graphics Laboratory, Stanford University: Stanford, CA, USA, 2008; Volume 6.

31. Rossi, M.; Frossard, P. Geometry-consistent light field super-resolution via graph-based regularization. IEEE Trans. Image Process. 2018, 27, 4207–4218.

32. Yeung, H.W.F.; Hou, J.; Chen, X.; Chen, J.; Chen, Z.; Chung, Y.Y. Light field spatial super-resolution using deep efficient spatial-angular separable convolution. IEEE Trans. Image Process. 2018, 28, 2319–2330.

| Dataset | Training | Test | LF Disparity | Type |
|---|---|---|---|---|
| HCInew [26] | 20 | 4 | [−4, 4] | Synthetic |
| HCIold [27] | 10 | 2 | [−3, 3] | Synthetic |
| EPFL [28] | 70 | 10 | [−1, 1] | Real-world |
| INRIA [29] | 35 | 5 | [−1, 1] | Real-world |
| STFgantry [30] | 9 | 2 | [−7, 7] | Real-world |
| Total | 144 | 23 | | |

| Method | Scale | EPFL | HCInew | HCIold | INRIA | STFgantry |
|---|---|---|---|---|---|---|
| Bicubic | 2× | 29.50/0.935 | 31.69/0.934 | 37.46/0.978 | 31.10/0.956 | 30.82/0.947 |
| VDSR [8] | 2× | 32.64/0.960 | 34.45/0.957 | 40.75/0.987 | 34.56/0.975 | 35.59/0.979 |
| EDSR [19] | 2× | 33.05/0.963 | 34.83/0.959 | 41.00/0.987 | 34.88/0.976 | 36.26/0.982 |
| GB [31] | 2× | 31.22/0.959 | 35.25/0.969 | 40.21/0.988 | 32.76/0.972 | 35.44/0.983 |
| LFSSR [32] | 2× | 32.84/0.969 | 35.58/0.968 | 42.05/0.991 | 34.68/0.980 | 35.86/0.984 |
| resLF [12] | 2× | 33.46/0.970 | 36.40/0.972 | 43.09/0.993 | 35.25/0.980 | 37.83/0.989 |
| LF-ATO [13] | 2× | 34.05/0.975 | 37.11/0.976 | 44.15/0.994 | 35.96/0.984 | 39.36/0.992 |
| LF-InterNet [14] | 2× | 34.06/0.975 | 37.05/0.976 | 44.37/0.994 | 35.85/0.984 | 38.60/0.991 |
| LF-DFNet [16] | 2× | 34.17/0.976 | 37.31/0.977 | 44.24/0.994 | 36.04/0.984 | 39.28/0.992 |
| MEG-Net [23] | 2× | 33.30/0.971 | 35.98/0.970 | 42.60/0.992 | 35.19/0.981 | 36.53/0.986 |
| DPT [24] | 2× | 33.77/0.975 | 36.93/0.975 | 43.88/0.994 | 35.68/0.984 | 38.66/0.991 |
| PMFN (Ours) | 2× | 34.63/0.977 | 36.92/0.976 | 43.65/0.993 | 36.54/0.985 | 39.33/0.993 |
| Bicubic | 4× | 25.14/0.831 | 27.61/0.851 | 32.42/0.934 | 26.82/0.886 | 25.93/0.843 |
| VDSR [8] | 4× | 27.22/0.876 | 29.24/0.881 | 34.72/0.951 | 29.14/0.920 | 28.40/0.898 |
| EDSR [19] | 4× | 27.86/0.885 | 29.56/0.886 | 35.09/0.953 | 29.69/0.925 | 28.72/0.907 |
| GB [31] | 4× | 26.02/0.863 | 28.92/0.884 | 33.74/0.950 | 27.73/0.909 | 28.11/0.901 |
| LFSSR [32] | 4× | 28.27/0.908 | 30.72/0.913 | 36.70/0.959 | 30.31/0.945 | 30.15/0.939 |
| resLF [12] | 4× | 28.14/0.902 | 30.62/0.909 | 36.56/0.968 | 30.22/0.940 | 30.05/0.935 |
| LF-ATO [13] | 4× | 28.74/0.913 | 30.16/0.910 | 37.01/0.970 | 30.88/0.949 | 30.85/0.945 |
| LF-InterNet [14] | 4× | 28.59/0.912 | 30.88/0.914 | 36.95/0.971 | 30.59/0.948 | 30.33/0.940 |
| LF-DFNet [16] | 4× | 28.53/0.910 | 30.66/0.916 | 36.58/0.967 | 30.55/0.944 | 29.87/0.932 |
| MEG-Net [23] | 4× | 28.75/0.916 | 31.10/0.918 | 37.29/0.972 | 30.67/0.949 | 30.77/0.945 |
| DPT [24] | 4× | 28.54/0.911 | 30.92/0.914 | 37.00/0.970 | 30.66/0.948 | 30.65/0.943 |
| PMFN (Ours) | 4× | 29.30/0.917 | 30.93/0.917 | 36.94/0.969 | 31.70/0.950 | 30.97/0.947 |
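An average PSNR over the five test sets can be recomputed from the 4× rows above. The sketch below assumes an unweighted mean over datasets (each dataset counts once, regardless of how many test scenes it contains) and uses only values copied from the table for a few representative methods.

```python
# 4x PSNR (dB) per dataset, copied from the table above.
# Order: EPFL, HCInew, HCIold, INRIA, STFgantry
psnr_x4 = {
    "EDSR": [27.86, 29.56, 35.09, 29.69, 28.72],
    "LFSSR": [28.27, 30.72, 36.70, 30.31, 30.15],
    "MEG-Net": [28.75, 31.10, 37.29, 30.67, 30.77],
    "PMFN (Ours)": [29.30, 30.93, 36.94, 31.70, 30.97],
}


def mean(values):
    """Unweighted mean: every dataset contributes equally (assumption)."""
    return sum(values) / len(values)


averages = {name: round(mean(v), 2) for name, v in psnr_x4.items()}

# Print from best to worst average PSNR.
for name, avg in sorted(averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} {avg:.2f} dB")
# PMFN (Ours)  31.97 dB
# MEG-Net      31.72 dB
# LFSSR        31.23 dB
# EDSR         30.18 dB
```

Because the per-dataset entries are already rounded to two decimals, a mean recomputed this way can differ from a reported average in the last digit.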

| Method | Scale | Params (M) | FLOPs (G) | Avg. PSNR/SSIM |
|---|---|---|---|---|
| EDSR [19] | 4× | 38.89 | 40.66 × 25 | 30.18/0.911 |
| LFSSR [32] | 4× | 1.77 | 128.44 | 31.23/0.935 |
| LF-ATO [13] | 4× | 1.36 | 28.08 × 25 | 31.69/0.938 |
| DPT [24] | 4× | 3.78 | 58.64 | 31.56/0.937 |
| PMFN (Ours) | 4× | 3.11 | 49.61 × 25 | 31.97/0.940 |
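The FLOPs column mixes single figures with entries of the form "a × 25". A natural reading, assumed here rather than stated in the table, is that a is the cost of one pass over a single sub-aperture view and 25 is the number of views in a 5 × 5 light field. The sketch below multiplies these out so the totals can be compared side by side with the parameter counts.

```python
N_VIEWS = 5 * 5  # 5x5 angular resolution

# name: (params in M, GFLOPs per pass, number of passes)
# Entries written "a x 25" in the table are read (assumption) as a per-view
# cost repeated for all 25 views; plain entries are taken as one pass.
models = {
    "EDSR": (38.89, 40.66, N_VIEWS),
    "LFSSR": (1.77, 128.44, 1),
    "LF-ATO": (1.36, 28.08, N_VIEWS),
    "DPT": (3.78, 58.64, 1),
    "PMFN": (3.11, 49.61, N_VIEWS),
}

totals = {}
for name, (params_m, gflops, passes) in models.items():
    totals[name] = gflops * passes
    print(f"{name:7s} {params_m:6.2f} M params  {totals[name]:8.2f} GFLOPs total")
# EDSR     38.89 M params   1016.50 GFLOPs total
# LFSSR     1.77 M params    128.44 GFLOPs total
# LF-ATO    1.36 M params    702.00 GFLOPs total
# DPT       3.78 M params     58.64 GFLOPs total
# PMFN      3.11 M params   1240.25 GFLOPs total
```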

| Model | Scale | EPFL | HCInew | HCIold | INRIA | STFgantry | Average (variants: Δ vs. PMFN) |
|---|---|---|---|---|---|---|---|
| PMFN-FE + ASPP | 4× | 29.15/0.910 | 30.76/0.911 | 36.82/0.968 | 31.47/0.947 | 30.71/0.942 | −0.18/−0.005 |
| PMFN-FE + ResBlock | 4× | 28.95/0.908 | 30.69/0.910 | 36.66/0.967 | 31.35/0.947 | 30.61/0.941 | −0.32/−0.006 |
| PMFN-FF + FFB1 | 4× | 29.07/0.902 | 30.47/0.905 | 36.51/0.966 | 31.08/0.945 | 30.32/0.931 | −0.48/−0.011 |
| PMFN-FF + FFB2 | 4× | 29.17/0.910 | 30.79/0.911 | 36.75/0.968 | 31.51/0.947 | 30.83/0.942 | −0.16/−0.005 |
| PMFN-FF + FFB3 | 4× | 28.92/0.908 | 30.62/0.909 | 36.57/0.967 | 31.21/0.946 | 30.44/0.938 | −0.42/−0.007 |
| PMFN-FF - FFB | 4× | 28.71/0.904 | 30.32/0.903 | 36.28/0.965 | 30.88/0.943 | 29.70/0.928 | −0.79/−0.012 |
| PMFN-FF - FEB | 4× | 29.28/0.912 | 30.88/0.913 | 36.91/0.969 | 31.62/0.948 | 30.88/0.944 | −0.05/−0.003 |
| PMFN | 4× | 29.30/0.917 | 30.93/0.917 | 36.94/0.969 | 31.70/0.950 | 30.97/0.949 | 31.97/0.940 |
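The Δ entries in the Average column can be reproduced from the per-dataset rows. This is a minimal sketch, assuming the average is the unweighted mean PSNR over the five test sets and Δ is a variant's average minus PMFN's; only a subset of the rows, copied from the table above, is used.

```python
# 4x PSNR (dB), order: EPFL, HCInew, HCIold, INRIA, STFgantry
pmfn = [29.30, 30.93, 36.94, 31.70, 30.97]
variants = {
    "PMFN-FE + ResBlock": [28.95, 30.69, 36.66, 31.35, 30.61],
    "PMFN-FF + FFB2": [29.17, 30.79, 36.75, 31.51, 30.83],
    "PMFN-FF - FFB": [28.71, 30.32, 36.28, 30.88, 29.70],
    "PMFN-FF - FEB": [29.28, 30.88, 36.91, 31.62, 30.88],
}


def mean(values):
    """Unweighted mean over the five datasets (assumption)."""
    return sum(values) / len(values)


base = mean(pmfn)  # about 31.97 dB
deltas = {name: round(mean(v) - base, 2) for name, v in variants.items()}

# Largest drop first: removing a component costs the most PSNR.
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1]):
    print(f"{name:20s} {delta:+.2f} dB")
# PMFN-FF - FFB        -0.79 dB
# PMFN-FE + ResBlock   -0.32 dB
# PMFN-FF + FFB2       -0.16 dB
# PMFN-FF - FEB        -0.05 dB
```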

| Angular Res. | Num | Scale | Params (M) | FLOPs (G) | EPFL PSNR (dB) |
|---|---|---|---|---|---|
| 5 × 5 | 1 | 4× | 1.37 | 19.35 | 29.07 |
| 5 × 5 | 2 | 4× | 2.23 | 34.46 | 29.15 |
| 5 × 5 | 3 | 4× | 3.11 | 49.61 | 29.30 |
| 5 × 5 | 4 | 4× | 4.00 | 64.81 | 29.31 |
| 5 × 5 | 5 | 4× | 4.90 | 80.03 | 29.33 |
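The rows above can also be read as marginal costs. The sketch below differences consecutive rows, using only values copied from the table; what "Num" counts is not stated in this section, so it is treated simply as a setting. Going from 2 to 3 gives the largest PSNR step (+0.15 dB), while each later step adds roughly 0.9 M parameters and 15 GFLOPs for 0.01 to 0.02 dB.

```python
# (Num, params in M, FLOPs in G, PSNR in dB), 5x5 views, 4x, from the table above
rows = [
    (1, 1.37, 19.35, 29.07),
    (2, 2.23, 34.46, 29.15),
    (3, 3.11, 49.61, 29.30),
    (4, 4.00, 64.81, 29.31),
    (5, 4.90, 80.03, 29.33),
]

steps = []  # (Num reached, extra params, extra GFLOPs, PSNR gain)
for (n0, p0, f0, q0), (n1, p1, f1, q1) in zip(rows, rows[1:]):
    steps.append((n1, round(p1 - p0, 2), round(f1 - f0, 2), round(q1 - q0, 2)))
    print(f"{n0}->{n1}: +{p1 - p0:.2f} M params, "
          f"+{f1 - f0:.2f} GFLOPs, {q1 - q0:+.2f} dB")
# 1->2: +0.86 M params, +15.11 GFLOPs, +0.08 dB
# 2->3: +0.88 M params, +15.15 GFLOPs, +0.15 dB
# 3->4: +0.89 M params, +15.20 GFLOPs, +0.01 dB
# 4->5: +0.90 M params, +15.22 GFLOPs, +0.02 dB
```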

| Angular Res. | Dataset | 2× PSNR | 2× SSIM | 4× PSNR | 4× SSIM |
|---|---|---|---|---|---|
| 3 × 3 | STFgantry | 38.76 | 0.992 | 30.67 | 0.946 |
| 3 × 3 | HCInew | 36.57 | 0.975 | 30.66 | 0.914 |
| 5 × 5 | STFgantry | 39.33 | 0.993 | 30.97 | 0.947 |
| 5 × 5 | HCInew | 36.92 | 0.976 | 30.93 | 0.917 |
| 7 × 7 | STFgantry | 39.68 | 0.994 | 31.12 | 0.951 |
| 7 × 7 | HCInew | 37.07 | 0.978 | 30.99 | 0.919 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, W.; Ke, W.; Sheng, H.; Xiong, Z. Progressive Multi-Scale Fusion Network for Light Field Super-Resolution. Appl. Sci. 2022, 12, 7135. https://doi.org/10.3390/app12147135
