Target Recognition and Navigation Path Optimization Based on NAO Robot

Abstract

1. Introduction

2. NAO Robot’s Monocular Vision Spatial Target Localization

2.1. Image Preprocessing

2.2. Target Recognition

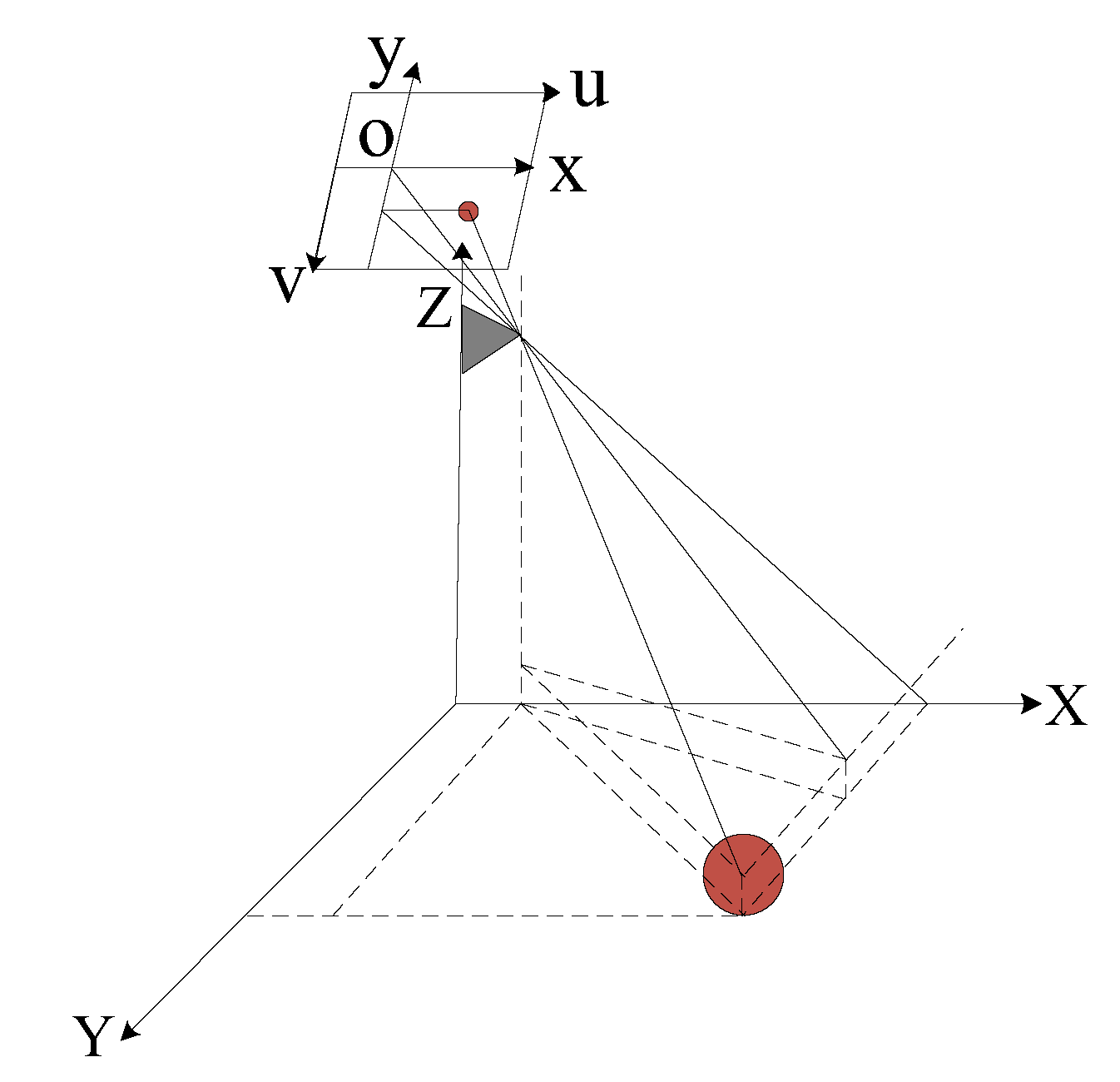

2.3. Monocular Vision Location and Ranging

3. Navigation Theory and Navigation Map

3.1. Simultaneous Localization and Mapping Theory

3.2. Q-Learning Algorithm

3.3. K-Means Algorithm

3.4. Navigation Map Building

- (1) Randomly choose k elements from the n elements as the initial centers of k clusters.

- (2) Assign each object to the most similar cluster, i.e., the cluster whose center (the average of its members) is nearest.

- (3) Recalculate the distance between each cluster and its nearest cluster.

- (4) If the distance between any two clusters is less than a preset threshold, merge the two clusters and take the average of the two original cluster centers as the center of the new cluster. Otherwise, go to step (2) to continue.

- (5) If the result no longer changes, the algorithm ends. Otherwise, go to step (4) to continue.
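The merge-based clustering steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: points are 2-D tuples (for example, sonar returns converted to x-y positions), distances are Euclidean, and `merge_threshold` is a hypothetical parameter standing in for the step (4) threshold, whose value is not given in this section. The loop order (assign, recompute means, merge, repeat until the centers stop changing) is one reading of steps (2) to (5).

```python
import math
import random


def _mean(points):
    """Component-wise mean of a non-empty list of equal-length tuples."""
    n = len(points)
    return tuple(sum(axis) / n for axis in zip(*points))


def _merge_close_centers(centers, threshold):
    """Steps (3)-(4): repeatedly merge the closest pair of centers while their
    distance is below the threshold; the merged center is their average."""
    centers = list(centers)
    while len(centers) > 1:
        d, i, j = min((math.dist(centers[i], centers[j]), i, j)
                      for i in range(len(centers))
                      for j in range(i + 1, len(centers)))
        if d >= threshold:
            break
        merged = tuple((a + b) / 2 for a, b in zip(centers[i], centers[j]))
        centers = [c for t, c in enumerate(centers) if t not in (i, j)] + [merged]
    return centers


def kmeans_with_merge(points, k, merge_threshold, max_iter=100, seed=0):
    """Hypothetical K-means variant that merges clusters closer than merge_threshold."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # step (1): k random data points as initial centers
    for _ in range(max_iter):
        # Step (2): assign each point to the cluster with the nearest center.
        groups = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda j: math.dist(p, centers[j]))
            groups[nearest].append(p)
        # Recompute centers as cluster means, dropping clusters that became empty.
        new_centers = [_mean(g) for g in groups if g]
        new_centers = _merge_close_centers(new_centers, merge_threshold)
        # Step (5): stop once the set of centers no longer changes.
        if sorted(new_centers) == sorted(centers):
            break
        centers = new_centers
    return centers
```

With two well-separated groups of points and k = 4, the surplus initial centers are merged and the sketch returns one center per group, which is how a merging step can let the number of detected obstacles emerge rather than being fixed in advance.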

- (1) Collect data on the surrounding environment with the two sonar sensors.

- (2) Classify these data with the K-means clustering algorithm to detect the number of obstacles and the distance between the robot and each obstacle.

- (3) Determine the values of the penalty and incentive factors according to the detection results.

- (4) The robot updates the Q value according to Equation (12).

- (5) The robot selects the next state according to Equation (14).

- (6) If the robot reaches the end landmark, the experiment is over. Otherwise, go to step (1) to continue.
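The navigation loop in steps (1) to (6) can be illustrated with a small tabular Q-learning sketch. Equations (12) and (14) are not reproduced in this section, so the sketch assumes the standard Q-learning update and ε-greedy action selection in their place. The grid world, the four moves, the known obstacle set (standing in for the sonar and K-means detection of steps (1) and (2)), and the reward values `penalty`, `incentive`, and `step_cost` are all hypothetical.

```python
import random

# Four grid moves; the robot's real action set is not given in this section.
ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def train_q(width, height, start, goal, obstacles, episodes=2000, alpha=0.5,
            gamma=0.9, epsilon=0.1, penalty=-10.0, incentive=10.0,
            step_cost=-1.0, seed=0):
    """Tabular Q-learning on a grid; `obstacles` stands in for sonar/K-means detections.
    Returns a function giving the greedy action for a state."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated value, 0.0 if unseen

    def value(s, a):
        return q.get((s, a), 0.0)

    def best_action(s):
        return max(ACTIONS, key=lambda a: value(s, a))

    for _ in range(episodes):
        s = start
        for _ in range(4 * width * height):  # cap the episode length
            # Select the next move epsilon-greedily (stand-in for Equation (14)).
            a = rng.choice(list(ACTIONS)) if rng.random() < epsilon else best_action(s)
            dx, dy = ACTIONS[a]
            nxt = (s[0] + dx, s[1] + dy)
            blocked = nxt in obstacles or not (0 <= nxt[0] < width and 0 <= nxt[1] < height)
            if blocked:
                r, nxt = penalty, s        # penalty factor: hit an obstacle or wall, stay put
            elif nxt == goal:
                r = incentive              # incentive factor: reached the end landmark
            else:
                r = step_cost
            # Standard Q-learning update (stand-in for Equation (12)).
            future = 0.0 if nxt == goal else gamma * max(value(nxt, b) for b in ACTIONS)
            q[(s, a)] = value(s, a) + alpha * (r + future - value(s, a))
            s = nxt
            if s == goal:                  # step (6): stop at the end landmark
                break
    return best_action


def greedy_path(best_action, start, goal, max_steps=50):
    """Follow the learned greedy policy from start; returns the visited cells."""
    path, s = [start], start
    while s != goal and len(path) <= max_steps:
        dx, dy = ACTIONS[best_action(s)]
        s = (s[0] + dx, s[1] + dy)
        path.append(s)
    return path
```

For example, training on a 4 × 4 grid with obstacles at (1, 1) and (2, 2) and then calling `greedy_path` from (0, 0) to (3, 3) yields a list of cells that reaches the goal while stepping around both obstacles.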

4. Optimal Navigation Path for Mobile Robot

4.1. Map Environment Design for Path Planning

4.2. Improved ABC Algorithm for Finding the Optimal Path

- (1) The two search paths do not overlap, as shown in Figure 6. The resulting search path is the shortest path.

- (2) The two searchers meet at a point, as shown in Figure 7. The resulting search path is the common search path.

- (3) The two search paths overlap, as shown in Figure 8. The resulting search path consists of the overlapping path and the other search path.

- (1) Initialize the starting temperature.

- (2) The food sources are updated at each generation; the fitness of the new food source is , and the fitness of the original food source is . If , nothing is done. Otherwise, calculate .

- (3) Determine whether to replace the original food source with , where is a parameter between 0 and 1, and is the temperature at the current moment, which satisfies ; that is, the current temperature is the previous temperature after one decay step.
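Steps (1) to (3) describe a simulated-annealing acceptance test inside the ABC update. Because the exact expressions are missing from this section, the sketch below assumes the common Metropolis rule on path cost (lower is better) and geometric cooling, T_k = α·T_{k-1}, with defaults taken from the initial temperature (10) and attenuation coefficient (0.8) listed in the parameter table. The function names are hypothetical, and the paper's fitness-based formulation may differ in sign or scaling.

```python
import math
import random


def accept_candidate(old_cost, new_cost, temperature, rng):
    """Metropolis acceptance (assumed): always keep a better (lower-cost) path;
    accept a worse one with probability exp(-(new_cost - old_cost) / temperature)."""
    if new_cost <= old_cost:
        return True
    return rng.random() < math.exp(-(new_cost - old_cost) / temperature)


def temperatures(t0=10.0, alpha=0.8):
    """Geometric cooling schedule T_k = alpha * T_{k-1}, starting at T_0 = t0."""
    t = t0
    while True:
        yield t
        t *= alpha
```

In an ABC loop, each bee would call `accept_candidate` with the current temperature after generating a neighbouring food source, and the schedule would advance once per generation. Accepting occasional worse paths while the temperature is still high is what lets the search escape local optima; as T decays, the rule approaches plain greedy replacement.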

5. Experimental Verification

5.1. Experimental Algorithm Verification

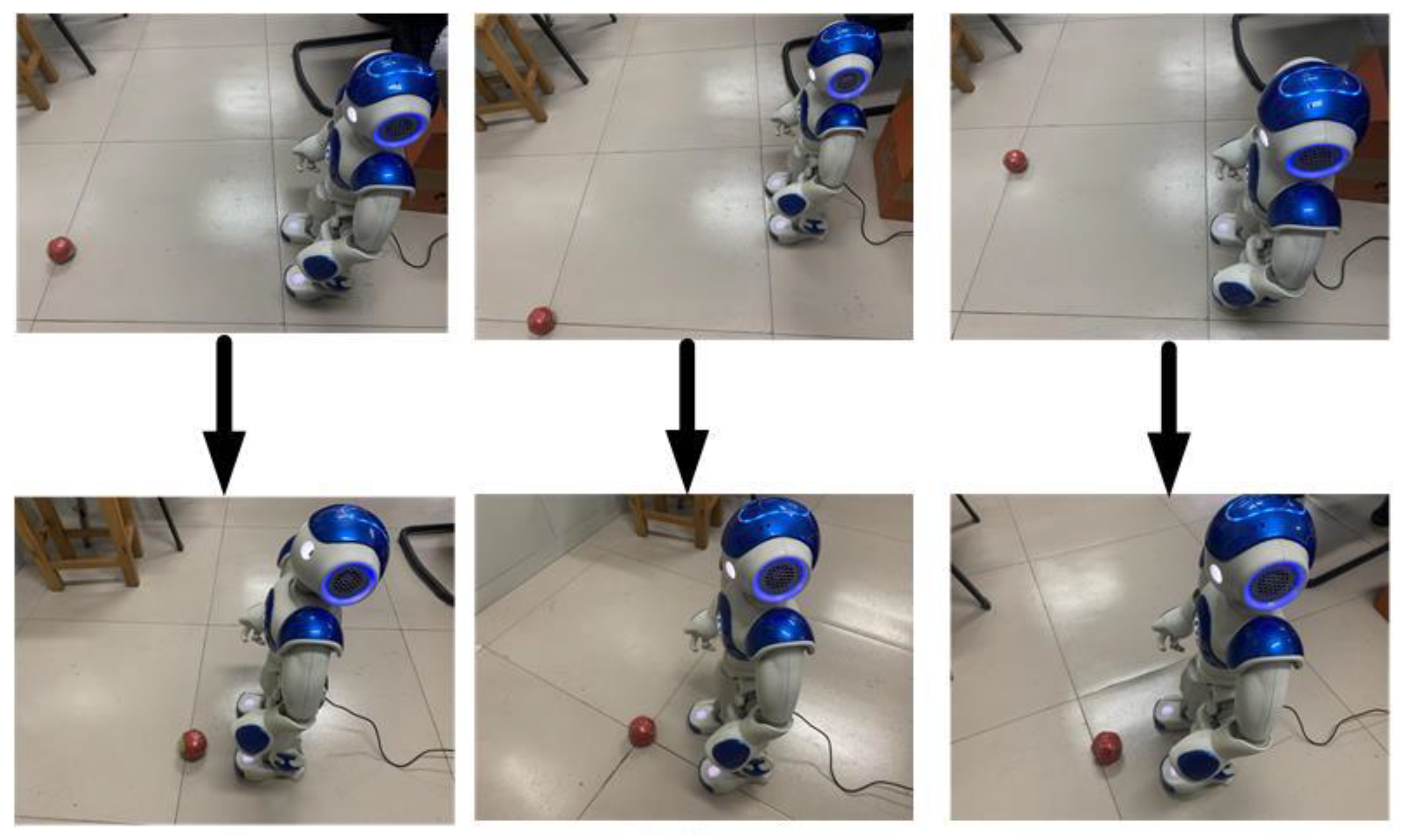

5.2. NAO Robot Experimental Validation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Number of food sources | 50 |
| Number of searches | 2000 |
| No-obstacle weight factor | 0.2 |
| Obstacle weight factor | 0.8 |
| Initial temperature | 10 |
| Temperature attenuation coefficient | 0.8 |

| Grid Coordinates | Actual Path Coordinates (m) | Route Setting |
|---|---|---|
| (4, 5) | (0.64, 0.80) | Walk 1.131 m toward the upper right at 45° |
| (9, 10) | (1.44, 1.60) | Turn right 45° and walk 0.16 m |
| (10, 10) | (1.60, 1.60) | Turn left 45° and walk 0.679 m |
| (13, 13) | (2.08, 2.08) | Turn right 45° and walk 0.16 m |
| (14, 13) | (2.24, 2.08) | Turn left 45° and walk 0.453 m |
| (16, 15) | (2.56, 2.40) | Turn left 45° and walk 0.16 m |
| (16, 16) | (2.56, 2.56) | Arrive at the destination target |
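The route commands in the table can be recomputed from the grid waypoints. The sketch assumes a grid cell size of 0.16 m, inferred from the coordinate columns (for example, grid (4, 5) maps to (0.64, 0.80) m), measures headings from the +x axis, and reports each turn relative to the previous heading, with positive angles meaning a left turn. It only produces (turn, distance) pairs and does not call any NAO API; `route_commands` is a hypothetical helper name.

```python
import math

CELL_SIZE_M = 0.16  # assumed cell edge length, inferred from the grid-to-metre columns


def route_commands(waypoints, cell=CELL_SIZE_M):
    """Convert grid waypoints into (turn_deg, distance_m) pairs.

    The first turn is the absolute heading from the +x axis; later turns are
    relative to the previous segment, normalised to [-180, 180), left positive.
    """
    commands = []
    prev_heading = None
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        dx, dy = (x1 - x0) * cell, (y1 - y0) * cell
        heading = math.degrees(math.atan2(dy, dx))
        distance = math.hypot(dx, dy)
        if prev_heading is None:
            turn = heading
        else:
            turn = (heading - prev_heading + 180) % 360 - 180
        commands.append((round(turn, 1), round(distance, 3)))
        prev_heading = heading
    return commands
```

For the waypoints in the table, the sketch yields an initial heading of 45° followed by turns of -45°, +45°, -45°, +45°, and +45°, with segment lengths of 1.131, 0.16, 0.679, 0.16, 0.453, and 0.16 m. The diagonal from (14, 13) to (16, 15) spans two cells along each axis, so its length is 2 × 0.16 × √2 ≈ 0.453 m.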

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, Y.; Wen, S.; Shi, Z.; Li, H. Target Recognition and Navigation Path Optimization Based on NAO Robot. Appl. Sci. 2022, 12, 8466. https://doi.org/10.3390/app12178466
