1. Introduction

Recently, the complexity and fragmentation of the building design process have considerably grown, mainly driven by increasing specialization and tightening of regulations. This fact has led to a significant increase in the availability of design support tools intended to facilitate aspects of the design process.

In the phases that comprise the design of a building, a series of standards must be met. Urban infrastructure and services are generally designed based on people without impairments, and the specific needs of people with disabilities are often not taken into account in the development of cities, public places, buildings and services [1]. Designers are usually trained to design for a theoretical “average” person who does not exist. Universal design was born with the purpose of designing products or environments that suit a broad range of users, including children, older adults, people with disabilities, people of atypical size or shape, people who are ill or injured, and people inconvenienced by circumstances. In architecture, universal design means creating spaces so they can be accessed, understood, and used to the greatest extent possible by all people, regardless of their age, size, ability, or disability. From the arrangement of the rooms to the choice of colours, many details go into the creation of accessible spaces [2]. Universal design is about looking at the project from the perspective of the end-user and involving them in the design process. This was the aim of the system presented in this paper: incorporating into the design process a type of user that is not always considered when designing buildings.

When considering a wheelchair user, an element designed in compliance with all current regulations may not be the optimal solution or may not even be usable. For example, a straight access ramp with a compliant slope may reach a certain height, which may cause anxiety for some users if a handrail has not been included.

As the inclusion of people with disabilities has been fostered in many areas (General Assembly, United Nations, 2006), advanced societies have introduced regulations and laws that facilitate the transit of wheelchair users in urban areas and buildings. This has led to a surge in the development and use of wheelchair simulators.

Numerous proposals have been successfully deployed over the last years with different technological supports (movement platforms, haptic devices, displays, etc.) to fulfil different objectives, as is discussed in the next section. In recent years, virtual reality (VR) technology has been increasingly recognized by the architecture, engineering, and construction (AEC) industry for its capacity to offer multisensory 3D environments that immerse the user in a virtual world.

This proposal brought together the ideas of universal design, the developments achieved by wheelchair simulators, and the challenges posed by virtual reality (VR) for the built environment. Specifically, a systematic procedure was presented for the evaluation of accessibility for wheelchair users in the architectural design phase and, subsequently, in the interior design phase of a building. A method for the implementation of this procedure using a virtual reality system was proposed.

In the VR system, a wheelchair simulator was used that differs from any proposal made to date. The main differences were: it did not use a power wheelchair, so it was supposed to be suitable for a wider range of users; it had active haptic rollers that act on the wheels of the chair and allow simulating different types of terrain; and the wheelchair was mounted on a six degrees of freedom platform, while the proposals made so far use platforms with a maximum of four degrees of freedom.

The remainder of the document is organized as follows. The next section reviews previous works. The materials and methods section describes the workflow followed by this method and explains in depth each phase and each interactive element placed in the scene, such as doors, user interfaces, and markers. The virtual wheelchair simulator used is also described in detail in this section. The results section provides a more practical view of how to use this method by showing a workflow example. Finally, in the discussion and conclusion sections, we point out the versatility of our proposal and some possible future works that could help to improve the method.

2. Related Works

This literature review is divided into two parts. The first part deals with existing wheelchair simulators, and the second part reviews the work proposed for the evaluation of architectural designs using VR systems.

2.1. Wheelchair Simulators

Numerous proposals for wheelchair simulators have been recently developed to meet different objectives. Some of them were deployed with the purpose of contributing to research in rehabilitation. Vailland et al. [3] presented a platform with four degrees of freedom for the acquisition of safe driving skills for a power wheelchair that addressed the drawbacks of the classic virtual experience, such as cyber-sickness and a limited sense of presence. This simulator was intended to aid the development of training and rehabilitation applications. The same authors have recently published in [4] the results of a clinical study in which 29 regular power wheelchair users completed a clinically validated task to compare performances between driving in a virtual environment and driving in real conditions. The work presented in [5,6,7] introduced some other examples of this kind of application.

Other existing proposals focus their efforts on developments that improve the daily lives of users by creating experiences in risk-free environments. Rodriguez [8] presented a simulator designed to allow children with multiple disabilities to familiarize themselves with the wheelchair. John et al. proposed in [9] another virtual training system for power wheelchairs that has proven to be successful. Devigne et al. [10] featured a virtual power wheelchair driving simulator that was designed to allow people with different disabilities to use different control inputs (normal wheelchair joystick, chin control device, or game controller). Some more advanced control proposals for simulators have also been described, such as the one by Pinheiro et al. in [11]. Here, a brain-computer interface was introduced to enable the operation of a wheelchair in a virtual environment. The target users of this system were those with severe motor disabilities. Tao and Archambault [12] compared wheelchair handling in a virtual trainer and the real world for reaching task training (specifically, working at a desk, using an elevator, and opening a door). Although chair handling was poorer in the virtual world than in the real world, it was revealed that similar strategies were followed in both cases to achieve the objectives. Likewise, Alshaer et al. [13] evaluated the factors that most affect user perception and behaviour in a wheelchair-driving VR simulator in a study with 72 participants. They concluded that there were three main immersion factors: the type of display system (head-mounted display vs. monitor), which significantly affected perceptual and behavioural measures; the ability to freely change the field of view (FOV), which only affected behavioural measures; and visualization of the user’s avatar. There was also a proposal that aimed to use VR and wheelchair simulators as a driving force for social integration by allowing wheelchair users to visit archaeological sites in a realistic way [14].

Several articles have reviewed the literature, exposed the problems and explained what the different approaches achieved [15,16,17]. In one way or another, they all serve to raise awareness of disability, which is essential to changing the design paradigm.

2.2. Architectural Design Evaluation Using VR Systems

Virtual reality has been used in the construction industry for many years. Greenwood et al. [18] evaluated the use of VR in construction companies from different countries. The objective of this work was to determine the existing level of implementation, as well as the difficulties and possible solutions in the use of these technologies. Some of the problems identified in this study have already been overcome: the creation of 3D models is not a problem for architectural design because it is a core part of BIM systems; the development of VR applications is a task that, although it requires specific knowledge, does not present major difficulties thanks to the emergence of VR application authoring software; and the continuous appearance on the market of low-cost VR hardware devices allows their almost universal use. This has led to an exponential increase in the use of VR at all stages of the construction process.

Virtual reality (VR) technology has recently been increasingly recognized by the AEC industry for its ability to provide immersive virtual 3D environments, particularly suited to meet the high demand for visual forms of communication during the designing, engineering, construction, and management of the built environment [19,20]. According to the bibliometric analysis conducted by Zhang et al. [21], the three most important topics, out of the six found in the bibliography, related to the application of VR in the AEC industry are: 1. architectural and engineering design, 2. construction project management, and 3. human behaviour and perception. The work presented in this article corresponded to topics 1 and 3.

An interesting work that could be included in architectural and engineering design and that has certain similarities with this proposal is that of Lin et al. [22]. The VR application presented in that work allowed healthcare stakeholders and medical staff to review the design of a hospital and propose improvements, starting from an initial BIM model designed by the architects. The visualization system was semi-immersive and consisted of a wide curved screen and a gamepad. This limited one of the advantages of these systems, which is precisely the strength of the immersive VR experience. On the other hand, it was not clear whether the user’s movements in the virtual environment were free or the system only allowed some limited movements. In any case, this system seemed to be rather limited in comparison to the Wheelchair User Assisted Design (WUAD) process proposed in this paper. Another original work on this topic that showed the power of VR in architectural design is that of Keshavarzi et al. [23]. It presented an application called RadVR that allowed the study of how sunlight strikes a building in different places at different times and dates, allowing an optimization of the design if the results were not as desired.

In the topics of architectural and engineering design and construction project management, some interesting works are those related to collaborative design. For example, Boton [24] presented a framework that allowed a 4D simulation of the construction of a building. However, it encountered several technological problems that prevented it from becoming a fully functional system. Du et al. [25] introduced a procedure for real-time synchronization of data between a BIM system and a VR application. This synchronization allowed automatic and simultaneous updating of BIM design changes in VR headsets, allowing multiple users to interact in the same virtual scenario. Keung et al. [26] developed a multi-user VR application in which, by using a pair of treadmills, two users can walk in a simulated environment for design and evaluation tasks. Finally, Ververidis et al. [27] reviewed collaborative virtual reality systems for the AEC industry.

Although BIM models can be exported to standard 3D formats, it would be ideal to integrate the VR system as an additional functionality of the BIM systems. Alizadehsalehi et al. [28] proposed a BIM-to-XR IDEF0 model that is a viable, easy, and transparent solution for this problem, according to the authors. To detect the strengths and weaknesses of the proposed method, they performed an analysis of the scientific literature and interviews with 40 academics and experienced professionals. The results showed that there were still technological problems that prevented the implementation of the system as proposed by the authors and that there were issues of acceptance and commitment from stakeholders. In the case of Han and Leite [29], the Generic XR model (GenXR) for the development of XR applications in the AEC industry presented in their work aimed to accelerate the BIM-to-XR process when multiple XR applications were implemented in the same project. To validate the procedure, they created XR applications (VR, AR, and MR) that integrated semantic information into the 3D models and developed and automated the proposed theoretical model. Whenever several XR applications were developed from a BIM model, they reduced the model creation time by 63.8% to 66.7% compared with traditional methods.

The third topic, human behaviour and perception, is related to the following keywords: evacuation, architectural design, wayfinding, occupant behaviour, and user-centred design, according to [21]. Therefore, our work also falls into this topic. The first work in which a VR system was proposed to assess accessibility for wheelchair users dates to 2000 [30]. In it, a wheelchair simulator and a basic VR application allowed an evaluation of whether a user had access to a building. Oxman et al. [31] designed a 3D model of a furnished building to evaluate whether the various rooms were accessible to the user. However, the results they presented were not obtained from people with physical disabilities. More recently, Götzelmann and Kreimeier [32] proposed a new wheelchair simulator for VR applications. Although they performed tests in urban and indoor environments, they did not indicate any systematic procedure for the creation of the VR applications nor any specific goal. Agirachman and Shinozaki [33] introduced a VR system they called Virtual Reality Design Reviewer (VRDR), which had a wheelchair navigation mode that allowed users to know if collisions could occur in a house tour. In this application, no real wheelchair simulator was used. To simulate wheelchair movement, they simply lowered the user’s point of view with respect to normal navigation and slowed down the navigation speed. A ring the size of a standard wheelchair was generated in the VR application and changed colour when a collision occurred. The contributions made to this topic are far from solving the problem.

This study aimed to provide a feasible solution by proposing a systematic workflow, independent of the hardware (simulator) used, in which the end-user plays a leading role, and by offering a design procedure for VR applications.

3. Materials and Methods

3.1. Workflow

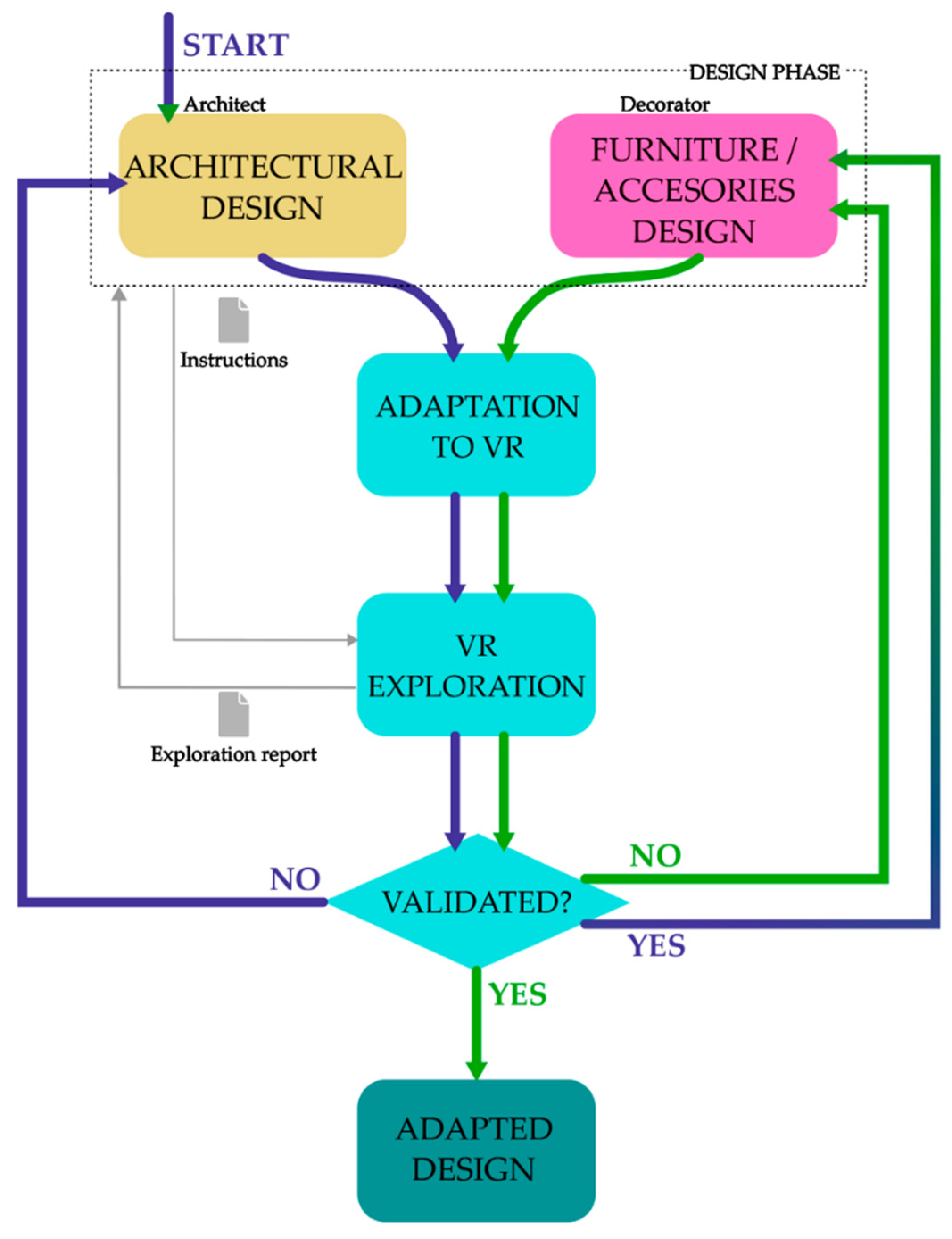

The workflow of the proposal presented in this article is summarized in Figure 1. It was composed of a double loop with a common path: a design phase, a phase in which the data were adapted to VR, and a VR exploration of the design, followed by its validation once the user considered the design to be appropriate. In the first loop, in blue, the basic infrastructure, such as the interior of a building, was subjected to evaluation. In the second loop, in brown, the previously validated infrastructure was evaluated again after elements such as furniture, accessories, and ornaments had been added. Once both designs were validated, the design was considered adapted. The two main actors in this proposal were the designer, who oversaw the design phase, and the wheelchair user, who explored the design in virtual reality, evaluated it, and decided on its validation. This process is called Wheelchair User Assisted Design (WUAD).

There was a bidirectional communication channel, in grey, between the designer and the user who evaluated the design either through the instructions that the designer can generate or the report that the wheelchair user can generate. A more detailed explanation of the elements of the workflow is explored in the following sections.

3.2. The Design Phase

In this phase, the architect designed the infrastructure, building, or urban element, considering the requirements imposed by the client and their own criteria and design style. Moreover, the architect applied the current regulations where the infrastructure was to be located. The decoration of the interior or the addition of ornamental elements or accessories was also included in this phase, although it was carried out after the validation of the architect’s design and, usually, by a decorator. Hereafter, we use the term ‘designer’ for the one who executes this phase, whether it is the design of the building or the design of the decoration.

The design was made by using BIM/CAD software that allowed the generation of both 2D drawings and 3D models. Amongst the generated results, what interested us about the workflow proposed in this article was the 3D model, which is the one that will be inspected and assessed by the wheelchair user in the VR application.

Regarding the file format used to export the BIM/CAD design, it must meet the following requirements:

Independent access to the different elements (floors, walls, ceilings, furniture, and fixtures);

Inclusion of materials;

Compatibility with the software used to develop the VR application.

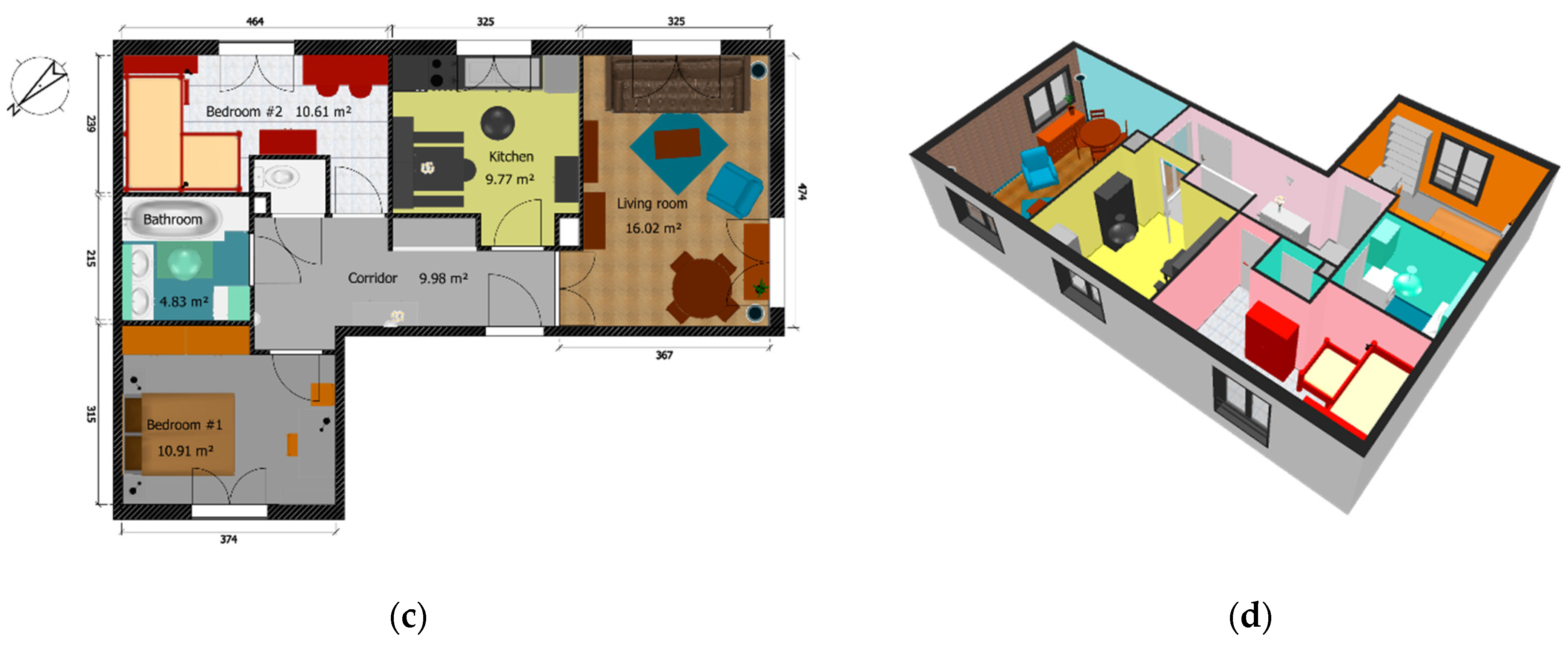

Therefore, the architect initially drew the model of the infrastructure both in 2D and 3D, defining the floors, walls, ceilings, doors, and windows. An example of the design of an apartment is shown in Figure 2, with the unfurnished and furnished initial 2D and 3D designs. Then, the architect exported the 2D model to an image file and the 3D model to a 3D file format with its textures that were adapted to be used in the VR application.

After the validation of the design, the furniture and accessories were added to the 3D model of the building. Therefore, new 3D files and textures were generated to be imported into the VR application authoring software.

In our proposed workflow, the designer could optionally generate a document in a PDF format that includes instructions and comments to be sent to the user who inspects the design. This user would be able to access these instructions during the exploration at any time they wish.

3.3. Adaptation to VR Application Authoring Software

There are currently two programs that dominate the VR application authoring software market: Unity 3D and Unreal Engine. Both provide a free version to developers. In this work, Unity 3D was used, and the remaining sections were conditioned by this decision. However, the procedure we presented can be adapted without difficulty to the use of Unreal Engine.

The model created in the design phase and explained in the previous section must be imported into Unity in an interchange format (e.g., OBJ). Generally, it was necessary to adapt the model so that it can be used in a virtual scenario. Two stages of adaptation must be applied to the 3D models used in the VR application: the first stage was external to Unity and affected the 3D object definition, as explained in [22]; the second stage was internal to Unity and added some properties to the 3D objects.

Figure 3 represents the adaptation stages, where after obtaining the 3D model of the designed building, two consecutive steps were applied: 3D object definition and 3D object properties, which are explained in Section 3.3.1 and Section 3.3.2, respectively.

3.3.1. Adaptation of 3D Object Definition

The external adaptation of 3D models for VR was carried out by modifying three parameters: colour, data type, and resolution. Regarding the colour, the design program must allow the generation of a material map and separate image textures, which were automatically imported by Unity. Regarding the type of data, all the elements generated by the software must be defined by triangular meshes. Finally, high mesh resolutions must be avoided so as not to produce a virtual scenario that cannot be easily managed in real-time by Unity. In general, BIM/CAD applications generated 3D models with a low number of triangles and with the possibility to add image textures, so in most cases, no adaptation was required. The potential risks were the inclusion of 3D models that have a high resolution and the use of an excessive number of elements in the infrastructure (several floors, high density of furniture, ornaments, accessories, etc.), which involves a drastic increase in the number of polygons in the scene.

In this case, it might be necessary to apply a reduction of the resolution of the elements that make up the final model. The maximum number of polygons that a virtual scene can include cannot be determined in a general way since there are many factors that influence the performance, such as the hardware that will be used for the execution of the application, the textures that the 3D models include, the scene lighting, etc. Based on our experience in the development of other applications and the low complexity of the virtual scenes that we generated, we imposed a limit of 300,000 vertices for the whole scene since this is the maximum recommended value for Oculus Quest applications.
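As an illustration of how such a budget could be checked before import, the following minimal sketch sums the vertex counts of the scene elements and ranks them so that the heaviest candidates for resolution reduction appear first. It is written in Python for brevity, whereas an actual Unity script would be written in C#. The `MeshInfo` type, the `check_vertex_budget` function, and the example counts are hypothetical; only the limit of 300,000 vertices comes from the text.

```python
from dataclasses import dataclass
from typing import List, Tuple

VERTEX_BUDGET = 300_000  # scene-wide limit quoted in the text


@dataclass
class MeshInfo:
    """Hypothetical record: one scene element and its vertex count."""
    name: str
    vertex_count: int


def check_vertex_budget(
    meshes: List[MeshInfo], budget: int = VERTEX_BUDGET
) -> Tuple[int, bool, List[MeshInfo]]:
    """Return the total vertex count, whether it fits the budget,
    and the meshes sorted from heaviest to lightest."""
    total = sum(m.vertex_count for m in meshes)
    ranked = sorted(meshes, key=lambda m: m.vertex_count, reverse=True)
    return total, total <= budget, ranked


# Illustrative (made-up) scene: one over-detailed sofa exceeds the budget.
scene = [
    MeshInfo("walls", 12_000),
    MeshInfo("sofa", 250_000),
    MeshInfo("floor", 4_000),
    MeshInfo("lamp", 60_000),
]
total, within_budget, ranked = check_vertex_budget(scene)
```

In this made-up scene, the ranking would point to the sofa as the first element whose resolution should be reduced.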

3.3.2. Adaptation of 3D Object Properties

Once the 3D model, which is a file with meaningful elements, was imported into Unity, the second stage of adaptation was applied. This was carried out by an importer module that was developed for our Unity application.

In this module, the different elements that composed the 3D model were identified, and certain properties were assigned to them that were essential for the correct operation of the application. This stage can be divided into the following steps:

Collision properties: A component named ‘collider’, which is a special bounding box, was added to the 3D model elements to control physical interactions and collisions between 3D objects. This component served two functions: to let the virtual wheelchair roll across the floor and to prevent the user from passing through objects (walls, doors, and furniture). Colliders were added through Unity to floors, walls, and doors in the first part of the design phase and to furniture in the second one.

Accessible areas: The elements that can be navigated with the wheelchair (floors) were manually separated from those that cannot (walls), and the remaining elements added in the design were identified. After that, some portions of the floors could be labelled as accessible areas. This was completed by computing the difference between the floor’s colliders and the furniture’s colliders located on those floors. These areas were the portions of floors free from furniture and where the dimensions of the wheelchair fit. There may be portions of the floor that are unfurnished but too small for the wheelchair to fit, so those portions were not considered accessible areas. Additionally, to include the possibility of exploring the virtual world with only a virtual headset and controllers (without a wheelchair simulator), a component named ‘teleport area’ must be added to the identified floors that we wanted the user to explore.

Interactive elements: To allow interactivity with some 3D elements, convenient properties or components must be added to those elements. So far, only the possibility of making the doors interactive has been included, i.e., the user can open and close them.
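The computation of accessible areas described above can be sketched as follows. This is only an illustrative approximation and not the implementation used in the application: it assumes that floor and furniture colliders are reduced to axis-aligned 2D rectangles, that the wheelchair footprint is a square of side `chair_size`, and that the floor is sampled on a regular grid. All names and default values (`accessible_cells`, `chair_size`, `cell`) are hypothetical. A cell centre is kept only if the footprint centred on it lies inside the floor and overlaps no furniture, which also discards free portions that are too small for the wheelchair.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in metres, a 2D simplification of a collider
Rect = Tuple[float, float, float, float]


def overlaps(a: Rect, b: Rect) -> bool:
    """True if the interiors of two axis-aligned rectangles intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def inside(inner: Rect, outer: Rect) -> bool:
    """True if `inner` lies completely within `outer`."""
    return (inner[0] >= outer[0] and inner[1] >= outer[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def accessible_cells(floor: Rect, furniture: List[Rect],
                     chair_size: float = 0.7,
                     cell: float = 0.1) -> List[Tuple[float, float]]:
    """Return grid-cell centres where the wheelchair footprint fits."""
    half = chair_size / 2.0
    nx = int(round((floor[2] - floor[0]) / cell))
    ny = int(round((floor[3] - floor[1]) / cell))
    result = []
    for i in range(nx):
        for j in range(ny):
            cx = floor[0] + (i + 0.5) * cell
            cy = floor[1] + (j + 0.5) * cell
            footprint = (cx - half, cy - half, cx + half, cy + half)
            blocked = any(overlaps(footprint, f) for f in furniture)
            if inside(footprint, floor) and not blocked:
                result.append((cx, cy))
    return result


# Empty 2 m x 2 m room: the centre of the room is accessible.
free_room = accessible_cells((0.0, 0.0, 2.0, 2.0), [])
# 3 m x 3 m room where furniture leaves only a 0.5 m wide strip:
# the strip is unfurnished but too narrow for a 0.7 m footprint.
narrow_strip = accessible_cells((0.0, 0.0, 3.0, 3.0), [(0.5, 0.0, 3.0, 3.0)])
```

A contour-based representation, as stored by the importer module, could then be derived from the retained cells; that step is omitted here.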

In practice, the importer module consisted of a script that detected the names of the elements that composed the 3D model and automatically assigned the corresponding properties. During the design phase, the designer must bear in mind this subsequent step, and they must use a specific nomenclature for the storage of the elements that compose the scene. Specifically, the following labels must be assigned to each type of element: floors, walls, doors, furniture, and accessories. The importer module added the collider component to all the elements it detects. The script also stored the limits or contours of accessible areas for every room. Finally, it added a script to control the doors.
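The name-based assignment performed by the importer module could look roughly like the sketch below. It is a language-neutral illustration in Python (a Unity script would be written in C#) and assumes, hypothetically, that each element name starts with its label, for example `floor_kitchen` or `door_01`. The component names `collider`, `teleport_area`, and `door_controller` are placeholders for the corresponding Unity components and scripts, not actual API identifiers.

```python
from typing import Dict, List, Optional

# Singular prefixes standing in for the labels required by the workflow
# (floors, walls, doors, furniture, accessories); the exact spelling
# used in the original application is not known.
LABELS = ("floor", "wall", "door", "furniture", "accessory")


def classify(name: str) -> Optional[str]:
    """Return the label whose prefix matches the element name, if any."""
    lowered = name.lower()
    for label in LABELS:
        if lowered.startswith(label):
            return label
    return None


def plan_components(names: List[str]) -> Dict[str, List[str]]:
    """Map each recognised element to the components it should receive."""
    plan: Dict[str, List[str]] = {}
    for name in names:
        label = classify(name)
        if label is None:
            continue  # unlabelled elements are ignored in this sketch
        components = ["collider"]  # every detected element gets a collider
        if label == "floor":
            components.append("teleport_area")
        if label == "door":
            components.append("door_controller")
        plan[name] = components
    return plan


plan = plan_components(["floor_kitchen", "door_01", "Wall_north", "lamp"])
```

In this example, `lamp` receives no components because its name does not follow the assumed nomenclature, which illustrates why the designer must respect the naming convention during the design phase.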

Figure 4 graphically summarizes the functions of the importer module. Figure 5 shows the separation between the floors, walls, and furniture from the example in Figure 2 and the addition of colliders.

3.4. Hardware of the Virtual Reality System

The hardware devices of the virtual reality system used in this work have been applied elsewhere by our group. Some examples are described in previous papers [14]. In this section, a brief description of these hardware elements is made.

The equipment is composed of the following devices:

The wheelchair simulator: This was the most important hardware device in the application. It consisted of a standard wheelchair that sits on a 6 DOF parallel manipulator (such as a Stewart platform). On the platform, there was a pair of haptic rollers on which the wheels of the wheelchair rest. They were used to measure the speed of each wheel. Using these data, the position and orientation of the wheelchair were determined by odometry, which allowed the user’s avatar to be placed in the virtual world. Therefore, this device served as a motion capture system (MOCAP) for wheelchair users. In addition, this information was fed back to the parallel platform itself so that it simulated the movements that the user was making in the wheelchair. The rollers were active, so they could be programmed to resist the wheelchair’s movement, making it possible to simulate, for example, ramps, different types of floors, etc. The aim of all this was to make the user’s virtual experience as immersive as possible. As explained in [14], several scripts were programmed to control all these devices. With these scripts, bidirectional communication between the Unity engine and the hardware was established to control the feedback between the real and virtual worlds.

Standard MOCAP systems: In addition to the device described above, which is a MOCAP for wheelchair users, two additional MOCAPs were used. The first was an optical system, Optitrack, which located the user’s upper trunk. The interface between Unity and Optitrack was provided by the Motive Unity SDK. The second was a 5DT data glove, which allowed the capture of movement by the user’s fingers. The communication between the VR application and the data provided by the gloves was performed using the scripts provided by the manufacturer.

Visualization systems: The user wore a Lenovo Explorer virtual reality headset, based on the Windows Mixed Reality standard, for the visualization of virtual scenarios in all phases of the design. This headset has two 2.89” screens with a combined resolution of 2880 × 1440 pixels. The field of view (FOV) is 110°, and the refresh rate is 90 Hz.

In addition to the visualization system itself, the headset’s integrated cameras and IMU determined the position and orientation of the user’s head by using an inside-out technique. This was particularly useful when using motion platforms since the use of external sensors was avoided. Finally, the manufacturer provided a pair of wireless controllers that allowed the user to interact with the application and the virtual scenario.
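The odometry mentioned in the description of the wheelchair simulator can be illustrated with the standard differential-drive model, in which the pose is updated from the linear speeds of the two wheels. The sketch below is a generic textbook formulation under stated assumptions, not the scripts used with the simulator: the function name `update_pose`, the parameter `track_width` (distance between the two wheels), and the midpoint integration scheme are all choices made here for illustration.

```python
import math
from typing import Tuple


def update_pose(x: float, y: float, theta: float,
                v_left: float, v_right: float,
                track_width: float, dt: float) -> Tuple[float, float, float]:
    """Advance a planar pose (x, y, heading) by one time step.

    v_left and v_right are the linear speeds of the wheel rims in m/s,
    as could be derived from the roller measurements; track_width is
    the distance between the wheels in metres; dt is in seconds.
    """
    v = (v_left + v_right) / 2.0              # forward speed of the chair
    omega = (v_right - v_left) / track_width  # yaw rate in rad/s
    theta_mid = theta + omega * dt / 2.0      # heading at mid-step
    x += v * math.cos(theta_mid) * dt
    y += v * math.sin(theta_mid) * dt
    theta += omega * dt
    return x, y, theta


# Both wheels at 1 m/s for 2 s: the chair moves straight ahead.
straight = update_pose(0.0, 0.0, 0.0, 1.0, 1.0, 0.6, 2.0)
# Wheels turning in opposite directions: the chair rotates in place.
spin = update_pose(0.0, 0.0, 0.0, -0.3, 0.3, 0.6, 1.0)
```

Calling such a function at every frame with the measured wheel speeds would yield the position and orientation needed to place the avatar in the virtual scene.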

3.5. User Interfaces

The objective of the application was twofold: to allow a wheelchair user to explore an infrastructure design in the simulator and to allow them to participate in the design process by providing feedback on their experience. To this end, five user interfaces were created, named as follows:

MainUI: to give access to all the interfaces;

InstructionsUI: to read some initial information from the designer;

MarkersUI: to generate the feedback information for the designer;

MinimapUI: to facilitate the navigation and the feedback representation;

DoorsUI: to customize every door in the building.

When the user pressed the controller button assigned to the menu function, six virtual floating buttons appeared on the screen; as can be observed in Figure 6, five of them opened each of the previous interfaces and the sixth one, named ‘Quit’, let the user finish the exploration. These interfaces are briefly described in the next sections.

3.5.1. InstructionsUI

In this interface, the user could read some specific instructions, warnings, and advice given by the designer in a PDF file. This PDF might contain both text and images. This was particularly useful for the validation phase, where the user checked the changes made by the designer after the previously left feedback. Therefore, the first step that a user must complete is to read any such instructions.

3.5.2. MarkersUI

This interface allowed the user to generate markers and short texts to leave feedback for the designer by means of the menu interface shown in Figure 7a. Different categories of markers were created to collect some common issues related to accessibility that can take place in any infrastructure. If the user wanted to place a marker, they opened the MarkersUI interface, which provided a set of common accessibility issues. Clicking one of these showed a subset of options that allowed the user to specify a more precise type of issue. Depending on the chosen option, a different marker, along with a keyboard, appeared next to the interface. Through this keyboard, it was possible to add a comment explaining the problem in more detail. This interface also included the possibility of letting the user manually measure distances within the virtual scene.

The main function of these markers was to indicate where an issue was located and its nature. Within each category, the user could leave the following types of markers:

1. Navigation: Issues related to the movement of the wheelchair inside the building. The following markers are available:
   1.1. Size: The user detects that the wheelchair does not fit in some part of the building.
   1.2. Manoeuvrability: The user considers that although the wheelchair fits, they do not have enough free space to manoeuvre with it.
   1.3. Slope: The user wants to report on the slope of a ramp or floor.
   1.4. Step: The user wants to report a step on the floor.

2. Furniture and Accessories: Issues related to the elements added to the building design, furniture, or accessories. The following markers are available:
   2.1. Reachability: The user has difficulty reaching something, such as a shelf or the handle of a cabinet.
   2.2. Distribution: The user wants to leave a comment about the current furniture distribution in a room.
   2.3. Projection or Corner: The user detects a corner or projection in the furniture or accessories that could become a risk for the wheelchair user.

3. Accessibility: Issues related to special technical aids for users with disabilities. The following markers are available:
   3.1. Banister or Grip: The user wants to leave a comment about an installed banister or grip or wants to suggest installing one.
   3.2. Ramp: The user wants to leave a comment about a built ramp or wants to suggest building one.

4. Comment: The user wants to leave some feedback about any issue not included in the previous categories.

5. Measure: The user wants to measure a distance. An example of measurement is represented in Figure 7b.

When any marker was added, two elements were inserted into the scene: a 3D representation of the marker that was visible during the VR exploration and a 2D representation that was only visible in the mini-map. An example of a marker with a comment is shown in Figure 7c, and the shape and colour of a distribution marker are depicted in Figure 7d.
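The marker categories listed above map naturally onto a small data model. The sketch below is one possible representation and is not taken from the application: the `MarkerType` and `Marker` names, the field layout, and the convention that the vertical axis is the second coordinate (as in Unity, which uses a y-up coordinate system) are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class MarkerType(Enum):
    """Each value is (category, marker name), following the list above."""
    SIZE = ("Navigation", "Size")
    MANOEUVRABILITY = ("Navigation", "Manoeuvrability")
    SLOPE = ("Navigation", "Slope")
    STEP = ("Navigation", "Step")
    REACHABILITY = ("Furniture and Accessories", "Reachability")
    DISTRIBUTION = ("Furniture and Accessories", "Distribution")
    PROJECTION_OR_CORNER = ("Furniture and Accessories", "Projection or Corner")
    BANISTER_OR_GRIP = ("Accessibility", "Banister or Grip")
    RAMP = ("Accessibility", "Ramp")
    COMMENT = ("Comment", "Comment")
    MEASURE = ("Measure", "Measure")

    @property
    def category(self) -> str:
        return self.value[0]


@dataclass
class Marker:
    """Hypothetical feedback marker left by the user in the scene."""
    type: MarkerType
    position: Tuple[float, float, float]  # (x, y, z), y pointing up
    comment: str = ""

    @property
    def has_comment(self) -> bool:
        """Markers with comments are drawn differently in the mini-map."""
        return bool(self.comment.strip())

    @property
    def minimap_position(self) -> Tuple[float, float]:
        """2D position for the top-down mini-map: the height is dropped."""
        x, _, z = self.position
        return (x, z)


marker = Marker(MarkerType.MANOEUVRABILITY, (1.0, 0.0, 2.5), "Too tight to turn")
```

Keeping the 3D position and deriving the 2D one from it reflects the fact that each marker has both a 3D representation in the scene and a 2D representation in the mini-map.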

3.5.3. MinimapUI

The mini-map interface had two main functions: to give the user an idea of the whole infrastructure and to let them instantly teleport to another part. When a 3D model of a building was imported, the importer module inserted one camera for every floor of the building. These cameras were oriented to offer a zenithal view of the floor, were scaled to cover the complete floor, and were configured with an orthographic projection. Figure 8a is a screenshot of a 3D model imported into Unity with the generated camera and its associated zenithal view. During the VR exploration, every camera rendered the scene in real-time as a raw image that was used as a texture for the user interface, which gave the illusion of a 2D CAD map of each floor.

To achieve the teleportation functionality of the mini-map, a correspondence between the rooms of the building and the mini-map image was established. Because the mini-map is a 2D view of the 3D elements from a fixed perspective, selecting a point in the mini-map automatically selects a point on one of the 3D models. In the imported 3D models, the elements were detected by name, with the rooms of each floor sharing a common prefix, for example, ‘room’. Therefore, when the user clicked on one of the rooms of the map, they were indirectly selecting the 3D floor of that room.
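The correspondence between a mini-map point and a point in the 3D scene follows directly from the orthographic, top-down projection. The following Python sketch illustrates the geometry only; it is not the Unity code used in the application. The class `OrthoCamera` and the function `minimap_to_world` are hypothetical names, and the sketch assumes that the camera is not rotated about the vertical axis and that the click is given in normalized image coordinates with the origin at the bottom-left corner.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class OrthoCamera:
    """Hypothetical top-down orthographic camera covering one floor."""
    center_x: float     # world X of the point under the image centre
    center_z: float     # world Z of the point under the image centre
    half_height: float  # half of the covered extent along Z (orthographic size)
    aspect: float       # image width divided by image height


def minimap_to_world(cam: OrthoCamera, u: float, v: float) -> Tuple[float, float]:
    """Map a normalized mini-map click (u, v) in [0, 1] to world (x, z).

    Under an orthographic projection the mapping is linear: the image
    centre maps to the camera centre and the image edges map to the
    borders of the covered rectangle.
    """
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        raise ValueError("click must lie inside the mini-map image")
    half_width = cam.half_height * cam.aspect
    x = cam.center_x + (2.0 * u - 1.0) * half_width
    z = cam.center_z + (2.0 * v - 1.0) * cam.half_height
    return (x, z)
```

The resulting world point can then be tested against the room geometry to find which room was clicked.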

If the user could be teleported to any point in a room, collisions with other elements might arise (for example, the user and the wheelchair might appear on top of a table in the kitchen). To avoid such problems, only the clicked points that lay inside the accessible areas computed by the importer module were considered. Thus, the user was teleported only to safe areas with no collisions.
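The filtering of teleport targets can be illustrated with a standard point-in-polygon test. This is a minimal sketch under the assumption that each accessible area is available as a simple 2D polygon on the floor plane; the text does not state how the importer module represents these areas, and the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Polygon = List[Point]


def point_in_polygon(p: Point, poly: Polygon) -> bool:
    """Ray-casting test: count crossings of a ray cast from p towards +x."""
    x, z = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, z1 = poly[i]
        x2, z2 = poly[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (z1 > z) != (z2 > z):
            x_cross = x1 + (z - z1) * (x2 - x1) / (z2 - z1)
            if x < x_cross:
                inside = not inside
    return inside


def safe_teleport_target(click: Point, accessible_areas: List[Polygon]) -> Optional[Point]:
    """Return the clicked point if it lies in an accessible area, else None."""
    for area in accessible_areas:
        if point_in_polygon(click, area):
            return click
    return None
```

A click that falls on furniture, and therefore outside every accessible polygon, yields `None` and is simply ignored.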

Another important function of the mini-map was to provide feedback on the current state of the evaluation: by viewing the map, the user could check the location of the markers they had inserted in order to review, edit, or delete them. Each marker was represented by a coloured circle in the mini-map. Markers enclosed in a square were those to which the user had added comments. An example of a mini-map can be seen in Figure 8b, where six markers were left by the user: one marker related to size in the small bathroom; two markers related to projection of furniture in the entrance and in the double bedroom; one marker related to manoeuvrability with comments in the single bedroom; one marker related to distribution with comments in the living room; and one marker with free comments in the kitchen.

3.5.4. DoorsUI

This interface allowed the configuration of doors. If the user selected a door and chose the doors option, the interface, depicted in Figure 9a, appeared over the 3D virtual world. Two parameters were available to be configured:

3.6. Feedback/Design Validation

In the workflow proposed in this article (Figure 1), the decision-making element that conditioned progress through the scheme was the validation phase carried out by the wheelchair user. Thus, after the exploration and inspection of the virtual environment, the user issued a message, which could be of two types: feedback information or validation of the design.

In practice, the user inspected the infrastructure and left markers, with or without comments, to be analysed by the designer. When the user decided that the evaluation was complete, they pressed the ‘Finish’ button in the MainUI interface. At that moment, a report was generated in PDF format, to be sent to the designer, containing the following information:

Evaluation result

Table of markers

Rooms map

Marker map

Legend

Usage statistics

The report began with the evaluation result, which had only two possible values: ‘Validated’ or ‘Non-validated’. If the user had not added any markers or comments, the result was ‘Validated’; otherwise, the design was ‘Non-validated’.

The marker table summarized the information related to the markers left by the user in five columns: floor, room, category, marker type, and comments. Every floor and room was assigned a number on import, so the floor and room numbers indicated the location of the marker in the building. The category and marker type corresponded to the issue chosen by the user in the FeedbackUI interface. The comments column showed any text that the user had added to the marker.
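The evaluation rule and the five-column marker table described above can be sketched in a few lines. This Python fragment is illustrative only and does not reproduce the report generator of the application; the names `Marker`, `evaluation_result`, and `marker_table` are hypothetical, and sorting the rows by floor and room is an assumption made here for readability.

```python
from typing import List, NamedTuple, Tuple


class Marker(NamedTuple):
    """Hypothetical record of one marker, matching the five report columns."""
    floor: int
    room: int
    category: str
    marker_type: str
    comment: str = ""


def evaluation_result(markers: List[Marker]) -> str:
    # Comments are attached to markers, so an empty marker list means
    # that the user left neither markers nor comments.
    return "Validated" if not markers else "Non-validated"


def marker_table(markers: List[Marker]) -> List[Tuple[str, ...]]:
    """Build the table rows: a header followed by one row per marker."""
    rows: List[Tuple[str, ...]] = [
        ("Floor", "Room", "Category", "Marker type", "Comments")
    ]
    # Ordering by location is an assumption, not stated in the text.
    for m in sorted(markers, key=lambda m: (m.floor, m.room)):
        rows.append((str(m.floor), str(m.room), m.category, m.marker_type, m.comment))
    return rows
```

With an empty list, `evaluation_result([])` returns `"Validated"` and the table contains only the header row.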

The room map was a basic map of the building where the number of each floor and room could be checked.

The marker map consisted of the images of the mini-maps for each floor of the explored building. Every mini-map showed the markers inserted by the users with different colours. A legend box was added showing a correspondence between the type of markers and colours.

The usage statistics section collected information about the distribution of markers and the user’s exploration times, including the exploration time for each room, for each floor, and in total.
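The exploration times reported in this section are simple aggregations. As a hedged sketch, assuming that the application logs each stay in a room as a `(floor, room, seconds)` tuple (a logging format that the text does not specify), the per-room, per-floor, and total times could be computed as follows; the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def exploration_times(
    visits: Iterable[Tuple[int, int, float]]
) -> Tuple[Dict[Tuple[int, int], float], Dict[int, float], float]:
    """Aggregate visit durations per room, per floor, and in total.

    Each visit is (floor, room, seconds); a room visited several times
    contributes the sum of all its visits.
    """
    per_room: Dict[Tuple[int, int], float] = defaultdict(float)
    per_floor: Dict[int, float] = defaultdict(float)
    for floor, room, seconds in visits:
        per_room[(floor, room)] += seconds
        per_floor[floor] += seconds
    total = sum(per_floor.values())
    return dict(per_room), dict(per_floor), total
```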

Figure 10 shows an example of a report generated after the exploration of the building in Figure 2. In this example, an apartment of seven rooms was evaluated for 24 min, six markers were inserted into the map, and three of them included a comment from the evaluator.

An important factor to consider was the user’s ability to cope with the exploration of and interaction with the virtual world. Some users occasionally experienced motion sickness after using virtual reality systems. In addition, any application with user interfaces and controllers requires a certain amount of practice before the user can exploit it optimally.

Therefore, a phase of training and analysis of the user’s response should be introduced, either to familiarize them with the interfaces and controls or to confirm their aptitude so that their feedback input is valid in the designed workflow.

Regarding the perception of the virtual world, some users reported a distorted perception of the depth of the scene, mainly due to their eyeglasses prescription and the lenses of the virtual reality system. In such cases, they perceived the dimensions of the virtual world with some distortion and, therefore, their feedback might not be reliable. However, since they were using a virtual 3D model of the wheelchair with real dimensions and were continuously testing whether it fitted along the virtual path they followed, their perception issues were not relevant in that case. There could be other analyses in which such distorted perception could lead to unreliable feedback. Therefore, this aspect should also be considered and detected during the training phase.

4. Results

In this section, a real example of the applicability of the method proposed in this article is shown.

4.1. Software and Hardware

WUAD is independent of the software used in its development. In our case, the following applications were used:

Unity 3D, which was used for the development of the VR application, as indicated in Section 3.3.

Sweet Home 3D, the 3D interior design software used to create the architectural and furniture models. This program offers several advantages: floor plans are easy to design; a wide range of home furnishing models is available; 3D models created in other software can be imported; and the complete textured 3D model can be exported in OBJ and MTL format, with each object named with an easily understandable prefix: ‘room’, ‘wall’, ‘hinge’, ‘chair’, ‘plant’, etc.
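The prefix-based naming is what makes automatic detection of rooms, walls, and furniture possible at import time. The following Python sketch shows one way such names could be grouped when reading an OBJ file. It is an assumption-laden illustration: it treats both `o` and `g` statements as object names, takes the leading alphabetic part of each name as the prefix, and uses invented names such as `room_1`; the exact naming pattern produced by Sweet Home 3D is not given in the text.

```python
import re
from collections import defaultdict
from typing import Dict, List


def group_objects_by_prefix(obj_text: str) -> Dict[str, List[str]]:
    """Group object/group names found in OBJ text by their alphabetic prefix."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for line in obj_text.splitlines():
        parts = line.split(maxsplit=1)
        # 'o' and 'g' statements introduce named objects and groups in OBJ.
        if len(parts) == 2 and parts[0] in ("o", "g"):
            name = parts[1].strip()
            match = re.match(r"[A-Za-z]+", name)
            if match:
                groups[match.group(0).lower()].append(name)
    return dict(groups)
```

For instance, all names collected under the `room` key would be the candidates for the room floors used by the mini-map.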

Regarding the hardware, in addition to the specific hardware for VR (Section 3.4), a workstation with an Intel i7 processor, 16 GB of RAM, and an NVIDIA RTX 2060 graphics card was used to control all the devices and run the VR application.

4.2. Architectural Design

The first stage of the procedure consisted of creating a building whose accessibility was to be evaluated. This phase corresponded to the blocks and arrows shown in blue in the workflow of Figure 1.

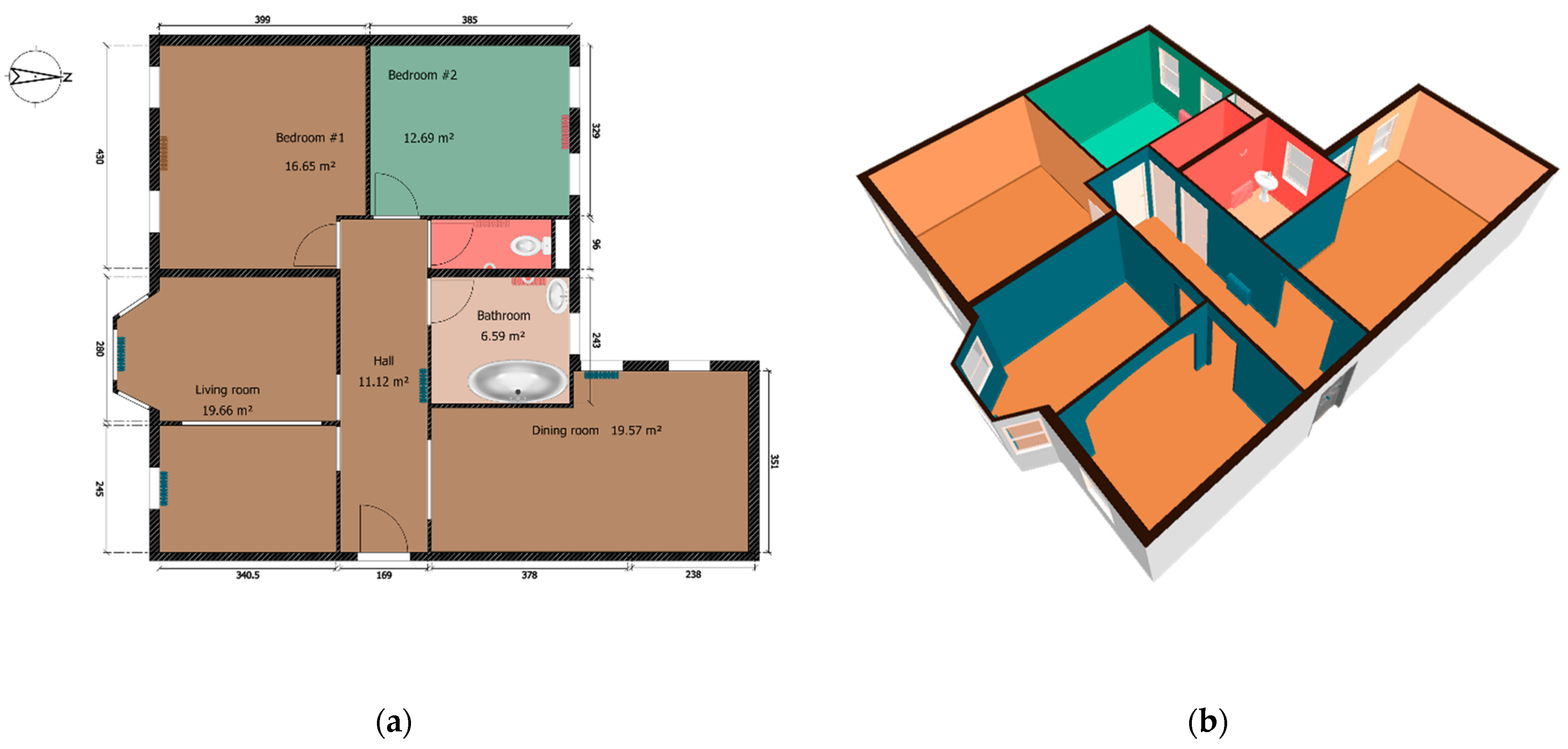

For the evaluation of WUAD, it was decided to use an apartment as a test building. This apartment had two bedrooms, a living room, a dining room with an adjoining kitchen, an office, a bathroom, a toilet, and a hall that connected all these rooms.

The design of the apartment began with the architect’s creation of the two-dimensional floor plan (Figure 11a), from which the 3D floor plan was created (Figure 11b). The 3D model and the texture of the 3D plan were exported in OBJ and MTL formats, respectively, ready for the next step. Because Sweet Home 3D was used in this step, the 3D model did not require any adaptation (Section 3.3), since the objects were defined by low-resolution triangular meshes and the textures were exported in a standard texture map.

Once the design of the apartment was complete, it was adapted with Unity 3D, as explained in Section 3.3, to create the VR application. Then, using the wheelchair simulator and the VR devices indicated in Section 3.4, we proceeded to validate the first design.

During the exploration, the user noted the difficulties they encountered while moving around the different rooms of the apartment. It was important that this task be performed by a person with a physical disability to make the assessment as realistic as possible. When the evaluator found a problem, they added the necessary markers and comments so that these problems could be corrected in the next phase of architectural design. The user utilized the following markers (Section 3.5.2) in their evaluation:

Manoeuvrability marker, which was the marker numbered 1.2 in Section 3.5.2, and which fell under the category of navigation markers. This marker was placed in the office and was accompanied by the following comment: “The office is too narrow to maneuver easily”.

A second manoeuvrability marker was placed at the entrance from the dining room to the kitchen.

A final manoeuvrability marker was placed in the kitchen, with a comment similar to the one in the office.

As a result, a final report was generated to be sent to the architect, who generated a new design of the apartment where some changes were introduced to the office and the kitchen. In the case of the office, the architect proposed to open the separation wall between the office and the living room, and to slightly move the wall to allow better manoeuvrability with the wheelchair. For the access problems in the dining room and kitchen, it was decided to eliminate the separation wall between the two rooms and to design an open kitchen.

Figure 12a,b shows the new plan of the apartment made after the evaluation.

4.3. Furniture/Accessories Design

After the structure had been validated, the designer added the furnishings and decoration that they considered most appropriate for the future tenant of the house, which can be observed in Figure 13a,b.

The new furniture design was virtually explored by the user and some markers and comments were indicated in the corresponding final report:

Distribution marker, which was the marker numbered 2.2 in Section 3.5.2, and which fell under the category of furniture and accessories markers. The marker was in the kitchen area and the comment indicated that the location of the kitchen bar might cause manoeuvrability problems (magenta marker in Figure 14a).

Manoeuvrability marker, which was the marker numbered 1.2 in Section 3.5.2, and which fell under the category of navigation markers. To emphasize the lack of manoeuvrability indicated above, this marker was placed in the same location as the previous one (red marker in Figure 14a).

Ramp marker, which was the marker numbered 3.2 in Section 3.5.2, and which fell under the category of accessibility markers. Due to the installation of the floor in the bathroom, a small step appeared at the entrance, so the user requested that a small ramp be installed to facilitate access (yellow marker in Figure 14b).

Comment marker, which was the marker numbered 4 in Section 3.5.2. The user requested that the bathtub be replaced with a shower (grey marker in Figure 14c).

Banister or grip marker, which was the marker numbered 3.1 in Section 3.5.2, and which fell under the category of accessibility markers. The evaluator indicated that a handhold should be included in the shower requested in the previous marker (cyan marker in Figure 14c).

In the next iteration of the WUAD workflow (Figure 1), the designer modified the previous proposal considering the user’s report. They removed some furniture in the dining room to facilitate manoeuvrability. Moreover, they added a small ramp to access the bathroom and installed a shower with a banister instead of a tub. As a result, the 2D and 3D floor plans shown in Figure 15a,b were generated by the designer.

The new 3D model was imported into Unity 3D and evaluated by the wheelchair user. As no further modifications were proposed, the design of the apartment was concluded.

5. Discussion

The aim of this study was to propose a new methodology for improving building accessibility from the early phase of design. Although regulations have been recently tightened, there is still much to do to make the lives of people with disabilities easier.

This proposal merged the ideas of universal design, the developments achieved by wheelchair simulators, and the challenges posed by virtual reality (VR) for the built environment. A systematic workflow for the assessment of accessibility for wheelchair users in the architectural design phase and in the interior design phase of a building was proposed. A method for the implementation of this procedure using a virtual reality system that included a wheelchair simulator was presented. It involved the end-user from the first stages of the design process to offer a system that improved the accessibility of buildings.

VR technology is growing in demand and popularity in different fields, such as medicine, education, and engineering, because it can be an excellent simulation tool when properly used. For example, by using VR devices in conjunction with wheelchair-based simulators, it was possible to experience what living with a wheelchair is like and therefore to remove, at the design stage, barriers that the designer would not otherwise have taken into account.

It is also worth noting that this study addressed two of the three most important topics identified in the literature related to the application of VR in the AEC industry: architectural and engineering design, and human behaviour and perception. As highlighted in Section 2, there are still many problems to be solved.

Furthermore, to the best of our knowledge, the solution presented improved on the proposals made to date. The VR tool supported markers, comments, the recording of exploration times, and report generation, all while using a real wheelchair that could move on a platform with six degrees of freedom, which provided a sense of realism not achieved in most of the simulators found in the literature. So far, we have found no other comprehensive proposal like ours: either real simulators are used, but the user cannot move freely through the spaces, or the spaces to be explored can be freely navigated, but from a simulated wheelchair.

On the other hand, the implementation of our proposal showed that it would be advisable to introduce a phase of training and analysis of the user’s response, either to familiarize them with the interfaces and controls or to confirm their aptitude so that their feedback is valid in the designed workflow. Similarly, since the application used a virtual 3D model of the wheelchair with real dimensions and continuously checked whether it fitted the virtual trajectory, the perception problems of users with eyeglasses were not relevant in this case. However, there could be other analyses where such distorted perception could lead to unreliable feedback. Therefore, this aspect should also be considered and detected during the training phase.

The WUAD workflow not only proposed a new methodology that integrates universal design standards but also demonstrated the advantages of using VR applications in research and development fields. Although WUAD used a wheelchair-based simulator, it would also be possible to use the VR device alone. For example, the virtual 3D model of the wheelchair could be operated from other types of peripheral devices instead of a physical wheelchair, such as keyboards or game controllers, which can be easily connected to Unity.

6. Conclusions and Future Work

In this work, we presented a proposal that contributes to further improving the accessibility of buildings by promoting communication between wheelchair users and designers. The proposal brought together the ideas of universal design, the achievements of wheelchair simulators, and the advantages offered by virtual reality (VR) for the built environment. Being aware of the needs of people with any kind of disability is crucial to maintaining fairness and to helping them live their lives without added difficulties.

WUAD should not be seen as a static method but as a different methodology, which implies taking advantage of VR applications for simulations and iteratively reporting suitable changes for a better and more practical design.

From this point of view, future work could be directed towards improving the VR application and the communication channel used to report changes in the proposed method. Furthermore, it is planned to improve the generated report, increase the variety of markers, and develop a multi-user online mode in which the designer can be a passive or active witness to the exploration phase.

It would also be interesting to analyse the usability of the application and to study whether the designed interfaces can be improved, as well as the format of the feedback exchanged between the user and the architect and of the reports sent to the designer.

It is also necessary to study what percentage of users suffer from motion sickness and whether there are parts of the application, or actions within it, that cause symptoms, in order to reduce them as much as possible. In a way, our proposal could be considered one more of the existing tools to raise awareness about disability, which is essential to change the design paradigm.