Protocols for the Graphic and Constructive Diffusion of Digital Twins of the Architectural Heritage That Guarantee Universal Accessibility through AR and VR

Abstract

1. Introduction

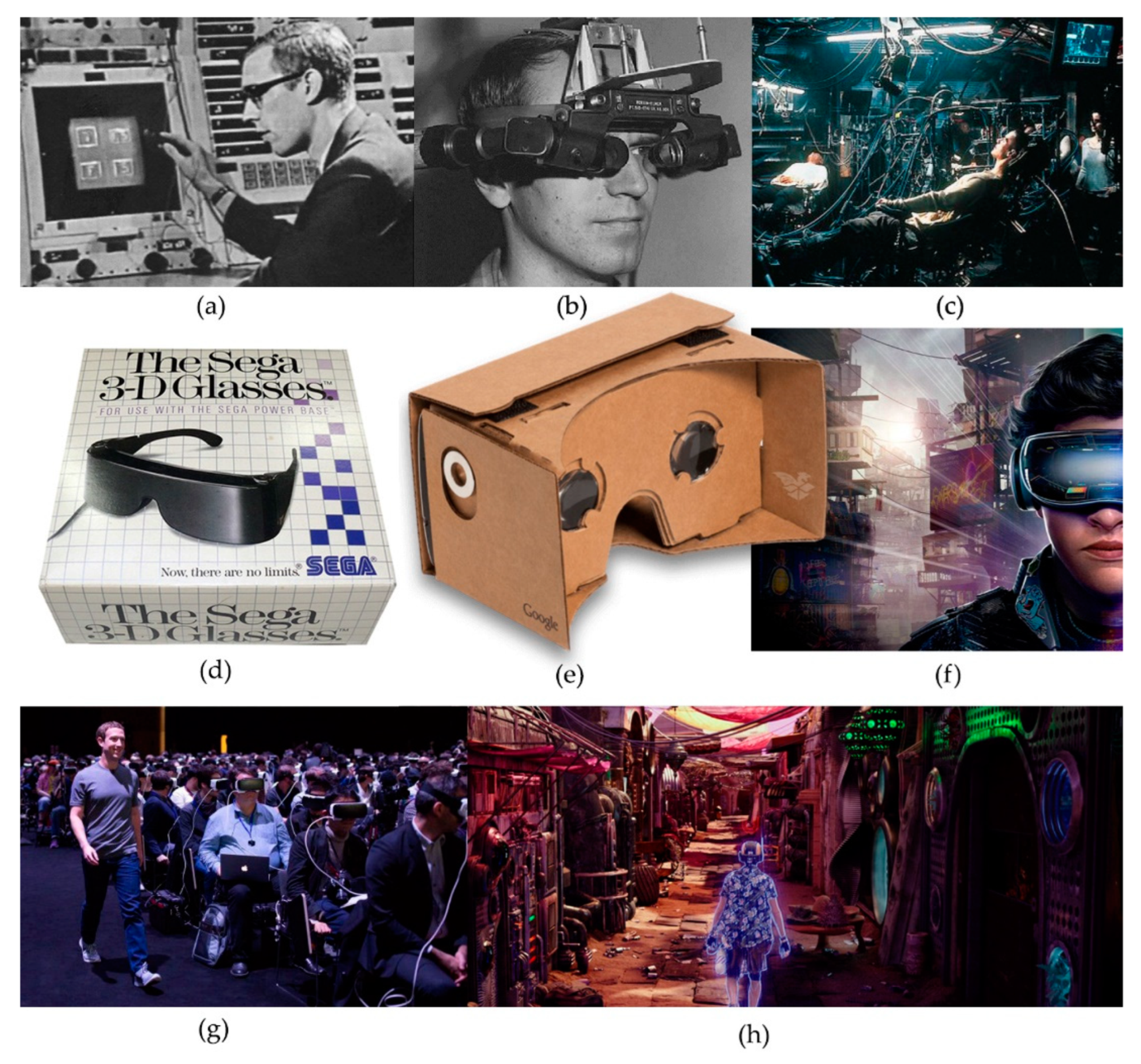

1.1. Related Works: Metaverse, Metatourism, AR and VR

1.2. Literature Review: Terminology and Concepts Applied to Architectural Heritage

- Anyone can access the metaverse regardless of the device they use (mobile, computer, VR glasses, AR glasses, etc.).

- People relate to each other through avatars.

- Metaverses encompass both the virtual world and the physical world. In other words, we can relate to our environment through augmented reality.

- Virtual reality, augmented reality or even mixed reality devices may be used.

- Metaverses can generate an economy.

2. Materials and Methods

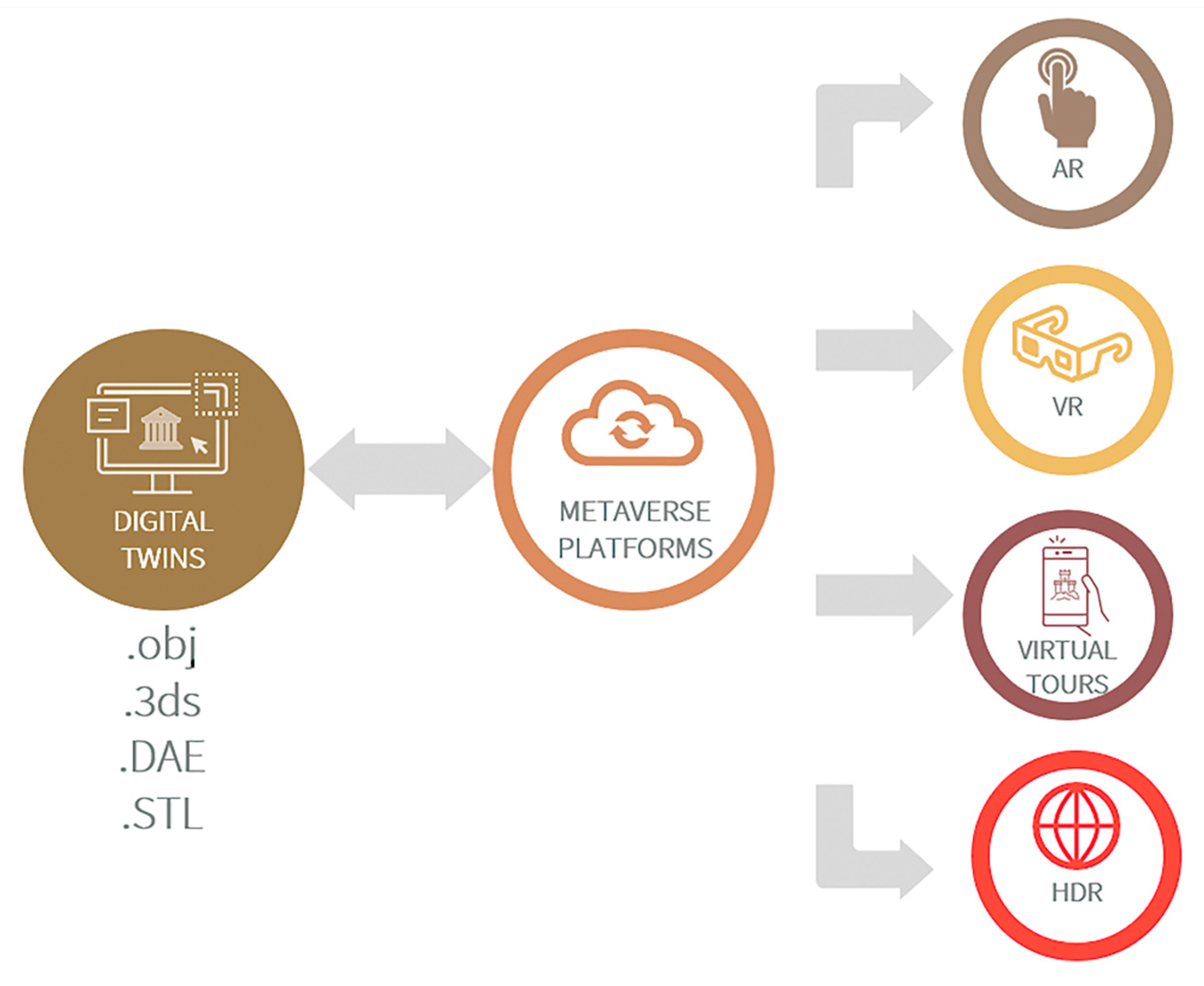

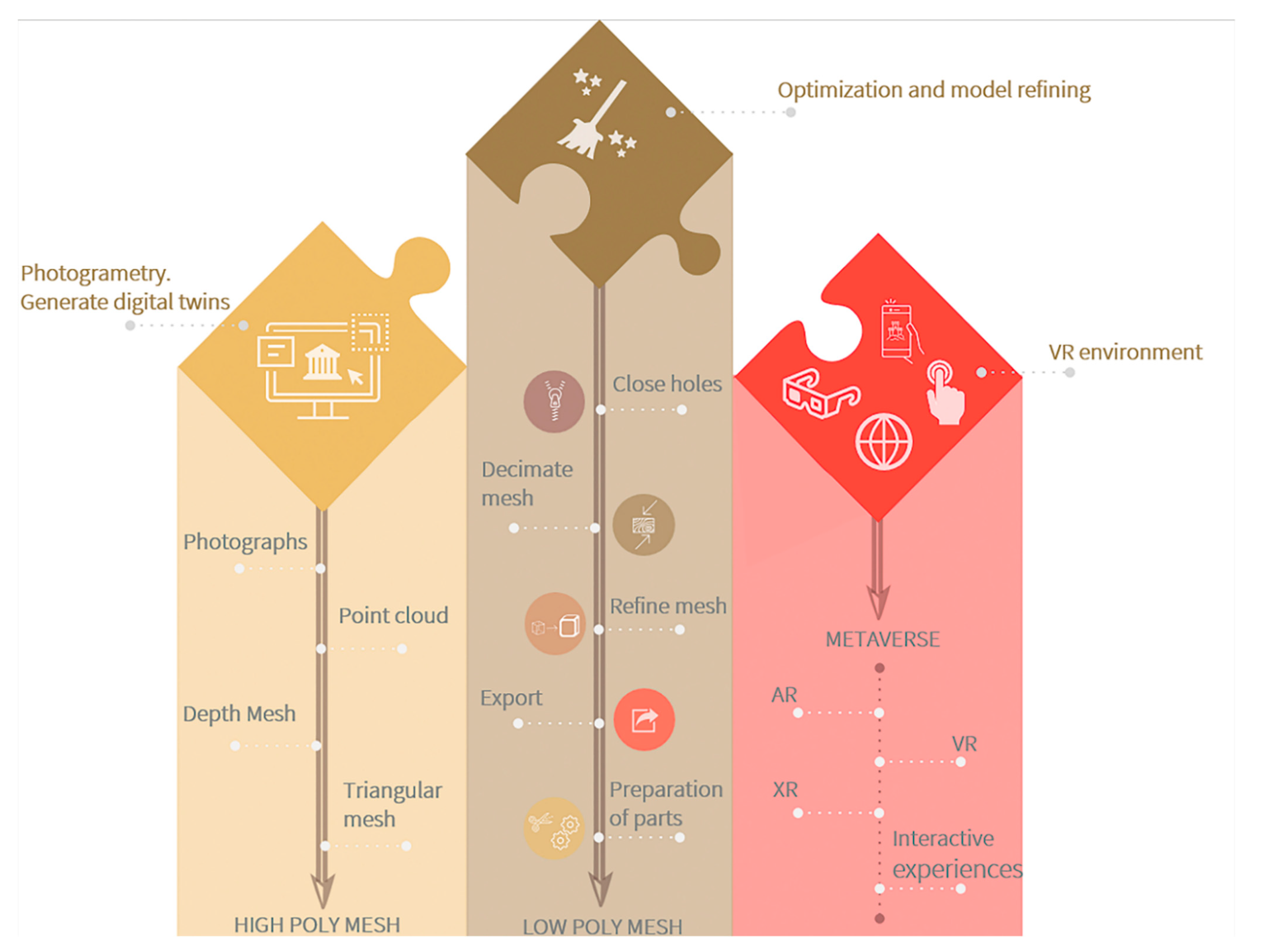

2.1. Work Scheme

- Activity I. The first activity of this workflow establishes a starting point prior to the in-depth study of the metaverse. In this activity, the heritage asset under study is filtered and selected, and the previously generated digital twin is optimized and prepared for integration into the metaverse.

- Activity II. This activity consists of a general analysis of metaverses according to the field in which they are active.

- Activity III. An overview is presented that distinguishes between the different types of metaverse and analyzes the digital resources currently available that can serve as metaverses to host our digital twins.

- Activity IV. In this activity, the functionalities and capabilities of 32 metaverses that could host the generated digital twin are listed and analyzed. Based on this detailed analysis, a first screening was carried out according to our requirements (digital twins of architecture obtained through TLS or SfM [44]).

- Activity V. The seven metaverses that passed the previous screening were analyzed, and a second filter was applied to select a single metaverse, which is used to present the results of the virtualization and integration into the metaverse.

- Activity VI. The functionalities of the selected platform were tested with our working model in order to obtain results compatible with VR (virtual reality) and AR (augmented reality) environments [45].
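The successive screening in Activities IV and V can be pictured as a funnel of pass/fail criteria. The Python sketch below is illustrative only: the `Platform` fields mirror the four criteria used in the final comparison table (digital twins from photogrammetry, free access for users, AR and VR from digital twins), but the class, the `screen` function and the platform names and capabilities in the usage example are hypothetical, not the authors' assessment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Platform:
    """A candidate metaverse and the capabilities checked during screening."""
    name: str
    photogrammetry_twins: bool  # can host digital twins obtained by photogrammetry
    free_user_access: bool      # visitors can view the model without a paid license
    ar_from_twins: bool         # offers AR directly from the digital twin
    vr_from_twins: bool         # offers VR directly from the digital twin

# Each criterion is a (label, predicate) pair, applied in this order.
CRITERIA = [
    ("digital twins from photogrammetry", lambda p: p.photogrammetry_twins),
    ("free access for users", lambda p: p.free_user_access),
    ("AR from digital twins", lambda p: p.ar_from_twins),
    ("VR from digital twins", lambda p: p.vr_from_twins),
]

def screen(candidates):
    """Apply the criteria one after another, recording how many platforms survive each step."""
    remaining = list(candidates)
    history = [("initial", len(remaining))]
    for label, keep in CRITERIA:
        remaining = [p for p in remaining if keep(p)]
        history.append((label, len(remaining)))
    return remaining, history

# Hypothetical candidates; these values are illustrative, not the study's data.
candidates = [
    Platform("Platform A", True, True, True, True),
    Platform("Platform B", True, False, True, True),
    Platform("Platform C", False, True, False, True),
]
selected, history = screen(candidates)
print([p.name for p in selected])  # ['Platform A']
print(history)
```

The recorded history makes the narrowing explicit at each step, in the same way the study narrows 32 candidates to seven and then to a single platform.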

2.2. Activity I: Starting Point Prior to the Deepening of the Metaverse

- Milestone 1: Definition of values sought in the case study.

- Milestone 2: Selection of the case study.

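- Milestone 3: Generation of digital twins from photogrammetry with unmanned aerial vehicles (UAVs).

- Milestone 4: Optimization and debugging of the resulting 3D models to produce exportable digital twins [22].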

2.2.1. Milestone I.1: Definition of Values Sought in the Case Study

2.2.2. Milestone I.2: Selection of the Case Study

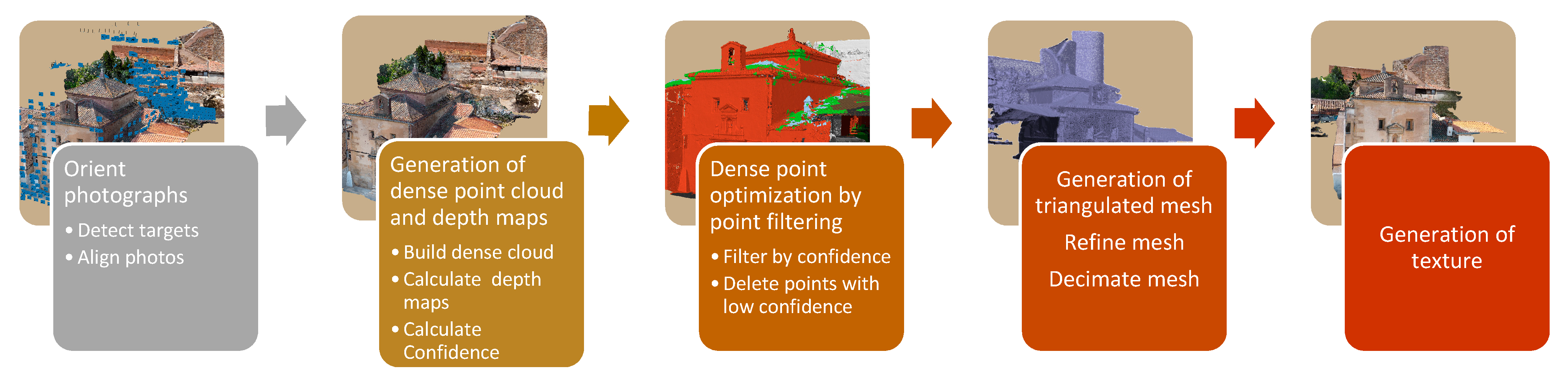

2.2.3. Milestone I.3: Generation of Digital Twins from Photogrammetry with Unmanned Aerial Vehicles (UAVs)

- Orientation of photographs. This orientation yields the sparse point cloud.

- Generation of dense point cloud and depth maps.

- Dense point cloud optimization by point filtering.

- Generation of the triangulated mesh.

- Generation of the texture from the images taken by the drone.
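The point-filtering step can be carried out in several ways, and the text does not state which filter was used. As one common option, the sketch below implements statistical outlier removal in plain Python: each point's mean distance to its k nearest neighbors is compared with the cloud-wide mean plus a multiple of the standard deviation. The function name and the parameters `k=8` and `std_ratio=2.0` are assumptions, and the brute-force neighbor search is only suitable for small demonstrations, not for a real dense cloud.

```python
import math
import statistics

def statistical_outlier_removal(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbors is unusually large.

    points: list of (x, y, z) tuples. Uses an O(n^2) brute-force neighbor search.
    """
    n = len(points)
    if n <= k:
        return list(points)  # too few points to estimate neighborhood statistics
    mean_dists = []
    for i, p in enumerate(points):
        # Distances from p to every other point, nearest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]

# A flat 5 x 5 patch of points 0.1 m apart, plus one stray point far from the surface.
grid = [(i * 0.1, j * 0.1, 0.0) for i in range(5) for j in range(5)]
cloud = grid + [(10.0, 10.0, 10.0)]
filtered = statistical_outlier_removal(cloud)
print(len(cloud), len(filtered))  # 26 25
```

Photogrammetry software usually offers equivalent filters (for example, by confidence or by distance to neighbors), backed by spatial indexes that make them practical on millions of points.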

2.2.4. Milestone I.4: Optimization and Debugging of the 3D Models Obtained to Obtain Exportable Digital Twins

2.3. Activity II: Filter I. General Analysis of Metaverses According to the Field in Which They Are Active

2.4. Activity III: Filter II. Analysis of the Different Digital Resources Currently Available That Can Be Used as Metaverses to Host Our Digital Twins

2.5. Activity IV: Filter III. Detailed Analysis of the Metaverses

2.6. Activity V: Filter IV. Last Filter to Select a Single Metaverse

2.7. Activity VI: Testing the Functionalities of the Selected Platform with Our Working Model in Order to Obtain Results Compatible with VR (Virtual Reality) and AR (Augmented Reality) Environments

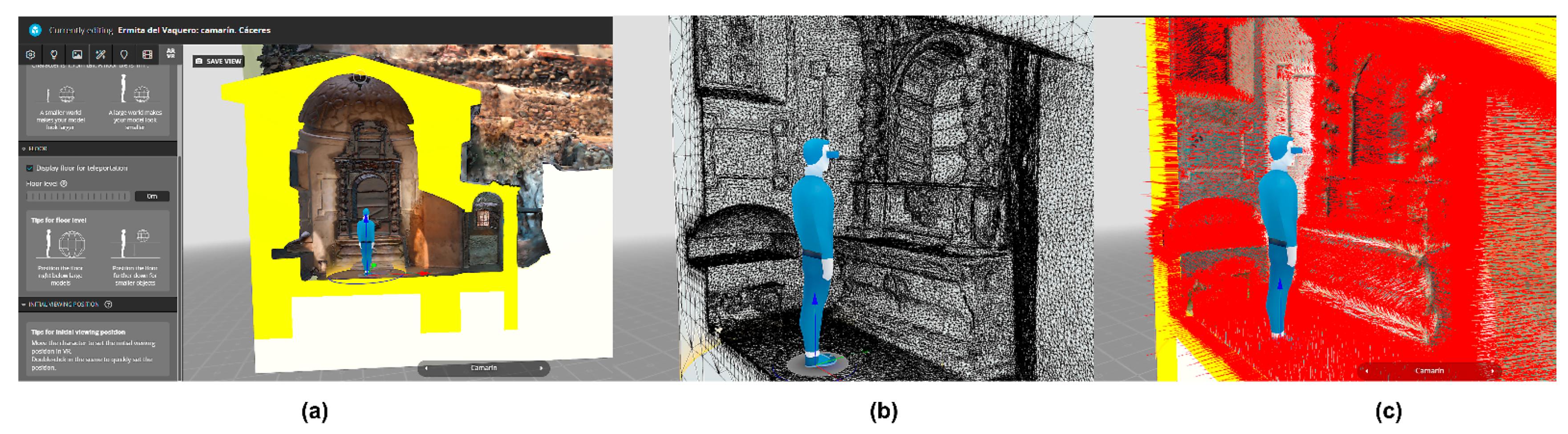

- Milestone VI.1: Setting up the scene. The first step is to define the position of our digital twin in space, together with other basic visualization options. At this milestone, we can also determine how the materials in the model behave. In the case study, the textures were obtained through photogrammetry.

- Milestone VI.2: Setting up illumination. This option lets us set the lighting parameters of up to three lights and add HDR images that provide ambient lighting for the digital twin.

- Milestone VI.3: Setting up materials. Starting from the material type chosen in Milestone VI.1, we can modify the properties of each material independently.

- Milestone VI.4: Postprocessing filters. Filters are now a routine way of offering alternative views of digital content. In this case, Sketchfab allowed us to apply up to 10 filters to Vaquero’s Hermitage.

- Milestone VI.5: Setting up annotations. The selected platform allows us to attach additional information that improves understanding of the cultural heritage. This information can take the form of text, images and even links to web pages that provide further detail.

- Milestone VI.6: Setting up virtual reality and augmented reality. To generate immersive experiences of the architectural heritage, several parameters must be defined, such as the initial position of the avatar and its size relative to the heritage element it interacts with.
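The constraints mentioned in these milestones can be gathered into a simple pre-publication checklist. The sketch below uses a hypothetical `SceneConfig` structure of our own design; it is not the platform's API. It checks only what the text states: at most three lights, annotations that carry text, an image or a link, and an avatar with a defined starting position and size. The field names, the `validate` function and the 1.6 m eye height are assumptions.

```python
from dataclasses import dataclass, field

MAX_LIGHTS = 3  # limit on configurable lights stated for the selected platform

@dataclass
class Annotation:
    title: str
    text: str = ""
    image_url: str = ""  # hypothetical field for an attached image
    link_url: str = ""   # hypothetical field for a link to a web page

@dataclass
class SceneConfig:
    """Hypothetical container for the settings of Milestones VI.1 to VI.6."""
    light_intensities: list = field(default_factory=list)  # one entry per light
    hdr_environment: str = ""                               # HDR image for ambient light
    annotations: list = field(default_factory=list)
    avatar_start: tuple = (0.0, 0.0, 0.0)                  # initial position (x, y, z) in meters
    avatar_eye_height_m: float = 1.6                        # assumed avatar size

def validate(scene):
    """Return a list of human-readable problems; an empty list means the scene passes."""
    problems = []
    if len(scene.light_intensities) > MAX_LIGHTS:
        problems.append(f"{len(scene.light_intensities)} lights defined; at most {MAX_LIGHTS} allowed")
    for note in scene.annotations:
        if not (note.text or note.image_url or note.link_url):
            problems.append(f"annotation '{note.title}' has no text, image or link")
    if len(scene.avatar_start) != 3:
        problems.append("avatar start position must have three coordinates")
    if scene.avatar_eye_height_m <= 0:
        problems.append("avatar eye height must be positive")
    return problems

good = SceneConfig(
    light_intensities=[1.0, 0.5],
    hdr_environment="sky.hdr",  # hypothetical file name
    annotations=[Annotation("Baroque extension", text="18th-century addition")],
)
bad = SceneConfig(light_intensities=[1.0, 1.0, 0.5, 0.3], annotations=[Annotation("Apse")])
print(validate(good))  # []
print(validate(bad))
```

Returning a list of problems instead of raising on the first one lets all issues in a scene be reported and fixed in a single pass.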

2.7.1. Milestone VI.1: Setting Up the Scene

2.7.2. Milestone VI.2: Setting Up Illumination

2.7.3. Milestone VI.3: Setting Up Materials

2.7.4. Milestone VI.4: Postprocessing Filters

2.7.5. Milestone VI.5: Setting Up Annotations

2.7.6. Milestone VI.6: Setting Up Virtual Reality and Augmented Reality

3. Results

4. Discussion

- First of all, as a society, we have an obligation to digitize the world in which we live in order to preserve it as a digital twin for future generations: the present database.

- Secondly, from these digital twins, we have the opportunity to recreate lost architectural environments and future digital environments: foundations of the past and the future.

- There are many digitization methods, each with advantages and disadvantages [11,58,59,62,63,64]. In the present study, a low-cost methodology based on the use of UAVs [23,65] was chosen, which was outlined and incorporated into the workflow. This scheme is supported by other works and the professional experience of the authors [66,67].

- For some time now, new representation technologies have allowed us to create resources and content that reflect how our heritage is and how it could be. These digital twins, both digital copies of the present and digital proposals of the past and future [68], give rise to huge databases. Traditionally, these databases have been used to develop restoration projects, as in the case study of Vaquero’s Hermitage, but once the work was completed, they were difficult to reuse as a knowledge tool.

- These databases are a tool of unquestionable value for changing the way we learn, travel and disseminate knowledge.

- In phase 2 of the methodology, we can upload our digital twins to a proposed metaverse in four simple steps.

- The metaverse is chosen by applying successive filters until the platform that best suits our needs is identified.

- The resources obtained in the metaverse are effective educational tools. Through QR codes or other means, they give students new digital resources in augmented and virtual reality that they can manipulate themselves, complementing and improving learning.

- Likewise, the resources obtained open the way to a new form of tourism that can reach everyone, regardless of financial means, location or physical condition.

- Through VR or AR resources, this new form of tourism can visit not only existing sites but also virtual environments recreating the past or proposals for the future.

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- De Marco, R.; Parrinello, S. Management of Mesh Features in 3D Reality-Based Polygonal Models to Support Non-Invasive Structural Diagnosis and Emergency Analysis in the Context of Earthquake Heritage in Italy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 46, 173–180.

- Parrinello, S.; Dell’Amico, A. Experience of Documentation for the Accessibility of Widespread Cultural Heritage. Heritage 2019, 2, 1032–1044.

- Parrinello, S.; Picchio, F. Integration and Comparison of Close-Range SfM Methodologies for the Analysis and the Development of the Historical City Center of Bethlehem. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 589–595.

- Ángulo Fornos, R. Desarrollo de Modelos Digitales de Información Como Base Para el Conocimiento, la Intervención y la Gestión en el Patrimonio Arquitectónico: De la Captura Digital al Modelo HBIM; Universidad de Sevilla: Seville, Spain, 2020.

- Bruno, N.; Roncella, R. HBIM for Conservation: A New Proposal for Information Modeling. Remote Sens. 2019, 11, 1751.

- Banfi, F. The Evolution of Interactivity, Immersion and Interoperability in HBIM: Digital Model Uses, VR and AR for Built Cultural Heritage. Int. J. Geo-Inf. 2021, 10, 685.

- Gisbert Santaballa, A.G. La arqueología virtual como herramienta didáctica y motivadora. Tecnol. Cienc. Y Educ. 2019, 13, 119–147.

- Cruz Franco, P.A.; Rueda Márquez de la Plata, A. La reconstrucción de un edificio en la calle Cedaceros de Madrid a partir de su fachada. In Proceedings of the VIII Jornadas de Patrimonio Arqueológico en la Comunidad de Madrid, Alcalá de Henares, Spain, 18 November 2011.

- Cruz Franco, P.A.; Rueda Marquez de la Plata, A.; Cortés Pérez, J.P. Veinte Siglos de Patrimonio de Extremadura en Ocho Puentes; Documentación Digital de las Obras Públicas: Extremadura, Spain, 2018; Volume 1, p. 208.

- Sdegno, A. For an Archeology of the Digital Iconography. In Proceedings of the International and Interdisciplinary Conference IMMAGINI?, Brixen, Italy, 27–28 November 2017.

- Azmi, M.A.A.M.; Abbas, M.A.; Zainuddin, K.; Mustafar, M.A.; Zainal, M.Z.; Majid, Z.; Idris, K.M.; Ari, M.F.M.; Luh, L.C.; Aspuri, A. 3D Data Fusion Using Unmanned Aerial Vehicle (UAV) Photogrammetry and Terrestrial Laser Scanner (TLS). In Proceedings of the Second International Conference on the Future of ASEAN (ICoFA), Singapore, 13–15 May 2018; pp. 295–305.

- Obradović, M.V.I.; Đurić, I.; Kićanović, J.; Stojaković, V.; Obradović, R. Virtual reality models based on photogrammetric surveys—A case study of the iconostasis of the Serbian Orthodox Cathedral Church of Saint Nicholas in Sremski Karlovci (Serbia). Appl. Sci. 2020, 10, 2743.

- Cruz Franco, P.A. La Bóveda de Rosca Como Paisaje Cultural y Urbano: Estudio Morfológico y Constructivo a Través de la Ciudad; Universidad Politécnica de Madrid, Escuela Técnica de Arquitectura: Madrid, Spain, 2016.

- Cruz Franco, P.A.; Rueda Márquez de la Plata, A.; Cruz Franco, J. From the Point Cloud to BIM Methodology for the Ideal Reconstruction of a Lost Bastion of the Cáceres Wall. Appl. Sci. 2020, 10, 6609.

- Pepe, M.; Costantino, D.; Restuccia Garofalo, A. An Efficient Pipeline to Obtain 3D Model for HBIM and Structural Analysis Purposes from 3D Point Clouds. Appl. Sci. 2020, 10, 1235.

- Ramos Sánchez, J.A.; Cruz Franco, P.A.; Rueda Márquez de la Plata, A. Achieving Universal Accessibility through Remote Virtualization and Digitization of Complex Archaeological Features: A Graphic and Constructive Study of the Columbarios of Merida. Remote Sens. 2022, 14, 3319.

- Cruz Sagredo, M.; Lopez García, M. Inventario de Puentes de Extremadura; Universidad de Extremadura: Cáceres, Spain, 2000.

- Parrinello, S.; Francesca, P.; Dell’Amico, A.; De Marco, R. PROMETHEUS. Protocols for Information Models Libraries Tested on Heritage of Upper Kama Sites. MSCA RISE 2018. In Proceedings of the II Simposio UID di Internazionalizzazione Della Ricerca, Patrimoni Culturali, Architettura, Paesaggio e Design tra Ricerca e Sperimentazione Didattica, Matera, Italy, 22 October 2019.

- Parrinello, S.; Morandotti, M.; Valenti, G.; Piveta, M.; Basso, A.; Inzerillo, A.; Lo Turco, M.; Picchio, F.; Santagati, C. Digital & Documentation: Databases and Models for the Enhancement of Heritage; Parrinello, S., Ed.; Edizioni dell’Università degli Studi di Pavia: Pavia, Italy, 2019; Volume 1.

- Templin, T.P.D. The use of low-cost unmanned aerial vehicles in the process of building models for cultural tourism, 3D web and augmented/mixed reality applications. Sensors 2020, 20, 5457.

- Gómez Bernal, E. Levantamiento Planimétrico y Propuesta de Accesibilidad en la Casa Romana de la Alcazaba de Mérida; Universidad de Extremadura: Cáceres, Spain, 2021.

- Ramos Sánchez, J.A. Utilización de la Metodología BIM en la Gestión del Patrimonio Arqueológico. Caso de Estudio el Recinto Arqueológico de los Llamados Columbarios de Merida; Universidad de Extremadura: Badajoz, Spain, 2021.

- Rodriguez Sánchez, C. Levantamiento y Propuesta de Accesibilidad en el Jardín Histórico de la Arguijuela de Arriba (Cáceres); Universidad de Extremadura: Badajoz, Spain, 2021.

- Aburbeian, A.M.; Owda, A.Y.; Owda, M. A Technology Acceptance Model Survey of the Metaverse Prospects. AI 2022, 3, 285–302.

- Comes, R.; Neamțu, C.G.D.; Grec, C.; Buna, Z.L.; Găzdac, C.; Mateescu-Suciu, L. Digital Reconstruction of Fragmented Cultural Heritage Assets: The Case Study of the Dacian Embossed Disk from Piatra Roșie. Appl. Sci. 2022, 12, 8131.

- Lee, H.; Hwang, Y. Technology-Enhanced Education through VR-Making and Metaverse-Linking to Foster Teacher Readiness and Sustainable Learning. Sustainability 2022, 14, 4786.

- Liu, Z.; Ren, L.; Xiao, C.; Zhang, K.; Demian, P. Virtual Reality Aided Therapy towards Health 4.0: A Two-Decade Bibliometric Analysis. Int. J. Environ. Res. Public Health 2022, 19, 1525.

- Park, S.; Kim, S. Identifying World Types to Deliver Gameful Experiences for Sustainable Learning in the Metaverse. Sustainability 2022, 14, 1361.

- Ruiz Lanuza, A.P.F.; Pulido Fernández, J.I. El impacto del turismo en los Sitios Patrimonio de la Humanidad. Una revisión de las publicaciones científicas de la base de datos Scopus. Pasos. Rev. Tur. Y Patrim. Cult. 2015, 13, 1247–1264.

- Pelegrín Naranjo, L.; Pelegrín Entenza, N.; Vázquez Pérez, A. An Analysis of Tourism Demand as a Projection from the Destination towards a Sustainable Future: The Case of Trinidad. Sustainability 2022, 14, 5639.

- Rueda Márquez de la Plata, A.; Cruz Franco, P.A.; Ramos Sánchez, J.A. Architectural Survey, Diagnostic, and Constructive Analysis Strategies for Monumental Preservation of Cultural Heritage and Sustainable Management of Tourism. Buildings 2022, 12, 1156.

- Lee, U.-K. Tourism Using Virtual Reality: Media Richness and Information System Successes. Sustainability 2022, 14, 3975.

- Jurlin, K. Were Culture and Heritage Important for the Resilience of Tourism in the COVID-19 Pandemic? J. Risk Financ. Manag. 2022, 15, 205.

- Parrinello, S. The virtual reconstruction of the historic districts of Shanghai European identity in traditional Chinese architecture. Disegnarecon 2020, 13.

- Parrinello, S.; Porzilli, S. Rilievo Laser Scanner 3D per l’analisi morfologica e il monitoraggio strutturale di alcuni ambienti inseriti nel progetto di ampliamento del complesso museale degli Uffizi a Firenze. In Proceedings of the Reuso 2016: Contributi per la Documentazione, Conservazione e Recupero del Patrimonio Architettonico e per la Tutela Paesaggistica, Pavia, Italy, 6–8 October 2016.

- Shehade, M.; Stylianou-Lambert, T. Virtual Reality in Museums: Exploring the Experiences of Museum Professionals. Appl. Sci. 2020, 10, 4031.

- Yastikli, N. Documentation of cultural heritage using digital photogrammetry and laser scanning. J. Cult. Herit. 2017, 42, 423–427.

- Gonçalves, A.R.; Dorsch, L.L.P.; Figueiredo, M. Digital Tourism: An Alternative View on Cultural Intangible Heritage and Sustainability in Tavira, Portugal. Sustainability 2022, 14, 2912.

- Cruz Franco, P.A.; Rueda Márquez de la Plata, A. Documentación y estudio de un “agregado” en la Ciudad de Cáceres: Análisis fotogramétrico y gráfico. In Proceedings of the Congreso Internacional sobre Documentación, Conservación y Reutilización del Patrimonio Arquitectónico, Madrid, Spain, 21 June 2013.

- Roinioti, E.; Pandia, E.; Konstantakis, M.; Skarpelos, Y. Gamification in Tourism: A Design Framework for the TRIPMENTOR Project. Digital 2022, 2, 191–205.

- Evangelidis, K.; Sylaiou, S.; Papadopoulos, T. Mergin’ Mode: Mixed Reality and Geoinformatics for Monument Demonstration. Appl. Sci. 2020, 10, 3826.

- Fanini, B.; Ferdani, D.; Demetrescu, E.; Berto, S.; d’Annibale, E. ATON: An Open-Source Framework for Creating Immersive, Collaborative and Liquid Web-Apps for Cultural Heritage. Appl. Sci. 2021, 11, 11062.

- Giuffrida, D.; Bonanno, S.; Parrotta, F.; Mollica Nardo, V.; Anastasio, G.; Saladino, M.L.; Armetta, F.; Ponterio, R.C. The Church of S. Maria Delle Palate in Tusa (Messina, Italy): Digitization and Diagnostics for a New Model of Enjoyment. Remote Sens. 2022, 14, 1490.

- Pritchard, D.; Sperner, J.; Hoepner, S.; Tenschert, R. Terrestrial laser scanning for heritage conservation: The Cologne Cathedral documentation project. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 213–220.

- Okanovic, V.; Ivkovic-Kihic, I.; Boskovic, D.; Mijatovic, B.; Prazina, I.; Skaljo, E.; Rizvic, S. Interaction in eXtended Reality Applications for Cultural Heritage. Appl. Sci. 2022, 12, 1241.

- Cruz Franco, J.; Cruz Franco, P.A.; Rueda Márquez de la Plata, A.; Ramos Rubio, J.A.; Rueda Muñoz de San Pedro, J.M. Evolución histórica de la muralla de Cáceres y nuevos descubrimientos. El postigo de San Miguel, un lienzo perdido de la cerca almohade. Rev. De Estud. Extrem. 2016, 1869–1910.

- Barea Azcón, P. La iconografía de la Virgen de Guadalupe de México en España. Arch. Español Arte 2007, 80, 186–199.

- Lorite Cruz, P.J. La influencia de la Virgen de Guadalupe en San Miguel de Cebú y el resto de las Filipinas, así como en Guam. In Proceedings of the XIV Jornadas de Historia en Llerena, Llerena, Spain, 25 October 2013.

- Tomás, R.; Adrián, R.; Cano, M.; Abellan, A.; Jorda, L. Structure from Motion (SfM): Una técnica fotogramétrica de bajo coste para la caracterización y monitoreo de macizos rocosos. In Proceedings of the 10 Simposio Nacional de Ingeniería Geotécnica, A Coruña, Spain, 13 October 2016.

- Stoica, G.D.; Andreiana, V.-A.; Duica, M.C.; Stefan, M.-C.; Susanu, I.O.; Coman, M.D.; Iancu, D. Perspectives for the Development of Sustainable Cultural Tourism. Sustainability 2022, 14, 5678.

- Salem, T.; Dragomir, M. Options for and Challenges of Employing Digital Twins in Construction Management. Appl. Sci. 2022, 12, 2928.

- Yang, B.; Lv, Z.; Wang, F. Digital Twins for Intelligent Green Buildings. Buildings 2022, 12, 856.

- Lv, Z.; Li, Y. Wearable Sensors for Vital Signs Measurement: A Survey. J. Sens. Actuator Netw. 2022, 11, 19.

- Lv, Z.; Wang, J.-Y.; Kumar, N.; Lloret, J. Special Issue on “Augmented Reality, Virtual Reality & Semantic 3D Reconstruction”. Appl. Sci. 2021, 11, 8590.

- Yan, Z.; Lv, Z. The Influence of Immersive Virtual Reality Systems on Online Social Application. Appl. Sci. 2020, 10, 5058.

- Jiang, M.; Pan, Z.; Tang, Z. Visual Object Tracking Based on Cross-Modality Gaussian-Bernoulli Deep Boltzmann Machines with RGB-D Sensors. Sensors 2017, 17, 121.

- Chen, M.; Tang, Q.; Xu, S.; Leng, P.; Pan, Z. Design and Evaluation of an Augmented Reality-Based Exergame System to Reduce Fall Risk in the Elderly. Int. J. Environ. Res. Public Health 2020, 17, 7208.

- Pérez Sendín, M. Prototipado Físico a Partir de Gemelos Digitales Aplicado a la Torre de Bujaco; Universidad de Extremadura: Cáceres, Spain, 2022.

- Parrinello, S.; Picchio, F.; De Marco, R.; Dell’Amico, A. Documenting the Cultural Heritage Routes. The Creation of Informative Models of Historical Russian Churches on Upper Kama Region. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 887–894.

- Murtiyoso, A.; Grussenmeyer, P. Documentation of heritage buildings using close-range UAV images: Dense matching issues, comparison and case studies. Photogramm. Record. 2017, 32, 23.

- Parrinello, S.; Gomez-Blanco, A.; Picchio, F. El Palacio del Generalife del Levantamiento Digital al Proyecto de Gestión. Cuaderno de Trabajo Para la Documentación Arquitectónica; Pavia University Press: Pavia, Italy, 2017; Volume 1, p. 224.

- Monego, M.; Fabris, M.; Menin, A.; Achilli, V. 3-D Survey Applied to Industrial Archaeology by TLS Methodology. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 449–455.

- Arapakopoulos, A.; Liaskos, O.; Mitsigkola, S.; Papatzanakis, G.; Peppa, S.; Remoundos, G.; Ginnis, A.; Papadopoulos, C.; Mazis, D.; Tsilikidis, O.; et al. 3D Reconstruction & Modeling of the Traditional Greek Trechadiri: “Aghia Varvara”. Heritage 2022, 5, 1295–1309.

- Intignano, M.; Biancardo, S.A.; Oreto, C.; Viscione, N.; Veropalumbo, R.; Russo, F.; Ausiello, G.; Dell’Acqua, G. A Scan-to-BIM Methodology Applied to Stone Pavements in Archaeological Sites. Heritage 2021, 4, 3032–3049.

- Gómez Bernal, E.; Cruz Franco, P.A.; Rueda Marquez de la Plata, A. Drones in architecture research: Methodological application of the use of drones for the accessible intervention in a roman house in the Alcazaba of Mérida (Spain). In Proceedings of the D-SITE. Drones-Systems of Information on Cultural Heritage, Pavia, Italy, 24–26 June 2020.

- Viñals, M.J.; Gilabert-Sansalvador, L.; Sanasaryan, A.; Teruel-Serrano, M.-D.; Darés, M. Online Synchronous Model of Interpretive Sustainable Guiding in Heritage Sites: The Avatar Tourist Visit. Sustainability 2021, 13, 7179.

- Rueda Marquez de la Plata, A.; Cruz Franco, P.A.; Cruz Franco, J.; Gibello Bravo, V. Protocol Development for Point Clouds, Triangulated Meshes and Parametric Model Acquisition and Integration in an HBIM Workflow for Change Control and Management in a UNESCO’s World Heritage Site. Sensors 2021, 21, 1083.

- Tan, J.; Leng, J.; Zeng, X.; Feng, D.; Yu, P. Digital Twin for Xiegong’s Architectural Archaeological Research: A Case Study of Xuanluo Hall, Sichuan, China. Buildings 2022, 12, 1053.

- Kushwaha, S.K.P.; Dayal, K.R.; Sachchidanand; Raghavendra, S.; Pande, H.; Tiwari, P.S.; Agrawal, S.; Srivastava, S.K. 3D Digital Documentation of a Cultural Heritage Site Using Terrestrial Laser Scanner—A Case Study. In Applications of Geomatics in Civil Engineering; Springer: Singapore, 2020; pp. 49–58.

- Fryskowska, A.; Walczykowski, P.; Delis, P.; Wojtkowska, M. ALS and TLS data fusion in cultural heritage documentation and modeling. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 147–150.

- Bocheńska, A.; Markiewicz, J.; Łapiński, S. The Combination of the Image and Range-Based 3D Acquisition in Archaeological and Architectural Research in the Royal Castle in Warsaw. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 177–184.

- Grussenmeyer, P.; Landes, T.; Voegtle, T.; Ringle, K. Comparison Methods of Terrestrial Laser Scanning, Photogrammetry and Tacheometry Data for Recording of Cultural Heritage Buildings. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Beijing, China, 3–11 July 2008; pp. 213–218.

- Adamopoulos, E.; Rinaudo, F.; Ardissono, L. A Critical Comparison of 3D Digitization Techniques for Heritage Objects. Int. J. Geo-Inf. 2020, 10, 10.

- Valverde, H. Diferentes niveles de realidad mixta en entornos museales como una herramienta para cambiar la experiencia de los visitantes en los museos. In Patrimonio Digital: Métodos Computacionales y Medios Interactivos Para Estudiar y Divulgar el Patrimonio Cultural; Jiménez-Badillo, D., Ed.; Instituto Nacional de Antropología e Historia: Mexico City, Mexico, 2021; Volume 1.

- Zuo, T.; Jiang, J.; Spek, E.V.d.; Birk, M.; Hu, J. Situating Learning in AR Fantasy, Design Considerations for AR Game-Based Learning for Children. Electronics 2022, 11, 2331.

- Meng, X. Formal Analysis and Application of the New Mode of “VR+Education”. In Proceedings of the ITM Web of Conferences, Timisoara, Romania, 23–26 May 2008; EDP Sciences: Les Ulis, France, 2019; Volume 3.

| Characteristics | |
|---|---|
| Protection | Cultural and architectural heritage |
| Form | Complex geometries (vaults, arches, domes [13], etc.) |
| Details | Singular elements |
| Dimensions | Architectural elements that can be walked through in VR |
| Metaverse | Interiors to create immersive experiences |

| Characteristics | |
|---|---|
| Name | Vaquero’s Hermitage |
| Coordinates | 39°28′27″ N 6°22′06″ W |
| Altitude | 426 m above sea level |
| Construction | 17th century |
| Extension | 18th century (baroque-style extension) |
| Area | 281.73 m² |
| Other features | It borders the Almohad wall of Cáceres [46]. |
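Many mapping and geolocation tools expect coordinates in signed decimal degrees rather than degrees, minutes and seconds. The conversion is decimal = degrees + minutes/60 + seconds/3600, negated for the southern and western hemispheres. The short Python sketch below applies it to the coordinates in the table; the function name is ours, not part of any particular platform.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds to signed decimal degrees (S and W are negative)."""
    value = degrees + minutes / 60 + seconds / 3600
    return -value if hemisphere.upper() in ("S", "W") else value

# Coordinates of Vaquero's Hermitage from the table: 39°28′27″ N, 6°22′06″ W
lat = dms_to_decimal(39, 28, 27, "N")
lon = dms_to_decimal(6, 22, 6, "W")
print(f"{lat:.6f}, {lon:.6f}")  # 39.474167, -6.368333
```

Six decimal places correspond to roughly 0.1 m on the ground, which is more than enough to place a building-scale digital twin.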

| Platform | Place | VR | AR | Gaming | HDR | System Support |
|---|---|---|---|---|---|---|
| A-frame | Local/cloud |  |  |  |  | Self-governing |
| Blender | Local |  |  |  |  | Self-governing |
| CloudPlano | Cloud |  |  |  |  | Self-governing |
| Concept3D | Local |  |  |  |  | Self-governing |
| CenarioVR | Local |  |  |  |  | Self-governing |
| Enscape | Local |  |  |  |  | Subordinate to other software |
| Fusion18 | Local |  |  |  |  | Self-governing |
| Google AR/VR | Cloud |  |  |  |  | Self-governing |
| Google Earth VR | Cloud |  |  |  |  | Self-governing |
| IrisVR | Local |  |  |  |  | Subordinate to other software |
| Lumion | Local |  |  |  |  | Subordinate to other software |
| LiveTour | Local |  |  |  |  | Self-governing |
| Marmoset Toolbag | Local |  |  |  |  | Subordinate to other software |
| MODO VR | Local |  |  |  |  | Self-governing |
| Pannellum | Local |  |  |  |  | Self-governing |
| Pano2VR | Local |  |  |  |  | Self-governing |
| Panorama Image Viewer | Cloud |  |  |  |  | Self-governing |
| Photo Sphere Viewer | Local |  |  |  |  | Self-governing |
| Powertrak CPQ Software Suite | Local |  |  |  |  | Self-governing |
| Revit | Local |  |  |  |  | Self-governing |
| Shapespark | Local |  |  |  |  | Subordinate to other software |
| Sketchfab | Cloud |  |  |  |  | Self-governing |
| Verge3D | Local |  |  |  |  | Subordinate to other software |
| Vray | Local |  |  |  |  | Subordinate to other software |
| VRdirect | Local/cloud |  |  |  |  | Self-governing |
| TechViz | Cloud |  |  |  |  | Subordinate to other software |
| Trezi | Local |  |  |  |  | Subordinate to other software |
| Twinmotion | Local |  |  |  |  | Subordinate to other software |

| Platform | “Digital Twins” Creators | AR/VR from “Digital Twins” (*) | AR/VR from 360° Images and Videos from Reality (*) | Virtual Tour from “Digital Twins” | Virtual Tour from Reality | HDR from “Digital Twins” | HDR from Reality |
|---|---|---|---|---|---|---|---|
| 3DCloud |  |  |  |  |  |  |  |
| 3DViewer |  |  |  |  |  |  |  |
| A-frame |  |  |  |  |  |  |  |
| Blender |  |  |  |  |  |  |  |
| CloudPlano |  |  |  |  |  |  |  |
| Concept3D |  |  |  |  |  |  |  |
| CenarioVR |  |  |  |  |  |  |  |
| Enscape |  |  |  |  |  |  |  |
| Fusion18 |  |  |  |  |  |  |  |
| Google AR/VR |  |  |  |  |  |  |  |
| Google Earth VR |  |  |  |  |  |  |  |
| IrisVR |  |  |  |  |  |  |  |
| Lumion |  |  |  |  |  |  |  |
| LiveTour |  |  |  |  |  |  |  |
| Marmoset Toolbag |  |  |  |  |  |  |  |
| Marzipano |  |  |  |  |  |  |  |
| MODO VR |  |  |  |  |  |  |  |
| Pannellum |  |  |  |  |  |  |  |
| Pano2VR |  |  |  |  |  |  |  |
| Panorama Image Viewer |  |  |  |  |  |  |  |
| Photo Sphere Viewer |  |  |  |  |  |  |  |
| Powertrak CPQ Software Suite |  |  |  |  |  |  |  |
| Revit |  |  |  |  |  |  |  |
| Shapespark |  |  |  |  |  |  |  |
| Sketchfab |  |  |  |  |  |  |  |
| Verge3D |  |  |  |  |  |  |  |
| Visor 360° |  |  |  |  |  |  |  |
| Vray |  |  |  |  |  |  |  |
| VRdirect |  |  |  |  |  |  |  |
| TechViz |  |  |  |  |  |  |  |
| Trezi |  |  |  |  |  |  |  |
| Twinmotion |  |  |  |  |  |  |  |

| Platform | Formats |
|---|---|
| CloudPlano | .jpg, .jpeg, .png, .tiff |
| Concept3D | CAD files, .jpg, .jpeg, .png and .tiff |
| Enscape | Plugins for Revit, Rhinoceros, SketchUp, Archicad and Vectorworks |
| IrisVR | Plugins for Revit, Navisworks, Rhinoceros and SketchUp; .obj and .fbx files |
| Lumion | Plugins for Revit, SketchUp, Rhinoceros, Vectorworks, AutoCAD, Archicad and BricsCAD; compatible with ALLPLAN and 3ds Max |
| Shapespark | Extensions for SketchUp, 3ds Max, Revit, Maya and Cinema 4D; also .fbx, .obj and .DAE files |
| Sketchfab | .fbx, .obj, .DAE, .blend, .STL, .gltf, .bin, .glb, .3dc, .3ds, .las, .ply, .igs and .usd |
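When preparing the digital twin for export, the format table can be turned into a quick compatibility check. The sketch below transcribes only the file extensions listed explicitly in the table: plugin-based workflows such as Enscape and Lumion are omitted, and Concept3D's unspecified "CAD files" are ignored. The dictionary, the function name and the file names are hypothetical, and the lists reflect the platforms at the time of the study, not necessarily today.

```python
from pathlib import Path

# Extensions listed explicitly in the format table (plugin-only platforms omitted).
SUPPORTED_FORMATS = {
    "CloudPlano": {".jpg", ".jpeg", ".png", ".tiff"},
    "Concept3D": {".jpg", ".jpeg", ".png", ".tiff"},  # "CAD files" also accepted, extensions unspecified
    "IrisVR": {".obj", ".fbx"},
    "Shapespark": {".fbx", ".obj", ".dae"},
    "Sketchfab": {".fbx", ".obj", ".dae", ".blend", ".stl", ".gltf", ".bin", ".glb",
                  ".3dc", ".3ds", ".las", ".ply", ".igs", ".usd"},
}

def platforms_accepting(filename):
    """Return, alphabetically, the platforms whose listed formats include the file's extension."""
    ext = Path(filename).suffix.lower()  # compare case-insensitively (.OBJ == .obj)
    return sorted(name for name, exts in SUPPORTED_FORMATS.items() if ext in exts)

print(platforms_accepting("hermitage.OBJ"))  # ['IrisVR', 'Shapespark', 'Sketchfab']
print(platforms_accepting("hermitage.ply"))  # ['Sketchfab']
```

A mesh exported as .obj or .fbx keeps the most destinations open, whereas point-cloud formats such as .las or .ply narrow the choice to Sketchfab among the listed platforms.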

| Platform | Digital Twins from Photogrammetry | Free Access for Users | AR from Digital Twins | VR from Digital Twins |
|---|---|---|---|---|
| CloudPlano |  |  |  |  |
| Concept3D |  |  |  |  |
| Enscape |  |  |  |  |
| IrisVR |  |  |  |  |
| Lumion |  |  |  |  |
| Shapespark |  |  |  |  |
| Sketchfab |  |  |  |  |

|  | Light Properties | Ground Shadows | Environment | Shadows | Light Intensity | Shadow Bias | HDR from Reality |
|---|---|---|---|---|---|---|---|
| PBR (lit shading) |  |  |  |  |  |  |  |
| PBR (shadowless) |  |  |  |  |  |  |  |
| MatCap |  |  |  |  |  |  |  |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cruz Franco, P.A.; Rueda Márquez de la Plata, A.; Gómez Bernal, E. Protocols for the Graphic and Constructive Diffusion of Digital Twins of the Architectural Heritage That Guarantee Universal Accessibility through AR and VR. Appl. Sci. 2022, 12, 8785. https://doi.org/10.3390/app12178785

Cruz Franco PA, Rueda Márquez de la Plata A, Gómez Bernal E. Protocols for the Graphic and Constructive Diffusion of Digital Twins of the Architectural Heritage That Guarantee Universal Accessibility through AR and VR. Applied Sciences. 2022; 12(17):8785. https://doi.org/10.3390/app12178785

Chicago/Turabian Style: Cruz Franco, Pablo Alejandro, Adela Rueda Márquez de la Plata, and Elena Gómez Bernal. 2022. "Protocols for the Graphic and Constructive Diffusion of Digital Twins of the Architectural Heritage That Guarantee Universal Accessibility through AR and VR" Applied Sciences 12, no. 17: 8785. https://doi.org/10.3390/app12178785