Deep Anomaly Detection for In-Vehicle Monitoring—An Application-Oriented Review

Abstract

1. Introduction

- Review of a large number of state-of-the-art methods for deep video anomaly detection, explaining their frameworks and implementations to expose the issues that arise in the in-vehicle scenario, which these methods were not designed for. Reported benchmark results and publicly accessible source code were compiled to assess how readily each method can be applied;

- Review of a large number of datasets with real anomalies that are used to benchmark state-of-the-art models, investigating whether any portion of them is representative of real-world in-vehicle monitoring or whether their sequences can be repurposed for it. This analysis is vital because public datasets dedicated to in-vehicle monitoring are lacking;

- Initiation of a discussion on application-oriented issues related to deep anomaly detection for in-vehicle monitoring. Other surveys and reviews have disregarded this scenario and its specificities despite its relevance, which is shown by the listed funded projects that seek application-oriented solutions for in-vehicle monitoring. Possible solutions are proposed as starting points for future work.

2. Literature Review on Deep Anomaly Detection

2.1. Evaluation Metrics

2.2. Semi-Supervised Strategies

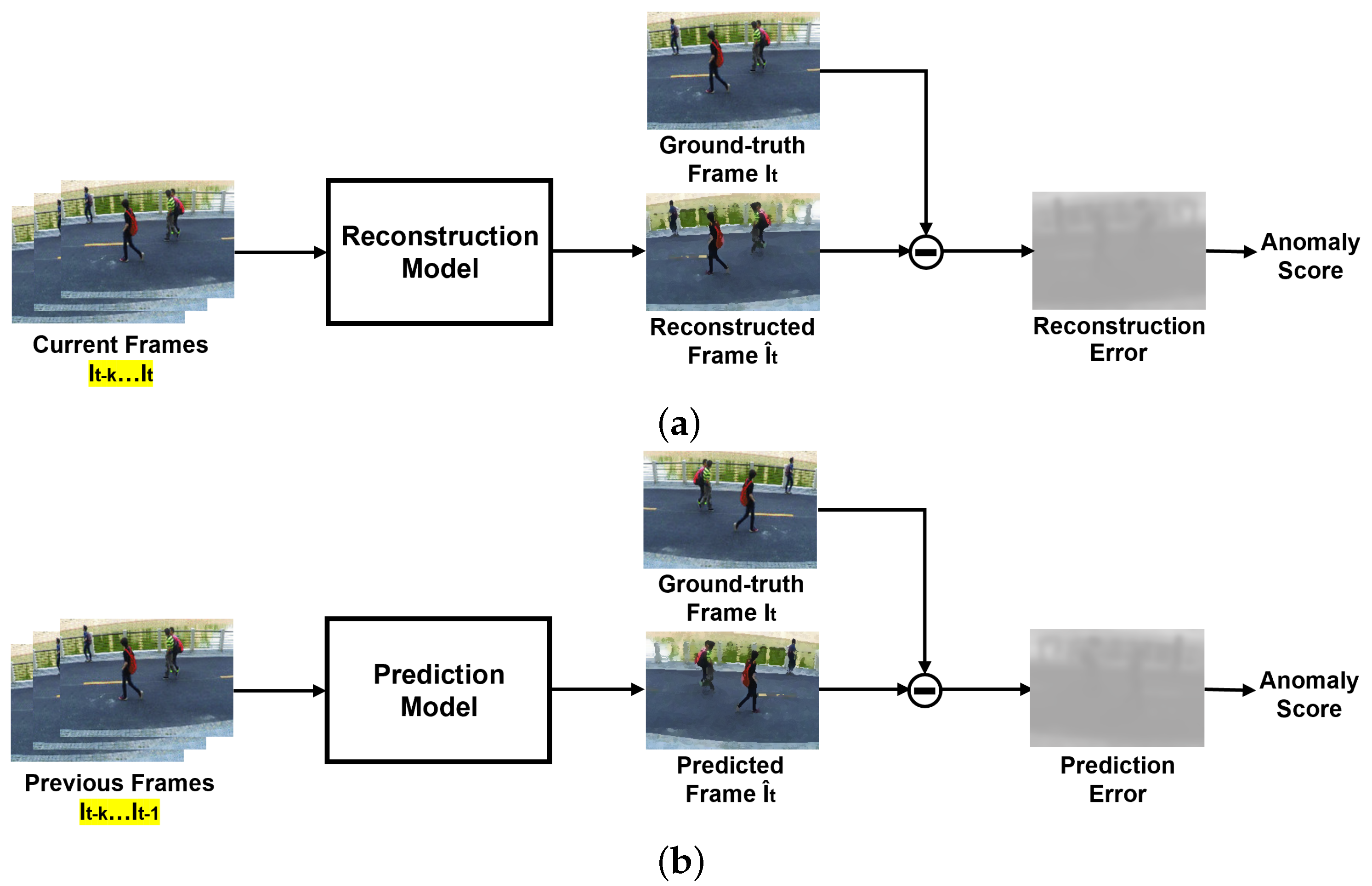

2.2.1. Reconstruction-Based Methods

2.2.2. Prediction-Based Methods

2.3. Weakly Supervised Strategies

2.4. Fully Supervised Strategies

3. Publicly Available Datasets

3.1. Real-World Datasets

3.1.1. Pedestrians and Crowds

3.1.2. Real-World Anomalies

3.1.3. Traffic

3.2. Synthetic Alternatives

4. Projects and Resources

4.1. Projects

4.2. Resources

5. Challenges, Approaches and Opportunities for In-Vehicle Monitoring

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kiran, B.R.; Thomas, D.M.; Parakkal, R. An overview of deep learning based methods for unsupervised and semi-supervised anomaly detection in videos. J. Imaging 2018, 4, 36.

2. Xu, D.; Yan, Y.; Ricci, E.; Sebe, N. Detecting anomalous events in videos by learning deep representations of appearance and motion. Comput. Vis. Image Underst. 2017, 156, 117–127.

3. Liu, W.; Luo, W.; Lian, D.; Gao, S. Future frame prediction for anomaly detection–a new baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6536–6545.

4. Luo, W.; Liu, W.; Gao, S. A revisit of sparse coding based anomaly detection in stacked rnn framework. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 341–349.

5. Augusto, P.; Cardoso, J.S.; Fonseca, J. Automotive interior sensing-towards a synergetic approach between anomaly detection and action recognition strategies. In Proceedings of the 2020 IEEE 4th International Conference on Image Processing, Applications and Systems (IPAS), Virtual Event, 9–11 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 162–167.

6. Pang, G.; Shen, C.; Cao, L.; Hengel, A.V.D. Deep learning for anomaly detection: A review. ACM Comput. Surv. (CSUR) 2021, 54, 1–38, Article 38.

7. Zimek, A.; Schubert, E.; Kriegel, H.P. A survey on unsupervised outlier detection in high-dimensional numerical data. Stat. Anal. Data Min. ASA Data Sci. J. 2012, 5, 363–387.

8. Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

9. Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. (CSUR) 2009, 41, 1–58, Article 15.

10. Pang, G.; Shen, C.; Jin, H.; Hengel, A. Deep weakly-supervised anomaly detection. arXiv 2019, arXiv:1910.13601.

11. Lu, C.; Shi, J.; Jia, J. Abnormal event detection at 150 fps in matlab. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2720–2727.

12. Mahadevan, V.; Li, W.; Bhalodia, V.; Vasconcelos, N. Anomaly detection in crowded scenes. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1975–1981.

13. Wang, X.; Che, Z.; Jiang, B.; Xiao, N.; Yang, K.; Tang, J.; Ye, J.; Wang, J.; Qi, Q. Robust unsupervised video anomaly detection by multipath frame prediction. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2301–2312.

14. Xu, D.; Ricci, E.; Yan, Y.; Song, J.; Sebe, N. Learning deep representations of appearance and motion for anomalous event detection. arXiv 2015, arXiv:1510.01553.

15. Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning temporal regularity in video sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 733–742.

16. Sabokrou, M.; Fathy, M.; Hoseini, M. Video anomaly detection and localisation based on the sparsity and reconstruction error of auto-encoder. Electron. Lett. 2016, 52, 1122–1124.

17. Zhao, Y.; Deng, B.; Shen, C.; Liu, Y.; Lu, H.; Hua, X.S. Spatio-temporal autoencoder for video anomaly detection. In Proceedings of the 25th ACM international conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1933–1941.

18. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

19. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

20. Luo, W.; Liu, W.; Gao, S. Remembering history with convolutional lstm for anomaly detection. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 439–444.

21. Ranzato, M.; Szlam, A.; Bruna, J.; Mathieu, M.; Collobert, R.; Chopra, S. Video (language) modeling: A baseline for generative models of natural videos. arXiv 2014, arXiv:1412.6604.

22. Wisdom, S.; Powers, T.; Pitton, J.; Atlas, L. Interpretable recurrent neural networks using sequential sparse recovery. arXiv 2016, arXiv:1611.07252.

23. Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.R.; Venkatesh, S.; Hengel, A. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1705–1714.

24. Ravanbakhsh, M.; Nabi, M.; Sangineto, E.; Marcenaro, L.; Regazzoni, C.; Sebe, N. Abnormal event detection in videos using generative adversarial nets. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1577–1581.

25. Ganokratanaa, T.; Aramvith, S.; Sebe, N. Unsupervised anomaly detection and localization based on deep spatiotemporal translation network. IEEE Access 2020, 8, 50312–50329.

26. Ye, M.; Peng, X.; Gan, W.; Wu, W.; Qiao, Y. Anopcn: Video anomaly detection via deep predictive coding network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1805–1813.

27. Yu, G.; Wang, S.; Cai, Z.; Zhu, E.; Xu, C.; Yin, J.; Kloft, M. Cloze test helps: Effective video anomaly detection via learning to complete video events. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 583–591.

28. Chen, C.; Xie, Y.; Lin, S.; Yao, A.; Jiang, G.; Zhang, W.; Qu, Y.; Qiao, R.; Ren, B.; Ma, L. Comprehensive Regularization in a Bi-directional Predictive Network for Video Anomaly Detection. In Proceedings of the American Association for Artificial Intelligence, Virtual, 22 February–1 March 2022; pp. 1–9.

29. Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

30. Georgescu, M.I.; Barbalau, A.; Ionescu, R.T.; Khan, F.S.; Popescu, M.; Shah, M. Anomaly detection in video via self-supervised and multi-task learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12742–12752.

31. Lee, S.; Kim, H.G.; Ro, Y.M. BMAN: Bidirectional multi-scale aggregation networks for abnormal event detection. IEEE Trans. Image Process. 2019, 29, 2395–2408.

32. Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4183–4192.

33. Ionescu, R.T.; Khan, F.S.; Georgescu, M.I.; Shao, L. Object-centric auto-encoders and dummy anomalies for abnormal event detection in video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7842–7851.

34. Doshi, K.; Yilmaz, Y. Any-shot sequential anomaly detection in surveillance videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 934–935.

35. Doshi, K.; Yilmaz, Y. Continual learning for anomaly detection in surveillance videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 254–255.

36. Park, H.; Noh, J.; Ham, B. Learning Memory-guided Normality for Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14372–14381.

37. Cai, R.; Zhang, H.; Liu, W.; Gao, S.; Hao, Z. Appearance-motion memory consistency network for video anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 938–946.

38. Ramachandra, B.; Jones, M.; Vatsavai, R. Learning a distance function with a Siamese network to localize anomalies in videos. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2598–2607.

39. Feng, J.C. Papers for Video Anomaly Detection, Released Codes Collection, Performance Comparision. 2022. Available online: https://github.com/fjchange/awesome-video-anomaly-detection (accessed on 26 July 2022).

40. Sultani, W.; Chen, C.; Shah, M. Real-world Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.

41. Zhang, J.; Qing, L.; Miao, J. Temporal convolutional network with complementary inner bag loss for weakly supervised anomaly detection. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4030–4034.

42. Wan, B.; Fang, Y.; Xia, X.; Mei, J. Weakly supervised video anomaly detection via center-guided discriminative learning. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), Virtual, 6–10 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

43. Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

44. Zhu, Y.; Newsam, S. Motion-aware feature for improved video anomaly detection. arXiv 2019, arXiv:1907.10211.

45. Zhong, J.X.; Li, N.; Kong, W.; Liu, S.; Li, T.H.; Li, G. Graph convolutional label noise cleaner: Train a plug-and-play action classifier for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1237–1246.

46. Tian, Y.; Pang, G.; Chen, Y.; Singh, R.; Verjans, J.W.; Carneiro, G. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4975–4986.

47. Li, S.; Liu, F.; Jiao, L. Self-training multi-sequence learning with Transformer for weakly supervised video anomaly detection. In Proceedings of the AAAI, Virtual, 22 February–1 March 2022; Volume 24.

48. Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video swin transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 3202–3211.

49. Feng, J.C.; Hong, F.T.; Zheng, W.S. MIST: Multiple Instance Self-Training Framework for Video Anomaly Detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

50. Wu, P.; Liu, J. Learning causal temporal relation and feature discrimination for anomaly detection. IEEE Trans. Image Process. 2021, 30, 3513–3527.

51. Ullah, W.; Ullah, A.; Haq, I.U.; Muhammad, K.; Sajjad, M.; Baik, S.W. CNN features with bi-directional LSTM for real-time anomaly detection in surveillance networks. Multimed. Tools Appl. 2021, 80, 16979–16995.

52. Ullah, W.; Ullah, A.; Hussain, T.; Muhammad, K.; Heidari, A.A.; Del Ser, J.; Baik, S.W.; De Albuquerque, V.H.C. Artificial Intelligence of Things-assisted two-stream neural network for anomaly detection in surveillance Big Video Data. Future Gener. Comput. Syst. 2022, 129, 286–297.

53. Liu, K.; Ma, H. Exploring background-bias for anomaly detection in surveillance videos. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1490–1499.

54. Landi, F.; Snoek, C.G.; Cucchiara, R. Anomaly locality in video surveillance. arXiv 2019, arXiv:1901.10364.

55. Acsintoae, A.; Florescu, A.; Georgescu, M.; Mare, T.; Sumedrea, P.; Ionescu, R.T.; Khan, F.S.; Shah, M. UBnormal: New Benchmark for Supervised Open-Set Video Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022.

56. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

57. Adam, A.; Rivlin, E.; Shimshoni, I.; Reinitz, D. Robust real-time unusual event detection using multiple fixed-location monitors. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 555–560.

58. Unusual Crowd Activity Dataset of University of Minnesota. 2006. Available online: http://mha.cs.umn.edu/movies/crowdactivity-all.avi (accessed on 2 August 2022).

59. Wu, P.; Liu, J.; Shi, Y.; Sun, Y.; Shao, F.; Wu, Z.; Yang, Z. Not only look, but also listen: Learning multimodal violence detection under weak supervision. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 322–339.

60. Rodrigues, R.; Bhargava, N.; Velmurugan, R.; Chaudhuri, S. Multi-timescale trajectory prediction for abnormal human activity detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2626–2634.

61. Pranav, M.; Zhenggang, L. A day on campus—An anomaly detection dataset for events in a single camera. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–December 2020.

62. Ramachandra, B.; Jones, M. Street Scene: A new dataset and evaluation protocol for video anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2569–2578.

63. Dias Da Cruz, S.; Taetz, B.; Stifter, T.; Stricker, D. Autoencoder Attractors for Uncertainty Estimation. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022.

64. Georgescu, M.I.; Ionescu, R.; Khan, F.S.; Popescu, M.; Shah, M. A background-agnostic framework with adversarial training for abnormal event detection in video. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4505–4523.

65. Chalapathy, R.; Chawla, S. Deep learning for anomaly detection: A survey. arXiv 2019, arXiv:1901.03407.

66. Sabokrou, M.; Khalooei, M.; Fathy, M.; Adeli, E. Adversarially learned one-class classifier for novelty detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3379–3388.

67. Nguyen, T.N.; Meunier, J. Anomaly detection in video sequence with appearance-motion correspondence. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1273–1283.

68. Zaheer, M.Z.; Lee, J.h.; Astrid, M.; Lee, S.I. Old is gold: Redefining the adversarially learned one-class classifier training paradigm. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14183–14193.

69. Liu, W.; Luo, W.; Li, Z.; Zhao, P.; Gao, S. Margin Learning Embedded Prediction for Video Anomaly Detection with A Few Anomalies. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, 10–16 August 2019; pp. 3023–3030.

70. Capozzi, L.; Barbosa, V.; Pinto, C.; Pinto, J.R.; Pereira, A.; Carvalho, P.M.; Cardoso, J.S. Towards Vehicle Occupant-Invariant Models for Activity Characterisation. IEEE Access 2022, accepted.

| Year | Method | AUC (%), CUHK Avenue | AUC (%), ShanghaiTech |
|---|---|---|---|
| 2016 | Conv-AE [15] | 70.2 | 60.85 |
| 2017 | ConvLSTM-AE [20] | 77.0 | - |
| 2017 | S-RNN [4] | 81.7 | 68.0 |
| 2018 | FFP [3] | 85.1 | 72.8 |
| 2019 | Mem-AE [23] | 83.3 | 71.2 |
| 2019 | Object-Centric [33] | 90.4 | 84.9 |
| 2020 | MNAD [36] | 88.5 | 70.5 |
| 2020 | VEC [27] | 90.2 | 74.8 |
| 2021 | SSMT [30] | 86.9 | 83.5 |
| 2021 | ROADMAP [13] | 88.3 | 76.6 |
| 2021 | AMMC [37] | 86.6 | 73.7 |
| 2022 | BDPN [28] | 90.3 | 78.1 |

| Year | Method | Feature Extractor | AUC (%), ShanghaiTech | AUC (%), UCF-Crime |
|---|---|---|---|---|
| 2018 | Sultani et al. [40] | C3D-RGB | 86.3 | 75.41 |
| 2019 | IBL [41] | C3D-RGB | - | 78.66 |
| 2019 | GCN-Anomaly [45] | C3D-RGB | 76.44 | 81.08 |
| 2019 | GCN-Anomaly [45] | TSN-Flow | 84.13 | 78.08 |
| 2019 | GCN-Anomaly [45] | TSN-RGB | 84.44 | 82.12 |
| 2019 | Motion-Aware [44] | PWC-Flow | - | 79.0 |
| 2020 | AR-Net [42] | I3D-RGB & I3D-Flow | 91.24 | - |
| 2021 | MIST [49] | I3D-RGB | 94.83 | 82.30 |
| 2021 | RTFM [46] | I3D-RGB | 97.21 | 84.30 |
| 2021 | CRFD [50] | I3D-RGB | 97.48 | 84.89 |
| 2021 | BD-LSTM [51] | ResNet-50 | - | 85.53 |
| 2022 | MSL [47] | C3D-RGB | 94.81 | 82.85 |
| 2022 | MSL [47] | I3D-RGB | 96.08 | 85.30 |
| 2022 | MSL [47] | VideoSwin-RGB | 97.32 | 85.62 |
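Several of the weakly supervised methods listed above build on multiple instance learning (MIL). This review summarises Sultani et al. [40] as learning a ranking model that predicts high anomaly scores for anomalous video segments. The following is a minimal sketch of a hinge-style MIL ranking objective of that kind, not the authors' implementation. The function name, the margin of 1.0, and the optional smoothness and sparsity weights are illustrative assumptions.

```python
from typing import Sequence


def mil_ranking_loss(pos_scores: Sequence[float],
                     neg_scores: Sequence[float],
                     margin: float = 1.0,
                     smooth_weight: float = 0.0,
                     sparse_weight: float = 0.0) -> float:
    """Hinge ranking loss between an anomalous (positive) and a normal (negative) bag.

    Each bag holds per-segment anomaly scores for one video. Only the
    video-level label is known, so the top-scoring segment stands in for
    the whole bag. All default values are assumptions for illustration.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("both bags need at least one segment score")

    # Ranking term: the highest score in the anomalous video should exceed
    # the highest score in the normal video by at least `margin`.
    hinge = max(0.0, margin - max(pos_scores) + max(neg_scores))

    # Optional regularisers on the positive bag (assumed here):
    # temporal smoothness between neighbouring segments, and sparsity,
    # since anomalies usually occupy few segments.
    smooth = sum((a - b) ** 2 for a, b in zip(pos_scores, pos_scores[1:]))
    sparse = sum(pos_scores)

    return hinge + smooth_weight * smooth + sparse_weight * sparse
```

With the regularisers switched off, the loss is zero as soon as the top anomalous segment outscores the top normal segment by the margin, which is what allows training from video-level labels only.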

| Year | Method | AUC (%), UCF-Crime | AUC (%), CUHK Avenue | AUC (%), ShanghaiTech |
|---|---|---|---|---|
| 2019 | Liu et al. [53] | 82.0 | - | - |
| 2019 | Landi et al. [54] | 77.52 | - | - |
| 2022 | UBnormal [55] | - | 93.2 | 83.7 |
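The results tables above compare methods by AUC. As a rough illustration of what that number measures, the sketch below computes a frame-level AUC from per-frame anomaly scores and binary ground-truth labels, using the pairwise-ranking definition of the area under the ROC curve. The function name and the choice to pool all frames into one list are assumptions; individual papers may aggregate across videos differently.

```python
from typing import Sequence


def frame_level_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the probability that a random abnormal frame outscores a random normal one.

    labels: 1 marks an abnormal frame, 0 a normal frame. Ties count as half.
    This pairwise form is quadratic in the number of frames; it is meant
    for clarity, not for large test sets.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")

    abnormal = [s for s, y in zip(scores, labels) if y == 1]
    normal = [s for s, y in zip(scores, labels) if y == 0]
    if not abnormal or not normal:
        raise ValueError("AUC needs at least one normal and one abnormal frame")

    wins = 0.0
    for a in abnormal:
        for n in normal:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(abnormal) * len(normal))
```

Multiplying the returned value by 100 gives the percentage scale used in the tables.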

| Type | Year | Method | Datasets | Major Contributions |
|---|---|---|---|---|
| Semi-supervised | 2016 | Conv-AE [15] | A, SU, P | Estimated abnormality through the reconstruction error of the learnt AE. |
| Semi-supervised | 2017 | ConvLSTM-AE [20] | A, SU, P | A CNN for appearance encoding and a ConvLSTM for memorising motion information of past frames were integrated with the AE. |
| Semi-supervised | 2017 | S-RNN [4] | A, SU, P, S | Temporally coherent Sparse Coding mapped to a Stacked-RNN, improving parameter optimisation and anomaly prediction speed. |
| Semi-supervised | 2018 | FFP [3] | A, P, S | Future frames were predicted with motion and intensity constraints and were compared with the ground truth to detect anomalies. |
| Semi-supervised | 2019 | Mem-AE [23] | A, P, S | Given an input, it used the encoded information as a query to retrieve the most relevant memory items for reconstruction. |
| Semi-supervised | 2019 | Object-Centric [33] | P, U, S | Object-centric AE to encode motion and appearance information, paired with a one-versus-rest classifier to separate normality clusters. |
| Semi-supervised | 2019 | BMAN [31] | A, P, U, S | Introduced an inter-frame predictor to encode normal patterns, which is used to detect abnormal events in an appearance-motion joint detector. |
| Semi-supervised | 2020 | VEC [27] | A, P, S | Prediction of erased patches of incomplete video events, fully exploiting temporal information in the video. |
| Semi-supervised | 2020 | MNAD [36] | A, P, S | Added a memory module to record prototypical patterns of normal data in memory items, training it with compactness and separateness losses. |
| Semi-supervised | 2021 | SSMT [30] | A, P, S | Considered the discrimination of moving objects and objects in consecutive frames; reconstruction of object-specific appearance information. |
| Semi-supervised | 2021 | AMMC [37] | A, P, S | Combined appearance and motion features to obtain an essential and robust representation of regularity. |
| Semi-supervised | 2021 | ROADMAP [13] | A, P, S | Used a frame prediction network that handles objects and different scales better; introduced a noise tolerance loss to mitigate background noise. |
| Semi-supervised | 2022 | BDPN [28] | A, P, S | Introduced three constraints to regularise the prediction task from pixel-wise, cross-modal, and temporal-sequence levels. |
| Weakly supervised | 2018 | Sultani et al. [40] | UC | Learnt anomaly through an MIL framework by learning a ranking model that predicts high anomaly scores for anomalous video segments. |
| Weakly supervised | 2019 | IBL [41] | UC | Used an inner bag loss for MIL to increase the gap between the lowest and highest scores in a positive bag and reduce it in a negative one. |
| Weakly supervised | 2019 | GCN [45] | P, S, UC | A GCN was used to clean label noise, to directly apply fully supervised action classifiers to weakly supervised anomaly detection. |
| Weakly supervised | 2019 | Motion-Aware [44] | UC | Added temporal context to the MIL ranking model by using an attention block; the attention weights helped to identify anomalies better. |
| Weakly supervised | 2020 | AR-Net [42] | S | A dynamic MIL loss enlarged the interclass dispersion; a centre loss reduced the intraclass distance of normal snippets. |
| Weakly supervised | 2021 | MIST [49] | S, UC | Implemented a pseudo-label generator and an attention-boosted feature encoder to focus on anomalous regions. |
| Weakly supervised | 2021 | RTFM [46] | P, S, UC, X | A feature magnitude learning function was trained to recognise positive instances; self-attention mechanisms captured temporal dependencies. |
| Weakly supervised | 2021 | CRFD [50] | S, UC, X | Captured local-range temporal dependencies; enhanced features to the category space and further expanded the temporal modeling range. |
| Weakly supervised | 2021 | BD-LSTM [51] | UC, UC2L | A BD-LSTM network was used to reduce the inference time of the sequence of frames while maintaining competitive results. |
| Weakly supervised | 2022 | MSL [47] | S, UC, X | Transformer-based MSL network to learn both video-level anomaly probability and snippet-level anomaly scores. |
| Fully supervised | 2019 | Liu et al. [53] | UC | Implemented a region loss to explicitly drive the network to learn the anomalous region; a meta learning module prevented severe overfitting. |
| Fully supervised | 2019 | Landi et al. [54] | UC2L | Considered spatiotemporal tubes instead of whole-frame video segments; existing videos were enriched with spatial and temporal annotations to allow bounding box supervision in both its train and test set. |
| Fully supervised | 2022 | UBnormal [55] | S, UC | Proposed the translation of simulated objects from its dataset to others using a CycleGAN, increasing performance. |
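The first row of the summary table describes Conv-AE [15] as estimating abnormality through the reconstruction error of the learnt autoencoder. The sketch below shows one common way to turn per-frame reconstruction errors into anomaly scores, assuming the autoencoder has already produced a reconstruction for each frame. The `Frame` type, the squared-error measure, and the per-video min-max normalisation are illustrative assumptions rather than the exact procedure of any listed method.

```python
from typing import List, Sequence

# Hypothetical frame representation: a grayscale image as rows of pixel intensities.
Frame = Sequence[Sequence[float]]


def reconstruction_error(frame: Frame, recon: Frame) -> float:
    """Sum of squared pixel differences between a frame and its reconstruction."""
    return sum((a - b) ** 2
               for row_f, row_r in zip(frame, recon)
               for a, b in zip(row_f, row_r))


def anomaly_scores(errors: Sequence[float]) -> List[float]:
    """Min-max normalise the errors of one video to [0, 1]; higher means more anomalous."""
    if not errors:
        raise ValueError("need at least one frame error")
    lo, hi = min(errors), max(errors)
    if hi == lo:
        # Every frame is reconstructed equally well, so nothing stands out.
        return [0.0 for _ in errors]
    return [(e - lo) / (hi - lo) for e in errors]
```

The idea is that a model trained only on normal footage reconstructs normal frames well, so frames with a comparatively large error within a video are flagged as candidates for anomalies.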

| Dataset | Normal Frames | Abnormal Frames | Total Frames | Scenes | Anomaly Types | Total Anomalies | Resolution (px) |
|---|---|---|---|---|---|---|---|
| UMN [58] | 6165 | 1576 | 7741 | 3 | 1 | 11 | 320 × 240 |
| Subway [57] | 192,548 | 16,603 | 209,151 | 2 | 5 | 65 | 512 × 384 |
| UCSD Ped1 [12] | 9995 | 4005 | 14,000 | 1 | 5 | 54 | 238 × 158 |
| UCSD Ped2 [12] | 2924 | 1636 | 4560 | 1 | 5 | 23 | 320 × 240 |
| CUHK Avenue [11] | 26,832 | 3820 | 30,652 | 1 | 5 | 47 | 640 × 360 |
| ShanghaiTech [4] | 300,308 | 17,090 | 317,398 | 13 | 11 | 130 | - |
| IITB-Corridor [60] | 375,288 | 108,278 | 483,566 | 1 | 10 | - | - |
| ADOC [61] | 162,093 | 97,030 | 259,123 | 1 | 25 | 721 | 1920 × 1080 |
| Street Scene [62] | 159,341 | 43,916 | 203,257 | 1 | 17 | 205 | 1280 × 720 |
| UCF-Crime [40] | - | - | 13,741,393 | 1900 | 13 | - | - |
| XD-Violence [59] | - | - | - | 4754 | 6 | - | - |
| UBnormal [55] | 147,887 | 89,015 | 236,902 | 29 | 22 | 660 | 1080 × 720 |
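The frame counts in the dataset table can be used to gauge how imbalanced each benchmark is. The short sketch below computes the share of abnormal frames for a few datasets, with the counts copied from the table. The dictionary and function names are illustrative assumptions.

```python
from typing import Dict, Tuple

# (normal frames, abnormal frames), copied from the dataset table.
FRAME_COUNTS: Dict[str, Tuple[int, int]] = {
    "UCSD Ped1": (9995, 4005),
    "CUHK Avenue": (26832, 3820),
    "ShanghaiTech": (300308, 17090),
    "Street Scene": (159341, 43916),
}


def abnormal_ratio(normal: int, abnormal: int) -> float:
    """Fraction of frames labelled abnormal, in [0, 1]."""
    total = normal + abnormal
    if total <= 0:
        raise ValueError("a dataset needs at least one frame")
    return abnormal / total


for name, (normal, abnormal) in FRAME_COUNTS.items():
    print(f"{name}: {100 * abnormal_ratio(normal, abnormal):.1f}% abnormal frames")
```

A low ratio means that abnormal frames are rare, which is worth keeping in mind when comparing scores across datasets.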

| Type | Year | Method | Availability | ML Framework |
|---|---|---|---|---|
| Semi-supervised | 2017 | S-RNN [4] | Link (accessed on 01/08/22) | TensorFlow |
| Semi-supervised | 2017 | ConvLSTM-AE [20] | Link (accessed on 01/08/22) | Caffe |
| Semi-supervised | 2018 | FFP [3] | Link (accessed on 29/07/22) | TensorFlow |
| Semi-supervised | 2018 | ALOCC [66] | Link (accessed on 01/08/22) | TensorFlow |
| Semi-supervised | 2019 | Mem-AE [23] | Link (accessed on 01/08/22) | PyTorch |
| Semi-supervised | 2019 | AMC [67] | Link (accessed on 01/08/22) | TensorFlow |
| Semi-supervised | 2020 | MNAD [36] | Link (accessed on 27/07/22) | PyTorch |
| Semi-supervised | 2020 | OGNet [68] | Link (accessed on 01/08/22) | PyTorch |
| Semi-supervised | 2020 | VEC [27] | Link (accessed on 01/08/22) | PyTorch |
| Weakly supervised | 2018 | Sultani et al. [40] | Link (accessed on 01/08/22) | Theano |
| Weakly supervised | 2019 | GCN [45] | Link (accessed on 01/08/22) | PyTorch |
| Weakly supervised | 2019 | MLEP [69] | Link (accessed on 01/08/22) | TensorFlow |
| Weakly supervised | 2020 | AR-Net [42] | Link (accessed on 01/08/22) | PyTorch |
| Weakly supervised | 2020 | XD-Violence [59] | Link (accessed on 30/07/22) | PyTorch |
| Weakly supervised | 2021 | MIST [49] | Link (accessed on 29/07/22) | PyTorch |
| Weakly supervised | 2021 | RTFM [46] | Link (accessed on 01/08/22) | PyTorch |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Caetano, F.; Carvalho, P.; Cardoso, J. Deep Anomaly Detection for In-Vehicle Monitoring—An Application-Oriented Review. Appl. Sci. 2022, 12, 10011. https://doi.org/10.3390/app121910011
