Coastal Sargassum Level Estimation from Smartphone Pictures

Abstract

Featured Application

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Deep Convolutional Neural Networks

3.1.1. AlexNet

3.1.2. GoogLeNet

3.1.3. VGG

3.1.4. ResNet

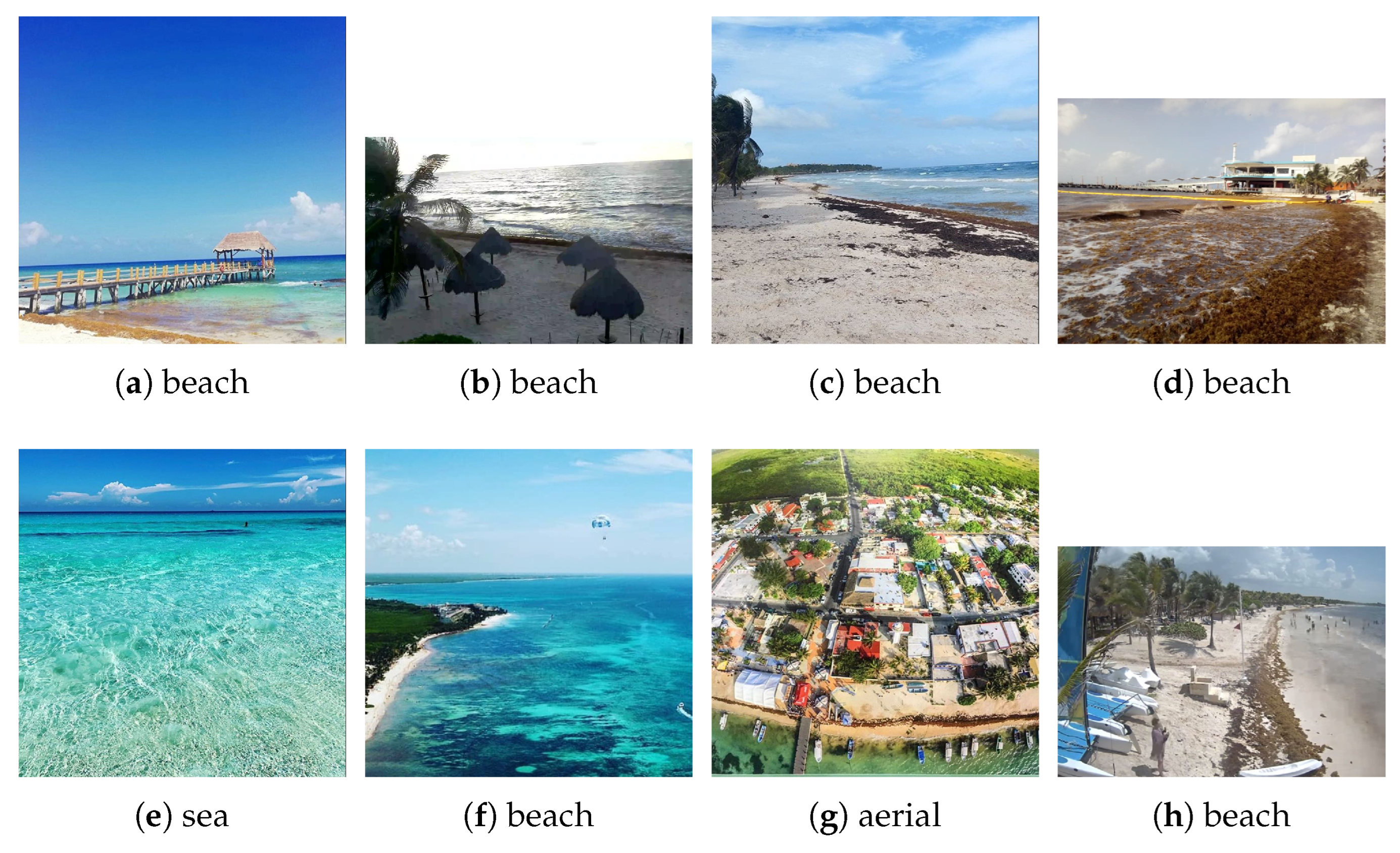

3.2. Sargassum Dataset

4. Experiments and Discussion

4.1. Classification with Deep Convolutional Neural Networks

4.2. Exploratory Experiments

4.3. Exploitation Experiments

4.4. Predictions Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Network | Feature Extraction | Fine Tuning | From Scratch |
|---|---|---|---|
| AlexNet | 0.522 | 0.556 | 0.467 |
| GoogLeNet | 0.485 | 0.4752 | 0.436 |
| ResNet18 | 0.566 | 0.5860 | 0.512 |
| VGG16 | 0.522 | 0.5862 | 0.527 |

| ID | Learning Rate | Optimizer |
|---|---|---|
| 1 | | SGD |
| 2 | | SGD |
| 3 | | SGD |
| 4 | | Adam |
| 5 | | Adam |
| 6 | | Adam |

| Experiments | Orthogonal Parameters | | | | Metrics | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | Optimizer | Learning Rate | Epochs | Batch Size | Accuracy | Precision | Recall | F1-Score | Training Time |
| | SGD | | 500 | 100 | 0.60 | 0.59 | 0.60 | 0.60 | 112 min 27 s |
| | SGD | | 500 | 100 | 0.59 | 0.58 | 0.59 | 0.58 | 156 min 49 s |
| | SGD | | 500 | 100 | 0.56 | 0.56 | 0.56 | 0.56 | 113 min 39 s |
| | Adam | | 500 | 100 | 0.36 | - | - | - | 119 min 37 s |
| | Adam | | 500 | 100 | 0.64 | 0.65 | 0.64 | 0.64 | 152 min 44 s |
| | Adam | | 500 | 100 | 0.59 | 0.60 | 0.59 | 0.58 | 120 min 24 s |

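In the metrics table above, the Recall value equals the Accuracy value in every row that reports both. That pattern is what support-weighted averaging over classes produces, although the source does not state which averaging scheme was used, so treating the metrics as weighted averages is an assumption. The sketch below shows how accuracy and weighted precision, recall, and F1-score could be computed from predicted and true class labels. The function name `weighted_metrics` and the three example labels are hypothetical and do not come from the article.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Return accuracy and support-weighted precision, recall and F1.

    Assumption: per-class scores are averaged with weights proportional
    to the number of true samples in each class (not stated in the source).
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    n = len(y_true)
    support = Counter(y_true)      # number of true samples per class
    predicted = Counter(y_pred)    # number of predictions per class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives

    precision = recall = f1 = 0.0
    for label, count in support.items():
        tp = hits[label]
        # Convention chosen here: precision is 0.0 for a class never predicted.
        p = tp / predicted[label] if predicted[label] else 0.0
        r = tp / count
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = count / n
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    accuracy = sum(hits.values()) / n
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels, used only to exercise the function.
y_true = ["low", "low", "low", "medium", "medium", "high"]
y_pred = ["low", "low", "medium", "medium", "high", "high"]

metrics = weighted_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With this weighting, each class contributes its true positives divided by the total sample count to the recall, so the weighted recall always equals the accuracy. Macro averaging would not have this property, which is why the weighted reading is the one sketched here.
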
Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vasquez, J.I.; Uriarte-Arcia, A.V.; Taud, H.; García-Floriano, A.; Ventura-Molina, E. Coastal Sargassum Level Estimation from Smartphone Pictures. Appl. Sci. 2022, 12, 10012. https://doi.org/10.3390/app121910012